aiaio

lightweight, python based chat ui

Stars: 282

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

README:

A lightweight, privacy-focused web UI for interacting with AI models. Supports both local and remote LLM deployments through OpenAI-compatible APIs.

- 🌓 Dark/Light mode support

- 💾 Local SQLite database for conversation storage

- 📁 File upload and processing (images, documents, etc.)

- ⚙️ Configurable model parameters through UI

- 🔒 Privacy-focused (all data stays local)

- 📱 Responsive design for mobile/desktop

- 🎨 Syntax highlighting for code blocks

- 📋 One-click code block copying

- 🔄 Real-time conversation updates

- 📝 Automatic conversation summarization

- 🎯 Customizable system prompts

- 🌐 WebSocket support for real-time updates

- 📦 Docker support for easy deploymen

- 📦 Multiple API endpoint support

- 📦 Multiple system prompt support

- Python 3.10+

- An OpenAI-compatible API endpoint (local or remote)

aiaio works with any OpenAI-compatible API endpoint, including:

- OpenAI API

- vLLM

- Text Generation Inference (TGI)

- Hugging Face Inference Endpoints

- llama.cpp server

- LocalAI

- Custom OpenAI-compatible APIs

For example, you can serve llama 8b using vLLM using:

vllm serve Meta-Llama-3.1-8B-Instruct.Q4_K_M.gguf --tokenizer meta-llama/Llama-3.1-8B-Instruct --max_model_len 125000and once the api is running, you can access it using aiaio ui.

pip install aiaiogit clone https://github.com/abhishekkrthakur/aiaio.git

cd aiaio

pip install -e .- Start the server:

aiaio app --host 127.0.0.1 --port 5000-

Open your browser and navigate to

http://127.0.0.1:5000 -

Configure your API endpoint and model settings in the UI

- Build the Docker image:

docker build -t aiaio .- Run the container:

docker run --network host \

-v /path/to/data:/data \

aiaioThe /data volume mount is optional but recommended for persistent storage of the SQLite database and uploaded files.

All model and API settings can be configured through the UI:

- Temperature (0-2): Controls response randomness. Higher values make output more creative but less focused

- Max Tokens (1-32k): Maximum length of generated responses

- Top P (0-1): Controls diversity via nucleus sampling. Lower values make output more focused

- Model Name: Name/path of the model to use (depends on your API endpoint)

- Host: URL of your OpenAI-compatible API endpoint

- API Key: Authentication key if required by your endpoint

These settings are stored in the local SQLite database and persist between sessions.

aiaio supports uploading and processing various file types, depending on the model's capabilities:

- Images (PNG, JPG, GIF, etc.)

- Documents (PDF, DOC, DOCX)

- Text files (TXT, CSV, JSON)

- Audio files (depends on model capabilities)

- Video files (depends on model capabilities)

Uploaded files are stored temporarily and can be referenced in conversations.

aiaio uses SQLite for storage with the following main tables:

-

conversations: Stores chat histories and summaries -

messages: Stores individual messages within conversations -

attachments: Stores file attachment metadata -

settings: Stores UI and model configuration

Each conversation can have its own system prompt that guides the AI's behavior. Click the "System Prompt" section above the chat to customize it.

- Create new conversations using the "+ New Chat" button

- Switch between conversations in the left sidebar

- Delete conversations using the trash icon

- View conversation summaries in the sidebar

-

Ctrl/Cmd + Enter: Send message -

Esc: Clear input -

Ctrl/Cmd + K: Focus chat input -

Ctrl/Cmd + /: Toggle settings sidebar

# Clone the repository

git clone https://github.com/abhishekkrthakur/aiaio.git

cd aiaio

# Create a virtual environment

python -m venv venv

source venv/bin/activate # or `venv\Scripts\activate` on Windows

# Install development dependencies

pip install -e ".[dev]"

# Run tests

pytest

# Run with auto-reload for development

uvicorn aiaio.app.app:app --reload --port 5000Contributions are welcome! Please:

- Fork the repository

- Create a feature branch

- Make your changes

- Run the tests (

pytest) - Submit a pull request

Apache License 2.0 - see LICENSE file for details

This project was primarily written with GitHub Copilot's assistance. While the human guided the development, Copilot generated much of the actual code.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiaio

Similar Open Source Tools

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

gateway

CentralMind Gateway is an AI-first data gateway that securely connects any data source and automatically generates secure, LLM-optimized APIs. It filters out sensitive data, adds traceability, and optimizes for AI workloads. Suitable for companies deploying AI agents for customer support and analytics.

youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

qwery-core

Qwery is a platform for querying and visualizing data using natural language without technical knowledge. It seamlessly integrates with various datasources, generates optimized queries, and delivers outcomes like result sets, dashboards, and APIs. Features include natural language querying, multi-database support, AI-powered agents, visual data apps, desktop & cloud options, template library, and extensibility through plugins. The project is under active development and not yet suitable for production use.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

comfyui-web-viewer

The ComfyUI Web Viewer by vrch.ai is a real-time AI-generated interactive art framework that integrates realtime streaming into ComfyUI workflows. It supports keyboard control nodes, OSC control nodes, sound input nodes, and more, accessible from any device with a web browser. It enables real-time interaction with AI-generated content, ideal for interactive visual projects and enhancing ComfyUI workflows with efficient content management and display.

BodhiApp

Bodhi App runs Open Source Large Language Models locally, exposing LLM inference capabilities as OpenAI API compatible REST APIs. It leverages llama.cpp for GGUF format models and huggingface.co ecosystem for model downloads. Users can run fine-tuned models for chat completions, create custom aliases, and convert Huggingface models to GGUF format. The CLI offers commands for environment configuration, model management, pulling files, serving API, and more.

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

CodeWebChat

Code Web Chat is a versatile, free, and open-source AI pair programming tool with a unique web-based workflow. Users can select files, type instructions, and initialize various chatbots like ChatGPT, Gemini, Claude, and more hands-free. The tool helps users save money with free tiers and subscription-based billing and save time with multi-file edits from a single prompt. It supports chatbot initialization through the Connector browser extension and offers API tools for code completions, editing context, intelligent updates, and commit messages. Users can handle AI responses, code completions, and version control through various commands. The tool is privacy-focused, operates locally, and supports any OpenAI-API compatible provider for its utilities.

chat-vue

Full-featured AI Chatbot Vue application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support, authentication via GitHub OAuth, chat history persistence, markdown rendering, and easy deployment to Vercel with zero configuration.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

gemini-multimodal-playground

Gemini Multimodal Playground is a basic Python app for voice conversations with Google's Gemini 2.0 AI model. It features real-time voice input and text-to-speech responses. Users can configure settings through the GUI and interact with Gemini by speaking into the microphone. The application provides options for voice selection, system prompt customization, and enabling Google search. Troubleshooting tips are available for handling audio feedback loop issues that may occur during interactions.

chat

Full-featured AI Chatbot Nuxt application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support via various AI providers, authentication via nuxt-auth-utils, chat history persistence using PostgreSQL database and Drizzle ORM, easy deploy to Vercel with zero configuration. The application is configured to use Vercel AI Gateway providing a unified API to access hundreds of AI models through a single endpoint with features like high reliability, spend monitoring, load balancing, and automatic retries and fallbacks between providers.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

For similar tasks

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

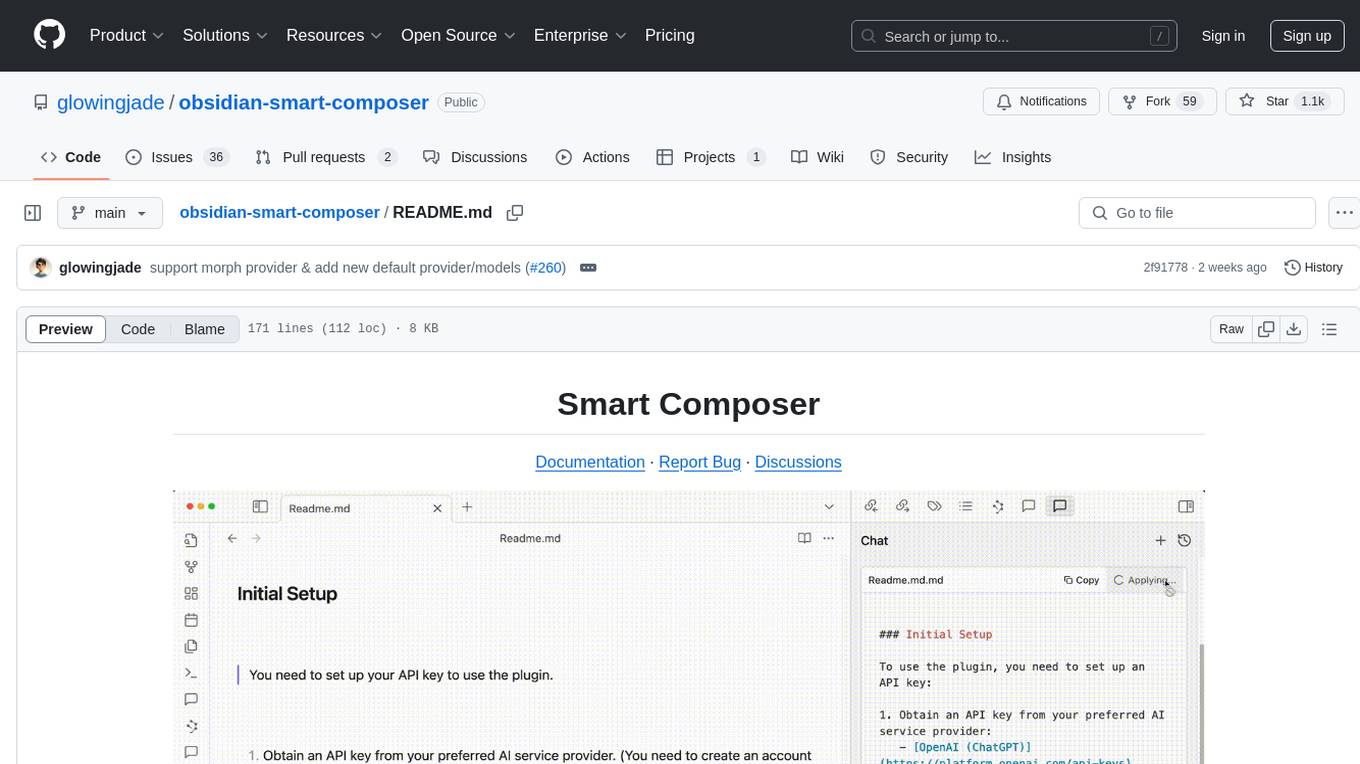

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

1backend

1Backend is a flexible and scalable platform designed for running AI models on private servers and handling high-concurrency workloads. It provides a ChatGPT-like interface for users and a network-accessible API for machines, serving as a general-purpose backend framework. The platform offers on-premise ChatGPT alternatives, a microservices-first web framework, out-of-the-box services like file uploads and user management, infrastructure simplification acting as a container orchestrator, reverse proxy, multi-database support with its own ORM, and AI integration with platforms like LlamaCpp and StableDiffusion.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

For similar jobs

responsible-ai-toolbox

Responsible AI Toolbox is a suite of tools providing model and data exploration and assessment interfaces and libraries for understanding AI systems. It empowers developers and stakeholders to develop and monitor AI responsibly, enabling better data-driven actions. The toolbox includes visualization widgets for model assessment, error analysis, interpretability, fairness assessment, and mitigations library. It also offers a JupyterLab extension for managing machine learning experiments and a library for measuring gender bias in NLP datasets.

LLMLingua

LLMLingua is a tool that utilizes a compact, well-trained language model to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models, achieving up to 20x compression with minimal performance loss. The tool includes LLMLingua, LongLLMLingua, and LLMLingua-2, each offering different levels of prompt compression and performance improvements for tasks involving large language models.

llm-examples

Starter examples for building LLM apps with Streamlit. This repository showcases a growing collection of LLM minimum working examples, including a Chatbot, File Q&A, Chat with Internet search, LangChain Quickstart, LangChain PromptTemplate, and Chat with user feedback. Users can easily get their own OpenAI API key and set it as an environment variable in Streamlit apps to run the examples locally.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

gaianet-node

GaiaNet-node is a tool that allows users to run their own GaiaNet node, enabling them to interact with an AI agent. The tool provides functionalities to install the default node software stack, initialize the node with model files and vector database files, start the node, stop the node, and update configurations. Users can use pre-set configurations or pass a custom URL for initialization. The tool is designed to facilitate communication with the AI agent and access node information via a browser. GaiaNet-node requires sudo privilege for installation but can also be installed without sudo privileges with specific commands.

llmops-duke-aipi

LLMOps Duke AIPI is a course focused on operationalizing Large Language Models, teaching methodologies for developing applications using software development best practices with large language models. The course covers various topics such as generative AI concepts, setting up development environments, interacting with large language models, using local large language models, applied solutions with LLMs, extensibility using plugins and functions, retrieval augmented generation, introduction to Python web frameworks for APIs, DevOps principles, deploying machine learning APIs, LLM platforms, and final presentations. Students will learn to build, share, and present portfolios using Github, YouTube, and Linkedin, as well as develop non-linear life-long learning skills. Prerequisites include basic Linux and programming skills, with coursework available in Python or Rust. Additional resources and references are provided for further learning and exploration.

Awesome-AISourceHub

Awesome-AISourceHub is a repository that collects high-quality information sources in the field of AI technology. It serves as a synchronized source of information to avoid information gaps and information silos. The repository aims to provide valuable resources for individuals such as AI book authors, enterprise decision-makers, and tool developers who frequently use Twitter to share insights and updates related to AI advancements. The platform emphasizes the importance of accessing information closer to the source for better quality content. Users can contribute their own high-quality information sources to the repository by following specific steps outlined in the contribution guidelines. The repository covers various platforms such as Twitter, public accounts, knowledge planets, podcasts, blogs, websites, YouTube channels, and more, offering a comprehensive collection of AI-related resources for individuals interested in staying updated with the latest trends and developments in the AI field.