DevoxxGenieIDEAPlugin

DevoxxGenie is a plugin for IntelliJ IDEA that uses local LLMs (Ollama, LMStudio, GPT4All, Jan and Llama.cpp) and cloud-based LLMs to help review, test, and explain your project code. The latest version also supports Spec Driven Development with CLI Runners.

Stars: 620

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

README:

Devoxx Genie is a fully Java-based LLM Code Assistant plugin for IntelliJ IDEA, designed to integrate with local LLM providers such as Ollama, LMStudio, GPT4All, Llama.cpp and Exo, as well as cloud-based LLMs such as OpenAI, Anthropic, Mistral, Groq, Gemini, DeepInfra, DeepSeek, Kimi, GLM, OpenRouter, Azure OpenAI and Amazon Bedrock.

🆕 Security Scanning — Run Gitleaks (secret detection), OpenGrep (SAST) and Trivy (dependency CVEs) directly from the LLM agent. Findings are automatically created as prioritised tasks in the Spec Browser for tracking and remediation!

🆕 Spec Driven Development (SDD) — Define tasks in Backlog.md, browse them in the Spec Browser with Task List and Kanban Board views, then let the Agent implement them autonomously! Use the Agent Loop to run multiple tasks in a single batch with dependency ordering and automatic advancement.

🆕 AI-powered Inline Code Completion — Get context-aware code suggestions as you type using Fill-in-the-Middle (FIM) models via Ollama or LM Studio!

🆕 ACP Runners — Communicate with external agents (Kimi, Gemini CLI, Kilocode, Claude Code, Copilot) via the Agent Communication Protocol (JSON-RPC 2.0 over stdin/stdout) with structured streaming, conversation history, and capability negotiation!

🆕 CLI Runners — CLI Runners let you execute prompts and spec tasks directly from DevoxxGenie's chat interface or Spec Browser using external CLI tools like Claude Code, GitHub Copilot, Codex, Gemini CLI, and Kimi.

🆕 Plugin Integration API — Other IntelliJ plugins can integrate with DevoxxGenie at runtime via a reflection-based ExternalPromptService — no compile-time dependency required. Two real-world POCs show it in action: a SonarLint fork and a SpotBugs fork that each send code-quality findings to DevoxxGenie with a single click, or defer them as Backlog tasks for the SDD workflow.
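The idea of integrating without a compile-time dependency can be illustrated with plain Java reflection. This is only a minimal sketch: `DemoPromptService`, `sendPrompt` and `sendIfAvailable` are hypothetical names invented for illustration, and the real `ExternalPromptService` API is not described in this README.

```java
import java.lang.reflect.Method;
import java.util.Optional;

final class ReflectiveIntegrationSketch {

    // Hypothetical stand-in for a service exposed by another plugin.
    // The real ExternalPromptService API is not shown in this README.
    public static final class DemoPromptService {
        public String sendPrompt(String prompt) {
            return "received: " + prompt;
        }
    }

    // Looks the service up by name at runtime, so the caller compiles
    // even when the target class is absent from the classpath.
    static Optional<String> sendIfAvailable(String className, String methodName, String prompt) {
        try {
            Class<?> type = Class.forName(className);
            Object service = type.getDeclaredConstructor().newInstance();
            Method method = type.getMethod(methodName, String.class);
            return Optional.ofNullable((String) method.invoke(service, prompt));
        } catch (ReflectiveOperationException e) {
            // Target not installed, or its API changed: degrade gracefully.
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        String installed = DemoPromptService.class.getName();
        System.out.println(sendIfAvailable(installed, "sendPrompt", "Explain this finding"));
        System.out.println(sendIfAvailable("com.example.MissingService", "sendPrompt", "ignored"));
    }
}
```

Returning an empty `Optional` when the lookup fails lets the calling plugin keep working when the other plugin is not installed.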

With Agent Mode, MCPs and frontier models like Claude Opus 4.6 and Gemini Pro, DevoxxGenie isn't just another developer tool: it's a glimpse into the future of agentic programming. One thing's clear: we're in the midst of a paradigm shift in AI-Augmented Programming (AAP) 🐒

We also support RAG-based prompt context based on your vectorized project files, Git Diff viewer, and LLM-driven web search with Google and Tavily.

📖 Visit our comprehensive documentation at genie.devoxx.com

Quick links:

- Getting Started - Start using DevoxxGenie in minutes

- Installation Guide - Local and cloud LLM setup

- Configuration - API keys, settings, and customization

- Features - Explore all capabilities

- Security Scanning - Gitleaks, OpenGrep and Trivy as LLM agent tools with auto-backlog task creation

- Agent Mode - Autonomous code tools with parallel sub-agents

- Spec Driven Development - Task management with Backlog.md, Kanban Board, and Agent implementation

- Agent Loop - Batch task execution with dependency ordering and progress tracking

- ACP Runners - Agent Communication Protocol integration with external agents

- CLI Runners - Execute prompts and spec tasks via external CLI tools

- Plugin Integration API - Integrate other IntelliJ plugins with DevoxxGenie at runtime

- Inline Code Completion - AI-powered code suggestions as you type

- MCP Support - Model Context Protocol integration

- RAG Setup - Retrieval-Augmented Generation guide

- Troubleshooting - Common issues and solutions

📖 Full Security Scanning Documentation

DevoxxGenie integrates three best-in-class open-source security scanners as LLM agent tools. When Agent Mode is active, the LLM can invoke them on demand, interpret the results in context, and automatically create prioritised backlog tasks for every finding.

| Scanner | What it detects | Install |
|---|---|---|
| Gitleaks | Hardcoded secrets, API keys, tokens | brew install gitleaks |
| OpenGrep | SAST issues: injection flaws, insecure patterns | brew install opengrep |
| Trivy | Dependency CVEs (SCA) | brew install trivy |

Ask the agent: "Run a full security scan and create backlog tasks for everything you find."

Enable in Settings → DevoxxGenie → Security Scanning. Each scanner has a path browser, a Test button, and install guidance. Findings are deduplicated — re-running a scan will not create duplicate tasks.
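One common way to get this kind of deduplication is to derive a stable fingerprint from each finding and keep only the first finding per fingerprint. The sketch below assumes a hypothetical `Finding` shape and fingerprint; it does not describe how the plugin actually identifies duplicates, and the rule IDs are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class FindingDeduplicator {

    // Hypothetical finding shape; the plugin's real model is not shown here.
    record Finding(String scanner, String ruleId, String file, int line) {
        // Key that identifies "the same finding" across repeated scans.
        String fingerprint() {
            return scanner + "|" + ruleId + "|" + file + "|" + line;
        }
    }

    // Keeps the first occurrence of every fingerprint, preserving scan order.
    static List<Finding> deduplicate(List<Finding> findings) {
        Map<String, Finding> unique = new LinkedHashMap<>();
        for (Finding finding : findings) {
            unique.putIfAbsent(finding.fingerprint(), finding);
        }
        return List.copyOf(unique.values());
    }

    public static void main(String[] args) {
        List<Finding> scan = List.of(
            new Finding("gitleaks", "placeholder-secret-rule", "src/App.java", 12),
            new Finding("trivy", "placeholder-cve-id", "build.gradle", 3),
            new Finding("gitleaks", "placeholder-secret-rule", "src/App.java", 12));
        // The repeated Gitleaks finding collapses into one entry.
        System.out.println(deduplicate(scan));
    }
}
```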

Spec Driven Development brings structured task management directly into your IDE. Instead of ad-hoc prompts, define your tasks in Backlog.md files, browse them in the Spec Browser, and let the Agent implement them autonomously.

How it works:

- Create tasks — Use natural language prompts or write Backlog.md task specs manually

- Browse in Specs — View tasks in a Task List or visual Kanban Board with drag-and-drop

- Implement with Agent — Click "Implement with Agent" and let the AI do the work

The Kanban Board gives you a visual overview of task status with drag-and-drop support.

17 built-in backlog tools provide full CRUD operations on tasks, documents, and milestones — all accessible to the LLM agent for autonomous project management.

Select multiple tasks (or click "Run All To Do") and the Agent Loop executes them sequentially in a single batch. Tasks are automatically sorted by dependencies using topological ordering, and each task gets a fresh conversation. The agent implements each task autonomously, and when it marks a task as Done the runner advances to the next one — with progress tracking and notifications throughout.
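Dependency ordering of this kind is typically done with a topological sort. The following is a minimal sketch using Kahn's algorithm, assuming tasks are identified by string IDs and that every task appears as a key in the map; the class and method names are illustrative, not the plugin's own.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class TaskOrdering {

    // dependencies maps a task id to the ids it depends on.
    // Every task must appear as a key (use an empty list for "no dependencies").
    static List<String> order(Map<String, List<String>> dependencies) {
        Map<String, Integer> remaining = new HashMap<>();      // unmet dependency count per task
        Map<String, List<String>> dependents = new HashMap<>(); // reverse edges
        for (var entry : dependencies.entrySet()) {
            remaining.put(entry.getKey(), entry.getValue().size());
            for (String dep : entry.getValue()) {
                dependents.computeIfAbsent(dep, k -> new ArrayList<>()).add(entry.getKey());
            }
        }

        // Start with tasks that have no dependencies (sorted for a stable start order).
        Deque<String> ready = new ArrayDeque<>();
        remaining.keySet().stream().sorted()
            .filter(task -> remaining.get(task) == 0)
            .forEach(ready::add);

        List<String> ordered = new ArrayList<>();
        while (!ready.isEmpty()) {
            String task = ready.poll();
            ordered.add(task);
            // Finishing a task may unblock the tasks that depend on it.
            for (String next : dependents.getOrDefault(task, List.of())) {
                if (remaining.merge(next, -1, Integer::sum) == 0) {
                    ready.add(next);
                }
            }
        }
        if (ordered.size() != dependencies.size()) {
            throw new IllegalStateException("Dependency cycle or unknown dependency detected");
        }
        return ordered;
    }

    public static void main(String[] args) {
        Map<String, List<String>> deps = Map.of(
            "task-1", List.of(),
            "task-2", List.of("task-1"),
            "task-3", List.of("task-2"));
        System.out.println(order(deps));
    }
}
```

A runner built on this would execute the returned list front to back, starting each task only after the ones it depends on are done.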

- Building full-stack AI agents: From project generation to code execution (Devoxx France 2025)

- Agentic programming with DevoxxGenie (VoxxedDays Bucharest 2025)

- DevoxxGenie in action (Devoxx Belgium 2024)

- How ChatMemory works

- Hands-on with DevoxxGenie

- The Era of AAP: Ai Augmented Programming using only Java

- DevoxxGenie Demo 2024

- DevoxxGenie: Your AI Assistant for IDEA

- The Devoxx Genie IntelliJ Plugin Provides Access to Local or Cloud Based LLM Models

- 10K+ Downloads Milestone for DevoxxGenie!

- 🔒 Security Scanning (v0.9.17+): Run Gitleaks (secret detection), OpenGrep (SAST) and Trivy (SCA/CVEs) as LLM agent tools. Each finding is auto-created as a prioritised Backlog.md task. Enable in Settings → Security Scanning.

- 📋 Spec Driven Development (v0.9.7+): Define tasks in Backlog.md, browse them in the Spec Browser (Task List + Kanban Board), and let the Agent implement them. 17 built-in backlog tools for full CRUD on tasks, documents, and milestones. Use the Agent Loop to run multiple tasks in batch with dependency ordering (v0.9.8+).

- 🆕 ACP Runners (v0.9.10+): Communicate with external agents (Kimi, Gemini CLI, Kilocode, Claude Code, Copilot) via the Agent Communication Protocol with structured streaming, conversation history, and capability negotiation.

- 🔌 Plugin Integration API (v0.9.12+): Let other IntelliJ plugins send prompts or create Backlog tasks via a reflection-based `ExternalPromptService`, with no compile-time dependency required. Two POC integrations available: SonarLint DevoxxGenie and SpotBugs DevoxxGenie.

- 🖥️ CLI Runners (v0.9.9+): Execute prompts and spec tasks via external CLI tools (Claude Code, GitHub Copilot, Codex, Gemini CLI, Kimi) directly from the chat interface or the Spec Browser.

- ✨ Inline Code Completion (v0.9.6+): AI-powered code suggestions as you type using Fill-in-the-Middle (FIM) models. Supports both Ollama and LM Studio with models like StarCoder2, Qwen2.5-Coder, and DeepSeek-Coder.

- 🤖 Agent Mode (v0.9.4+): Autonomous code exploration and modification with built-in tools (read, write, edit, search files). Parallel sub-agents investigate multiple areas of your codebase concurrently, each with configurable provider/model. Enable in Agent Settings!

- 🔥️ MCP Support with Marketplace: Browse and install MCP servers from the integrated marketplace. Add MCP servers and use them in your conversations!

- 🗂️ DEVOXXGENIE.md: By incorporating this into the system prompt, the LLM will gain a deeper understanding of your project and provide more relevant responses.

- 📸 DnD images: You can now drag and drop (DnD) images into the prompt when using multimodal LLMs.

- 🧐 RAG Support: Retrieval-Augmented Generation (RAG) support for automatically incorporating project context into your prompts.

- 👀 Chat History: Your chats are stored locally, allowing you to easily restore them in the future.

- 🧠 Project Scanner: Add source code (full project or by package) to prompt context when using Anthropic, OpenAI or Gemini.

- 💰 Token Cost Calculator: Calculate the cost when using Cloud LLM providers.

- 🔍 Web Search: Search the web for a given query using Google or Tavily.

- 🏎️ Streaming responses: See each token as it's received from the LLM in real-time.

- 🧐 Abstract Syntax Tree (AST) context: Automatically include parent class and class/field references in the prompt for better code analysis.

- 💬 Chat Memory Size: Set the size of your chat memory. By default it's set to a total of 10 messages (system + user & AI messages).

- ☕️ 100% Java: An IDEA plugin using local and cloud-based LLM models. Fully developed in Java using LangChain4j.

- 👀 Code Highlighting: Supports highlighting of code blocks.

- 💬 Chat conversations: Supports chat conversations with configurable memory size.

- 📁 Add files & code snippets to context: You can add open files to the chat window context to get better answers, or add code snippets if you want a tightly focused context.
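To make the Chat Memory Size item above concrete, here is a minimal sketch of a message window capped at a fixed number of messages. It assumes that the system message is retained and that the oldest user or AI message is evicted first; the class, the `Message` record and that eviction policy are assumptions for illustration, not the plugin's documented behaviour.

```java
import java.util.ArrayList;
import java.util.List;

final class WindowedChatMemory {

    // Hypothetical message shape: role is "system", "user" or "ai".
    record Message(String role, String text) {}

    private final int maxMessages;
    private final List<Message> messages = new ArrayList<>();

    WindowedChatMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    void add(Message message) {
        messages.add(message);
        while (messages.size() > maxMessages) {
            // Evict the oldest non-system message so the system prompt survives.
            int evict = 0;
            for (int i = 0; i < messages.size(); i++) {
                if (!"system".equals(messages.get(i).role())) {
                    evict = i;
                    break;
                }
            }
            messages.remove(evict);
        }
    }

    List<Message> messages() {
        return List.copyOf(messages);
    }

    public static void main(String[] args) {
        WindowedChatMemory memory = new WindowedChatMemory(10); // default size named in the README
        memory.add(new Message("system", "You are a code assistant."));
        for (int i = 1; i <= 12; i++) {
            memory.add(new Message(i % 2 == 1 ? "user" : "ai", "message " + i));
        }
        System.out.println(memory.messages().size());
    }
}
```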

- Download and start Ollama

- Open terminal and download a model using command "ollama run llama3.2"

- Start your IDEA and go to plugins > Marketplace and enter "Devoxx"

- Select "DevoxxGenie" and install plugin

- In the DevoxxGenie window select Ollama and available model

- Start prompting

- Start your IDEA and go to plugins > Marketplace and enter "Devoxx"

- Select "DevoxxGenie" and install plugin

- Click on DevoxxGenie cog (settings) icon and click on Cloud Provider link icon to create API KEY

- Paste API Key in Settings panel

- In the DevoxxGenie window select your cloud provider and model

- Start prompting

Initial support for Model Context Protocol (MCP) server tools, including debugging of MCP requests & responses! MCP support is a crucial step towards full agentic support within DevoxxGenie. Watch a short demo of MCP in action using DevoxxGenie.

Example of the Filesystem-server MCP which allows you to interact with the given directory.

Go to the DevoxxGenie settings to enable and add your MCP servers. Browse the MCP Marketplace to discover and install servers with just a few clicks!

When configured correctly you can see the tools that the MCP brings to your LLM conversations

📖 DEVOXXGENIE.md Documentation

You can now generate a DEVOXXGENIE.md file directly from the "Prompts" plugin settings page or just use /init in the prompt input field.

By incorporating this into the system prompt, the LLM will gain a deeper understanding of your project and provide more relevant responses. This is a first step toward enabling agentic AI features for DevoxxGenie 🔥

Once generated, you can edit the DEVOXXGENIE.md file and add more details about your project as needed.

You can now drag and drop images (and project files) directly into the input field when working with multimodal LLMs like Google Gemini, Anthropic Claude, ChatGPT 4.x, or even local models such as LLaVA

You can even combine screenshots together with some code and then ask related questions!

Starting from v0.4.0, Devoxx Genie includes a Retrieval-Augmented Generation (RAG) feature, which enables advanced code search and retrieval capabilities. This feature uses a combination of natural language processing (NLP) and machine learning algorithms to analyze code snippets and identify relevant results based on their semantic meaning.

With RAG, you can:

- Search for code snippets using natural language queries

- Retrieve relevant code examples that match your query's intent

- Explore related concepts and ideas in the codebase

We currently use Ollama and Nomic Text embedding to generate vector representations of your project files. These embedding vectors are then stored in a Chroma DB (v0.6.2) running locally within Docker. The vectors are used to compute similarity scores between search queries and your code, with everything running locally.
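As an illustration of what a similarity score between two embedding vectors can look like, here is a minimal cosine-similarity sketch. Cosine similarity is an assumption chosen for the example; the README does not state which metric the plugin or Chroma DB is configured to use, and the tiny vectors are made up.

```java
final class CosineSimilarity {

    // Returns a value in [-1, 1]; higher means the vectors point in a more similar direction.
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0.0 || normB == 0.0) {
            return 0.0; // A zero vector has no direction; treat it as unrelated.
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        // Made-up three-dimensional "embeddings"; real embeddings have hundreds of dimensions.
        double[] query = {0.9, 0.1, 0.0};
        double[] relatedCode = {0.8, 0.2, 0.1};
        double[] unrelatedCode = {0.0, 0.1, 0.9};
        System.out.println(cosine(query, relatedCode) > cosine(query, unrelatedCode));
    }
}
```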

The RAG feature is a significant enhancement to Devoxx Genie's code search capabilities, enabling developers to quickly find relevant code examples and accelerate their coding workflow.

See also Demo

We expect to add GraphRAG support in the near future.

In the IDEA settings you can modify the REST endpoints and the LLM parameters. Make sure to press enter and apply to save your changes.

We now also support cloud-based LLMs; you can paste the API keys on the Settings page.

The language model dropdown is not just a list anymore, it's your compass for smart model selection.

- See available context window sizes for each cloud model

- View associated costs upfront

- Make data-driven decisions on which model to use for your project

You can now add the full project to your prompt IF your selected cloud LLM has a big enough window context.

Leverage the prompt cost calculator for precise budget management. Get real-time updates on how much of the context window you're using.

See the input/output costs and window context per Cloud LLM. Eventually we'll also allow you to edit these values.
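The arithmetic behind such a cost estimate is simple. The sketch below assumes prices are quoted in USD per one million tokens; the prices, token counts and context window in `main` are hypothetical example values, not the rates of any real provider.

```java
final class PromptCostEstimator {

    // Prices are expressed in USD per 1,000,000 tokens.
    static double estimateCostUsd(long inputTokens, long outputTokens,
                                  double inputPricePerMillion, double outputPricePerMillion) {
        return inputTokens / 1_000_000.0 * inputPricePerMillion
             + outputTokens / 1_000_000.0 * outputPricePerMillion;
    }

    // Share of the model's context window consumed by the prompt, in percent.
    static double contextUsagePercent(long promptTokens, long contextWindowTokens) {
        return 100.0 * promptTokens / contextWindowTokens;
    }

    public static void main(String[] args) {
        // Hypothetical numbers: a 70,000-token prompt, a 1,000-token answer,
        // $3 per million input tokens and $15 per million output tokens.
        double cost = estimateCostUsd(70_000, 1_000, 3.0, 15.0);
        double usage = contextUsagePercent(70_000, 1_000_000);
        System.out.printf("Estimated cost: $%.3f, context used: %.1f%%%n", cost, usage);
    }
}
```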

"But wait," you might say, "my project is HUGE!" 😅

Fear not! We've got options:

- Leverage Gemini's Massive Context:

Gemini's colossal 1 million token window isn't just big, it's massive. We're talking about the capacity to digest approximately 30,000 lines of code in a single go. That's enough to digest most codebases whole, from the tiniest scripts to some decent projects.

But if that's not enough you have more options...

- Smart Filtering:

The new "Copy Project" panel lets you:

- Exclude specific directories

- Filter by file extensions

- Remove JavaDocs to slim down your context

- Selective Inclusion

Right-click to add only the most relevant parts of your project to the context.
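The filtering options above (excluding directories, filtering by extension, removing JavaDocs) can be sketched in a few lines. This is a naive illustration with hypothetical names, not the plugin's implementation; in particular, the regular expression does not account for `/**` sequences inside string literals.

```java
import java.util.Set;
import java.util.regex.Pattern;

final class ProjectContextFilter {

    // Matches /** ... */ blocks (including multi-line ones) plus trailing whitespace.
    private static final Pattern JAVADOC = Pattern.compile("/\\*\\*.*?\\*/\\s*", Pattern.DOTALL);

    // True when no parent directory is excluded and the file extension is allowed.
    static boolean include(String relativePath, Set<String> excludedDirs, Set<String> extensions) {
        String[] segments = relativePath.split("/");
        for (int i = 0; i < segments.length - 1; i++) {
            if (excludedDirs.contains(segments[i])) {
                return false;
            }
        }
        String fileName = segments[segments.length - 1];
        int dot = fileName.lastIndexOf('.');
        return dot >= 0 && extensions.contains(fileName.substring(dot + 1));
    }

    // Removes JavaDoc comments to reduce the number of tokens sent to the LLM.
    static String stripJavadoc(String source) {
        return JAVADOC.matcher(source).replaceAll("");
    }

    public static void main(String[] args) {
        Set<String> excluded = Set.of("build", ".git");
        Set<String> allowed = Set.of("java");
        System.out.println(include("src/main/java/App.java", excluded, allowed));
        System.out.println(include("build/generated/App.java", excluded, allowed));
        System.out.println(stripJavadoc("/** Adds two numbers. */\nint add(int a, int b) { return a + b; }"));
    }
}
```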

The DevoxxGenie project itself, at about 70K tokens, fits comfortably within most high-end LLM context windows. This allows for incredibly nuanced interactions – we're talking advanced queries and feature requests that leave tools like GitHub Copilot scratching their virtual heads!

DevoxxGenie now also supports JLama, a 100% modern Java LLM inference engine.

JLama offers a REST API compatible with the widely-used OpenAI API. Use the Custom OpenAI URL to connect.
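For readers unfamiliar with what "OpenAI-compatible" means in practice, the sketch below builds (but does not send) a chat completion request with the JDK's `java.net.http` API. The base URL `http://localhost:8080/v1`, the model name and the API key are placeholder assumptions; use whatever your local server actually exposes.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class OpenAiCompatibleRequest {

    // Builds a POST to {baseUrl}/chat/completions, the chat endpoint of the OpenAI API.
    static HttpRequest chatRequest(String baseUrl, String apiKey, String model, String userPrompt) {
        String body = String.format(
            "{\"model\": \"%s\", \"messages\": [{\"role\": \"user\", \"content\": \"%s\"}]}",
            escape(model), escape(userPrompt));
        String base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
        return HttpRequest.newBuilder(URI.create(base + "chat/completions"))
            .header("Content-Type", "application/json")
            .header("Authorization", "Bearer " + apiKey)
            .POST(HttpRequest.BodyPublishers.ofString(body))
            .build();
    }

    // Minimal JSON string escaping, sufficient for this sketch only.
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    public static void main(String[] args) {
        // Placeholder values: adjust the URL, key and model to your own setup.
        HttpRequest request = chatRequest("http://localhost:8080/v1", "not-needed-locally",
            "my-local-model", "Explain this method");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Because only the base URL, model and key change between providers, the same request shape works for any endpoint that implements this API.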

You can also integrate it seamlessly with Llama3.java by using the Spring Boot OpenAI API wrapper coupled with the JLama DevoxxGenie option.

Use the custom OpenAI URL to connect to Exo, a local LLM cluster for Apple Silicon which allows you to run Llama 3.1 8b, 70b and 405b on your own Apple computers 🤩

Write a unit test and let DevoxxGenie generate the implementation for that unit test. This approach was explained by Bouke Nijhuis in his Devoxx Belgium presentation.

A demo of how to accomplish this can be seen in this 𝕏 post.

As of today (February 2, 2025), alongside the DeepSeek API Key, you can access the full 671B model for FREE using either Nvidia or Chutes! Simply update the Custom OpenAI URL, Model and API Key on the Settings page as follows:

Chutes URL : https://chutes-deepseek-ai-deepseek-r1.chutes.ai/v1/

Nvidia URL : https://integrate.api.nvidia.com/v1

Create an account on Grok and generate an API key. Now open the DevoxxGenie settings and enter the OpenAI-compliant URL for Grok, the model you want to use and your API key.

- From IntelliJ IDEA: Go to Settings → Plugins → Marketplace and enter 'Devoxx' to find the plugin, or install the plugin from disk.

- From Source Code: Clone the repository, build the plugin using `./gradlew buildPlugin`, and install the file 'DevoxxGenie-X.Y.Z.zip' from the `build/distributions` directory.

- IntelliJ minimum version is 2023.3.4

- Java minimum version is JDK 17

Gradle IntelliJ Plugin prepares a ZIP archive when running the buildPlugin task. You'll find it in the build/distributions/ directory.

`./gradlew buildPlugin`

It is recommended to use the publishPlugin task for releasing the plugin.

`./gradlew publishPlugin`

- Select an LLM provider from the DevoxxGenie panel (right corner)

- Select some code

- Enter a shortcode command (review, explain, or generate unit tests) for the selected code, or enter a custom prompt.

Enjoy!

The DevoxxGenie IDEA Plugin processes user prompts through the following steps:

- `UserPromptPanel` → Captures the prompt from the UI.
- `PromptSubmissionListener.onPromptSubmitted()` → Listens for the submission event.
- `PromptExecutionController.handlePromptSubmission()` → Starts execution.

- `PromptExecutionService.executeQuery()` → Handles token usage calculations and checks RAG/GitDiff settings.
- `ChatPromptExecutor.executePrompt()` → Dispatches the prompt to the selected LLM provider.
- `LLMProviderService.getAvailableModelProviders()` → Retrieves the appropriate model from `ChatModelFactory`.

- `ChatModelFactory.getModels()` → Gets the models for the selected LLM provider.
- Cloud-based LLMs:
- Local models:

- If streaming is enabled:
  - `StreamingPromptExecutor.execute()` → Begins token-by-token streaming.
  - `ChatStreamingResponsePanel.createHTMLRenderer()` → Updates UI in real time.
- If non-streaming:
  - `PromptExecutionService.executeQuery()` → Formats the full response.
  - `ChatResponsePanel.displayResponse()` → Renders the text and code blocks.

- Indexing Source Code for Retrieval
  - `ProjectIndexerService.indexFiles()` → Indexes project files.
  - `ChromaDBIndexService.storeEmbeddings()` → Stores embeddings in ChromaDB.
- Retrieval & Augmentation
  - `SemanticSearchService.search()` → Fetches relevant indexed code.
  - `SemanticSearchReferencesPanel` → Displays retrieved results.

- The response is rendered in `ChatResponsePanel` with:
  - `ResponseHeaderPanel` → Shows metadata (LLM name, execution time).
  - `ResponseDocumentPanel` → Formats text & code snippets.
  - `MetricExecutionInfoPanel` → Displays token usage and cost.

Below is a detailed flow diagram illustrating this workflow:

- Start by exploring `PromptExecutionController.java` to see how prompts are routed.

- Modify `ChatResponsePanel.java` if you want to enhance response rendering.

- To add a new LLM provider, create a factory under `chatmodel/cloud/` or `chatmodel/local/`.

Want to contribute? Submit a PR! 🚀


Alternative AI tools for DevoxxGenieIDEAPlugin

Similar Open Source Tools

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

actionbook

Actionbook is a browser action engine designed for AI agents, providing up-to-date action manuals and DOM structure to enable instant website operations without guesswork. It offers faster execution, token savings, resilient automation, and universal compatibility, making it ideal for building reliable browser agents. Actionbook integrates seamlessly with AI coding assistants and offers three integration methods: CLI, MCP Server, and JavaScript SDK. The tool is well-documented and actively developed in a monorepo setup using pnpm workspaces and Turborepo.

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

agent-zero

Agent Zero is a personal, organic agentic framework designed to be dynamic, transparent, customizable, and interactive. It uses the computer as a tool to accomplish tasks, with features like general-purpose assistant, computer as a tool, multi-agent cooperation, customizable and extensible framework, and communication skills. The tool is fully Dockerized, with Speech-to-Text and TTS capabilities, and offers real-world use cases like financial analysis, Excel automation, API integration, server monitoring, and project isolation. Agent Zero can be dangerous if not used properly and is prompt-based, guided by the prompts folder. The tool is extensively documented and has a changelog highlighting various updates and improvements.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

Cerebr

Cerebr is an intelligent AI assistant browser extension designed to enhance work efficiency and learning experience. It integrates powerful AI capabilities from various sources to provide features such as smart sidebar, multiple API support, cross-browser API configuration synchronization, comprehensive Q&A support, elegant rendering, real-time response, theme switching, and more. With a minimalist design and focus on delivering a seamless, distraction-free browsing experience, Cerebr aims to be your second brain for deep reading and understanding.

next-ai-draw-io

Next AI Draw.io is a next.js web application that integrates AI capabilities with draw.io diagrams. It allows users to create, modify, and enhance diagrams through natural language commands and AI-assisted visualization. Features include LLM-Powered Diagram Creation, Image-Based Diagram Replication, Diagram History, Interactive Chat Interface, and Smart Editing. The application uses Next.js for frontend framework, @ai-sdk/react for chat interface and AI interactions, and react-drawio for diagram representation and manipulation. Diagrams are represented as XML that can be rendered in draw.io, with AI processing commands to generate or modify the XML accordingly.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

For similar tasks

gpt_academic

GPT Academic is a powerful tool that leverages the capabilities of large language models (LLMs) to enhance academic research and writing. It provides a user-friendly interface that allows researchers, students, and professionals to interact with LLMs and utilize their abilities for various academic tasks. With GPT Academic, users can access a wide range of features and functionalities, including:

- **Summarization and Paraphrasing:** GPT Academic can summarize complex texts, articles, and research papers into concise and informative summaries. It can also paraphrase text to improve clarity and readability.

- **Question Answering:** Users can ask GPT Academic questions related to their research or studies, and the tool will provide comprehensive and well-informed answers based on its knowledge and understanding of the relevant literature.

- **Code Generation and Explanation:** GPT Academic can generate code snippets and provide explanations for complex coding concepts. It can also help debug code and suggest improvements.

- **Translation:** GPT Academic supports translation of text between multiple languages, making it a valuable tool for researchers working with international collaborations or accessing resources in different languages.

- **Citation and Reference Management:** GPT Academic can help users manage their citations and references by automatically generating citations in various formats and providing suggestions for relevant references based on the user's research topic.

- **Collaboration and Note-Taking:** GPT Academic allows users to collaborate on projects and take notes within the tool. They can share their work with others and access a shared workspace for real-time collaboration.

- **Customizable Interface:** GPT Academic offers a customizable interface that allows users to tailor the tool to their specific needs and preferences. They can choose from a variety of themes, adjust the layout, and add or remove features to create a personalized workspace.

Overall, GPT Academic is a versatile and powerful tool that can significantly enhance the productivity and efficiency of academic research and writing. It empowers users to leverage the capabilities of LLMs and unlock new possibilities for academic exploration and knowledge creation.

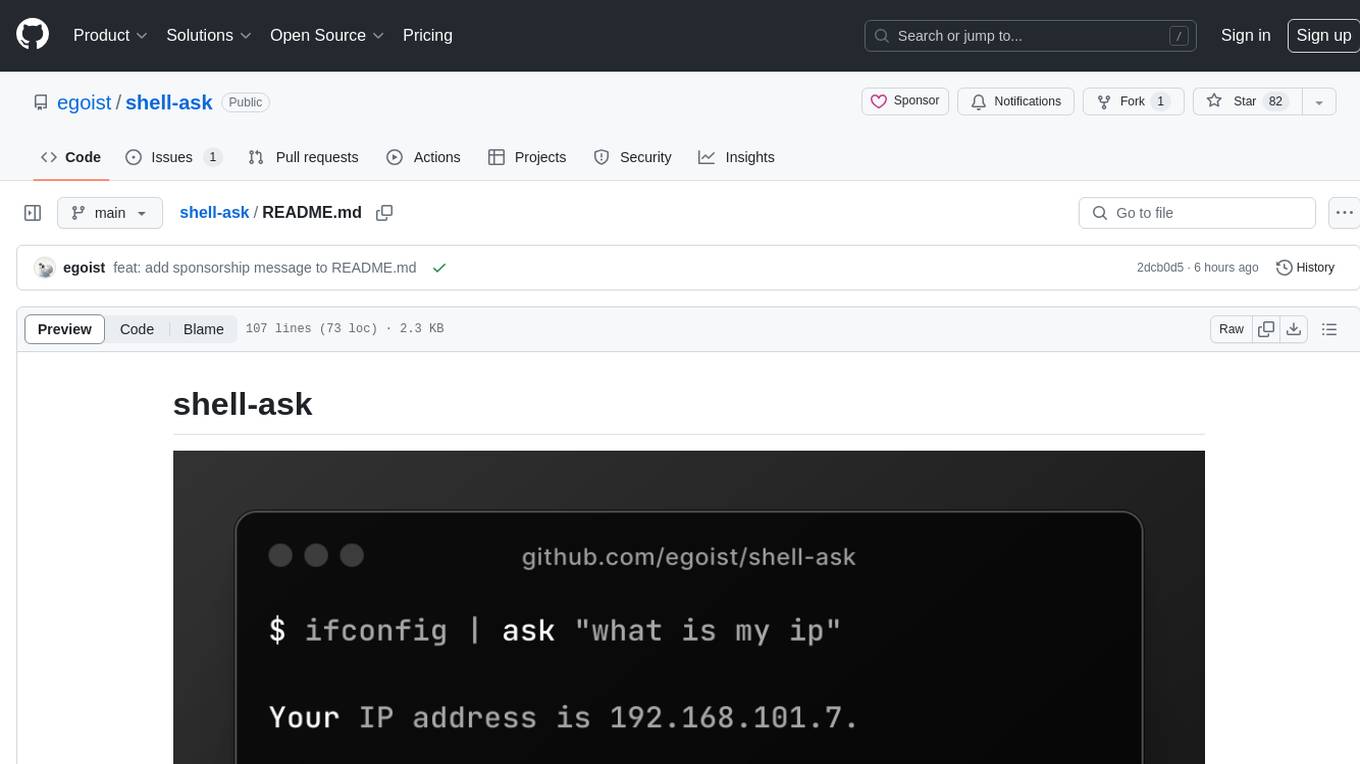

shell-ask

Shell Ask is a command-line tool that enables users to interact with various language models through a simple interface. It supports multiple LLMs such as OpenAI, Anthropic, Ollama, and Google Gemini. Users can ask questions, provide context through command output, select models interactively, and define reusable AI commands. The tool allows piping the output of other programs for enhanced functionality. With AI command presets and configuration options, Shell Ask provides a versatile and efficient way to leverage language models for various tasks.

neural

Neural is a Vim and Neovim plugin that integrates various machine learning tools to assist users in writing code, generating text, and explaining code or paragraphs. It supports multiple machine learning models, focuses on privacy, and is compatible with Vim 8.0+ and Neovim 0.8+. Users can easily configure Neural to interact with third-party machine learning tools, such as OpenAI, to enhance code generation and completion. The plugin also provides commands like `:NeuralExplain` to explain code or text and `:NeuralStop` to stop Neural from working. Neural is maintained by the Dense Analysis team and comes with a disclaimer about sending input data to third-party servers for machine learning queries.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast AI-powered completion with asynchronous I/O. It supports actions such as documenting code, editing code, explaining code, finding bugs, generating unit tests, implementing features, optimizing code, refactoring code, starting a chat, and more. Features include accepting suggestions with Tab, accepting a line with Ctrl + Down, accepting a word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or an nvim-cmp source.

chatgpt

The ChatGPT R package provides a set of features to assist in R coding. It includes addins like Ask ChatGPT, Comment selected code, Complete selected code, Create unit tests, Create variable name, Document code, Explain selected code, Find issues in the selected code, Optimize selected code, and Refactor selected code. Users can interact with ChatGPT to get code suggestions, explanations, and optimizations. The package helps in improving coding efficiency and quality by providing AI-powered assistance within the RStudio environment.

llm.nvim

llm.nvim is a universal plugin that lets users interact with large language models (LLMs) from within Neovim. Users can configure various LLMs such as gpt, glm, kimi, and local models. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, GitHub Models, siliconflow, and others. Users can customize tools, chat with an LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

claude-code-telegram

Claude Code Telegram Bot is a Telegram bot that connects to Claude Code, offering a conversational AI interface for codebases. Users can chat naturally with Claude to analyze, edit, or explain code, maintain context across conversations, code on the go, receive proactive notifications, and stay secure with authentication and audit logging. The bot supports two interaction modes: Agentic Mode for natural language interaction and Classic Mode for a terminal-like interface. It offers event-driven automation, already includes working features such as directory sandboxing and git integration, and lists a plugin system among its planned enhancements. Security measures include access control, directory isolation, rate limiting, input validation, and webhook authentication.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.