better-chatbot

Just a Better Chatbot. Powered by Agent & MCP & Workflows.

Stars: 658

Better Chatbot is an open-source AI chatbot for individuals and teams, inspired by ChatGPT, Claude, Grok, and Gemini. It integrates major LLMs, offers tools such as MCP (Model Context Protocol) and data visualization, supports automation with custom agents and visual workflows, lets teams share configurations, and includes a voice assistant. The platform is built with Vercel AI SDK and Next.js, combining features of leading AI services into one platform.

README:

🚀 Live Demo | See the experience in action in the preview below!

Better Chatbot - A better open-source AI chatbot for individuals and teams, inspired by ChatGPT, Claude, Grok, and Gemini.

• Multi-AI Support - Integrates all major LLMs: OpenAI, Anthropic, Google, xAI, Ollama, and more

• Powerful Tools - MCP protocol, web search, JS/Python code execution, data visualization

• Automation - Custom agents, visual workflows, artifact generation

• Collaboration - Share agents, workflows, and MCP configurations with your team

• Voice Assistant - Realtime voice chat with full MCP tool integration

• Intuitive UX - Instantly invoke any feature with @mention

• Quick Start - Deploy free with Vercel Deploy button

Built with Vercel AI SDK and Next.js, combining the best features of leading AI services into one platform.

# 1. Clone the repository

git clone https://github.com/cgoinglove/better-chatbot.git

cd better-chatbot

# 2. (Optional) Install pnpm if you don't have it

npm install -g pnpm

# 3. Install dependencies

pnpm i

# 4. (Optional) Start a local PostgreSQL instance

pnpm docker:pg

# If you already have your own PostgreSQL running, you can skip this step.

# In that case, make sure to update the PostgreSQL URL in your .env file.

# 5. Enter required information in the .env file

# The .env file is created automatically. Just fill in the required values.

# For the fastest setup, provide at least one LLM provider's API key (e.g., OPENAI_API_KEY, ANTHROPIC_API_KEY, GOOGLE_GENERATIVE_AI_API_KEY) and the PostgreSQL URL you want to use.

# 6. Start the server

pnpm build:local && pnpm start

# (Recommended for most cases. Ensures correct cookie settings.)

# For development mode with hot-reloading and debugging, you can use:

# pnpm dev

Open http://localhost:3000 in your browser to get started.

- Table of Contents

- Preview

- Getting Started

- 📘 Guides

- 💡 Tips

- 🗺️ Roadmap

- 🙌 Contributing

- 💬 Join Our Discord

This project is evolving at lightning speed! ⚡️ We're constantly shipping new features and smashing bugs. Star this repo to join the ride and stay in the loop with the latest updates!

Get a feel for the UX — here's a quick look at what's possible.

Example: Control a web browser using Microsoft's playwright-mcp tool.

- The LLM autonomously decides how to use tools from the MCP server, calling them multiple times to complete a multi-step task and return a final message.

Sample prompt:

1. Use the @tool('web-search') to look up information about “modelcontextprotocol.”

2. Then, using @mcp("playwright"):

- Navigate to Google (https://www.google.com)

- Click the “Login” button

- Enter my email address ([email protected])

- Click the "Next" button

- Close the browser

Example: Create custom workflows that become callable tools in your chat conversations.

- Build visual workflows by connecting LLM nodes (for AI reasoning) and Tool nodes (for MCP tool execution)

- Publish workflows to make them available as @workflow_name tools in chat

- Chain complex multi-step processes into reusable, automated sequences
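As a rough illustration of the chaining idea, a workflow can be pictured as an ordered list of nodes where each node's output becomes the next node's input. This is only a sketch, not the project's implementation: `WorkflowNode`, `runWorkflow`, and the two stub nodes are hypothetical names, real LLM and MCP calls are replaced by plain functions, and everything is kept synchronous for brevity (a real runner would be asynchronous).

```typescript
// Hypothetical node shape: "llm" nodes stand for AI reasoning steps,
// "tool" nodes stand for MCP tool executions.
interface WorkflowNode {
  kind: "llm" | "tool";
  name: string;
  run: (input: string) => string;
}

interface WorkflowResult {
  output: string;
  trace: string[]; // "kind:name" of every node that ran, in order
}

// Runs the nodes in order, feeding each node's output into the next one.
function runWorkflow(nodes: WorkflowNode[], input: string): WorkflowResult {
  let current = input;
  const trace: string[] = [];
  for (const node of nodes) {
    current = node.run(current);
    trace.push(node.kind + ":" + node.name);
  }
  return { output: current, trace: trace };
}

// Stub nodes standing in for a model call and an MCP tool call.
const summarize: WorkflowNode = {
  kind: "llm",
  name: "summarize",
  run: (text) => "summary(" + text + ")",
};
const saveNote: WorkflowNode = {
  kind: "tool",
  name: "save-note",
  run: (text) => "saved:" + text,
};

const workflowResult = runWorkflow([summarize, saveNote], "meeting transcript");
// workflowResult.output is "saved:summary(meeting transcript)"
// workflowResult.trace is ["llm:summarize", "tool:save-note"]
```

Keeping a trace of executed nodes is one simple way to make a multi-step run inspectable after the fact.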

Example: Create specialized AI agents with custom instructions and tool access.

- Define custom agents with specific system prompts and available tools

- Easily invoke agents in chat using @agent_name

- Build task-specific assistants like a GitHub Manager agent with issue/PR tools and project context

For instance, create a GitHub Manager agent by:

- Providing GitHub tools (issue/PR creation, comments, queries)

- Adding project details to the system prompt

- Calling it with @github_manager to manage your repository
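To make the pieces of an agent concrete, here is a minimal sketch of an agent definition and of looking it up from an @mention. The `AgentDefinition` shape, the tool names, the repository name in the prompt, and both helper functions are assumptions made for illustration; they are not taken from the project's code.

```typescript
// Hypothetical agent shape: a name to mention, a system prompt, and allowed tools.
interface AgentDefinition {
  name: string; // invoked in chat as @name
  systemPrompt: string;
  toolNames: string[];
}

const agents: AgentDefinition[] = [
  {
    name: "github_manager",
    systemPrompt: "You manage issues and pull requests for the example-org/example-repo project.",
    toolNames: ["create_issue", "create_pull_request", "add_comment", "search_issues"],
  },
];

// Collects names written as @name at the start of the message or after whitespace,
// so the "@" inside an email address is not treated as a mention.
function extractMentions(message: string): string[] {
  const pattern = /(?:^|\s)@([A-Za-z0-9_-]+)/g;
  const names: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(message)) !== null) {
    names.push(match[1]);
  }
  return names;
}

// Returns the first available agent that the message mentions, if any.
function findMentionedAgent(
  message: string,
  available: AgentDefinition[],
): AgentDefinition | undefined {
  const mentioned = extractMentions(message);
  return available.filter((agent) => mentioned.indexOf(agent.name) !== -1)[0];
}
```

With this sketch, `findMentionedAgent("@github_manager list open issues", agents)` returns the GitHub Manager definition, whose system prompt and tool list could then be applied to that response.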

This demo showcases a realtime voice-based chatbot assistant built with OpenAI's new Realtime API — now extended with full MCP tool integration. Talk to the assistant naturally, and watch it execute tools in real time.

Quickly call a tool during chat by typing @toolname.

No need to memorize — just type @ and pick from the list!

Tool Selection vs. Mentions (@) — When to Use What:

- Tool Selection: Make frequently used tools always available to the LLM across all chats. Great for convenience and maintaining consistent context over time.

- Mentions (@): Temporarily bind only the mentioned tools for that specific response. Since only the mentioned tools are sent to the LLM, this saves tokens and can improve speed and accuracy.

Each method has its own strengths — use them together to balance efficiency and performance.
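The difference between the two methods can be sketched in a few lines. The example below assumes mentions are written as `@tool('name')` or `@mcp("name")`, as in the sample prompt earlier; the `ToolRef` type and both functions are hypothetical and only illustrate the described behavior.

```typescript
interface ToolRef {
  kind: "tool" | "mcp";
  name: string;
}

// Extracts mentions written like @tool('web-search') or @mcp("playwright").
function parseToolMentions(message: string): ToolRef[] {
  const pattern = /@(tool|mcp)\((['"])(.+?)\2\)/g;
  const refs: ToolRef[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(message)) !== null) {
    refs.push({ kind: match[1] as ToolRef["kind"], name: match[3] });
  }
  return refs;
}

// If the message mentions tools, only those are bound for this response;
// otherwise the user's standing tool selection is used.
function toolsForResponse(message: string, selected: ToolRef[]): ToolRef[] {
  const mentioned = parseToolMentions(message);
  return mentioned.length > 0 ? mentioned : selected;
}
```

Under these assumptions, a message containing `@tool('web-search')` sends a single tool definition to the model even if many tools are selected, which is where the token savings come from.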

You can also create tool presets by selecting only the MCP servers or tools you need. Switch between presets instantly with a click — perfect for organizing tools by task or workflow.

Control how tools are used in each chat with Tool Choice Mode — switch anytime with ⌘P.

- Auto: The model automatically calls tools when needed.

- Manual: The model will ask for your permission before calling a tool.

- None: Tool usage is disabled completely.

This lets you flexibly choose between autonomous, guided, or tool-free interaction depending on the situation.
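One way to picture the three modes is as a small decision function that is consulted whenever the model asks to call a tool. The type and function names below are hypothetical and the sketch is not the project's code.

```typescript
type ToolChoiceMode = "auto" | "manual" | "none";

type ToolCallDecision = "run" | "ask-user" | "skip";

// Maps the chat's mode to what happens when the model requests a tool call.
function decideToolCall(mode: ToolChoiceMode): ToolCallDecision {
  switch (mode) {
    case "auto":
      return "run"; // call the tool without asking
    case "manual":
      return "ask-user"; // wait for the user's permission first
    case "none":
      return "skip"; // tool usage is disabled
  }
}

// In "none" mode no tool definitions need to be sent to the model at all.
function toolsToSend(mode: ToolChoiceMode, toolNames: string[]): string[] {
  return mode === "none" ? [] : toolNames;
}
```

Modeling the mode as a string union lets the compiler flag any place that forgets to handle one of the three cases.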

Built-in web search powered by Exa AI. Search the web with semantic AI and extract content from URLs directly in your chats.

- Optional: Add EXA_API_KEY to .env to enable web search

- Free Tier: 1,000 requests/month at no cost, no credit card required

- Easy Setup: Get your API key instantly at dashboard.exa.ai

A simple JS execution tool is also included.

Interactive Tables: Create feature-rich data tables with advanced functionality:

- Sorting & Filtering: Sort by any column, filter data in real-time

- Search & Highlighting: Global search with automatic text highlighting

- Export Options: Export to CSV or Excel format with lazy-loaded libraries

- Column Management: Show/hide columns with visibility controls

- Pagination: Handle large datasets with built-in pagination

- Data Type Support: Proper formatting for strings, numbers, dates, and booleans

Chart Generation: Visualize data with various chart types (bar, line, pie charts)

Additionally, many other tools are provided, such as an HTTP client for API requests and more.

…and there's even more waiting for you. Try it out and see what else it can do!

This project uses pnpm as the recommended package manager.

# If you don't have pnpm:

npm install -g pnpm

# 1. Install dependencies

pnpm i

# 2. Enter only the LLM PROVIDER API key(s) you want to use in the .env file at the project root.

# Example: The app works with just OPENAI_API_KEY filled in.

# (The .env file is automatically created when you run pnpm i.)

# 3. Build and start all services (including PostgreSQL) with Docker Compose

pnpm docker-compose:up

pnpm i

# (Optional) Start a local PostgreSQL instance

# If you already have your own PostgreSQL running, you can skip this step.

# In that case, make sure to update the PostgreSQL URL in your .env file.

pnpm docker:pg

# Enter required information in the .env file

# The .env file is created automatically. Just fill in the required values.

# For the fastest setup, provide at least one LLM provider's API key (e.g., OPENAI_API_KEY, ANTHROPIC_API_KEY, GOOGLE_GENERATIVE_AI_API_KEY) and the PostgreSQL URL you want to use.

pnpm build:local && pnpm start

# (Recommended for most cases. Ensures correct cookie settings.)

# For development mode with hot-reloading and debugging, you can use:

# pnpm dev

Alternative: Use Docker Compose for DB only (run app via pnpm)

# Start Postgres only via compose

# Ensure your .env includes: POSTGRES_USER, POSTGRES_PASSWORD, POSTGRES_DB matching POSTGRES_URL

docker compose -f docker/compose.yml up -d postgres

# Apply migrations

pnpm db:migrate

# Run app locally

pnpm dev # or: pnpm build && pnpm start

Open http://localhost:3000 in your browser to get started.
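If the app does not start, a quick check of the values the setup steps ask for can help. The sketch below is a hypothetical helper, not part of the project: it takes the environment as a plain object, uses the variable names from the project's .env template, and treats empty values and the `****` placeholder as missing. It deliberately ignores `OLLAMA_BASE_URL`, so an Ollama-only setup would need that check adjusted.

```typescript
type Env = Record<string, string | undefined>;

// Provider API key names as they appear in the .env template.
const PROVIDER_KEYS: string[] = [
  "OPENAI_API_KEY",
  "ANTHROPIC_API_KEY",
  "GOOGLE_GENERATIVE_AI_API_KEY",
  "XAI_API_KEY",
  "OPENROUTER_API_KEY",
];

// Treats undefined, empty values, and the "****" placeholder as not filled in.
function isFilled(value: string | undefined): boolean {
  if (value === undefined) return false;
  const trimmed = value.trim();
  return trimmed !== "" && trimmed !== "****";
}

// Returns a list of human-readable problems; an empty list means the basics are set.
function checkEnv(env: Env): string[] {
  const problems: string[] = [];
  if (!PROVIDER_KEYS.some((key) => isFilled(env[key]))) {
    problems.push("Set at least one LLM provider API key.");
  }
  if (!isFilled(env["POSTGRES_URL"])) {
    problems.push("Set POSTGRES_URL.");
  }
  return problems;
}
```

In a Node.js script this could be called as `checkEnv(process.env)` after the .env file has been loaded.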

The pnpm i command generates a .env file. Add your API keys there.

# === LLM Provider API Keys ===

# You only need to enter the keys for the providers you plan to use

GOOGLE_GENERATIVE_AI_API_KEY=****

OPENAI_API_KEY=****

XAI_API_KEY=****

ANTHROPIC_API_KEY=****

OPENROUTER_API_KEY=****

OLLAMA_BASE_URL=http://localhost:11434/api

# Secret for Better Auth (generate with: npx @better-auth/cli@latest secret)

BETTER_AUTH_SECRET=****

# (Optional)

# URL for Better Auth (the URL you access the app from)

BETTER_AUTH_URL=

# === Database ===

# If you don't have PostgreSQL running locally, start it with: pnpm docker:pg

POSTGRES_URL=postgres://your_username:your_password@localhost:5432/your_database_name

# (Optional)

# === Tools ===

# Exa AI for web search and content extraction (optional, but recommended for @web and research features)

EXA_API_KEY=your_exa_api_key_here

# Whether to use file-based MCP config (default: false)

FILE_BASED_MCP_CONFIG=false

# (Optional)

# === OAuth Settings ===

# Fill in these values only if you want to enable Google/GitHub/Microsoft login

#GitHub

GITHUB_CLIENT_ID=

GITHUB_CLIENT_SECRET=

#Google

GOOGLE_CLIENT_ID=

GOOGLE_CLIENT_SECRET=

# Set to 1 to force account selection

GOOGLE_FORCE_ACCOUNT_SELECTION=

# Microsoft

MICROSOFT_CLIENT_ID=

MICROSOFT_CLIENT_SECRET=

# Optional Tenant Id

MICROSOFT_TENANT_ID=

# Set to 1 to force account selection

MICROSOFT_FORCE_ACCOUNT_SELECTION=

# Set this to 1 to disable user sign-ups.

DISABLE_SIGN_UP=

# Set this to 1 to disallow adding MCP servers.

NOT_ALLOW_ADD_MCP_SERVERS=

Step-by-step setup guides for running and configuring better-chatbot.

- How to add and configure MCP servers in your environment

- How to self-host the chatbot using Docker, including environment configuration.

- Deploy the chatbot to Vercel with simple setup steps for production use.

- Personalize your chatbot experience with custom system prompts, user preferences, and MCP tool instructions

- Configure Google, GitHub, and Microsoft OAuth for secure user login support.

- Add OpenAI-compatible AI providers

- Comprehensive end-to-end testing with Playwright including multi-user scenarios, agent visibility testing, and CI/CD integration

- Open lightweight popup chats for quick side questions or testing — separate from your main thread.

Planned features coming soon to better-chatbot:

- [ ] File Attach & Image Generation

- [ ] Collaborative Document Editing (like OpenAI Canvas: user & assistant co-editing)

- [ ] RAG (Retrieval-Augmented Generation)

- [ ] Web-based Compute (with WebContainers integration)

💡 If you have suggestions or need specific features, please create an issue!

We welcome all contributions! Bug reports, feature ideas, code improvements — everything helps us build the best local AI assistant.

⚠️ Please read our Contributing Guide before submitting any Pull Requests or Issues. This helps us work together more effectively and saves time for everyone.

For detailed contribution guidelines, please see our Contributing Guide.

Language Translations: Help us make the chatbot accessible to more users by adding new language translations. See language.md for instructions on how to contribute translations.

Let's build it together 🚀

Connect with the community, ask questions, and get support on our official Discord server!


Alternative AI tools for better-chatbot

Similar Open Source Tools


actionbook

Actionbook is a browser action engine designed for AI agents, providing up-to-date action manuals and DOM structure to enable instant website operations without guesswork. It offers faster execution, token savings, resilient automation, and universal compatibility, making it ideal for building reliable browser agents. Actionbook integrates seamlessly with AI coding assistants and offers three integration methods: CLI, MCP Server, and JavaScript SDK. The tool is well-documented and actively developed in a monorepo setup using pnpm workspaces and Turborepo.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

eliza

Eliza is a versatile AI agent operating system designed to support various models and connectors, enabling users to create chatbots, autonomous agents, handle business processes, create video game NPCs, and engage in trading. It offers multi-agent and room support, document ingestion and interaction, retrievable memory and document store, and extensibility to create custom actions and clients. Eliza is easy to use and provides a comprehensive solution for AI agent development.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

preswald

Preswald is a full-stack platform for building, deploying, and managing interactive data applications in Python. It simplifies the process by combining ingestion, storage, transformation, and visualization into one lightweight SDK. With Preswald, users can connect to various data sources, customize app themes, and easily deploy apps locally. The platform focuses on code-first simplicity, end-to-end coverage, and efficiency by design, making it suitable for prototyping internal tools or deploying production-grade apps with reduced complexity and cost.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

Alice

Alice is an open-source AI companion designed to live on your desktop, providing voice interaction, intelligent context awareness, and powerful tooling. More than a chatbot, Alice is emotionally engaging and deeply useful, assisting with daily tasks and creative work. Key features include voice interaction with natural-sounding responses, memory and context management, vision and visual output capabilities, computer use tools, function calling for web search and task scheduling, wake word support, dedicated Chrome extension, and flexible settings interface. Technologies used include Vue.js, Electron, OpenAI, Go, hnswlib-node, and more. Alice is customizable and offers a dedicated Chrome extension, wake word support, and various tools for computer use and productivity tasks.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

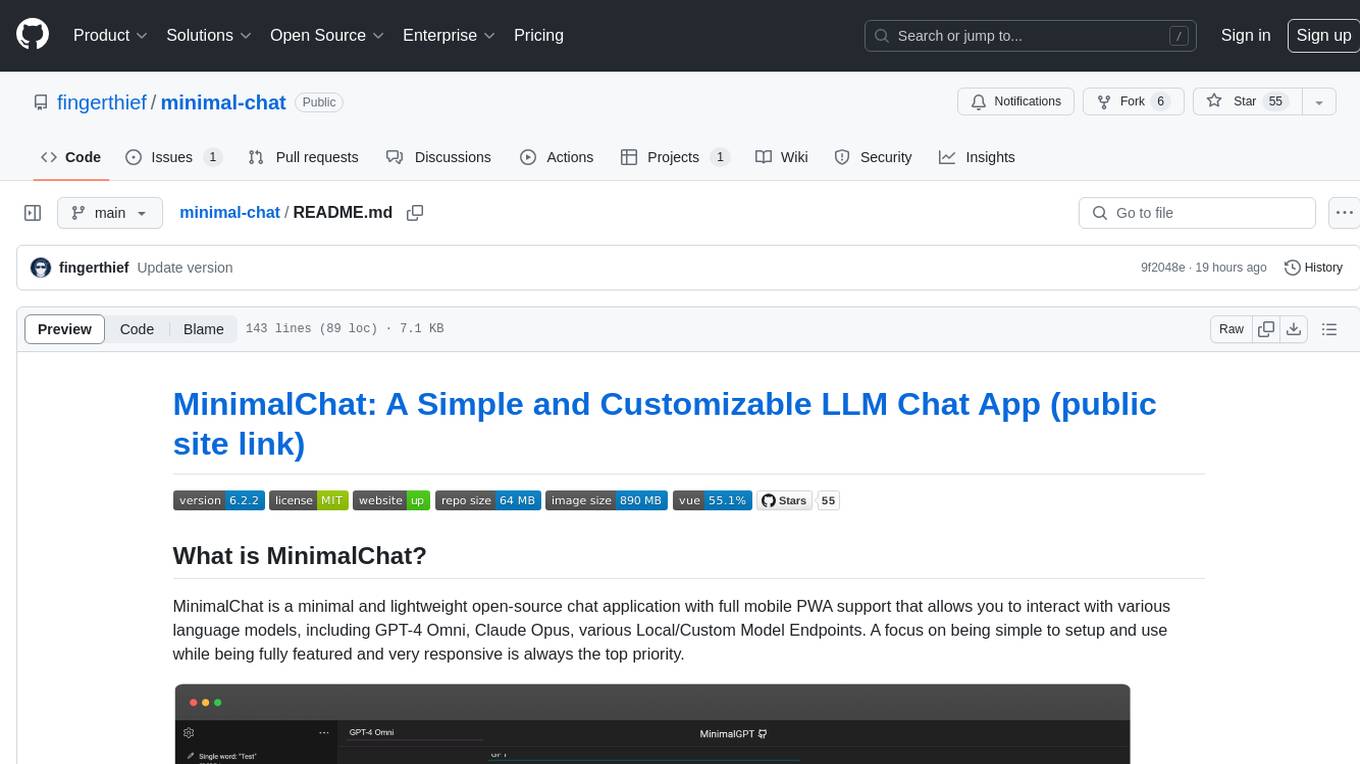

minimal-chat

MinimalChat is a minimal and lightweight open-source chat application with full mobile PWA support that allows users to interact with various language models, including GPT-4 Omni, Claude Opus, and various Local/Custom Model Endpoints. It focuses on simplicity in setup and usage while being fully featured and highly responsive. The application supports features like fully voiced conversational interactions, multiple language models, markdown support, code syntax highlighting, DALL-E 3 integration, conversation importing/exporting, and responsive layout for mobile use.

word-GPT-Plus

Word GPT Plus seamlessly integrates AI models into Microsoft Word, allowing users to generate, translate, summarize, and polish text directly within their documents. The tool supports multiple AI models, offers built-in templates for various text-related tasks, and provides customization options for user preferences. Users can install the tool through a hosted service, Docker deployment, or self-hosting, and can easily fill in API keys to access different AI services. Word GPT Plus enhances writing workflows by providing AI-powered assistance without leaving the Word environment.

CursorLens

Cursor Lens is an open-source tool that acts as a proxy between Cursor and various AI providers, logging interactions and providing detailed analytics to help developers optimize their use of AI in their coding workflow. It supports multiple AI providers, captures and logs all requests, provides visual analytics on AI usage, allows users to set up and switch between different AI configurations, offers real-time monitoring of AI interactions, tracks token usage, estimates costs based on token usage and model pricing. Built with Next.js, React, PostgreSQL, Prisma ORM, Vercel AI SDK, Tailwind CSS, and shadcn/ui components.

For similar tasks

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.


openops

OpenOps is a No-Code FinOps automation platform designed to help organizations reduce cloud costs and streamline financial operations. It offers customizable workflows for automating key FinOps processes, comes with its own Excel-like database and visualization system, and enables collaboration between different teams. OpenOps integrates seamlessly with major cloud providers, third-party FinOps tools, communication platforms, and project management tools, providing a comprehensive solution for efficient cost-saving measures implementation.

Sage

Sage is a production-ready, modular, and intelligent multi-agent orchestration framework for complex problem solving. It intelligently breaks down complex tasks into manageable subtasks through seamless agent collaboration. Sage provides Deep Research Mode for comprehensive analysis and Rapid Execution Mode for quick task completion. It offers features like intelligent task decomposition, agent orchestration, extensible tool system, dual execution modes, interactive web interface, advanced token tracking, rich configuration, developer-friendly APIs, and robust error recovery mechanisms. Sage supports custom workflows, multi-agent collaboration, custom agent development, agent flow orchestration, rule preferences system, message manager for smart token optimization, task manager for comprehensive state management, advanced file system operations, advanced tool system with plugin architecture, token usage & cost monitoring, and rich configuration system. It also includes real-time streaming & monitoring, advanced tool development, error handling & reliability, performance monitoring, MCP server integration, and security features.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

DeepBI

DeepBI is an AI-native data analysis platform that leverages the power of large language models to explore, query, visualize, and share data from any data source. Users can use DeepBI to gain data insight and make data-driven decisions.

client

DagsHub is a platform for machine learning and data science teams to build, manage, and collaborate on their projects. With DagsHub you can: 1. Version code, data, and models in one place. Use the free provided DagsHub storage or connect it to your cloud storage 2. Track Experiments using Git, DVC or MLflow, to provide a fully reproducible environment 3. Visualize pipelines, data, and notebooks in an interactive, diff-able, and dynamic way 4. Label your data directly on the platform using Label Studio 5. Share your work with your team members 6. Stream and upload your data in an intuitive and easy way, while preserving versioning and structure. DagsHub is built firmly around open, standard formats for your project. In particular: * Git * DVC * MLflow * Label Studio * Standard data formats like YAML, JSON, CSV Therefore, you can work with DagsHub regardless of your chosen programming language or frameworks.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.