evi-run

Ready-to-use customizable multi-agent AI system with Telegram integration. Combines plug-and-play simplicity with framework-level flexibility.

Stars: 74

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports private, free, and pay usage modes. NSFW mode and automatic limit orders are planned, and the task scheduler is already completed. The technology stack includes Python 3.11, the OpenAI Agents SDK, Model Context Protocol, the Solana RPC API, the Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

README:

Ready-to-use customizable multi-agent AI system that combines plug-and-play simplicity with framework-level flexibility



evi-run is a powerful, production-ready multi-agent AI system that bridges the gap between out-of-the-box solutions and custom AI frameworks. Built on Python with the OpenAI Agents SDK, it is operated through an intuitive Telegram bot interface and provides enterprise-level AI capabilities.

- 🚀 Instant Deployment - Get your AI system running in minutes, not hours

- 🔧 Ultimate Flexibility - Framework-level customization capabilities

- 📊 Built-in Analytics - Comprehensive usage tracking and insights

- 💬 Telegram Integration - Seamless user experience through familiar messaging interface

- 🏗️ Scalable Architecture - Grows with your needs from prototype to production

Core features:

- Memory Management - Context control and long-term memory

- Knowledge Integration - Dynamic knowledge base expansion

- Task Scheduling - Scheduled and deferred task execution (once, daily, or at a fixed interval)

- Multi-Agent Orchestration - Complex task decomposition and execution

- Custom Agent Creation - Build specialized AI agents for specific tasks

- Deep Research - Multi-step investigation and analysis

- Web Intelligence - Smart internet search and data extraction

- Document Processing - Handle PDFs, images, and various file formats

- Image Generation - AI-powered visual content creation

- DEX Analytics - Real-time decentralized exchange monitoring

- Solana Token Swap - Easy, fast and secure token swap

Usage modes:

- Private Mode - Personal use by the bot owner only

- Free Mode - Public access with configurable usage limits

- Pay Mode - Monetized system with balance management and payments

Roadmap:

- NSFW Mode - Unrestricted topic exploration and content generation

- Task Scheduler - Automated agent task planning and execution (✅ completed)

- Automatic Limit Orders - Smart trading with automated take-profit and stop-loss functionality
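The Task Scheduling feature runs tasks once, daily, or at an interval. As a rough illustration only, the sketch below computes the next run time for each of those three kinds. The Schedule record and the next_run function are hypothetical names, not evi-run's actual scheduler, and all times are assumed to share one timezone (or all be naive).

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass
class Schedule:
    """Hypothetical schedule record: kind is 'once', 'daily', or 'interval'."""
    kind: str
    run_at: Optional[datetime] = None    # used by 'once'
    at_time: Optional[time] = None       # used by 'daily'
    every: Optional[timedelta] = None    # used by 'interval'
    last_run: Optional[datetime] = None  # used by 'interval'


def next_run(s: Schedule, now: datetime) -> Optional[datetime]:
    """Return when the task should fire next, or None if it never will again."""
    if s.kind == "once":
        # A one-off task fires at run_at only if that moment is still ahead.
        return s.run_at if s.run_at is not None and s.run_at >= now else None
    if s.kind == "daily":
        # Today's slot if it has not passed yet, otherwise the same time tomorrow.
        candidate = datetime.combine(now.date(), s.at_time, tzinfo=now.tzinfo)
        return candidate if candidate >= now else candidate + timedelta(days=1)
    if s.kind == "interval":
        # First run immediately; afterwards one interval after the previous run,
        # or right away if that moment is already overdue.
        if s.last_run is None:
            return now
        return max(s.last_run + s.every, now)
    raise ValueError(f"unknown schedule kind: {s.kind!r}")
```

A polling worker could call next_run for each stored task and hand the due ones to the agent; recording last_run after every interval run keeps the cadence stable.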

| Component | Technology |
|---|---|
| Core Language | Python 3.11 |
| AI Framework | OpenAI Agents SDK |
| Communication | Model Context Protocol |
| Blockchain | Solana RPC API |
| Interface | Telegram Bot API |
| Database | PostgreSQL |
| Cache | Redis |
| Deployment | Docker & Docker Compose |

Get evi-run running in under 5 minutes with our streamlined Docker setup:

System Requirements:

- Ubuntu 22.04 server (make sure the server's location is not blocked by OpenAI)

- Root or sudo access

- Internet connection

Required API Keys & Tokens:

- Telegram Bot Token - Create bot via @BotFather

- OpenAI API Key - Get from OpenAI Platform

- Your Telegram ID - Get from @userinfobot

1. Download and prepare the project:

# Navigate to installation directory
cd /opt
# Clone the project from GitHub (writing to /opt needs root or sudo)
sudo git clone https://github.com/pipedude/evi-run.git
# Set proper permissions
sudo chown -R $USER:$USER evi-run
cd evi-run

2. Configure environment variables:

# Copy example configuration
cp .env.example .env
# Edit configuration files
nano .env        # Add your API keys and tokens
nano config.py   # Set your Telegram ID and preferences

3. Run automated Docker setup:

# Make setup script executable
chmod +x docker_setup_en.sh
# Run Docker installation
./docker_setup_en.sh

4. Launch the system:

# Build and start containers
docker compose up --build -d

5. Verify installation:

# Check running containers
docker compose ps
# View logs
docker compose logs -f

🎉 That's it! Your evi-run system is now live. Open your Telegram bot and start chatting!
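If you want to script the verification step, one option is to parse the output of docker compose ps --format json. The sketch below is an illustration, not part of evi-run. It assumes the output is either a single JSON array (older Docker Compose v2 releases) or one JSON object per line (newer releases), and that each entry carries Service and State fields.

```python
import json
from typing import Dict, List


def parse_compose_ps(output: str) -> List[Dict]:
    """Parse `docker compose ps --format json` output in array or line-per-object form."""
    text = output.strip()
    if not text:
        return []
    if text.startswith("["):
        # Older releases print one JSON array containing every container.
        return json.loads(text)
    # Newer releases print one JSON object per line.
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def not_running(output: str) -> List[str]:
    """Return the service names whose State is anything other than 'running'."""
    return [c["Service"] for c in parse_compose_ps(output) if c.get("State") != "running"]
```

Pass it the output of docker compose ps --all --format json. Without --all, stopped containers are not listed, so they would never be reported.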

Key settings in .env:

# REQUIRED: Telegram Bot Token from @BotFather
TELEGRAM_BOT_TOKEN=your_bot_token_here

# REQUIRED: OpenAI API Key
API_KEY_OPENAI=your_openai_api_key

Key settings in config.py:

# REQUIRED: Your Telegram User ID
ADMIN_ID = 123456789

# Usage Mode: 'private', 'free', or 'pay'
TYPE_USAGE = 'private'

| Mode | Description | Best For |
|---|---|---|
| Private | Bot owner only | Personal use, development, testing |
| Free | Public access with limits | Community projects, demos |
| Pay | Monetized with balance system | Commercial applications, SaaS |
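The three usage modes differ mainly in who may send requests. The following is a minimal sketch of such a gate, assuming TYPE_USAGE and ADMIN_ID as set in config.py. The User record, FREE_DAILY_LIMIT, PRICE_PER_REQUEST, and the rule that the owner is never limited are all hypothetical, and evi-run's real checks may differ.

```python
from dataclasses import dataclass
from decimal import Decimal

ADMIN_ID = 123456789                  # placeholder value, as in config.py
FREE_DAILY_LIMIT = 20                 # hypothetical free-mode quota per user per day
PRICE_PER_REQUEST = Decimal("0.01")   # hypothetical pay-mode charge per request
MODES = {"private", "free", "pay"}


@dataclass
class User:
    telegram_id: int
    requests_today: int = 0
    balance: Decimal = Decimal("0")


def can_use(user: User, type_usage: str) -> bool:
    """Decide whether a user may send a request under the given usage mode."""
    if type_usage not in MODES:
        raise ValueError(f"unknown TYPE_USAGE: {type_usage!r}")
    if user.telegram_id == ADMIN_ID:
        return True  # assumption: the bot owner is never limited
    if type_usage == "private":
        return False  # a private bot serves its owner only
    if type_usage == "free":
        return user.requests_today < FREE_DAILY_LIMIT
    return user.balance >= PRICE_PER_REQUEST  # pay mode
```

Decimal is used for the balance so that many small charges do not accumulate floating-point error.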

Create engaging AI personalities for entertainment, education, or brand representation.

Deploy intelligent support bots that understand context and provide helpful solutions.

Build your own AI companion for productivity, research, and daily tasks.

Automate data processing, generate insights, and create reports from complex datasets.

Launch trading agents for DEX with real-time analytics.

Leverage the framework to build specialized AI agents for any domain or industry.

By default, the system is configured to balance good performance with low running costs. For professional and specialized use cases, choose models deliberately, since the model determines both the quality of the results and the cost of each request.

For Deep Research and Complex Analysis:

- o3-deep-research - Most powerful deep research model for complex multi-step research tasks

- o4-mini-deep-research - Faster, more affordable deep research model

For maximum research capabilities using specialized deep research models:

1. Use o3-deep-research for the most powerful analysis in bot/agents_tools/agents_.py:

deep_agent = Agent(
    name="Deep Agent",
    model="o3-deep-research",  # Most powerful deep research model
    # ... instructions
)

2. Alternatively, use o4-mini-deep-research for cost-effective deep research:

deep_agent = Agent(
    name="Deep Agent",
    model="o4-mini-deep-research",  # Faster, more affordable deep research
    # ... instructions
)

3. Update the Main Agent instructions to prevent summarization:

- Locate the main agent instructions in the same file

- Ensure the instruction includes: "VERY IMPORTANT! Do not generalize the answers received from the deep_knowledge tool, especially for deep research, provide them to the user in full, in the user's language."

For the complete list of available models, capabilities, and pricing, see the OpenAI Models Documentation.

evi-run uses the Agents library with a multi-agent architecture where specialized agents are integrated as tools into the main agent. All agent configuration is centralized in:

bot/agents_tools/agents_.py

1. Create the Agent

# Add after existing agents
# Agent, ModelSettings and WebSearchTool come from the agents package;
# Reasoning comes from openai.types.shared
custom_agent = Agent(
    name="Custom Agent",
    instructions="Your specialized agent instructions here...",
    model="gpt-5-mini",
    model_settings=ModelSettings(
        reasoning=Reasoning(effort="low"),
        extra_body={"text": {"verbosity": "medium"}},
    ),
    tools=[WebSearchTool(search_context_size="medium")],  # Optional tools
)

2. Register as Tool in Main Agent

# In the create_main_agent function, add to the main_agent tools list:
main_agent = Agent(
    # ... existing configuration
    tools=[
        # ... existing tools
        custom_agent.as_tool(
            tool_name="custom_function",
            tool_description="Description of what this agent does",
        ),
    ],
)

Main Agent (Evi) Personality:

Edit the detailed instructions in the main_agent instructions block:

- Character profile and personality

- Expertise areas

- Communication style

- Behavioral patterns

Agent Parameters:

- name: Agent identifier

- instructions: System prompt and behavior

- model: OpenAI model (gpt-5, gpt-5-mini, etc.)

- model_settings: Model settings (reasoning, extra_body, etc.)

- tools: Available tools (WebSearchTool, FileSearchTool, etc.)

- mcp_servers: MCP server connections

evi-run supports non-OpenAI models through the Agents library. There are several ways to integrate other LLM providers:

Method 1: LiteLLM Integration (Recommended)

Install the LiteLLM dependency:

pip install "openai-agents[litellm]"

Use models with the litellm/ prefix:

# Claude via LiteLLM
claude_agent = Agent(
    name="Claude Agent",
    instructions="Your instructions here...",
    model="litellm/anthropic/claude-3-5-sonnet-20240620",
    # ... other parameters
)

# Gemini via LiteLLM
gemini_agent = Agent(
    name="Gemini Agent",
    instructions="Your instructions here...",
    model="litellm/gemini/gemini-2.5-flash-preview-04-17",
    # ... other parameters
)

Method 2: LitellmModel Class

from agents import Agent
from agents.extensions.models.litellm_model import LitellmModel

custom_agent = Agent(
    name="Custom Agent",
    instructions="Your instructions here...",
    model=LitellmModel(model="anthropic/claude-3-5-sonnet-20240620", api_key="your-api-key"),
    # ... other parameters
)

Method 3: Global OpenAI Client

from agents import set_default_openai_api, set_default_openai_client
from openai import AsyncOpenAI

# For providers with an OpenAI-compatible API
set_default_openai_client(AsyncOpenAI(
    base_url="https://api.provider.com/v1",
    api_key="your-api-key",
))
# Most such providers implement Chat Completions but not the Responses API
set_default_openai_api("chat_completions")

Documentation & Resources:

- Model Configuration Guide - Complete setup documentation

- LiteLLM Integration - Detailed LiteLLM usage

- Supported Models - Full list of LiteLLM providers
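Since each agent carries its own model string, one system can combine providers, for example default OpenAI models for most agents and a litellm/-prefixed model for another. The hypothetical helper below only illustrates how such names split into a routing prefix and a provider-specific model name. It assumes the first path segment is the prefix and that unprefixed names go to OpenAI; it is not the Agents SDK's own lookup code.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def split_model(model: str) -> Tuple[Optional[str], str]:
    """Split 'prefix/rest' on the first slash; names without a slash have no prefix."""
    prefix, sep, rest = model.partition("/")
    return (prefix, rest) if sep else (None, model)


def group_by_route(agent_models: Dict[str, str]) -> Dict[str, List[str]]:
    """Group agent names by routing prefix, treating unprefixed models as 'openai'."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for agent, model in agent_models.items():
        prefix, _ = split_model(model)
        groups[prefix or "openai"].append(agent)
    return dict(groups)
```

The provider-specific remainder (anthropic/claude-3-5-sonnet-20240620) keeps its own slash, which is why the split happens only at the first one.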

Important Notes:

- Most LLM providers don't support the Responses API yet

- If not using OpenAI, consider disabling tracing: set_tracing_disabled(True)

- You can mix different providers for different agents

- Focused Instructions: Each agent should have a clear, specific purpose

- Model Selection: Use appropriate models for complexity (gpt-5 vs gpt-5-mini)

- Tool Integration: Leverage WebSearchTool, FileSearchTool, and MCP servers

- Naming Convention: Use descriptive tool names for main agent clarity

- Testing: Test agent responses in isolation before integration
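Tool names end up as function names in the model request, and the OpenAI API only accepts function names made of letters, digits, underscores and dashes, at most 64 characters long. A quick check before registering an agent with as_tool can catch a bad name early. The helper below is a hypothetical addition, not part of evi-run or the Agents SDK.

```python
import re

# Allowed characters for OpenAI function names, length 1 to 64.
TOOL_NAME = re.compile(r"[A-Za-z0-9_-]{1,64}")


def check_tool_name(name: str) -> str:
    """Return the name unchanged if it is valid, otherwise raise ValueError."""
    if not TOOL_NAME.fullmatch(name):
        raise ValueError(
            f"invalid tool name {name!r}: use 1-64 letters, digits, '_' or '-'"
        )
    return name
```

For example, check_tool_name("custom_function") passes, while a name containing spaces or dots is rejected before any request is made.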

Customizing Bot Interface Messages:

All bot messages and interface text are stored in the I18N directory and can be fully customized to match your needs:

I18N/
├── factory.py     # Translation loader
├── en/
│   └── txt.ftl    # English messages
└── ru/
    └── txt.ftl    # Russian messages

Message Files Format:

The bot uses the Fluent localization format (.ftl files) for multi-language support.

To customize messages:

- Edit the appropriate .ftl file in I18N/en/ or I18N/ru/

- Restart the bot container for changes to take effect

- Add new languages by creating new subdirectories with txt.ftl files
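Because each language is simply a subdirectory holding txt.ftl, the available languages can be discovered from the file system. The sketch below does that and resolves single-line Fluent messages of the form `key = text { $var }`. It is a deliberately simplified stand-in, not evi-run's factory.py: real Fluent files also use multiline values, attributes, terms, and selectors, which need a full Fluent implementation such as the fluent.runtime package. The message IDs used in the example are hypothetical.

```python
import re
from pathlib import Path
from typing import Dict

# Matches simple variable placeables such as { $name }.
PLACEABLE = re.compile(r"\{\s*\$([A-Za-z][\w-]*)\s*\}")


def discover_locales(i18n_dir: Path) -> Dict[str, Path]:
    """Map each language code (subdirectory name) to its txt.ftl file."""
    return {p.parent.name: p for p in sorted(i18n_dir.glob("*/txt.ftl"))}


def load_messages(ftl_text: str) -> Dict[str, str]:
    """Parse one-line `id = value` messages, skipping comments, blanks and indented lines."""
    messages: Dict[str, str] = {}
    for line in ftl_text.splitlines():
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        key, sep, value = line.partition("=")
        # Indented lines are continuations or attributes, which this sketch ignores.
        if sep and not line[0].isspace():
            messages[key.strip()] = value.strip()
    return messages


def format_message(template: str, **args: object) -> str:
    """Substitute { $var } placeables; unknown variables are left as written."""
    return PLACEABLE.sub(lambda m: str(args.get(m.group(1), m.group(0))), template)
```

For the tree above, discover_locales(Path("I18N")) should return entries for en and ru, and a line such as `hello = Hello, { $name }!` formats to "Hello, Evi!" with name="Evi".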

evi-run includes comprehensive tracing and analytics capabilities through the OpenAI Agents SDK. The system automatically tracks all agent operations and provides detailed insights into performance and usage.

Automatic Tracking:

- Agent Runs - Each agent execution with timing and results

- LLM Generations - Model calls with inputs/outputs and token usage

- Function Calls - Tool usage and execution details

- Handoffs - Agent-to-agent interactions

- Audio Processing - Speech-to-text and text-to-speech operations

- Guardrails - Safety checks and validations

For ethical reasons, owners of public bots should either explicitly inform users that their interactions are traced, or disable tracing.

# Disable tracing in `bot/agents_tools/agents_.py`
from agents import set_tracing_disabled

set_tracing_disabled(True)

evi-run supports integration with 20+ monitoring and analytics platforms:

Popular Integrations:

- Weights & Biases - ML experiment tracking

- LangSmith - LLM application monitoring

- Arize Phoenix - AI observability

- Langfuse - LLM analytics

- AgentOps - Agent performance tracking

- Pydantic Logfire - Structured logging

Enterprise Solutions:

- Braintrust - AI evaluation platform

- MLflow - ML lifecycle management

- Portkey AI - AI gateway and monitoring

Docker Container Logs:

# View all logs
docker compose logs

# Follow specific service
docker compose logs -f bot

# Database logs
docker compose logs postgres_agent_db

# Filter by time
docker compose logs --since 1h bot

- Complete Tracing Guide - Full tracing documentation

- Analytics Integration List - All supported platforms

Bot not responding:

# Check bot container status
docker compose ps
docker compose logs bot

Database connection errors:

# Restart database
docker compose restart postgres_agent_db
docker compose logs postgres_agent_db

Memory issues:

# Check system resources
docker stats

Support:

- Community: Telegram Support Group

- Issues: GitHub Issues

- Telegram: @playa3000

Minimum requirements:

- CPU: 2 cores

- RAM: 2GB

- Storage: 10GB

- Network: Stable internet connection

Recommended requirements:

- CPU: 2+ cores

- RAM: 4GB+

- Storage: 20GB+ SSD

- Network: High-speed connection

Security recommendations:

- API Keys: Store securely in environment variables

- Database: Use strong passwords and restrict access

- Network: Configure firewalls and use HTTPS

- Updates: Keep dependencies and Docker images updated
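Following the API-key recommendation, keys can be read from the environment at startup, failing fast when one is missing and masking values before they reach logs. This is a minimal sketch that assumes the values from .env are exposed to the bot process as environment variables. The variable names match the .env example; require_env and mask are hypothetical helpers.

```python
import os
from typing import Dict, Iterable, Mapping, Optional

REQUIRED = ("TELEGRAM_BOT_TOKEN", "API_KEY_OPENAI")


def require_env(names: Iterable[str], env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the requested variables, raising if any is missing or empty."""
    env = os.environ if env is None else env
    names = list(names)  # allow generators, which would otherwise be consumed twice
    missing = [n for n in names if not env.get(n)]
    if missing:
        raise RuntimeError(f"missing required environment variables: {', '.join(missing)}")
    return {n: env[n] for n in names}


def mask(secret: str, visible: int = 4) -> str:
    """Hide all but the last few characters of a secret, e.g. for log lines."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Calling require_env(REQUIRED) once at startup surfaces a misconfigured deployment immediately instead of on the first user message.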

This project is licensed under the MIT License - see the LICENSE file for details.

We welcome contributions! Please see our Contributing Guidelines for details.

- Website: evi.run

- Contact: Alex Flash

- Community: Telegram Group

- X (Twitter): alexflash99

- Reddit: Alex Flash

Made with ❤️ by the evi-run team

⭐ Star this repository if evi-run helped you build amazing AI experiences! ⭐


Alternative AI tools for evi-run

Similar Open Source Tools


layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

claude-007-agents

Claude Code Agents is an open-source AI agent system designed to enhance development workflows by providing specialized AI agents for orchestration, resilience engineering, and organizational memory. It combines agents with specialized expertise across technologies, an AI system with organizational memory, and an agent orchestration system. The system includes features such as engineering excellence by design, advanced orchestration system, Task Master integration, live MCP integrations, professional-grade workflows, and organizational intelligence. It is suitable for solo developers, small teams, enterprise teams, and open-source projects. The system requires a one-time bootstrap setup for each project to analyze the tech stack, select optimal agents, create configuration files, set up Task Master integration, and validate system readiness.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven, prompt-driven, and focuses on prompt design, leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. The tool requires PostgreSQL database, OpenAI API access, and Python environment for setup. It supports various languages like Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity for scanning. FiniteMonkey is best suited for logic vulnerability mining in real projects, not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results with an average scan time of 2-3 hours for medium projects. The tool provides detailed scanning results guide and implementation tips for users.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

sandboxed.sh

sandboxed.sh is a self-hosted cloud orchestrator for AI coding agents that provides isolated Linux workspaces with Claude Code, OpenCode & Amp runtimes. It allows users to hand off entire development cycles, run multi-day operations unattended, and keep sensitive data local, for example when analyzing data against scientific literature. The tool features dual runtime support, mission control for remote agent management, isolated workspaces, a git-backed library, MCP registry, and multi-platform support with a web dashboard and iOS app.

octocode-mcp

Octocode is a methodology and platform that empowers AI assistants with the skills of a Senior Staff Engineer. It transforms how AI interacts with code by moving from 'guessing' based on training data to 'knowing' based on deep, evidence-based research. The ecosystem includes the Manifest for Research Driven Development, the MCP Server for code interaction, Agent Skills for extending AI capabilities, a CLI for managing agent capabilities, and comprehensive documentation covering installation, core concepts, tutorials, and reference materials.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

For similar tasks

inferable

Inferable is an open source platform that helps users build reliable LLM-powered agentic automations at scale. It offers a managed agent runtime, durable tool calling, zero network configuration, multiple language support, and is fully open source under the MIT license. Users can define functions, register them with Inferable, and create runs that utilize these functions to automate tasks. The platform supports Node.js/TypeScript, Go, .NET, and React, and provides SDKs, core services, and bootstrap templates for various languages.

CEO

CEO is an intuitive and modular AI agent framework designed for task automation. It provides a flexible environment for building agents with specific abilities and personalities, allowing users to assign tasks and interact with the agents to automate various processes. The framework supports multi-agent collaboration scenarios and offers functionalities like instantiating agents, granting abilities, assigning queries, and executing tasks. Users can customize agent personalities and define specific abilities using decorators, making it easy to create complex automation workflows.


Open-WebUI-Functions

Open-WebUI-Functions is a collection of Python-based functions that extend Open WebUI with custom pipelines, filters, and integrations. Users can interact with AI models, process data efficiently, and customize the Open WebUI experience. It includes features like custom pipelines, data processing filters, Azure AI support, N8N workflow integration, flexible configuration, secure API key management, and support for both streaming and non-streaming processing. The functions require an active Open WebUI instance, may need external AI services like Azure AI, and admin access for installation. Security features include automatic encryption of sensitive information like API keys. Pipelines include Azure AI Foundry, N8N, Infomaniak, and Google Gemini. Filters like Time Token Tracker measure response time and token usage. Integrations with Azure AI, N8N, Infomaniak, and Google are supported. Contributions are welcome, and the project is licensed under Apache License 2.0.

astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

easy-web-summarizer

A Python script leveraging advanced language models to summarize webpages and YouTube videos directly from URLs. It integrates with LangChain and ChatOllama for state-of-the-art summarization, providing detailed summaries for quick understanding of web-based documents. The tool offers a command-line interface for easy use and integration into workflows, with plans to add translation into different languages and streaming text output in the Gradio app. It can also be used via a web UI using the Gradio app. The script is dockerized for easy deployment and is open for contributions to enhance its functionality and capabilities.

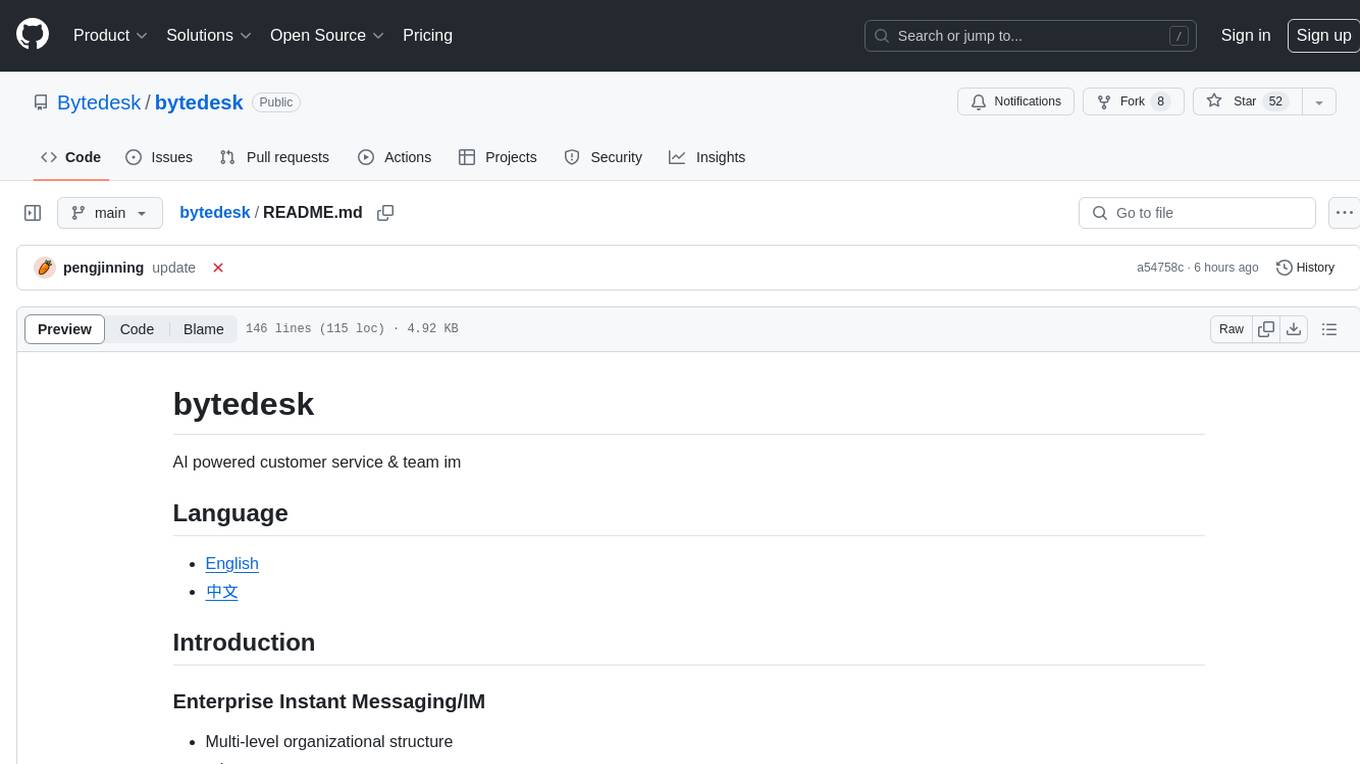

bytedesk

Bytedesk is an AI-powered customer service and team instant messaging tool that offers features like enterprise instant messaging, online customer service, large model AI assistant, and local area network file transfer. It supports multi-level organizational structure, role management, permission management, chat record management, seating workbench, work order system, seat management, data dashboard, manual knowledge base, skill group management, real-time monitoring, announcements, sensitive words, CRM, report function, and integrated customer service workbench services. The tool is designed for team use with easy configuration throughout the company, and it allows file transfer across platforms using WiFi/hotspots without the need for internet connection.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.