shimmy

⚡Local-first AI inference server with OpenAI API compatibility, auto-discovery, hot model swapping, and tool calling. Single-binary Rust solution for GGUF models with LoRA support. FREE now, FREE forever.

Stars: 392

Shimmy is a 5.1MB single-binary local inference server providing OpenAI-compatible endpoints for GGUF models. It offers fast, reliable AI inference with sub-second responses, zero configuration, and automatic port management. Perfect for developers seeking privacy, cost-effectiveness, speed, and easy integration with popular tools like VSCode and Cursor. Shimmy is designed to be invisible infrastructure that simplifies local AI development and deployment.

README:

Shimmy will be free forever. No asterisks. No "free for now." No pivot to paid.

Fast, reliable local AI inference. Shimmy provides OpenAI-compatible endpoints for GGUF models with comprehensive testing and automated quality assurance.

Shimmy is a 5.1MB single-binary local inference server that provides OpenAI API-compatible endpoints for GGUF models. It's designed to be the invisible infrastructure that just works.

| Metric | Shimmy | Ollama |
|---|---|---|
| Binary Size | 5.1MB 🏆 | 680MB |
| Startup Time | <100ms 🏆 | 5-10s |
| Memory Overhead | <50MB 🏆 | 200MB+ |
| OpenAI Compatibility | 100% 🏆 | Partial |
| Port Management | Auto 🏆 | Manual |
| Configuration | Zero 🏆 | Manual |

- Privacy: Your code stays on your machine

- Cost: No per-token pricing, unlimited queries

- Speed: Local inference = sub-second responses

- Integration: Works with VSCode, Cursor, Continue.dev out of the box

BONUS: First-class LoRA adapter support - from training to production API in 30 seconds.

# Install from crates.io (Linux, macOS, Windows)

cargo install shimmy

# Or download pre-built binary (Windows only)

curl -L -o shimmy.exe https://github.com/Michael-A-Kuykendall/shimmy/releases/latest/download/shimmy.exe

⚠️ Windows Security Notice: Windows Defender may flag the binary as a false positive. This is common with unsigned Rust executables. Recommended: Use `cargo install shimmy` instead, or add an exclusion for shimmy.exe in Windows Defender.

Shimmy auto-discovers models from:

- Hugging Face cache: `~/.cache/huggingface/hub/`
- Ollama models: `~/.ollama/models/`
- Local directory: `./models/`
- Environment: `SHIMMY_BASE_GGUF=path/to/model.gguf`
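The search order above can be pictured with a small sketch. This is not Shimmy's actual discovery code: the function names `candidate_dirs` and `discover_gguf` are hypothetical, and matching on the `.gguf` extension is an assumption (tools that store weights under other file names would need extra handling).

```python
import os
from pathlib import Path


def candidate_dirs(home, cwd):
    """Directories from the list above, in the order they are listed."""
    return [
        Path(home) / ".cache" / "huggingface" / "hub",
        Path(home) / ".ollama" / "models",
        Path(cwd) / "models",
    ]


def discover_gguf(home=None, cwd=None, env=None):
    """Return de-duplicated paths to *.gguf files found in the search locations."""
    home = Path.home() if home is None else home
    cwd = Path.cwd() if cwd is None else cwd
    env = os.environ if env is None else env

    found = []
    for directory in candidate_dirs(home, cwd):
        if directory.is_dir():
            # rglob walks subdirectories; sorted() keeps the result deterministic.
            found.extend(sorted(directory.rglob("*.gguf")))

    # An explicitly configured model is appended if the file exists.
    base = env.get("SHIMMY_BASE_GGUF")
    if base and Path(base).is_file():
        found.append(Path(base))

    # Drop duplicates while preserving the discovery order.
    unique = []
    seen = set()
    for path in found:
        resolved = path.resolve()
        if resolved not in seen:
            seen.add(resolved)
            unique.append(path)
    return unique
```

Calling `discover_gguf()` with no arguments scans the current user's home directory and working directory; passing `home`, `cwd`, and `env` explicitly makes the sketch easy to try against a scratch directory.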

# Download models that work out of the box

huggingface-cli download microsoft/Phi-3-mini-4k-instruct-gguf --local-dir ./models/

huggingface-cli download bartowski/Llama-3.2-1B-Instruct-GGUF --local-dir ./models/

# Auto-allocates port to avoid conflicts

shimmy serve

# Or use manual port

shimmy serve --bind 127.0.0.1:11435

Point your AI tools to the displayed port - VSCode Copilot, Cursor, Continue.dev all work instantly!

- Rust: `cargo install shimmy`
- VS Code: Shimmy Extension
- npm: `npm install -g shimmy-js` (coming soon)
- Python: `pip install shimmy` (coming soon)
- GitHub Releases: Latest binaries
- Docker: `docker pull shimmy/shimmy:latest` (coming soon)

Full compatibility confirmed! Shimmy works flawlessly on macOS with Metal GPU acceleration.

# Install dependencies

brew install cmake rust

# Install shimmy

cargo install shimmy

✅ Verified working:

- Intel and Apple Silicon Macs

- Metal GPU acceleration (automatic)

- Xcode 17+ compatibility

- All LoRA adapter features

{
  "github.copilot.advanced": {
    "serverUrl": "http://localhost:11435"
  }
}

{
  "models": [{
    "title": "Local Shimmy",
    "provider": "openai",
    "model": "your-model-name",
    "apiBase": "http://localhost:11435/v1"
  }]
}

Works out of the box - just point to http://localhost:11435/v1
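Because `shimmy serve` may pick its own port, the `apiBase` in the `models` entry above has to match whatever port the server displays. A minimal sketch of generating that entry for a given port follows; the helper names `model_entry` and `render_config` are hypothetical and not part of Shimmy or of any editor extension.

```python
import json


def model_entry(port, model="your-model-name", host="localhost"):
    """Build one model entry in the same shape as the JSON snippet above."""
    return {
        "title": "Local Shimmy",
        "provider": "openai",
        "model": model,
        "apiBase": f"http://{host}:{port}/v1",
    }


def render_config(port, model="your-model-name"):
    """Serialize a config containing a single entry pointing at the given port."""
    return json.dumps({"models": [model_entry(port, model)]}, indent=2)
```

For example, `print(render_config(11435))` reproduces the snippet above, and passing a different port changes only the `apiBase` field.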

I built Shimmy because I was tired of 680MB binaries to run a 4GB model.

This is my commitment: Shimmy stays MIT licensed, forever. If you want to support development, sponsor it. If you don't, just build something cool with it.

Shimmy saves you time and money. If it's useful, consider sponsoring for $5/month — less than your Netflix subscription, infinitely more useful.

| Tool | Binary Size | Startup Time | Memory Usage | OpenAI API |
|---|---|---|---|---|
| Shimmy | 5.1MB | <100ms | 50MB | 100% |
| Ollama | 680MB | 5-10s | 200MB+ | Partial |
| llama.cpp | 89MB | 1-2s | 100MB | None |

- `GET /health` - Health check
- `POST /v1/chat/completions` - OpenAI-compatible chat
- `GET /v1/models` - List available models
- `POST /api/generate` - Shimmy native API
- `GET /ws/generate` - WebSocket streaming
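As a rough illustration of the OpenAI-compatible endpoints listed above, the sketch below builds requests with Python's standard library only. It makes several assumptions: the server is listening on `127.0.0.1:11435` (the manual `--bind` example), `your-model-name` is a placeholder, the response follows the usual OpenAI chat completion shape, and the helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:11435"  # assumption: matches the --bind example


def build_models_request(base_url=BASE_URL):
    """Prepare a GET request for the model list endpoint."""
    return urllib.request.Request(url=f"{base_url}/v1/models", method="GET")


def build_chat_request(model, user_message, base_url=BASE_URL):
    """Prepare a POST request carrying a single-turn chat payload."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_json(request, timeout=30):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def first_reply(completion):
    """Extract the assistant text from an OpenAI-style chat completion body."""
    return completion["choices"][0]["message"]["content"]
```

With a server running, `first_reply(send_json(build_chat_request("your-model-name", "Hi")))` would return the generated text. Building the request separately from sending it keeps the payload easy to inspect without a live server.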

shimmy serve # Start server (auto port allocation)

shimmy serve --bind 127.0.0.1:8080 # Manual port binding

shimmy list # Show available models

shimmy discover # Refresh model discovery

shimmy generate --name X --prompt "Hi" # Test generation

shimmy probe model-name # Verify model loads

- Rust + Tokio: Memory-safe, async performance

- llama.cpp backend: Industry-standard GGUF inference

- OpenAI API compatibility: Drop-in replacement

- Dynamic port management: Zero conflicts, auto-allocation

- Zero-config auto-discovery: Just works™
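The README does not describe how the port auto-allocation works. One common technique, shown here purely as an illustration and not as Shimmy's implementation, is to bind to port 0 and let the operating system choose a free port. The function name `bind_auto` is hypothetical.

```python
import socket


def bind_auto(host="127.0.0.1"):
    """Bind a listening TCP socket to an OS-chosen free port.

    Returns the open socket together with the port number, so the caller
    keeps ownership of the port instead of releasing and re-binding it.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, 0))  # port 0 asks the OS to pick an unused port
        sock.listen()
    except OSError:
        sock.close()
        raise
    return sock, sock.getsockname()[1]
```

Returning the bound socket rather than just the number avoids a race in which another process grabs the port between the probe and the real bind.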

- 🐛 Bug Reports: GitHub Issues

- 💬 Discussions: GitHub Discussions

- 📖 Documentation: docs/

- 💝 Sponsorship: GitHub Sponsors

See our amazing sponsors who make Shimmy possible! 🙏

Sponsorship Tiers:

- $5/month: Coffee tier - My eternal gratitude + sponsor badge

- $25/month: Bug prioritizer - Priority support + name in SPONSORS.md

- $100/month: Corporate backer - Logo on README + monthly office hours

- $500/month: Infrastructure partner - Direct support + roadmap input

Companies: Need invoicing? Email [email protected]

Shimmy maintains high code quality through comprehensive testing:

- Comprehensive test suite with property-based testing

- Automated CI/CD pipeline with quality gates

- Runtime invariant checking for critical operations

- Cross-platform compatibility testing

See our testing approach for technical details.

MIT License - forever and always.

Philosophy: Infrastructure should be invisible. Shimmy is infrastructure.

Testing Philosophy: Reliability through comprehensive validation and property-based testing.

Forever maintainer: Michael A. Kuykendall

Promise: This will never become a paid product

Mission: Making local AI development frictionless

"The best code is code you don't have to think about."

"The best tests are properties you can't break."

Alternative AI tools for shimmy

Similar Open Source Tools

ClaraVerse

ClaraVerse is a privacy-first AI assistant and agent builder that allows users to chat with AI, create intelligent agents, and turn them into fully functional apps. It operates entirely on open-source models running on the user's device, ensuring data privacy and security. With features like AI assistant, image generation, intelligent agent builder, and image gallery, ClaraVerse offers a versatile platform for AI interaction and app development. Users can install ClaraVerse through Docker, native desktop apps, or the web version, with detailed instructions provided for each option. The tool is designed to empower users with control over their AI stack and leverage community-driven innovations for AI development.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks and contains many common NLP approaches. It can help researchers and developers construct and train models more conveniently and rapidly.

Key features of MindNLP include:

* Comprehensive data processing: Several classical NLP datasets, such as Multi30k, SQuAD, and CoNLL, are packaged into a friendly module for easy use.
* Friendly NLP model toolset: MindNLP provides various configurable components, which makes it easy to customize models.
* Easy-to-use engine: MindNLP simplifies the complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily.

MindNLP supports a wide range of NLP tasks, including:

* Language modeling
* Machine translation
* Question answering
* Sentiment analysis
* Sequence labeling
* Summarization

MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. Support for large language models, including pre-training, fine-tuning, and inference demo examples, can be found in the "llm" directory. To install MindNLP, you can install it from PyPI, download the daily build wheel, or install it from source; the installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper:

@misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

PAI

PAI is an open-source personal AI infrastructure designed to orchestrate personal and professional lives. It provides a scaffolding framework with real-world examples for life management, professional tasks, and personal goals. The core mission is to augment humans with AI capabilities to thrive in a world full of AI. PAI features UFC Context Architecture for persistent memory, specialized digital assistants for various tasks, an integrated tool ecosystem with MCP Servers, voice system, browser automation, and API integrations. The philosophy of PAI focuses on augmenting human capability rather than replacing it. The tool is MIT licensed and encourages contributions from the open-source community.

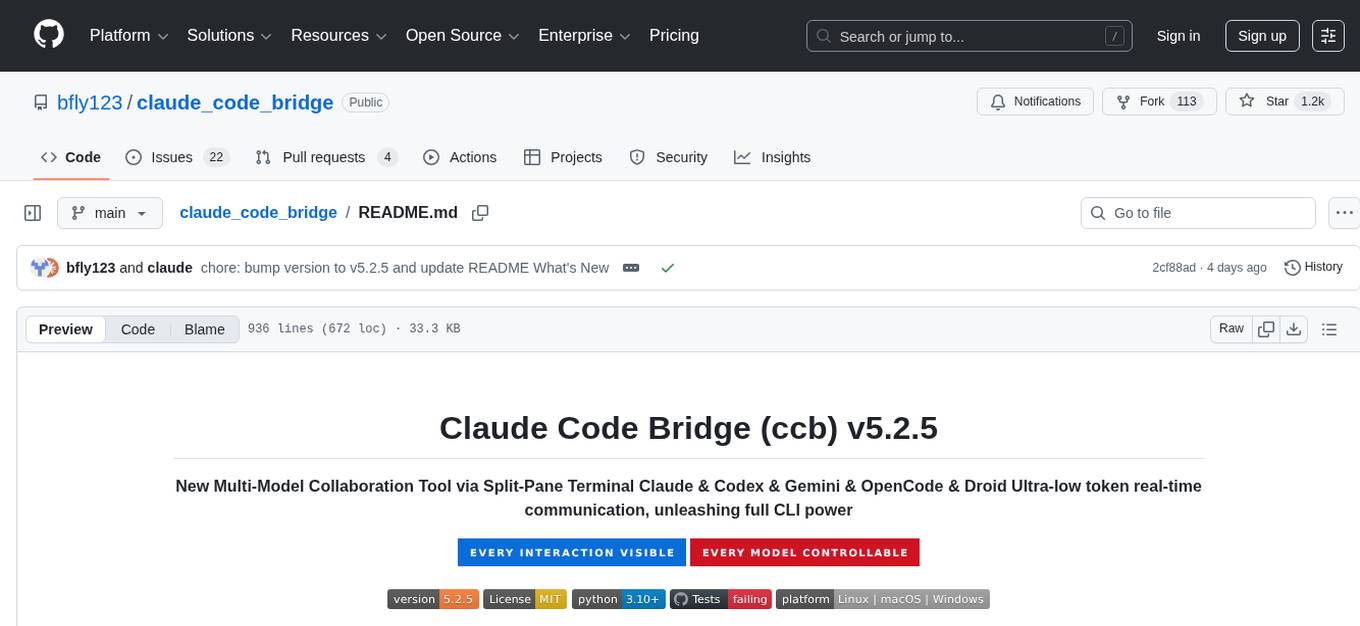

claude_code_bridge

Claude Code Bridge (ccb) is a new multi-model collaboration tool that enables effective collaboration among multiple AI models in a split-pane CLI environment. It offers features like visual and controllable interface, persistent context maintenance, token savings, and native workflow integration. The tool allows users to unleash the full power of CLI by avoiding model bias, cognitive blind spots, and context limitations. It provides a new WYSIWYG solution for multi-model collaboration, making it easier to control and visualize multiple AI models simultaneously.

ai-real-estate-assistant

AI Real Estate Assistant is a modern platform that uses AI to assist real estate agencies in helping buyers and renters find their ideal properties. It features multiple AI model providers, intelligent query processing, advanced search and retrieval capabilities, and enhanced user experience. The tool is built with a FastAPI backend and Next.js frontend, offering semantic search, hybrid agent routing, and real-time analytics.

local-cocoa

Local Cocoa is a privacy-focused tool that runs entirely on your device, turning files into memory to spark insights and power actions. It offers features like fully local privacy, multimodal memory, vector-powered retrieval, intelligent indexing, vision understanding, hardware acceleration, focused user experience, integrated notes, and auto-sync. The tool combines file ingestion, intelligent chunking, and local retrieval to build a private on-device knowledge system. The ultimate goal includes more connectors like Google Drive integration, voice mode for local speech-to-text interaction, and a plugin ecosystem for community tools and agents. Local Cocoa is built using Electron, React, TypeScript, FastAPI, llama.cpp, and Qdrant.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

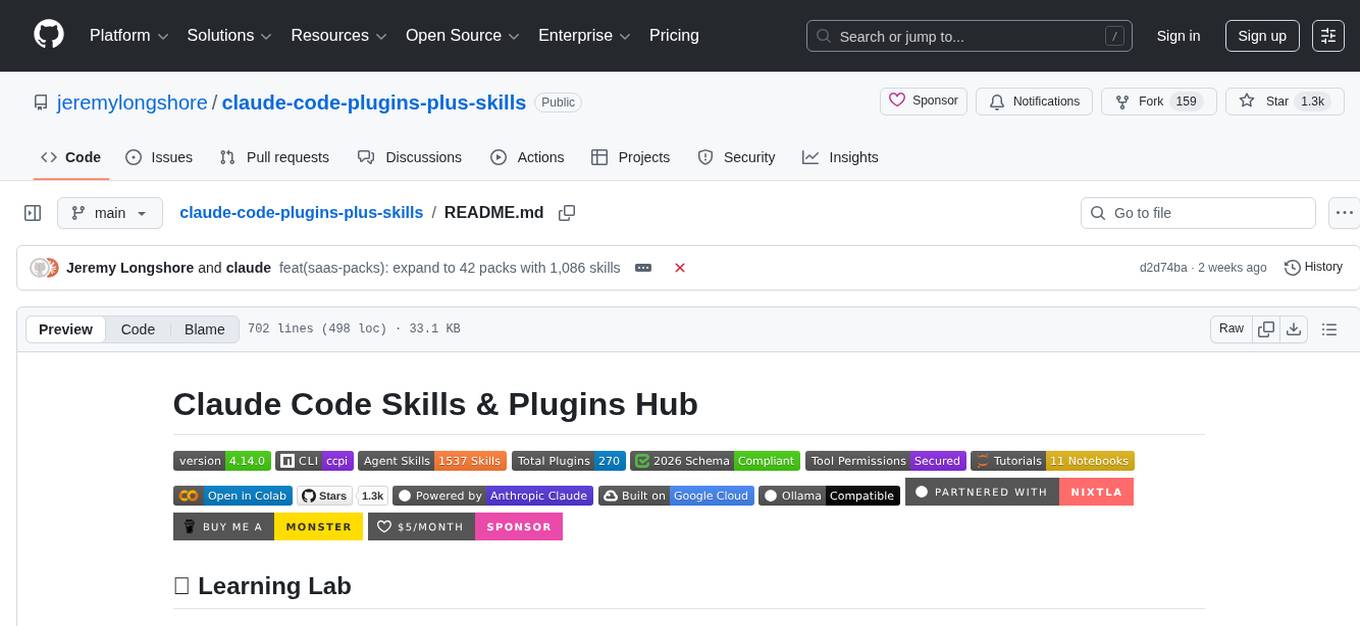

claude-code-plugins-plus-skills

Claude Code Skills & Plugins Hub is a comprehensive marketplace for agent skills and plugins, offering 1537 production-ready agent skills and 270 total plugins. It provides a learning lab with guides, diagrams, and examples for building production agent workflows. The package manager CLI allows users to discover, install, and manage plugins from their terminal, with features like searching, listing, installing, updating, and validating plugins. The marketplace is not on GitHub Marketplace and does not support built-in monetization. It is community-driven, actively maintained, and focuses on quality over quantity, aiming to be the definitive resource for Claude Code plugins.

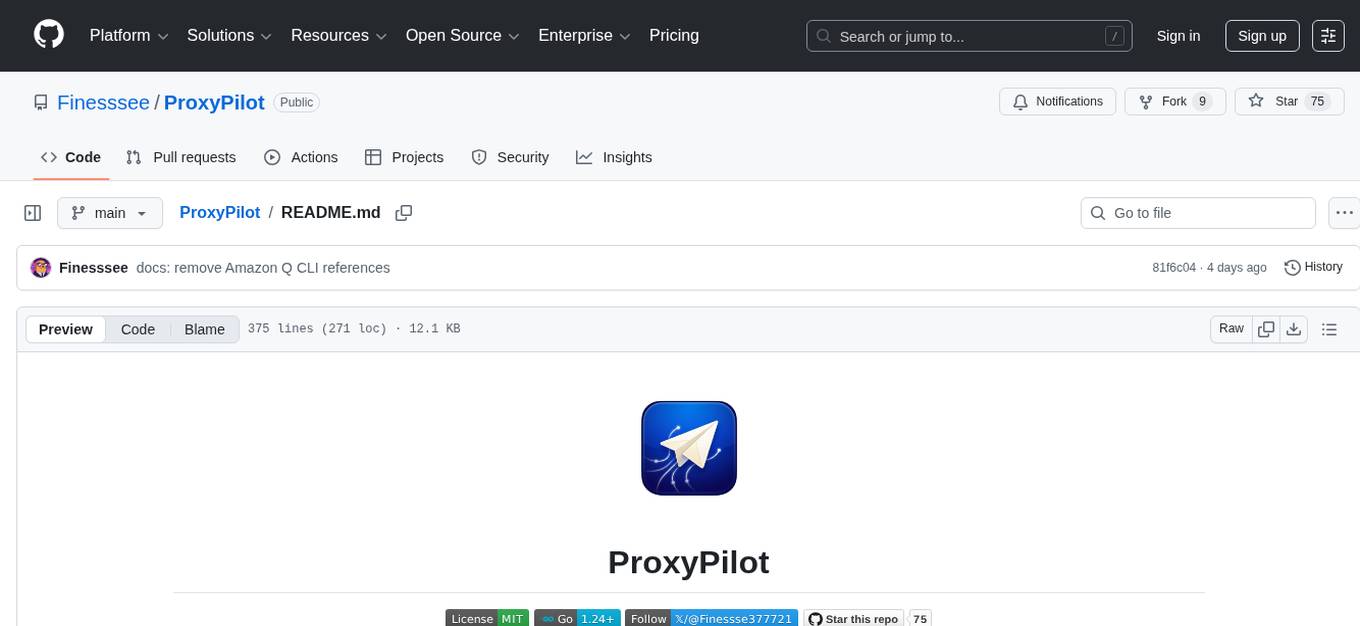

ProxyPilot

ProxyPilot is a powerful local API proxy tool built in Go that eliminates the need for separate API keys when using Claude Code, Codex, Gemini, Kiro, and Qwen subscriptions with any AI coding tool. It handles OAuth authentication, token management, and API translation automatically, providing a single server to route requests. The tool supports multiple authentication providers, universal API translation, tool calling repair, extended thinking models, OAuth integration, multi-account support, quota auto-switching, usage statistics tracking, context compression, agentic harness for coding agents, session memory, system tray app, auto-updates, rollback support, and over 60 management APIs. ProxyPilot also includes caching layers for response and prompt caching to reduce latency and token usage.

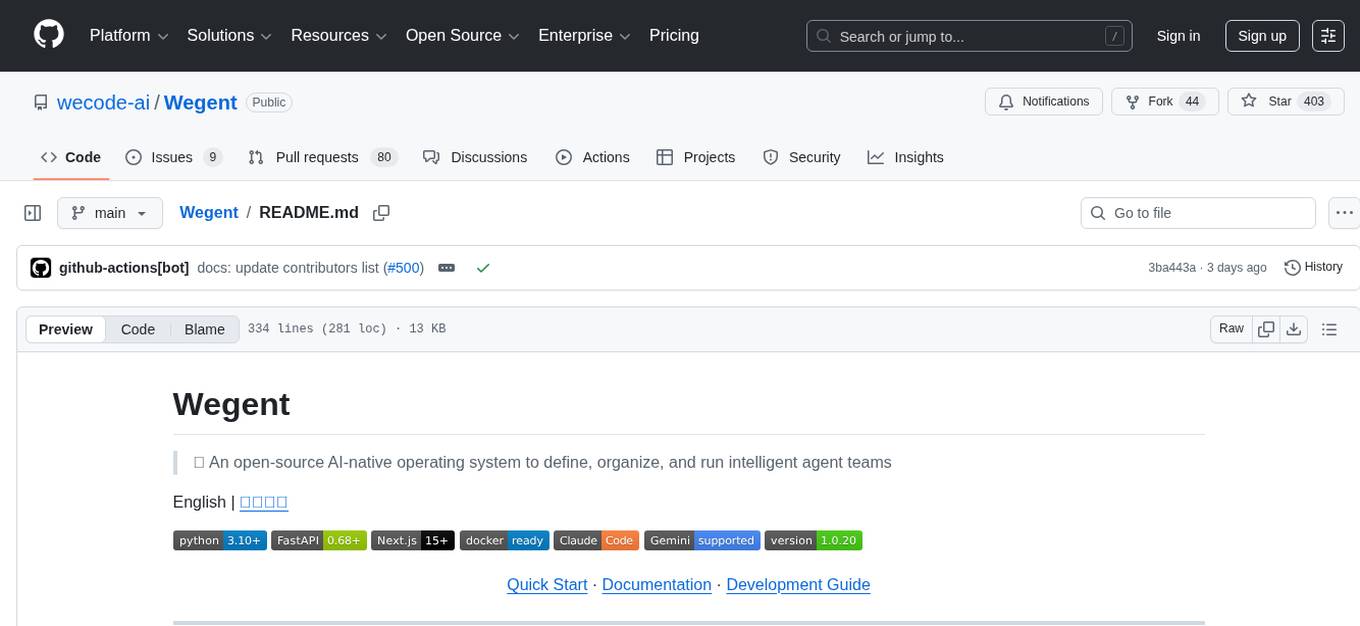

Wegent

Wegent is an open-source AI-native operating system designed to define, organize, and run intelligent agent teams. It offers various core features such as a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Additionally, Wegent includes a code agent for cloud-based code execution, AI feed for task triggers, AI knowledge for document management, and AI device for running tasks locally. The platform is highly extensible, allowing for custom agents, agent creation wizard, organization management, collaboration modes, skill support, MCP tools, execution engines, YAML config, and an API for easy integration with other systems.

flashinfer

FlashInfer is a library for Large Language Models that provides high-performance implementations of LLM GPU kernels such as FlashAttention, PagedAttention, and LoRA. FlashInfer focuses on LLM serving and inference, and delivers state-of-the-art performance across diverse scenarios.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

agent-skills

Agent Skills is a secure, validated skill registry for professional AI coding agents. It provides a library of verified, tested, and safe capabilities to extend various AI agents with confidence. The tool addresses security concerns in marketplace skills by offering 100% open-source code, static analysis for credential theft prevention, immutable integrity to prevent supply chain attacks, and human curation to ensure safety boundaries. Users can install skills through an interactive wizard, choose from a variety of supported AI coding agents, and benefit from a growing catalog of featured skills for development, cloud, automation, design, and security tasks.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale
- Flexible integration with distributed computing and data processing frameworks
- Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* MLflow Tracking

tt-metal

TT-NN is a Python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker HyperPod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (PyTorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in its NVIDIA GPU version. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili live-stream bullet comments and greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. It can generate images with stable-diffusion-webui and output them to an OBS live room, with NSFW image detection via public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy or VPN) or Baidu image search (no proxy needed). Other features include an AI reply chat box [html plug-in], AI singing via Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic looping sway motion during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting requests where the AI judges the content automatically.