ai-on-gke

AI on GKE is a collection of examples, best-practices, and prebuilt solutions to help build, deploy, and scale AI Platforms on Google Kubernetes Engine

Stars: 280

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

README:

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE).

Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

The AI-on-GKE application modules assumes you already have a functional GKE cluster. If not, follow the instructions under infrastructure/README.md to install a Standard or Autopilot GKE cluster.

.

├── LICENSE

├── README.md

├── infrastructure

│ ├── README.md

│ ├── backend.tf

│ ├── main.tf

│ ├── outputs.tf

│ ├── platform.tfvars

│ ├── variables.tf

│ └── versions.tf

├── modules

│ ├── gke-autopilot-private-cluster

│ ├── gke-autopilot-public-cluster

│ ├── gke-standard-private-cluster

│ ├── gke-standard-public-cluster

│ ├── jupyter

│ ├── jupyter_iap

│ ├── jupyter_service_accounts

│ ├── kuberay-cluster

│ ├── kuberay-logging

│ ├── kuberay-monitoring

│ ├── kuberay-operator

│ └── kuberay-serviceaccounts

└── tutorial.mdTo deploy new GKE cluster update the platform.tfvars file with the appropriate values and then execute below terraform commands:

terraform init

terraform apply -var-file platform.tfvars

The repo structure looks like this:

.

├── LICENSE

├── Makefile

├── README.md

├── applications

│ ├── jupyter

│ └── ray

├── contributing.md

├── dcgm-on-gke

│ ├── grafana

│ └── quickstart

├── gke-a100-jax

│ ├── Dockerfile

│ ├── README.md

│ ├── build_push_container.sh

│ ├── kubernetes

│ └── train.py

├── gke-batch-refarch

│ ├── 01_gke

│ ├── 02_platform

│ ├── 03_low_priority

│ ├── 04_high_priority

│ ├── 05_compact_placement

│ ├── 06_jobset

│ ├── Dockerfile

│ ├── README.md

│ ├── cloudbuild-create.yaml

│ ├── cloudbuild-destroy.yaml

│ ├── create-platform.sh

│ ├── destroy-platform.sh

│ └── images

├── gke-disk-image-builder

│ ├── README.md

│ ├── cli

│ ├── go.mod

│ ├── go.sum

│ ├── imager.go

│ └── script

├── gke-dws-examples

│ ├── README.md

│ ├── dws-queues.yaml

│ ├── job.yaml

│ └── kueue-manifests.yaml

├── gke-online-serving-single-gpu

│ ├── README.md

│ └── src

├── gke-tpu-examples

│ ├── single-host-inference

│ └── training

├── indexed-job

│ ├── Dockerfile

│ ├── README.md

│ └── mnist.py

├── jobset

│ └── pytorch

├── modules

│ ├── gke-autopilot-private-cluster

│ ├── gke-autopilot-public-cluster

│ ├── gke-standard-private-cluster

│ ├── gke-standard-public-cluster

│ ├── jupyter

│ ├── jupyter_iap

│ ├── jupyter_service_accounts

│ ├── kuberay-cluster

│ ├── kuberay-logging

│ ├── kuberay-monitoring

│ ├── kuberay-operator

│ └── kuberay-serviceaccounts

├── saxml-on-gke

│ ├── httpserver

│ └── single-host-inference

├── training-single-gpu

│ ├── README.md

│ ├── data

│ └── src

├── tutorial.md

└── tutorials

├── e2e-genai-langchain-app

├── finetuning-llama-7b-on-l4

└── serving-llama2-70b-on-l4-gpusThis repository contains a Terraform template for running JupyterHub on Google Kubernetes Engine. We've also included some example notebooks ( under applications/ray/example_notebooks), including one that serves a GPT-J-6B model with Ray AIR (see here for the original notebook). To run these, follow the instructions at applications/ray/README.md to install a Ray cluster.

This jupyter module deploys the following resources, once per user:

- JupyterHub deployment

- User namespace

- Kubernetes service accounts

Learn more about JupyterHub on GKE here

This repository contains a Terraform template for running Ray on Google Kubernetes Engine.

This module deploys the following, once per user:

- User namespace

- Kubernetes service accounts

- Kuberay cluster

- Prometheus monitoring

- Logging container

Learn more about Ray on GKE here

- Make sure to configure terraform backend to use GCS bucket, in order to persist terraform state across different environments.

- The use of the assets contained in this repository is subject to compliance with Google's AI Principles

- See LICENSE

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-on-gke

Similar Open Source Tools

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

anythingllm-docs

anythingllm-docs is a documentation repository for the AnythingLLM project. It contains detailed guides, setup instructions, and information on features and legal aspects of the project. The repository structure is organized into public, pages, components, and configuration files. Users can contribute by creating issues and pull requests following specific guidelines. The project is licensed under the MIT License and has been migrated to NextJS with the help of @ShadowArcanist.

chronicle

Chronicle is a self-hostable AI system that captures audio/video data from OMI devices and other sources to generate memories, action items, and contextual insights about conversations and daily interactions. It includes a mobile app for OMI devices, backend services with AI features, a web dashboard for conversation and memory management, and optional services like speaker recognition and offline ASR. The project aims to provide a system that records personal spoken context and visual context to generate memories, action items, and enable home automation.

Agentic-ADK

Agentic ADK is an Agent application development framework launched by Alibaba International AI Business, based on Google-ADK and Ali-LangEngine. It is used for developing, constructing, evaluating, and deploying powerful, flexible, and controllable complex AI Agents. ADK aims to make Agent development simpler and more user-friendly, enabling developers to more easily build, deploy, and orchestrate various Agent applications ranging from simple tasks to complex collaborations.

kweaver

KWeaver is an open-source ecosystem for building, deploying, and running decision intelligence AI applications. It adopts ontology as the core methodology for business knowledge networks, with DIP as the core platform, aiming to provide elastic, agile, and reliable enterprise-grade decision intelligence to further unleash productivity. The DIP platform includes key subsystems such as ADP, Decision Agent, DIP Studio, and AI Store.

observers

Observers is a lightweight library for AI observability that provides support for various generative AI APIs and storage backends. It allows users to track interactions with AI models and sync observations to different storage systems. The library supports OpenAI, Hugging Face transformers, AISuite, Litellm, and Docling for document parsing and export. Users can configure different stores such as Hugging Face Datasets, DuckDB, Argilla, and OpenTelemetry to manage and query their observations. Observers is designed to enhance AI model monitoring and observability in a user-friendly manner.

osmedeus

Osmedeus is a security-focused declarative orchestration engine that simplifies complex workflow automation into auditable YAML definitions. It provides powerful automation capabilities without compromising infrastructure integrity and safety. With features like declarative YAML workflows, multiple runners, event-driven triggers, template engine, utility functions, REST API server, distributed execution, notifications, cloud storage, AI integration, SAST integration, language detection, and preset installations, Osmedeus offers a comprehensive solution for security automation tasks.

giztoy

Giztoy is a multi-language framework designed for building AI toys and intelligent applications. It provides a unified abstraction layer that spans from resource-constrained embedded systems to powerful cloud services. With features like native support for ESP32 and other MCUs, cross-platform app development, a unified build system with Bazel, an agent framework for AI agents, audio processing capabilities, support for various Large Language Models, real-time models with WebSocket streaming, secure transport protocols, and multi-language implementations in Go, Rust, Zig, and C/C++, Giztoy serves as a versatile tool for developing AI-powered applications across different platforms and devices.

bumpgen

bumpgen is a tool designed to automatically upgrade TypeScript / TSX dependencies and make necessary code changes to handle any breaking issues that may arise. It uses an abstract syntax tree to analyze code relationships, type definitions for external methods, and a plan graph DAG to execute changes in the correct order. The tool is currently limited to TypeScript and TSX but plans to support other strongly typed languages in the future. It aims to simplify the process of upgrading dependencies and handling code changes caused by updates.

aiohomematic

AIO Homematic (hahomematic) is a lightweight Python 3 library for controlling and monitoring HomeMatic and HomematicIP devices, with support for third-party devices/gateways. It automatically creates entities for device parameters, offers custom entity classes for complex behavior, and includes features like caching paramsets for faster restarts. Designed to integrate with Home Assistant, it requires specific firmware versions for HomematicIP devices. The public API is defined in modules like central, client, model, exceptions, and const, with example usage provided. Useful links include changelog, data point definitions, troubleshooting, and developer resources for architecture, data flow, model extension, and Home Assistant lifecycle.

Zen-Ai-Pentest

Zen-AI-Pentest is a professional AI-powered penetration testing framework designed for security professionals, bug bounty hunters, and enterprise security teams. It combines cutting-edge language models with 20+ integrated security tools, offering comprehensive security assessments. The framework is security-first with multiple safety controls, extensible with a plugin system, cloud-native for deployment on AWS, Azure, or GCP, and production-ready with CI/CD, monitoring, and support. It features autonomous AI agents, risk analysis, exploit validation, benchmarking, CI/CD integration, AI persona system, subdomain scanning, and multi-cloud & virtualization support.

memsearch

Memsearch is a tool that allows users to give their AI agents persistent memory in a few lines of code. It enables users to write memories as markdown and search them semantically. Inspired by OpenClaw's markdown-first memory architecture, Memsearch is pluggable into any agent framework. The tool offers features like smart deduplication, live sync, and a ready-made Claude Code plugin for building agent memory.

pilot

Pilot is an AI tool designed to streamline the process of handling tickets from GitHub, Linear, Jira, or Asana. It plans the implementation, writes the code, runs tests, and opens a PR for you to review and merge. With features like Autopilot, Epic Decomposition, Self-Review, and more, Pilot aims to automate the ticket handling process and reduce the time spent on prioritizing and completing tasks. It integrates with various platforms, offers intelligence features, and provides real-time visibility through a dashboard. Pilot is free to use, with costs associated with Claude API usage. It is designed for bug fixes, small features, refactoring, tests, docs, and dependency updates, but may not be suitable for large architectural changes or security-critical code.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

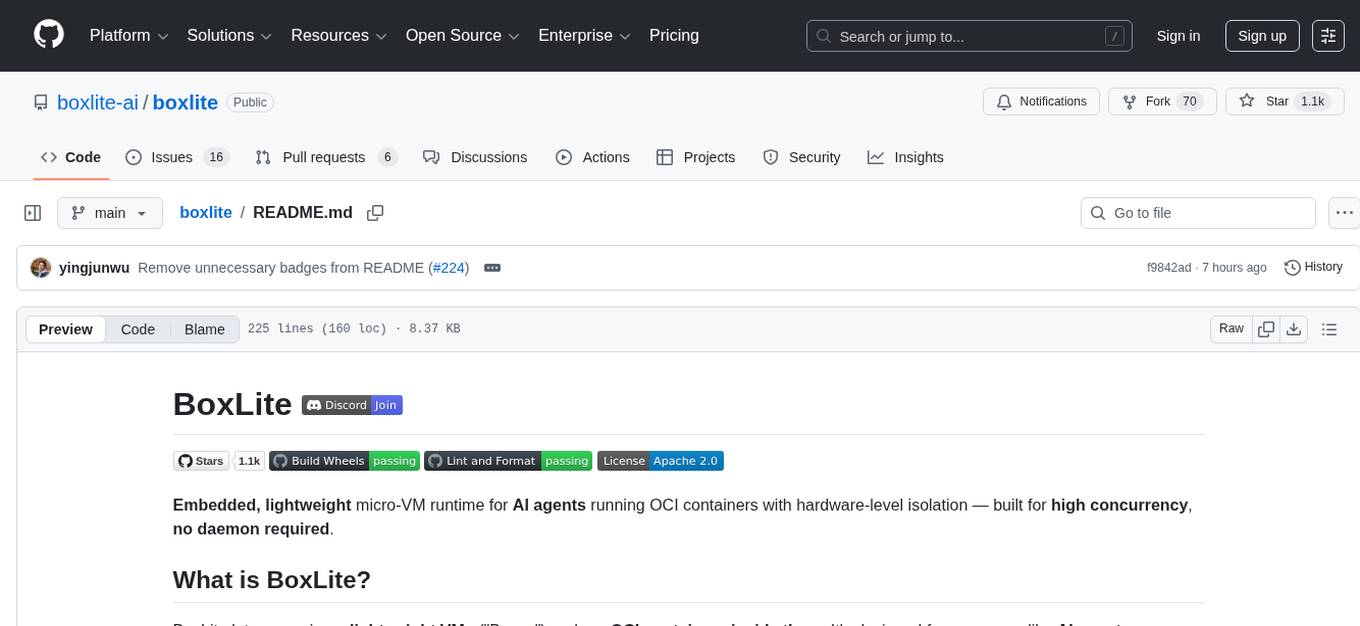

boxlite

BoxLite is an embedded, lightweight micro-VM runtime designed for AI agents running OCI containers with hardware-level isolation. It is built for high concurrency with no daemon required, offering features like lightweight VMs, high concurrency, hardware isolation, embeddability, and OCI compatibility. Users can spin up 'Boxes' to run containers for AI agent sandboxes and multi-tenant code execution scenarios where Docker alone is insufficient and full VM infrastructure is too heavy. BoxLite supports Python, Node.js, and Rust with quick start guides for each, along with features like CPU/memory limits, storage options, networking capabilities, security layers, and image registry configuration. The tool provides SDKs for Python and Node.js, with Go support coming soon. It offers detailed documentation, examples, and architecture insights for users to understand how BoxLite works under the hood.

mesh

MCP Mesh is an open-source control plane for MCP traffic that provides a unified layer for authentication, routing, and observability. It replaces multiple integrations with a single production endpoint, simplifying configuration management. Built for multi-tenant organizations, it offers workspace/project scoping for policies, credentials, and logs. With core capabilities like MeshContext, AccessControl, and OpenTelemetry, it ensures fine-grained RBAC, full tracing, and metrics for tools and workflows. Users can define tools with input/output validation, access control checks, audit logging, and OpenTelemetry traces. The project structure includes apps for full-stack MCP Mesh, encryption, observability, and more, with deployment options ranging from Docker to Kubernetes. The tech stack includes Bun/Node runtime, TypeScript, Hono API, React, Kysely ORM, and Better Auth for OAuth and API keys.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.