twelvet

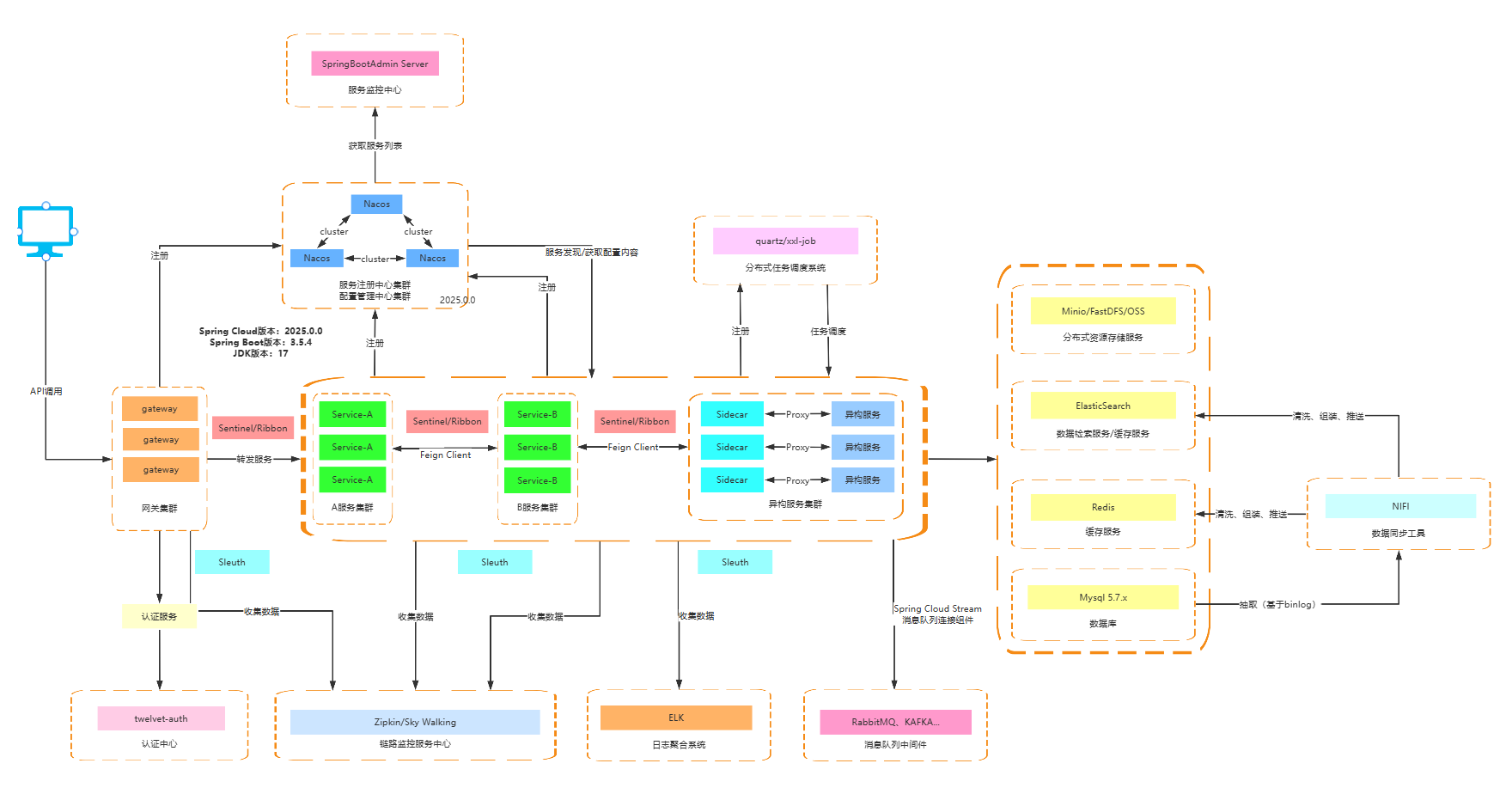

(Spring Boot 3. X Microservices framework) 基于Spring Boot 3.X 的 Spring Cloud Alibaba / Spring Cloud Tencent + React的微服务框架。🔝 🔝 点个starrred 关注更新。Chat GPT(RAG、TTS、STT、LLM)

Stars: 238

Twelvet is a permission management system based on Spring Cloud Alibaba that serves as a framework for rapid development. It is a scaffolding framework based on microservices architecture, aiming to reduce duplication of business code and provide a common core business code for both microservices and monoliths. It is designed for learning microservices concepts and development, suitable for website management, CMS, CRM, OA, and other system development. The system is intended to quickly meet business needs, improve user experience, and save time by incubating practical functional points in lightweight, highly portable functional plugins.

README:

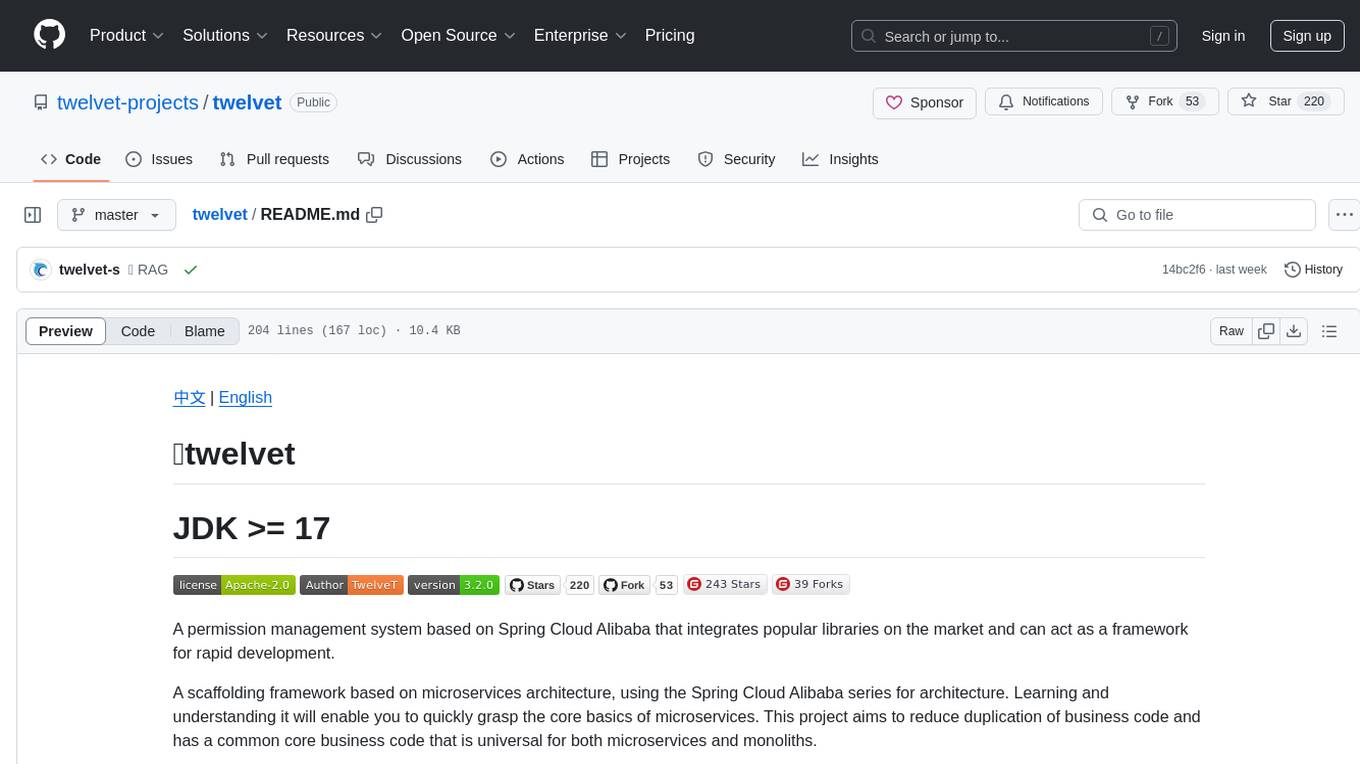

A permission management system based on Spring Cloud Alibaba that integrates popular libraries on the market and can act as a framework for rapid development.

A scaffolding framework based on microservices architecture, using the Spring Cloud Alibaba series for architecture. Learning and understanding it will enable you to quickly grasp the core basics of microservices. This project aims to reduce duplication of business code and has a common core business code that is universal for both microservices and monoliths.

But more importantly, it is for learning the concept of microservices and development. You can use it for website management backstage, website member center, CMS, CRM, OA and other systems development. Of course, not just small systems, we can produce more service modules and continuously improve the project.

The initial intention of the system is to be able to quickly meet the business needs, to bring better experience and more time. It will be used to incubate some practical functional points. We hope that they are lightweight, highly portable functional plugins.

Backend source code: https://github.com/twelvet-projects/twelvet

Frontend source code: https://github.com/twelvet-s/twelvet-ui

Technical documents: https://doc.twelvet.cn/

Official blog: https://twelvet.cn

| Branch | Description | Additional Description |

|---|---|---|

| master | java17 + springboot 3.x + springcloud 2022 + spring cloud alibaba | master |

| jdk8 | java8 + springboot 2.7.x + springcloud 2021 + spring cloud alibaba | jdk8 |

| spring-cloud-tencent | java17 + springboot 3.x + springcloud 2022 + spring cloud tencent | Demonstration branch, does not support compatibility with too many new features |

com.twelvet

├── twelvet-ui // Front-end Framework [80]

├── twelvet-gateway // Gateway module [88]

├── twelvet-nacos // nacos [8848]

├── twelvet-auth // Authentication Center [8888]

├── twelvet-api // Interface module

│ └── twelvet-api-system // System interface

│ └── twelvet-api-dfs // DFS interface

│ └── twelvet-api-job // Scheduled task interface

│ └── twelvet-api-ai // AI interface

├── twelvet-framework // Core module

│ └── twelvet-framework-core // Core module

│ └── twelvet-framework-log // Logging

│ └── twelvet-framework-datascope // Data permission

│ └── twelvet-framework-jdbc // jdbc

│ └── twelvet-framework-swagger // swagger document

│ └── twelvet-framework-redis // Cache service

│ └── twelvet-framework-security // Security module

│ └── twelvet-framework-utils // Tool module

├── twelvet-server // Business module

│ └── twelvet-server-system // System module [8081]

│ └── twelvet-server-job // Scheduled task [8082]

│ └── twelvet-server-dfs // DFS service [8083]

│ └── twelvet-server-gen // Code generation [8084]

│ └── twelvet-server-ai // AI module [8085]

├── twelvet-visual // Graphic Management Module

| └── twelvet-visual-sentinel // sentinel [8101]

│ └── twelvet-visual-monitor // Monitoring center [8102]

├──pom.xml // Public dependencies

- User management: Users are operators of the system, and this function mainly completes the configuration of system users.

- Department management: configure the system organization structure (company, department, group), tree structure display supports data permissions.

- Post management: Configure the positions held by system users.

- Menu Management: Configure system menus, operation permissions, button permission identifiers, etc.

- Role Management: Role menu permission allocation, set role data range permission division by organization.

- Dictionary management: Maintain some relatively fixed data commonly used in the system.

- Parameter management: Dynamic configuration of commonly used parameters in the system.

- Asynchronous: Login log / system operation log / system login log recording and inquiry.

- Scheduled task: Online (add, modify, delete) task scheduling includes execution result logs.

- Code generation: One-click generation of CRUD front-end and back-end code, providing faster speed for business development.

- Service monitoring: Monitor current system CPU, memory, disk, stack and other related information.

- Connection pool monitoring: Monitor the status of the current system database connection pool, and analyze SQL to find out the system performance bottleneck.

- Distributed file storage.

- Swagger gateway aggregation document.

- Sentinel flow restriction center.

- Nacos registration + configuration center.

- RAG knowledge base

|

|

|

|

|

|

|

|

- admin/123456

Demonstration address:https://cloud.twelvet.cn

Memory > 16 Maven, Docker, Docker-compose, Node, and Yarn need to be installed manually.

# mvn twelvet

cd ./twelvet && mvn clean && mvn install -DskipTests

# mvn twelvet-auth

cd ../twelvet-auth && mvn clean && mvn install -DskipTests

# mvn twelvet-gateway

cd ../twelvet-gateway && mvn clean && mvn install -DskipTests

# mvn twelvet-server-system

cd ../twelvet-server/twelvet-server-system && mvn clean && mvn install -DskipTests

# Enter the script directory

cd ../../docker

# Set executable permissions

chmod 751 deploy.sh

# Perform startup (execute parameters as needed, [init | port | base | server | stop | rm])

# Initialization

./deploy.sh init

# Basic services

./deploy.sh base

# Start Twelvet

./deploy.sh server

# Start UI

./deploy.sh nginxThe Twelvet open-source software follows the MIT License Apache 2.0 License。 Permits commercial use, but requires the preservation of the original author and copyright information.

-

Welcome to contribute PR,Make sure to submit to the corresponding branch Code conventions spring-javaformat

Code style guidelines

- Due to spring-javaformat the requirement of enforcing a specific code formatting, any code that is not submitted according to this requirement will not be able to be merged (packaged)

- If you are using IntelliJ IDEA for development, please install the auto-formatting plugin. spring-javaformat-intellij-idea-plugin

- For other development tools, please refer to their respective documentation or community for instructions on

configuring automatic code formatting.

spring-javaformat

Before committing code, please run the following command in the project root directory (requires developer's

computer to support the mvn command) to format the code.

mvn spring-javaformat:apply

-

Welcome to contribute issue,Please provide clear explanations of the issue, development environment, and steps to reproduce.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for twelvet

Similar Open Source Tools

twelvet

Twelvet is a permission management system based on Spring Cloud Alibaba that serves as a framework for rapid development. It is a scaffolding framework based on microservices architecture, aiming to reduce duplication of business code and provide a common core business code for both microservices and monoliths. It is designed for learning microservices concepts and development, suitable for website management, CMS, CRM, OA, and other system development. The system is intended to quickly meet business needs, improve user experience, and save time by incubating practical functional points in lightweight, highly portable functional plugins.

MiniAI-Face-Recognition-LivenessDetection-ServerSDK

The MiniAiLive Face Recognition LivenessDetection Server SDK provides system integrators with fast, flexible, and extremely precise facial recognition that can be deployed across various scenarios, including security, access control, public safety, fintech, smart retail, and home protection. The SDK is fully on-premise, meaning all processing happens on the hosting server, and no data leaves the server. The project structure includes bin, cpp, flask, model, python, test_image, and Dockerfile directories. To set up the project on Linux, download the repo, install system dependencies, and copy libraries into the system folder. For Windows, contact MiniAiLive via email. The C++ example involves replacing the license key in main.cpp, building the project, and running it. The Python example requires installing dependencies and running the project. The Python Flask example involves replacing the license key in app.py, installing dependencies, and running the project. The Docker Flask example includes building the docker image and running it. To request a license, contact MiniAiLive. Contributions to the project are welcome by following specific steps. An online demo is available at https://demo.miniai.live. Related products include MiniAI-Face-Recognition-LivenessDetection-AndroidSDK, MiniAI-Face-Recognition-LivenessDetection-iOS-SDK, MiniAI-Face-LivenessDetection-AndroidSDK, MiniAI-Face-LivenessDetection-iOS-SDK, MiniAI-Face-Matching-AndroidSDK, and MiniAI-Face-Matching-iOS-SDK. MiniAiLive is a leading AI solutions company specializing in computer vision and machine learning technologies.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

xpander.ai

xpander.ai is a Backend-as-a-Service for autonomous agents that abstracts the ops layer, allowing AI engineers to focus on behavior and outcomes. It provides managed agent hosting with version control and CI/CD, a fully managed PostgreSQL memory layer, and a library of 2,000+ functions. The platform features an AI native triggering system that processes inputs from various sources and delivers unified messages to agents. With support for any agent framework or SDK, including Agno and OpenAI, xpander.ai enables users to build intelligent, production-ready AI agents without dealing with infrastructure complexity.

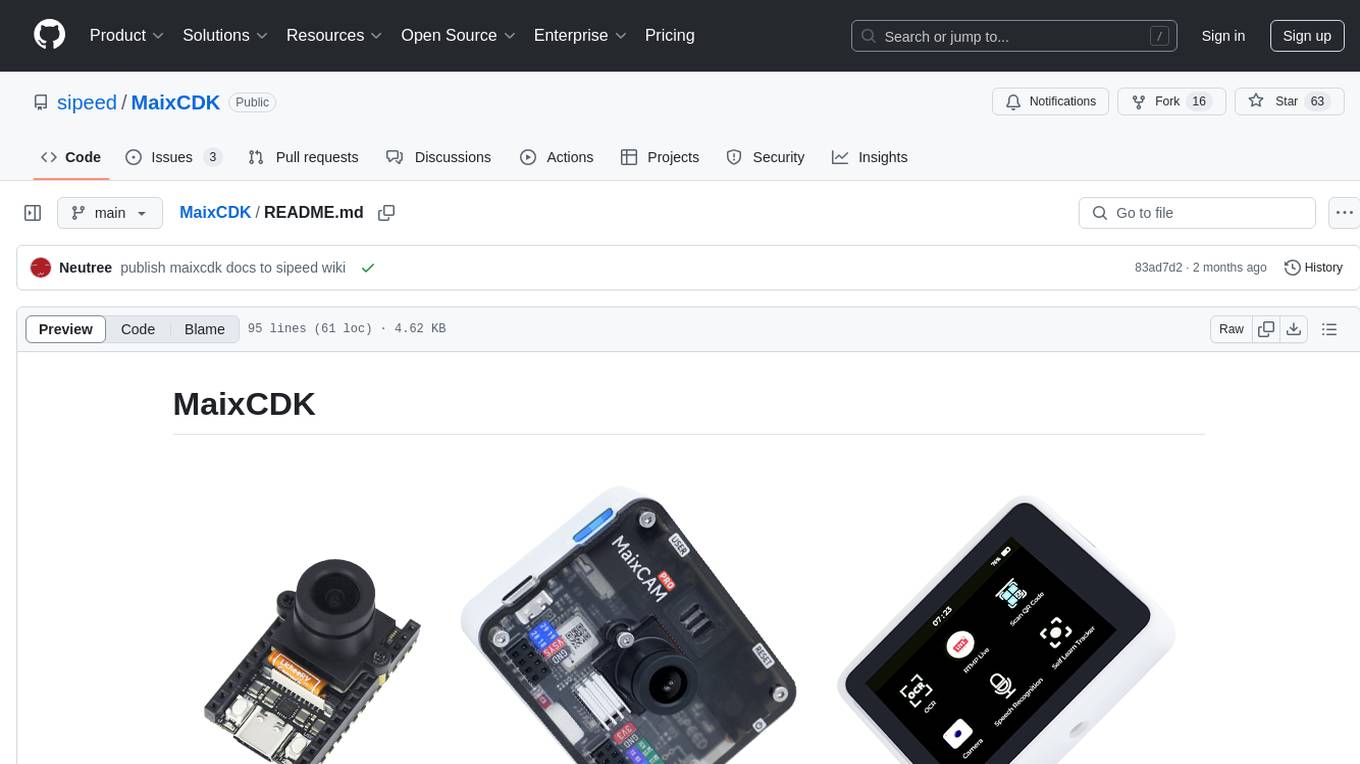

MaixCDK

MaixCDK (Maix C/CPP Development Kit) is a C/C++ development kit that integrates practical functions such as AI, machine vision, and IoT. It provides easy-to-use encapsulation for quickly building projects in vision, artificial intelligence, IoT, robotics, industrial cameras, and more. It supports hardware-accelerated execution of AI models, common vision algorithms, OpenCV, and interfaces for peripheral operations. MaixCDK offers cross-platform support, easy-to-use API, simple environment setup, online debugging, and a complete ecosystem including MaixPy and MaixVision. Supported devices include Sipeed MaixCAM, Sipeed MaixCAM-Pro, and partial support for Common Linux.

openagents

OpenAgents is a platform for AI agents using open protocols. The current flagship product (v4) is an agentic chat app live at openagents.com. This repository holds the new cross-platform version (v5), with an initial focus on Coder, a desktop app intended to replace Claude Code with standard chat UI & thread history and first-class MCP integration. The v5 tech stack includes React, React Native, TypeScript for frontend, Cloudflare stack for backend, better-auth for authentication, and Vercel AI SDK. The architecture considerations aim for cross-platform code reuse, open protocol interoperability, long-running agent processes, composability, proportional payment to contributors, and agent wallets for Bitcoin/Lightning & stablecoins via Spark wallet.

livekit

LiveKit is an open source project providing scalable, multi-user conferencing based on WebRTC. It offers a server written in Go, client SDKs, and advanced features like speaker detection, end-to-end encryption, and SVC codecs. The tool is easy to deploy with support for JWT authentication and robust networking. LiveKit ecosystem includes agents for AI applications, tools like CLI and Docker image, and SDKs for both client and server-side development.

sealos

Sealos is a cloud operating system distribution based on the Kubernetes kernel, designed for a seamless development lifecycle. It allows users to spin up full-stack environments in seconds, effortlessly push releases, and scale production seamlessly. With core features like easy application management, quick database creation, and cloud universality, Sealos offers efficient and economical cloud management with high universality and ease of use. The platform also emphasizes agility and security through its multi-tenancy sharing model. Sealos is supported by a community offering full documentation, Discord support, and active development roadmap.

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

WeKnora

WeKnora is a document understanding and semantic retrieval framework based on large language models (LLM), designed specifically for scenarios with complex structures and heterogeneous content. The framework adopts a modular architecture, integrating multimodal preprocessing, semantic vector indexing, intelligent recall, and large model generation reasoning to build an efficient and controllable document question-answering process. The core retrieval process is based on the RAG (Retrieval-Augmented Generation) mechanism, combining context-relevant segments with language models to achieve higher-quality semantic answers. It supports various document formats, intelligent inference, flexible extension, efficient retrieval, ease of use, and security and control. Suitable for enterprise knowledge management, scientific literature analysis, product technical support, legal compliance review, and medical knowledge assistance.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

1Panel

1Panel is an open-source, modern web-based control panel for Linux server management. It provides efficient management through a user-friendly web graphical interface, enabling users to effortlessly manage their Linux servers. Key features include host monitoring, file management, database administration, container management, rapid website deployment with WordPress integration, an application store for easy installation and updates, security and reliability through containerization and secure application deployment practices, integrated firewall management, log auditing capabilities, and one-click backup & restore functionality supporting various cloud storage solutions.

db2rest

DB2Rest is a modern low code REST DATA API platform that enables the rapid development of intelligent applications by combining databases, language models, and vector stores. It facilitates context-aware, reasoning applications without vendor lock-in. The tool accelerates application delivery, fosters faster innovation with AI, serves as a secure database gateway, and simplifies integration. It supports various databases like PostgreSQL, MySQL, MS SQL Server, Oracle, MongoDB, and more, with planned support for additional databases. Users can connect on Discord for support and contact [email protected] for inquiries.

LlamaBot

LlamaBot is an open-source AI coding agent that rapidly builds MVPs, prototypes, and internal tools. It works for non-technical founders, product teams, and engineers by generating working prototypes, embedding AI directly into the app, and running real workflows. Unlike typical codegen tools, LlamaBot can embed directly in your app and run real workflows, making it ideal for collaborative software building where founders guide the vision, engineers stay in control, and AI fills the gap. LlamaBot is built for moving ideas fast, allowing users to prototype an AI MVP in a weekend, experiment with workflows, and collaborate with teammates to bridge the gap between non-technical founders and engineering teams.

xpert

Xpert is a powerful tool for data analysis and visualization. It provides a user-friendly interface to explore and manipulate datasets, perform statistical analysis, and create insightful visualizations. With Xpert, users can easily import data from various sources, clean and preprocess data, analyze trends and patterns, and generate interactive charts and graphs. Whether you are a data scientist, analyst, researcher, or student, Xpert simplifies the process of data analysis and visualization, making it accessible to users with varying levels of expertise.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

For similar tasks

twelvet

Twelvet is a permission management system based on Spring Cloud Alibaba that serves as a framework for rapid development. It is a scaffolding framework based on microservices architecture, aiming to reduce duplication of business code and provide a common core business code for both microservices and monoliths. It is designed for learning microservices concepts and development, suitable for website management, CMS, CRM, OA, and other system development. The system is intended to quickly meet business needs, improve user experience, and save time by incubating practical functional points in lightweight, highly portable functional plugins.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.