learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) using OpenAI, Gemini, Streamlit, Containers, Serverless, Postgres, LangChain, Pinecone, and Next.js

Stars: 592

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

README:

This course is part of the Certified Cloud Applied Generative AI Engineer (GenEng) program.

- Microsoft Azure Account https://azure.microsoft.com/en-us/free/ai-services/

Note: If possible, register your account with a company email address.

Once you have a subscription ID, apply for Azure OpenAI Service here:

https://azure.microsoft.com/en-us/products/ai-services/openai-service

- Google Cloud Account https://cloud.google.com/free

From Microsoft to MIT MBA, the AI reeducation boot camp is coming for every worker and executive

Nvidia says generative AI will be bigger than the internet

Generative AI and Its Economic Impact: What You Need to Know

Must Read: OpenAI DevDay - a pivotal moment for AI

The GenEng revolution is being led by developers who build deep proficiency in how best to leverage and integrate generative AI technologies into applications.

There is a clear separation of roles between those who create and train models (Data Scientists and Engineers) and those who use those models (Developers). This separation was already under way, and it is much clearer with the GenAI revolution. The future of GenAI will be determined by how it is driven to adoption, and that will be driven by how developers adopt it.

GenEng practitioners will need many of the same skills as traditional application developers, including scalable architecting, integrating enterprise systems, and understanding requirements from the business user. These skills will be augmented with the nuances of building generative AI applications, such as involving business domain experts in validating aspects of prompt engineering and choosing the right LLM based on price/performance and outcomes.

The rise of GenEng: How AI changes the developer role

Google launches its largest and ‘most capable’ AI model, Gemini

Meta, IBM and Intel join alliance for open AI development while Google and Microsoft sit out

Sam Altman to return as CEO of OpenAI

The Year in Tech, 2024: The Insights You Need from Harvard Business Review

The Year in Tech 2024: The Insights You Need about Generative AI and Web 3.0 from Harvard Business Review will help you understand what the latest and most important tech innovations mean for your organization and how you can use them to compete and win in today's turbulent business environment. Business is changing. Will you adapt or be left behind? Get up to speed and deepen your understanding of the topics that are shaping your company's future with the Insights You Need from Harvard Business Review series. You can't afford to ignore how these issues will transform the landscape of business and society. The Insights You Need series will help you grasp these critical ideas--and prepare you and your company for the future.

McKinsey Technology Trends Outlook 2023

Watch Introduction to Generative AI

- McKinsey: The economic potential of generative AI: The next productivity frontier, McKinsey Digital report, June 2023

- GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models, Tyna Eloundou, Sam Manning, Pamela Mishkin, and Daniel Rock, March 2023 (arXiv:2303.10130)

- Goldman Sachs: The Potentially Large Effects of Artificial Intelligence on Economic Growth, Joseph Briggs and Devesh Kodnani, March 2023

OpenAI API is a collection of APIs

APIs offer access to various Large Language Models (LLMs)

LLM: Program trained to understand human language

ChatGPT is a web service built on the Chat Completions API. It uses:

- gpt-3.5-turbo (free tier)

- gpt-4 (paid tier)

The APIs support the following tasks:

- Chat completion: Given a series of messages, generate a response

- Function calling: Choose which function to call

- Image generation: Given a text description, generate an image

- Speech to text: Given an audio file and a prompt, generate a transcript

- Fine tuning: Train a model using input and output examples
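To illustrate the chat completion task listed above, the sketch below assembles the JSON body for a Chat Completions request. The `model` and `messages` fields follow the public API; the helper name `build_chat_request`, the restricted role set, and the example prompt are assumptions made for illustration, and no network call is made.

```python
import json

# Basic roles used in this sketch (the API also defines others,
# such as messages carrying tool results).
VALID_ROLES = {"system", "user", "assistant"}


def build_chat_request(model, messages, temperature=None):
    """Return the JSON-serializable body for a chat completion request.

    `build_chat_request` is a hypothetical helper for illustration; it only
    validates and assembles the payload and does not contact any server.
    """
    for message in messages:
        if message["role"] not in VALID_ROLES:
            raise ValueError(f"unknown role: {message['role']!r}")
    body = {"model": model, "messages": list(messages)}
    if temperature is not None:
        body["temperature"] = temperature
    return body


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Explain what an LLM is in one sentence."},
]
request_body = build_chat_request("gpt-3.5-turbo", messages)
print(json.dumps(request_body, indent=2))
```

Sending this body as a POST to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header returns a response whose `choices[0].message` holds the generated reply.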

The new Assistants API is a stateful evolution of the Chat Completions API, meant to simplify the creation of assistant-like experiences and to give developers access to powerful tools like Code Interpreter and Retrieval.

The primitives of the Chat Completions API are Messages, on which you perform a Completion with a Model (gpt-3.5-turbo, gpt-4, etc). It is lightweight and powerful, but inherently stateless, which means you have to manage conversation state, tool definitions, retrieval documents, and code execution manually.
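Because the Chat Completions API is stateless, the caller has to resend the whole message history on every turn. A minimal sketch of that bookkeeping follows. `Conversation` and `echo_model` are hypothetical names, and `echo_model` is a local stand-in for a real API call so that the example runs offline.

```python
class Conversation:
    """Keeps the message history that a stateless chat API does not keep."""

    def __init__(self, complete, system_prompt):
        # `complete` is any callable taking the full message list and
        # returning the assistant's reply text (an assumption of this sketch).
        self._complete = complete
        self.messages = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text):
        # Append the new user turn, then send the ENTIRE history.
        self.messages.append({"role": "user", "content": user_text})
        reply = self._complete(self.messages)
        # Store the reply so the next turn can refer back to it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages):
    """Stand-in for a real model: reports how many user turns it was sent."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"I have seen {len(user_turns)} user message(s)."


chat = Conversation(echo_model, "You are a helpful assistant.")
print(chat.ask("Hello"))
print(chat.ask("What did I just say?"))
```

Swapping `echo_model` for a function that calls a real model leaves `Conversation` unchanged, which is the point of keeping the state handling separate from the completion call.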

The primitives of the Assistants API are

- Assistants, which encapsulate a base model, instructions, tools, and (context) documents,

- Threads, which represent the state of a conversation, and

- Runs, which power the execution of an Assistant on a Thread, including textual responses and multi-step tool use.
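The relationship between these three primitives can be sketched with a toy in-memory model. This is not the OpenAI SDK: the classes, the `execute` method, and the `canned_reply` stand-in are all hypothetical and only mirror the roles described above (an Assistant holds configuration, a Thread holds state, a Run applies one to the other).

```python
from dataclasses import dataclass, field


@dataclass
class Assistant:
    """Bundles a base model, instructions, and tool names."""
    model: str
    instructions: str
    tools: list = field(default_factory=list)


@dataclass
class Thread:
    """Holds the conversation state as an ordered list of messages."""
    messages: list = field(default_factory=list)

    def add_user_message(self, text):
        self.messages.append({"role": "user", "content": text})


@dataclass
class Run:
    """One execution of an Assistant on a Thread."""
    assistant: Assistant
    thread: Thread
    status: str = "queued"

    def execute(self, respond):
        # `respond` is a hypothetical callable standing in for the model.
        self.status = "in_progress"
        reply = respond(self.assistant, self.thread.messages)
        self.thread.messages.append({"role": "assistant", "content": reply})
        self.status = "completed"
        return reply


def canned_reply(assistant, messages):
    """Local stand-in for the model so the sketch runs offline."""
    return f"[{assistant.model}] replying to: {messages[-1]['content']}"


assistant = Assistant("gpt-4", "Answer briefly.", tools=["code_interpreter"])
thread = Thread()
thread.add_user_message("What is 2 + 2?")
run = Run(assistant, thread)
print(run.execute(canned_reply))
print(run.status, len(thread.messages))
```

Unlike the Chat Completions case, the caller here never passes the history explicitly: the Thread carries it, and each Run appends to it.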

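The OPL Stack stands for OpenAI, Pinecone, and LangChain. It is a set of tools and services that simplifies building and deploying LLM-powered applications: OpenAI supplies the models, Pinecone the vector database, and LangChain the open-source framework that ties them together.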
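The way the three parts of such a stack typically cooperate (embed text, store and search vectors, then assemble a prompt for the model) can be sketched offline with stand-ins. Nothing below calls OpenAI, Pinecone, or LangChain: `embed` is a toy bag-of-words embedding, `ToyVectorStore` is an in-memory substitute for a vector database, and `build_prompt` plays the orchestration role. All names are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (vector, original text)

    def upsert(self, text):
        self._items.append((embed(text), text))

    def query(self, text, top_k=1):
        query_vector = embed(text)
        ranked = sorted(
            self._items,
            key=lambda item: cosine(query_vector, item[0]),
            reverse=True,
        )
        return [doc for _, doc in ranked[:top_k]]


def build_prompt(question, store):
    """Orchestration step: retrieve context, then assemble the LLM prompt."""
    context = "\n".join(store.query(question))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
store.upsert("Pinecone is a managed vector database")
store.upsert("Streamlit builds data apps in Python")
print(build_prompt("which vector database is managed", store))
```

In a real deployment the prompt returned by `build_prompt` would be sent to a chat model, and the toy pieces would be replaced by an embedding model and a hosted vector index.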

“AI will be the greatest wealth creator in history because artificial intelligence doesn’t care where you were born, whether you have money, whether you have a PhD,” Higgins tells CNBC Make It. “It’s going to destroy barriers that have prevented people from moving up the ladder, and pursuing their dream of economic freedom.”

The AI market is already valued at almost $100 billion and is expected to contribute $15.7 trillion to the global economy by 2030.

“It’s not that if you don’t jump on it now, you never can,” Higgins says. “It’s that now is the greatest opportunity for you to capitalize on it.”

A.I. will be the biggest wealth creator in history

Generative AI could add up to $4.4 trillion annually to the global economy

Silicon Valley Sees a New Kind of Mobile Device Powered by GenAI

Microsoft CEO: AI is "bigger than the PC, bigger than mobile" - but is he right?

Artificial General Intelligence Is Already Here

Inside the race to build an ‘operating system’ for generative AI

Business intelligence in the era of GenAI

The Convergence of AI and Web3: A New Era of Decentralized Intelligence

What is the potential of Generative AI and Web 3.0 when combined?

How Web3 Can Unleash the Power of Generative AI

- Generative AI with LangChain: Build large language model (LLM) apps with Python, ChatGPT and other LLMs

- LangChain Crash Course: Build OpenAI LLM powered Apps

- Build and Learn: AI App Development for Beginners: Unleashing ChatGPT API with LangChain & Streamlit

- Generative AI in Healthcare - The ChatGPT Revolution

- Generative AI in Accounting Guide: Explore the possibilities of generative AI in accounting

- Using Generative AI in Business Whitepaper

- Generative AI: what accountants need to know in 2023

- 100 Practical Applications and Use Cases of Generative AI

LangChain Explained in 13 Minutes | QuickStart Tutorial for Beginners

LangChain Crash Course for Beginners

LangChain Crash Course for Beginners Video

LangChain for LLM Application Development

LangChain: Chat with Your Data

A Gentle Intro to Chaining LLMs, Agents, and Utils via LangChain

The LangChain Cookbook - Beginner Guide To 7 Essential Concepts

Greg Kamradt’s LangChain Youtube Playlist

1littlecoder LangChain Youtube Playlist

Pinecone

https://docs.pinecone.io/docs/quickstart

https://python.langchain.com/docs/integrations/vectorstores/pinecone

LangChain - Vercel AI SDK

https://sdk.vercel.ai/docs/guides/providers/langchain

Using Python and Flask in Next.js 13 API

https://github.com/wpcodevo/nextjs-flask-framework

https://vercel.com/templates/python/flask-hello-world

https://vercel.com/docs/functions/serverless-functions/runtimes/python

https://codevoweb.com/how-to-integrate-flask-framework-with-nextjs/#google_vignette

https://github.com/vercel/examples/tree/main/python

https://github.com/orgs/vercel/discussions/2732

https://flask.palletsprojects.com/en/2.3.x/tutorial/

https://flask.palletsprojects.com/en/2.3.x/

Reference Material:

Top 5 Resources to learn LangChain

Official LangChain YouTube channel

Building Custom Q&A Applications Using LangChain and Pinecone Vector Database

End to End LLM Project Using Langchain | NLP Project End to End

Build and Learn: AI App Development for Beginners: Unleashing ChatGPT API with LangChain & Streamlit

Total Questions: 40

Duration: 60 minutes

Similar Open Source Tools

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

Pathway-AI-Bootcamp

Welcome to the μLearn x Pathway Initiative, an exciting adventure into the world of Artificial Intelligence (AI)! This comprehensive course, developed in collaboration with Pathway, will empower you with the knowledge and skills needed to navigate the fascinating world of AI, with a special focus on Large Language Models (LLMs).

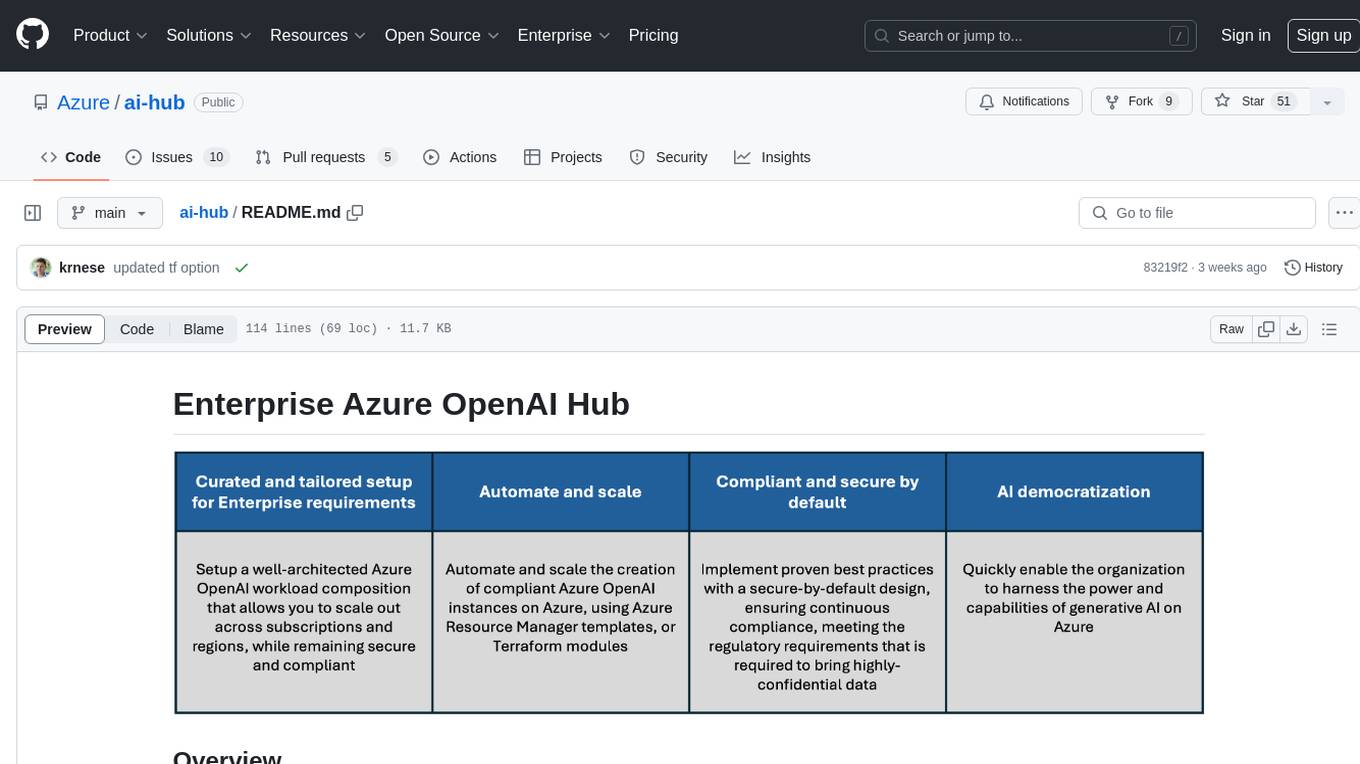

ai-hub

The Enterprise Azure OpenAI Hub is a comprehensive repository designed to guide users through the world of Generative AI on the Azure platform. It offers a structured learning experience to accelerate the transition from concept to production in an Enterprise context. The hub empowers users to explore various use cases with Azure services, ensuring security and compliance. It provides real-world examples and playbooks for practical insights into solving complex problems and developing cutting-edge AI solutions. The repository also serves as a library of proven patterns, aligning with industry standards and promoting best practices for secure and compliant AI development.

husky

Husky is a research-focused programming language designed for next-generation computing. It aims to provide a powerful and ergonomic development experience for various tasks, including system level programming, web/native frontend development, parser/compiler tasks, game development, formal verification, machine learning, and more. With a strong type system and support for human-in-the-loop programming, Husky enables users to tackle complex tasks such as explainable image classification, natural language processing, and reinforcement learning. The language prioritizes debugging, visualization, and human-computer interaction, offering agile compilation and evaluation, multiparadigm support, and a commitment to a good ecosystem.

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

Deep-Dive-Into-AI-With-MLX-PyTorch

Deep Dive into AI with MLX and PyTorch is an educational initiative focusing on AI, machine learning, and deep learning using Apple's MLX and Meta's PyTorch frameworks. The repository contains comprehensive guides, in-depth analyses, and resources for learning and exploring AI concepts. It aims to cater to audiences ranging from beginners to experienced individuals, providing detailed explanations, examples, and translations between PyTorch and MLX. The project emphasizes open-source contributions, knowledge sharing, and continuous learning in the field of AI.

start-machine-learning

Start Machine Learning in 2024 is a comprehensive guide for beginners to advance in machine learning and artificial intelligence without any prior background. The guide covers various resources such as free online courses, articles, books, and practical tips to become an expert in the field. It emphasizes self-paced learning and provides recommendations for learning paths, including videos, podcasts, and online communities. The guide also includes information on building language models and applications, practicing through Kaggle competitions, and staying updated with the latest news and developments in AI. The goal is to empower individuals with the knowledge and resources to excel in machine learning and AI.

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

AliceVision

AliceVision is a photogrammetric computer vision framework which provides a 3D reconstruction pipeline. It is designed to process images from different viewpoints and create detailed 3D models of objects or scenes. The framework includes various algorithms for feature detection, matching, and structure from motion. AliceVision is suitable for researchers, developers, and enthusiasts interested in computer vision, photogrammetry, and 3D modeling. It can be used for applications such as creating 3D models of buildings, archaeological sites, or objects for virtual reality and augmented reality experiences.

For similar tasks

miniLLMFlow

Mini LLM Flow is a 100-line minimalist LLM framework designed for agents, task decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on high-level programming paradigms while stripping away low-level implementation details. It serves as a learning resource and allows LLMs to design, build, and maintain projects themselves.

GenAI-Learning

GenAI-Learning is a repository dedicated to providing resources and courses for individuals interested in Generative AI. It covers a wide range of topics from prompt engineering to user-centered design, offering courses on LLM Bootcamp, DeepLearning AI, Microsoft Copilot Learning, Amazon Generative AI, Google Cloud Skills, NVIDIA Learn, Oracle Cloud, and IBM AI Learn. The repository includes detailed course descriptions, partners, and topics for each course, making it a valuable resource for AI enthusiasts and professionals.

GenerativeAIExamples

NVIDIA Generative AI Examples are state-of-the-art examples that are easy to deploy, test, and extend. All examples run on the high performance NVIDIA CUDA-X software stack and NVIDIA GPUs. These examples showcase the capabilities of NVIDIA's Generative AI platform, which includes tools, frameworks, and models for building and deploying generative AI applications.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

E2B

E2B Sandbox is a secure sandboxed cloud environment made for AI agents and AI apps. Sandboxes allow AI agents and apps to have long-running, secure cloud environments. In these environments, large language models can use the same tools as humans do. For example:

- Cloud browsers

- GitHub repositories and CLIs

- Coding tools like linters, autocomplete, "go-to definition"

- Running LLM-generated code

- Audio & video editing

The E2B sandbox can be connected to any LLM and any AI agent or app.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.