Best AI tools for Build LLM Apps

20 - AI tool Sites

Flowise

Flowise is an open-source, low-code tool that enables developers to build customized LLM orchestration flows and AI agents. It provides a drag-and-drop interface, pre-built app templates, conversational agents with memory, and deployment on cloud platforms. Flowise is backed by Y Combinator and used by teams around the globe.

Lyzr AI

Lyzr AI is a full-stack agent framework designed to build GenAI applications faster. It offers a range of AI agents for tasks such as chatbots, knowledge search, summarization, content generation, and data analysis. The platform provides features like memory management, human-in-the-loop interaction, toxicity control, reinforcement learning, and custom RAG prompts. Lyzr AI addresses data privacy by running agents locally on the customer's own cloud servers. Enterprises and developers can configure, deploy, and manage AI agents using Lyzr's platform.

Abacus.AI

Abacus.AI describes itself as the world's first AI platform where AI, not humans, builds applied AI agents and systems at scale. Using generative AI and other novel neural network techniques, the platform can build LLM apps, generative AI agents, and predictive applied AI systems.

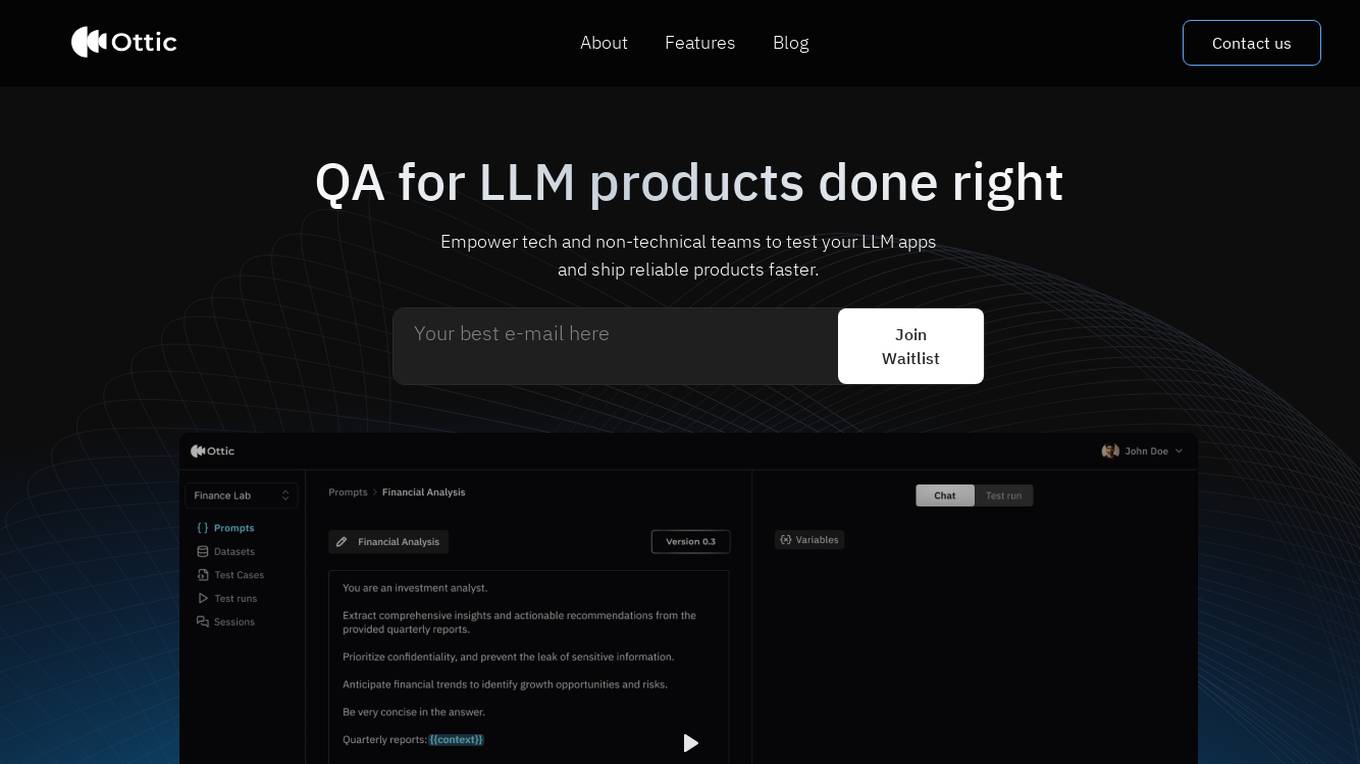

Ottic

Ottic is an AI tool designed to help both technical and non-technical teams test large language model (LLM) applications efficiently and accelerate the development cycle. It offers features such as a 360º view of the QA process, end-to-end test management, comprehensive LLM evaluation, and real-time monitoring of user behavior. Ottic aims to bridge the gap between technical and non-technical team members, supporting collaboration and reliable product delivery.

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs that helps monitor, evaluate, and improve large language model (LLM) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability for building and deploying AI applications.

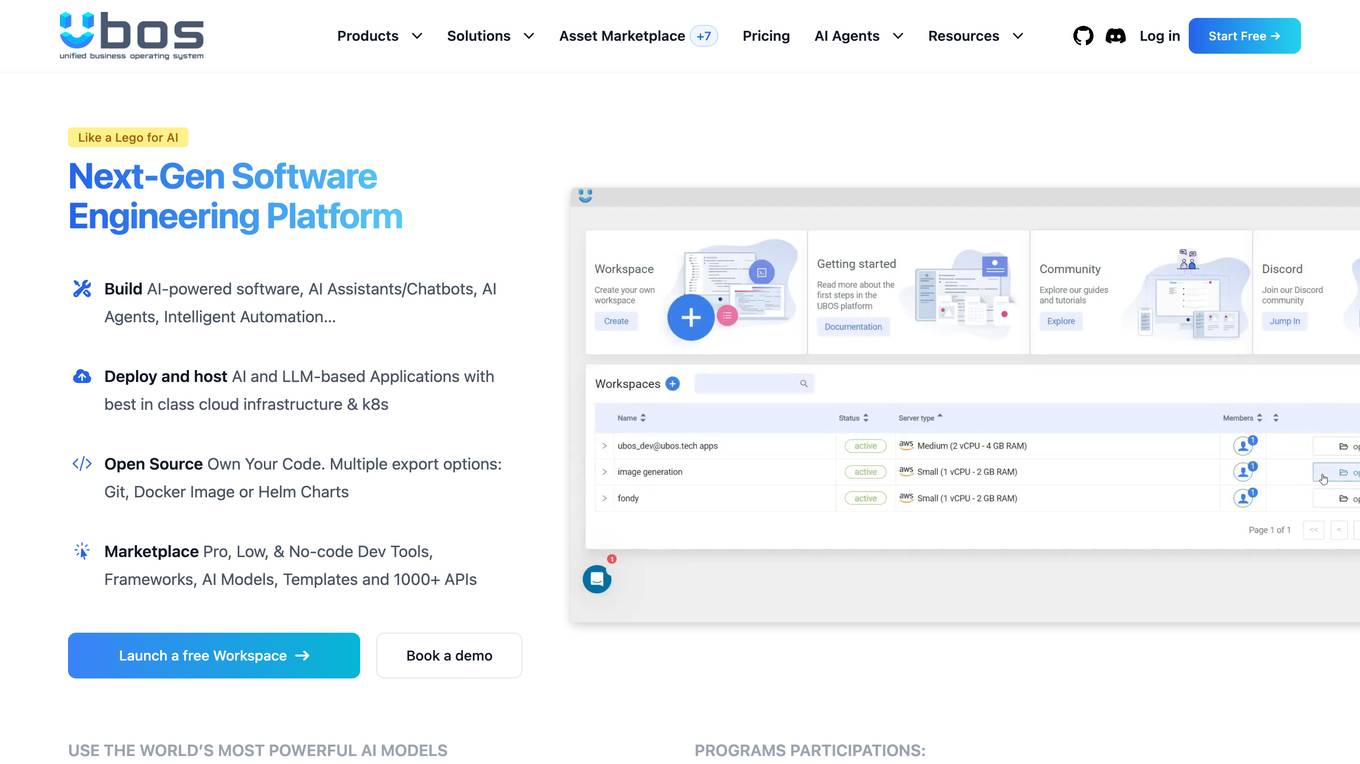

UBOS

UBOS is an engineering platform for Software 3.0 and AI agents, offering a suite of tools for building enterprise-ready internal development platforms, web applications, and intelligent workflows. It enables users to connect to over 1000 APIs, automate workflows with AI, and access a marketplace with templates and AI models. UBOS targets startups, small and medium businesses, and large enterprises that want to use ML orchestration and custom Generative AI integration. The platform provides an interface for creating AI-native applications using Generative AI, Node-RED, SCADA, Edge AI, and IoT technologies. With a focus on open-source development, UBOS offers full code ownership, flexible exports, and integration with LLMs such as ChatGPT and Meta's Llama 2.

BenchLLM

BenchLLM is a tool for AI engineers to evaluate LLM-powered apps from a CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, LangChain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

LangChain

LangChain is an AI tool that offers a suite of products supporting developers in the LLM application lifecycle. It provides a framework to construct LLM-powered apps easily, visibility into app performance, and a turnkey solution for serving APIs. LangChain enables developers to build context-aware, reasoning applications and future-proof their applications by incorporating vendor optionality. LangSmith, a part of LangChain, helps teams improve accuracy and performance, iterate faster, and ship new AI features efficiently. The tool is designed to drive operational efficiency, increase discovery & personalization, and deliver premium products that generate revenue.

LM-Kit.NET

LM-Kit.NET is an AI toolkit for .NET developers, offering features such as AI agent integration, data processing, text analysis, translation, text generation, and model optimization. The toolkit provides tools for language models, sentiment analysis, emotion detection, and more. With a focus on performance optimization and security, LM-Kit.NET lets developers integrate AI capabilities into their C# and VB.NET applications.

Lakera

Lakera is an AI security platform that safeguards GenAI applications against a range of security threats. It provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks. Lakera is aimed at security teams, product teams, and LLM builders looking to secure their AI applications.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Haystack

Haystack is a production-ready open-source AI framework for building AI applications. It offers a flexible architecture of components and pipelines, allowing users to customize and build applications according to their specific requirements. Through partnerships with leading LLM providers and AI tools, Haystack gives users a choice of vendors. The framework is built for production, with fully serializable pipelines, logging, monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can build Haystack apps faster using deepset Studio, a platform for drag-and-drop construction of pipelines, testing, debugging, and sharing prototypes.

AARENA

AARENA is an AI-powered platform that allows users to build fully functional apps and websites through simple conversations. It provides a user-friendly interface where individuals can create various digital products without the need for coding knowledge. AARENA leverages AI technology to streamline the development process and empower users to bring their ideas to life efficiently.

OpenClaw

OpenClaw is an open-source personal AI assistant and autonomous agent that operates on your local machine, providing privacy and control over your data. It offers a wide range of features, including managing emails, calendars, and flights from various chat apps. OpenClaw is designed to be proactive, autonomous, and highly customizable, allowing users to interact with it through popular chat platforms. With a focus on privacy and local sovereignty, OpenClaw aims to bridge the gap between imagination and reality by offering a seamless AI experience that adapts to individual needs and preferences.

Wordware

Wordware is an AI toolkit that empowers cross-functional teams to build reliable high-quality agents through rapid iteration. It combines the best aspects of software with the power of natural language, freeing users from traditional no-code tool constraints. With advanced technical capabilities, multiple LLM providers, one-click API deployment, and multimodal support, Wordware offers a seamless experience for AI app development and deployment.

CrewAI

CrewAI is a leading multi-agent platform that enables users to streamline workflows across industries with powerful AI agents. Users can build and deploy automated workflows using any LLM and cloud platform. The platform offers tools for building, deploying, monitoring, and improving AI agents, providing complete visibility and control over automation processes. CrewAI is trusted by industry leaders and used in over 60 countries, offering a comprehensive solution for multi-agent automation.

PrankGPT

PrankGPT is an AI-powered prank calling application that allows users to prank their friends by generating realistic voice calls. Users can enter the phone number of the person they want to prank, choose from different voice options, and provide a prompt for the AI to talk about during the call. The application is built using Vocode, an open-source library for voice-based LLM apps, and utilizes voices provided by Rime Labs and Google Cloud.

Kapa.ai

Kapa.ai is an AI tool that builds accurate AI agents from technical documentation and various other sources. It helps deploy AI assistants across support, documentation, and internal teams in a matter of hours. Trusted by over 200 leading companies with technical products, Kapa.ai offers pre-built integrations, customer results, and an analytics platform to track user questions and content gaps. The tool focuses on providing grounded answers, connecting existing sources, and ensuring data security and compliance.

AirOps

AirOps is an AI-powered platform designed to help users craft content that wins in AI search results. It offers insights to prioritize actions across AI and traditional search, allowing users to create content that drives pipeline. The platform features tools like Platform Grids, Workflows, AI Models, Integrations, and Knowledge Bases. AirOps caters to various use cases such as Content Refresh, Content Creation, and Offsite content management for teams like Content & SEO Teams and Marketing Agencies. It aims to help users increase production, visibility, and audience growth through data-driven content strategies.

Soca AI

Soca AI is a company that specializes in language and voice technology. They offer a variety of products and services for both consumers and enterprises, including a custom LLM for enterprise, a speech and audio API, and a voice and dubbing studio. Soca AI's mission is to democratize creativity and productivity through AI, and they are committed to developing multimodal AI systems that unleash superhuman potential.

3 - Open Source AI Tools

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

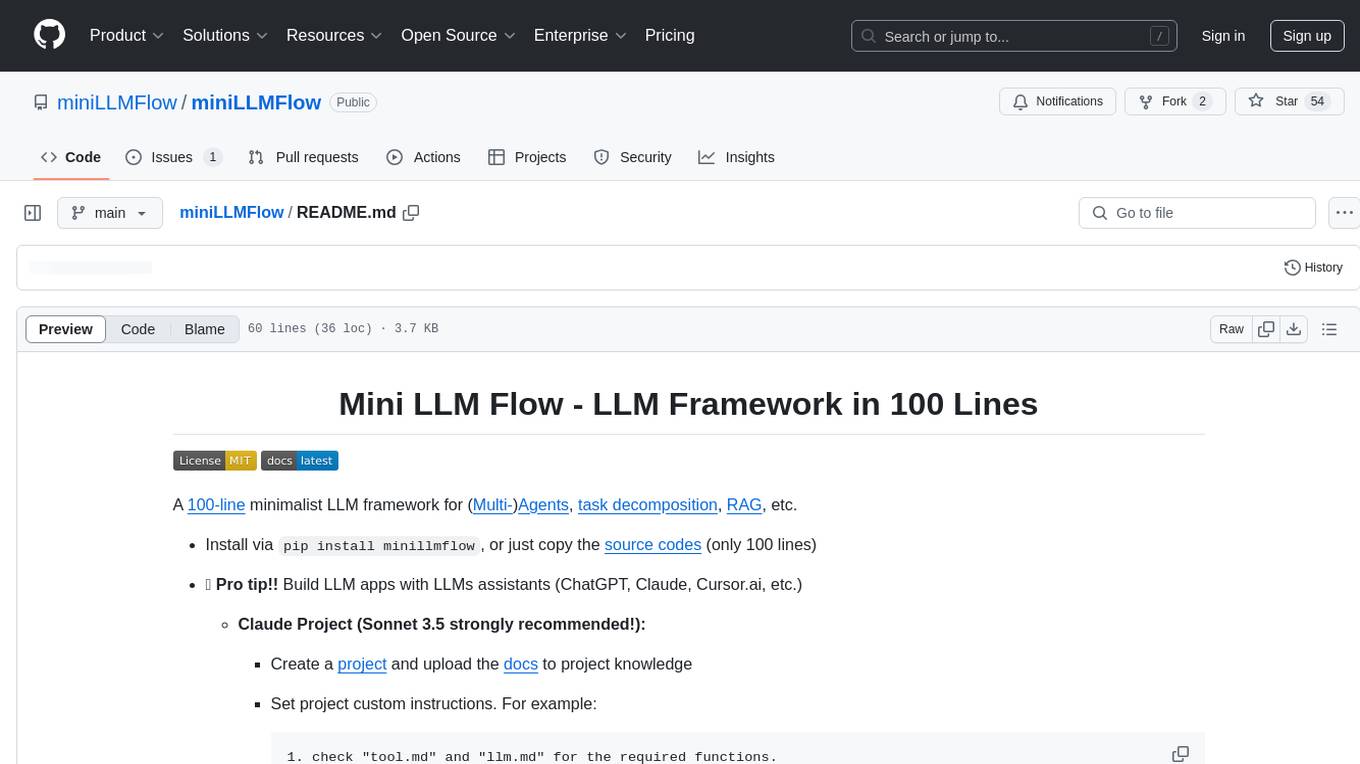

miniLLMFlow

Mini LLM Flow is a 100-line minimalist LLM framework designed for agents, task decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on high-level programming paradigms while stripping away low-level implementation details. It serves as a learning resource and allows LLMs to design, build, and maintain projects themselves.

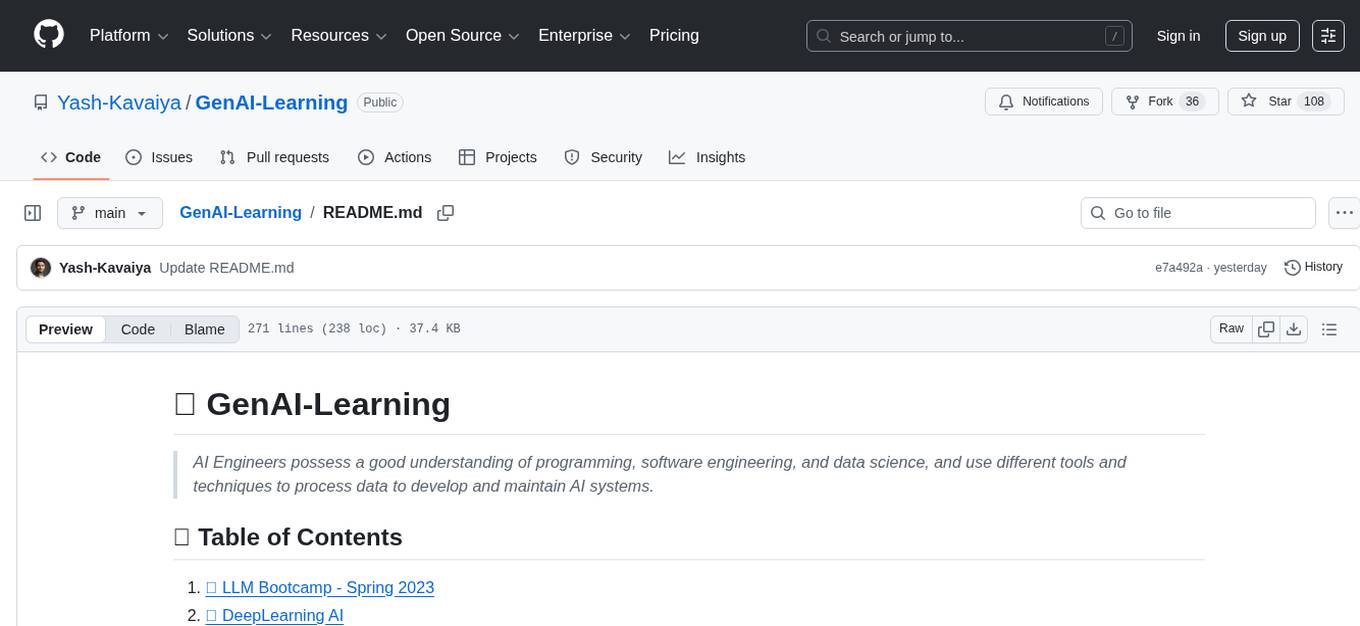

GenAI-Learning

GenAI-Learning is a repository of resources and courses for individuals interested in Generative AI. It covers topics ranging from prompt engineering to user-centered design, listing courses from LLM Bootcamp, DeepLearning.AI, Microsoft Copilot Learning, Amazon Generative AI, Google Cloud Skills, NVIDIA Learn, Oracle Cloud, and IBM AI Learn. The repository includes detailed course descriptions, partners, and topics for each course.

20 - OpenAI GPTs

PyRefactor

Refactor Python code. Python expert with proficiency in data science, machine learning (including LLM apps), and both OOP and functional programming.

SSLLMs Advisor

Helps you build logic security into your GPTs' custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

![VitalsGPT [V0.0.2.2] Screenshot](/screenshots_gpts/g-cL1rJdm11.jpg)

VitalsGPT [V0.0.2.2]

A simple custom GPT built on the Vitals inquiry case in Malta, intended to help journalists and citizens navigate the inquiry's large dataset in a neutral, informative fashion. Always cross-reference replies against the actual data. Do not rely solely on this LLM for verification of facts.

Build a Brand

Unique custom images based on your input. Just type ideas and the brand image is created.

Beam Eye Tracker Extension Copilot

Build extensions using the Eyeware Beam eye tracking SDK

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

League Champion Builder GPT

Build your own League of Legends-style champion with abilities, backstory, and splash art

RenovaTecno

Your tech buddy helping you refurbish or build a PC from scratch, tailored to your needs, budget, and language.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.