llmops-promptflow-template

LLMOps with Prompt Flow is a "LLMOps template and guidance" to help you build LLM-infused apps using Prompt Flow. It offers a range of features including Centralized Code Hosting, Lifecycle Management, Variant and Hyperparameter Experimentation, A/B Deployment, reporting for all runs and experiments and so on.

Stars: 222

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

README:

This template can be used for both Azure AI Studio and Azure Machine Learning. It can be used for both Azure and local execution. It supports all types of flow: Python class flows, function flows and YAML flows. It supports GitHub, Azure DevOps and Jenkins CI/CD orchestration. It also supports pure Python based evaluation using the promptflow-evals package. It should be used for inner-loop experimentation and evaluation, and for outer-loop deployment and inferencing.

NOTE: A new FAQ section has been added to help engineers, data scientists and developers find answers to general questions on configuring and using this template.

Large Language Model Operations, or LLMOps, has become the cornerstone of efficient prompt engineering and of the development and deployment of LLM-infused applications. As the demand for LLM-infused applications continues to soar, organizations find themselves in need of a cohesive and streamlined process to manage their end-to-end lifecycle.

The rise of AI and large language models (LLMs) has transformed various industries, enabling the development of innovative applications with human-like text understanding and generation capabilities. This revolution has opened up new possibilities across fields such as customer service, content creation, and data analysis.

As LLMs rapidly evolve, the importance of Prompt Engineering becomes increasingly evident. Prompt Engineering plays a crucial role in harnessing the full potential of LLMs by creating effective prompts that cater to specific business scenarios. This process enables developers to create tailored AI solutions, making AI more accessible and useful to a broader audience.

This template is an experimentation and evaluation framework for Prompt Flow. It is not just a set of CI/CD pipelines for Prompt Flow, although it supports them. It has a rich set of features for experimentation, evaluation, deployment and monitoring of Prompt Flow. It is a complete end-to-end solution for Prompt Flow operationalization.

The template supports both Azure AI Studio and Azure Machine Learning; which service is used depends on the configuration. It provides a seamless migration experience for experimentation, evaluation and deployment of Prompt Flow across services.

This template supports different types of flows, allowing you to define and execute workflows based on your specific requirements. The two main flow types supported are:

- Flexible Flows

  - Function based flows (Python functions)

  - Class based flows (Python classes)

- Directed Acyclic Graph (DAG) Flows

  - YAML based flows

One of the powerful features of this project is its ability to automatically detect the flow type and execute the flow accordingly. This allows you to experiment with different flow types and choose the one that best suits your needs.
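As a rough illustration of what flow type detection could look like, the sketch below inspects a flow folder for its definition file. It assumes the Prompt flow convention that DAG flows are defined in `flow.dag.yaml` and flexible flows in `flow.flex.yaml`. The function name `detect_flow_type` is hypothetical and is not taken from this template's code, which may detect flow types differently.

```python
from pathlib import Path


def detect_flow_type(flow_dir: str) -> str:
    """Return "dag", "flex", or "unknown" for a flow folder.

    Assumption: DAG flows ship a flow.dag.yaml file and flexible
    (function or class based) flows ship a flow.flex.yaml file.
    """
    folder = Path(flow_dir)
    if (folder / "flow.dag.yaml").is_file():
        return "dag"
    if (folder / "flow.flex.yaml").is_file():
        return "flex"
    return "unknown"
```

A caller could branch on the returned string to pick the matching execution path for the flow.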

This template supports:

- Local invocation and execution of flows for experimentation and evaluation locally.

- Local invocation but execution of flows for experimentation and evaluation on Azure.

- Pipeline based invocation but execution of flows for experimentation and evaluation on Azure.

- Pipeline based invocation and execution of flows for experimentation and evaluation on Build Server.

- Deployment of all types of flows to Kubernetes, Azure Web Apps and AML/AI Studio Managed compute.

- Managing Large Language Model based flows, from local experimentation to production deployment, has been far from straightforward and isn't a one-size-fits-all task.

- Each flow has its unique lifecycle, from initial experimentation to deployment, and each stage presents its own set of challenges.

- Organizations often deal with multiple flows concurrently, each with its own objectives, requirements, and complexities. This can quickly become overwhelming without proper management tools.

- It involves handling multiple flows, their unique lifecycles, experimentation with various configurations, and ensuring smooth deployments.

- LLM-infused applications are designed to understand and generate human-like text based on the input they receive. They are built from prompts that need engineering cadence and rigour.

- Prompt flow is a powerful feature that simplifies and streamlines the Prompt Engineering process for LLM-infused applications. It enables users to create, evaluate, and deploy high-quality flows with ease and efficiency.

- How do we best augment LLM-infused applications with LLMOps and engineering rigour? This template aims to assist in the development of those types of applications using Prompt flow and LLMOps.

- Bringing discipline to the data preparation for LLM app development by following DataOps best practices.

That's where LLMOps with Prompt flow comes in. LLMOps with Prompt flow is a "LLMOps template and guidance" to help you build LLM-infused apps using Prompt flow. It provides the following features:

- Centralized Code Hosting: This repo supports hosting code for multiple flows based on prompt flow, providing a single repository for all your flows. Think of this platform as a single repository where all your prompt flow code resides. It's like a library for your flows, making it easy to find, access, and collaborate on different projects.

- Lifecycle Management: Each flow enjoys its own lifecycle, allowing for smooth transitions from local experimentation to production deployment.

- Variant and Hyperparameter Experimentation: Experiment with multiple variants and hyperparameters, evaluating flow variants with ease. Variants and hyperparameters are like ingredients in a recipe. This platform allows you to experiment with different combinations of variants across multiple nodes in a flow.

- A/B Deployment: Seamlessly implement A/B deployments, enabling you to compare different flow versions effortlessly. Just as in traditional A/B testing for websites, this platform facilitates A/B deployment for prompt flow flows. This means you can effortlessly compare different versions of a flow in a real-world setting to determine which performs best.

- Many-to-many dataset/flow relationships: Accommodate multiple datasets for each standard and evaluation flow, ensuring versatility in flow test and evaluation. The platform is designed to accommodate multiple datasets for each flow.

- Multiple Deployment Targets: The repo supports deployment of flows to Kubernetes and Azure Managed computes driven through configuration ensuring that your flows can scale as needed.

- Comprehensive Reporting: Generate detailed reports for each variant configuration, allowing you to make informed decisions. Provides detailed metric collection for all variant bulk runs and experiments, enabling data-driven decisions in csv as well as HTML files.

- Offers BYOF (bring-your-own-flows). A complete platform for developing multiple use-cases related to LLM-infused applications.

- Offers configuration based development. No need to write extensive boiler-plate code.

- Provides execution of both prompt experimentation and evaluation locally as well as on the cloud.

- Provides notebooks for local evaluation of the prompts. Provides a library of functions for local experimentation.

- Endpoint testing within the pipeline after deployment to check its availability and readiness.

- Provides an optional Human-in-loop step to validate prompt metrics before deployment.

LLMOps with Prompt flow provides capabilities for both simple as well as complex LLM-infused apps. It is completely customizable to the needs of the application.
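To make the variant experimentation feature listed above more concrete, here is a minimal sketch of enumerating one variant per node across several nodes of a flow. The node names and variant names are hypothetical, and the helper `variant_combinations` is not part of this template. Whether the template evaluates the full Cartesian product or only a configured subset is not stated here, so treat this as an illustration of the idea only.

```python
from itertools import product


def variant_combinations(node_variants: dict[str, list[str]]) -> list[dict[str, str]]:
    """Enumerate every assignment of exactly one variant to each node."""
    nodes = list(node_variants)
    return [
        dict(zip(nodes, choice))
        for choice in product(*(node_variants[n] for n in nodes))
    ]


# Hypothetical node and variant names, for illustration only.
combos = variant_combinations(
    {
        "summarize": ["variant_0", "variant_1"],
        "classify": ["variant_0", "variant_1", "variant_2"],
    }
)
print(len(combos))  # 6 combinations (2 x 3)
```

Each dictionary in `combos` maps a node name to the variant chosen for it, which is the shape a bulk run per combination would need.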

Each use case (set of Prompt flow standard and evaluation flows) should follow the folder structure as shown here:

- .azure-pipelines : It contains the CI and PR related pipelines for Azure DevOps and specific to a use-case

- configs : It contains the configuration files for the use-case deployment

- data : This folder contains data files related to Prompt flow standard and evaluation flow

- environment : It contains a Dockerfile used for running containers with flows for inferencing on Azure Web Apps and env.yml for declaring app specific environment variables.

- flows : It should contain at least two folders, one for standard Prompt flow related files and another for evaluation flow related files. There can be multiple evaluation flow related folders.

- tests : contains unit tests for the flows

- data-pipelines : It contains the data pipelines to generate the datasets (experimentation, evaluation etc.) necessary for the flows. This folder will have sub-folders specific to the data engineering tool - Microsoft Fabric, Azure ML etc.

Additionally, there is an experiment.yaml file that configures the use-case (see file description and specs for more details). There is also a sample-request.json file containing test data for testing endpoints after deployment.
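The layout described above can be checked with a small script before running any pipeline. The sketch below is a hypothetical helper, not part of the template; it treats every folder and file named above as required, which is an assumption (some entries, such as data-pipelines, may be optional in practice).

```python
from pathlib import Path

# Entries named in the use-case folder structure described above.
EXPECTED_DIRS = [
    ".azure-pipelines",
    "configs",
    "data",
    "environment",
    "flows",
    "tests",
    "data-pipelines",
]
EXPECTED_FILES = ["experiment.yaml", "sample-request.json"]


def missing_entries(use_case_dir: str) -> list[str]:
    """Return the expected folders and files that are absent from a use-case."""
    root = Path(use_case_dir)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing
```

An empty list means the use-case folder contains every entry from the description.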

- The '.azure-pipelines' folder contains the common Azure DevOps pipelines for the platform and any changes to them will impact execution of all the flows.

- The '.github' folder contains the GitHub workflows for the platform as well as the use-cases. This is a bit different from Azure DevOps because all GitHub workflows must be within this single folder for execution.

- The '.jenkins' folder contains the Jenkins declarative pipelines for the platform as well as the use-cases and individual jobs.

- The 'docs' folder contains step-by-step guides for Azure DevOps, GitHub Workflows and Jenkins related configuration.

- The 'llmops' folder contains all the code related to flow execution, evaluation and deployment.

- The 'dataops' folder contains all the code related to data pipeline deployment.

- The 'local_execution' folder contains Python scripts for executing both the standard and evaluation flow locally.

The project includes 6 examples demonstrating different scenarios:

- Web Classification (YAML-based)

  Location: ./web_classification

  Importance: Demonstrates website content summarization with multiple variants, showcasing the flexibility and customization options available in the template.

- Named Entity Recognition (YAML-based)

  Location: ./named_entity_recognition

  Importance: Showcases the extraction of named entities from text, which is valuable for various natural language processing tasks and information extraction.

- Math Coding (YAML-based)

  Location: ./math_coding

  Importance: Showcases the ability to perform mathematical calculations and generate code snippets, highlighting the versatility of the template in handling computational tasks.

- Chat with PDF (YAML-based, RAG-based)

  Location: ./chat_with_pdf

  Importance: Demonstrates a conversational interface for interacting with PDF documents, leveraging the power of retrieval-augmented generation (RAG) to provide accurate and relevant responses.

- Code Generation (Function-based flows)

  Location: ./function_flows

  Importance: Demonstrates the generation of code snippets based on user prompts, showcasing the potential for automating code generation tasks.

- Chat Application (Class-based flows)

  Location: ./class_flows

  Importance: Showcases a chat application built using class-based flows, illustrating the structuring and organization of more complex conversational interfaces.

- Full documentation on using this repo using Azure DevOps can be found here

- Full documentation on using this repo using Github Workflows can be found here

- Full documentation on using this repo using Jenkins can be found here

- Documentation about adding a new flow is available here

- New experiment.yaml configuration here

The repo supports deployment to Kubernetes, Kubernetes ARC, Azure Web Apps and AzureML Managed compute, along with A/B deployment for AzureML Managed compute.
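A/B deployment usually means splitting endpoint traffic between two deployments by percentage. The helper below is a hypothetical sanity check for such a split and is not taken from this template. The deployment names `blue` and `green` are illustrative, and the rule that percentages must add up to exactly 100 is an assumption of this sketch.

```python
def validate_traffic_split(traffic: dict[str, int]) -> None:
    """Raise ValueError unless each share is in 0-100 and shares sum to 100."""
    for name, percent in traffic.items():
        if not 0 <= percent <= 100:
            raise ValueError(f"{name}: {percent} is outside 0-100")
    total = sum(traffic.values())
    if total != 100:
        raise ValueError(f"traffic sums to {total}, expected 100")


# Illustrative split: most traffic stays on the current deployment.
validate_traffic_split({"blue": 90, "green": 10})
```

Running a check like this before applying a configuration catches splits that would leave part of the traffic unassigned.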

The pipeline execution consists of multiple stages and jobs in each stage:

The repo generates multiple reports (experiments-runs and metrics examples are shown) :

To harness the capabilities of the local execution, follow these installation steps:

- Clone the Repository: Begin by cloning the template's repository from GitHub.

git clone https://github.com/microsoft/llmops-promptflow-template.git

- Set up the env file: Create a .env file at the top folder level and provide information for the items mentioned. Add as many connection names as needed. All the flow examples in this repo use an AzureOpenAI connection named aoai. Add a line aoai={"api_key": "","api_base": "","api_type": "azure","api_version": "2023-03-15-preview"} with updated values for api_key and api_base. If additional connections with different names are used in your flows, they should be added accordingly. Currently, flows with AzureOpenAI as the provider are supported.

experiment_name=

connection_name_1={ "api_key": "","api_base": "","api_type": "azure","api_version": "2023-03-15-preview"}

connection_name_2={ "api_key": "","api_base": "","api_type": "azure","api_version": "2023-03-15-preview"}

- Prepare the local conda or virtual environment to install the dependencies.

python -m pip install promptflow promptflow-tools promptflow-sdk jinja2 promptflow[azure] openai promptflow-sdk[builtins] python-dotenv

- Bring or write your flows into the template based on documentation here.

- Write Python scripts similar to the provided examples in the local_execution folder.
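The .env format described in the steps above mixes plain values with connection entries whose values are JSON objects. The following sketch shows one way such a file could be read using only the standard library. The function `parse_env_connections` and the value `demo` are hypothetical; how the template itself loads the file (for example through python-dotenv, which is in the dependency list) is not shown here.

```python
import json


def parse_env_connections(env_text: str) -> dict[str, object]:
    """Parse KEY=VALUE lines; values starting with "{" are decoded as JSON.

    Malformed JSON raises json.JSONDecodeError so mistakes surface early.
    """
    parsed: dict[str, object] = {}
    for raw in env_text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip()
        parsed[key] = json.loads(value) if value.startswith("{") else value
    return parsed


# Sample content following the format shown in the setup steps.
sample = """experiment_name=demo
aoai={"api_key": "", "api_base": "", "api_type": "azure", "api_version": "2023-03-15-preview"}
"""
config = parse_env_connections(sample)
print(config["aoai"]["api_type"])  # azure
```

Splitting on the first `=` only keeps any `=` characters inside a JSON value intact.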

DataOps combines aspects of DevOps, agile methodologies, and data management practices to streamline the process of collecting, processing, and analyzing data. DataOps can help to bring discipline in building the datasets (training, experimentation, evaluation etc.) necessary for LLM app development.

The data pipelines are kept separate from the prompt engineering flows. Data pipelines create the datasets, and the datasets are registered as data assets in Azure ML for the flows to consume. This approach makes it possible to scale and troubleshoot different parts of the system independently.

For details on how to get started with DataOps, please follow this document - How to Configure DataOps.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

Copyright (c) Microsoft Corporation. All rights reserved.

Licensed under the MIT license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llmops-promptflow-template

Similar Open Source Tools

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

DevOpsGPT

DevOpsGPT is an AI-driven software development automation solution that combines Large Language Models (LLM) with DevOps tools to convert natural language requirements into working software. It improves development efficiency by eliminating the need for tedious requirement documentation, shortens development cycles, reduces communication costs, and ensures high-quality deliverables. The Enterprise Edition offers features like existing project analysis, professional model selection, and support for more DevOps platforms. The tool automates requirement development, generates interface documentation, provides pseudocode based on existing projects, facilitates code refinement, enables continuous integration, and supports software version release. Users can run DevOpsGPT with source code or Docker, and the tool comes with limitations in precise documentation generation and understanding existing project code. The product roadmap includes accurate requirement decomposition, rapid import of development requirements, and integration of more software engineering and professional tools for efficient software development tasks under AI planning and execution.

get-started-with-ai-agents

The 'Getting Started with Agents Using Azure AI Foundry' repository provides a solution that deploys a web-based chat application with an AI agent running in Azure Container App. The agent leverages Azure AI services for knowledge retrieval from uploaded files, enabling it to generate responses with citations. The solution includes built-in monitoring capabilities for easier troubleshooting and optimized performance. Users can deploy AI models, customize the agent, and evaluate its performance. The repository offers flexible deployment options through GitHub Codespaces, VS Code Dev Containers, or local environments.

intelligence-toolkit

The Intelligence Toolkit is a suite of interactive workflows designed to help domain experts make sense of real-world data by identifying patterns, themes, relationships, and risks within complex datasets. It utilizes generative AI (GPT models) to create reports on findings of interest. The toolkit supports analysis of case, entity, and text data, providing various interactive workflows for different intelligence tasks. Users are expected to evaluate the quality of data insights and AI interpretations before taking action. The system is designed for moderate-sized datasets and responsible use of personal case data. It uses the GPT-4 model from OpenAI or Azure OpenAI APIs for generating reports and insights.

btp-cap-genai-rag

This GitHub repository provides support for developers, partners, and customers to create advanced GenAI solutions on SAP Business Technology Platform (SAP BTP) following the Reference Architecture. It includes examples on integrating Foundation Models and Large Language Models via Generative AI Hub, using LangChain in CAP, and implementing advanced techniques like Retrieval Augmented Generation (RAG) through embeddings and SAP HANA Cloud's Vector Engine for enhanced value in customer support scenarios.

Document-Knowledge-Mining-Solution-Accelerator

The Document Knowledge Mining Solution Accelerator leverages Azure OpenAI and Azure AI Document Intelligence to ingest, extract, and classify content from various assets, enabling chat-based insight discovery, analysis, and prompt guidance. It uses OCR and multi-modal LLM to extract information from documents like text, handwritten text, charts, graphs, tables, and form fields. Users can customize the technical architecture and data processing workflow. Key features include ingesting and extracting real-world entities, chat-based insights discovery, text and document data analysis, prompt suggestion guidance, and multi-modal information processing.

llm-d

LLM-D is a machine learning model for sentiment analysis. It is designed to classify text data into positive, negative, or neutral sentiment categories. The model is trained on a large dataset of labeled text samples and uses natural language processing techniques to analyze and predict sentiment in new text inputs. LLM-D is a powerful tool for businesses and researchers looking to understand customer feedback, social media sentiment, and other text data sources. It can be easily integrated into existing applications or used as a standalone tool for sentiment analysis tasks.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

wandbot

Wandbot is a question-answering bot designed for Weights & Biases documentation. It employs Retrieval Augmented Generation with a ChromaDB backend for efficient responses. The bot features periodic data ingestion, integration with Discord and Slack, and performance monitoring through logging. It has a fallback mechanism for model selection and is evaluated based on retrieval accuracy and model-generated responses. The implementation includes creating document embeddings, constructing the Q&A RAGPipeline, model selection, deployment on FastAPI, Discord, and Slack, logging and analysis with Weights & Biases Tables, and performance evaluation.

motleycrew

Motleycrew is an ultimate framework for building multi-agent AI systems, allowing users to mix and match AI agents and tools from popular frameworks, design advanced workflows, and leverage dynamic knowledge graphs with simplicity and elegance. It acts as a conductor orchestrating a symphony of AI agents and tools, providing building blocks for creating AI systems and enabling users to focus on high-level design while taking care of the rest. The framework offers integration with various tools, flexibility in providing agents with tools or other agents, advanced flow design capabilities, and built-in observability and caching features.

Multi-Agent-Custom-Automation-Engine-Solution-Accelerator

The Multi-Agent -Custom Automation Engine Solution Accelerator is an AI-driven orchestration system that manages a group of AI agents to accomplish tasks based on user input. It uses a FastAPI backend to handle HTTP requests, processes them through various specialized agents, and stores stateful information using Azure Cosmos DB. The system allows users to focus on what matters by coordinating activities across an organization, enabling GenAI to scale, and is applicable to most industries. It is intended for developing and deploying custom AI solutions for specific customers, providing a foundation to accelerate building out multi-agent systems.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

db-ally

db-ally is a library for creating natural language interfaces to data sources. It allows developers to outline specific use cases for a large language model (LLM) to handle, detailing the desired data format and the possible operations to fetch this data. db-ally effectively shields the complexity of the underlying data source from the model, presenting only the essential information needed for solving the specific use cases. Instead of generating arbitrary SQL, the model is asked to generate responses in a simplified query language.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

For similar tasks

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

langflow

Langflow is an open-source Python-powered visual framework designed for building multi-agent and RAG applications. It is fully customizable, language model agnostic, and vector store agnostic. Users can easily create flows by dragging components onto the canvas, connect them, and export the flow as a JSON file. Langflow also provides a command-line interface (CLI) for easy management and configuration, allowing users to customize the behavior of Langflow for development or specialized deployment scenarios. The tool can be deployed on various platforms such as Google Cloud Platform, Railway, and Render. Contributors are welcome to enhance the project on GitHub by following the contributing guidelines.

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

cheat-sheet-pdf

The Cheat-Sheet Collection for DevOps, Engineers, IT professionals, and more is a curated list of cheat sheets for various tools and technologies commonly used in the software development and IT industry. It includes cheat sheets for Nginx, Docker, Ansible, Python, Go (Golang), Git, Regular Expressions (Regex), PowerShell, VIM, Jenkins, CI/CD, Kubernetes, Linux, Redis, Slack, Puppet, Google Cloud Developer, AI, Neural Networks, Machine Learning, Deep Learning & Data Science, PostgreSQL, Ajax, AWS, Infrastructure as Code (IaC), System Design, and Cyber Security.

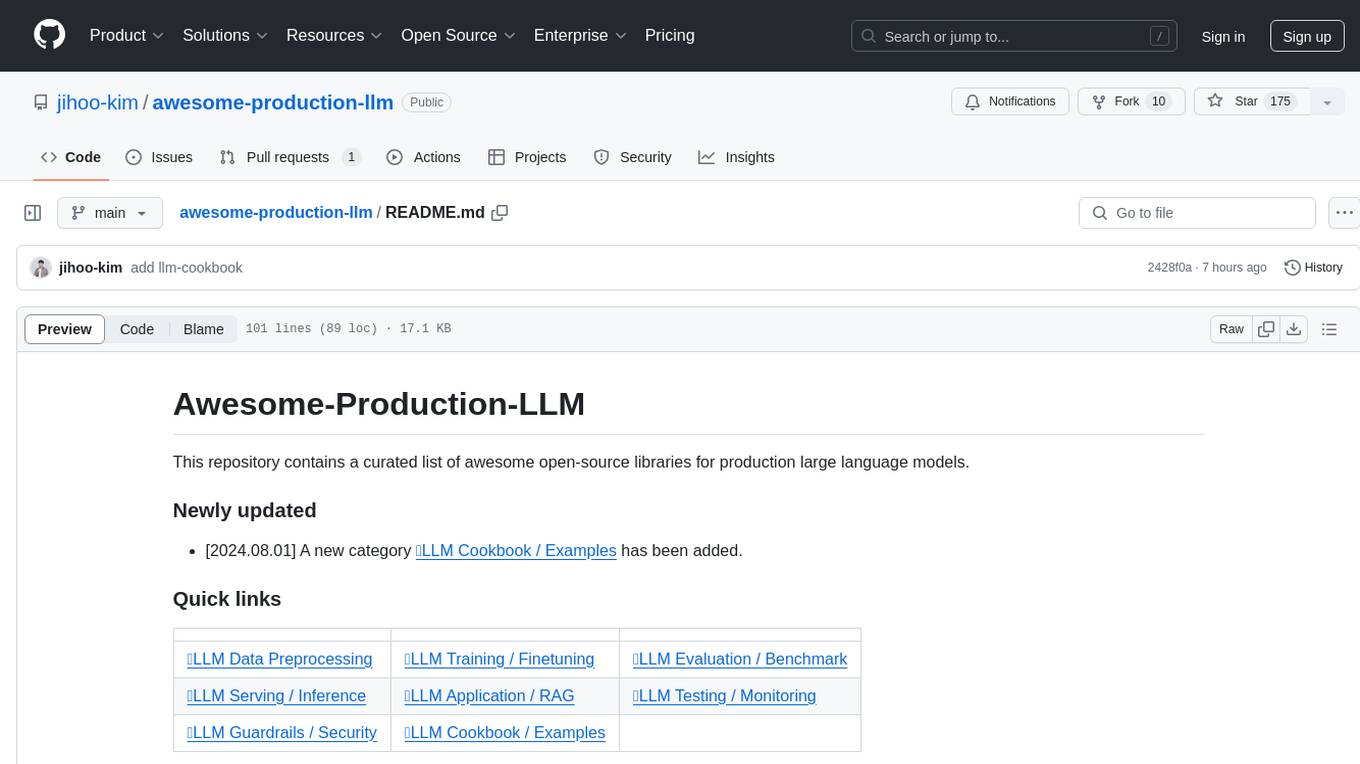

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

generative-ai-on-aws

Generative AI on AWS by O'Reilly Media provides a comprehensive guide on leveraging generative AI models on the AWS platform. The book covers various topics such as generative AI use cases, prompt engineering, large-language models, fine-tuning techniques, optimization, deployment, and more. Authors Chris Fregly, Antje Barth, and Shelbee Eigenbrode offer insights into cutting-edge AI technologies and practical applications in the field. The book is a valuable resource for data scientists, AI enthusiasts, and professionals looking to explore generative AI capabilities on AWS.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.