Chinese-Tiny-LLM


Stars: 168

Chinese-Tiny-LLM is a repository containing procedures for cleaning Chinese web corpora and pre-training code. It introduces CT-LLM, a 2B parameter language model focused on the Chinese language. The model primarily uses Chinese data from a 1,200 billion token corpus, showing excellent performance in Chinese language tasks. The repository includes tools for filtering, deduplication, and pre-training, aiming to encourage further research and innovation in language model development.

README:


This repository contains our suite of procedures for cleaning Chinese web corpora and pre-training code.

🔥[2024/5/10]: The pretraining code has been released, and we also invite you to follow Neo. 😆

We introduce CT-LLM, a 2B parameter language model, marking a shift towards focusing on the Chinese language for LLM development. Starting from scratch, CT-LLM primarily uses Chinese data from a 1,200 billion token corpus, including 800 billion Chinese, 300 billion English, and 100 billion code tokens. This mix enhances its Chinese processing abilities, further improved by alignment techniques. CT-LLM shows excellent performance in Chinese language tasks on the CHC-Bench and is also adept in English through SFT. This approach challenges the norm of relying on English corpora for LLM training, expanding training methodologies. By open-sourcing CT-LLM's training process, including data processing and the Massive Appropriate Pretraining Chinese Corpus (MAP-CC), and introducing the Chinese Hard Case Benchmark (CHC-Bench), we encourage further research and innovation, aiming for more inclusive and adaptable language models.

- MAP-CC: An open-source Chinese pretraining dataset with a scale of 800 billion tokens, along with a detailed suite of procedures for cleaning Chinese web corpora, offering the NLP community high-quality Chinese pretraining data and an effective methodology for data preparation.

- CHC-Bench: A well-chosen multidisciplinary benchmark of Chinese hard cases for instruction understanding and following.

- CT-LLM: The first Chinese-centric large language model, both pre-trained and fine-tuned primarily on Chinese corpora, offering significant insights into potential biases, Chinese language ability, and multilingual adaptability.

First, download the fastText language identification model into the filter directory. An example command is:

wget -P filter/ https://dl.fbaipublicfiles.com/fasttext/supervised-models/lid.176.bin

Once the fastText model binary is downloaded, filter text with a command like the following:

python3 filter/filter.py --input_data input_dir --output_dir output_dir --success_dir success_dir --log_dir log_dir --worker num_worker

where input_dir is the directory containing the jsonl files, output_dir is the directory to store the filtered jsonl files, success_dir is the directory to save files recording whether each input file was processed successfully, log_dir is the directory to save logs, and num_worker is the total number of processes that run the job in parallel.
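The README does not describe the rules inside filter.py beyond its use of the fastText language identification model. As a rough stand-in only, the sketch below keeps JSON lines whose content is mostly Chinese characters. The function names, the `text` field name, and the 0.5 threshold are assumptions made for illustration and are not taken from the repository.

```python
import json


def chinese_ratio(text: str) -> float:
    """Fraction of non-whitespace characters in the CJK Unified Ideographs block."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    cjk = sum(1 for c in chars if "\u4e00" <= c <= "\u9fff")
    return cjk / len(chars)


def filter_jsonl_lines(lines, content_field="text", min_ratio=0.5):
    """Yield the JSON lines whose content field looks predominantly Chinese.

    This character-ratio heuristic is a hypothetical substitute for the
    fastText-based language check, used here so the sketch has no dependencies.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if chinese_ratio(record.get(content_field, "")) >= min_ratio:
            yield line
```

In a per-file worker, `lines` would be an open jsonl file from input_dir and the yielded lines would be written to the matching file in output_dir.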

Here is an example command to run full text deduplication

python3 deduplication/full_text_dedup/full_text_dedup.py --input_dir input_dir --output_dir output_dir --content_field_name content_field_name

where input_dir is the directory containing the jsonl files, output_dir is the directory to save the deduplicated jsonl files, and content_field_name is the name of the content field in the jsonl files.

To reduce the total processing time, you can split the jsonl files into multiple partitions and run one job per partition in parallel.
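A minimal sketch of exact (full-text) deduplication and of one possible partitioning scheme follows. The README does not say how to form partitions; hashing the content, as done here, is an assumption chosen because identical texts then always land in the same partition, so partitions can be deduplicated independently. The function names and the use of MD5 are illustrative and not taken from full_text_dedup.py.

```python
import hashlib
import json


def content_digest(text: str) -> str:
    """Hex digest used as the identity of a document's full text."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def partition_of(text: str, num_partitions: int) -> int:
    """Map a text to a partition; identical texts get the same partition."""
    return int(content_digest(text), 16) % num_partitions


def dedup_exact(lines, content_field_name="text"):
    """Yield each JSON line whose content has not been seen before."""
    seen = set()
    for line in lines:
        record = json.loads(line)
        digest = content_digest(record[content_field_name])
        if digest not in seen:
            seen.add(digest)
            yield line
```

Storing digests instead of full texts keeps the `seen` set small even when documents are long.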

Here is an example command to run Generate Minhash

python3 deduplication/minhash_lsh/generate_minhash.py --input_dir input_dir --output_dir output_dir --workers num_workers --content_field_name content_field_name

where input_dir is the directory containing the jsonl files, output_dir is the directory to save the MinHash values, num_workers is the total number of processes executing the job, and content_field_name is the name of the content field in the jsonl files.
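To illustrate what a MinHash value is, the sketch below computes a signature over character n-gram shingles using only the standard library. The shingle size, the number of hash functions, and the use of salted BLAKE2b are assumptions for illustration; generate_minhash.py may use different parameters or a dedicated library.

```python
import hashlib


def shingles(text: str, n: int = 5) -> set:
    """Set of overlapping character n-grams (the whole text if it is short)."""
    if len(text) <= n:
        return {text}
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def minhash_signature(text: str, num_perm: int = 64, n: int = 5) -> list:
    """One minimum hash value per salted hash function."""
    grams = shingles(text, n)
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(8, "little")  # a different salt acts as a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(g.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "little",
            )
            for g in grams
        ))
    return signature


def estimated_jaccard(sig_a, sig_b) -> float:
    """Fraction of matching positions, an estimate of shingle-set Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)
```

Two documents with largely overlapping shingle sets agree in most signature positions, which is what the later steps exploit to find near-duplicates without comparing full texts.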

Here is an example command to run Generate Duplicate Pairs

python3 deduplication/minhash_lsh/generate_dup_pairs.py --input_dir input_dir --output_dir output_dir

where input_dir is the directory containing the MinHash values, which is the same as the output_dir in Generate Minhash, and output_dir is the directory to save the duplicate pairs files.
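The script path suggests locality-sensitive hashing (LSH) over the MinHash values. A minimal banding sketch is shown below: each signature is cut into bands, and documents that share any identical band become a candidate pair. The function name, the band count, and the string document ids are assumptions, not details of generate_dup_pairs.py.

```python
from collections import defaultdict
from itertools import combinations


def candidate_pairs(signatures: dict, bands: int = 16) -> set:
    """Return pairs of doc ids whose signatures agree on at least one band.

    `signatures` maps a doc id to its MinHash signature; all signatures
    are assumed to have the same length.
    """
    pairs = set()
    if not signatures:
        return pairs
    sig_len = len(next(iter(signatures.values())))
    rows = sig_len // bands
    if rows == 0:
        raise ValueError("bands must not exceed the signature length")
    for band in range(bands):
        buckets = defaultdict(list)
        for doc_id, sig in signatures.items():
            key = tuple(sig[band * rows:(band + 1) * rows])
            buckets[key].append(doc_id)
        for ids in buckets.values():
            # every pair inside a bucket is a near-duplicate candidate
            for a, b in combinations(sorted(ids), 2):
                pairs.add((a, b))
    return pairs
```

More bands with fewer rows each make the match more permissive; fewer bands with more rows make it stricter.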

Here is an example command to run Generate Connected Components

python3 deduplication/minhash_lsh/generate_connected_components.py --input_dir input_dir --output_file output_file --num_workers num_workers

where input_dir is the directory containing the duplicate pairs files, which is the same as the output_dir in Generate Duplicate Pairs, output_file is the file path to save information about the connected components, and num_workers is the total number of processes executing the job.
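Grouping duplicate pairs into connected components can be sketched with a union-find structure, as below. This is a generic illustration under the assumption that each pair is a tuple of hashable document ids; it is not the code in generate_connected_components.py.

```python
def connected_components(pairs):
    """Group ids linked by duplicate pairs into components (list of sets)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        root = x
        while parent[root] != root:
            root = parent[root]
        while parent[x] != root:  # path compression
            parent[x], x = root, parent[x]
        return root

    for a, b in pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two components

    groups = {}
    for node in list(parent):
        groups.setdefault(find(node), set()).add(node)
    return list(groups.values())
```

If A duplicates B and B duplicates C, all three end up in one component even when A and C were never paired directly.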

Here is an example command to run Generate Duplicated Line ID for Each File

python3 deduplication/minhash_lsh/generate_dup_line_id_for_each_file.py --input_file input_file --output_dir output_dir

where input_file is the file containing information about the connected components, which is the same as the output_file in Generate Connected Components, and output_dir is the directory to save the duplicate line id information for each file.
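One way to turn connected components into per-file removal lists is sketched below: keep a single representative per component and mark every other member for removal in its own file. Representing a document as a `(file_name, line_id)` tuple and keeping the smallest tuple are assumptions for illustration; the repository's on-disk format is not described in this README.

```python
from collections import defaultdict


def dup_line_ids_per_file(components):
    """Map each file name to the set of line ids that should be removed.

    `components` is an iterable of sets of (file_name, line_id) tuples.
    The smallest tuple in each component is kept as the representative.
    """
    to_remove = defaultdict(set)
    for component in components:
        ordered = sorted(component)
        for file_name, line_id in ordered[1:]:  # ordered[0] is kept
            to_remove[file_name].add(line_id)
    return dict(to_remove)
```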

Here is an example command to run Remove Duplicates

python3 deduplication/minhash_lsh/remove_dup.py --input_dir input_dir --output_dir output_dir --dup_line_id_dir dup_line_id_dir --worker num_worker

where input_dir is the directory containing the original jsonl files, which is the same as the input_dir in Generate Minhash, output_dir is the directory to save the deduplicated jsonl files, dup_line_id_dir is the directory containing the duplicate line ids of all jsonl files, which is the same as the output_dir in Generate Duplicated Line ID for Each File, and num_worker is the total number of processes executing the job.
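The final removal step amounts to copying each jsonl file while skipping the marked lines. The sketch below assumes line ids are zero-based line numbers within a file; that convention and the function name are hypothetical and may differ from remove_dup.py.

```python
def remove_dup_from_file(input_path, output_path, dup_line_ids):
    """Copy input_path to output_path, skipping lines whose id is marked.

    Returns the number of lines kept. Line ids are assumed to be
    zero-based line numbers.
    """
    kept = 0
    with open(input_path, encoding="utf-8") as src, \
            open(output_path, "w", encoding="utf-8") as dst:
        for line_id, line in enumerate(src):
            if line_id in dup_line_ids:
                continue
            dst.write(line)
            kept += 1
    return kept
```

Because each file is processed independently, this step parallelizes naturally across files.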

Here is an example command to run Similar Line Deduplication

python3 deduplication/simlar_line_dedup/similar_line_dedup.py --input_dir input_dir --output_dir output_dir --wokers num_worker --content_field_name content_field_name

where input_dir is the directory containing the jsonl files, output_dir is the directory to save the deduplicated jsonl files, num_worker is the total number of processes executing the job, and content_field_name is the name of the content field in the jsonl files.
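The README does not explain how similar lines are detected. As one plausible reading, the sketch below drops a line from a document when it closely matches a line already kept in the same document. The use of `difflib.SequenceMatcher`, the 0.9 threshold, and the function name are assumptions, not the method in similar_line_dedup.py.

```python
from difflib import SequenceMatcher


def drop_similar_lines(text: str, threshold: float = 0.9) -> str:
    """Remove lines that are near-copies of an earlier line in the same text.

    Blank lines are always kept so paragraph breaks survive.
    """
    kept = []
    for line in text.split("\n"):
        stripped = line.strip()
        if stripped and any(
            SequenceMatcher(None, stripped, earlier.strip()).ratio() >= threshold
            for earlier in kept
            if earlier.strip()
        ):
            continue  # near-duplicate of a line we already kept
        kept.append(line)
    return "\n".join(kept)
```

The pairwise comparison is quadratic in the number of lines per document, which is acceptable for a sketch but would likely need a cheaper pre-filter at corpus scale.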

For detailed information and access to the pre-training code used in this project, please refer to Neo. This repo contains all the necessary code and configurations needed to replicate the pre-training process.

Welcome to follow Neo.

This model, developed for academic purposes, employs rigorously compliance-checked training data to uphold the highest standards of integrity and compliance. Despite our efforts, the inherent complexities of data and the broad spectrum of model applications prevent us from ensuring absolute accuracy or appropriateness of the model outputs in every scenario.

It is essential to highlight that our model and its associated training data are intended solely for scholarly research. We explicitly disclaim any liability for problems that may arise from improper use, interpretation errors, unlawful activities, the dissemination of false information, or any data security issues related to the utilization of our model or its training data.

We strongly encourage users to report any concerns related to data misuse, security breaches, or potential infringement issues directly to us for immediate investigation and resolution.

Contact: [email protected]; [email protected]

Our commitment to responsible data sharing and the security of our academic tools is paramount. We thank you for your cooperation in maintaining the ethical use of this technology.

The MAP-CC Dataset is made available under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0).

By using the MAP-CC Dataset, you accept and agree to be bound by the terms and conditions of the CC BY-NC-ND 4.0 License. This license allows users to share (copy and redistribute the material in any medium or format) the MAP-CC Dataset for non-commercial purposes only, and with no modifications or derivatives, as long as proper attribution is given to the creators. For further details, please refer to the LICENSE file.

We chose the CC BY-NC-ND 4.0 License for the MAP-CC Dataset to facilitate academic and educational use, promoting the spread of knowledge while protecting the work of the creators from unauthorized commercial use or modification.

@misc{du2024chinese,
  title={Chinese Tiny LLM: Pretraining a Chinese-Centric Large Language Model},
  author={Xinrun Du and Zhouliang Yu and Songyang Gao and Ding Pan and Yuyang Cheng and Ziyang Ma and Ruibin Yuan and Xingwei Qu and Jiaheng Liu and Tianyu Zheng and Xinchen Luo and Guorui Zhou and Binhang Yuan and Wenhu Chen and Jie Fu and Ge Zhang},
  year={2024},
  eprint={2404.04167},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}


Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

LLMonFHIR

LLMonFHIR is an iOS application that utilizes large language models (LLMs) to interpret and provide context around patient data in the Fast Healthcare Interoperability Resources (FHIR) format. It connects to the OpenAI GPT API to analyze FHIR resources, supports multiple languages, and allows users to interact with their health data stored in the Apple Health app. The app aims to simplify complex health records, provide insights, and facilitate deeper understanding through a conversational interface. However, it is an experimental app for informational purposes only and should not be used as a substitute for professional medical advice. Users are advised to verify information provided by AI models and consult healthcare professionals for personalized advice.

hi-ml

The Microsoft Health Intelligence Machine Learning Toolbox is a repository that provides low-level and high-level building blocks for Machine Learning / AI researchers and practitioners. It simplifies and streamlines work on deep learning models for healthcare and life sciences by offering tested components such as data loaders, pre-processing tools, deep learning models, and cloud integration utilities. The repository includes two Python packages, 'hi-ml-azure' for helper functions in AzureML, 'hi-ml' for ML components, and 'hi-ml-cpath' for models and workflows related to histopathology images.

Open-DocLLM

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

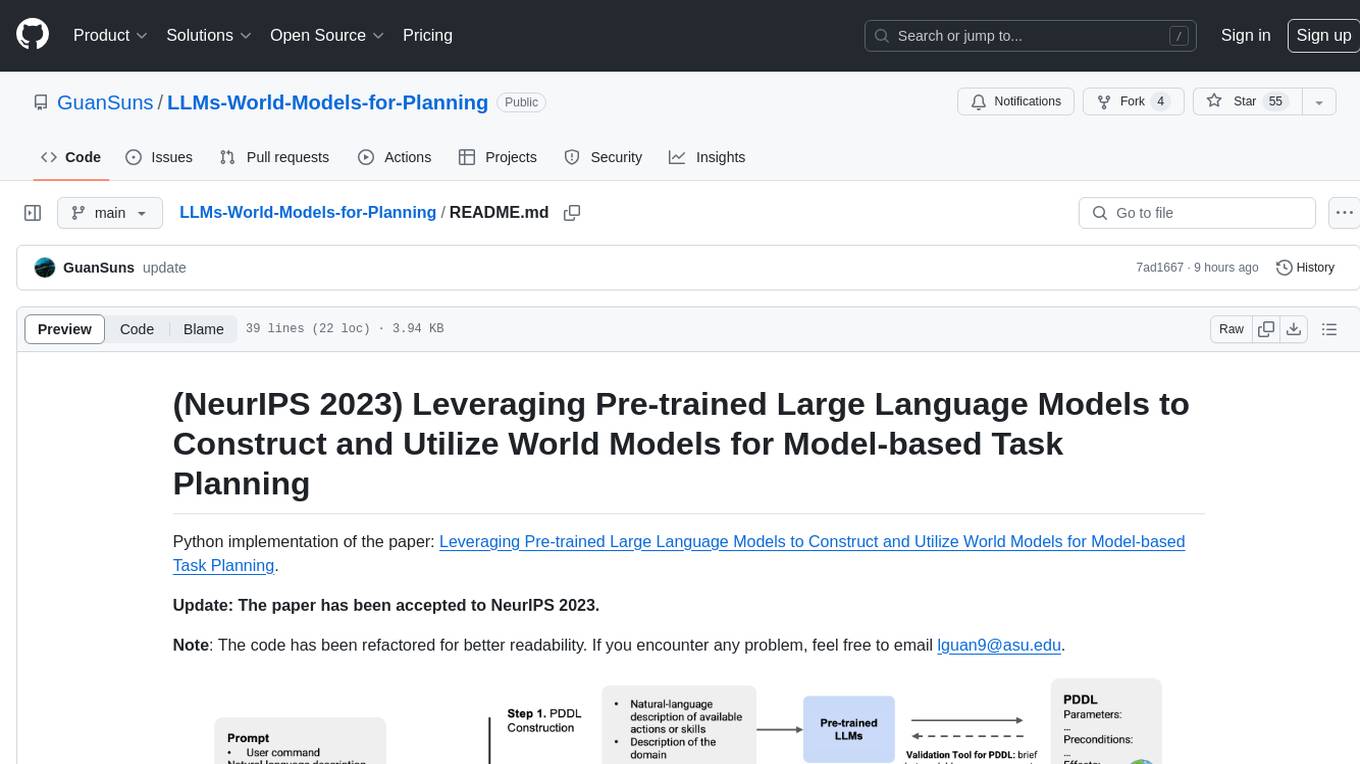

LLMs-World-Models-for-Planning

This repository provides a Python implementation of a method that leverages pre-trained large language models to construct and utilize world models for model-based task planning. It includes scripts to generate domain models using natural language descriptions, correct domain models based on feedback, and support plan generation for tasks in different domains. The code has been refactored for better readability and includes tools for validating PDDL syntax and handling corrective feedback.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

intelligence-toolkit

The Intelligence Toolkit is a suite of interactive workflows designed to help domain experts make sense of real-world data by identifying patterns, themes, relationships, and risks within complex datasets. It utilizes generative AI (GPT models) to create reports on findings of interest. The toolkit supports analysis of case, entity, and text data, providing various interactive workflows for different intelligence tasks. Users are expected to evaluate the quality of data insights and AI interpretations before taking action. The system is designed for moderate-sized datasets and responsible use of personal case data. It uses the GPT-4 model from OpenAI or Azure OpenAI APIs for generating reports and insights.

db-ally

db-ally is a library for creating natural language interfaces to data sources. It allows developers to outline specific use cases for a large language model (LLM) to handle, detailing the desired data format and the possible operations to fetch this data. db-ally effectively shields the complexity of the underlying data source from the model, presenting only the essential information needed for solving the specific use cases. Instead of generating arbitrary SQL, the model is asked to generate responses in a simplified query language.

dstoolkit-text2sql-and-imageprocessing

This repository provides sample code for improving RAG applications with rich data sources including SQL Warehouses and documents analysed with Azure Document Intelligence. It includes components for Text2SQL generation and querying, linking Azure Document Intelligence with AI Search for processing complex documents, and deploying AI search indexes. The plugins and skills aim to enhance response quality in RAG applications by accessing and pulling data from SQL tables, drawing insights from complex charts and images, and intelligently grouping similar sentences.

asreview

The ASReview project implements active learning for systematic reviews, utilizing AI-aided pipelines to assist in finding relevant texts for search tasks. It accelerates the screening of textual data with minimal human input, saving time and increasing output quality. The software offers three modes: Oracle for interactive screening, Exploration for teaching purposes, and Simulation for evaluating active learning models. ASReview LAB is designed to support decision-making in any discipline or industry by improving efficiency and transparency in screening large amounts of textual data.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

