WordLlama

Things you can do with the token embeddings of an LLM

Stars: 1407

WordLlama is a fast, lightweight NLP toolkit optimized for CPU hardware. It recycles components from large language models to create efficient word representations. It offers features like Matryoshka Representations, low resource requirements, binarization, and numpy-only inference. The tool is suitable for tasks like semantic matching, fuzzy deduplication, ranking, and clustering, making it a good option for NLP-lite tasks and exploratory analysis.

README:

WordLlama is a fast, lightweight NLP toolkit designed for tasks like fuzzy deduplication, similarity computation, ranking, clustering, and semantic text splitting. It operates with minimal inference-time dependencies and is optimized for CPU hardware, making it suitable for deployment in resource-constrained environments.

- 2025-01-04 We're excited to announce support for model2vec static embeddings. See also: Model2Vec

- 2024-10-04 Added semantic splitting inference algorithm. See our technical overview.

- Quick Start

- Features

- What is WordLlama?

- MTEB Results

- How Fast?

- Usage

- Training Notes

- Roadmap

- Extracting Token Embeddings

- Community Projects

- Citations

- License

Install WordLlama via pip:

pip install wordllamaLoad the default 256-dimensional model:

from wordllama import WordLlama

# Load the default WordLlama model

wl = WordLlama.load()

# Calculate similarity between two sentences

similarity_score = wl.similarity("I went to the car", "I went to the pawn shop")

print(similarity_score) # Output: e.g., 0.0664

# Rank documents based on their similarity to a query

query = "I went to the car"

candidates = ["I went to the park", "I went to the shop", "I went to the truck", "I went to the vehicle"]

ranked_docs = wl.rank(query, candidates)

print(ranked_docs)

# Output:

# [

# ('I went to the vehicle', 0.7441),

# ('I went to the truck', 0.2832),

# ('I went to the shop', 0.1973),

# ('I went to the park', 0.1510)

# ]- Fast Embeddings: Efficiently generate text embeddings using a simple token lookup with average pooling.

- Similarity Computation: Calculate cosine similarity between texts.

- Ranking: Rank documents based on their similarity to a query.

- Fuzzy Deduplication: Remove duplicate texts based on a similarity threshold.

- Clustering: Cluster documents into groups using KMeans clustering.

- Filtering: Filter documents based on their similarity to a query.

- Top-K Retrieval: Retrieve the top-K most similar documents to a query.

- Semantic Text Splitting: Split text into semantically coherent chunks.

- Binary Embeddings: Support for binary embeddings with Hamming similarity for even faster computations.

- Matryoshka Representations: Truncate embedding dimensions as needed for flexibility.

- Low Resource Requirements: Optimized for CPU inference with minimal dependencies.

WordLlama is a utility for natural language processing (NLP) that recycles components from large language models (LLMs) to create efficient and compact word representations, similar to GloVe, Word2Vec, or FastText.

Starting by extracting the token embedding codebook from state-of-the-art LLMs (e.g., LLaMA 2, LLaMA 3 70B), WordLlama trains a small context-less model within a general-purpose embedding framework. This approach results in a lightweight model that improves on all MTEB benchmarks over traditional word models like GloVe 300d, while being substantially smaller in size (e.g., 16MB default model at 256 dimensions).

WordLlama's key features include:

- Matryoshka Representations: Allows for truncation of the embedding dimension as needed, providing flexibility in model size and performance.

- Low Resource Requirements: Utilizes a simple token lookup with average pooling, enabling fast operation on CPUs without the need for GPUs.

- Binary Embeddings: Models trained using the straight-through estimator can be packed into small integer arrays for even faster Hamming distance calculations.

- Numpy-only Inference: Lightweight inference pipeline relying solely on NumPy, facilitating easy deployment and integration.

Because of its fast and portable size, WordLlama serves as a versatile tool for exploratory analysis and utility applications, such as LLM output evaluators or preparatory tasks in multi-hop or agentic workflows.

The following table presents the performance of WordLlama models compared to other similar models.

| Metric | WL64 | WL128 | WL256 (X) | WL512 | WL1024 | GloVe 300d | Komninos | all-MiniLM-L6-v2 |

|---|---|---|---|---|---|---|---|---|

| Clustering | 30.27 | 32.20 | 33.25 | 33.40 | 33.62 | 27.73 | 26.57 | 42.35 |

| Reranking | 50.38 | 51.52 | 52.03 | 52.32 | 52.39 | 43.29 | 44.75 | 58.04 |

| Classification | 53.14 | 56.25 | 58.21 | 59.13 | 59.50 | 57.29 | 57.65 | 63.05 |

| Pair Classification | 75.80 | 77.59 | 78.22 | 78.50 | 78.60 | 70.92 | 72.94 | 82.37 |

| STS | 66.24 | 67.53 | 67.91 | 68.22 | 68.27 | 61.85 | 62.46 | 78.90 |

| CQA DupStack | 18.76 | 22.54 | 24.12 | 24.59 | 24.83 | 15.47 | 16.79 | 41.32 |

| SummEval | 30.79 | 29.99 | 30.99 | 29.56 | 29.39 | 28.87 | 30.49 | 30.81 |

WL64 to WL1024: WordLlama models with embedding dimensions ranging from 64 to 1024.

Note: The l2_supercat is a LLaMA 2 vocabulary model. To train this model, we concatenated codebooks from several models, including LLaMA 2 70B and phi 3 medium, after removing additional special tokens. Because several models have used the LLaMA 2 tokenizer, their codebooks can be concatenated and trained together. The performance of the resulting model is comparable to training the LLaMA 3 70B codebook, while being 4x smaller (32k vs. 128k vocabulary).

- LLaMA 3-based: l3_supercat

- Results

8k documents from the ag_news dataset

- Single core performance (CPU), i9 12th gen, DDR4 3200

- NVIDIA A4500 (GPU)

Load pre-trained embeddings and embed text:

from wordllama import WordLlama

# Load pre-trained embeddings (truncate dimension to 64)

wl = WordLlama.load(trunc_dim=64)

# Embed text

embeddings = wl.embed(["The quick brown fox jumps over the lazy dog", "And all that jazz"])

print(embeddings.shape) # Output: (2, 64)Compute the similarity between two texts:

similarity_score = wl.similarity("I went to the car", "I went to the pawn shop")

print(similarity_score) # Output: e.g., 0.0664Rank documents based on their similarity to a query:

query = "I went to the car"

candidates = ["I went to the park", "I went to the shop", "I went to the truck", "I went to the vehicle"]

ranked_docs = wl.rank(query, candidates, sort=True, batch_size=64)

print(ranked_docs)

# Output:

# [

# ('I went to the vehicle', 0.7441),

# ('I went to the truck', 0.2832),

# ('I went to the shop', 0.1973),

# ('I went to the park', 0.1510)

# ]Remove duplicate texts based on a similarity threshold:

deduplicated_docs = wl.deduplicate(candidates, return_indices=False, threshold=0.5)

print(deduplicated_docs)

# Output:

# ['I went to the park',

# 'I went to the shop',

# 'I went to the truck']Cluster documents into groups using KMeans clustering:

labels, inertia = wl.cluster(candidates, k=3, max_iterations=100, tolerance=1e-4, n_init=3)

print(labels, inertia)

# Output:

# [2, 0, 1, 1], 0.4150Filter documents based on their similarity to a query:

filtered_docs = wl.filter(query, candidates, threshold=0.3)

print(filtered_docs)

# Output:

# ['I went to the vehicle']Retrieve the top-K most similar documents to a query:

top_docs = wl.topk(query, candidates, k=2)

print(top_docs)

# Output:

# ['I went to the vehicle', 'I went to the truck']Split text into semantic chunks:

long_text = "Your very long text goes here... " * 100

chunks = wl.split(long_text, target_size=1536)

print(list(map(len, chunks)))

# Output: [1055, 1055, 1187]Note that the target size is also the maximum size. The .split() feature attempts to aggregate sections up to the target_size,

but will retain the order of the text as well as sentence and, as much as possible, paragraph structure.

It uses wordllama embeddings to locate more natural indexes to split on. As a result, there will be a range of chunk sizes in the output

up to the target size.

The recommended target size is from 512 to 2048 characters, with the default size at 1536. Chunks that need to be much larger should probably be batched after splitting, and will often be aggregated from multiple semantic chunks already.

For more information see: technical overview

wl = WordLlama.list_configs()

# dict of config names

wl = WordLlama.load_m2v("potion_base_8m") # 256-dim model

wl = WordLlama.load_m2v("m2v_multilingual") # multilingual modelModel2Vec is a different way of creating static embeddings using PCA. Notably, they have produced multilingual models, and glove-based models, which score well in word similarity tasks.

Check them out on huggingface! minishlab

from wordllama import WordLlamaInference

from tokenizers import Tokenizer

tokenizer = Tokenizer.from_pretrained(...)

wl = WordLlamaInference(np_embeddings_ar, tokenizer)The inference class can be used directly with a bring-your-own static embeddings array (n_vocab, dim), rather than using the loader.

Binary embedding models showed more pronounced improvement at higher dimensions, and either 512 or 1024 dimensions are recommended for binary embeddings.

The L2 Supercat model was trained using a batch size of 512 on a single A100 GPU for 12 hours.

-

Additional Example Notebooks:

- DSPy evaluators

- Retrieval-Augmented Generation (RAG) pipelines

To extract token embeddings from a model, ensure you have agreed to the user agreement and logged in using the Hugging Face CLI (for LLaMA models). You can then use the following snippet:

from wordllama.extract.extract_safetensors import extract_safetensors

# Extract embeddings for the specified configuration

extract_safetensors("llama3_70B", "path/to/saved/model-0001-of-00XX.safetensors")Hint: Embeddings are usually in the first safetensors file, but not always. Sometimes there is a manifest; sometimes you have to inspect and figure it out.

For training, use the scripts in the GitHub repository. You have to add a configuration file (copy/modify an existing one into the folder).

pip install wordllama[train]

python train.py train --config your_new_config

# (Training process begins)

python train.py save --config your_new_config --checkpoint ... --outdir /path/to/weights/

# (Saves one model per Matryoshka dimension)If you use WordLlama in your research or project, please consider citing it as follows:

@software{miller2024wordllama,

author = {Miller, D. Lee},

title = {WordLlama: Recycled Token Embeddings from Large Language Models},

year = {2024},

url = {https://github.com/dleemiller/wordllama},

version = {0.3.7}

}This project is licensed under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for WordLlama

Similar Open Source Tools

WordLlama

WordLlama is a fast, lightweight NLP toolkit optimized for CPU hardware. It recycles components from large language models to create efficient word representations. It offers features like Matryoshka Representations, low resource requirements, binarization, and numpy-only inference. The tool is suitable for tasks like semantic matching, fuzzy deduplication, ranking, and clustering, making it a good option for NLP-lite tasks and exploratory analysis.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides pytorch and **python-first** , low and high level abstractions for RL that are intended to be **efficient** , **modular** , **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

pgvecto.rs

pgvecto.rs is a Postgres extension written in Rust that provides vector similarity search functions. It offers ultra-low-latency, high-precision vector search capabilities, including sparse vector search and full-text search. With complete SQL support, async indexing, and easy data management, it simplifies data handling. The extension supports various data types like FP16/INT8, binary vectors, and Matryoshka embeddings. It ensures system performance with production-ready features, high availability, and resource efficiency. Security and permissions are managed through easy access control. The tool allows users to create tables with vector columns, insert vector data, and calculate distances between vectors using different operators. It also supports half-precision floating-point numbers for better performance and memory usage optimization.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

mlx-llm

mlx-llm is a library that allows you to run Large Language Models (LLMs) on Apple Silicon devices in real-time using Apple's MLX framework. It provides a simple and easy-to-use API for creating, loading, and using LLM models, as well as a variety of applications such as chatbots, fine-tuning, and retrieval-augmented generation.

EasySteer

EasySteer is a unified framework built on vLLM for high-performance LLM steering. It offers fast, flexible, and easy-to-use steering capabilities with features like high performance, modular design, fine-grained control, pre-computed steering vectors, and an interactive demo. Users can interactively configure models, adjust steering parameters, and test interventions without writing code. The tool supports OpenAI-compatible APIs and provides modules for hidden states extraction, analysis-based steering, learning-based steering, and a frontend web interface for interactive steering and ReFT interventions.

Consistency_LLM

Consistency Large Language Models (CLLMs) is a family of efficient parallel decoders that reduce inference latency by efficiently decoding multiple tokens in parallel. The models are trained to perform efficient Jacobi decoding, mapping any randomly initialized token sequence to the same result as auto-regressive decoding in as few steps as possible. CLLMs have shown significant improvements in generation speed on various tasks, achieving up to 3.4 times faster generation. The tool provides a seamless integration with other techniques for efficient Large Language Model (LLM) inference, without the need for draft models or architectural modifications.

FlashRank

FlashRank is an ultra-lite and super-fast Python library designed to add re-ranking capabilities to existing search and retrieval pipelines. It is based on state-of-the-art Language Models (LLMs) and cross-encoders, offering support for pairwise/pointwise rerankers and listwise LLM-based rerankers. The library boasts the tiniest reranking model in the world (~4MB) and runs on CPU without the need for Torch or Transformers. FlashRank is cost-conscious, with a focus on low cost per invocation and smaller package size for efficient serverless deployments. It supports various models like ms-marco-TinyBERT, ms-marco-MiniLM, rank-T5-flan, ms-marco-MultiBERT, and more, with plans for future model additions. The tool is ideal for enhancing search precision and speed in scenarios where lightweight models with competitive performance are preferred.

GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

chromem-go

chromem-go is an embeddable vector database for Go with a Chroma-like interface and zero third-party dependencies. It enables retrieval augmented generation (RAG) and similar embeddings-based features in Go apps without the need for a separate database. The focus is on simplicity and performance for common use cases, allowing querying of documents with minimal memory allocations. The project is in beta and may introduce breaking changes before v1.0.0.

llm-leaderboard

Nejumi Leaderboard 3 is a comprehensive evaluation platform for large language models, assessing general language capabilities and alignment aspects. The evaluation framework includes metrics for language processing, translation, summarization, information extraction, reasoning, mathematical reasoning, entity extraction, knowledge/question answering, English, semantic analysis, syntactic analysis, alignment, ethics/moral, toxicity, bias, truthfulness, and robustness. The repository provides an implementation guide for environment setup, dataset preparation, configuration, model configurations, and chat template creation. Users can run evaluation processes using specified configuration files and log results to the Weights & Biases project.

qa-mdt

This repository provides an implementation of QA-MDT, integrating state-of-the-art models for music generation. It offers a Quality-Aware Masked Diffusion Transformer for enhanced music generation. The code is based on various repositories like AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. The implementation allows for training and fine-tuning the model with different strategies and datasets. The repository also includes instructions for preparing datasets in LMDB format and provides a script for creating a toy LMDB dataset. The model can be used for music generation tasks, with a focus on quality injection to enhance the musicality of generated music.

litdata

LitData is a tool designed for blazingly fast, distributed streaming of training data from any cloud storage. It allows users to transform and optimize data in cloud storage environments efficiently and intuitively, supporting various data types like images, text, video, audio, geo-spatial, and multimodal data. LitData integrates smoothly with frameworks such as LitGPT and PyTorch, enabling seamless streaming of data to multiple machines. Key features include multi-GPU/multi-node support, easy data mixing, pause & resume functionality, support for profiling, memory footprint reduction, cache size configuration, and on-prem optimizations. The tool also provides benchmarks for measuring streaming speed and conversion efficiency, along with runnable templates for different data types. LitData enables infinite cloud data processing by utilizing the Lightning.ai platform to scale data processing with optimized machines.

OpenMusic

OpenMusic is a repository providing an implementation of QA-MDT, a Quality-Aware Masked Diffusion Transformer for music generation. The code integrates state-of-the-art models and offers training strategies for music generation. The repository includes implementations of AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. Users can train or fine-tune the model using different strategies and datasets. The model is well-pretrained and can be used for music generation tasks. The repository also includes instructions for preparing datasets, training the model, and performing inference. Contact information is provided for any questions or suggestions regarding the project.

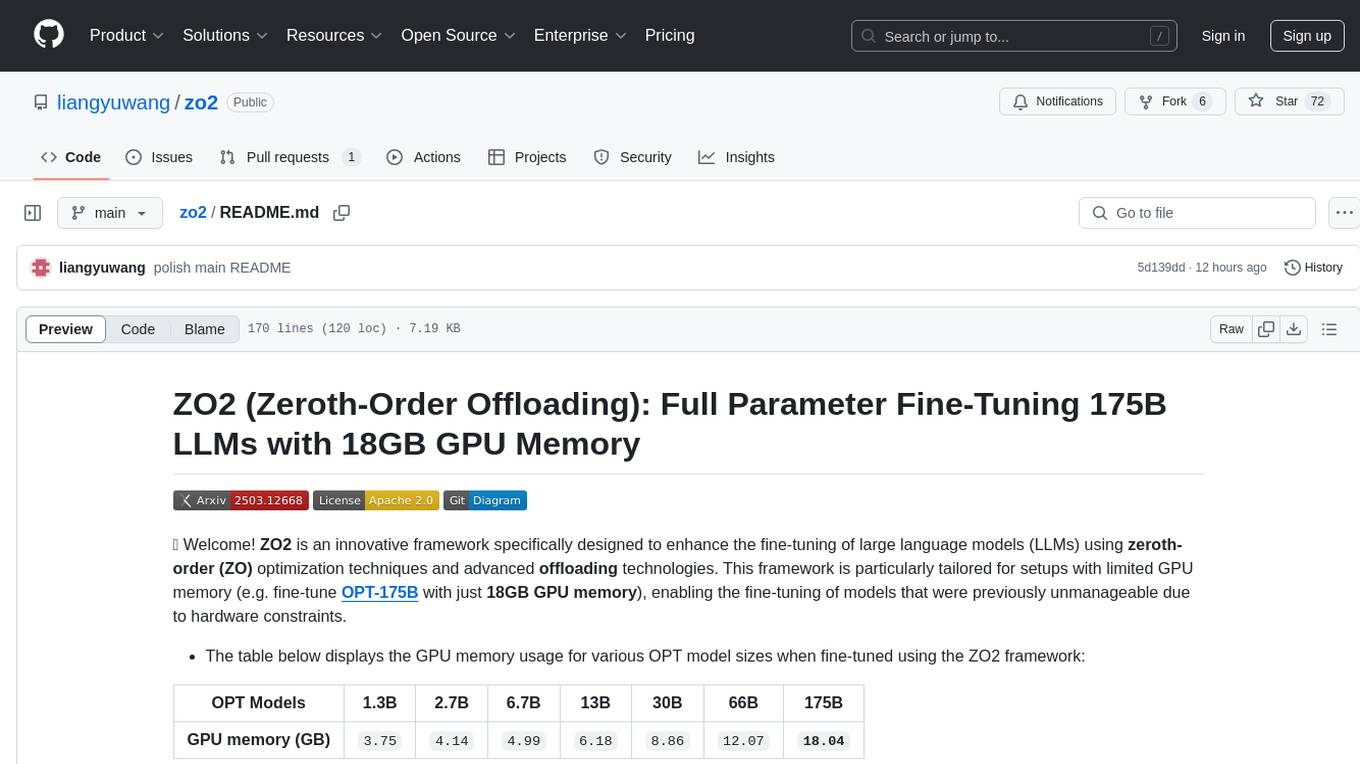

zo2

ZO2 (Zeroth-Order Offloading) is an innovative framework designed to enhance the fine-tuning of large language models (LLMs) using zeroth-order (ZO) optimization techniques and advanced offloading technologies. It is tailored for setups with limited GPU memory, enabling the fine-tuning of models with over 175 billion parameters on single GPUs with as little as 18GB of memory. ZO2 optimizes CPU offloading, incorporates dynamic scheduling, and has the capability to handle very large models efficiently without extra time costs or accuracy losses.

Endia

Endia is a dynamic Array library for Scientific Computing, offering automatic differentiation of arbitrary order, complex number support, dual API with PyTorch-like imperative or JAX-like functional interface, and JIT Compilation for speeding up training and inference. It can handle complex valued functions, perform both forward and reverse-mode automatic differentiation, and has a builtin JIT compiler. Endia aims to advance AI & Scientific Computing by pushing boundaries with clear algorithms, providing high-performance open-source code that remains readable and pythonic, and prioritizing clarity and educational value over exhaustive features.

For similar tasks

phospho

Phospho is a text analytics platform for LLM apps. It helps you detect issues and extract insights from text messages of your users or your app. You can gather user feedback, measure success, and iterate on your app to create the best conversational experience for your users.

OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

spaCy

spaCy is an industrial-strength Natural Language Processing (NLP) library in Python and Cython. It incorporates the latest research and is designed for real-world applications. The library offers pretrained pipelines supporting 70+ languages, with advanced neural network models for tasks such as tagging, parsing, named entity recognition, and text classification. It also facilitates multi-task learning with pretrained transformers like BERT, along with a production-ready training system and streamlined model packaging, deployment, and workflow management. spaCy is commercial open-source software released under the MIT license.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

lima

LIMA is a multilingual linguistic analyzer developed by the CEA LIST, LASTI laboratory. It is Free Software available under the MIT license. LIMA has state-of-the-art performance for more than 60 languages using deep learning modules. It also includes a powerful rules-based mechanism called ModEx for extracting information in new domains without annotated data.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.