MegaDetector





...helping conservation biologists spend less time doing boring things with camera trap images.

- What's MegaDetector all about?

- How do I get started with MegaDetector?

- Who is using MegaDetector?

- Repo contents

- Contact

- Gratuitous camera trap picture

- License

- Contributing

What's MegaDetector all about?

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems.
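Since MegaDetector attaches a confidence value to every box it finds, "eliminating blank images" mostly comes down to picking a threshold. Here's a minimal sketch of that step, assuming results in MegaDetector's JSON batch-output layout (an "images" list whose entries have a "file" and a "detections" list, each detection carrying a "category", "conf", and "bbox"). The function name `split_blank_images`, the 0.2 default threshold, and the handling of failed images are illustrative assumptions, not behavior prescribed by this repo.

```python
from typing import Any


def split_blank_images(
    results: dict[str, Any], threshold: float = 0.2
) -> tuple[list[str], list[str]]:
    """Split images into (non_blank, blank) file lists by detection confidence.

    Assumes MegaDetector-style batch output: results["images"] is a list of
    {"file": str, "detections": [{"category": str, "conf": float, "bbox": [...]}, ...]}.
    An image is non-blank if any of its detections meets the threshold.
    """
    non_blank: list[str] = []
    blank: list[str] = []
    for image in results["images"]:
        # Assumed convention: images that could not be processed carry a "failure"
        # message; skip them rather than misreporting them as blank
        if image.get("failure"):
            continue
        detections = image.get("detections") or []
        if any(d["conf"] >= threshold for d in detections):
            non_blank.append(image["file"])
        else:
            blank.append(image["file"])
    return non_blank, blank


# Tiny hand-made example in the assumed format
example_results = {
    "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
    "images": [
        {"file": "site1/IMG_0001.JPG",
         "detections": [{"category": "1", "conf": 0.91, "bbox": [0.1, 0.2, 0.3, 0.4]}]},
        {"file": "site1/IMG_0002.JPG", "detections": []},
        {"file": "site1/IMG_0003.JPG",
         "detections": [{"category": "1", "conf": 0.05, "bbox": [0.5, 0.5, 0.1, 0.1]}]},
        {"file": "site1/IMG_0004.JPG", "failure": "image could not be opened"},
    ],
}
non_blank, blank = split_blank_images(example_results)
print(non_blank)  # ['site1/IMG_0001.JPG']
print(blank)      # ['site1/IMG_0002.JPG', 'site1/IMG_0003.JPG']
```

A missed animal is usually the costlier error when discarding blanks, so it's worth checking whatever threshold you pick against a sample of your own images.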

MegaDetector only finds animals; it doesn't identify them to the species level. If you're looking for a species classifier, check out SpeciesNet, which plays nicely with MegaDetector.

Here's a “teaser” image of what MegaDetector output looks like:

Image credit University of Washington.

How do I get started with MegaDetector?

- If you are looking for a convenient tool to run MegaDetector, you don't need anything from this repository: check out AddaxAI (formerly EcoAssist), a GUI-based tool for running AI models (including MegaDetector) on camera trap images.

- If you're just considering the use of AI in your workflow, and you aren't even sure yet whether MegaDetector would be useful to you, we recommend reading the "getting started with MegaDetector" page.

- If you're already familiar with MegaDetector and you're ready to run it on your data, see the MegaDetector User Guide for instructions on running MegaDetector.

- If you're a programmer-type looking to use tools from this repo, check out the MegaDetector Python package, which provides access to everything in this repo (yes, you guessed it, "pip install megadetector").

- If you have any questions, or you want to tell us that MegaDetector was amazing/terrible on your images, or you have a zillion images and you want some help digging out of that backlog, email us!

MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled “Everything I know about machine learning and camera traps”.

Who is using MegaDetector?

We work with ecologists all over the world to help them spend less time annotating images and more time thinking about conservation. You can read a little more about how this works on our "getting started with MegaDetector" page.

Here are a few of the organizations that have used MegaDetector... we're only listing organizations who (a) we know about and (b) have given us permission to refer to them here (or have posted publicly about their use of MegaDetector), so if you're using MegaDetector or other tools from this repo and would like to be added to this list, email us!

- Canadian Parks and Wilderness Society (CPAWS) Northern Alberta Chapter

- Applied Conservation Macro Ecology Lab, University of Victoria

- Banff National Park Resource Conservation, Parks Canada

- Blumstein Lab, UCLA

- Borderlands Research Institute, Sul Ross State University

- Capitol Reef National Park / Utah Valley University

- Canyon Critters Project, University of Georgia

- Center for Biodiversity and Conservation, American Museum of Natural History

- Centre for Ecosystem Science, UNSW Sydney

- Cross-Cultural Ecology Lab, Macquarie University

- DC Cat Count, led by the Humane Rescue Alliance

- Department of Anthropology and Archaeology, University of Calgary

- Department of Fish and Wildlife Sciences, University of Idaho

- Department of Society & Conservation, W.A. Franke College of Forestry & Conservation, University of Montana

- Department of Wildlife Ecology and Conservation, University of Florida

- Fodrie Lab, Institute of Marine Sciences, UNC Chapel Hill

- Gola Forest Programme, Royal Society for the Protection of Birds (RSPB)

- Graeme Shannon's Research Group, Bangor University

- Grizzly Bear Recovery Program, U.S. Fish & Wildlife Service

- Hamaarag, The Steinhardt Museum of Natural History, Tel Aviv University

- Institut des Sciences de la Forêt Tempérée (ISFORT), Université du Québec en Outaouais

- Lab of Dr. Bilal Habib, the Wildlife Institute of India

- Landscape Ecology Lab, Concordia University

- Mammal Spatial Ecology and Conservation Lab, Washington State University

- McLoughlin Lab in Population Ecology, University of Saskatchewan

- National Wildlife Refuge System, Southwest Region, U.S. Fish & Wildlife Service

- Northern Great Plains Program, Smithsonian

- Polar Ecology Group, University of Gdansk

- Quantitative Ecology Lab, University of Washington

- San Diego Field Station, U.S. Geological Survey

- Santa Monica Mountains Recreation Area, National Park Service

- Seattle Urban Carnivore Project, Woodland Park Zoo

- Serra dos Órgãos National Park, ICMBio

- Snapshot USA, Smithsonian

- TROPECOLNET project, Museo Nacional de Ciencias Naturales

- Wildlife Coexistence Lab, University of British Columbia

- Wildlife Research, Oregon Department of Fish and Wildlife

- Kohl Wildlife Lab, University of Georgia

- SPEC Lab and Cherry Lab, Caesar Kleberg Wildlife Research Institute, Texas A&M Kingsville

- Ecology and Conservation of Amazonian Vertebrates Research Group, Federal University of Amapá

- Department of Ecology, TU Berlin

- Ghost Cat Analytics

- Protected Areas Unit, Canadian Wildlife Service

- Conservation and Restoration Science Branch, New South Wales Department of Climate Change, Energy, the Environment and Water

- School of Natural Sciences, University of Tasmania (story)

- Kenai National Wildlife Refuge, U.S. Fish & Wildlife Service (story)

- Australian Wildlife Conservancy (blog posts 1, 2, 3)

- Island Conservation (blog posts 1, 2) (video)

- Alberta Biodiversity Monitoring Institute (ABMI) (WildTrax platform) (blog posts 1, 2)

- Shan Shui Conservation Center (blog post) (translated blog post) (Web demo)

- Endangered Landscapes and Seascapes Programme, Cambridge Conservation Initiative (blog post)

- Fort Collins Science Center, U.S. Geological Survey (blog post)

- Road Ecology Center, University of California, Davis (Wildlife Observer Network platform)

- The Nature Conservancy in California (Animl platform) (story)

- Wildlife Division, Michigan Department of Natural Resources (blog post)

Also see:

- The list of MD-related GUIs, platforms, and GitHub repos within the MegaDetector User Guide... although you can never have too many lists, so here they are in a concise comma-separated list: Wildlife Insights, Animal Detect, TrapTagger, WildTrax, Agouti, Trapper, Camelot, WildePod, wpsWatch, TNC Animl (code), Wildlife Observer Network, Zooniverse ML Subject Assistant, Dudek AI Image Toolkit, Zamba Cloud, OCAPI, BoquilaHUB

- Peter's map of AddaxAI (formerly EcoAssist) users (who are also MegaDetector users!)

- The list of papers tagged "MegaDetector" on our list of papers about ML and camera traps

Repo contents

MegaDetector was initially developed by the Microsoft AI for Earth program; this repo was forked from the microsoft/cameratraps repo and is maintained by the original MegaDetector developers (who are no longer at Microsoft, but are absolutely fantastically eternally grateful to Microsoft for the investment and commitment that made MegaDetector happen). If you're interested in MD's history, see the "downloading the model" section in the MegaDetector User Guide to learn about the history of MegaDetector releases, and the "can you share the training data?" section to learn about the training data used in each of those releases.

The core functionality provided in this repo is:

- Tools for training and running MegaDetector.

- Tools for working with MegaDetector output, e.g. for reviewing the results of a large processing batch.

- Tools to convert among frequently-used camera trap metadata formats.
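To give a feel for what "converting among metadata formats" involves, here's a sketch that turns a simple CSV of per-image species labels into a COCO Camera Traps-style dictionary. The CSV columns (filename, species, location) and the function name `csv_to_cct` are hypothetical; the output uses the core CCT sections (info, images, categories, annotations), and reserving category 0 for "empty" follows a common CCT convention but is an assumption here. The converters in this repo handle many more fields and edge cases.

```python
import csv
import io
import json
from typing import Any


def csv_to_cct(csv_text: str, description: str = "Example data set") -> dict[str, Any]:
    """Convert a hypothetical filename,species,location CSV to a COCO Camera Traps-style dict."""
    images: list[dict[str, Any]] = []
    annotations: list[dict[str, Any]] = []
    # Assumed convention: category 0 is reserved for empty images
    category_ids: dict[str, int] = {"empty": 0}
    seen_files: set[str] = set()

    for row in csv.DictReader(io.StringIO(csv_text)):
        file_name = row["filename"]
        # A blank species cell is treated as an empty image
        species = row["species"].strip().lower() or "empty"
        if species not in category_ids:
            category_ids[species] = len(category_ids)
        # One image record per file, even when a file has several labels
        if file_name not in seen_files:
            seen_files.add(file_name)
            images.append({"id": file_name, "file_name": file_name,
                           "location": row["location"]})
        annotations.append({
            "id": f"ann_{len(annotations):06d}",
            "image_id": file_name,
            "category_id": category_ids[species],
        })

    return {
        "info": {"version": "1.0", "description": description},
        "images": images,
        "categories": [{"id": i, "name": name} for name, i in category_ids.items()],
        "annotations": annotations,
    }


sample_csv = """filename,species,location
loc1/IMG_0001.JPG,deer,loc1
loc1/IMG_0002.JPG,,loc1
loc2/IMG_0003.JPG,coyote,loc2
loc2/IMG_0003.JPG,deer,loc2
"""
db = csv_to_cct(sample_csv)
print(json.dumps(db["categories"]))
# [{"id": 0, "name": "empty"}, {"id": 1, "name": "deer"}, {"id": 2, "name": "coyote"}]
```

Using the file name as the image ID keeps the sketch short; real data sets often need a separate, stable ID scheme.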

This repo does not host the data used to train MegaDetector, but we work with our collaborators to make data and annotations available whenever possible on lila.science. See the MegaDetector training data section to learn more about the data used to train MegaDetector.

This repo is organized into the following folders...

Code for running models, especially MegaDetector.

Code for common operations one might do after running MegaDetector, e.g. generating preview pages to summarize your results, separating images into different folders based on AI results, or converting results to a different format.
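As a rough illustration of "separating images into different folders based on AI results", the sketch below names a destination folder after the confident detection categories in each image and copies the file there. It assumes MegaDetector's JSON batch-output layout (a "detection_categories" map such as {"1": "animal", "2": "person", "3": "vehicle"} plus per-image "detections"); the function names, the folder-naming scheme (e.g. "animal_person" for mixed images, "empty" otherwise), and the 0.2 threshold are hypothetical and are not the postprocessing code in this repo.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Any


def folder_for_image(image: dict[str, Any], categories: dict[str, str],
                     threshold: float = 0.2) -> str:
    """Name a destination folder after the confident detection categories in one image."""
    names = sorted({categories[d["category"]]
                    for d in (image.get("detections") or [])
                    if d["conf"] >= threshold})
    # e.g. "animal", "animal_person", or "empty" when nothing clears the threshold
    return "_".join(names) if names else "empty"


def separate_into_folders(results: dict[str, Any], input_dir: Path, output_dir: Path,
                          threshold: float = 0.2) -> dict[str, str]:
    """Copy each image to output_dir/<folder>/<relative path>; return {file: folder}."""
    categories = results["detection_categories"]
    assignments: dict[str, str] = {}
    for image in results["images"]:
        if image.get("failure"):
            continue  # assumed: images that failed to process are left alone
        folder = folder_for_image(image, categories, threshold)
        target = output_dir / folder / image["file"]
        target.parent.mkdir(parents=True, exist_ok=True)
        # Copy rather than move so the originals stay untouched;
        # copy2 also preserves file timestamps
        shutil.copy2(input_dir / image["file"], target)
        assignments[image["file"]] = folder
    return assignments


# Self-contained demo using placeholder files in a temporary directory
with tempfile.TemporaryDirectory() as tmp:
    src, dst = Path(tmp) / "in", Path(tmp) / "out"
    (src / "cam1").mkdir(parents=True)
    for name in ("a.jpg", "b.jpg"):
        (src / "cam1" / name).write_bytes(b"placeholder, not a real image")
    demo_results = {
        "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
        "images": [
            {"file": "cam1/a.jpg",
             "detections": [{"category": "1", "conf": 0.93, "bbox": [0.1, 0.1, 0.2, 0.2]}]},
            {"file": "cam1/b.jpg", "detections": []},
        ],
    }
    demo_assignments = separate_into_folders(demo_results, src, dst)
    demo_copied = (dst / "animal" / "cam1" / "a.jpg").exists()
```

Keeping each image's relative path under its new folder avoids name collisions between cameras that reuse file names like IMG_0001.JPG.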

Small utility functions for string manipulation, filename manipulation, downloading files from URLs, etc.

Tools for visualizing images with ground truth and/or predicted bounding boxes.
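MegaDetector boxes are stored as normalized [x_min, y_min, width, height] values (fractions of the image size, origin at the upper-left corner), so drawing one starts with converting to pixels. Here's a small sketch of that conversion, clamping the result so rounding never pushes a corner outside the image. The function name is hypothetical; the actual drawing is left to whatever imaging library you use (for example, Pillow's `ImageDraw.rectangle` accepts a (left, top, right, bottom) box).

```python
def normalized_to_pixel_box(bbox: list[float], image_width: int,
                            image_height: int) -> tuple[int, int, int, int]:
    """Convert a normalized [x_min, y_min, width, height] box to (left, top, right, bottom) pixels."""
    x_min, y_min, w, h = bbox
    left = round(x_min * image_width)
    top = round(y_min * image_height)
    right = round((x_min + w) * image_width)
    bottom = round((y_min + h) * image_height)

    def clamp(value: int, upper: int) -> int:
        # Keep coordinates within [0, upper]
        return max(0, min(value, upper))

    return (clamp(left, image_width), clamp(top, image_height),
            clamp(right, image_width), clamp(bottom, image_height))


print(normalized_to_pixel_box([0.25, 0.5, 0.5, 0.25], 2000, 1000))  # (500, 500, 1500, 750)
```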

Code for:

- Converting frequently-used metadata formats to COCO Camera Traps format

- Converting the output of AI models (especially YOLOv5) to the format used for AI results throughout this repo

- Creating, visualizing, and editing COCO Camera Traps .json databases
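For the YOLOv5 bullet, the heart of the conversion is a change of box convention: YOLO-style detections use normalized [x_center, y_center, width, height], while MegaDetector results use normalized [x_min, y_min, width, height]. The sketch below assumes each YOLO detection is a (class_id, x_center, y_center, width, height, confidence) tuple and that class indices 0/1/2 map to MegaDetector categories "1"/"2"/"3" (animal/person/vehicle); the mapping table, function name, and four-decimal rounding are assumptions for illustration, so check your own model's class list before reusing anything like this.

```python
from typing import Any

# Assumed mapping from YOLO class index to MegaDetector category ID
YOLO_TO_MD_CATEGORY = {0: "1", 1: "2", 2: "3"}


def yolo_to_md_detections(
    yolo_detections: list[tuple[int, float, float, float, float, float]],
    decimals: int = 4,
) -> list[dict[str, Any]]:
    """Convert normalized YOLO (class, xc, yc, w, h, conf) tuples to MD-style detection dicts."""
    md_detections = []
    for class_id, xc, yc, w, h, conf in yolo_detections:
        # Shift from the box center to its upper-left corner; width/height are unchanged
        x_min = xc - w / 2.0
        y_min = yc - h / 2.0
        md_detections.append({
            "category": YOLO_TO_MD_CATEGORY[class_id],
            "conf": round(conf, decimals),
            "bbox": [round(v, decimals) for v in (x_min, y_min, w, h)],
        })
    return md_detections


print(yolo_to_md_detections([(0, 0.5, 0.5, 0.2, 0.4, 0.87654)]))
# [{'category': '1', 'conf': 0.8765, 'bbox': [0.4, 0.3, 0.2, 0.4]}]
```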

Code for hosting our models as an API, either for synchronous operation (i.e., for real-time inference) or as a batch process (for large biodiversity surveys). This folder is largely deprecated; it now exists primarily to hold documentation that is still relevant and that permanent links point to. Almost everything else in this folder has been moved to the "archive" folder.

This folder is largely deprecated thanks to the release of SpeciesNet, a species classifier that is better than any of the classifiers we ever trained with the stuff in this folder. That said, this folder contains code for training species classifiers on new data sets, generally trained on MegaDetector crops.

Here's another "teaser image" of what you get at the end of training and running a classifier:

Image credit University of Minnesota, from the Snapshot Safari program.

Code to facilitate mapping data-set-specific category names (e.g. "lion", which means very different things in Idaho vs. South Africa) to a standard taxonomy.
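The "lion" example is why this kind of mapping has to be keyed on the data set as well as the label. A minimal sketch, with a hypothetical lookup table and hypothetical data set names: the same string resolves to different taxa depending on where it came from, and labels without an entry are reported rather than guessed.

```python
from typing import Optional

# Hypothetical lookup table: (data set, original label) -> (common name, scientific name)
TAXONOMY_MAP: dict[tuple[str, str], tuple[str, str]] = {
    ("idaho_survey", "lion"): ("mountain lion", "Puma concolor"),
    ("south_africa_survey", "lion"): ("lion", "Panthera leo"),
    ("south_africa_survey", "kudu"): ("greater kudu", "Tragelaphus strepsiceros"),
}


def map_label(dataset: str, label: str) -> Optional[tuple[str, str]]:
    """Look up a data-set-specific label; return None if it has no mapping yet."""
    # Normalize case and surrounding whitespace so "Lion " and "lion" match
    return TAXONOMY_MAP.get((dataset, label.strip().lower()))


def find_unmapped(dataset: str, labels: list[str]) -> list[str]:
    """Return the distinct labels from one data set that still need a taxonomy entry."""
    return sorted({label for label in labels if map_label(dataset, label) is None})


print(map_label("idaho_survey", "Lion"))         # ('mountain lion', 'Puma concolor')
print(map_label("south_africa_survey", "lion"))  # ('lion', 'Panthera leo')
print(find_unmapped("south_africa_survey", ["lion", "Lion ", "warthog"]))  # ['warthog']
```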

Environment files... specifically .yml files for mamba/conda environments (these are what we recommend in our MegaDetector User Guide), and a requirements.txt for the pip-inclined.

Media used in documentation.

Old code that we didn't quite want to delete, but that is basically obsolete.

Random things that don't fit in any other directory, but aren't quite deprecated. Mostly postprocessing scripts that were built for a single use case but could potentially be useful in the future.

A handful of images from LILA that facilitate testing and debugging.

Contact

For questions about this repo, contact [email protected].

You can also chat with us and the broader camera trap AI community on the AI for Conservation forum at WILDLABS or the AI for Conservation Slack group.

Gratuitous camera trap picture

Image credit USDA, from the NACTI data set.

You will find lots more gratuitous camera trap pictures sprinkled about this repo. It's like a scavenger hunt.

License

This repository is licensed under the MIT license.

Code written on or before April 28, 2023 is copyright Microsoft.

Contributing

This project welcomes contributions in the form of pull requests, issues, or suggestions by email. We have a list of issues that we're hoping to address, many of which would be good starting points for new contributors. We also depend on other open-source tools that help users run MegaDetector (particularly AddaxAI, formerly EcoAssist) and open-source tools that help users work with MegaDetector results (particularly Timelapse). If you are looking to get involved in GUI development, reach out to the developers of those tools as well!

If you are interested in getting involved in the conservation technology space, and MegaDetector just happens to be the first page you landed on, and none of our open issues are getting you fired up, don't fret! Head over to the WILDLABS discussion forums and let the community know you're a developer looking to get involved. Someone needs your help!

Information about the coding conventions, linting, testing, and documentation tools used by this repo is available in developers.md.

Speaking of contributions... thanks to Erin Roche from Idaho Fish and Game for the MegaDetector logo at the top of this page!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MegaDetector

Similar Open Source Tools

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

ai_summer

AI Summer is a repository focused on providing workshops and resources for developing foundational skills in generative AI models and transformer models. The repository offers practical applications for inferencing and training, with a specific emphasis on understanding and utilizing advanced AI chat models like BingGPT. Participants are encouraged to engage in interactive programming environments, decide on projects to work on, and actively participate in discussions and breakout rooms. The workshops cover topics such as generative AI models, retrieval-augmented generation, building AI solutions, and fine-tuning models. The goal is to equip individuals with the necessary skills to work with AI technologies effectively and securely, both locally and in the cloud.

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

start-machine-learning

Start Machine Learning in 2024 is a comprehensive guide for beginners to advance in machine learning and artificial intelligence without any prior background. The guide covers various resources such as free online courses, articles, books, and practical tips to become an expert in the field. It emphasizes self-paced learning and provides recommendations for learning paths, including videos, podcasts, and online communities. The guide also includes information on building language models and applications, practicing through Kaggle competitions, and staying updated with the latest news and developments in AI. The goal is to empower individuals with the knowledge and resources to excel in machine learning and AI.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

AI-Expert-Roadmap

AI Expert Roadmap is a comprehensive guide to becoming an Artificial Intelligence Expert in 2022. It provides detailed charts and paths for individuals interested in data science, machine learning, and AI. The roadmap covers fundamental concepts, data science, machine learning, deep learning, data engineering, and big data engineering. Created by AMAI GmbH, this resource aims to help individuals navigate the AI landscape and make informed decisions about their learning path. The interactive version with links is available at i.am.ai/roadmap. Stay updated by starring and watching the GitHub repo for new content.

TinyTroupe

TinyTroupe is an experimental Python library that leverages Large Language Models (LLMs) to simulate artificial agents called TinyPersons with specific personalities, interests, and goals in simulated environments. The focus is on understanding human behavior through convincing interactions and customizable personas for various applications like advertisement evaluation, software testing, data generation, project management, and brainstorming. The tool aims to enhance human imagination and provide insights for better decision-making in business and productivity scenarios.

mlforpublicpolicylab

The Machine Learning for Public Policy Lab is a project-based course focused on solving real-world problems using machine learning in the context of public policy and social good. Students will gain hands-on experience building end-to-end machine learning systems, developing skills in problem formulation, working with messy data, communicating with non-technical stakeholders, model interpretability, and understanding algorithmic bias & disparities. The course covers topics such as project scoping, data acquisition, feature engineering, model evaluation, bias and fairness, and model interpretability. Students will work in small groups on policy projects, with graded components including project proposals, presentations, and final reports.

AimStar

AimStar is a free and open-source external cheat for CS2, written in C++. It is available for Windows 8.1+ and features ESP, glow, radar, crosshairs, no flash, bhop, aimbot, triggerbot, language settings, hit sound, and bomb timer. The code is mostly contributed by users and may be messy. The project is for learning purposes only and should not be used for illegal activities.

shitspotter

The 'ShitSpotter' repository is dedicated to developing a poop-detection algorithm and dataset for creating a phone app that helps locate dog poop in outdoor environments. The project involves training a PyTorch network to detect poop in images and provides scripts for detecting poop in unseen images using a pretrained model. The dataset consists of mostly outdoor images taken with a phone, with a process involving before and after pictures of the poop. The project aims to enable various applications, such as AR glasses for poop detection and efficient cleaning of public areas by city governments. The code, dataset, and pretrained models are open source with permissive licensing and distributed via IPFS, BitTorrent, and centralized mechanisms.

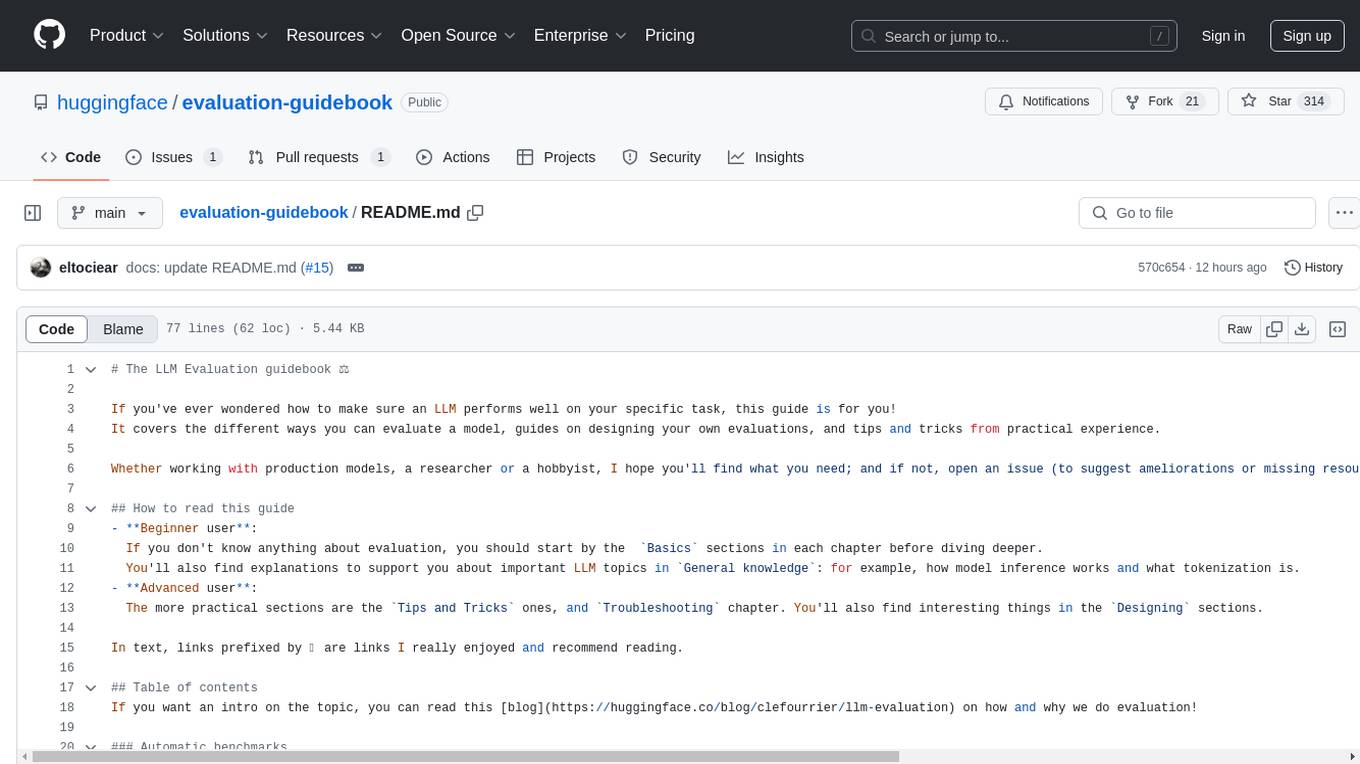

evaluation-guidebook

The LLM Evaluation guidebook provides comprehensive guidance on evaluating language model performance, including different evaluation methods, designing evaluations, and practical tips. It caters to both beginners and advanced users, offering insights on model inference, tokenization, and troubleshooting. The guide covers automatic benchmarks, human evaluation, LLM-as-a-judge scenarios, troubleshooting practicalities, and general knowledge on LLM basics. It also includes planned articles on automated benchmarks, evaluation importance, task-building considerations, and model comparison challenges. The resource is enriched with recommended links and acknowledgments to contributors and inspirations.

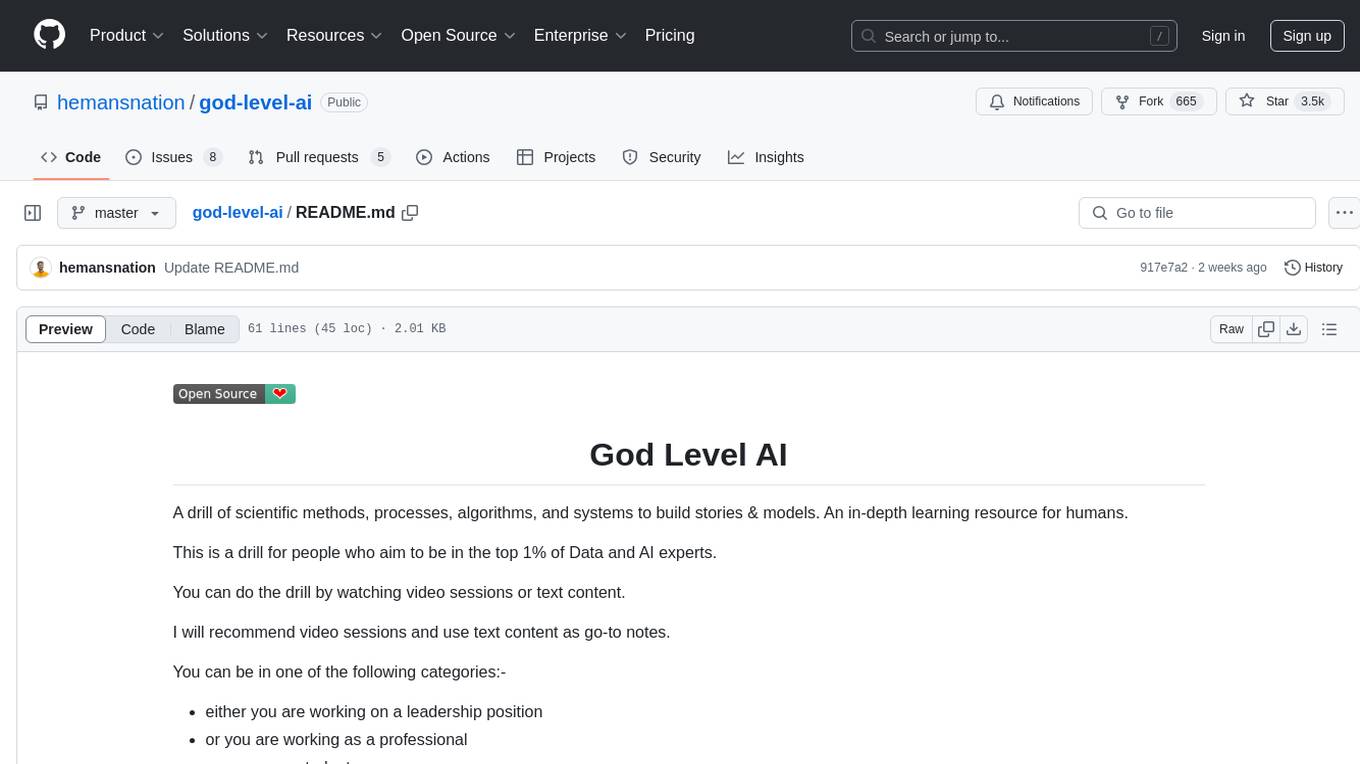

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

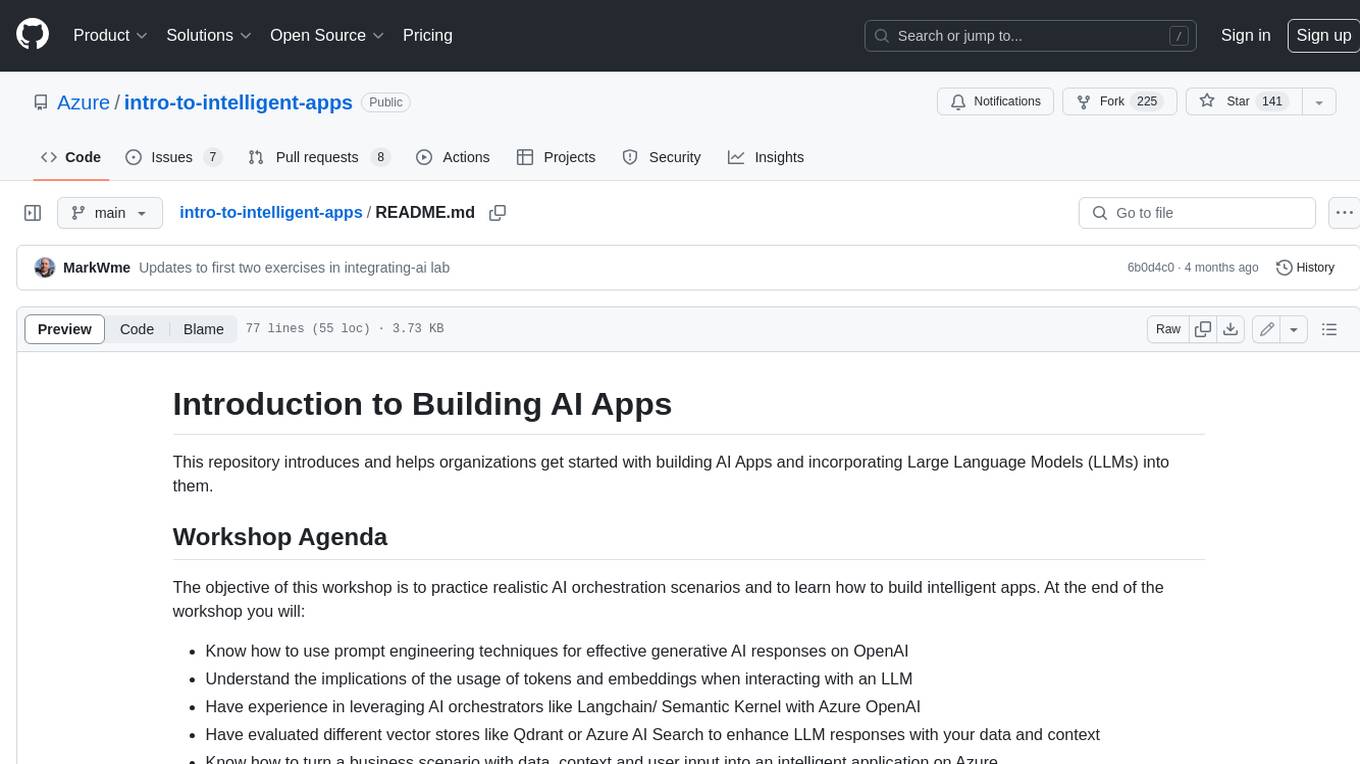

intro-to-intelligent-apps

This repository introduces and helps organizations get started with building AI Apps and incorporating Large Language Models (LLMs) into them. The workshop covers topics such as prompt engineering, AI orchestration, and deploying AI apps. Participants will learn how to use Azure OpenAI, Langchain/ Semantic Kernel, Qdrant, and Azure AI Search to build intelligent applications.

For similar tasks

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

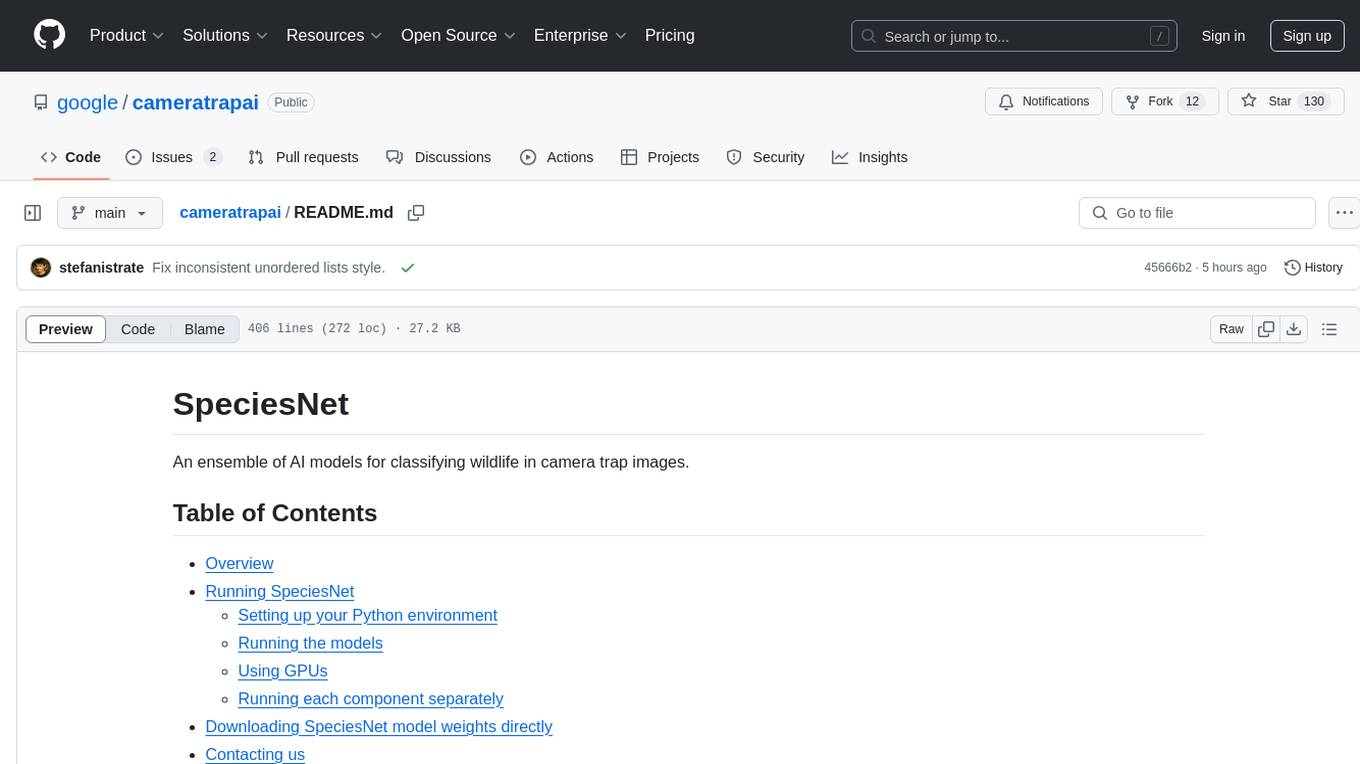

cameratrapai

SpeciesNet is an ensemble of AI models designed for classifying wildlife in camera trap images. It consists of an object detector that finds objects of interest in wildlife camera images and an image classifier that classifies those objects to the species level. The ensemble combines these two models using heuristics and geographic information to assign each image to a single category. The models have been trained on a large dataset of camera trap images and are used for species recognition in the Wildlife Insights platform.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.