IntelliNode

Access the latest AI models like ChatGPT, LLaMA, Diffusion, Gemini, Hugging Face, and beyond through a unified prompt layer and performance evaluation.

Stars: 201

IntelliNode is a JavaScript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable Diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

README:

IntelliNode is a JavaScript module that integrates cutting-edge AI into your project. With its intuitive functions, you can easily feed data to models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable Diffusion and receive generated text, speech, or images. It also offers high-level functions such as semantic search, multi-model evaluation, and chatbot capabilities.

One command gives you access to the latest models:

npm i intellinode

For detailed usage instructions, refer to the documentation.

The Gen function quickly generates tailored content in one line.

import:

const { Gen } = require('intellinode');

call:

// one line to generate html page code (openai gpt4 is default)

const text = 'a registration page with flat modern theme.';

await Gen.save_html_page(text, folder, file_name, openaiKey);

// or generate blog post (using cohere); the provider is passed as the third argument

const blogPost = await Gen.get_blog_post(prompt, apiKey, 'cohere');

import:

const { Chatbot, ChatGPTInput } = require('intellinode');

call:

// set chatGPT system mode and the user message.

const input = new ChatGPTInput('You are a helpful assistant.');

input.addUserMessage('What is the distance between the Earth and the Moon?');

// get chatGPT responses.

const chatbot = new Chatbot(OPENAI_API_KEY, 'openai');

const responses = await chatbot.chat(input);

IntelliNode enables effortless swapping between AI models.

- imports:

const { Chatbot, GeminiInput, SupportedChatModels } = require('intellinode');

- call:

const input = new GeminiInput();

input.addUserMessage('Who painted the Mona Lisa?');

const geminiBot = new Chatbot(apiKey, SupportedChatModels.GEMINI);

const responses = await geminiBot.chat(input);

The documentation for switching the chatbot provider between ChatGPT, LLaMA, Cohere, Mistral and more can be found in the IntelliNode Wiki.

import:

const { SemanticSearch } = require('intellinode');

call:

const search = new SemanticSearch(apiKey);

// pivotItem is the item to search.

const results = await search.getTopMatches(pivotItem, searchArray, numberOfMatches);

const filteredArray = search.filterTopMatches(results, searchArray);

Generate improved prompts using LLMs:

const { Prompt } = require('intellinode');

const promptTemp = await Prompt.fromChatGPT("fantasy image with ninja jumping across buildings", openaiApiKey);

console.log(promptTemp.getInput());

import:

const { RemoteLanguageModel, LanguageModelInput } = require('intellinode');

call openai model:

const langModel = new RemoteLanguageModel('openai-key', 'openai');

const model_name = 'gpt-3.5-turbo-instruct';

const results = await langModel.generateText(new LanguageModelInput({

prompt: 'Write a product description for smart plug that works with voice assistant.',

model: model_name,

temperature: 0.7

}));

console.log('Generated text:', results[0]);

change to call cohere models:

const langModel = new RemoteLanguageModel('cohere-key', 'cohere');

const model_name = 'command-xlarge-20221108';

// ... same code

import:

const { RemoteImageModel, SupportedImageModels, ImageModelInput } = require('intellinode');

call DALL·E:

const provider = SupportedImageModels.OPENAI;

const imgModel = new RemoteImageModel(apiKey, provider);

const images = await imgModel.generateImages(new ImageModelInput({

prompt: 'teddy writing a blog in times square',

numberOfImages: 1

}));

change to call Stable Diffusion:

const provider = SupportedImageModels.STABILITY;

// ... same code

To access OpenAI services from your Azure account, call the following function at the beginning of your application:

const { ProxyHelper } = require('intellinode');

ProxyHelper.getInstance().setAzureOpenai(resourceName);

To access OpenAI through a proxy from restricted regions:

ProxyHelper.getInstance().setOpenaiProxyValues(openaiProxyJson);

For more details and in-depth code, check the samples.

- Initiate the project:

cd IntelliNode

npm install

- Create a .env file with the access keys:

OPENAI_API_KEY=<key_value>

COHERE_API_KEY=<key_value>

GOOGLE_API_KEY=<key_value>

STABILITY_API_KEY=<key_value>

HUGGING_API_KEY=<key_value>

- Run the remote language models test cases:

node test/integration/RemoteLanguageModel.test.js

- Run the remote image models test cases:

node test/integration/RemoteImageModel.test.js

- Run the remote speech models test cases:

node test/integration/RemoteSpeechModel.test.js

- Run the embedding test cases:

node test/integration/RemoteEmbedModel.test.js

- Run the chatbot test cases:

node test/integration/Chatbot.test.js
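Because the integration tests above depend on the access keys, it can help to confirm the keys are present before running them. The following is a minimal sketch, not part of IntelliNode: `findMissingKeys` is a hypothetical helper, and it assumes the `.env` values have already been loaded into `process.env` (for example by a loader such as dotenv).

```javascript
// Hypothetical pre-flight check; not an IntelliNode API.
// Key names are taken from the .env listing above.
const REQUIRED_KEYS = [
  'OPENAI_API_KEY',
  'COHERE_API_KEY',
  'GOOGLE_API_KEY',
  'STABILITY_API_KEY',
  'HUGGING_API_KEY',
];

// Returns the names of keys that are absent or blank in the given env object.
function findMissingKeys(env, required = REQUIRED_KEYS) {
  return required.filter((name) => {
    const value = env[name];
    return typeof value !== 'string' || value.trim() === '';
  });
}

// Assumes process.env was already populated from the .env file.
const missing = findMissingKeys(process.env);
if (missing.length > 0) {
  console.warn(`Missing keys: ${missing.join(', ')}`);
}
```

Only the test files whose provider key is missing would be expected to fail, so a warning rather than a hard exit keeps the other suites runnable.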

- IntelliNode Wiki: Check the wiki page for in-depth instructions and practical use cases.

- Showcase: Experience the potential of Intellinode in action, and use your keys to generate content and html pages.

- Samples: Explore a code sample with detailed setup documentation to get started with Intellinode.

- Model Evaluation: Demonstrate a swift approach to compare the performance of multiple models against designated target answers.

- Semantic Search: In-memory semantic search with iterator over large data.
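To illustrate the idea behind in-memory semantic search, here is a minimal sketch that ranks candidates by cosine similarity to a pivot vector. It is an assumption about the general technique, not IntelliNode's implementation: `cosineSimilarity` and `topMatches` are hypothetical helpers, and the three-dimensional vectors stand in for real embeddings returned by an embedding model.

```javascript
// Cosine similarity between two equal-length numeric vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid division by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical helper: rank candidate vectors against the pivot vector
// and keep the indices of the best `numberOfMatches` candidates.
function topMatches(pivotVector, candidateVectors, numberOfMatches) {
  return candidateVectors
    .map((vector, index) => ({
      index,
      similarity: cosineSimilarity(pivotVector, vector),
    }))
    .sort((x, y) => y.similarity - x.similarity)
    .slice(0, numberOfMatches);
}

// Toy vectors; real embeddings have hundreds or thousands of dimensions.
const pivot = [1, 0, 0];
const candidates = [[0, 1, 0], [0.9, 0.1, 0], [0.5, 0.5, 0]];
const matches = topMatches(pivot, candidates, 2);
console.log(matches.map((m) => m.index)); // [ 1, 2 ]
```

For large data, the same ranking can be applied batch by batch while keeping only the current best matches in memory.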

The module foundation:

- The wrapper layer provides low-level access to the latest AI models

- The controller layer offers a unified input to any AI model by handling the differences between providers, so you can switch between models like OpenAI and Cohere without changing the code.

- The function layer provides abstract functionality that extends based on the app's use cases. For example, an easy-to-use chatbot or marketing content generation utilities.
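The controller idea described above can be sketched as one provider-neutral input plus a formatter per provider. This is an illustrative assumption, not IntelliNode's actual classes: `UnifiedChatInput`, `formatters`, and `buildPayload` are hypothetical names, and the payload shapes are simplified versions of the public OpenAI and Gemini chat request formats (the system message is omitted for the Gemini shape to keep the sketch short).

```javascript
// Hypothetical provider-neutral chat input; not an IntelliNode class.
class UnifiedChatInput {
  constructor(systemMessage = '') {
    this.systemMessage = systemMessage;
    this.turns = [];
  }

  addUserMessage(text) {
    this.turns.push({ role: 'user', text });
    return this;
  }

  addAssistantMessage(text) {
    this.turns.push({ role: 'assistant', text });
    return this;
  }
}

// One formatter per provider hides the differences in request shape.
const formatters = {
  openai: (input) => ({
    messages: [
      ...(input.systemMessage
        ? [{ role: 'system', content: input.systemMessage }]
        : []),
      ...input.turns.map((t) => ({ role: t.role, content: t.text })),
    ],
  }),
  gemini: (input) => ({
    contents: input.turns.map((t) => ({
      role: t.role === 'assistant' ? 'model' : 'user',
      parts: [{ text: t.text }],
    })),
  }),
};

// Calling code stays the same; only the provider name changes.
function buildPayload(provider, input) {
  const format = formatters[provider];
  if (!format) throw new Error(`Unsupported provider: ${provider}`);
  return format(input);
}

const chatInput = new UnifiedChatInput('You are a helpful assistant.')
  .addUserMessage('Who painted the Mona Lisa?');
console.log(JSON.stringify(buildPayload('openai', chatInput)));
console.log(JSON.stringify(buildPayload('gemini', chatInput)));
```

Adding a provider then means adding one formatter entry, which is the kind of isolation the layer description points at.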

Call for contributors: registration form.

- [x] Add support to OpenAI completion & GPT4.

- [x] Add support to OpenAI DALL·E 2.

- [ ] Add support to other OpenAI functions.

- [x] Add support to cohere generate models.

- [ ] Add support to Google language models.

- [x] Add support to Google speech models.

- [x] Add support to LLaMa AWS private deployment.

- [ ] Add support to Anthropic claude.

- [ ] Add support to Midjourney image generation.

- [x] Add support to Stable diffusion.

- [x] Add support to hugging face inference.

- [x] Add more high-level functions like semantic search, evaluation, etc.

Apache License

Copyright 2023 Github.com/Barqawiz/IntelliNode

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.


Alternative AI tools for IntelliNode

Similar Open Source Tools

IntelliNode

IntelliNode is a javascript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

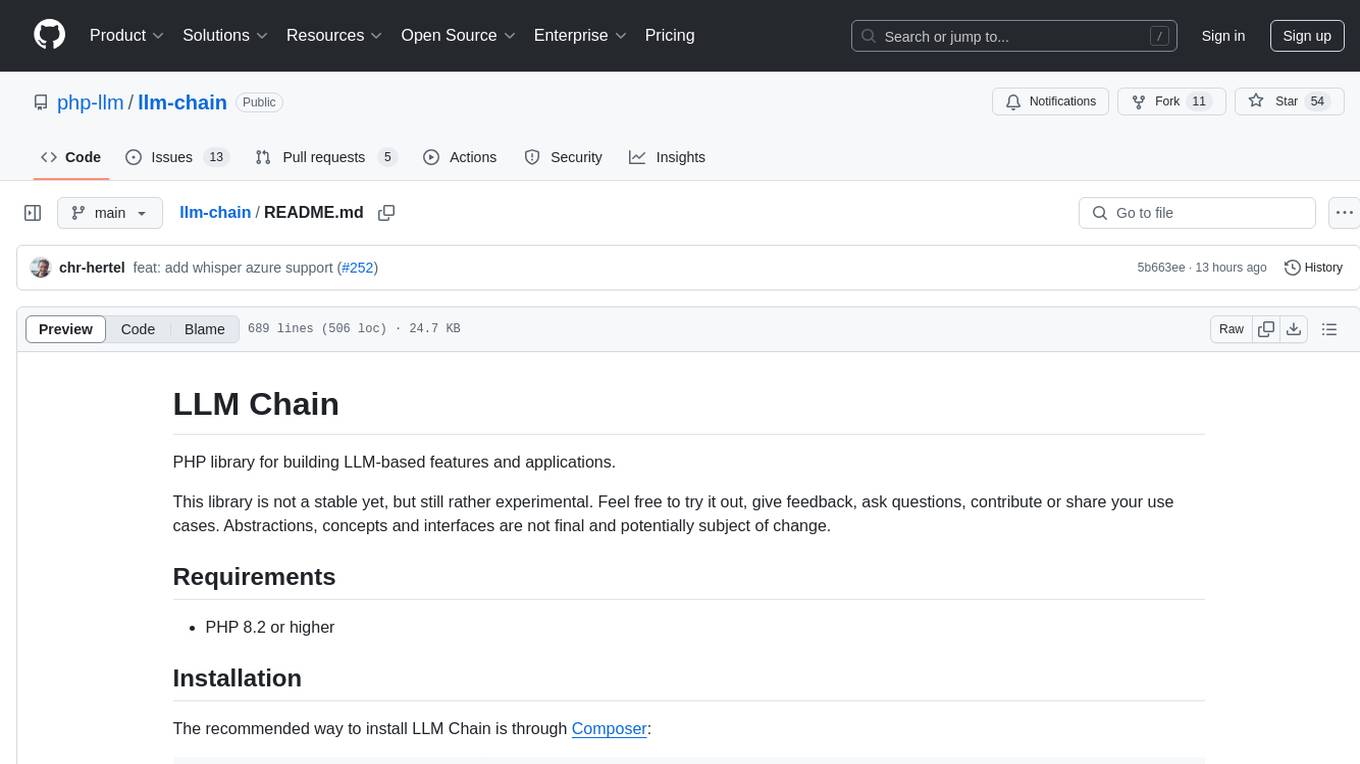

llm-chain

LLM Chain is a PHP library for building LLM-based features and applications. It provides abstractions for Language Models and Embeddings Models from platforms like OpenAI, Azure, Google, Replicate, and others. The core feature is to interact with language models via messages, supporting different message types and content. LLM Chain also supports tool calling, document embedding, vector stores, similarity search, structured output, response streaming, image processing, audio processing, embeddings, parallel platform calls, and input/output processing. Contributions are welcome, and the repository contains fixture licenses for testing multi-modal features.

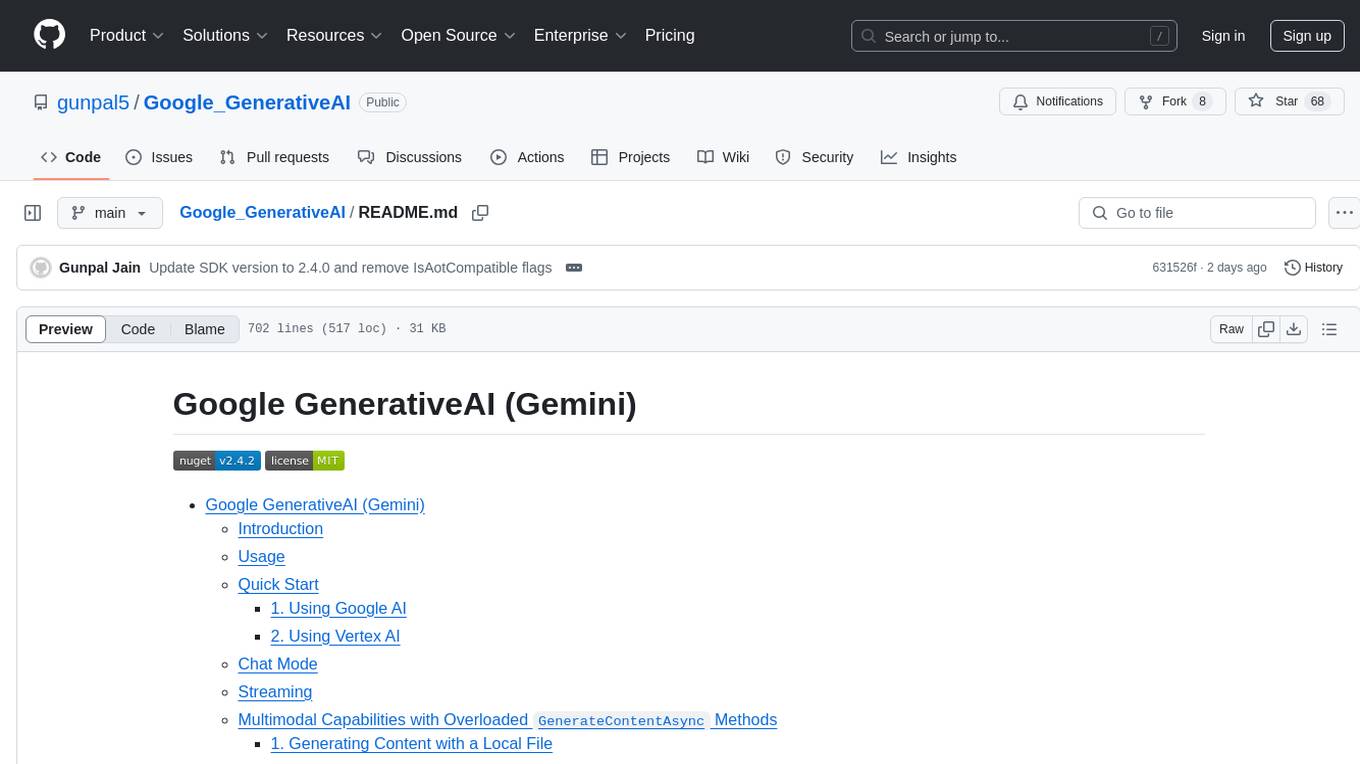

Google_GenerativeAI

Google GenerativeAI (Gemini) is an unofficial C# .NET SDK based on REST APIs for accessing Google Gemini models. It offers a complete rewrite of the previous SDK with improved performance, flexibility, and ease of use. The SDK integrates with LangChain.net, providing easy methods for JSON-based interactions and function calling with Google Gemini models. It includes features like enhanced JSON mode handling, function calling with a code generator, multi-modal functionality, Vertex AI support, the multimodal live API, image generation and captioning, retrieval-augmented generation with Vertex RAG Engine and Google AQA, Gemini tools, and more.

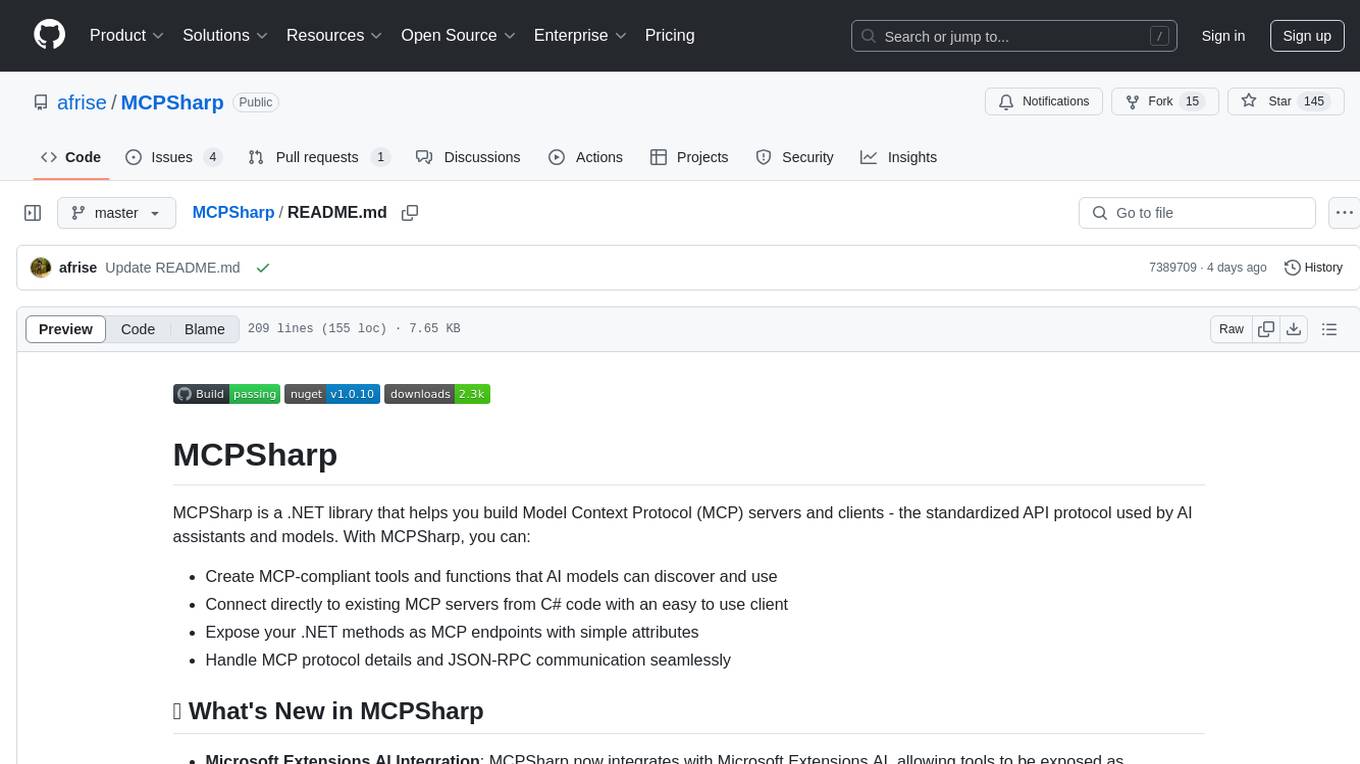

MCPSharp

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

generative-ai

The 'Generative AI' repository provides a C# library for interacting with Google's Generative AI models, specifically the Gemini models. It allows users to access and integrate the Gemini API into .NET applications, supporting functionalities such as listing available models, generating content, creating tuned models, working with large files, starting chat sessions, and more. The repository also includes helper classes and enums for Gemini API aspects. Authentication methods include API key, OAuth, and various authentication modes for Google AI and Vertex AI. The package offers features for both Google AI Studio and Google Cloud Vertex AI, with detailed instructions on installation, usage, and troubleshooting.

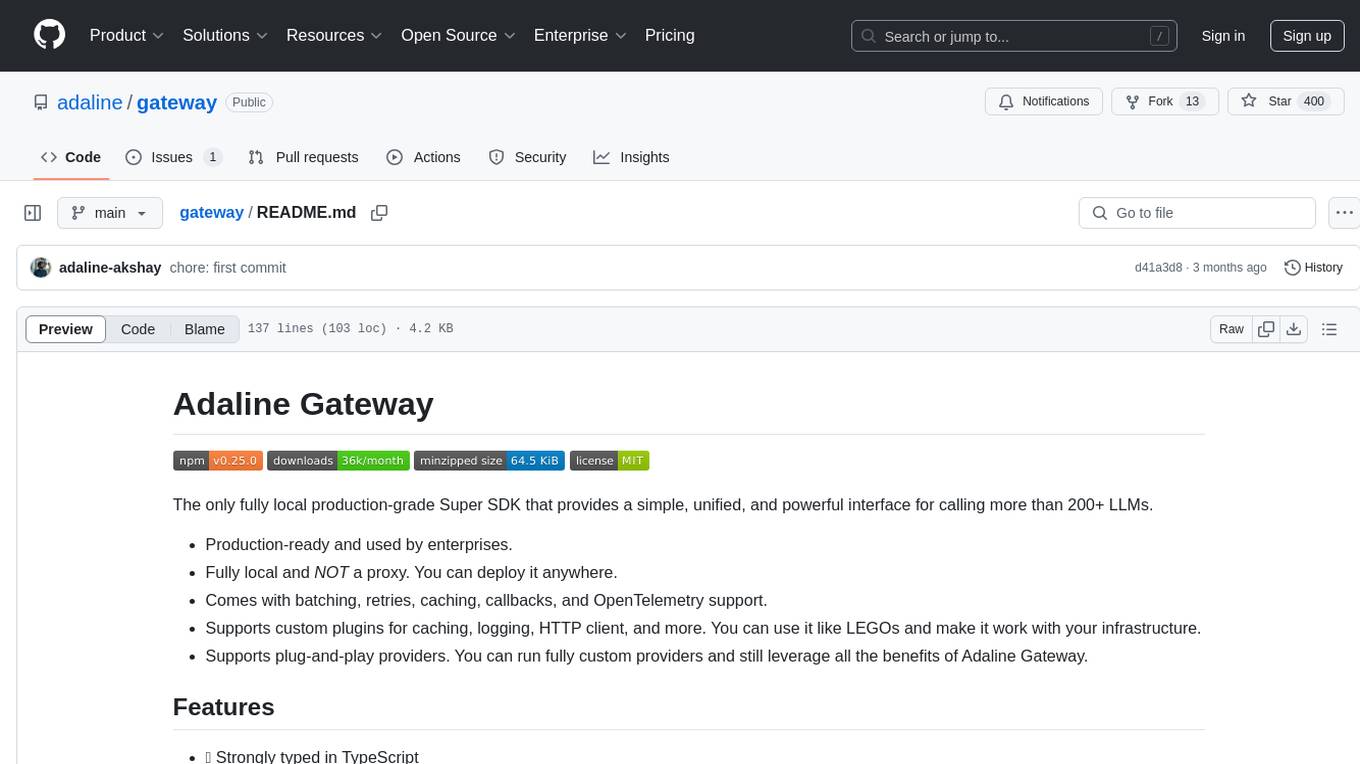

gateway

Adaline Gateway is a fully local, production-grade Super SDK that offers a unified interface for calling more than 200 LLMs. It is production-ready and supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

py-llm-core

PyLLMCore is a lightweight interface to Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucination reduction, context size management, and tokenizing.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly on the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its Typescript support and pre-built npm package, Wllama is easy to integrate into your React Typescript projects.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides PyTorch and **python-first**, low- and high-level abstractions for RL that are intended to be **efficient**, **modular**, **documented** and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in Python in a highly modular way, such that researchers can easily swap components, transform them or write new ones with little effort.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

instructor-js

Instructor is a Typescript library for structured extraction in Typescript, powered by llms, designed for simplicity, transparency, and control. It stands out for its simplicity, transparency, and user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.