laravel-slower

★ Laravel Slower: Optimize Your DB Queries with AI

Stars: 284

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

README:

Laravel Slower is a powerful package designed for Laravel developers who want to enhance the performance of their applications. It intelligently identifies slow database queries and leverages AI to suggest optimal indexing strategies and other performance improvements. Whether you're debugging or routinely monitoring your application, Laravel Slower provides actionable insights to streamline database interactions.

You can install the package via composer:

composer require halilcosdu/laravel-slower

You can publish the config file with:

php artisan vendor:publish --tag="slower-config"

This is the contents of the published config file:

You can disable AI recommendations by setting the ai_recommendation key to false in the config file. If you disable AI recommendations, the package will not make any API requests to OpenAI.
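As a minimal sketch, the same flag can also be overridden for the current process with Laravel's `config()` helper, for example in a test's `setUp()`. The persistent route is setting `SLOWER_AI_RECOMMENDATION=false` in `.env`, since the published config reads that variable. Whether the package re-reads the value at analysis time is an assumption here, not something the README confirms.

```php
<?php

// Assumed placement: a test's setUp() or a service provider's boot() method.
// This overrides the published value for the current process only.
config(['slower.ai_recommendation' => false]);
```

The published config file itself follows.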

<?php

// config for HalilCosdu/Slower

use HalilCosdu\Slower\Models\SlowLog;

return [
    'enabled' => env('SLOWER_ENABLED', true),
    'threshold' => env('SLOWER_THRESHOLD', 10000),
    'resources' => [
        'table_name' => (new SlowLog)->getTable(),
        'model' => SlowLog::class,
    ],
    'ai_recommendation' => env('SLOWER_AI_RECOMMENDATION', true),
    'recommendation_model' => env('SLOWER_AI_RECOMMENDATION_MODEL', 'gpt-4'),
    'recommendation_use_explain' => env('SLOWER_AI_RECOMMENDATION_USE_EXPLAIN', true),
    'ignore_explain_queries' => env('SLOWER_IGNORE_EXPLAIN_QUERIES', true),
    'ignore_insert_queries' => env('SLOWER_IGNORE_INSERT_QUERIES', true),
    'open_ai' => [
        'api_key' => env('OPENAI_API_KEY'),
        'organization' => env('OPENAI_ORGANIZATION'),
        'request_timeout' => env('OPENAI_TIMEOUT'),
    ],
    'prompt' => env('SLOWER_PROMPT', 'As a distinguished database optimization expert, your expertise is invaluable for refining SQL queries to achieve maximum efficiency. Schema json provide list of indexes and column definitions for each table in query. Also analyse the output of EXPLAIN ANALYSE and provide recommendations to optimize query. Please examine the SQL statement provided below including EXPLAIN ANALYSE query plan. Based on your analysis, could you recommend sophisticated indexing techniques or query modifications that could significantly improve performance and scalability?'),
];

You can publish and run the migrations with:

php artisan vendor:publish --tag="slower-migrations"
php artisan migrate

public function up()
{
    Schema::create(config('slower.resources.table_name'), function (Blueprint $table) {
        $table->id();
        $table->boolean('is_analyzed')->default(false)->index();
        $table->longtext('bindings');
        $table->longtext('sql');
        $table->float('time')->nullable()->index();
        $table->string('connection');
        $table->string('connection_name')->nullable();
        $table->longtext('raw_sql');
        $table->longtext('recommendation')->nullable();
        $table->timestamps();
    });
}

public function down(): void
{
    Schema::dropIfExists(config('slower.resources.table_name'));
}

You can register the commands with your scheduler.

php artisan slower:clean    # {days=15} Delete records older than 15 days.
php artisan slower:analyze  # Analyze the records where is_analyzed=false

use HalilCosdu\Slower\Commands\AnalyzeQuery;
use HalilCosdu\Slower\Commands\SlowLogCleaner;

protected $commands = [
    AnalyzeQuery::class,
    SlowLogCleaner::class,
];

/**
 * Define the application's command schedule.
 */
protected function schedule(Schedule $schedule): void
{
    $schedule->command(AnalyzeQuery::class)->runInBackground()->daily();
    $schedule->command(SlowLogCleaner::class)->runInBackground()->daily();
}

$model = \HalilCosdu\Slower\Models\SlowLog::first();

\HalilCosdu\Slower\Facades\Slower::analyze($model); // returns the Model

dd($model->raw_sql); /*select count(*) as aggregate from "product_prices" where "product_id" = '1' and "price" = '0' and "discount_total" > '0'*/

dd($model->recommendation);

In order to improve database performance and scalability, here are some suggestions below:

- Indexing: Effective database indexing can significantly speed up query performance. For your query, consider adding a combined (composite) index on product_id, price, and discount_total. This index would work well because the where clause covers all these columns.

CREATE INDEX idx_product_prices
ON product_prices (product_id, price, discount_total);

(Note: The order of the columns in the index might depend on the selectivity of the columns and the data distribution. Therefore, you might have to reorder them depending on your specific situation.)

- Data Types: Ensure that the values being compared are of appropriate data types. Comparing or converting inappropriate data types at run time will slow down the search. It appears that you're using string comparisons ('1') for product_id, price, and discount_total, which are likely numerical columns. Remove the quotes for these where clause conditions.

Updated Query:

SELECT COUNT(*) AS aggregate

FROM product_prices

WHERE product_id = 1

AND price = 0

AND discount_total > 0;

- ANALYZE: Another practice to improve query performance could be running the ANALYZE command. This command collects statistics about the contents of tables in the database, and stores the results in the pg_statistic system catalog. Subsequently, the query planner uses these statistics to help determine the most efficient execution plans for queries.

ANALYZE product_prices;

Remember to periodically maintain your index to keep up with the CRUD operations that could lead to index fragmentation. Depending on your DBMS, you might want to REBUILD or REORGANIZE your indices.
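The `Slower::analyze($model)` call shown earlier handles a single record. The sketch below applies an analyzer to every pending record and keeps going when one fails. `analyzePending` is a hypothetical helper, not part of the package, and the commented wiring assumes the `is_analyzed` column from the migration. The bundled `slower:analyze` command already covers the common case, so a loop like this is only useful when custom handling is needed.

```php
<?php

/**
 * Run an analyzer over each pending log and count the successes.
 * Hypothetical helper for illustration; not provided by laravel-slower.
 *
 * @param iterable<object> $pendingLogs records that have not been analyzed yet
 * @param callable $analyze callback that receives one record
 */
function analyzePending(iterable $pendingLogs, callable $analyze): int
{
    $processed = 0;

    foreach ($pendingLogs as $log) {
        try {
            $analyze($log);
            $processed++;
        } catch (Throwable $e) {
            // Skip a failing record (for example an API timeout) and continue.
            error_log('Analysis failed: ' . $e->getMessage());
        }
    }

    return $processed;
}

// Assumed wiring inside a Laravel application:
// analyzePending(
//     \HalilCosdu\Slower\Models\SlowLog::where('is_analyzed', false)->cursor(),
//     fn ($log) => \HalilCosdu\Slower\Facades\Slower::analyze($log)
// );
```

Passing the analyzer in as a callback keeps the loop independent of the facade, so it can be exercised without an OpenAI key.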

composer test

- [ ] Create a documentation page.

- [ ] Begin development of version 2.

- [ ] Auto Indexer (Premium Feature)

- [ ] Create a FilamentPHP plugin.

Please see CHANGELOG for more information on what has changed recently.

Please see CONTRIBUTING for details.

Please review our security policy on how to report security vulnerabilities.

The MIT License (MIT). Please see License File for more information.

Alternative AI tools for laravel-slower

Similar Open Source Tools

LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

ShannonBase

ShannonBase is a HTAP database provided by Shannon Data AI, designed for big data and AI. It extends MySQL with native embedding support, machine learning capabilities, a JavaScript engine, and a columnar storage engine. ShannonBase supports multimodal data types and natively integrates LightGBM for training and prediction. It leverages embedding algorithms and vector data type for ML/RAG tasks, providing Zero Data Movement, Native Performance Optimization, and Seamless SQL Integration. The tool includes a lightweight JavaScript engine for writing stored procedures in SQL or JavaScript.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

LLMDebugger

This repository contains the code and dataset for LDB, a novel debugging framework that enables Large Language Models (LLMs) to refine their generated programs by tracking the values of intermediate variables throughout the runtime execution. LDB segments programs into basic blocks, allowing LLMs to concentrate on simpler code units, verify correctness block by block, and pinpoint errors efficiently. The tool provides APIs for debugging and generating code with debugging messages, mimicking how human developers debug programs.

redisvl

Redis Vector Library (RedisVL) is a Python client library for building AI applications on top of Redis. It provides a high-level interface for managing vector indexes, performing vector search, and integrating with popular embedding models and providers. RedisVL is designed to make it easy for developers to build and deploy AI applications that leverage the speed, flexibility, and reliability of Redis.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

llm-chain

LLM Chain is a PHP library for building LLM-based features and applications. It provides abstractions for Language Models and Embeddings Models from platforms like OpenAI, Azure, Google, Replicate, and others. The core feature is to interact with language models via messages, supporting different message types and content. LLM Chain also supports tool calling, document embedding, vector stores, similarity search, structured output, response streaming, image processing, audio processing, embeddings, parallel platform calls, and input/output processing. Contributions are welcome, and the repository contains fixture licenses for testing multi-modal features.

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

IntelliNode

IntelliNode is a JavaScript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable Diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

litdata

LitData is a tool designed for blazingly fast, distributed streaming of training data from any cloud storage. It allows users to transform and optimize data in cloud storage environments efficiently and intuitively, supporting various data types like images, text, video, audio, geo-spatial, and multimodal data. LitData integrates smoothly with frameworks such as LitGPT and PyTorch, enabling seamless streaming of data to multiple machines. Key features include multi-GPU/multi-node support, easy data mixing, pause & resume functionality, support for profiling, memory footprint reduction, cache size configuration, and on-prem optimizations. The tool also provides benchmarks for measuring streaming speed and conversion efficiency, along with runnable templates for different data types. LitData enables infinite cloud data processing by utilizing the Lightning.ai platform to scale data processing with optimized machines.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

agentlang

AgentLang is an open-source programming language and framework designed for solving complex tasks with the help of AI agents. It allows users to build business applications rapidly from high-level specifications, making it more efficient than traditional programming languages. The language is data-oriented and declarative, with a syntax that is intuitive and closer to natural languages. AgentLang introduces innovative concepts such as first-class AI agents, graph-based hierarchical data model, zero-trust programming, declarative dataflow, resolvers, interceptors, and entity-graph-database mapping.

For similar tasks

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

THE-SANDBOX-AutoClicker

The Sandbox AutoClicker is a bot designed for the crypto game The Sandbox, allowing users to automate various processes within the game. The tool offers features such as auto tuning, multi-account auto clicker, multi-threading, a convenient menu, and free proxies. It provides full optimization through a simple menu and is guaranteed to be safe for Windows systems, supporting versions 7/8/8.1/10/11 (x32/64).

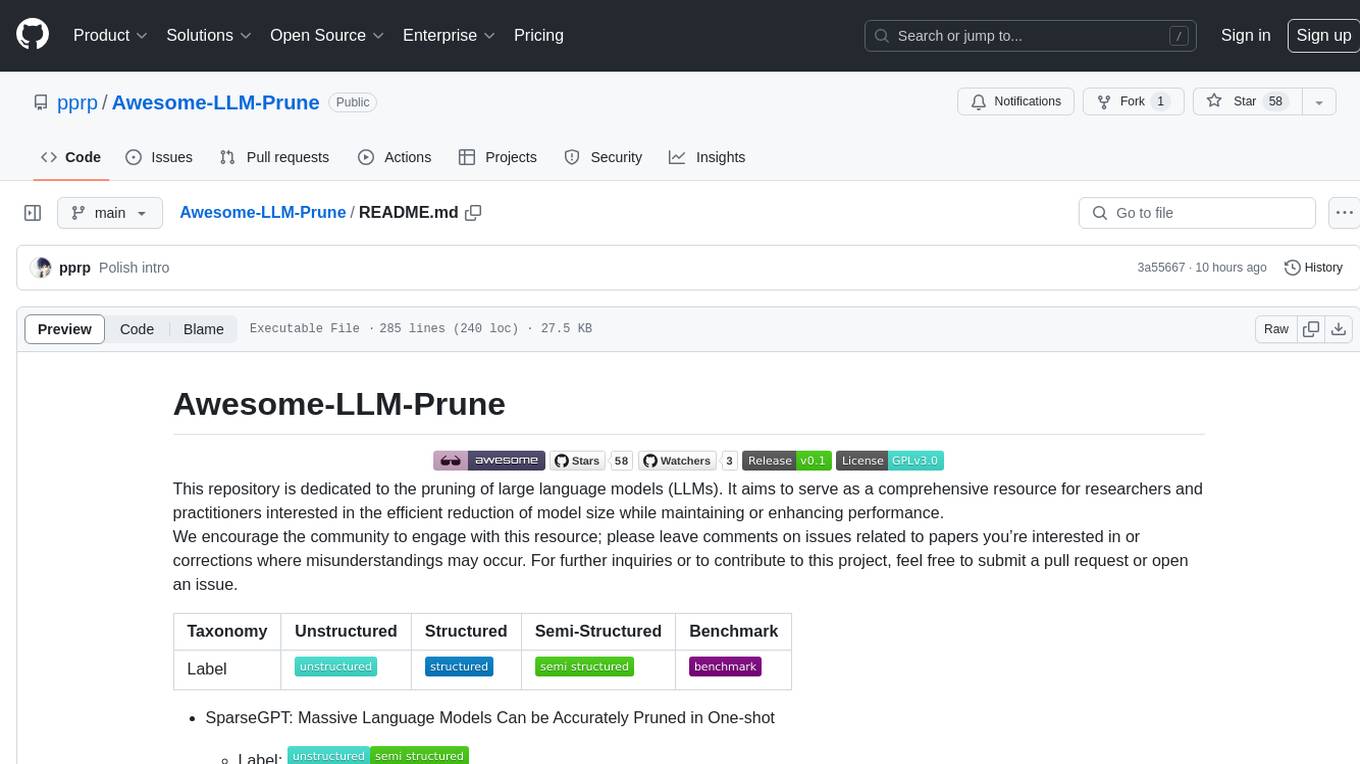

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

SuperCoder

SuperCoder is an open-source autonomous software development system that leverages advanced AI tools and agents to streamline and automate coding, testing, and deployment tasks, enhancing efficiency and reliability. It supports a variety of languages and frameworks for diverse development needs. Users can set up the environment variables, build and run the Go server, Asynq worker, and Postgres using Docker and Docker Compose. The project is under active development and may still have issues, but users can seek help and support from the Discord community or by creating new issues on GitHub.

MoBA

MoBA (Mixture of Block Attention) is an innovative approach for long-context language models, enabling efficient processing of long sequences by dividing the full context into blocks and introducing a parameter-less gating mechanism. It allows seamless transitions between full and sparse attention modes, enhancing efficiency without compromising performance. MoBA has been deployed to support long-context requests and demonstrates significant advancements in efficient attention computation for large language models.

tapes

Tapes is an agentic telemetry system designed for content-addressable LLM interactions. It offers durable storage of agent sessions, plug-and-play OpenTelemetry instrumentation, and deterministic replay of past agent messages. The tool facilitates seamless communication and interaction tracking in a transparent manner, enhancing the efficiency of content-addressable interactions.

Ai-with-Chucky-Colab-Notebooks

Ai-with-Chucky-Colab-Notebooks is a collection of Colab notebooks optimized for various AI tasks. The notebooks provide a comfortable user interface and are designed to enhance the efficiency of AI experiments. The repository includes tools like Z-Image Turbo Pro and Kani TTS-2 for English text-to-speech synthesis. Users can easily access and run these notebooks in Google Colab, making it convenient for AI enthusiasts and researchers to experiment with different AI models and techniques.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine-tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumbs up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.