hugging-chat-api

HuggingChat Python API🤗

Stars: 780

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

README:

English | 简体中文

Unofficial HuggingChat Python API, extensible for chatbots etc.

Note

Some recent versions may no longer be fully backward compatible to some extent, a good idea is to review this README or issues promptly after any problem arise. Custom parameters(temprature, max_token, etc) is no longer supported

Recently new updates:

- Assistant(Image Generator, etc)

- Web search

- Memorize context

- Supports for changing LLMs

pip3 install hugchatThe following are all common usages of this repo, You may not necessarily use all of them, You can add or delete some as needed :)

from hugchat import hugchat

from hugchat.login import Login

# Log in to huggingface and grant authorization to huggingchat

EMAIL = "your email"

PASSWD = "your password"

cookie_path_dir = "./cookies/" # NOTE: trailing slash (/) is required to avoid errors

sign = Login(EMAIL, PASSWD)

cookies = sign.login(cookie_dir_path=cookie_path_dir, save_cookies=True)

# Create your ChatBot

chatbot = hugchat.ChatBot(cookies=cookies.get_dict()) # or cookie_path="usercookies/<email>.json"

message_result = chatbot.chat("Hi!") # note: message_result is a generator, the method will return immediately.

# Non stream

message_str: str = message_result.wait_until_done() # you can also print(message_result) directly.

# get files(such as images)

file_list = message_result.get_files_created() # must call wait_until_done() first!

# tips: model "CohereForAI/c4ai-command-r-plus" can generate images :)

# Stream response

for resp in chatbot.query(

"Hello",

stream=True

):

print(resp)

# Web search

query_result = chatbot.query("Hi!", web_search=True)

print(query_result)

for source in query_result.web_search_sources:

print(source.link)

print(source.title)

print(source.hostname)

# Create a new conversation

chatbot.new_conversation(switch_to = True) # switch to the new conversation

# Get conversations on the server that are not from the current session (all your conversations in huggingchat)

conversation_list = chatbot.get_remote_conversations(replace_conversation_list=True)

# Get conversation list(local)

conversation_list = chatbot.get_conversation_list()

# Get the available models (not hardcore)

models = chatbot.get_available_llm_models()

# Switch model with given index

chatbot.switch_llm(0) # Switch to the first model

chatbot.switch_llm(1) # Switch to the second model

# Get information about the current conversation

info = chatbot.get_conversation_info()

print(info.id, info.title, info.model, info.system_prompt, info.history)

# Assistant

assistant = chatbot.search_assistant(assistant_name="ChatGpt") # assistant name list in https://huggingface.co/chat/assistants

assistant_list = chatbot.get_assistant_list_by_page(page=0)

chatbot.new_conversation(assistant=assistant, switch_to=True) # create a new conversation with assistant

# [DANGER] Delete all the conversations for the logged in user

chatbot.delete_all_conversations()

version 0.0.5.2or newer

Simply run the following command in your terminal to start the CLI mode

python -m hugchat.cliCLI params:

-

-u <your huggingface email>: Provide account email to login. -

-p: Force request password to login, ignores saved cookies. -

-s: Enable streaming mode output in CLI. -

-c: Continue previous conversation in CLI ".

Commands in cli mode:

-

/new: Create and switch to a new conversation. -

/ids: Shows a list of all ID numbers and ID strings in current session. -

/switch: Shows a list of all conversations' info in current session. Then you can choose one to switch to. -

/switch all: Shows a list of all conversations' info in your account. Then you can choose one to switch to. (not recommended if your account has a lot of conversations) -

/del <index>: Deletes the conversation linked with the index passed. Will not delete active session. -

/delete-all: Deletes all the conversations for the logged in user. -

/clear: Clear the terminal. -

/llm: Get available models you can switch to. -

/llm <index>: Switches model to given model index based on/llm. -

/share: Toggles settings for sharing data with model author. On by default. -

/exit: Closes CLI environment. -

/stream: Toggles streaming the response. -

/web: Toggles web search. -

/web-hint: Toggles display web search hint. -

AI is an area of active research with known problems such as biased generation and misinformation. Do not use this application for high-stakes decisions or advice.

-

Server resources are precious, it is not recommended to request this API in a high frequency. (

Hugging Face's CTO🤗just liked the suggestion)

This is not an official Hugging Face product. This is a personal project and is not affiliated with Hugging Face in any way. Don't sue us.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for hugging-chat-api

Similar Open Source Tools

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

memobase

Memobase is a user profile-based memory system designed to enhance Generative AI applications by enabling them to remember, understand, and evolve with users. It provides structured user profiles, scalable profiling, easy integration with existing LLM stacks, batch processing for speed, and is production-ready. Users can manage users, insert data, get memory profiles, and track user preferences and behaviors. Memobase is ideal for applications that require user analysis, tracking, and personalized interactions.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

python-sdks

Python SDK for LiveKit enables developers to easily integrate real-time video, audio, and data features into their Python applications. By connecting to a LiveKit server, users can quickly build interactive live streaming or video call applications with minimal code. The SDK includes packages for real-time participant connection and access token generation, making it simple to create rooms and manage participants. With asyncio and aiohttp support, developers can seamlessly interact with the LiveKit server API and handle real-time communication tasks effortlessly.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

reolink_aio

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

gpt-translate

Markdown Translation BOT is a GitHub action that translates markdown files into multiple languages using various AI models. It supports markdown, markdown-jsx, and json files only. The action can be executed by individuals with write permissions to the repository, preventing API abuse by non-trusted parties. Users can set up the action by providing their API key and configuring the workflow settings. The tool allows users to create comments with specific commands to trigger translations and automatically generate pull requests or add translated files to existing pull requests. It supports multiple file translations and can interpret any language supported by GPT-4 or GPT-3.5.

HuixiangDou

HuixiangDou is a **group chat** assistant based on LLM (Large Language Model). Advantages: 1. Design a two-stage pipeline of rejection and response to cope with group chat scenario, answer user questions without message flooding, see arxiv2401.08772 2. Low cost, requiring only 1.5GB memory and no need for training 3. Offers a complete suite of Web, Android, and pipeline source code, which is industrial-grade and commercially viable Check out the scenes in which HuixiangDou are running and join WeChat Group to try AI assistant inside. If this helps you, please give it a star ⭐

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

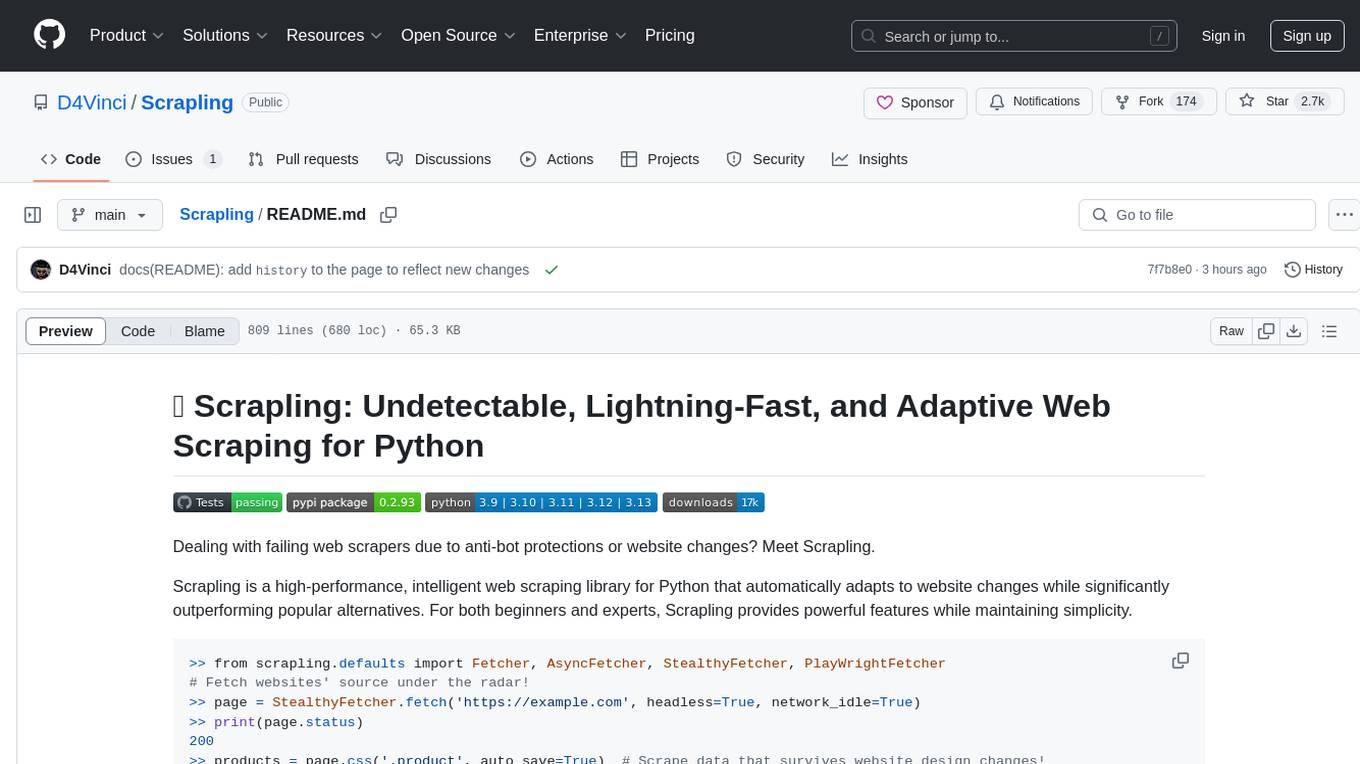

Scrapling

Scrapling is a high-performance, intelligent web scraping library for Python that automatically adapts to website changes while significantly outperforming popular alternatives. For both beginners and experts, Scrapling provides powerful features while maintaining simplicity. It offers features like fast and stealthy HTTP requests, adaptive scraping with smart element tracking and flexible selection, high performance with lightning-fast speed and memory efficiency, and developer-friendly navigation API and rich text processing. It also includes advanced parsing features like smart navigation, content-based selection, handling structural changes, and finding similar elements. Scrapling is designed to handle anti-bot protections and website changes effectively, making it a versatile tool for web scraping tasks.

For similar tasks

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

OpenAssistantGPT

OpenAssistantGPT is an open source platform for building chatbot assistants using OpenAI's Assistant. It offers features like easy website integration, low cost, and an open source codebase available on GitHub. Users can build their chatbot with minimal coding required, and OpenAssistantGPT supports direct billing through OpenAI without extra charges. The platform is user-friendly and cost-effective, appealing to those seeking to integrate AI chatbot functionalities into their websites.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.