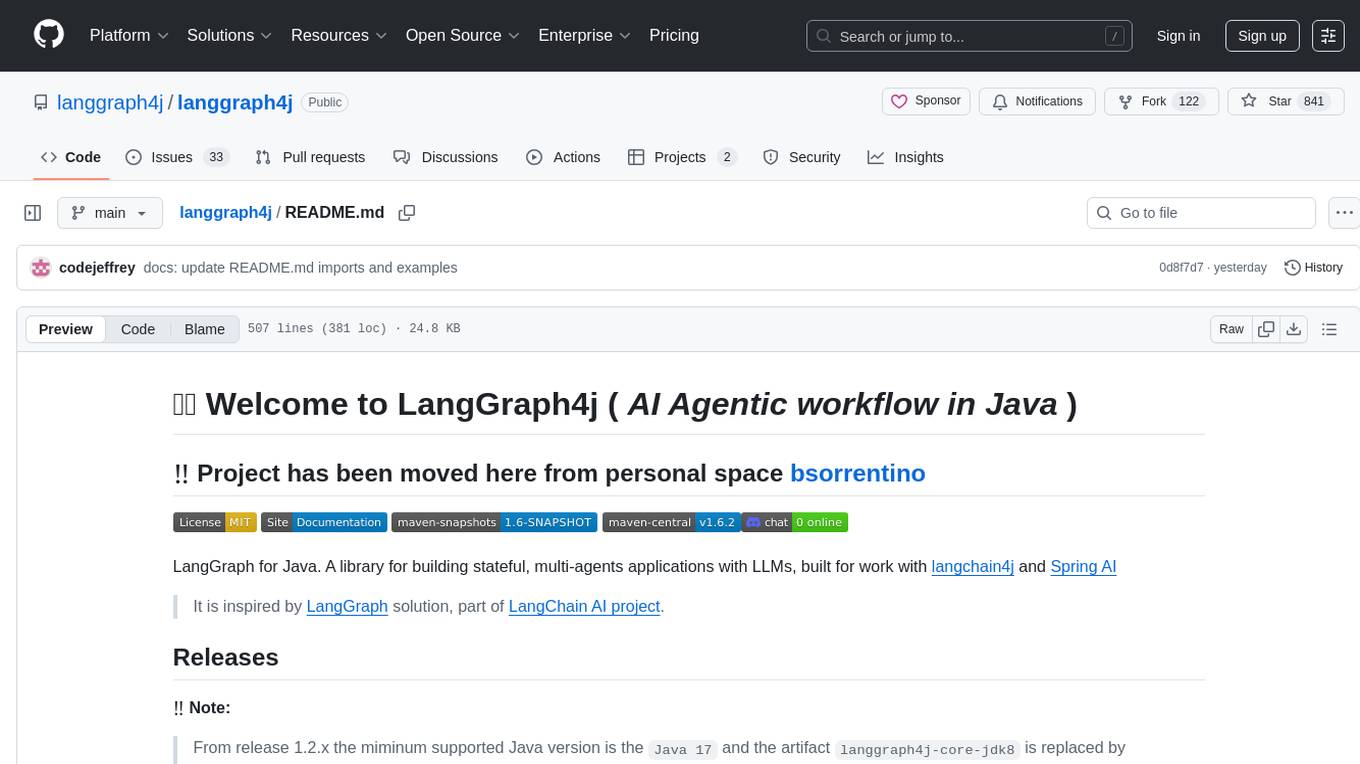

langgraph4j

🚀 LangGraph for Java. A library for developing complex agent-based AI architectures in the Java ecosystem. Designed to work seamlessly with both Langchain4j and Spring AI.

Stars: 916

LangGraph4j is a Java library for building stateful, multi-agent applications with Large Language Models (LLMs). It lets you define graphs, including cyclical ones, in which agents, tools, and custom logic share state, and it integrates with Langchain4j and Spring AI.

README:

‼️ Project has been moved here from personal space bsorrentino

LangGraph for Java. A library for building stateful, multi-agent applications with LLMs, built to work with langchain4j and Spring AI.

It is inspired by LangGraph, part of the LangChain AI project.

From release 1.2.x, the minimum supported Java version is Java 17, and the artifact langgraph4j-core-jdk8 is replaced by langgraph4j-core.

| Date | Release | Info |
|---|---|---|
| Sep 27, 2025 | 1.6.5 | official release |

Welcome to LangGraph4j! This guide will help you understand the core concepts of LangGraph4j, install it, and build your first application.

LangGraph4j is a Java library for building stateful, multi-agent applications with Large Language Models (LLMs). It is inspired by the Python library LangGraph and is designed to work seamlessly with popular Java LLM frameworks like Langchain4j and Spring AI.

At its core, LangGraph4j allows you to define cyclical graphs where different components (agents, tools, or custom logic) can interact in a stateful manner. This is crucial for building complex applications that require memory, context, and the ability for different "agents" to collaborate or hand off tasks.

LangGraph4j offers several features and benefits:

- Stateful Execution: Manage and update a shared state across graph nodes, enabling memory and context awareness.

- Cyclical Graphs: Unlike traditional DAGs, LangGraph4j supports cycles, essential for agent-based architectures where control flow can loop back (e.g., an agent retrying a task or asking for clarification).

- Explicit Control Flow: Clearly define the paths and conditions for transitions between nodes in your graph.

- Modularity: Build complex systems from smaller, reusable components (nodes).

- Flexibility: Integrate with various LLM providers and custom Java logic.

- Observability & Debugging:

  - Checkpoints: Save the state of your graph at any point and replay or inspect it later. This is invaluable for debugging and understanding complex interactions.

  - Graph Visualization: Generate visual representations of your graph using PlantUML or Mermaid to understand its structure.

- Asynchronous & Streaming Support: Build responsive applications with non-blocking operations and stream results from LLMs.

- Playground & Studio: A web UI to visually inspect, run, and debug your graphs.

Understanding these concepts is key to using LangGraph4j effectively:

The StateGraph is the primary class you'll use to define the structure of your application. It's where you add nodes and edges to create your graph. It is parameterized by an AgentState.

The AgentState (or a class extending it) represents the shared state of your graph. It's essentially a map (Map<String, Object>) that gets passed from node to node. Each node can read from this state and return updates to it.

- Schema: The structure of the state is defined by a "schema," which is a Map<String, Channel.Reducer>. Each key in the map corresponds to an attribute in the state.

- Channel.Reducer: A reducer defines how updates to a state attribute are handled. For example, a new value might overwrite the old one, or it might be added to a list of existing values.

- Channel.Default<T>: Provides a default value for a state attribute if it's not already set.

- Channel.Appender<T> / MessageChannel.Appender<M>: A common type of reducer that appends the new value to a list associated with the state attribute. This is useful for accumulating messages, tool calls, or other sequences of data. MessageChannel.Appender is specifically designed for chat messages and can also handle message deletion by ID.
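The overwrite and append behaviors described above can be sketched with plain JDK types. This is only an illustration of the reducer idea: ReducerSketch, OVERWRITE, APPEND, and applyUpdate are hypothetical names invented for this sketch and are not part of LangGraph4j's Channel API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BinaryOperator;

// Illustrative only: plain-JDK stand-ins, not LangGraph4j's Channel classes.
class ReducerSketch {

    // Hypothetical reducer that keeps the newest value.
    static final BinaryOperator<Object> OVERWRITE = (oldValue, newValue) -> newValue;

    // Hypothetical reducer that appends the new value (or values) to a list.
    static final BinaryOperator<Object> APPEND = (oldValue, newValue) -> {
        List<Object> merged = new ArrayList<>();
        if (oldValue instanceof List<?> existing) merged.addAll(existing);
        if (newValue instanceof List<?> many) merged.addAll(many);
        else merged.add(newValue);
        return merged;
    };

    // Applies a partial update to the state, using the schema's reducer for each key.
    // Keys without a schema entry fall back to OVERWRITE.
    static Map<String, Object> applyUpdate(Map<String, Object> state,
                                           Map<String, Object> update,
                                           Map<String, BinaryOperator<Object>> schema) {
        Map<String, Object> next = new HashMap<>(state);
        update.forEach((key, value) ->
            next.put(key, schema.getOrDefault(key, OVERWRITE).apply(state.get(key), value)));
        return next;
    }

    // Small demo: "messages" is appended to, "step" is overwritten.
    static Map<String, Object> demo() {
        Map<String, BinaryOperator<Object>> schema = Map.of("messages", APPEND);
        Map<String, Object> state = Map.of("messages", List.of("hi"), "step", 1);
        return applyUpdate(state, Map.of("messages", "hello", "step", 2), schema);
    }

    public static void main(String[] args) {
        Map<String, Object> next = demo();
        System.out.println(next.get("messages") + " step=" + next.get("step"));
    }
}
```

The point of the design is that a node never has to know how its output is merged: it returns a value for a key, and the schema decides whether that value replaces or extends what is already there.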

Nodes are the building blocks of your graph that perform actions. A node is typically a function (or a class implementing NodeAction<S> or AsyncNodeAction<S>) that:

- Receives the current AgentState as input.

- Performs some computation (e.g., calls an LLM, executes a tool, runs custom business logic).

- Returns a Map<String, Object> representing updates to the state. These updates are then applied to the AgentState according to the schema's reducers.

Nodes can be synchronous or asynchronous (CompletableFuture).
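As a rough illustration of the node contract (state in, partial update out), a node can be modeled as a plain function. The sketch below uses only JDK types; NodeSketch, COUNT_MESSAGES, and countMessagesAsync are hypothetical names and do not correspond to LangGraph4j's NodeAction or AsyncNodeAction interfaces.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

// Illustrative only: a node modeled as a plain function, not LangGraph4j's NodeAction.
class NodeSketch {

    // Synchronous node: reads the state and returns only the keys it wants to change.
    static final Function<Map<String, Object>, Map<String, Object>> COUNT_MESSAGES = state -> {
        List<?> messages = (List<?>) state.getOrDefault("messages", List.of());
        return Map.of("count", messages.size());
    };

    // Asynchronous variant: the same computation wrapped in a CompletableFuture.
    static CompletableFuture<Map<String, Object>> countMessagesAsync(Map<String, Object> state) {
        return CompletableFuture.supplyAsync(() -> COUNT_MESSAGES.apply(state));
    }

    public static void main(String[] args) {
        Map<String, Object> state = Map.of("messages", List.of("a", "b", "c"));
        System.out.println(COUNT_MESSAGES.apply(state));       // synchronous call
        System.out.println(countMessagesAsync(state).join());  // asynchronous call, awaited
    }
}
```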

Edges define the flow of control between nodes.

- Normal Edges: An unconditional transition from one node to another. After node A completes, control always passes to node B. You define these with addEdge(sourceNodeName, destinationNodeName).

- Conditional Edges: The next node is determined dynamically based on the current AgentState. After a source node completes, an EdgeAction<S> (or AsyncEdgeAction<S>) function is executed. This function receives the current state and returns the name of the next node to execute. This allows for branching logic (e.g., if an agent decided to use a tool, go to the "execute_tool" node; otherwise, go to the "respond_to_user" node). Conditional edges are defined with addConditionalEdges(...).

- Entry Points: You can also define conditional entry points to your graph using addConditionalEntryPoint(...).
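To make the idea of a conditional edge that loops back more concrete, here is a tiny hand-rolled runner written with plain JDK types. It is a sketch under simplifying assumptions (the state is a single integer counter), and RoutingSketch, NODES, ROUTES, and the END marker are hypothetical names rather than LangGraph4j classes or constants.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.function.UnaryOperator;

// Illustrative only: a tiny hand-rolled runner, not LangGraph4j's StateGraph.
class RoutingSketch {
    static final String END = "__END__"; // hypothetical end marker

    // Each node transforms an integer "attempts" counter (kept simple on purpose).
    static final Map<String, UnaryOperator<Integer>> NODES = Map.of(
        "agent", attempts -> attempts + 1,
        "respond", attempts -> attempts
    );

    // Routing functions: given the state after a node ran, name the next node.
    // "agent" loops back to itself until three attempts were made (a cycle).
    static final Map<String, Function<Integer, String>> ROUTES = Map.of(
        "agent", attempts -> attempts < 3 ? "agent" : "respond",
        "respond", attempts -> END
    );

    // Runs from the given node and records the order in which nodes executed.
    static List<String> run(String start) {
        List<String> visited = new ArrayList<>();
        int attempts = 0;
        String current = start;
        while (!current.equals(END)) {
            visited.add(current);
            attempts = NODES.get(current).apply(attempts);
            current = ROUTES.get(current).apply(attempts);
        }
        return visited;
    }

    public static void main(String[] args) {
        System.out.println(run("agent")); // prints [agent, agent, agent, respond]
    }
}
```

A normal edge is the degenerate case of this pattern: a routing function that ignores the state and always returns the same node name.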

Once you've defined all your nodes and edges in a StateGraph, you compile() it into a CompiledGraph<S extends AgentState>. This compiled graph is an immutable, runnable representation of your logic. Compilation validates the graph structure (e.g., checks for orphaned nodes).
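The kind of structural check mentioned above can be sketched as follows. This is a simplified illustration, not the library's compile() logic: it assumes that "orphaned" means a node that no edge leads to, and ValidationSketch and orphanedNodes are hypothetical names.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Illustrative only: a simplified structural check, not LangGraph4j's compile() logic.
class ValidationSketch {

    // Returns the nodes that no edge leads to; this sketch treats them as "orphaned".
    // Edges are given as source -> target pairs.
    static Set<String> orphanedNodes(Set<String> nodes, Map<String, String> edges) {
        Set<String> orphans = new TreeSet<>(nodes);
        orphans.removeAll(edges.values());
        return orphans;
    }

    public static void main(String[] args) {
        Set<String> nodes = Set.of("greeter", "responder", "unused");
        // "START" and "END" are hypothetical markers for the graph's entry and exit.
        Map<String, String> edges = Map.of(
            "START", "greeter",
            "greeter", "responder",
            "responder", "END");
        System.out.println("orphaned: " + orphanedNodes(nodes, edges)); // prints orphaned: [unused]
    }
}
```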

LangGraph4j allows you to save (Checkpoint) the state of your graph at any step. This is extremely useful for:

- Debugging: Inspect the state at various points to understand what happened.

- Resuming: Restore a graph to a previous state and continue execution.

- Long-running processes: Persist the state of long-running agent interactions.

You'll typically use a CheckpointSaver implementation (e.g., MemorySaver for in-memory storage, or you can implement your own for persistent storage).
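The underlying idea of checkpointing can be sketched with a small in-memory store that keeps one history of state snapshots per conversation thread. This is a hypothetical illustration built on JDK collections; CheckpointSketch, save, and load are invented names and do not reflect the CheckpointSaver or MemorySaver API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Illustrative only: a hypothetical in-memory store, not LangGraph4j's CheckpointSaver API.
class CheckpointSketch {

    // One checkpoint history per thread id, in the order the snapshots were saved.
    private final Map<String, List<Map<String, Object>>> history = new HashMap<>();

    // Stores an immutable copy so later state changes cannot alter the snapshot.
    void save(String threadId, Map<String, Object> state) {
        history.computeIfAbsent(threadId, id -> new ArrayList<>()).add(Map.copyOf(state));
    }

    // Returns the snapshot taken at the given step (0-based), if it exists.
    Optional<Map<String, Object>> load(String threadId, int step) {
        List<Map<String, Object>> snapshots = history.getOrDefault(threadId, List.of());
        return step >= 0 && step < snapshots.size()
            ? Optional.of(snapshots.get(step))
            : Optional.empty();
    }

    public static void main(String[] args) {
        CheckpointSketch saver = new CheckpointSketch();
        saver.save("thread-1", Map.of("messages", List.of("hi")));
        saver.save("thread-1", Map.of("messages", List.of("hi", "hello")));
        // Inspect the state as it was after the first step.
        System.out.println(saver.load("thread-1", 0).orElseThrow());
    }
}
```

Resuming then amounts to loading a snapshot and feeding it back in as the starting state. A persistent implementation would replace the in-memory map with a database or file store.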

langgraph4j/
├── langgraph4j-bom/                 # LangGraph4j dependency management
├── langgraph4j-core/                # LangGraph4j core components
├── langgraph4j-postgres-saver       # LangGraph4j persistent checkpoint saver based on PostgreSQL
├── langchain4j/                     # LangChain4j integration
│   ├── langchain4j-core/            # LangChain4j core components (integration required)
│   └── langchain4j-agent/           # LangChain4j agent executor
├── spring-ai/                       # Spring AI integration
│   ├── spring-ai-core/              # Spring AI core components (integration required)
│   └── spring-ai-agent/             # Spring AI agent executor
├── studio/                          # LangGraph4j Studio (web UI)
│   ├── base/                        # Base classes and interfaces
│   ├── jetty/                       # Jetty server implementation
│   ├── quarkus/                     # Quarkus server implementation
│   └── springboot/                  # Spring Boot implementation
└── how-tos/                         # How-tos and examples; examples repository: https://github.com/langgraph4j/langgraph4j-examples

To use LangGraph4j in your project, you need to add it as a dependency.

Maven:

Make sure you are using Java 17 or later.

Latest Stable Version (Recommended):

<properties>
    <langgraph4j.version>1.6.5</langgraph4j.version> <!-- Check for the actual latest version -->
</properties>

<!-- Optional: Add the Bill of Materials (BOM) to manage langgraph4j module versions -->
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.bsc.langgraph4j</groupId>
            <artifactId>langgraph4j-bom</artifactId>
            <version>${langgraph4j.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<dependencies>
    <dependency>
        <groupId>org.bsc.langgraph4j</groupId>
        <artifactId>langgraph4j-core</artifactId>
    </dependency>
    <!-- Add other langgraph4j modules if needed, e.g., langgraph4j-langchain4j -->
</dependencies>

(Note: Always check the Maven Central Repository for the latest version number.)

Development Snapshot Version: If you want to use the latest unreleased features, you can use a snapshot version.

<dependency>
    <groupId>org.bsc.langgraph4j</groupId>
    <artifactId>langgraph4j-core</artifactId>
    <version>1.6-SNAPSHOT</version> <!-- Or the current snapshot version -->
</dependency>

You might need to configure your settings.xml or pom.xml to include the Sonatype OSS snapshots repository:

<repositories>
    <repository>
        <id>sonatype-oss-snapshots</id>
        <url>https://central.sonatype.com/repository/maven-snapshots</url>
        <snapshots>
            <enabled>true</enabled>
        </snapshots>
    </repository>
</repositories>

Let's create a very simple graph that has two nodes: greeter and responder.

The greeter node will add a greeting message to the state.

The responder node will add a response message based on the greeting.

1. Define the State: Our state will hold a list of messages.

import org.bsc.langgraph4j.state.AgentState;
import org.bsc.langgraph4j.state.Channels;
import org.bsc.langgraph4j.state.Channel;

import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Define the state for our graph
class SimpleState extends AgentState {
    public static final String MESSAGES_KEY = "messages";

    // Define the schema for the state.
    // MESSAGES_KEY will hold a list of strings, and new messages will be appended.
    public static final Map<String, Channel<?>> SCHEMA = Map.of(
        MESSAGES_KEY, Channels.appender(ArrayList::new)
    );

    public SimpleState(Map<String, Object> initData) {
        super(initData);
    }

    public List<String> messages() {
        return this.<List<String>>value("messages")
            .orElse( List.of() );
    }
}

2. Define the Nodes:

import org.bsc.langgraph4j.action.NodeAction;

import java.util.List;
import java.util.Map;

// Node that adds a greeting
class GreeterNode implements NodeAction<SimpleState> {
    @Override
    public Map<String, Object> apply(SimpleState state) {
        System.out.println("GreeterNode executing. Current messages: " + state.messages());
        return Map.of(SimpleState.MESSAGES_KEY, "Hello from GreeterNode!");
    }
}

// Node that adds a response
class ResponderNode implements NodeAction<SimpleState> {
    @Override
    public Map<String, Object> apply(SimpleState state) {
        System.out.println("ResponderNode executing. Current messages: " + state.messages());
        List<String> currentMessages = state.messages();
        if (currentMessages.contains("Hello from GreeterNode!")) {
            return Map.of(SimpleState.MESSAGES_KEY, "Acknowledged greeting!");
        }
        return Map.of(SimpleState.MESSAGES_KEY, "No greeting found.");
    }
}

3. Define and Compile the Graph:

import org.bsc.langgraph4j.StateGraph;
import org.bsc.langgraph4j.GraphStateException;

import static org.bsc.langgraph4j.action.AsyncNodeAction.node_async;
import static org.bsc.langgraph4j.StateGraph.START;
import static org.bsc.langgraph4j.StateGraph.END;

import java.util.Map;

public class SimpleGraphApp {

    public static void main(String[] args) throws GraphStateException {
        // Initialize nodes
        GreeterNode greeterNode = new GreeterNode();
        ResponderNode responderNode = new ResponderNode();

        // Define the graph structure
        var stateGraph = new StateGraph<>(SimpleState.SCHEMA, initData -> new SimpleState(initData))
            .addNode("greeter", node_async(greeterNode))
            .addNode("responder", node_async(responderNode))
            // Define edges
            .addEdge(START, "greeter") // Start with the greeter node
            .addEdge("greeter", "responder")
            .addEdge("responder", END) // End after the responder node
            ;

        // Compile the graph
        var compiledGraph = stateGraph.compile();

        // Run the graph
        // The `stream` method returns an AsyncGenerator.
        // For simplicity, we'll collect results. In a real app, you might process them as they arrive.
        // Here, the final state after execution is the item of interest.
        for (var item : compiledGraph.stream( Map.of( SimpleState.MESSAGES_KEY, "Let's, begin!" ) ) ) {
            System.out.println( item );
        }
    }
}

Explanation:

- We defined SimpleState with a MESSAGES_KEY that uses AppenderChannel to accumulate strings.

- GreeterNode adds a "Hello" message.

- ResponderNode checks for the greeting and adds an acknowledgment.

- The StateGraph is defined, nodes are added, and edges specify the flow: START -> greeter -> responder -> END.

- stateGraph.compile() creates the runnable CompiledGraph.

- compiledGraph.stream(initialState) executes the graph. We iterate through the stream to get the final state. Each item in the stream represents the state after a node has executed.

This example demonstrates the basic workflow: define state, define nodes, wire them with edges, compile, and run.

As shown in the example, you typically run a compiled graph using one of its execution methods:

- stream(S initialState, RunnableConfig config): Executes the graph and returns an AsyncGenerator<S>. Each yielded item is the state S after a node has completed. This is useful for observing the state at each step or for streaming partial results.

- invoke(S initialState, RunnableConfig config): Executes the graph and returns a CompletableFuture<S> that completes with the final state of the graph after it reaches an END node.

The RunnableConfig can be used to pass in runtime configuration.
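The relationship between the two execution styles can be illustrated without the library: streaming exposes the state after every step, while invoking returns only the last one. The sketch below models steps as plain functions over a String state; ExecutionSketch and its stream and invoke methods are hypothetical and only mirror the names used above, not the real signatures or return types.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Illustrative only: models the two execution styles, not CompiledGraph's real methods.
class ExecutionSketch {

    // "Stream" flavor: the state after every step, in execution order.
    static List<String> stream(String initial, List<UnaryOperator<String>> steps) {
        List<String> states = new ArrayList<>();
        String state = initial;
        for (UnaryOperator<String> step : steps) {
            state = step.apply(state);
            states.add(state);
        }
        return states;
    }

    // "Invoke" flavor: only the final state (the initial one if there are no steps).
    static String invoke(String initial, List<UnaryOperator<String>> steps) {
        List<String> states = stream(initial, steps);
        return states.isEmpty() ? initial : states.get(states.size() - 1);
    }

    public static void main(String[] args) {
        List<UnaryOperator<String>> steps = List.of(
            state -> state + " -> greeter",
            state -> state + " -> responder");
        stream("start", steps).forEach(System.out::println); // one line per completed step
        System.out.println(invoke("start", steps));          // final state only
    }
}
```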

LangGraph4j Studio is an embeddable web application for visualizing and experimenting with graphs.

To explore LangGraph4j Studio, go to studio.

As a default use case to prove the LangChain4j integration, we have implemented AgentExecutor (aka ReACT Agent) using LangGraph4j. In the project's module, you can find the complete working code with tests. Feel free to check it out and use it as a reference.

Below is a usage example of the AgentExecutor.

public class TestTool {

    @Tool("tool for test AI agent executor")
    String execTest(@P("test message") String message) {
        return format( "test tool ('%s') executed with result 'OK'", message);
    }

    @Tool("return current number of system thread allocated by application")
    int threadCount() {
        return Thread.getAllStackTraces().size();
    }
}

var model = OllamaChatModel.builder()
    .modelName( "qwen2.5:7b" )
    .baseUrl("http://localhost:11434")
    .supportedCapabilities(Capability.RESPONSE_FORMAT_JSON_SCHEMA)
    .logRequests(true)
    .logResponses(true)
    .maxRetries(2)
    .temperature(0.0)
    .build();

var agent = AgentExecutor.builder()
    .chatModel(model)
    .toolsFromObject(new TestTool())
    .build()
    .compile();

for (var item : agent.stream( Map.of( "messages", "perform test twice and return number of current active threads" ) ) ) {
    System.out.println( item );
}

As a default use case to prove the Spring AI integration, we have implemented AgentExecutor (aka ReACT Agent) using LangGraph4j. In the project's module, you can find the complete working code with tests. Feel free to check it out and use it as a reference.

Below is a usage example of the AgentExecutor.

public class TestTool {

    @Tool(description = "tool for test AI agent executor")
    String execTest( @ToolParam(description ="test message") String message ) {
        return format( "test tool ('%s') executed with result 'OK'", message);
    }

    @Tool(description = "return current number of system thread allocated by application")
    int threadCount() {
        return Thread.getAllStackTraces().size();
    }
}

var model = OllamaChatModel.builder()
    .ollamaApi(OllamaApi.builder().baseUrl("http://localhost:11434").build())
    .defaultOptions(OllamaOptions.builder()
        .model("qwen2.5:7b")
        .temperature(0.1)
        .build())
    .build();

var agent = AgentExecutor.builder()
    .chatModel(model)
    .toolsFromObject(new TestTool())
    .build()
    .compile();

for (var item : agent.stream( Map.of( "messages", "perform test twice and return number of current active threads" ) ) ) {
    System.out.println( item );
}

LangGraph4j is packed with features to build sophisticated agentic applications:

- Asynchronous Operations: Nodes and edges can be asynchronous (returning CompletableFuture), allowing for non-blocking I/O operations, especially when dealing with LLM calls.

- Streaming: Natively supports streaming responses from LLMs through nodes, enabling real-time output. See how-tos/llm-streaming.ipynb.

- Checkpoints (Persistence & Time Travel): Save and load the state of your graph. This allows you to resume long-running tasks, debug by inspecting intermediate states, and even "time travel" to previous states. See how-tos/persistence.ipynb and how-tos/time-travel.ipynb.

- Graph Visualization: Generate PlantUML or Mermaid diagrams of your graph to visualize its structure, which aids in understanding and debugging. See how-tos/plantuml.ipynb.

- Playground & Studio: LangGraph4j comes with an embeddable web UI (Studio) that allows you to visualize, run, and interact with your graphs in real-time. This is excellent for development and debugging.

- Child Graphs (Subgraphs): Compose complex graphs by nesting smaller, reusable graphs within nodes of a parent graph. This promotes modularity and reusability. See how-tos/subgraph-as-nodeaction.ipynb, how-tos/subgraph-as-compiledgraph.ipynb, and how-tos/subgraph-as-stategraph.ipynb.

- Parallel Execution: Configure parts of your graph to execute multiple nodes in parallel, improving performance for tasks that can be run concurrently. See how-tos/parallel-branch.ipynb.

- Threads (Multi-turn Conversations): Manage distinct, parallel execution threads within a single graph instance, each with its own checkpoint history. This is vital for handling multiple user sessions or conversations simultaneously.
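The parallel-execution idea from the list above (fan out to several branches, then join their results) can be sketched with CompletableFuture from the JDK. This is an illustration of the general pattern only; ParallelSketch and runBranches are hypothetical names, and the sketch makes no claim about how LangGraph4j schedules parallel nodes.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.function.Supplier;

// Illustrative only: the fan-out/join pattern, not LangGraph4j's parallel branch support.
class ParallelSketch {

    // Starts every branch asynchronously, then waits for all of them.
    // Results are returned in the order the branches were given, not completion order.
    static List<String> runBranches(List<Supplier<String>> branches) {
        List<CompletableFuture<String>> futures = branches.stream()
            .map(branch -> CompletableFuture.supplyAsync(branch))
            .toList();
        return futures.stream()
            .map(CompletableFuture::join)
            .toList();
    }

    public static void main(String[] args) {
        List<Supplier<String>> branches = List.of(
            () -> "result of branch A",
            () -> "result of branch B");
        System.out.println(runBranches(branches));
    }
}
```

Collecting the branch results into a list mirrors the appender-style reducer described earlier: each branch contributes a value, and the merge step accumulates them instead of letting one overwrite another.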

Now that you have a basic understanding of LangGraph4j, here's how you can continue your journey:

- Explore the how-tos: The how-tos/ directory in the repository contains Jupyter notebooks (runnable with Java kernels like IJava) that demonstrate various features with code examples.

- Study the Examples: Check out the examples repository (https://github.com/langgraph4j/langgraph4j-examples) for more complete application examples, including integrations with Langchain4j and Spring AI.

- Consult the Javadocs: For detailed information on classes and methods, refer to the API documentation (Javadocs). (Link might need updating if official project documentation site changes)

- Experiment! The best way to learn is by doing. Try modifying the examples or building your own simple graphs.

We hope this guide helps you get started with LangGraph4j. Happy building!

- LangGraph4j Meets AG-UI - Building UI/UX in AI Agents era

- LangGraph4j - Implementing Human-in-the-Loop at ease

- LangGraph4j - Multi-Agent handoff implementation with Spring AI

- Microsoft JDConf 2025 - AI Agents Graph: Your following tool in your Java AI journey

- Enhancing AI Agent Development: A Hands-On Weekend with LangGraph4J

- LangGraph4j Generator - Visually scaffold LangGraph Java code

- AI Agent in Java with LangGraph4j

- Building Stateful Multi AI Agents -LangGraph4J & Spring AI

- A deep research assistant based on the Langgraph4j

- research4j - Build your own perplexity for your applications

- Multi Agent Banking Assistant with Java using Langraph4j

- Java Async Generator, a Java version of Javascript async generator

- AIDEEPIN: Ai-based productivity tools (Chat,Draw,RAG,Workflow etc)

- Dynamo Multi AI Agent POC: Unlock the Power of Spring AI and LangGraph4J

- LangChain4j & LangGraph4j Integrate LangFuse


Similar Open Source Tools


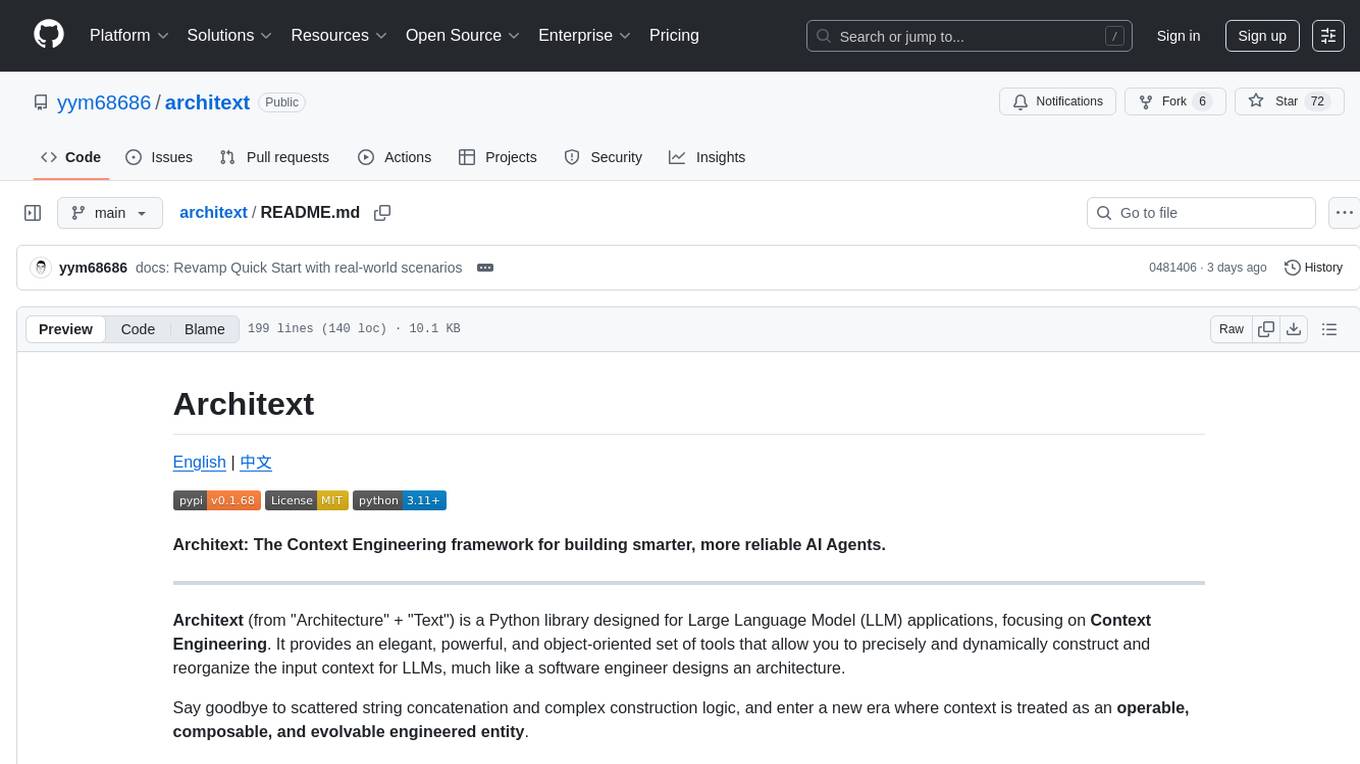

architext

Architext is a Python library designed for Large Language Model (LLM) applications, focusing on Context Engineering. It provides tools to construct and reorganize input context for LLMs dynamically. The library aims to elevate context construction from ad-hoc to systematic engineering, enabling precise manipulation of context content for AI Agents.

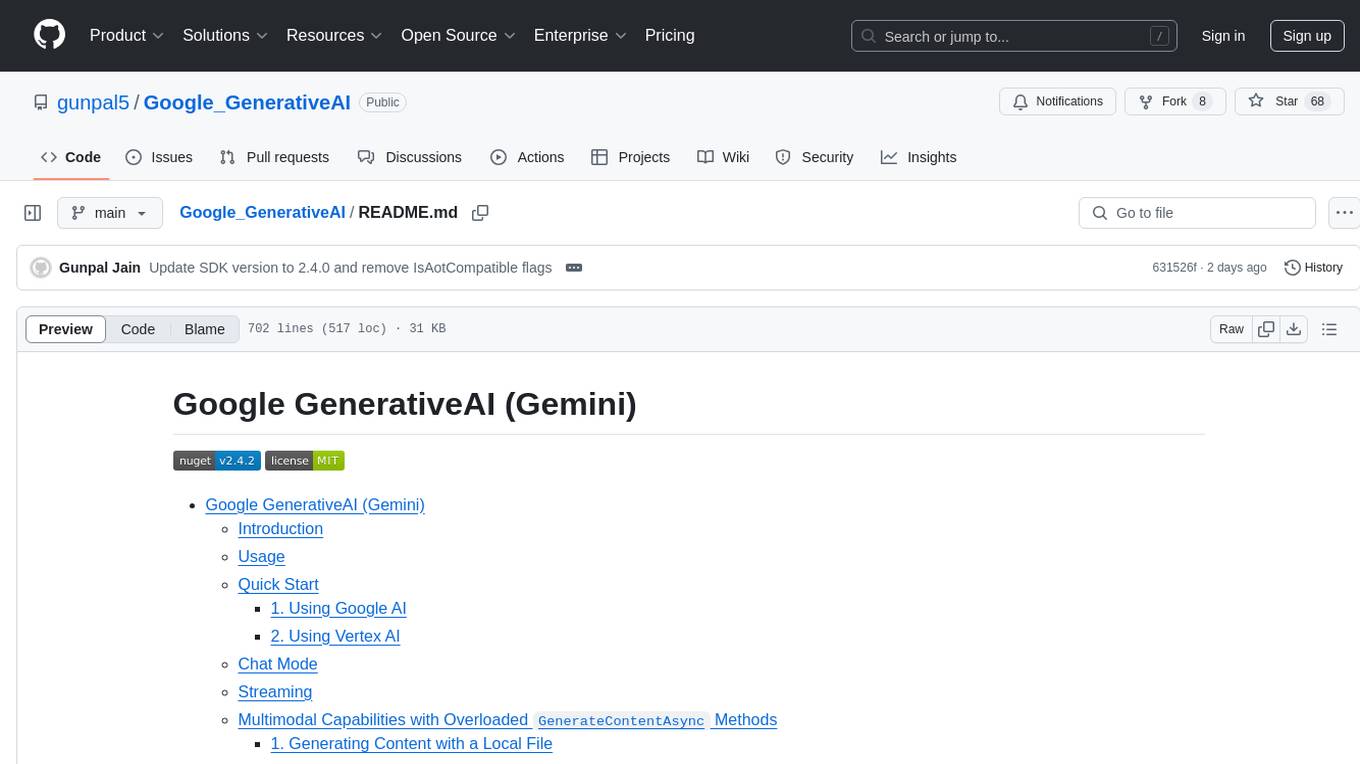

Google_GenerativeAI

Google GenerativeAI (Gemini) is an unofficial C# .Net SDK based on REST APIs for accessing Google Gemini models. It offers a complete rewrite of the previous SDK with improved performance, flexibility, and ease of use. The SDK seamlessly integrates with LangChain.net, providing easy methods for JSON-based interactions and function calling with Google Gemini models. It includes features like enhanced JSON mode handling, function calling with code generator, multi-modal functionality, Vertex AI support, multimodal live API, image generation and captioning, retrieval-augmented generation with Vertex RAG Engine and Google AQA, easy JSON handling, Gemini tools and function calling, multimodal live API, and more.

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

Memori

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

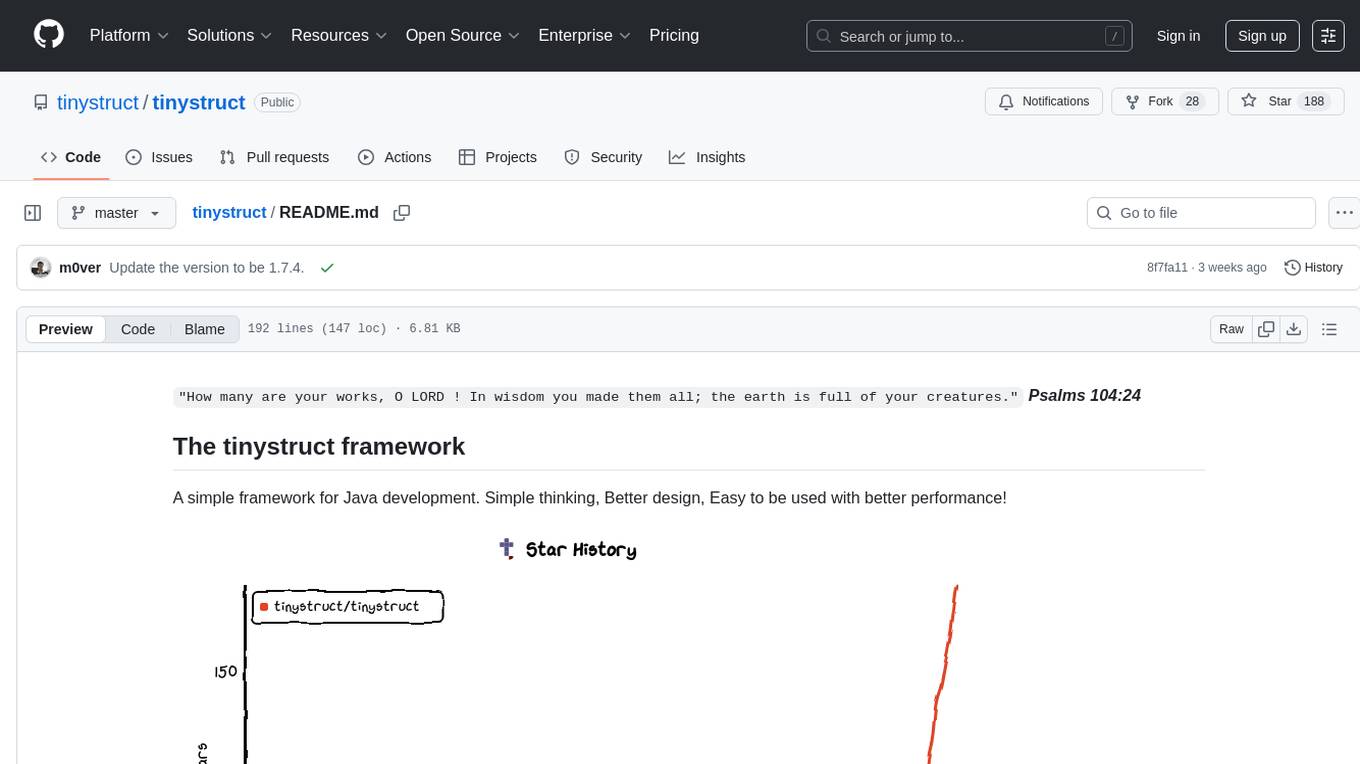

tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

web-llm

WebLLM is a modular and customizable JavaScript package that brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with the OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. This opens up many opportunities to build AI assistants for everyone and to preserve privacy while enjoying GPU acceleration.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

playword

PlayWord is a tool designed to supercharge web test automation experience with AI. It provides core features such as enabling browser operations and validations using natural language inputs, as well as monitoring interface to record and dry-run test steps. PlayWord supports multiple AI services including Anthropic, Google, and OpenAI, allowing users to select the appropriate provider based on their requirements. The tool also offers features like assertion handling, frame handling, custom variables, test recordings, and an Observer module to track user interactions on web pages. With PlayWord, users can interact with web pages using natural language commands, reducing the need to worry about element locators and providing AI-powered adaptation to UI changes.

aioreactive

Aioreactive is a Python library that brings ReactiveX functionality to asyncio using async and await. It is built on the Expression functional library and aims to provide a simple, clean, and async-based approach to reactive programming in Python. The library supports Python 3.10+ and focuses on using plain old functions for operators, running on the asyncio event loop, and providing implicit synchronous back-pressure for event processing.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200 languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMo, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more, not only to Python and R but also to the JVM ecosystem (Java, Scala, and Kotlin), at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It has successfully used LLMs to generate valid fuzz targets (those that yield a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% over the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.