evolving-agents

A toolkit for agent autonomy, evolution, and governance. Create agents that can understand requirements, evolve through experience, communicate effectively, and build new agents and tools - all while operating within governance guardrails.

Stars: 403

A toolkit for agent autonomy, evolution, and governance enabling agents to learn from experience, collaborate, communicate, and build new tools within governance guardrails. It focuses on autonomous evolution, agent self-discovery, governance firmware, self-building systems, and agent-centric architecture. The toolkit leverages existing frameworks to enable agent autonomy and self-governance, moving towards truly autonomous AI systems.

README:

Build intelligent AI agent ecosystems that orchestrate complex tasks based on high-level goals.

This toolkit provides a robust, production-grade framework for building autonomous AI agents and multi-agent systems. It uniquely focuses on enabling agents to understand requirements, design solutions (potentially using specialized design agents like ArchitectZero), discover capabilities, evolve components, and orchestrate complex task execution based on high-level goals, all while operating within defined governance boundaries.

- Goal-Oriented Orchestration: A central `SystemAgent` acts as the primary entry point, taking high-level goals and autonomously determining the steps needed, orchestrating component creation, communication, and execution.
- Intelligent Solution Design (Optional): Agents like `ArchitectZero` can analyze requirements and design detailed multi-component solutions, providing blueprints for the `SystemAgent`.
- Internal Workflow Management: For complex tasks, the `SystemAgent` can internally generate, process, and execute multi-step workflow plans using specialized tools (`GenerateWorkflowTool`, `ProcessWorkflowTool`), abstracting this complexity from the caller.
- Semantic Capability Discovery: The `SmartAgentBus` allows agents to find and utilize capabilities based on natural language descriptions, enabling dynamic service discovery and routing via a logical "Data Bus".
- Ecosystem Management: The `SmartAgentBus` also provides a logical "System Bus" for managing agent registration, health, and discovery.
- Intelligent Component Management: The `SmartLibrary` provides persistent storage, semantic search (via vector embeddings), versioning, and evolution capabilities for agents and tools.
- Adaptive Evolution: Components can be evolved based on requirements, feedback, or performance data using various strategies (standard, conservative, aggressive, domain adaptation), orchestrated by the `SystemAgent`.
- Multi-Framework Support: Seamlessly integrate agents built with different frameworks (e.g., BeeAI, OpenAI Agents SDK) through a flexible provider architecture.
- Governance & Safety: Built-in `Firmware` injects rules, and guardrails (like the `OpenAIGuardrailsAdapter`) ensure safe and compliant operation.
- Self-Building Potential: The architecture allows agents (like `ArchitectZero` and `SystemAgent`) to collaboratively design and implement new agent systems based on user needs.

While many frameworks focus on building individual agents, the Evolving Agents Toolkit focuses on creating intelligent, self-improving agent ecosystems capable of handling complex tasks autonomously. Key differentiators include:

- High-Level Goal Execution: Interact with the system via goals given to the `SystemAgent`, which then handles the "how" (planning, component management, execution).
- Internal Orchestration: Complex workflows (design -> generate -> process -> execute) are managed internally by the `SystemAgent`, abstracting the mechanics.
- Semantic Capability Network: `SmartAgentBus` creates a dynamic network where agents discover and interact based on function, not fixed names, via capability requests.
- Deep Component Lifecycle Management: Beyond creation, the `SmartLibrary` and evolution tools support searching, reusing, versioning, and adapting components intelligently.
- Agent-Driven Ecosystem: The `SystemAgent` isn't just a script runner; it's a `ReActAgent` using its own tools to manage the entire process, including complex workflow execution when needed.
- True Multi-Framework Integration: Provides abstractions (`Providers`, `AgentFactory`) to treat agents from different SDKs as first-class citizens.

The SystemAgent acts as the central nervous system and primary entry point. It's a ReActAgent equipped with specialized tools to manage the entire ecosystem. It receives high-level goals and autonomously plans and executes the necessary steps.

Example: Prompting the SystemAgent with a high-level goal

# Define the high-level task for the System Agent

invoice_content = "..." # Load or define the invoice text here

high_level_prompt = f"""

**Goal:** Accurately process the provided invoice document and return structured, verified data.

**Functional Requirements:**

- Extract key fields: Invoice #, Date, Vendor, Bill To, Line Items (Description, Quantity, Unit Price, Item Total), Subtotal, Tax Amount, Shipping (if present), Total Due, Payment Terms, Due Date.

- Verify calculations: The sum of line item totals should match the Subtotal. The sum of Subtotal, Tax Amount, and Shipping (if present) must match the Total Due. Report any discrepancies.

**Non-Functional Requirements:**

- High accuracy is critical.

- Output must be a single, valid JSON object containing the extracted data and a 'verification' section (status: 'ok'/'failed', discrepancies: list).

**Input Data:**{invoice_content}

**Action:** Achieve this goal using the best approach available. Create, evolve, or reuse components as needed. Return ONLY the final JSON result.

"""

# Execute the task via the SystemAgent

final_result_obj = await system_agent.run(high_level_prompt)

# Process the final result (assuming extract_json_from_response is defined elsewhere)

# final_json_result = extract_json_from_response(final_result_obj.result.text)

# print(final_json_result)
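The commented-out lines above assume an `extract_json_from_response` helper defined elsewhere. As a minimal sketch of what such a helper might look like (this is not the project's implementation), the block below pulls the first JSON object out of free-form response text and then re-checks the arithmetic that the prompt asks for. The field names (`line_items`, `item_total`, `subtotal`, `tax_amount`, `shipping`, `total_due`) and the `verify_totals` function are assumptions made for illustration.

```python
import json
from decimal import Decimal


def extract_json_from_response(text: str) -> dict:
    """Return the first JSON object embedded in a response (hypothetical helper)."""
    decoder = json.JSONDecoder()
    for start, char in enumerate(text):
        if char != "{":
            continue
        try:
            obj, _ = decoder.raw_decode(text[start:])
        except json.JSONDecodeError:
            continue  # not valid JSON from this brace; try the next one
        if isinstance(obj, dict):
            return obj
    raise ValueError("no JSON object found in response text")


def verify_totals(invoice: dict) -> dict:
    """Re-check the sums described in the prompt (field names are assumed)."""
    def money(value) -> Decimal:
        return Decimal(str(value))

    discrepancies = []
    line_sum = sum((money(item["item_total"]) for item in invoice["line_items"]), Decimal("0"))
    if line_sum != money(invoice["subtotal"]):
        discrepancies.append(f"line items sum to {line_sum}, subtotal is {invoice['subtotal']}")
    expected_total = (
        money(invoice["subtotal"])
        + money(invoice["tax_amount"])
        + money(invoice.get("shipping", 0))
    )
    if expected_total != money(invoice["total_due"]):
        discrepancies.append(f"expected total {expected_total}, total due is {invoice['total_due']}")
    return {"status": "ok" if not discrepancies else "failed", "discrepancies": discrepancies}


response_text = (
    "Here is the result:\n"
    '{"line_items": [{"item_total": 10.5}, {"item_total": 4.5}], '
    '"subtotal": 15.0, "tax_amount": 1.5, "total_due": 16.5}'
)
invoice = extract_json_from_response(response_text)
report = verify_totals(invoice)
```

Running the same check on the caller's side is optional, but it gives an independent confirmation of the `verification` section the agent is asked to return.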

SystemAgent Internal Process (Conceptual):

When the SystemAgent receives the high_level_prompt, its internal ReAct loop orchestrates the following:

- Receives the high-level goal and input data.
- Analyzes the goal using its reasoning capabilities.
- Uses Tools (`SearchComponentTool`, `DiscoverAgentTool`) to find existing capabilities suitable for the task.
- Decides If Workflow Needed: If the task is complex or no single component suffices, it determines a multi-step plan is necessary.
  - (Optional) It might internally request a detailed design blueprint from `ArchitectZero` using `RequestAgentTool`.
  - It uses `GenerateWorkflowTool` internally to create an executable YAML workflow based on the design or its analysis.
  - It uses `ProcessWorkflowTool` internally to parse the YAML into a step-by-step execution plan.
- Executes the Plan: It iterates through the plan, using appropriate tools for each step:
  - `CreateComponentTool` or `EvolveComponentTool` for `DEFINE` steps.
  - Internal `AgentFactory` calls during component creation.
  - `RequestAgentTool` for `EXECUTE` steps, invoking other agents/tools via the `SmartAgentBus`.
- Returns Result: It returns the final result specified by the plan's `RETURN` step (or the result of a direct action if no complex workflow was needed).

(This internal complexity is hidden from the user interacting with the SystemAgent).
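The plan-execution step described above can be pictured as a small dispatch loop. The sketch below is only an illustration under stated assumptions: the plan format (a list of dicts with `type`, `name`, `input`, `output`, and `value` keys) and the three handler functions are hypothetical stand-ins for the real tools, and nothing here calls the toolkit itself.

```python
from typing import Any, Callable


def define_step(step: dict, context: dict) -> Any:
    # Stand-in for CreateComponentTool / EvolveComponentTool on a DEFINE step
    context["components"][step["name"]] = "defined"
    return step["name"]


def create_step(step: dict, context: dict) -> Any:
    # Stand-in for instantiating the component (the AgentFactory's role)
    context["components"][step["name"]] = "created"
    return step["name"]


def execute_step(step: dict, context: dict) -> Any:
    # Stand-in for RequestAgentTool; a real system would route via the bus
    if context["components"].get(step["name"]) != "created":
        raise RuntimeError(f"{step['name']} has not been created")
    return {"handled_by": step["name"], "input": step.get("input")}


HANDLERS: dict[str, Callable[[dict, dict], Any]] = {
    "DEFINE": define_step,
    "CREATE": create_step,
    "EXECUTE": execute_step,
}


def run_plan(plan: list[dict]) -> Any:
    """Walk the plan in order and return the value named by the RETURN step."""
    context: dict = {"components": {}, "results": {}}
    for step in plan:
        if step["type"] == "RETURN":
            return context["results"][step["value"]]
        handler = HANDLERS.get(step["type"])
        if handler is None:
            raise ValueError(f"unknown step type: {step['type']!r}")
        result = handler(step, context)
        if "output" in step:
            context["results"][step["output"]] = result  # keep for later steps
    return None


plan = [
    {"type": "DEFINE", "name": "InvoiceExtractor"},
    {"type": "CREATE", "name": "InvoiceExtractor"},
    {"type": "EXECUTE", "name": "InvoiceExtractor", "input": "invoice text", "output": "extracted"},
    {"type": "RETURN", "value": "extracted"},
]
result = run_plan(plan)
```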

ArchitectZero is a specialized agent, typically invoked by the SystemAgent via the Agent Bus when a complex task requires a detailed plan before execution.

# Conceptual: SystemAgent requesting design from ArchitectZero via AgentBus

# This happens INTERNALLY within the SystemAgent's ReAct loop if needed.

design_request_prompt = f"""

Use RequestAgentTool to ask ArchitectZero to design an automated customer support system.

Requirements: Handle FAQs, escalate complex issues, use sentiment analysis.

Input for ArchitectZero: {{ "requirements": "Design customer support system..." }}

"""

# system_agent_internal_response = await system_agent.run(design_request_prompt)

# solution_design_json = extract_json_from_response(...)

# >> SystemAgent now has the design to proceed internally.

Note: End users typically interact with the SystemAgent directly with their goal, not ArchitectZero.

The SmartLibrary stores agents, tools, and firmware definitions, and enables semantic search and lifecycle management.
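To make threshold-based semantic search concrete, here is a toy sketch. It assumes a word-count vector as a stand-in for real embeddings and an in-memory list as a stand-in for the vector store, so it only illustrates the filter-then-rank idea. The record fields (`name`, `record_type`, `domain`, `description`) and this standalone `semantic_search` function are assumptions, not the library's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_search(records, query, record_type=None, domain=None, threshold=0.0):
    """Filter by metadata, score by similarity, keep hits at or above the threshold."""
    query_vec = embed(query)
    hits = []
    for record in records:
        if record_type and record["record_type"] != record_type:
            continue
        if domain and record["domain"] != domain:
            continue
        score = cosine(query_vec, embed(record["description"]))
        if score >= threshold:
            hits.append((record, score))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


records = [
    {"name": "CalcValidator", "record_type": "TOOL", "domain": "finance",
     "description": "Validate financial calculations in documents"},
    {"name": "SentimentAnalyzer", "record_type": "TOOL", "domain": "support",
     "description": "Analyze text sentiment"},
    {"name": "InvoiceAgent", "record_type": "AGENT", "domain": "finance",
     "description": "Process invoice documents"},
]
hits = semantic_search(
    records,
    query="Tool that can validate financial calculations in documents",
    record_type="TOOL",
    domain="finance",
    threshold=0.6,
)
```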

# Semantic component discovery (often used internally by SystemAgent)

similar_tools = await smart_library.semantic_search(

query="Tool that can validate financial calculations in documents",

record_type="TOOL",

domain="finance",

threshold=0.6

)

# Evolve an existing component (often invoked by SystemAgent's EvolveComponentTool)

evolved_record = await smart_library.evolve_record(

parent_id="tool_id_abc",

new_code_snippet="# New improved Python code...",

description="Enhanced version with better error handling"

)

The SmartAgentBus enables dynamic, capability-based communication ("Data Bus") and provides system management functions ("System Bus").
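The split between registration (System Bus) and capability requests (Data Bus) can be sketched with a small in-memory class. This is an illustration under assumptions: `ToyAgentBus`, its `handler` argument, and the string-similarity score from `difflib` are all hypothetical, and the real bus is described as matching capabilities semantically.

```python
from difflib import SequenceMatcher


class ToyAgentBus:
    """In-memory stand-in for a capability bus (not the toolkit's class)."""

    def __init__(self):
        self._agents = {}

    def register_agent(self, name, capabilities, handler):
        # "System Bus" side: record who offers which capabilities
        self._agents[name] = {"capabilities": capabilities, "handler": handler}

    def request_capability(self, capability, content, min_confidence=0.5):
        # "Data Bus" side: find the best-matching provider and invoke it
        best_name, best_score = None, 0.0
        for name, agent in self._agents.items():
            for cap in agent["capabilities"]:
                score = SequenceMatcher(None, capability.lower(), cap["id"].lower()).ratio()
                if score > best_score:
                    best_name, best_score = name, score
        if best_name is None or best_score < min_confidence:
            raise LookupError(f"no provider for {capability!r} at confidence {min_confidence}")
        handler = self._agents[best_name]["handler"]
        return {"agent_name": best_name, "confidence": best_score, "content": handler(content)}


bus = ToyAgentBus()
bus.register_agent(
    "SentimentAnalyzerTool_v2",
    [{"id": "sentiment_analysis", "name": "Sentiment Analysis"}],
    handler=lambda content: {
        "sentiment": "positive" if "amazing" in content["text"].lower() else "neutral"
    },
)
bus.register_agent(
    "InvoiceExtractor",
    [{"id": "invoice_extraction", "name": "Invoice Extraction"}],
    handler=lambda content: {"fields": {}},
)
payload = bus.request_capability(
    "sentiment_analysis", {"text": "This service is amazing!"}, min_confidence=0.8
)
```

The caller names a capability rather than an agent, so a provider can be replaced by an evolved version without changing the request.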

# Register a component (System Bus operation, often via SystemAgent's tool)

await agent_bus.register_agent(

name="SentimentAnalyzerTool_v2",

agent_type="TOOL",

description="Analyzes text sentiment with high accuracy",

capabilities=[{ "id": "sentiment_analysis", "name": "Sentiment Analysis", ... }]

)

# Request a service based on capability (Data Bus operation, often via SystemAgent's RequestAgentTool)

result_payload = await agent_bus.request_capability(

capability="sentiment_analysis",

content={"text": "This service is amazing!"},

min_confidence=0.8

)

# >> result_payload might contain: {'agent_id': '...', 'agent_name': 'SentimentAnalyzerTool_v2', 'content': {'sentiment': 'positive', 'score': 0.95}, ...}

Complex tasks are handled via a structured workflow lifecycle orchestrated internally by the SystemAgent.

1. Goal Intake: `SystemAgent` receives a high-level goal from the user/caller.
2. Analysis & Planning (Internal): `SystemAgent` analyzes the goal and checks if existing components suffice.
3. Design Query (Optional/Internal): If needed, `SystemAgent` requests a solution design (JSON) from `ArchitectZero` via the Agent Bus.
4. Workflow Generation (Internal): If a multi-step plan is required, `SystemAgent` uses its `GenerateWorkflowTool` to translate the design (or its internal analysis) into an executable YAML workflow string.
5. Plan Processing (Internal): `SystemAgent` uses its `ProcessWorkflowTool` to parse the YAML, validate, substitute parameters, and produce a structured execution plan.
6. Plan Execution (Internal): `SystemAgent`'s ReAct loop iterates through the plan, using its other tools (`CreateComponentTool`, `EvolveComponentTool`, `RequestAgentTool`, etc.) to perform the action defined in each step (`DEFINE`, `CREATE`, `EXECUTE`).
7. Result Return: `SystemAgent` returns the final result to the caller.

The external caller interacts only at Step 1 and receives the result at Step 7, unaware of the internal workflow mechanics.
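The plan-processing step (validate, substitute parameters, produce a structured plan) can be sketched as follows. The workflow is assumed to be already loaded into Python data, as a YAML parser would produce; the `steps` layout, the `${name}` placeholder syntax, and the `process_workflow` function are assumptions for illustration rather than the actual workflow schema.

```python
from string import Template

ALLOWED_STEP_TYPES = {"DEFINE", "CREATE", "EXECUTE", "RETURN"}


def process_workflow(workflow: dict, params: dict) -> list[dict]:
    """Validate each step and fill in ${param} placeholders (hypothetical format)."""
    plan = []
    for index, step in enumerate(workflow.get("steps", []), start=1):
        step_type = step.get("type")
        if step_type not in ALLOWED_STEP_TYPES:
            raise ValueError(f"step {index}: unknown type {step_type!r}")
        # substitute() raises KeyError when a placeholder has no matching parameter
        resolved = {
            key: Template(value).substitute(params) if isinstance(value, str) else value
            for key, value in step.items()
        }
        plan.append({"step": index, **resolved})
    return plan


workflow = {
    "steps": [
        {"type": "DEFINE", "name": "InvoiceExtractor"},
        {"type": "EXECUTE", "name": "InvoiceExtractor", "input": "${invoice_text}"},
        {"type": "RETURN", "value": "extracted"},
    ]
}
plan = process_workflow(workflow, {"invoice_text": "Invoice #123"})
```

Failing early on an unknown step type or a missing parameter keeps a malformed workflow from being partially executed.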

Existing components can be adapted or improved using the EvolveComponentTool (typically invoked internally by SystemAgent).

# Conceptual Example (within the SystemAgent's internal operation)

evolve_prompt = f"""

Use EvolveComponentTool to enhance agent 'id_123'.

Changes needed: Add support for processing PDF files directly.

Strategy: standard

"""

# evolve_result = await system_agent.run(evolve_prompt)

# >> evolve_result indicates success and provides ID of the new evolved agent version.

Integrate agents/tools from different SDKs via Providers managed by the AgentFactory (used internally by tools like CreateComponentTool).
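The provider idea can be illustrated with a small dispatch pattern: a factory asks each provider whether it supports a record's framework and delegates creation to the first match. Everything below is a hypothetical sketch; the `framework` record field, the stub classes, and `ToyAgentFactory` are assumptions and do not reproduce the toolkit's classes.

```python
from typing import Protocol


class Provider(Protocol):
    def supports(self, framework: str) -> bool: ...
    def create_agent(self, record: dict) -> str: ...


class BeeAIProviderStub:
    def supports(self, framework: str) -> bool:
        return framework == "beeai"

    def create_agent(self, record: dict) -> str:
        return f"BeeAI agent for {record['name']}"


class OpenAIAgentsProviderStub:
    def supports(self, framework: str) -> bool:
        return framework == "openai-agents"

    def create_agent(self, record: dict) -> str:
        return f"OpenAI agent for {record['name']}"


class ToyAgentFactory:
    """Picks the first provider that supports the record's framework."""

    def __init__(self, providers: list[Provider]):
        self._providers = list(providers)

    def create_agent(self, record: dict) -> str:
        framework = record.get("framework", "")
        for provider in self._providers:
            if provider.supports(framework):
                return provider.create_agent(record)
        raise ValueError(f"no provider registered for framework {framework!r}")


factory = ToyAgentFactory([BeeAIProviderStub(), OpenAIAgentsProviderStub()])
bee_agent = factory.create_agent({"name": "BeeAgentName", "framework": "beeai"})
openai_agent = factory.create_agent({"name": "OpenAIAgentName", "framework": "openai-agents"})
```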

# Example: Creating agents from different frameworks via AgentFactory

# (AgentFactory is usually used internally by tools like CreateComponentTool)

# bee_record = await smart_library.find_record_by_name("BeeAgentName")

# openai_record = await smart_library.find_record_by_name("OpenAIAgentName")

# if bee_record:

#     bee_agent_instance = await agent_factory.create_agent(bee_record)

#     # >> Uses BeeAIProvider internally

# if openai_record:

#     openai_agent_instance = await agent_factory.create_agent(openai_record)

#     # >> Uses OpenAIAgentsProvider internally

Safety and operational rules are embedded via Firmware.

- `Firmware` provides base rules + domain-specific constraints.
- Prompts used by `CreateComponentTool`/`EvolveComponentTool` include firmware content.
- `OpenAIGuardrailsAdapter` converts firmware rules into runtime checks for OpenAI agents.
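The two mechanisms listed above (rules injected into prompts, and rules enforced as runtime checks) might be sketched like this. The rule texts, the function names, and the secret-detecting pattern are invented for illustration and are not the project's firmware content.

```python
import re

# Hypothetical rule sets; the real firmware text is not reproduced here
BASE_RULES = [
    "Never expose secrets or credentials.",
    "Document every generated component.",
]
DOMAIN_RULES = {
    "finance": ["Verify all monetary calculations before returning results."],
}

# Assumed pattern for an API-key-like string, used as an example runtime check
SECRET_PATTERN = re.compile(r"sk-[A-Za-z0-9]{8,}")


def firmware_prompt(domain=None) -> str:
    """Combine base rules with any domain-specific constraints."""
    rules = BASE_RULES + DOMAIN_RULES.get(domain, [])
    return "\n".join(f"- {rule}" for rule in rules)


def build_creation_prompt(requirements: str, domain=None) -> str:
    """Prepend firmware rules to a component-creation request."""
    return f"FIRMWARE RULES:\n{firmware_prompt(domain)}\n\nREQUIREMENTS:\n{requirements}"


def passes_guardrail(output: str) -> bool:
    """Runtime check: reject output that appears to contain a secret."""
    return SECRET_PATTERN.search(output) is None


prompt = build_creation_prompt("Create an invoice total checker.", domain="finance")
```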

# Recommended: Create a virtual environment

python -m venv venv

source venv/bin/activate # On Windows use `venv\Scripts\activate`

# Install from PyPI (when available)

# pip install evolving-agents-framework

# Or install from source

git clone https://github.com/matiasmolinas/evolving-agents.git

cd evolving-agents

pip install -r requirements.txt

pip install -e . # Install in editable mode

- Set up Environment:
  - Copy `.env.example` to `.env`.
  - Add your `OPENAI_API_KEY` to the `.env` file.
  - Configure other settings like `LLM_MODEL` if needed.
- Run the Comprehensive Demo: This demo initializes the framework and gives the `SystemAgent` a high-level goal to process an invoice, requiring design, component creation/evolution, and execution orchestrated internally. Run it with `python examples/invoice_processing/architect_zero_comprehensive_demo.py`.
- Explore Output: Check the generated files:
  - `final_processing_output.json`: Contains the final structured result from the SystemAgent executing the task, along with the agent's full output log for debugging.
  - `smart_library_demo.json`: The state of the component library after the run (shows created/evolved components).
  - `smart_agent_bus_demo.json`: The agent registry state.
  - `agent_bus_logs_demo.json`: Logs of agent interactions via the bus.
  - (Optional Debug) `architect_design_output.json`: The demo still saves the design blueprint generated internally by ArchitectZero for inspection.

- Explore the `examples/` directory:
  - `invoice_processing/architect_zero_comprehensive_demo.py`: The flagship demo showing the `SystemAgent` handling a complex invoice processing task based on a high-level goal, orchestrating design, generation, and execution internally.
  - `agent_evolution/`: Demonstrates creating and evolving agents/tools using both BeeAI and OpenAI frameworks.
  - `forms/`: Shows how the system can design and process conversational forms.
  - `autocomplete/`: Illustrates designing a context-aware autocomplete system.
  - (Add more examples as they are created)

The toolkit employs an agent-centric architecture. The SystemAgent (a ReAct agent) is the main orchestrator, taking high-level goals. It leverages specialized tools to interact with core components like the SmartLibrary (for component persistence and semantic search via ChromaDB) and the SmartAgentBus (for capability-based routing and system management). For complex tasks, it internally manages the full workflow lifecycle, potentially requesting designs from agents like ArchitectZero and using internal tools to generate, process, and execute plans. Multi-framework support is achieved through Providers and Adapters. Dependencies are managed via a DependencyContainer.

For a detailed breakdown, see docs/ARCHITECTURE.md.

- LLM Caching: Reduces API costs during development by caching completions and embeddings (`.llm_cache_demo/`).
- Vector Search: Integrated ChromaDB for powerful semantic discovery of components.

- Modular Design: Core components are decoupled, facilitating extension and testing.

- Dependency Injection: Simplifies component wiring and initialization.

- Clear Logging: Provides insights into agent thinking and component interactions via bus logs and standard logging.
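As an illustration of the caching idea in the list above, the sketch below stores each completion on disk under a hash of the model name and prompt, so a repeated request never reaches the model. The `CompletionCache` class, its file layout, and the `fake_llm` function are assumptions for demonstration and do not describe how the toolkit's own cache is implemented.

```python
import hashlib
import json
import tempfile
from pathlib import Path


class CompletionCache:
    """Disk cache keyed by a hash of (model, prompt); a hypothetical design."""

    def __init__(self, cache_dir):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def _path(self, model: str, prompt: str) -> Path:
        key = hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()
        return self.cache_dir / f"{key}.json"

    def get_or_compute(self, model: str, prompt: str, compute):
        path = self._path(model, prompt)
        if path.exists():
            # Cache hit: skip the (expensive) model call entirely
            return json.loads(path.read_text(encoding="utf-8"))["completion"]
        completion = compute(prompt)
        path.write_text(json.dumps({"completion": completion}), encoding="utf-8")
        return completion


calls = []


def fake_llm(prompt: str) -> str:
    # Stand-in for a real API call; records each invocation
    calls.append(prompt)
    return prompt.upper()


with tempfile.TemporaryDirectory() as tmp:
    cache = CompletionCache(tmp)
    first = cache.get_or_compute("demo-model", "hello", fake_llm)
    second = cache.get_or_compute("demo-model", "hello", fake_llm)
```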

This project is licensed under the Apache License Version 2.0.

- BeeAI Framework: Used for the core `ReActAgent` implementation and tool structures.
- OpenAI Agents SDK: Integrated via providers for multi-framework support.
- ChromaDB: Powers semantic search capabilities in the `SmartLibrary` and `SmartAgentBus`.
- Original Concept Contributors: Matias Molinas and Ismael Faro


Alternative AI tools for evolving-agents

Similar Open Source Tools


sieves

sieves is a library for zero- and few-shot NLP tasks with structured generation, enabling rapid prototyping of NLP applications without the need for training. It simplifies NLP prototyping by bundling capabilities into a single library, providing zero- and few-shot model support, a unified interface for structured generation, built-in tasks for common NLP operations, easy extendability, document-based pipeline architecture, caching to prevent redundant model calls, and more. The tool draws inspiration from spaCy and spacy-llm, offering features like immediate inference, observable pipelines, integrated tools for document parsing and text chunking, ready-to-use tasks such as classification, summarization, translation, and more, persistence for saving and loading pipelines, distillation for specialized model creation, and caching to optimize performance.

hound

Hound is a security audit automation pipeline for AI-assisted code review that mirrors how expert auditors think, learn, and collaborate. It features graph-driven analysis, sessionized audits, provider-agnostic models, belief system and hypotheses, precise code grounding, and adaptive planning. The system employs a senior/junior auditor pattern where the Scout actively navigates the codebase and annotates knowledge graphs while the Strategist handles high-level planning and vulnerability analysis. Hound is optimized for small-to-medium sized projects like smart contract applications and is language-agnostic.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

LLMBox

LLMBox is a comprehensive library designed for implementing Large Language Models (LLMs) with a focus on a unified training pipeline and comprehensive model evaluation. It serves as a one-stop solution for training and utilizing LLMs, offering flexibility and efficiency in both training and utilization stages. The library supports diverse training strategies, comprehensive datasets, tokenizer vocabulary merging, data construction strategies, parameter efficient fine-tuning, and efficient training methods. For utilization, LLMBox provides comprehensive evaluation on various datasets, in-context learning strategies, chain-of-thought evaluation, evaluation methods, prefix caching for faster inference, support for specific LLM models like vLLM and Flash Attention, and quantization options. The tool is suitable for researchers and developers working with LLMs for natural language processing tasks.

maiar-ai

MAIAR is a composable, plugin-based AI agent framework designed to abstract data ingestion, decision-making, and action execution into modular plugins. It enables developers to define triggers and actions as standalone plugins, while the core runtime handles decision-making dynamically. This framework offers extensibility, composability, and model-driven behavior, allowing seamless addition of new functionality. MAIAR's architecture is influenced by Unix pipes, ensuring highly composable plugins, dynamic execution pipelines, and transparent debugging. It remains declarative and extensible, allowing developers to build complex AI workflows without rigid architectures.

llm-consortium

LLM Consortium is a plugin for the `llm` package that implements a model consortium system with iterative refinement and response synthesis. It orchestrates multiple learned language models to collaboratively solve complex problems through structured dialogue, evaluation, and arbitration. The tool supports multi-model orchestration, iterative refinement, advanced arbitration, database logging, configurable parameters, hundreds of models, and the ability to save and load consortium configurations.

metta

Metta AI is an open-source research project focusing on the emergence of cooperation and alignment in multi-agent AI systems. It explores the impact of social dynamics like kinship and mate selection on learning and cooperative behaviors of AI agents. The project introduces a reward-sharing mechanism mimicking familial bonds and mate selection to observe the evolution of complex social behaviors among AI agents. Metta aims to contribute to the discussion on safe and beneficial AGI by creating an environment where AI agents can develop general intelligence through continuous learning and adaptation.

Autono

A highly robust autonomous agent framework based on the ReAct paradigm, designed for adaptive decision making and multi-agent collaboration. It dynamically generates next actions during agent execution, enhancing robustness. Features a timely abandonment strategy and memory transfer mechanism for multi-agent collaboration. The framework allows developers to balance conservative and exploratory tendencies in agent execution strategies, improving adaptability and task execution efficiency in complex environments. Supports external tool integration, modular design, and MCP protocol compatibility for flexible action space expansion. Multi-agent collaboration mechanism enables agents to focus on specific task components, improving execution efficiency and quality.

langgraph4j

Langgraph4j is a Java library for building stateful, multi-agent applications with LLMs. It is inspired by LangGraph and designed to work with Langchain4j.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

emigo

Emigo is an AI-powered development tool for Emacs that integrates large language models to interact with projects, read files, write code, execute commands, and more. It acts as an agentic AI assistant, leveraging tool use to enhance development workflows within Emacs. Emigo is actively developed, offering features like agentic tool use, Emacs integration, flexible LLM support, and context-aware interactions. Users can install Emigo with Python dependencies and configure it within Emacs for seamless integration. The tool's core strength lies in its agentic tool use, where the AI analyzes requests, selects appropriate tools, executes actions, and provides feedback, enabling users to accomplish complex tasks efficiently.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

For similar tasks

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

searchGPT

searchGPT is an open-source project that aims to build a search engine based on Large Language Model (LLM) technology to provide natural language answers. It supports web search with real-time results, file content search, and semantic search from sources like the Internet. The tool integrates LLM technologies such as OpenAI and GooseAI, and offers an easy-to-use frontend user interface. The project is designed to provide grounded answers by referencing real-time factual information, addressing the limitations of LLM's training data. Contributions, especially from frontend developers, are welcome under the MIT License.

LLMs-at-DoD

This repository contains tutorials for using Large Language Models (LLMs) in the U.S. Department of Defense. The tutorials utilize open-source frameworks and LLMs, allowing users to run them in their own cloud environments. The repository is maintained by the Defense Digital Service and welcomes contributions from users.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

EAGLE

Eagle is a family of Vision-Centric High-Resolution Multimodal LLMs that enhance multimodal LLM perception using a mix of vision encoders and various input resolutions. The model features a channel-concatenation-based fusion for vision experts with different architectures and knowledge, supporting up to over 1K input resolution. It excels in resolution-sensitive tasks like optical character recognition and document understanding.

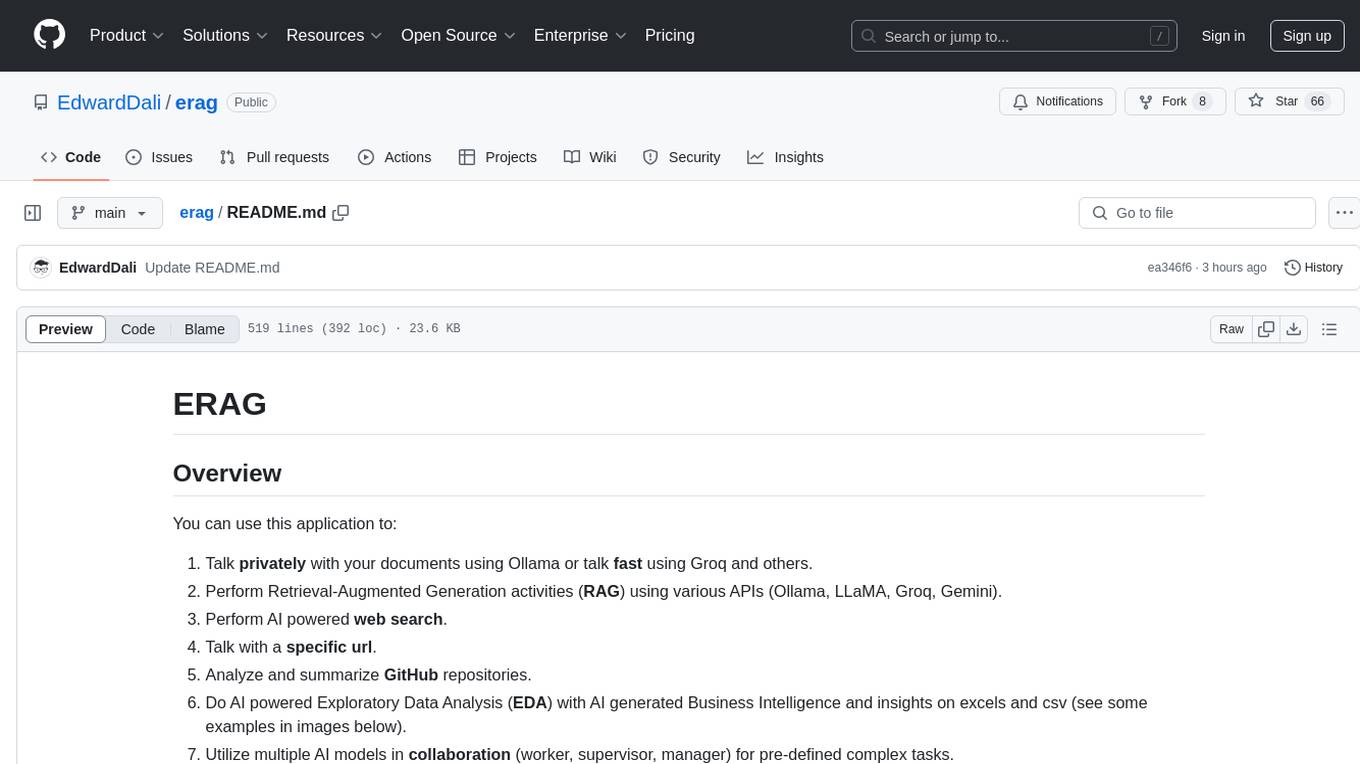

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.