Autono

A ReAct-Based Highly Robust Autonomous Agent Framework

Stars: 191

A highly robust autonomous agent framework based on the ReAct paradigm, designed for adaptive decision making and multi-agent collaboration. It dynamically generates next actions during agent execution, enhancing robustness. Features a timely abandonment strategy and memory transfer mechanism for multi-agent collaboration. The framework allows developers to balance conservative and exploratory tendencies in agent execution strategies, improving adaptability and task execution efficiency in complex environments. Supports external tool integration, modular design, and MCP protocol compatibility for flexible action space expansion. Multi-agent collaboration mechanism enables agents to focus on specific task components, improving execution efficiency and quality.

README:

A ReAct Based Highly Robust Autonomous Agent Framework.

MCP is now supported. See the Integration with MCP section for how to use McpAgent.

This project proposes a highly robust autonomous agent framework based on the ReAct paradigm, designed to solve complex tasks through adaptive decision making and multi-agent collaboration. Unlike traditional frameworks that rely on fixed workflows generated by LLM-based planners, it generates each next action dynamically during execution, based on the prior trajectory, which makes it more robust.

Adaptive execution paths may fail to terminate, so I propose a timely abandonment strategy that incorporates a probabilistic penalty mechanism. The strategy dynamically adjusts the probability of abandoning a task, and developers can tune its hyperparameters to balance conservative and exploratory tendencies in agent execution. This improves adaptability and task execution efficiency in complex environments.

For multi-agent collaboration, I introduce a memory transfer mechanism that gives agents shared, dynamically updated memory. Through explicit division of labor, each agent can focus on a specific component of the task, which improves execution efficiency and quality. Agents can also be extended with external tools: the modular design and MCP protocol compatibility allow the action space to be expanded flexibly.
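The timely abandonment idea can be illustrated with a small, self-contained sketch. It is not Autono's implementation: the function names, the parameters `base_p` and `penalty`, and the geometric growth rule are all assumptions chosen for illustration. The sketch only shows how a penalty multiplier could raise the abandonment probability as steps accumulate, and how tuning it shifts an agent between conservative and exploratory behavior.

```python
import random


def abandon_probability(step: int, base_p: float, penalty: float) -> float:
    """Hypothetical rule: the probability grows geometrically with the step count, capped at 1."""
    return min(1.0, base_p * (penalty ** step))


def steps_before_abandon(base_p: float, penalty: float, max_steps: int, rng: random.Random) -> int:
    """Simulate one run and return the step at which the agent gives up (or max_steps)."""
    for step in range(max_steps):
        if rng.random() < abandon_probability(step, base_p, penalty):
            return step
    return max_steps


def mean_steps(base_p: float, penalty: float, runs: int = 2000, seed: int = 0) -> float:
    """Average number of steps taken before abandonment over many simulated runs."""
    rng = random.Random(seed)
    total = sum(steps_before_abandon(base_p, penalty, 50, rng) for _ in range(runs))
    return total / runs


if __name__ == "__main__":
    # Assumed mapping: a larger penalty behaves more cautiously, a smaller one explores longer.
    print("conservative:", mean_steps(base_p=0.02, penalty=1.5))
    print("exploratory: ", mean_steps(base_p=0.02, penalty=1.1))
```

In this toy model, raising `penalty` makes the simulated agent give up sooner on average, while lowering it lets the agent keep trying for more steps.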

The experimental results demonstrate that the autono framework significantly outperforms autogen and langchain in handling tasks of varying complexity, especially in multi-step tasks with possible failures.

| Framework | Version | Model | one-step-task | multi-step-task | multi-step-task-with-possible-failure |
|---|---|---|---|---|---|
| autono | 1.0.0 | gpt-4o-mini | 96.7% | 100% | 76.7% |
| autono | 1.0.0 | qwen-plus | 100% | 96.7% | 93.3% |
| autono | 1.0.0 | deepseek-v3 | 100% | 100% | 93.3% |
| autogen | 0.4.9.2 | gpt-4o-mini | 90% | 53.3% | 3.3% |
| autogen | 0.4.9.2 | qwen-plus | 90% | 0% | 3.3% |
| autogen | 0.4.9.2 | deepseek-v3 | N/A | N/A | N/A |
| langchain | 0.3.21 | gpt-4o-mini | 73.3% | 13.3% | 10% |
| langchain | 0.3.21 | qwen-plus | 73.3% | 13.3% | 13.3% |
| langchain | 0.3.21 | deepseek-v3 | 76.7% | 13.3% | 6.7% |

- one-step-task: Tasks that can be completed with a single tool call.
- multi-step-task: Tasks that require multiple tool calls to complete, with no possibility of tool failure.
- multi-step-task-with-possible-failure: Tasks that require multiple tool calls to complete, where tools may fail, requiring the agent to retry and correct errors.

The deepseek-v3 model is not supported by `autogen-agentchat==0.4.9.2`.
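To make the third task category concrete, here is a minimal sketch of a tool that may fail and a caller that retries it. It is an illustration only and not the benchmark code: `FlakyTool`, its `fail_first` parameter, and `call_with_retry` are hypothetical names.

```python
class FlakyTool:
    """Hypothetical tool that raises on its first `fail_first` calls, then succeeds."""

    def __init__(self, fail_first: int):
        self.fail_first = fail_first
        self.calls = 0

    def __call__(self, x: int) -> int:
        self.calls += 1
        if self.calls <= self.fail_first:
            raise RuntimeError(f"tool failed on call {self.calls}")
        return x * 2


def call_with_retry(tool, arg, max_attempts: int = 3):
    """Retry the tool until it succeeds or the attempt budget is exhausted."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return tool(arg)
        except RuntimeError as err:
            last_error = err  # an agent would feed this error back into its next decision
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error


if __name__ == "__main__":
    tool = FlakyTool(fail_first=2)
    print(call_with_retry(tool, 21), "after", tool.calls, "calls")
```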

You can reproduce my experiments here.

If you are incorporating the autono framework into your research, please remember to properly cite it to acknowledge its contribution to your work.


    @software{Wu_Autono_2025,
      author = {Wu, Zihao},
      license = {GPL-3.0},
      month = apr,
      title = {{Autono}},
      url = {https://github.com/vortezwohl/Autono},
      version = {1.0.0},
      year = {2025}
    }

- From PyPI

      pip install -U autono

- From GitHub

  Get access to unreleased features.

      pip install git+https://github.com/vortezwohl/Autono.git

To start building your own agent, follow the steps listed.

- Set the environment variable `OPENAI_API_KEY`.

      # .env
      OPENAI_API_KEY=sk-...

- Import the required dependencies.

  - `Agent` lets you instantiate an agent.
  - `Personality` is an enumeration class used for customizing the personalities of agents.
    - `Personality.PRUDENT` makes the agent's behavior more cautious.
    - `Personality.INQUISITIVE` encourages the agent to be more proactive in trying and exploring.
  - `get_openai_model` gives you a `BaseChatModel` as the thought engine.
  - `@ability(brain: BaseChatModel, cache: bool = True, cache_dir: str = '')` is a decorator that lets you declare a function as an `Ability`.
  - `@agentic(agent: Agent)` is a decorator that lets you declare a function as an `AgenticAbility`.

  The import statement is:

      from autono import (
          Agent,
          Personality,
          get_openai_model,
          ability,
          agentic
      )

- Declare functions as basic abilities.

      from sympy import simplify  # assumption: `simplify` comes from SymPy


      @ability
      def calculator(expr: str) -> float:
          # this function only accepts a single math expression
          return simplify(expr)


      @ability
      def write_file(filename: str, content: str) -> str:
          # write the content to the file and report what was written
          with open(filename, 'w', encoding='utf-8') as f:
              f.write(content)
          return f'{content} written to {filename}.'
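The `calculator` body above relies on `simplify`. If only plain arithmetic is needed, the function body could instead be sketched with the standard-library `ast` module, which avoids `eval`. This is a swapped-in technique rather than anything Autono provides: `safe_calc` is a hypothetical name, and the `@ability` decorator is omitted so the snippet runs without `autono` installed.

```python
import ast
import operator

# Supported operators; any other syntax is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_calc(expr: str) -> float:
    """Evaluate a single arithmetic expression without calling eval()."""

    def _eval(node: ast.AST):
        # Plain int/float literals only (bool and str constants are rejected).
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")

    return float(_eval(ast.parse(expr, mode="eval").body))


if __name__ == "__main__":
    print(safe_calc("4 * 3.14159 * 9.5 ** 2"))
```

Unlike a symbolic simplifier, this variant rejects names, function calls, and anything else that is not a numeric literal or one of the listed operators.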

- Instantiate an agent.

  You can grant abilities to agents while instantiating them.

      model = get_openai_model()
      agent = Agent(abilities=[calculator, write_file], brain=model, name='Autono', personality=Personality.INQUISITIVE)

  - You can also grant more abilities to agents later:

        agent.grant_ability(calculator)

    or

        agent.grant_abilities([calculator])

  - To deprive an agent of abilities:

        agent.deprive_ability(calculator)

    or

        agent.deprive_abilities([calculator])

  You can change an agent's personality using the method `change_personality(personality: Personality)`:

      agent.change_personality(Personality.PRUDENT)

- Assign a request to your agent.

      agent.assign("Here is a sphere with radius of 9.5 cm and pi here is 3.14159, find the area and volume respectively then write the results into a file called 'result.txt'.")

- Leave the rest to your agent.

      response = agent.just_do_it()
      print(response)
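For reference, the arithmetic the agent is asked to perform in the request above can be checked independently. The sketch below uses the standard sphere formulas, A = 4πr² and V = (4/3)πr³, with the values given in the request. `sphere_area` and `sphere_volume` are illustrative helper names and are not part of Autono.

```python
PI = 3.14159      # value of pi fixed by the request
RADIUS_CM = 9.5   # radius given in the request


def sphere_area(r: float, pi: float = PI) -> float:
    """Surface area of a sphere: 4 * pi * r^2."""
    return 4 * pi * r ** 2


def sphere_volume(r: float, pi: float = PI) -> float:
    """Volume of a sphere: (4/3) * pi * r^3."""
    return 4 / 3 * pi * r ** 3


if __name__ == "__main__":
    print(f"area   = {sphere_area(RADIUS_CM):.2f} cm^2")
    print(f"volume = {sphere_volume(RADIUS_CM):.2f} cm^3")
```

With these inputs the area is about 1134.11 cm² and the volume about 3591.36 cm³, which gives a baseline for checking what the agent writes to `result.txt`.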

`autono` also supports multi-agent collaboration. Declare a function as an agent-calling ability with `@agentic(agent: Agent)`, then grant it to an agent. See example.

Integration with MCP

I provide McpAgent to support tool calls based on the MCP protocol. Below is a brief guide to integrating McpAgent with mcp.stdio_client:

- Import the required dependencies.

  - `McpAgent` allows you to instantiate an agent capable of accessing MCP tools.
  - `StdioMcpConfig` is an alias for `mcp.client.stdio.StdioServerParameters` and serves as the MCP server connection configuration.
  - `@mcp_session(mcp_config: StdioMcpConfig)` allows you to declare a function as an MCP session.
  - `sync_call` allows you to call a coroutine function synchronously.

  The import statement is:

      from autono import (
          McpAgent,
          get_openai_model,
          StdioMcpConfig,
          mcp_session,
          sync_call
      )

- Create an MCP session.

  - To connect to a stdio-based MCP server, use `StdioMcpConfig`.

        mcp_config = StdioMcpConfig(
            command='python',
            args=['./my_stdio_mcp_server.py'],
            env=dict(),
            cwd='./mcp_servers'
        )

    A function decorated with `@mcp_session` receives an MCP session instance as its first parameter. A function can be decorated with multiple `@mcp_session` decorators to access sessions for different MCP servers.

        @sync_call
        @mcp_session(mcp_config)
        async def run(session, request: str) -> str:
            ...

  - To connect via HTTP to an SSE-based MCP server, just provide the URL.

        @sync_call
        @mcp_session('http://localhost:8000/sse')
        async def run(session, request: str) -> str:
            ...

  - To connect via WebSocket to a WS-based MCP server, provide the URL.

        @sync_call
        @mcp_session('ws://localhost:8000/message')
        async def run(session, request: str) -> str:
            ...
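The decorator stacking above can be easier to follow with a stand-alone sketch of the pattern. The code below is not Autono's implementation and does not use the MCP SDK: `FakeSession`, `with_session`, and `sync_call_sketch` are hypothetical stand-ins that only show how one decorator could inject a session as the first parameter while another runs the coroutine to completion with `asyncio.run`.

```python
import asyncio
import functools


class FakeSession:
    """Stand-in for an MCP session; a real one would talk to a server."""

    def __init__(self, target: str):
        self.target = target


def with_session(target: str):
    """Hypothetical session decorator: creates a session and passes it as the first argument."""

    def decorator(func):
        @functools.wraps(func)
        async def wrapper(*args, **kwargs):
            session = FakeSession(target)  # a real decorator would connect and clean up here
            return await func(session, *args, **kwargs)

        return wrapper

    return decorator


def sync_call_sketch(func):
    """Hypothetical sync wrapper: runs the coroutine function to completion with asyncio.run."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        return asyncio.run(func(*args, **kwargs))

    return wrapper


@sync_call_sketch
@with_session("ws://localhost:8000/message")
async def run(session: FakeSession, request: str) -> str:
    await asyncio.sleep(0)  # placeholder for awaiting an agent
    return f"{request} via {session.target}"


if __name__ == "__main__":
    print(run(request="What can you do?"))
```

The outer decorator is what turns `run` into an ordinary blocking function, which is why it can be called without `await` from a script's entry point.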

- Create an `McpAgent` instance within the MCP session.

  After creating the `McpAgent`, you need to call the `fetch_abilities()` method to retrieve tool configurations from the MCP server.

      @sync_call
      @mcp_session(mcp_config)
      async def run(session, request: str) -> str:
          mcp_agent = await McpAgent(session=session, brain=get_openai_model()).fetch_abilities()
          ...

- Assign tasks to the `McpAgent` instance and await the execution result.

      @sync_call
      @mcp_session(mcp_config)
      async def run(session, request: str) -> str:
          mcp_agent = await McpAgent(session=session, brain=get_openai_model()).fetch_abilities()
          result = await mcp_agent.assign(request).just_do_it()
          return result.conclusion

- Call the function.

      if __name__ == '__main__':
          ret = run(request='What can you do?')
          print(ret)

I also provide the complete MCP agent test script. See example.

To make the working process of agents observable, I provide two hooks, namely BeforeActionTaken and AfterActionTaken.

They allow you to observe and intervene in the decision-making and execution results of each step of the agent's actions.

The BeforeActionTaken hook lets you obtain and modify the agent's decision for the next action, while AfterActionTaken lets you obtain and modify the execution result of an action (the modified result becomes part of the agent's memory).

To start using hooks, follow the steps listed.

- Bring in hooks and messages from `autono`.

      from autono.brain.hook import BeforeActionTaken, AfterActionTaken
      from autono.message import BeforeActionTakenMessage, AfterActionTakenMessage

- Declare functions and encapsulate them as hooks.

      def before_action_taken(agent: Agent, message: BeforeActionTakenMessage):
          print(f'Agent: {agent.name}, Next move: {message}')
          return message

      def after_action_taken(agent: Agent, message: AfterActionTakenMessage):
          print(f'Agent: {agent.name}, Action taken: {message}')
          return message

      before_action_taken_hook = BeforeActionTaken(before_action_taken)
      after_action_taken_hook = AfterActionTaken(after_action_taken)

  In these two hook functions, you intercept the message, print the information it carries, and then return the message unaltered to the agent. You can also modify the information in the message to intervene in the agent's working process.

- Use hooks during the agent's working process.

      agent.assign(...).just_do_it(before_action_taken_hook, after_action_taken_hook)
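To show what intervening through a hook means in practice, here is a toy execution loop written without `autono`. It is a sketch under stated assumptions, not the framework's internals: `Message`, `run_steps`, and `redact` are hypothetical names, and the loop only demonstrates that whatever a hook returns replaces the original message.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Message:
    """Hypothetical stand-in for the before/after action messages."""
    ability: str
    payload: str


Hook = Callable[[str, Message], Message]


def run_steps(agent_name: str, plan: List[Message],
              before: Optional[Hook] = None,
              after: Optional[Hook] = None) -> List[Message]:
    """Toy loop: each hook may return a modified message, which replaces the original."""
    memory = []
    for decision in plan:
        if before is not None:
            decision = before(agent_name, decision)  # hook can rewrite the next move
        result = Message(decision.ability, f"done: {decision.payload}")
        if after is not None:
            result = after(agent_name, result)  # the modified result is what gets remembered
        memory.append(result)
    return memory


def redact(agent_name: str, message: Message) -> Message:
    # Intervene by replacing the payload instead of returning the message unaltered.
    return replace(message, payload="[redacted]")


if __name__ == "__main__":
    print(run_steps("Autono", [Message("write_file", "secret.txt")], after=redact))
```

Returning the message unchanged, as in the logging hooks above, leaves the run untouched. Returning a modified copy changes what the loop records.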


Alternative AI tools for Autono

Similar Open Source Tools


py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

verifiers

Verifiers is a library of modular components for creating RL environments and training LLM agents. It includes an async GRPO implementation built around the `transformers` Trainer, is supported by `prime-rl` for large-scale FSDP training, and can easily be integrated into any RL framework which exposes an OpenAI-compatible inference client. The library provides tools for creating and evaluating RL environments, training LLM agents, and leveraging OpenAI-compatible models for various tasks. Verifiers aims to be a reliable toolkit for building on top of, minimizing fork proliferation in the RL infrastructure ecosystem.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

crb

CRB (Composable Runtime Blocks) is a unique framework that implements hybrid workloads by seamlessly combining synchronous and asynchronous activities, state machines, routines, the actor model, and supervisors. It is ideal for building massive applications and serves as a low-level framework for creating custom frameworks, such as AI-agents. The core idea is to ensure high compatibility among all blocks, enabling significant code reuse. The framework allows for the implementation of algorithms with complex branching, making it suitable for building large-scale applications or implementing complex workflows, such as AI pipelines. It provides flexibility in defining structures, implementing traits, and managing execution flow, allowing users to create robust and nonlinear algorithms easily.

evolving-agents

A toolkit for agent autonomy, evolution, and governance enabling agents to learn from experience, collaborate, communicate, and build new tools within governance guardrails. It focuses on autonomous evolution, agent self-discovery, governance firmware, self-building systems, and agent-centric architecture. The toolkit leverages existing frameworks to enable agent autonomy and self-governance, moving towards truly autonomous AI systems.

Hurley-AI

Hurley AI is a next-gen framework for developing intelligent agents through Retrieval-Augmented Generation. It enables easy creation of custom AI assistants and agents, supports various agent types, and includes pre-built tools for domains like finance and legal. Hurley AI integrates with LLM inference services and provides observability with Arize Phoenix. Users can create Hurley RAG tools with a single line of code and customize agents with specific instructions. The tool also offers various helper functions to connect with Hurley RAG and search tools, along with pre-built tools for tasks like summarizing text, rephrasing text, understanding memecoins, and querying databases.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

auto-playwright

Auto Playwright is a tool that allows users to run Playwright tests using AI. It eliminates the need for selectors by determining actions at runtime based on plain-text instructions. Users can automate complex scenarios, write tests concurrently with or before functionality development, and benefit from rapid test creation. The tool supports various Playwright actions and offers additional options for debugging and customization. It uses HTML sanitization to reduce costs and improve text quality when interacting with the OpenAI API.

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

LayerSkip

LayerSkip is an implementation enabling early exit inference and self-speculative decoding. It provides a code base for running models trained using the LayerSkip recipe, offering speedup through self-speculative decoding. The tool integrates with Hugging Face transformers and provides checkpoints for various LLMs. Users can generate tokens, benchmark on datasets, evaluate tasks, and sweep over hyperparameters to optimize inference speed. The tool also includes correctness verification scripts and Docker setup instructions. Additionally, other implementations like gpt-fast and Native HuggingFace are available. Training implementation is a work-in-progress, and contributions are welcome under the CC BY-NC license.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

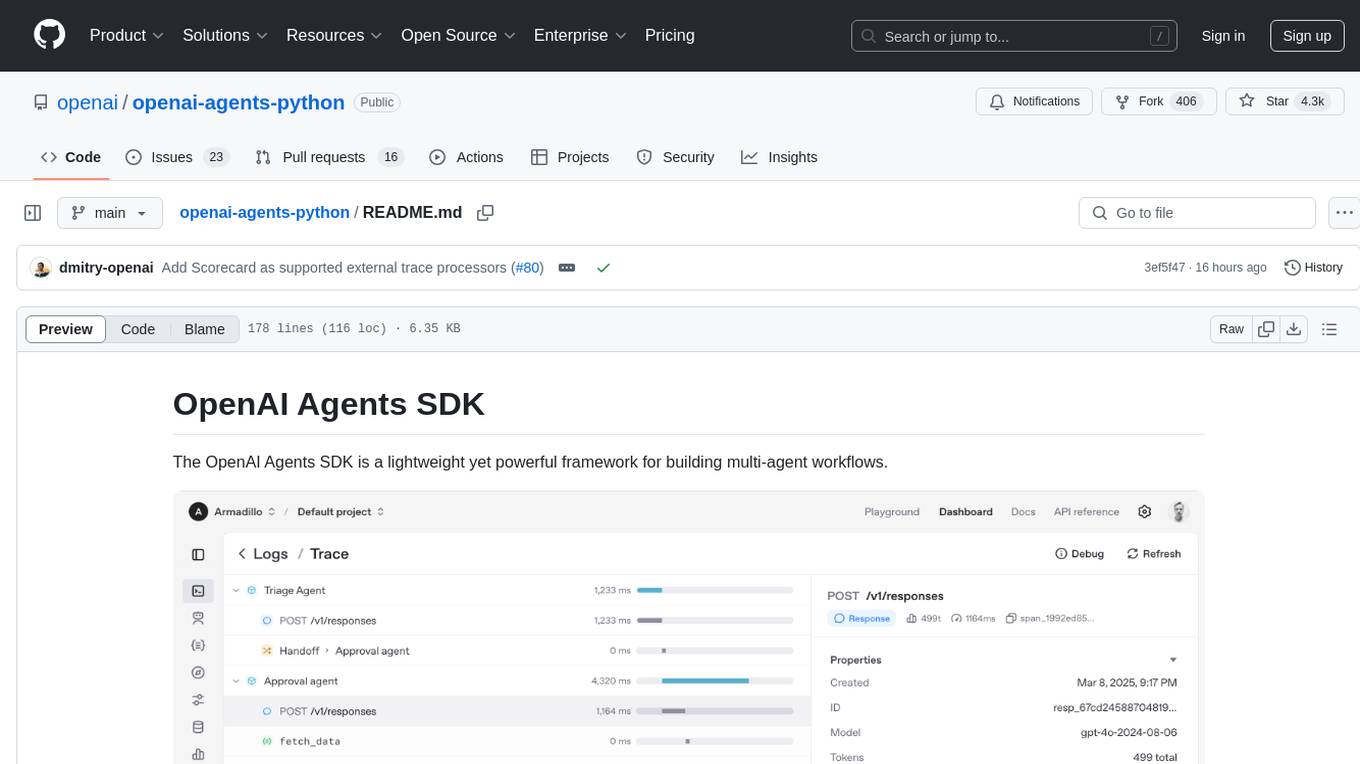

openai-agents-python

The OpenAI Agents SDK is a lightweight framework for building multi-agent workflows. It includes concepts like Agents, Handoffs, Guardrails, and Tracing to facilitate the creation and management of agents. The SDK is compatible with any model providers supporting the OpenAI Chat Completions API format. It offers flexibility in modeling various LLM workflows and provides automatic tracing for easy tracking and debugging of agent behavior. The SDK is designed for developers to create deterministic flows, iterative loops, and more complex workflows.

SpeziLLM

The Spezi LLM Swift Package includes modules that help integrate LLM-related functionality in applications. It provides tools for local LLM execution, usage of remote OpenAI-based LLMs, and LLMs running on Fog node resources within the local network. The package contains targets like SpeziLLM, SpeziLLMLocal, SpeziLLMLocalDownload, SpeziLLMOpenAI, and SpeziLLMFog for different LLM functionalities. Users can configure and interact with local LLMs, OpenAI LLMs, and Fog LLMs using the provided APIs and platforms within the Spezi ecosystem.

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

For similar tasks


Pichome

PicHome is a powerful open-source cloud storage program that efficiently manages various types of files and excels in image and media file management. Its highlights include robust file sharing features and advanced AI-assisted management tools, providing users with a convenient and intelligent file management experience. The program offers diverse list modes, customizable file information display, enhanced quick file preview, advanced tagging, custom cover and preview images, multiple preview images, and multi-library management. Additionally, PicHome features strong file sharing capabilities, allowing users to share entire libraries, create personalized showcase web pages, and build complete data sharing websites. The AI-assisted management aspect includes AI file renaming, tagging, description writing, batch annotation, and file Q&A services, all aimed at improving file management efficiency. PicHome supports a wide range of file formats and can be applied in various scenarios such as e-commerce, gaming, design, development, enterprises, schools, labs, media, and entertainment institutions.

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

LLMSpeculativeSampling

This repository implements speculative sampling for large language model (LLM) decoding, utilizing two models - a target model and an approximation model. The approximation model generates token guesses, corrected by the target model, resulting in improved efficiency. It includes implementations of Google's and Deepmind's versions of speculative sampling, supporting models like llama-7B and llama-1B. The tool is designed for fast inference from transformers via speculative decoding.

AI-Studio

MindWork AI Studio is a desktop application that provides a unified chat interface for Large Language Models (LLMs). It is free to use for personal and commercial purposes, offers independence in choosing LLM providers, provides unrestricted usage through the providers API, and is cost-effective with pay-as-you-go pricing. The app prioritizes privacy, flexibility, minimal storage and memory usage, and low impact on system resources. Users can support the project through monthly contributions or one-time donations, with opportunities for companies to sponsor the project for public relations and marketing benefits. Planned features include support for more LLM providers, system prompts integration, text replacement for privacy, and advanced interactions tailored for various use cases.

mastering-github-copilot-for-dotnet-csharp-developers

Enhance coding efficiency with expert-led GitHub Copilot course for C#/.NET developers. Learn to integrate AI-powered coding assistance, automate testing, and boost collaboration using Visual Studio Code and Copilot Chat. From autocompletion to unit testing, cover essential techniques for cleaner, faster, smarter code.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.