Hurley-AI

The next-gen framework powering intelligent agent development through Retrieval-Augmented Generation.

Stars: 175

Hurley AI is a next-gen framework for developing intelligent agents through Retrieval-Augmented Generation. It enables easy creation of custom AI assistants and agents, supports various agent types, and includes pre-built tools for domains like finance and legal. Hurley AI integrates with LLM inference services and provides observability with Arize Phoenix. Users can create Hurley RAG tools with a single line of code and customize agents with specific instructions. The tool also offers various helper functions to connect with Hurley RAG and search tools, along with pre-built tools for tasks like summarizing text, rephrasing text, understanding memecoins, and querying databases.

README:

CA:2BCBgzukSPb21FeS1tSTDEM4a1VkNi97NYQMgXhcpump

Hurley AI is the next-gen framework powering intelligent agent development through Retrieval-Augmented Generation.

- Enables easy creation of custom AI assistants and agents.

- Create a Hurley RAG tool or search tool with a single line of code.

- Supports ReAct, OpenAIAgent, LATS and LLMCompiler agent types.

- Includes pre-built tools for various domains (e.g., finance, legal).

- Integrates with various LLM inference services like OpenAI, DeepSeek, Anthropic, Gemini, GROQ, Together.AI, Cohere, Bedrock and Fireworks

- Built-in support for observability with Arize Phoenix

```python
import os

from hurley_agentic.tools import HurleyToolFactory

# Credentials and corpus ID are read from environment variables here.
vec_factory = HurleyToolFactory(
    hurley_api_key=os.environ['HURLEY_API_KEY'],
    hurley_customer_id=os.environ['HURLEY_CUSTOMER_ID'],
    hurley_corpus_id=os.environ['HURLEY_CORPUS_ID'],
)
```

A RAG tool calls the full Hurley RAG pipeline to provide summarized responses to queries grounded in data.

```python
from pydantic import BaseModel, Field

# Years and tickers covered by the corpus; used in the argument descriptions.
years = list(range(2024, 2025))

tickers = {
    "TRUMP": "OFFICIAL TRUMP",
    "VINE": "Vine Coin",
    "PENGU": "Pudgy Penguins",
    "GOAT": "Goatseus Maximus",
}


class QueryMemecoinReportsArgs(BaseModel):
    query: str = Field(..., description="The user query.")
    # The `int | str` union syntax requires Python 3.10+.
    year: int | str = Field(..., description=f"The year this query relates to. An integer between {min(years)} and {max(years)} or a string specifying a condition on the year (example: '>2020').")
    ticker: str = Field(..., description=f"The token ticker. Must be a valid ticker symbol from the list {list(tickers.keys())}.")


query_memecoin_reports_tool = vec_factory.create_rag_tool(
    tool_name="query_memecoin_reports",
    tool_description="Query memecoin reports for a memecoin and date",
    tool_args_schema=QueryMemecoinReportsArgs,
    lambda_val=0.005,
    summary_num_results=7,
    # Additional arguments
)
```

In addition to RAG tools, you can create many other types of tools for the agent to use, such as mathematical tools, tools that call other APIs to get more information, or any other type of tool.

```python
from hurley_agentic import Agent

agent = Agent(
    tools=[query_memecoin_reports_tool],
    topic="10-K memecoin reports",
    custom_instructions="""
- You are a helpful memecoin assistant in conversation with a user. Use your memecoin expertise when crafting a query to the tool, to ensure you get the most accurate information.
- You can answer questions, provide insights, or summarize any information from memecoin reports.
- A user may refer to a memecoin's ticker instead of its full name - consider those the same when a user is asking about a memecoin.
- When calculating a memecoin metric, make sure you have all the information from tools to complete the calculation.
- In many cases you may need to query tools on each sub-metric separately before computing the final metric.
- Report memecoin data in a consistent manner. For example, if you report values in Solana, always report values in Solana.
""",
)

res = agent.chat("How much did the top traders make on $GOAT?")
print(res.response)
```

Note that:

- hurley-agentic also supports achat() and two streaming variants, stream_chat() and astream_chat().

- The response type of chat() and achat() is AgentResponse. If you just need the actual string response, it is available as the response attribute, or you can simply use str(). For advanced use-cases you can look at other AgentResponse attributes such as sources.
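Because achat() is a coroutine, several questions can be sent concurrently with asyncio.gather. The sketch below is a minimal illustration under assumptions: StubAgent and StubResponse are hypothetical stand-ins that only mimic the surface described above (an achat() coroutine returning an object with a response attribute and a str() form), so the example runs without hurley-agentic installed.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class StubResponse:
    """Hypothetical stand-in for AgentResponse: response text plus sources."""
    response: str
    sources: list = field(default_factory=list)

    def __str__(self) -> str:
        return self.response


class StubAgent:
    """Hypothetical stand-in exposing only an achat() coroutine."""

    async def achat(self, prompt: str) -> StubResponse:
        await asyncio.sleep(0)  # yield to the event loop, as real I/O would
        return StubResponse(response=f"answer to: {prompt}")


async def ask_all(agent, prompts: list) -> list:
    """Run achat() for every prompt concurrently; results keep input order."""
    results = await asyncio.gather(*(agent.achat(p) for p in prompts))
    return [str(r) for r in results]
```

With a real agent the call would be asyncio.run(ask_all(agent, ["...", "..."])), assuming its achat() behaves as described. A single agent keeps one conversation memory, so concurrent calls on the same instance may interleave that history; separate agent instances for independent questions avoid the issue.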

hurley-agentic provides two helper functions to connect with Hurley RAG:

- create_rag_tool() to create an agent tool that connects with a Hurley corpus for querying.

- create_search_tool() to create a tool to search a Hurley corpus and return a list of matching documents.

See the documentation for the full list of arguments for create_rag_tool() and create_search_tool() to understand how to configure the Hurley query performed by those tools.

A Hurley RAG tool is often the main workhorse for any Agentic RAG application, and enables the agent to query one or more Hurley RAG corpora.

The generated tool always includes the query argument, followed by one or more optional arguments used for metadata filtering, defined by tool_args_schema.

For example, in the quickstart example the schema is:

```python
class QueryMemecoinReportsArgs(BaseModel):
    query: str = Field(..., description="The user query.")
    year: int | str = Field(..., description=f"The year this query relates to. An integer between {min(years)} and {max(years)} or a string specifying a condition on the year (example: '>2020').")
    ticker: str = Field(..., description=f"The token ticker. Must be a valid ticker symbol from the list {list(tickers.keys())}.")
```

The query is required and is always the query string.

The other arguments are optional and will be interpreted as Hurley metadata filters.

For example, with the schema above, the agent may call the query_memecoin_reports_tool tool with query='how much did the top traders make?', year=2024 and ticker='GOAT'. The RAG tool will then issue a Hurley RAG query with the same query, but with metadata filtering (doc.year=2024 and doc.ticker='GOAT').

There are also additional features supported here:

- An argument can be a condition; for example, year='>2024' translates to the metadata filtering condition doc.year>2024.

- If fixed_filter is defined in the RAG tool, it provides a constant metadata filter that is always applied. For example, if fixed_filter="doc.filing_type='10K'", then a query with query='what is the market cap', year=2024 and ticker='GOAT' would translate into query='what is the market cap' with the metadata filtering condition "doc.year=2024 AND doc.ticker='GOAT' AND doc.filing_type='10K'".

Note that tool_args_type is an optional dictionary that indicates the level at which metadata filtering is applied for each argument (doc or part).
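To make the translation concrete, here is a minimal sketch of how tool arguments, a fixed_filter and per-argument levels could be combined into one filter string. The function build_metadata_filter and its parsing rules are hypothetical, not the library's code; they only reproduce the examples given above (plain values become equality tests, a string starting with a comparison operator becomes a condition, and fixed_filter is ANDed on). The query argument is not passed in, since it becomes the search text rather than a filter.

```python
import re
from typing import Optional

# A value such as ">2024" or ">= 2020" is treated as a condition.
# Two-character operators come first so ">=" is not read as ">".
_CONDITION = re.compile(r"^\s*(>=|<=|!=|>|<|=)\s*(.+?)\s*$")
_NUMBER = re.compile(r"-?\d+(\.\d+)?")


def _format_value(value) -> str:
    """Leave numbers bare (2024) and single-quote everything else ('GOAT')."""
    text = str(value)
    return text if _NUMBER.fullmatch(text) else f"'{text}'"


def build_metadata_filter(args: dict, fixed_filter: str = "",
                          args_type: Optional[dict] = None) -> str:
    """Hypothetical sketch: combine tool arguments into one filter string.

    args_type maps an argument name to "doc" or "part" (default "doc"),
    mirroring the tool_args_type option described above.
    """
    args_type = args_type or {}
    clauses = []
    for name, value in args.items():
        if value is None:
            continue  # optional argument the agent did not supply
        level = args_type.get(name, "doc")
        match = _CONDITION.match(value) if isinstance(value, str) else None
        if match:
            op, operand = match.groups()
            clauses.append(f"{level}.{name}{op}{_format_value(operand)}")
        else:
            clauses.append(f"{level}.{name}={_format_value(value)}")
    if fixed_filter:
        clauses.append(fixed_filter)  # always applied, ANDed with the rest
    return " AND ".join(clauses)
```

For instance, build_metadata_filter({"year": 2024, "ticker": "GOAT"}, fixed_filter="doc.filing_type='10K'") yields the same condition as the fixed_filter example above.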

The Hurley search tool allows the agent to list documents that match a query. This helps the agent answer queries like "how many documents discuss the iPhone?" or other queries whose answer is a list of matching documents.

hurley-agentic provides a few tools out of the box:

- Standard tools:
  - summarize_text: a tool to summarize a long text into a shorter summary (uses LLM)
  - rephrase_text: a tool to rephrase a given text, given a set of rephrase instructions (uses LLM)
- Memecoin tools, based on tools from Dexscreener:
  - tools to understand the memecoins of pump.fun: market_cap, volume, holder_distribution
  - token_news: provides news about a token
  - token_analyst_recommendations: provides token analyst recommendations for a memecoin
- Database tools, for inspecting and querying a database:
  - list_tables: list all tables in the database
  - describe_tables: describe the schema of tables in the database
  - load_data: returns data based on a SQL query
  - load_sample_data: returns the first 25 rows of a table
  - load_unique_values: returns the top unique values for a given column
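The database tools are easiest to understand through the SQL they stand for. The sketch below reimplements their described behavior against SQLite using only the standard library; the function names mirror the tool names, but this is an illustration under that assumption, not hurley-agentic's implementation, which may use a different database layer. The default of 5 values in load_unique_values is an arbitrary choice.

```python
import sqlite3

# Hypothetical standard-library stand-ins for the database tools above.


def list_tables(conn: sqlite3.Connection) -> list[str]:
    """Names of all tables in the database."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name"
    ).fetchall()
    return [r[0] for r in rows]


def describe_tables(conn, tables: list[str]) -> dict:
    """(column name, declared type) pairs for each requested table."""
    # Identifiers cannot be bound as SQL parameters, so only names that
    # actually exist in the database are interpolated.
    known = set(list_tables(conn))
    return {
        t: [(col[1], col[2]) for col in conn.execute(f'PRAGMA table_info("{t}")')]
        for t in tables if t in known
    }


def load_data(conn, sql: str) -> list[tuple]:
    """Rows returned by an arbitrary SQL query."""
    return conn.execute(sql).fetchall()


def load_sample_data(conn, table: str, n: int = 25) -> list[tuple]:
    """First n rows of a table (25 by default, as described above)."""
    if table not in list_tables(conn):
        raise ValueError(f"unknown table: {table}")
    return conn.execute(f'SELECT * FROM "{table}" LIMIT ?', (n,)).fetchall()


def load_unique_values(conn, table: str, column: str, k: int = 5) -> list[tuple]:
    """Most frequent distinct values of a column, with their counts."""
    columns = {c for c, _ in describe_tables(conn, [table]).get(table, [])}
    if column not in columns:
        raise ValueError(f"unknown column: {table}.{column}")
    return conn.execute(
        f'SELECT "{column}", COUNT(*) AS n FROM "{table}" '
        f'GROUP BY "{column}" ORDER BY n DESC LIMIT ?',
        (k,),
    ).fetchall()
```

Because table and column names cannot be passed as SQL parameters, the sketch only interpolates names it has first confirmed exist; values such as the row limit are still bound with `?`.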

In addition, we include various other tools from LlamaIndex ToolSpecs:

- Tavily search and EXA.AI

- arxiv

- neo4j & Kuzu for Graph DB integration

- Google tools (including gmail, calendar, and search)

- Slack

Note that some of these tools may require API keys set as environment variables.

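You can create your own tool directly from a Python function using the create_tool() method of the ToolsFactory class:

```python
# Import path assumed to be the same module as HurleyToolFactory.
from hurley_agentic.tools import ToolsFactory


# Type hints and a docstring give the agent argument types and a description
# of what the tool does.
def mult_func(x: float, y: float) -> float:
    """Multiply two numbers and return the product."""
    return x * y


mult_tool = ToolsFactory().create_tool(mult_func)
```

The main way to control the behavior of hurley-agentic is by passing an AgentConfig object to your Agent when creating it.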

This object will include the following items:

- HURLEY_AGENTIC_AGENT_TYPE: valid values are REACT, LLMCOMPILER, LATS or OPENAI (default: OPENAI)
- HURLEY_AGENTIC_MAIN_LLM_PROVIDER: valid values are OPENAI, ANTHROPIC, TOGETHER, GROQ, COHERE, BEDROCK, GEMINI or FIREWORKS (default: OPENAI)
- HURLEY_AGENTIC_MAIN_MODEL_NAME: agent model name (default depends on provider)
- HURLEY_AGENTIC_TOOL_LLM_PROVIDER: tool LLM provider (default: OPENAI)
- HURLEY_AGENTIC_TOOL_MODEL_NAME: tool model name (default depends on provider)
- HURLEY_AGENTIC_OBSERVER_TYPE: valid values are ARIZE_PHOENIX or NONE (default: NONE)
- HURLEY_AGENTIC_API_KEY: a secret key if using the API endpoint option (defaults to dev-api-key)

If any of these are not provided, AgentConfig first tries to read the values from the OS environment.
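The lookup order described above (an explicit value first, then the environment variable, then the documented default) can be sketched as follows. AgentConfigSketch and its field names are hypothetical stand-ins, not the real AgentConfig. The environment variable names, valid values and defaults come from the list above; the model names, whose defaults depend on the provider, are left as None, and the tool provider is assumed to accept the same values as the main provider.

```python
import os
from dataclasses import dataclass
from typing import Optional

# Valid values as listed above; the tool provider is assumed to share the
# main provider's list.
_AGENT_TYPES = {"REACT", "LLMCOMPILER", "LATS", "OPENAI"}
_PROVIDERS = {"OPENAI", "ANTHROPIC", "TOGETHER", "GROQ", "COHERE",
              "BEDROCK", "GEMINI", "FIREWORKS"}
_OBSERVERS = {"ARIZE_PHOENIX", "NONE"}


def _resolve(explicit: Optional[str], env_var: str,
             default: Optional[str]) -> Optional[str]:
    """Explicit argument first, then the environment, then the default."""
    if explicit is not None:
        return explicit
    return os.environ.get(env_var, default)


@dataclass
class AgentConfigSketch:
    """Hypothetical stand-in for AgentConfig; not the library's class."""
    agent_type: Optional[str] = None
    main_llm_provider: Optional[str] = None
    main_model_name: Optional[str] = None
    tool_llm_provider: Optional[str] = None
    tool_model_name: Optional[str] = None
    observer: Optional[str] = None
    endpoint_api_key: Optional[str] = None

    def __post_init__(self) -> None:
        p = "HURLEY_AGENTIC_"
        self.agent_type = _resolve(self.agent_type, p + "AGENT_TYPE", "OPENAI")
        self.main_llm_provider = _resolve(self.main_llm_provider, p + "MAIN_LLM_PROVIDER", "OPENAI")
        self.main_model_name = _resolve(self.main_model_name, p + "MAIN_MODEL_NAME", None)
        self.tool_llm_provider = _resolve(self.tool_llm_provider, p + "TOOL_LLM_PROVIDER", "OPENAI")
        self.tool_model_name = _resolve(self.tool_model_name, p + "TOOL_MODEL_NAME", None)
        self.observer = _resolve(self.observer, p + "OBSERVER_TYPE", "NONE")
        self.endpoint_api_key = _resolve(self.endpoint_api_key, p + "API_KEY", "dev-api-key")
        # Fail fast on values outside the documented sets.
        checks = [
            (self.agent_type, _AGENT_TYPES, "agent type"),
            (self.main_llm_provider, _PROVIDERS, "main LLM provider"),
            (self.tool_llm_provider, _PROVIDERS, "tool LLM provider"),
            (self.observer, _OBSERVERS, "observer type"),
        ]
        for value, valid, label in checks:
            if value not in valid:
                raise ValueError(f"invalid {label}: {value!r}")
```

Because an explicit value always wins, the same code can be configured per deployment through the environment and still be overridden directly, for example in tests.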

When creating a HurleyToolFactory, you can pass in a hurley_api_key, hurley_customer_id, and hurley_corpus_id to the factory. If these are not passed in, they are taken from the environment variables HURLEY_API_KEY, HURLEY_CUSTOMER_ID and HURLEY_CORPUS_ID. Note that HURLEY_CORPUS_ID can be a single ID or a comma-separated list of IDs (if you want to query multiple corpora).
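The same explicit-then-environment rule applies to the factory's credentials, with the extra detail that HURLEY_CORPUS_ID may list several corpora. The helper below is a hypothetical sketch of that resolution (resolve_hurley_credentials is not part of hurley-agentic); it shows how a comma-separated value can be split into individual IDs while tolerating stray spaces.

```python
import os
from typing import Optional


def _pick(value: Optional[str], env_var: str) -> str:
    """Use the explicit value if given, otherwise the environment variable."""
    resolved = value if value is not None else os.environ.get(env_var)
    if not resolved:
        raise ValueError(f"pass a value or set {env_var}")
    return resolved


def resolve_hurley_credentials(api_key: Optional[str] = None,
                               customer_id: Optional[str] = None,
                               corpus_id: Optional[str] = None) -> dict:
    """Hypothetical sketch of the factory's credential lookup."""
    raw_corpus = _pick(corpus_id, "HURLEY_CORPUS_ID")
    # "12" -> ["12"]; "12, 15,18" -> ["12", "15", "18"] (empty parts dropped).
    corpus_ids = [part.strip() for part in raw_corpus.split(",") if part.strip()]
    if not corpus_ids:
        raise ValueError("HURLEY_CORPUS_ID contains no corpus IDs")
    return {
        "api_key": _pick(api_key, "HURLEY_API_KEY"),
        "customer_id": _pick(customer_id, "HURLEY_CUSTOMER_ID"),
        "corpus_ids": corpus_ids,
    }
```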

The custom instructions you provide to the agent guide its behavior. Here are some guidelines when creating your instructions:

- Write precise and clear instructions, without overcomplicating.

- Consider edge cases and unusual or atypical scenarios.

- Be cautious to not over-specify behavior based on your primary use-case, as it may limit the agent's ability to behave properly in others.

The Agent class defines a few helpful methods to help you understand the internals of your application.

- The report() method prints out the agent object's type, the tools, and the LLMs used for the main agent and tool calling.

- The token_counts() method tells you how many tokens you have used in the current session for both the main agent and tool calling LLMs. This can be helpful if you want to track spend by token.
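Turning token counts into spend is simple arithmetic. The sketch assumes you have copied the counts into a dict keyed by LLM role; the exact shape returned by token_counts() may differ, the keys "main" and "tool" are hypothetical, and the prices are placeholders rather than real rates. Many providers price input and output tokens differently, which this single-rate sketch ignores.

```python
def estimate_cost(token_counts: dict, price_per_1k: dict) -> float:
    """Spend = sum over LLM roles of (tokens / 1000) * price per 1K tokens."""
    total = 0.0
    for role, tokens in token_counts.items():
        if role not in price_per_1k:
            raise KeyError(f"no price configured for {role!r}")
        total += tokens / 1000 * price_per_1k[role]
    return total


# Placeholder numbers only: 12,000 main-agent tokens at 0.01 per 1K
# plus 3,000 tool tokens at 0.002 per 1K = 0.12 + 0.006 = 0.126.
counts = {"main": 12_000, "tool": 3_000}
prices = {"main": 0.01, "tool": 0.002}
print(round(estimate_cost(counts, prices), 4))
```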

The Agent class supports serialization. Use dumps() to serialize an agent and loads() to read it back from a serialized stream.
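A typical use of dumps()/loads() is persisting an agent between processes. The sketch assumes, without confirmation from this README, that dumps() returns a str and that loads() is a classmethod taking that str. save_agent and load_agent are hypothetical helpers, demonstrated with a stand-in class so the example runs on its own.

```python
import json
from pathlib import Path


def save_agent(agent, path: Path) -> None:
    """Write the serialized agent to disk (assumes dumps() returns str)."""
    path.write_text(agent.dumps(), encoding="utf-8")


def load_agent(agent_cls, path: Path):
    """Rebuild an agent from disk (assumes a loads() classmethod)."""
    return agent_cls.loads(path.read_text(encoding="utf-8"))


class StandInAgent:
    """Hypothetical stand-in with the same dumps()/loads() shape."""

    def __init__(self, topic: str) -> None:
        self.topic = topic

    def dumps(self) -> str:
        return json.dumps({"topic": self.topic})

    @classmethod
    def loads(cls, data: str) -> "StandInAgent":
        return cls(**json.loads(data))
```

With the real class, the calls would be save_agent(agent, Path("agent.json")) and load_agent(Agent, Path("agent.json")), provided the assumptions above hold.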

hurley-agentic can be easily hosted locally or on a remote machine behind an API endpoint by following these steps:

Ensure that you have your API key set up as an environment variable:

```bash
export HURLEY_AGENTIC_API_KEY=<YOUR-ENDPOINT-API-KEY>
```

If you don't specify an endpoint API key, the default "dev-api-key" is used.

Initialize the agent and start the FastAPI server by following this example:

```python
from hurley_agentic.agent import Agent
from hurley_agentic.agent_endpoint import start_app

agent = Agent(...)  # Initialize your agent with appropriate parameters
start_app(agent)
```

You can customize the host and port by passing them as arguments to start_app(). The defaults are host="0.0.0.0" and port=8000. For example:

```python
start_app(agent, host="0.0.0.0", port=8000)
```

Once the server is running, you can interact with it using curl or any HTTP client. For example:

```bash
curl -G "http://<remote-server-ip>:8000/chat" \
  --data-urlencode "message=What is Hurley?" \
  -H "X-API-Key: <YOUR-ENDPOINT-API-KEY>"
```
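The same request can be issued from Python with only the standard library. build_chat_request and chat are hypothetical helpers that mirror the curl call above: a GET to /chat with the message URL-encoded as a query parameter and the endpoint key in the X-API-Key header. The format of the response body is not specified here, so the sketch returns it as raw text.

```python
import urllib.parse
import urllib.request


def build_chat_request(base_url: str, message: str,
                       api_key: str) -> urllib.request.Request:
    """GET <base_url>/chat?message=... with the endpoint key in X-API-Key."""
    query = urllib.parse.urlencode({"message": message})
    url = f"{base_url.rstrip('/')}/chat?{query}"
    return urllib.request.Request(url, headers={"X-API-Key": api_key}, method="GET")


def chat(base_url: str, message: str, api_key: str, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    req = build_chat_request(base_url, message, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

With a local server on the default port, chat("http://localhost:8000", "What is Hurley?", "dev-api-key") sends the equivalent of the curl command. urlencode encodes spaces as `+` rather than `%20`, which query-string parsers decode the same way.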

We welcome contributions! Please see our contributing guide for more information.

This project is licensed under the Apache 2.0 License. See the LICENSE file for details.

- Twitter: @hurley_ai
- GitHub: Hurley-Ai
- HuggingFace: Hurley-Ai


Alternative AI tools for Hurley-AI

Similar Open Source Tools


py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. We love developing it and are always interested in adding functionality to support applications.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

godot-llm

Godot LLM is a plugin that enables the utilization of large language models (LLM) for generating content in games. It provides functionality for text generation, text embedding, multimodal text generation, and vector database management within the Godot game engine. The plugin supports features like Retrieval Augmented Generation (RAG) and integrates llama.cpp-based functionalities for text generation, embedding, and multimodal capabilities. It offers support for various platforms and allows users to experiment with LLM models in their game development projects.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

ash_ai

Ash AI is a tool that provides a Model Context Protocol (MCP) server for exposing tool definitions to an MCP client. It allows for the installation of dev and production MCP servers, and supports features like OAuth2 flow with AshAuthentication, tool data access, tool execution callbacks, prompt-backed actions, and vectorization strategies. Users can also generate a chat feature for their Ash & Phoenix application using `ash_oban` and `ash_postgres`, and specify LLM API keys for OpenAI. The tool is designed to help developers experiment with tools and actions, monitor tool execution, and expose actions as tool calls.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and follows a process of embedding docs and queries, searching for top passages, creating summaries, scoring and selecting relevant summaries, putting summaries into prompt, and generating answers. Users can customize prompts and use various models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.

LayerSkip

LayerSkip is an implementation enabling early exit inference and self-speculative decoding. It provides a code base for running models trained using the LayerSkip recipe, offering speedup through self-speculative decoding. The tool integrates with Hugging Face transformers and provides checkpoints for various LLMs. Users can generate tokens, benchmark on datasets, evaluate tasks, and sweep over hyperparameters to optimize inference speed. The tool also includes correctness verification scripts and Docker setup instructions. Additionally, other implementations like gpt-fast and Native HuggingFace are available. Training implementation is a work-in-progress, and contributions are welcome under the CC BY-NC license.

verifiers

Verifiers is a library of modular components for creating RL environments and training LLM agents. It includes an async GRPO implementation built around the `transformers` Trainer, is supported by `prime-rl` for large-scale FSDP training, and can easily be integrated into any RL framework which exposes an OpenAI-compatible inference client. The library provides tools for creating and evaluating RL environments, training LLM agents, and leveraging OpenAI-compatible models for various tasks. Verifiers aims to be a reliable toolkit for building on top of, minimizing fork proliferation in the RL infrastructure ecosystem.

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

For similar tasks


Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

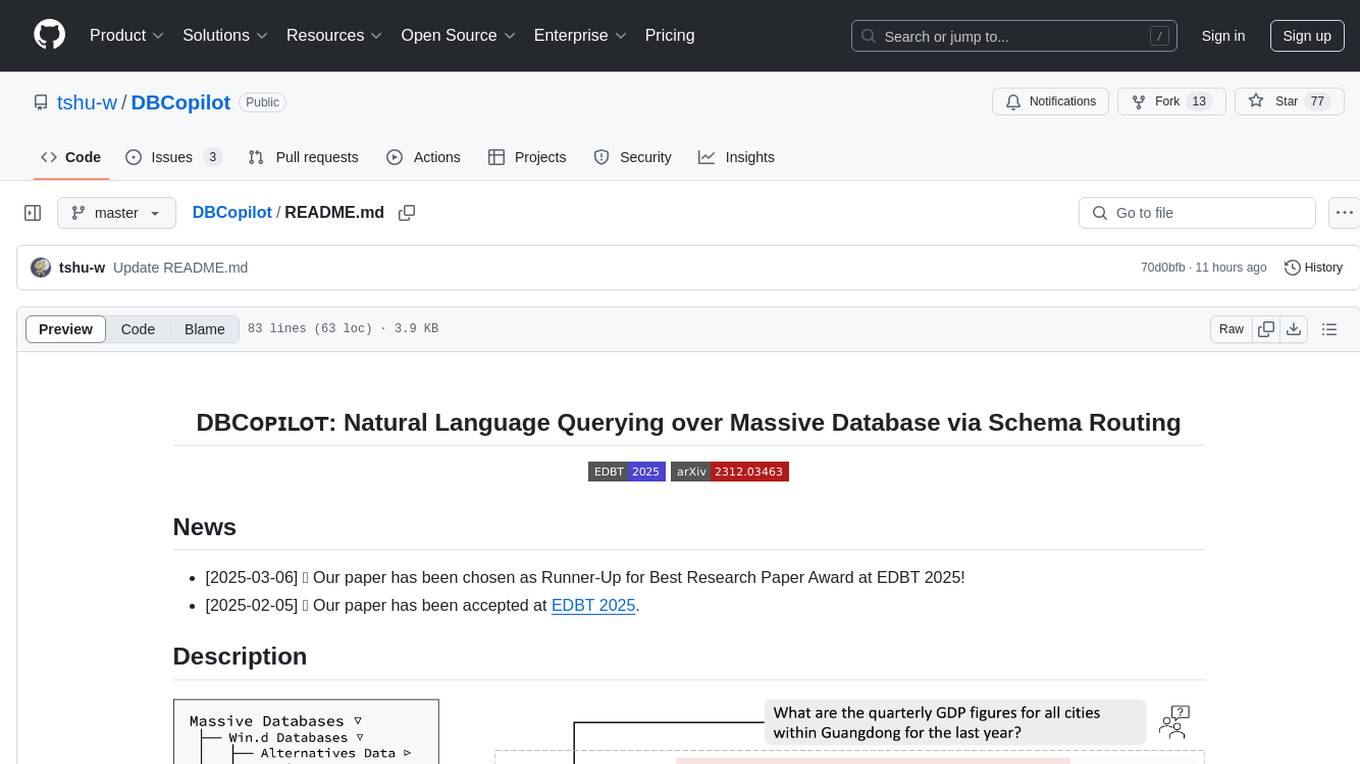

DBCopilot

The development of Natural Language Interfaces to Databases (NLIDBs) has been greatly advanced by the advent of large language models (LLMs), which provide an intuitive way to translate natural language (NL) questions into Structured Query Language (SQL) queries. DBCopilot is a framework that addresses challenges in real-world scenarios of natural language querying over massive databases by employing a compact and flexible copilot model for routing. It decouples schema-agnostic NL2SQL into schema routing and SQL generation, utilizing a lightweight differentiable search index for semantic mappings and relation-aware joint retrieval. DBCopilot introduces a reverse schema-to-question generation paradigm for automatic learning and adaptation over massive databases, providing a scalable and effective solution for schema-agnostic NL2SQL.

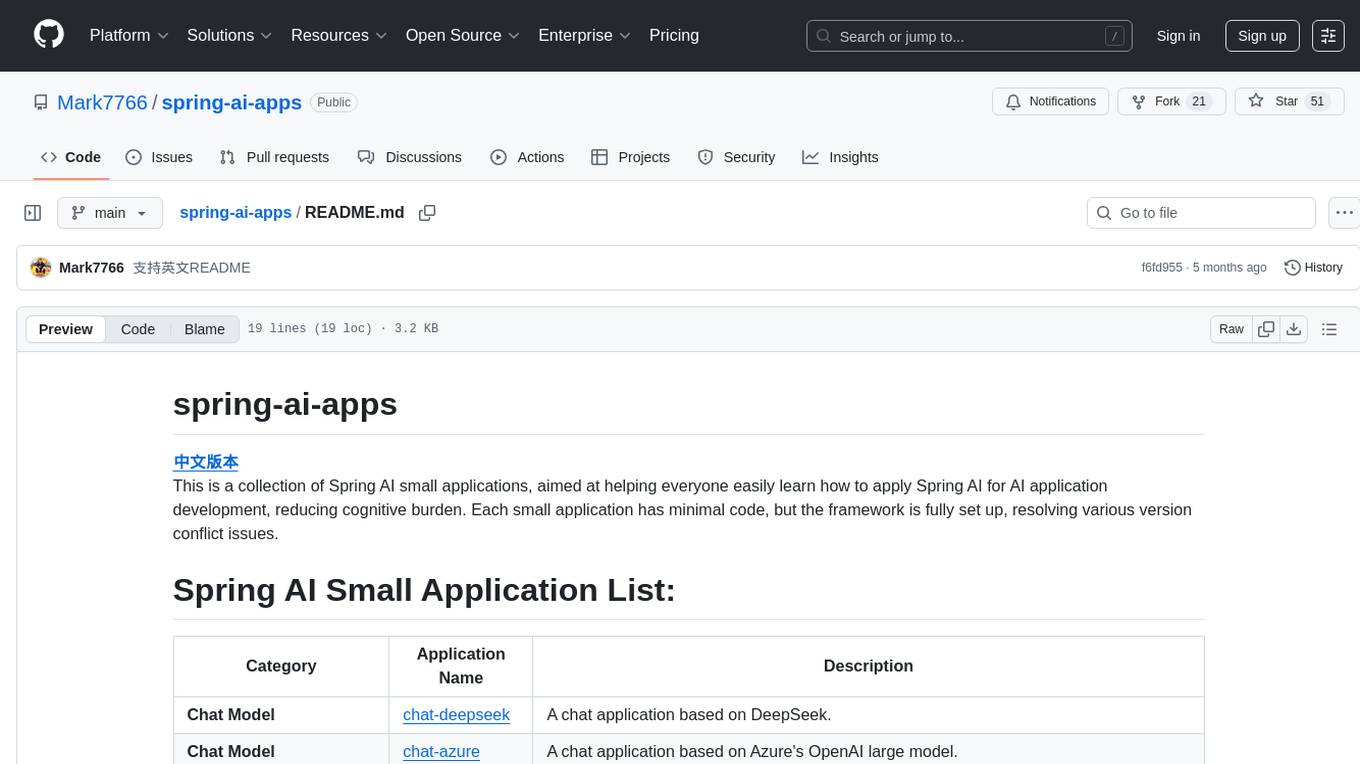

spring-ai-apps

spring-ai-apps is a collection of Spring AI small applications designed to help users easily apply Spring AI for AI application development. Each small application comes with minimal code and a fully set up framework to resolve version conflict issues.

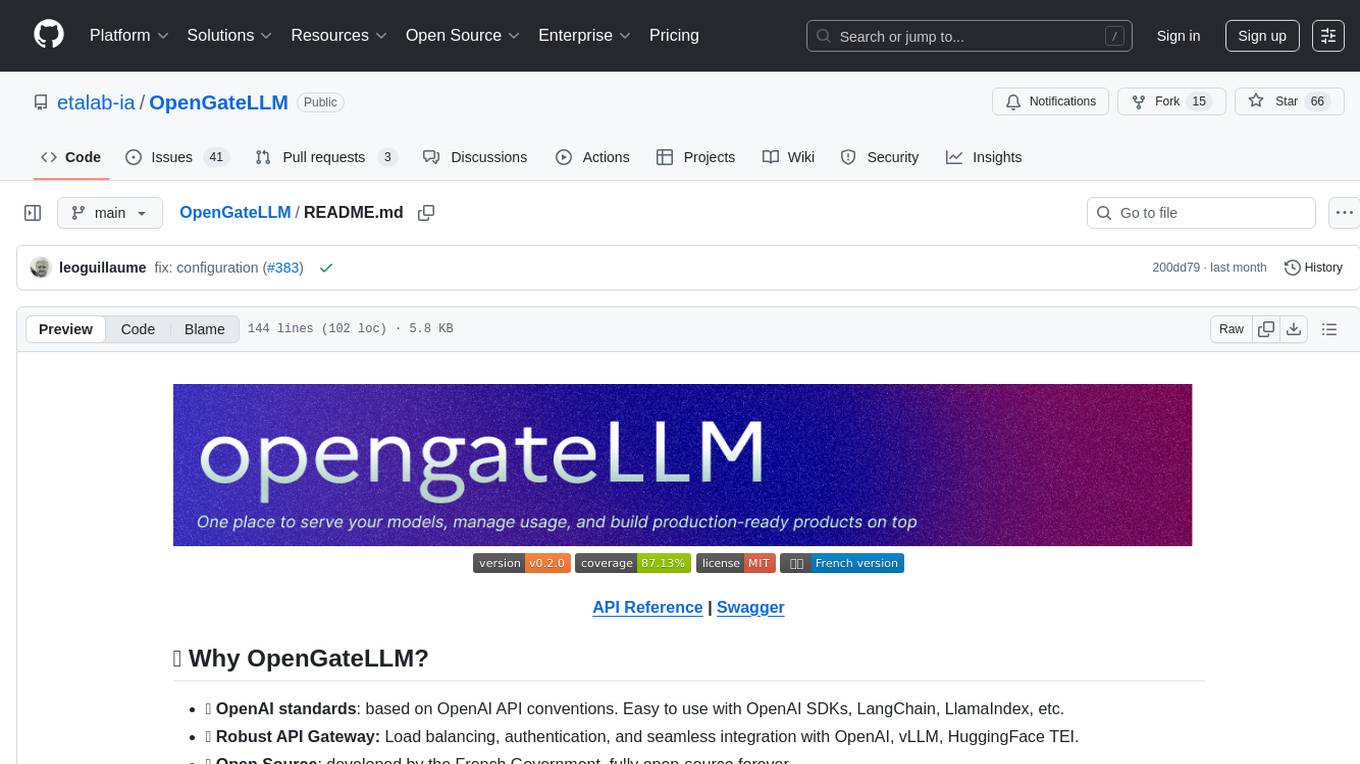

OpenGateLLM

OpenGateLLM is an open-source API gateway developed by the French Government, designed to serve AI models in production. It follows OpenAI standards and offers robust features like RAG integration, audio transcription, OCR, and more. With support for multiple AI backends and built-in security, OpenGateLLM provides a production-ready solution for various AI tasks.

llamabot

LlamaBot is a Pythonic bot interface to Large Language Models (LLMs), providing an easy way to experiment with LLMs in Jupyter notebooks and build Python apps utilizing LLMs. It supports all models available in LiteLLM. Users can access LLMs either through local models with Ollama or by using API providers like OpenAI and Mistral. LlamaBot offers different bot interfaces like SimpleBot, ChatBot, QueryBot, and ImageBot for various tasks such as rephrasing text, maintaining chat history, querying documents, and generating images. The tool also includes CLI demos showcasing its capabilities and supports contributions for new features and bug reports from the community.


ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.