MiniAgents

An async-first framework for building multi-agent AI systems with an innovative approach to parallelism, so you can focus on creating intelligent agents, not on managing the concurrency of your flows.


MiniAgents is an open-source Python framework designed to simplify the creation of multi-agent AI systems. It offers a parallelism and async-first design, allowing users to focus on building intelligent agents while handling concurrency challenges. The framework, built on asyncio, supports LLM-based applications with immutable messages and seamless asynchronous token and message streaming between agents.

README:

MiniAgents is an open-source Python framework that takes the complexity out of building multi-agent AI systems. With its innovative approach to parallelism and async-first design, you can focus on creating intelligent agents in an easy to follow procedural fashion while the framework handles the concurrency challenges for you.

Built on top of asyncio, MiniAgents provides a robust foundation for LLM-based applications with immutable, Pydantic-based messages and seamless asynchronous token and message streaming between agents.

pip install -U miniagents

We STRONGLY RECOMMEND checking this tutorial before you proceed with the README:

The above step-by-step tutorial teaches you how to build a practical multi-agent web research system that can break down complex questions, run parallel searches, and synthesize comprehensive answers.

Following that tutorial first will make the rest of this README easier to understand!

Here's a simple example of how to define an agent:

from miniagents import miniagent, InteractionContext, MiniAgents

@miniagent
async def my_agent(ctx: InteractionContext) -> None:
    async for msg_promise in ctx.message_promises:
        ctx.reply(f"You said: {await msg_promise}")

async def main() -> None:
    async for msg_promise in my_agent.trigger(["Hello", "World"]):
        print(await msg_promise)

if __name__ == "__main__":
    MiniAgents().run(main())

This script will print the following lines to the console:

You said: Hello

You said: World

Despite agents running in completely detached asyncio tasks, MiniAgents ensures proper exception propagation from callee agents to caller agents. When an exception occurs in a callee agent, it's captured and propagated through the promises of response message sequences. These exceptions are re-raised when those sequences are iterated over or awaited in any of the caller agents, ensuring that errors are not silently swallowed and can be properly handled.

Here's a simple example showing exception propagation:

from miniagents import miniagent, InteractionContext, MiniAgents

@miniagent
async def faulty_agent(ctx: InteractionContext) -> None:
    # This agent will raise an exception
    raise ValueError("Something went wrong in callee agent")

@miniagent
async def caller_agent(ctx: InteractionContext) -> None:
    # The exception from faulty_agent WILL NOT propagate here
    faulty_response_promises = faulty_agent.trigger("Hello")
    try:
        # The exception from faulty_agent WILL propagate here
        async for msg_promise in faulty_response_promises:
            await msg_promise
    except ValueError as e:
        ctx.reply(f"Exception while iterating over response: {e}")

async def main() -> None:
    async for msg_promise in caller_agent.trigger("Start"):
        print(await msg_promise)

if __name__ == "__main__":
    MiniAgents().run(main())

Output:

Exception while iterating over response: Something went wrong in callee agent
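The following plain asyncio sketch (no MiniAgents involved) makes the mechanism above concrete. An exception raised inside a detached task is stored in the task rather than raised when the task is scheduled. It resurfaces only when the result is awaited. The function names are hypothetical. MiniAgents propagates errors through its own promise classes, and this sketch does not reproduce them.

```python
import asyncio


async def faulty_worker() -> str:
    # Simulates a callee agent failing inside its own detached task.
    raise ValueError("Something went wrong in callee agent")


async def caller() -> str:
    # Scheduling the task does not raise: the exception is captured by the task.
    task = asyncio.create_task(faulty_worker())
    await asyncio.sleep(0)  # let the task run (and fail) in the background
    try:
        # The stored exception is re-raised only when the result is awaited.
        await task
    except ValueError as e:
        return f"Caught: {e}"
    return "no error"


if __name__ == "__main__":
    print(asyncio.run(caller()))
```

Nothing is raised at the create_task() call site. The error appears exactly where the caller consumes the result, which mirrors where the README says the exception is re-raised.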

MiniAgents provides built-in support for OpenAI and Anthropic language models, with the possibility of adding other integrations.

Run pip install -U openai and set your OpenAI API key in the OPENAI_API_KEY environment variable before running the example below.

from miniagents import MiniAgents
from miniagents.ext.llms import OpenAIAgent

# NOTE: "Forking" an agent is a convenient way of creating a new agent instance
# with the specified configuration. Alternatively, you could pass the `model`
# parameter to `OpenAIAgent.trigger()` directly every time you talk to the
# agent.
gpt_4o_agent = OpenAIAgent.fork(model="gpt-4o-mini")

async def main() -> None:
    reply_sequence = gpt_4o_agent.trigger(
        "Hello, how are you?",
        system="You are a helpful assistant.",
        max_tokens=50,
        temperature=0.7,
    )
    async for msg_promise in reply_sequence:
        async for token in msg_promise:
            print(token, end="", flush=True)
        # MINOR: Let's separate messages with a double newline (even though in
        # this particular case we are actually going to receive only one
        # message).
        print("\n")

if __name__ == "__main__":
    MiniAgents().run(main())

Even though OpenAI models return a single assistant response, the OpenAIAgent.trigger() method is still designed to return a sequence of multiple message promises. This generalizes to arbitrary agents, making agents in the MiniAgents framework easily interchangeable (agents in this framework support sending and receiving zero or more messages).

You can read agent responses token-by-token as shown above regardless of whether the agent is streaming token by token or returning full messages. The complete message content will just be returned as a single "token" in the latter case.
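The library-free sketch below shows why the consuming loop above does not need to care whether the producer streams. Both producers are async iterators, but the non-streaming one yields the whole message as a single item. The producer names are hypothetical, and MessagePromise itself is not modeled here.

```python
import asyncio
from typing import AsyncIterator, List


async def streaming_producer() -> AsyncIterator[str]:
    # An LLM-like producer that yields the message piece by piece.
    for token in ["Hel", "lo", "!"]:
        await asyncio.sleep(0)
        yield token


async def non_streaming_producer() -> AsyncIterator[str]:
    # A producer that only has the complete message: it is yielded as one "token".
    yield "Hello!"


async def consume(tokens: AsyncIterator[str]) -> List[str]:
    # The same consumer code works for both kinds of producer.
    received = []
    async for token in tokens:
        received.append(token)
    return received


if __name__ == "__main__":
    print(asyncio.run(consume(streaming_producer())))
    print(asyncio.run(consume(non_streaming_producer())))
```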

The dialog_loop agent is a pre-packaged agent that implements a dialog loop between a user agent and an assistant agent. Here is how you can use it to set up an interaction between a user and your agent. Your agent can be a bare LLM agent, such as OpenAIAgent or AnthropicAgent. It can also be a custom agent that you define yourself: a more complex agent that uses LLM agents under the hood but adds more complex behavior, e.g. Retrieval Augmented Generation.

Run pip install -U openai and set your OpenAI API key in the OPENAI_API_KEY environment variable before running the example below.

from miniagents import MiniAgents
from miniagents.ext import (
    dialog_loop,
    console_user_agent,
    MarkdownHistoryAgent,
)
from miniagents.ext.llms import SystemMessage, OpenAIAgent

async def main() -> None:
    dialog_loop.trigger(
        SystemMessage(
            "Your job is to improve the styling and grammar of the sentences "
            "that the user throws at you. Leave the sentences unchanged if "
            "they seem fine."
        ),
        user_agent=console_user_agent.fork(
            # Write chat history to a markdown file (`CHAT.md` in the current
            # working directory by default, fork `MarkdownHistoryAgent` if
            # you want to customize the filepath to write to).
            history_agent=MarkdownHistoryAgent
        ),
        assistant_agent=OpenAIAgent.fork(
            model="gpt-4o-mini",
            max_tokens=1000,
        ),
    )

if __name__ == "__main__":
    MiniAgents(
        # Log LLM prompts and responses to `llm_logs/` folder in the current
        # working directory. These logs will have a form of time-stamped
        # markdown files - single file per single prompt-response pair.
        llm_logger_agent=True
    ).run(main())

Here is what the interaction might look like if you run this script:

YOU ARE NOW IN A CHAT WITH AN AI ASSISTANT

Press Enter to send your message.

Press Ctrl+Space to insert a newline.

Press Ctrl+C (or type "exit") to quit the conversation.

USER: hi

OPENAI_AGENT: Hello! The greeting "hi" is casual and perfectly acceptable. It's grammatically correct and doesn't require any changes. If you wanted to use a more formal greeting, you could consider "Hello," "Good morning/afternoon/evening," or "Greetings."

USER: got it, thanks!

OPENAI_AGENT: You're welcome! "Got it, thanks!" is a concise and clear expression of understanding and gratitude. It's perfectly fine for casual communication. A slightly more formal alternative could be "I understand. Thank you!"

Here is how you can implement a dialog loop between an agent and a user from the ground up yourself. For simplicity, there is no history agent in this example. Check out in_memory_history_agent and how it is used if you want to learn how to implement your own history agent too:

from miniagents import miniagent, InteractionContext, MiniAgents
from miniagents.ext import agent_loop, AWAIT

@miniagent
async def user_agent(ctx: InteractionContext) -> None:
    async for msg_promise in ctx.message_promises:
        print("ASSISTANT: ", end="", flush=True)
        async for token in msg_promise:
            print(token, end="", flush=True)
        print()
    ctx.reply(input("USER: "))

@miniagent
async def assistant_agent(ctx: InteractionContext) -> None:
    # Turn a sequence of message promises into a single message promise (if
    # there had been multiple messages in the sequence, they would have been
    # separated by double newlines; this is how `as_single_promise()` works
    # by default).
    aggregated_message = await ctx.message_promises.as_single_promise()
    ctx.reply(f'You said "{aggregated_message}"')

async def main() -> None:
    agent_loop.trigger(agents=[user_agent, AWAIT, assistant_agent])

if __name__ == "__main__":
    MiniAgents().run(main())

Output:

USER: hi

ASSISTANT: You said "hi"

USER: nice!

ASSISTANT: You said "nice!"

USER: bye

ASSISTANT: You said "bye"

Remember, with this framework the agents pass promises of message sequences between each other, not the already-resolved message sequences themselves! Even when you pass concrete messages in your code, they are still wrapped into promises of message sequences behind the scenes (while your async agent code is just sent to be processed in the background).

For this reason, the presence of the AWAIT sentinel in the agent chain in the example above is important. Without it, the agent_loop would keep scheduling more and more interactions between the agents of the chain without ever taking a break to catch up with their processing.

AWAIT forces the agent_loop to stop and await the complete sequence of replies from the agent right before AWAIT. Only then does it schedule (trigger) the execution of the agent right after AWAIT. This gives the loop a chance to catch up with the asynchronous processing of agents and their responses in the background.
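Here is a toy model of the effect of AWAIT, written with plain asyncio tasks instead of MiniAgents promises. The names AWAIT, echo_agent and toy_agent_loop are hypothetical stand-ins, not imports from miniagents. The loop is also bounded by max_turns, whereas the real agent_loop runs indefinitely. The sketch counts how many triggered agents are still unresolved at once. Without the sentinel, that number grows with every turn. With it, the number stays small.

```python
from __future__ import annotations

import asyncio

# Hypothetical stand-in for the AWAIT sentinel (not imported from miniagents).
AWAIT = object()


async def echo_agent(request: asyncio.Task | str) -> str:
    # The request may still be an unresolved "promise" (a task) from the previous agent.
    text = await request if isinstance(request, asyncio.Task) else request
    await asyncio.sleep(0.01)  # pretend to do some IO-bound work
    return text + "."


async def toy_agent_loop(agents: list, max_turns: int) -> tuple[str, int]:
    reply: asyncio.Task | str = "hi"
    tasks: list[asyncio.Task] = []
    peak_pending = 0
    for _ in range(max_turns):
        for agent in agents:
            if agent is AWAIT:
                # Wait until the reply of the agent right before AWAIT is fully
                # resolved before triggering the agent right after it.
                if isinstance(reply, asyncio.Task):
                    reply = await reply
                continue
            # Trigger the agent without waiting for it (it runs in the background).
            reply = asyncio.create_task(agent(reply))
            tasks.append(reply)
            peak_pending = max(peak_pending, sum(not t.done() for t in tasks))
    final = await reply if isinstance(reply, asyncio.Task) else reply
    return final, peak_pending


if __name__ == "__main__":
    print(asyncio.run(toy_agent_loop([echo_agent, echo_agent], max_turns=3)))
    print(asyncio.run(toy_agent_loop([echo_agent, AWAIT, echo_agent], max_turns=3)))
```

Both runs produce the same final text. In the first run, every triggered agent is still pending before anything gets resolved. In the second run, AWAIT caps the backlog at two pending agents, however many turns the loop runs.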

- miniagents.ext.llms
    - OpenAIAgent: Connects to OpenAI models like GPT-4o, GPT-4o-mini, etc. Supports all OpenAI API parameters and handles token streaming seamlessly.
    - AnthropicAgent: Similar to OpenAIAgent but for Anthropic's Claude models.
- miniagents.ext
    - console_input_agent: Prompts the user for input via the console with support for multi-line input.
    - console_output_agent: Echoes messages to the console token by token, which is useful when the response is streamed from an LLM (if response messages are delivered all at once instead, this agent will also just print them all at once).
    - file_output_agent: Writes messages to a specified file, useful for saving responses from other agents.
    - user_agent: A user agent that echoes messages from the agent that called it, then reads the user input and returns it as its response. This agent is an aggregation of the console_output_agent and console_input_agent (these two agents can, however, be substituted with other agents of similar functionality).
    - agent_loop: Creates an infinite loop of interactions between the specified agents. It's designed for ongoing conversations or continuous processing, and is particularly useful for chat interfaces where agents need to take turns indefinitely (or until stopped with KeyboardInterrupt).
    - dialog_loop: A special case of agent_loop designed for conversation between a user and an assistant, with optional chat history tracking.
    - agent_chain: Executes a sequence of agents in order, where each agent processes the output of the previous agent. This creates a pipeline of processing steps, with messages flowing from one agent to the next in a specified sequence.
    - in_memory_history_agent: Keeps track of conversation history in memory, enabling context-aware interactions without external storage.
    - MarkdownHistoryAgent: Keeps track of conversation history in a markdown file, allowing you to resume a conversation from the same point even if the app is restarted.
    - markdown_llm_logger_agent: Logs LLM interactions (prompts and responses) in markdown format, useful for debugging and auditing purposes (look for llm_logger_agent=True passed to the MiniAgents() context manager in one of the code examples above).
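To illustrate the pipeline idea behind agent_chain, here is a minimal plain-Python sketch. It makes two simplifying assumptions. Each "stage" takes and returns a single string, whereas real MiniAgents agents exchange sequences of message promises. Also, the stage functions and toy_agent_chain are hypothetical names, not part of miniagents.ext.

```python
import asyncio
from typing import Awaitable, Callable, List

# A "stage" here is any async function from one message to the next (hypothetical).
Stage = Callable[[str], Awaitable[str]]


async def exclaim_stage(text: str) -> str:
    return text + "!"


async def uppercase_stage(text: str) -> str:
    return text.upper()


async def toy_agent_chain(stages: List[Stage], message: str) -> str:
    # Feed the output of each stage into the next one, in the given order.
    for stage in stages:
        message = await stage(message)
    return message


if __name__ == "__main__":
    print(asyncio.run(toy_agent_chain([exclaim_stage, uppercase_stage], "hello")))
```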

Feel free to explore the source code in the miniagents.ext package to see how various agents are implemented and get inspiration for building your own!

Let's consider an example that consists of two dummy agents and an aggregator agent that aggregates the responses from the two dummy agents (and also adds some messages of its own):

import asyncio

from miniagents.miniagents import (
    MiniAgents,
    miniagent,
    InteractionContext,
    Message,
)

@miniagent
async def agent1(ctx: InteractionContext) -> None:
    print("Agent 1 started")
    ctx.reply("*** MESSAGE #1 from Agent 1 ***")
    print("Agent 1 still working")
    ctx.reply("*** MESSAGE #2 from Agent 1 ***")
    print("Agent 1 finished")

@miniagent
async def agent2(ctx: InteractionContext) -> None:
    print("Agent 2 started")
    ctx.reply("*** MESSAGE from Agent 2 ***")
    print("Agent 2 finished")

@miniagent
async def aggregator_agent(ctx: InteractionContext) -> None:
    print("Aggregator started")
    ctx.reply(
        [
            "*** AGGREGATOR MESSAGE #1 ***",
            agent1.trigger(),
            agent2.trigger(),
        ]
    )
    print("Aggregator still working")
    ctx.reply("*** AGGREGATOR MESSAGE #2 ***")
    print("Aggregator finished")

async def main() -> None:
    print("TRIGGERING AGGREGATOR")
    msg_promises = aggregator_agent.trigger()
    print("TRIGGERING AGGREGATOR DONE\n")

    print("SLEEPING FOR ONE SECOND")
    # This is when the agents will actually start processing (in fact, any
    # other kind of task switch would have had the same effect).
    await asyncio.sleep(1)
    print("SLEEPING DONE\n")

    print("PREPARING TO GET MESSAGES FROM AGGREGATOR")
    async for msg_promise in msg_promises:
        # MessagePromises always resolve into Message objects (or subclasses),
        # even if the agent was replying with bare strings
        message: Message = await msg_promise
        print(message)

    # You can safely `await` again. Concrete messages (and tokens, if there was
    # token streaming) are cached inside the promises. Message sequences (as
    # well as token sequences) are "replayable".
    print("TOTAL NUMBER OF MESSAGES FROM AGGREGATOR:", len(await msg_promises))

if __name__ == "__main__":
    MiniAgents().run(main())

This script will print the following lines to the console:

TRIGGERING AGGREGATOR

TRIGGERING AGGREGATOR DONE

SLEEPING FOR ONE SECOND

Aggregator started

Aggregator still working

Aggregator finished

Agent 1 started

Agent 1 still working

Agent 1 finished

Agent 2 started

Agent 2 finished

SLEEPING DONE

PREPARING TO GET MESSAGES FROM AGGREGATOR

*** AGGREGATOR MESSAGE #1 ***

*** MESSAGE #1 from Agent 1 ***

*** MESSAGE #2 from Agent 1 ***

*** MESSAGE from Agent 2 ***

*** AGGREGATOR MESSAGE #2 ***

TOTAL NUMBER OF MESSAGES FROM AGGREGATOR: 5

None of the agent functions start executing upon any of the calls to the trigger() method. Instead, in all cases the trigger() method immediately returns with promises to "talk to the agent(s)" (promises of sequences of promises of response messages, to be very precise - see MessageSequencePromise and MessagePromise classes for details).

As long as the global start_soon setting is set to True (the default), the actual agent functions will start processing at the earliest task switch. For details on this setting, see the source code of PromisingContext, the parent class of the MiniAgents context manager. The early start is the behaviour of asyncio.create_task(), which is used under the hood. In this example, the first task switch is await asyncio.sleep(1) inside the main() function. If this sleep() weren't there, the agents would start upon the first iteration of the async for loop, which is the next place where a task switch happens.
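The asyncio behaviour described above can be observed without MiniAgents at all. In the following sketch, create_task() only schedules the coroutine. The scheduled coroutine first runs at the caller's next task switch, here an explicit await asyncio.sleep(0). The names demo and agent are hypothetical. The sketch is an analogy for start_soon=True, not MiniAgents' own scheduling code.

```python
import asyncio
from typing import List


async def demo() -> List[str]:
    events: List[str] = []

    async def agent() -> None:
        events.append("agent started")

    # Like trigger() with start_soon=True: the work is scheduled, not run yet.
    task = asyncio.create_task(agent())
    events.append("task created")
    # The first task switch lets the event loop run the scheduled task.
    await asyncio.sleep(0)
    events.append("after first task switch")
    await task
    return events


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

"task created" is recorded before "agent started", even though the task was created first. This is the same reason none of the agents in the example above print anything until main() reaches its first await.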

💪 EXERCISE FOR READER: Add another await asyncio.sleep(1) right before print("Aggregator finished") in the aggregator_agent function and then try to predict how the output will change. After that, run the modified script and check if your prediction was correct.

You can set start_soon to False for individual agent calls if you need to prevent them from starting before their response sequence promise is awaited for the first time: some_agent.trigger(request_messages_if_any, start_soon=False). However, setting it to False globally for the whole system is not recommended because it can lead to deadlocks.

Here's a simple example demonstrating how to use agent_call = some_agent.initiate_call() and then do agent_call.send_message() two times before calling agent_call.reply_sequence() (instead of all-in-one some_agent.trigger()):

from miniagents import miniagent, InteractionContext, MiniAgents

@miniagent
async def output_agent(ctx: InteractionContext) -> None:
    async for msg_promise in ctx.message_promises:
        ctx.reply(f"Echo: {await msg_promise}")

async def main() -> None:
    agent_call = output_agent.initiate_call()
    agent_call.send_message("Hello")
    agent_call.send_message("World")
    reply_sequence = agent_call.reply_sequence()

    async for msg_promise in reply_sequence:
        print(await msg_promise)

if __name__ == "__main__":
    MiniAgents().run(main())

This will output:

Echo: Hello

Echo: World
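One way to picture the initiate_call() / send_message() / reply_sequence() pattern is the toy model below, built on asyncio.Queue. The "agent" starts in the background right away. It consumes messages as they arrive and stops when the caller signals that the request is complete. ToyAgentCall and _END are hypothetical. The method names only mirror the example above and are not MiniAgents' implementation. In particular, the real reply_sequence() returns a promise rather than being awaited directly.

```python
import asyncio
from typing import List

_END = object()  # marks that the caller has finished sending messages


class ToyAgentCall:
    """Hypothetical illustration of an incremental agent call (not the MiniAgents class)."""

    def __init__(self) -> None:
        # Must be constructed inside a running event loop (create_task needs one).
        self._inbox: asyncio.Queue = asyncio.Queue()
        # The "agent" starts in the background right away and waits for input.
        self._task = asyncio.create_task(self._echo_agent())

    async def _echo_agent(self) -> List[str]:
        replies = []
        while (message := await self._inbox.get()) is not _END:
            replies.append(f"Echo: {message}")
        return replies

    def send_message(self, message: str) -> None:
        # Messages can be sent one at a time, before the replies are requested.
        self._inbox.put_nowait(message)

    async def reply_sequence(self) -> List[str]:
        # Signal the end of the request, then wait for the agent's replies.
        self._inbox.put_nowait(_END)
        return await self._task


async def main() -> List[str]:
    call = ToyAgentCall()
    call.send_message("Hello")
    call.send_message("World")
    return await call.reply_sequence()


if __name__ == "__main__":
    print(asyncio.run(main()))
```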

There are three ways to use the MiniAgents() context:

- Calling its run() method with your main function as a parameter (the main() function in this example should be defined as async):

    MiniAgents().run(main())

- Using it as an async context manager:

    async with MiniAgents():
        ...  # your async code that works with agents goes here

- Directly calling its activate() (and, potentially, afinalize() at the end) methods:

    mini_agents = MiniAgents()
    mini_agents.activate()
    try:
        ...  # your async code that works with agents goes here
    finally:
        await mini_agents.afinalize()

The third way might be ideal for web applications and other cases where there is no single function that you can wrap with the MiniAgents() context manager (or where it is unclear what such a function would be). You just call mini_agents.activate() somewhere during server initialization and forget about it.

from miniagents.ext.llms import UserMessage, SystemMessage, AssistantMessage

user_message = UserMessage("Hello!")
system_message = SystemMessage("System message")
assistant_message = AssistantMessage("Assistant message")

The difference between these message types is in the default values of the role field of the message:

- UserMessage has role="user" by default
- SystemMessage has role="system" by default
- AssistantMessage has role="assistant" by default

You can create custom message types by subclassing Message.

from miniagents.messages import Message

class CustomMessage(Message):
    custom_field: str

message = CustomMessage("Hello", custom_field="Custom Value")
print(message.content)  # Output: Hello
print(message.custom_field)  # Output: Custom Value

For some more examples, check out the examples directory.

There are two main features of MiniAgents whose ideas motivated the creation of this framework:

- It is very easy to throw bare strings, messages, message promises, collections, and sequences of messages and message promises (as well as the promises of the sequences themselves) all together into an agent reply (see MessageType). This entire hierarchical structure will be asynchronously resolved in the background into a flat and uniform sequence of message promises (it will be automatically "flattened" in the background).
- By default, agents work in so-called start_soon mode. This differs from the usual way coroutines work, where you need to actively await them (or iterate over them, in the case of asynchronous generators). In start_soon mode, every agent, once it has been invoked, actively seeks every opportunity to proceed with its processing in the background whenever async tasks switch.
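To illustrate the "flattening" idea from the first feature, here is a small recursive sketch. It resolves awaitables and flattens nested lists and tuples into one flat list of strings. Note that this only approximates the behaviour. MiniAgents produces a flat sequence of message promises in the background, while this sketch resolves items sequentially into plain strings. The helper names flatten and delayed are hypothetical.

```python
import asyncio
from typing import Any, List


async def flatten(item: Any) -> List[str]:
    """Resolve awaitables and flatten nested lists/tuples into a flat list of strings."""
    # An awaitable may itself resolve to another awaitable, so keep awaiting.
    while asyncio.iscoroutine(item) or isinstance(item, asyncio.Future):
        item = await item
    if isinstance(item, (list, tuple)):
        flat: List[str] = []
        for sub_item in item:
            flat.extend(await flatten(sub_item))
        return flat
    return [str(item)]


async def delayed(value: Any) -> Any:
    # Stands in for a not-yet-resolved response from another agent.
    await asyncio.sleep(0)
    return value


async def main() -> List[str]:
    reply = ["first", delayed(["second", "third"]), ("fourth", delayed("fifth"))]
    return await flatten(reply)


if __name__ == "__main__":
    print(asyncio.run(main()))
```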

The second feature combines this start_soon approach with regular async/await and async generators by using so-called streamed promises (see the StreamedPromise and Promise classes), which were designed to be "replayable".
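"Replayable" means the following in practice: the underlying producer runs only once, but the stream can be iterated any number of times. The toy class below shows this by caching every item it pulls from a one-shot async generator. ReplayableStream is hypothetical and much simpler than StreamedPromise. In particular, it assumes consumers iterate one after another, not concurrently.

```python
import asyncio
from typing import AsyncIterator, List


class ReplayableStream:
    """Toy replayable stream: pulls each item from the source once and caches it."""

    def __init__(self, source: AsyncIterator[str]) -> None:
        self._source = source
        self._cache: List[str] = []
        self._exhausted = False

    async def __aiter__(self):
        index = 0
        while True:
            if index < len(self._cache):
                # Replay an item that was already produced.
                yield self._cache[index]
                index += 1
            elif self._exhausted:
                return
            else:
                try:
                    # Pull the next item from the one-shot source and remember it.
                    self._cache.append(await self._source.__anext__())
                except StopAsyncIteration:
                    self._exhausted = True


async def main() -> tuple:
    pulls: List[str] = []

    async def tokens() -> AsyncIterator[str]:
        for token in ["Hel", "lo", "!"]:
            pulls.append(token)  # records each time the source really produces
            yield token

    stream = ReplayableStream(tokens())
    first = [t async for t in stream]
    second = [t async for t in stream]
    return first, second, pulls


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Both iterations yield the same tokens, yet the source generator produced each token only once.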

The design choice for immutable messages was made specifically to enable this kind of highly parallelized agent execution. Since Generative AI applications are inherently IO-bound (with models typically hosted externally), immutable messages eliminate concerns about concurrent state mutations. This approach allows multiple agents to process messages simultaneously without risk of race conditions or data corruption, maximizing throughput in distributed LLM workflows.
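The sketch below illustrates why immutability removes the need for locks. It uses a stdlib frozen dataclass as a stand-in for MiniAgents' Pydantic-based messages, and ToyMessage and reader are hypothetical names. Two concurrent readers share one message object. Any attempt to mutate it fails. "Changing" a message means building a new one.

```python
import asyncio
import dataclasses


@dataclasses.dataclass(frozen=True)
class ToyMessage:
    """Stdlib stand-in for an immutable message (MiniAgents itself uses Pydantic)."""

    content: str
    role: str = "user"


async def reader(message: ToyMessage, delay: float) -> str:
    await asyncio.sleep(delay)
    # No lock needed: nothing can mutate `message` while this task is suspended.
    return message.content.upper()


async def main() -> tuple:
    message = ToyMessage("hello")
    # Two concurrent readers share the same object safely.
    results = await asyncio.gather(reader(message, 0.01), reader(message, 0))
    try:
        message.content = "mutated"  # type: ignore[misc]
        mutation_blocked = False
    except dataclasses.FrozenInstanceError:
        mutation_blocked = True
    # "Editing" creates a new message; the original stays intact.
    edited = dataclasses.replace(message, content="hello, world")
    return results, mutation_blocked, message, edited


if __name__ == "__main__":
    print(asyncio.run(main()))
```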

MiniAgents provides a way to persist messages as they are resolved from promises using the @MiniAgents().on_persist_message decorator. This allows you to implement custom logic for storing or logging messages.

Additionally, messages (as well as any other Pydantic models derived from Frozen) have a hash_key property. This property calculates the sha256 hash of the content of the message and serves as the id of the Message (or of any other Frozen model), much like commit hashes in git.
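The following sketch is a rough illustration of a content-derived, git-style key: serialize the fields deterministically to JSON, then take the sha256 hex digest. The exact serialization MiniAgents hashes (which fields are included, key order, whether the class name is part of it) is not described here. ToyFrozen should therefore be read as a hypothetical stand-in, and its keys will not match real hash_key values.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ToyFrozen:
    """Hypothetical stand-in for a Frozen model; not MiniAgents' actual class."""

    content: str
    role: str = "user"

    @property
    def hash_key(self) -> str:
        # Deterministic JSON (sorted keys, compact separators) so that equal
        # content always produces the same bytes, and therefore the same hash.
        payload = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


a = ToyFrozen("Hello!")
b = ToyFrozen("Hello!")
c = ToyFrozen("Hello!", role="assistant")

if __name__ == "__main__":
    print(a.hash_key == b.hash_key, a.hash_key == c.hash_key)
```

Equal content gives equal keys, and any field change gives a different key. Combined with immutability, this is what lets a key act as a stable id.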

Here's a simple example of how to use the on_persist_message decorator:

from miniagents import MiniAgents, Message

mini_agents = MiniAgents()

@mini_agents.on_persist_message
async def persist_message(_, message: Message) -> None:
    print(f"Persisting message with hash key: {message.hash_key}")
    # Here you could save the message to a database or log it to a file

Here are some of the core concepts in the MiniAgents framework:

- MiniAgent: A wrapper around an async function (or a whole class with an async def __call__() method) that defines an agent's behavior. Created using the @miniagent decorator.
- InteractionContext: Passed to each agent function, provides access to incoming messages and allows sending replies.
- Message: Represents a message exchanged between agents. Can contain content, metadata, and nested messages. Immutable once created.
- MessagePromise: A promise of a message that can be streamed token by token.
- MessageSequencePromise: A promise of a sequence of message promises.
- Frozen: An immutable Pydantic model with a git-style hash key calculated from its JSON representation. The base class for Message.

More underlying concepts (you will rarely need to use them directly, if at all):

- StreamedPromise: A promise that can be resolved piece by piece, allowing for streaming. The base class for MessagePromise and MessageSequencePromise.
- Promise: Represents a value that may not be available yet, but will be resolved in the future. The base class for StreamedPromise.

Join our Discord community to get help with your projects. We welcome questions, feature suggestions, and contributions!

MiniAgents is released under the MIT License.

Happy coding with MiniAgents! 🚀

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MiniAgents

Similar Open Source Tools

MiniAgents

MiniAgents is an open-source Python framework designed to simplify the creation of multi-agent AI systems. It offers a parallelism and async-first design, allowing users to focus on building intelligent agents while handling concurrency challenges. The framework, built on asyncio, supports LLM-based applications with immutable messages and seamless asynchronous token and message streaming between agents.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.

aiorun

aiorun is a Python package that provides a `run()` function as the starting point of your `asyncio`-based application. The `run()` function handles everything needed during the shutdown sequence of the application, such as creating a `Task` for the given coroutine, running the event loop, adding signal handlers for `SIGINT` and `SIGTERM`, cancelling tasks, waiting for the executor to complete shutdown, and closing the loop. It automates standard actions for asyncio apps, eliminating the need to write boilerplate code. The package also offers error handling options and tools for specific scenarios like TCP server startup and smart shield for shutdown.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

airflow-ai-sdk

This repository contains an SDK for working with LLMs from Apache Airflow, based on Pydantic AI. It allows users to call LLMs and orchestrate agent calls directly within their Airflow pipelines using decorator-based tasks. The SDK leverages the familiar Airflow `@task` syntax with extensions like `@task.llm`, `@task.llm_branch`, and `@task.agent`. Users can define tasks that call language models, orchestrate multi-step AI reasoning, change the control flow of a DAG based on LLM output, and support various models in the Pydantic AI library. The SDK is designed to integrate LLM workflows into Airflow pipelines, from simple LLM calls to complex agentic workflows.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

mentals-ai

Mentals AI is a tool designed for creating and operating agents that feature loops, memory, and various tools, all through straightforward markdown syntax. This tool enables you to concentrate solely on the agent’s logic, eliminating the necessity to compose underlying code in Python or any other language. It redefines the foundational frameworks for future AI applications by allowing the creation of agents with recursive decision-making processes, integration of reasoning frameworks, and control flow expressed in natural language. Key concepts include instructions with prompts and references, working memory for context, short-term memory for storing intermediate results, and control flow from strings to algorithms. The tool provides a set of native tools for message output, user input, file handling, Python interpreter, Bash commands, and short-term memory. The roadmap includes features like a web UI, vector database tools, agent's experience, and tools for image generation and browsing. The idea behind Mentals AI originated from studies on psychoanalysis executive functions and aims to integrate 'System 1' (cognitive executor) with 'System 2' (central executive) to create more sophisticated agents.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

Tools4AI

Tools4AI is a Java-based Agentic Framework for building AI agents to integrate with enterprise Java applications. It enables the conversion of natural language prompts into actionable behaviors, streamlining user interactions with complex systems. By leveraging AI capabilities, it enhances productivity and innovation across diverse applications. The framework allows for seamless integration of AI with various systems, such as customer service applications, to interpret user requests, trigger actions, and streamline workflows. Prompt prediction anticipates user actions based on input prompts, enhancing user experience by proactively suggesting relevant actions or services based on context.

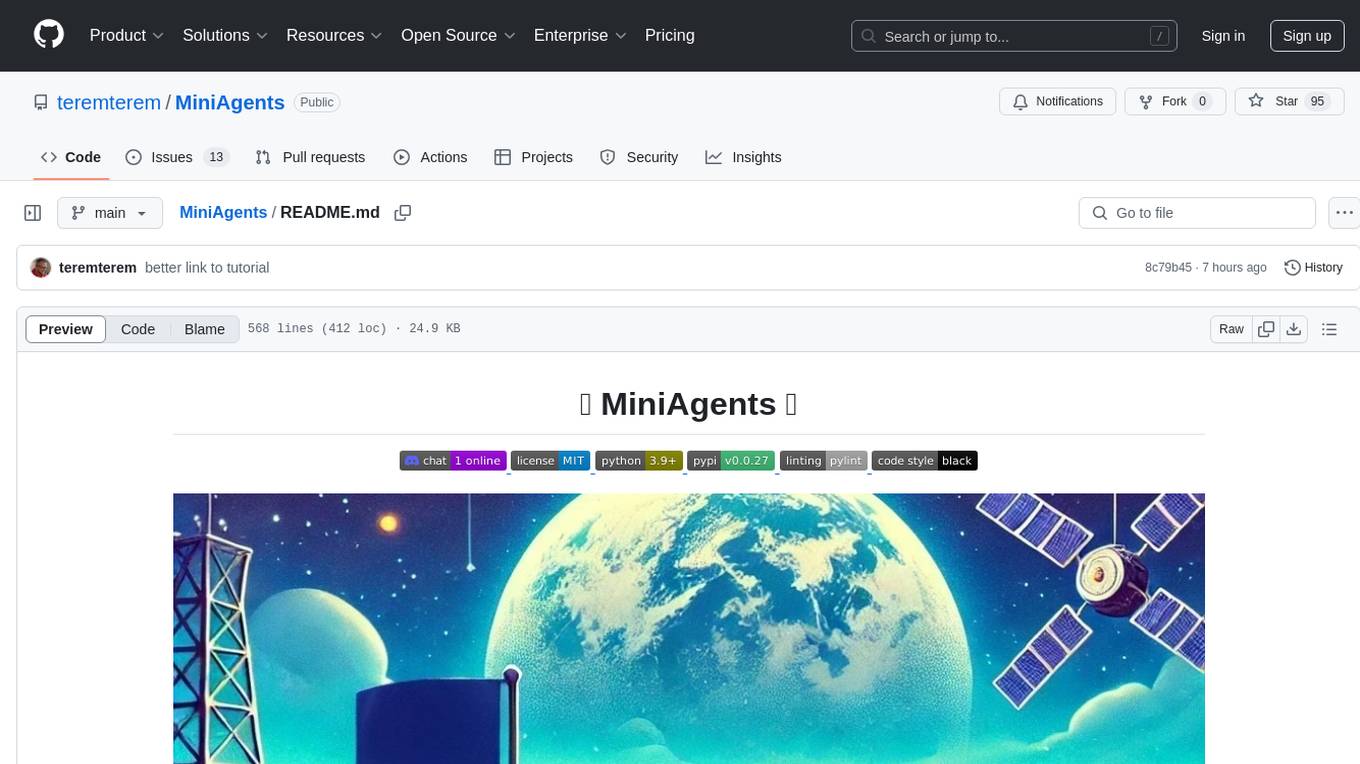

openai-agents-python

The OpenAI Agents SDK is a lightweight framework for building multi-agent workflows. It includes concepts like Agents, Handoffs, Guardrails, and Tracing to facilitate the creation and management of agents. The SDK is compatible with any model providers supporting the OpenAI Chat Completions API format. It offers flexibility in modeling various LLM workflows and provides automatic tracing for easy tracking and debugging of agent behavior. The SDK is designed for developers to create deterministic flows, iterative loops, and more complex workflows.

fabrice-ai

A lightweight, functional, and composable framework for building AI agents that work together to solve complex tasks. Built with TypeScript and designed to be serverless-ready. Fabrice embraces functional programming principles, remains stateless, and stays focused on composability. It provides core concepts like easy teamwork creation, infrastructure-agnosticism, statelessness, and includes all tools and features needed to build AI teams. Agents are specialized workers with specific roles and capabilities, able to call tools and complete tasks. Workflows define how agents collaborate to achieve a goal, with workflow states representing the current state of the workflow. Providers handle requests to the LLM and responses. Tools extend agent capabilities by providing concrete actions they can perform. Execution involves running the workflow to completion, with options for custom execution and BDD testing.

Hurley-AI

Hurley AI is a next-gen framework for developing intelligent agents through Retrieval-Augmented Generation. It enables easy creation of custom AI assistants and agents, supports various agent types, and includes pre-built tools for domains like finance and legal. Hurley AI integrates with LLM inference services and provides observability with Arize Phoenix. Users can create Hurley RAG tools with a single line of code and customize agents with specific instructions. The tool also offers various helper functions to connect with Hurley RAG and search tools, along with pre-built tools for tasks like summarizing text, rephrasing text, understanding memecoins, and querying databases.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

garak

Garak is a free tool that checks whether a Large Language Model (LLM) can be made to fail in undesirable ways. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Its developers are always interested in adding functionality to support more applications.

tonic_validate

Tonic Validate is a framework for evaluating LLM outputs, such as those of Retrieval-Augmented Generation (RAG) pipelines. It makes it easy to evaluate, track, and monitor LLM and RAG applications using its provided metrics, which measure everything from answer correctness to LLM hallucination. An optional UI visualizes evaluation results for easy tracking and monitoring.

For similar tasks

MiniAgents

MiniAgents is an open-source Python framework designed to simplify the creation of multi-agent AI systems. Its parallelism-focused, async-first design lets users concentrate on building intelligent agents while the framework handles the concurrency challenges. Built on asyncio, it supports LLM-based applications with immutable messages and seamless asynchronous token and message streaming between agents.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability, it provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create cloud-native agents, edge-native agents, and hybrid models. It is extensible enough that other ML models can be combined into complex pipelines using its actor framework.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

hello-agents

Hello-Agents is a comprehensive tutorial on building intelligent agent systems, covering both theoretical foundations and practical applications. The tutorial aims to guide users in understanding and building AI-native agents, diving deep into core principles, architectures, and paradigms of intelligent agents. Users will learn to develop their own multi-agent applications from scratch, gaining hands-on experience with popular low-code platforms and agent frameworks. The tutorial also covers advanced topics such as memory systems, context engineering, communication protocols, and model training. By the end of the tutorial, users will have the skills to develop real-world projects like intelligent travel assistants and cyber towns.

EverMemOS

EverMemOS is an AI memory system that enables AI to not only remember past events but also understand the meaning behind memories and use them to guide decisions. It achieves 93% reasoning accuracy on the LoCoMo benchmark by providing long-term memory capabilities for conversational AI agents through structured extraction, intelligent retrieval, and progressive profile building. The tool is production-ready with support for Milvus vector DB, Elasticsearch, MongoDB, and Redis, and offers easy integration via a simple REST API. Users can store and retrieve memories using Python code and benefit from features like multi-modal memory storage, smart retrieval mechanisms, and advanced techniques for memory management.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Example use cases include:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries, and caching
* Distribute API keys with rate limits and cost limits for internal development and production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.