ai2-scholarqa-lib

Repo housing the open sourced code for the ai2 scholar qa app and also the corresponding library

Stars: 142

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

README:

This repo houses the code for the live demo and can be run as local docker containers or embedded into another application as a python package.

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across our corpus and synthesizing an organized report with evidence for each claim. As a RAG based architecture, Ai2 Scholar QA has a retrieval component and a three step generator pipeline.


The retrieval component consists of two sub-components:

i. Retriever - Based on the user query, relevant evidence passages are fetched using the Semantic Scholar public API's snippet/search endpoint, which looks up an index of open source papers. We also use the API's keyword search to supplement the results from the index with paper abstracts. The user query is preprocessed to extract entities for filtering the papers and to rewrite the query as needed. Prompt

ii. Reranker - The results from the retriever are then reranked with mixedbread-ai/mxbai-rerank-large-v1 and top k results are retained and aggregated at the paper-level to combine all the passages from a single paper.

These components are encapsulated in the PaperFinder class.
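The rerank-then-aggregate step described above can be sketched in a few lines. This is a minimal illustration, not the PaperFinder implementation: the function name `aggregate_top_k`, the passage dictionaries and the hard-coded scores are all assumptions, and in the real system the scores would come from the reranker model.

```python
from collections import defaultdict


def aggregate_top_k(passages, scores, k):
    """Keep the k highest-scoring passages, then group them by paper."""
    ranked = sorted(zip(passages, scores), key=lambda pair: pair[1], reverse=True)[:k]
    by_paper = defaultdict(list)
    for passage, score in ranked:
        by_paper[passage["paper_id"]].append((score, passage["text"]))
    # One record per paper: all retained passages joined, plus the best score.
    return [
        {
            "paper_id": paper_id,
            "text": "\n".join(text for _, text in items),
            "score": max(score for score, _ in items),
        }
        for paper_id, items in by_paper.items()
    ]


# Hypothetical passages and reranker scores, for illustration only.
passages = [
    {"paper_id": "p1", "text": "A"},
    {"paper_id": "p2", "text": "B"},
    {"paper_id": "p1", "text": "C"},
    {"paper_id": "p3", "text": "D"},
]
scores = [0.9, 0.2, 0.7, 0.4]
papers = aggregate_top_k(passages, scores, k=3)
```

With `k=3` the lowest-scoring passage is dropped, and the two surviving passages from paper `p1` are merged into a single record.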


The generation pipeline comprises three steps:

i. Quote Extraction - The user query, along with the aggregated passages from the retrieval component, is sent to an LLM (Claude 3.5 Sonnet by default) to extract exact quotes relevant to answering the query. Prompt

ii. Planning and Clustering - The LLM is then prompted to generate an organization of the output report, with section headings and the format of each section. The quotes from step (i) are clustered and assigned to each heading. Prompt

iii. Summary Generation - Each section is generated based on the quotes assigned to that section and all the prior text generated in the report. Prompt

These steps are encapsulated in the MultiStepQAPipeline class. For some sections, we also generate literature review tables that compare and contrast all papers referenced in that section. We generate these tables using the pipeline proposed by the ArxivDIGESTables paper, which is available here.

Both the PaperFinder and MultiStepQAPipeline are in turn members of ScholarQA, which is the main class powering our system.
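To make the data flow between the three generation steps concrete, here is a toy sketch. It does not call an LLM and does not use the MultiStepQAPipeline API: `answer`, `toy_extract`, `toy_plan` and `toy_write` are hypothetical stand-ins that only mirror the order of the steps and what each one consumes.

```python
def answer(query, papers, extract_quotes, plan_sections, write_section):
    """Run quote extraction, planning/clustering and section generation in order."""
    # Step 1: extract quotes per paper and drop papers with nothing relevant.
    quotes = {}
    for paper_id, text in papers.items():
        quote = extract_quotes(query, text)
        if quote:
            quotes[paper_id] = quote
    # Step 2: the plan maps each section heading to the papers assigned to it.
    plan = plan_sections(query, quotes)
    # Step 3: each section sees its own quotes and all previously written text.
    report = []
    for heading, paper_ids in plan.items():
        section_quotes = [quotes[pid] for pid in paper_ids]
        report.append(write_section(query, heading, section_quotes, list(report)))
    return report


# Toy stand-ins for the three LLM calls (assumptions, for illustration only).
def toy_extract(query, text):
    return text if query.lower() in text.lower() else ""


def toy_plan(query, quotes):
    return {"Overview": sorted(quotes)}


def toy_write(query, heading, section_quotes, prior_sections):
    return f"{heading} ({len(prior_sections)} prior sections): " + " ".join(section_quotes)


report = answer(
    "rerank",
    {"p1": "Reranking helps.", "p2": "Unrelated."},
    toy_extract,
    toy_plan,
    toy_write,
)
```

Passing the already generated sections into each `write_section` call reflects the description above, where every section is conditioned on the prior text of the report.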

For more info please refer to our blogpost.

Environment Variables

Ai2 Scholar QA requires the Semantic Scholar API and LLMs for its core functionality of retrieval and generation. Please create a .env file in the root directory with the following environment variables:

export S2_API_KEY=

export ANTHROPIC_API_KEY=

export OPENAI_API_KEY=

S2_API_KEY : Used to retrieve the relevant paper passages, keyword search results and associated metadata via the Semantic Scholar public API.

ANTHROPIC_API_KEY : Ai2 Scholar QA uses Anthropic's Claude 3.5 Sonnet as the primary LLM for generation, but any model served by litellm should work. Please configure the corresponding api key here.

OPENAI_API_KEY: OpenAI's GPT 4o is configured as the fallback llm.

Note: We also use OpenAI's text moderation api to validate and filter harmful queries. If you don't have access to an OpenAI api key, this feature will be disabled.

If you use Modal to serve your models, please configure MODAL_TOKEN and MODAL_TOKEN_SECRET here as well.
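A quick pre-flight check can catch a missing key before the pipeline starts. The sketch below is an assumption about how one might validate the environment, not part of the library: `check_env` is a hypothetical helper, and treating ANTHROPIC_API_KEY as required only holds if Claude is the configured model.

```python
import os

# Assumption: the default setup (Semantic Scholar retrieval + Claude generation).
REQUIRED = ("S2_API_KEY", "ANTHROPIC_API_KEY")
# Fallback LLM and moderation; the app still runs without it.
OPTIONAL = ("OPENAI_API_KEY",)


def check_env(env=None):
    """Raise if a required key is missing; report which optional keys are set."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: bool(env.get(name)) for name in OPTIONAL}


# Usage with an explicit mapping instead of the real environment.
optional_status = check_env({"S2_API_KEY": "s2-key", "ANTHROPIC_API_KEY": "claude-key"})
```

Here `optional_status` reports that OPENAI_API_KEY is unset, which per the note above means query moderation would be disabled.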

Please refer to default.json for the default runtime config.

    {
      "logs": {
        "log_dir": "logs",
        "llm_cache_dir": "llm_cache",
        "event_trace_loc": "scholarqa_traces",
        "tracing_mode": "local"
      },
      "run_config": {
        "retrieval_service": "public_api",
        "retriever_args": {
          "n_retrieval": 256,
          "n_keyword_srch": 20
        },
        "reranker_service": "modal",
        "reranker_args": {
          "app_name": "ai2-scholar-qa",
          "api_name": "inference_api",
          "batch_size": 256,
          "gen_options": {}
        },
        "paper_finder_args": {
          "n_rerank": 50,
          "context_threshold": 0.5
        },
        "pipeline_args": {
          "validate": true,
          "llm": "anthropic/claude-3-5-sonnet-20241022",
          "decomposer_llm": "anthropic/claude-3-5-sonnet-20241022"
        }
      }
    }

The config is used to populate the AppConfig instance:

Logging

    from typing import Literal

    from pydantic import BaseModel, Field


    class LogsConfig(BaseModel):
        log_dir: str = Field(default="logs", description="Directory to store logs, event traces and litellm cache")
        llm_cache_dir: str = Field(default="llm_cache", description="Sub directory to cache llm calls")
        event_trace_loc: str = Field(default="scholarqa_traces",
                                     description="Sub directory to store event traces "
                                                 "OR the GCS bucket name")
        tracing_mode: Literal["local", "gcs"] = Field(default="local",
                                                      description="Mode to store event traces (local or gcs)")

Note:

i. Event Traces are json documents containing a trace of the entire pipeline i.e. the results of retrieval, reranking, each step of the qa pipeline and associated costs, if any.

ii. llm_cache_dir is used to initialize the local disk cache for caching llm calls via litellm.

iii. The traces are stored locally in {log_dir}/{event_trace_loc} by default. They can also be persisted in a Google Cloud Storage (GCS) bucket. To do so, set tracing_mode="gcs" and event_trace_loc=<GCS bucket name> in the config, and add export GOOGLE_APPLICATION_CREDENTIALS=<Service Account Key json file path> to .env.

iv. By default, the working directory is ./api , so the log_dir will be created inside it as a sub-directory unless the config is modified.
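The interaction between log_dir, event_trace_loc and tracing_mode in the notes above can be summarized with a small helper. This is a sketch of the documented behaviour only: `trace_location` is a hypothetical function, and the `gs://` form is simply the conventional way to write a GCS bucket URI, not something taken from the library.

```python
from pathlib import PurePosixPath


def trace_location(log_dir, event_trace_loc, tracing_mode="local"):
    """Return where event traces would be written for a given logs config."""
    if tracing_mode == "local":
        # Local traces live in a sub directory of log_dir.
        return str(PurePosixPath(log_dir) / event_trace_loc)
    if tracing_mode == "gcs":
        # In gcs mode event_trace_loc is interpreted as the bucket name.
        return f"gs://{event_trace_loc}"
    raise ValueError(f"Unsupported tracing_mode: {tracing_mode!r}")


local_target = trace_location("logs", "scholarqa_traces")
gcs_target = trace_location("logs", "my-trace-bucket", tracing_mode="gcs")
```

Note that the same `event_trace_loc` field changes meaning with the mode: a sub directory name locally, a bucket name for GCS.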

You can also activate Langsmith based log traces if you have an api key configured. Please add the following environment variables:

LANGCHAIN_API_KEY

LANGCHAIN_TRACING_V2

LANGCHAIN_ENDPOINT

LANGCHAIN_PROJECT

Pipeline

    class RunConfig(BaseModel):
        retrieval_service: str = Field(default="public_api", description="Service to use for paper retrieval")
        retriever_args: dict = Field(default=None, description="Arguments for the retrieval service")
        reranker_service: str = Field(default="modal", description="Service to use for paper reranking")
        reranker_args: dict = Field(default=None, description="Arguments for the reranker service")
        paper_finder_args: dict = Field(default=None, description="Arguments for the paper finder service")
        pipeline_args: dict = Field(default=None, description="Arguments for the Scholar QA pipeline service")

Note:

i. *(retrieval, reranker)_service can be used to indicate the type of retrieval/reranker you want to instantiate. Ai2 Scholar QA uses the FullTextRetriever and ModalReranker respectively, which are chosen based on the default public_api and modal keywords. To choose a SentenceTransformers reranker, replace modal with cross_encoder or biencoder, or define your own types.

ii. *(retriever, reranker, paper_finder, pipeline)_args are used to initialize the corresponding instances of the pipeline components, e.g. retriever = FullTextRetriever(**run_config.retriever_args). You can initialize multiple runs and customize your pipeline.

iii. If the reranker_args are not defined, the app resorts to using only the retrieval service.
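The keyword-based selection and the fallback to retrieval-only described in the notes above could look roughly like this. The sketch uses toy dataclasses in place of the real FullTextRetriever and reranker classes; `build_components`, the registries and the `model_name_or_path` argument are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRetriever:
    n_retrieval: int = 256
    n_keyword_srch: int = 20


@dataclass
class ToyModalReranker:
    app_name: str
    api_name: str
    batch_size: int = 256
    gen_options: dict = field(default_factory=dict)


@dataclass
class ToyCrossEncoderReranker:
    model_name_or_path: str  # hypothetical argument name
    batch_size: int = 32


# Service keyword -> class, mirroring the *_service fields of the run config.
RETRIEVERS = {"public_api": ToyRetriever}
RERANKERS = {"modal": ToyModalReranker, "cross_encoder": ToyCrossEncoderReranker}


def build_components(run_config):
    """Instantiate retriever and (optionally) reranker from a run_config dict."""
    retriever_cls = RETRIEVERS[run_config.get("retrieval_service", "public_api")]
    retriever = retriever_cls(**(run_config.get("retriever_args") or {}))
    reranker_args = run_config.get("reranker_args")
    if not reranker_args:
        # No reranker arguments: fall back to retrieval only.
        return retriever, None
    reranker_cls = RERANKERS[run_config.get("reranker_service", "modal")]
    return retriever, reranker_cls(**reranker_args)


retriever, reranker = build_components({
    "retriever_args": {"n_retrieval": 128},
    "reranker_service": "cross_encoder",
    "reranker_args": {"model_name_or_path": "mixedbread-ai/mxbai-rerank-large-v1"},
})
retrieval_only = build_components({"retriever_args": {"n_retrieval": 64}})
```

A registry keyed by service name keeps the config file declarative: adding your own reranker type only requires registering one more class under a new keyword.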

The web app initializes 4 docker containers, one each for the API, GUI, nginx proxy and sonar, each with its own Dockerfile. The api container config can also be used to declare environment variables:

    api:
      build: ./api
      volumes:
        - ./api:/api
        - ./secret:/secret
      environment:
        # This ensures that errors are printed as they occur, which
        # makes debugging easier.
        - PYTHONUNBUFFERED=1
        - LOG_LEVEL=INFO
        - CONFIG_PATH=run_configs/default.json
      ports:
        - 8000:8000
      env_file:
        - .env

environment.CONFIG_PATH indicates the path of the application configuration json file.

env_file indicates the path of the file with environment variables.
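As an illustration of how CONFIG_PATH ties the container environment to the JSON config, the sketch below reads the path from an environment mapping and fills in the documented logging defaults. It uses only the standard library; `load_app_config` and `DEFAULT_LOGS` are hypothetical names, and the real application populates its AppConfig model instead.

```python
import json
import os
import tempfile

# Defaults copied from the logs section of default.json shown earlier.
DEFAULT_LOGS = {
    "log_dir": "logs",
    "llm_cache_dir": "llm_cache",
    "event_trace_loc": "scholarqa_traces",
    "tracing_mode": "local",
}


def load_app_config(env=None):
    """Load the JSON file named by CONFIG_PATH and apply logging defaults."""
    env = os.environ if env is None else env
    path = env.get("CONFIG_PATH", "run_configs/default.json")
    with open(path, encoding="utf-8") as handle:
        raw = json.load(handle)
    return {
        "logs": {**DEFAULT_LOGS, **raw.get("logs", {})},
        "run_config": raw.get("run_config", {}),
    }


# Usage: write a small custom config to a temporary file and load it.
with tempfile.TemporaryDirectory() as tmp_dir:
    config_path = os.path.join(tmp_dir, "custom.json")
    with open(config_path, "w", encoding="utf-8") as handle:
        json.dump(
            {"logs": {"tracing_mode": "gcs"},
             "run_config": {"reranker_service": "cross_encoder"}},
            handle,
        )
    config = load_app_config({"CONFIG_PATH": config_path})
```

Pointing CONFIG_PATH at a different file in the compose environment is then enough to switch run configurations without rebuilding the image.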

Please refer to DOCKER.md for more info on setting up the docker app.

i. Clone the repo

    git clone git@github.com:allenai/ai2-scholarqa-lib.git
    cd ai2-scholarqa-lib

ii. Run docker-compose

    docker compose up --build

The docker compose command takes a while to run the first time to install torch and related dependencies. You can get the verbose output with the following command:

    docker compose build --progress plain

https://github.com/user-attachments/assets/7d6761d6-1e95-4dac-9aeb-a5a898a89fbe

https://github.com/user-attachments/assets/baed8710-2161-4fbf-b713-3a2dcf46ac61

https://github.com/user-attachments/assets/f9a1b39f-36c8-41c4-a0ac-10046ded0593

The Ai2 Scholar QA UI is powered by an async api at the back end in app.py which is run from dev.sh.

i. The query_corpusqa end point is first called with the query and a uuid as the user_id, and it returns a task_id.

ii. Subsequently, the query_corpusqa end point is polled to get the updated status of the async task until the task status is COMPLETED.
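The submit-then-poll pattern described above can be sketched without any network code. Everything here is illustrative: `poll_until_complete` is a hypothetical helper, `fake_fetch` stands in for an HTTP call to the end point, and the `task_status` field name is an assumption about the response shape.

```python
import time


def poll_until_complete(fetch_status, task_id, interval=0.0, max_polls=50):
    """Call fetch_status(task_id) until the task reports COMPLETED."""
    for _ in range(max_polls):
        response = fetch_status(task_id)
        if response.get("task_status") == "COMPLETED":
            return response
        time.sleep(interval)
    raise TimeoutError(f"Task {task_id} did not complete after {max_polls} polls")


# Fake backend that reports progress on successive calls.
_statuses = iter(["STARTED", "IN_PROGRESS", "COMPLETED"])


def fake_fetch(task_id):
    return {"task_id": task_id, "task_status": next(_statuses)}


result = poll_until_complete(fake_fetch, "task-123")
```

In a real client `fetch_status` would wrap the HTTP request and `interval` would be a second or more; the `max_polls` cap keeps a stuck task from blocking the caller forever.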

    conda create -n scholarqa python=3.11.3
    conda activate scholarqa
    pip install ai2-scholar-qa

    # to use sentence transformer models as re-ranker
    pip install 'ai2-scholar-qa[all]'

Both the webapp and the api are powered by the same pipeline, represented by the ScholarQA class. The pipeline consists of a retrieval component, the PaperFinder, which consists of a retriever and optionally a reranker, and a 3 step generator component, the MultiStepQAPipeline. Each component is extensible and can be replaced by custom instances/classes as required.

Sample usage

    from scholarqa.rag.reranker.modal_engine import ModalReranker
    from scholarqa.rag.retrieval import PaperFinderWithReranker
    from scholarqa.rag.retriever_base import FullTextRetriever
    from scholarqa import ScholarQA
    from scholarqa.llms.constants import CLAUDE_35_SONNET

    retriever = FullTextRetriever(n_retrieval=256, n_keyword_srch=20)
    # Replace the placeholder strings with your own Modal app and api names
    reranker = ModalReranker(app_name="<modal_app_name>", api_name="<modal_api_name>", batch_size=256, gen_options=dict())
    paper_finder = PaperFinderWithReranker(retriever, reranker, n_rerank=50, context_threshold=0.5)

    # For wrapper class with MultiStepQAPipeline integrated
    scholar_qa = ScholarQA(paper_finder=paper_finder, llm_model=CLAUDE_35_SONNET)  # llm_model can be any litellm model
    print(scholar_qa.answer_query("Which is the 9th planet in our solar system?"))

    # Custom MultiStepQAPipeline class/steps
    # query, scored_df and sys_prompt are assumed to be defined by the caller
    from scholarqa.rag.multi_step_qa_pipeline import MultiStepQAPipeline

    mqa_pipeline = MultiStepQAPipeline(llm_model=CLAUDE_35_SONNET)
    per_paper_summaries, completion_results = mqa_pipeline.step_select_quotes(query, scored_df, sys_prompt)
    plan_json = mqa_pipeline.step_clustering(query, per_paper_summaries, sys_prompt)
    # Called on the pipeline instance, like the two steps above
    response = list(mqa_pipeline.generate_iterative_summary(query, per_paper_summaries, plan_json, sys_prompt))

The api end points in app.py can be extended with a fastapi APIRouter in another script, e.g. custom_app.py:

    from fastapi import APIRouter, FastAPI
    from scholarqa.app import create_app as create_app_base


    def create_app() -> FastAPI:
        app = create_app_base()
        custom_router = APIRouter()

        @custom_router.post("/custom")
        def custom_endpt():
            pass

        app.include_router(custom_router)
        return app

To run custom_app.py, simply replace scholarqa.app:create_app in dev.sh with <package>.custom_app:create_app.

To extend the existing ScholarQA functionality in a new class, you can either create a subclass of ScholarQA or a new class altogether. Either way, lazy_load_scholarqa in app.py should be reimplemented in the new api script to ensure the correct class is initialized.

The components of the pipeline are individually extensible. We have the following abstract classes that can be extended to achieve desired customization for retrieval:

and the MultiStepQAPipeline can be extended/modified as needed for generation.


If you would prefer to serve your models via modal, please refer to MODAL.md for more info and sample code that we used to deploy the reranker model in the live demo.


Alternative AI tools for ai2-scholarqa-lib

Similar Open Source Tools


neo4j-graphrag-python

The Neo4j GraphRAG package for Python is an official repository that provides features for creating and managing vector indexes in Neo4j databases. It aims to offer developers a reliable package with long-term commitment, maintenance, and fast feature updates. The package supports various Python versions and includes functionalities for creating vector indexes, populating them, and performing similarity searches. It also provides guidelines for installation, examples, and development processes such as installing dependencies, making changes, and running tests.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

cortex

Cortex is a tool that simplifies and accelerates the process of creating applications utilizing modern AI models like chatGPT and GPT-4. It provides a structured interface (GraphQL or REST) to a prompt execution environment, enabling complex augmented prompting and abstracting away model connection complexities like input chunking, rate limiting, output formatting, caching, and error handling. Cortex offers a solution to challenges faced when using AI models, providing a simple package for interacting with NL AI models.

web-llm

WebLLM is a modular and customizable javascript package that directly brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

LongRAG

This repository contains the code for LongRAG, a framework that enhances retrieval-augmented generation with long-context LLMs. LongRAG introduces a 'long retriever' and a 'long reader' to improve performance by using a 4K-token retrieval unit, offering insights into combining RAG with long-context LLMs. The repo provides instructions for installation, quick start, corpus preparation, long retriever, and long reader.

semantic-cache

Semantic Cache is a tool for caching natural text based on semantic similarity. It allows for classifying text into categories, caching AI responses, and reducing API latency by responding to similar queries with cached values. The tool stores cache entries by meaning, handles synonyms, supports multiple languages, understands complex queries, and offers easy integration with Node.js applications. Users can set a custom proximity threshold for filtering results. The tool is ideal for tasks involving querying or retrieving information based on meaning, such as natural language classification or caching AI responses.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

MiniAgents

MiniAgents is an open-source Python framework designed to simplify the creation of multi-agent AI systems. It offers a parallelism and async-first design, allowing users to focus on building intelligent agents while handling concurrency challenges. The framework, built on asyncio, supports LLM-based applications with immutable messages and seamless asynchronous token and message streaming between agents.

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

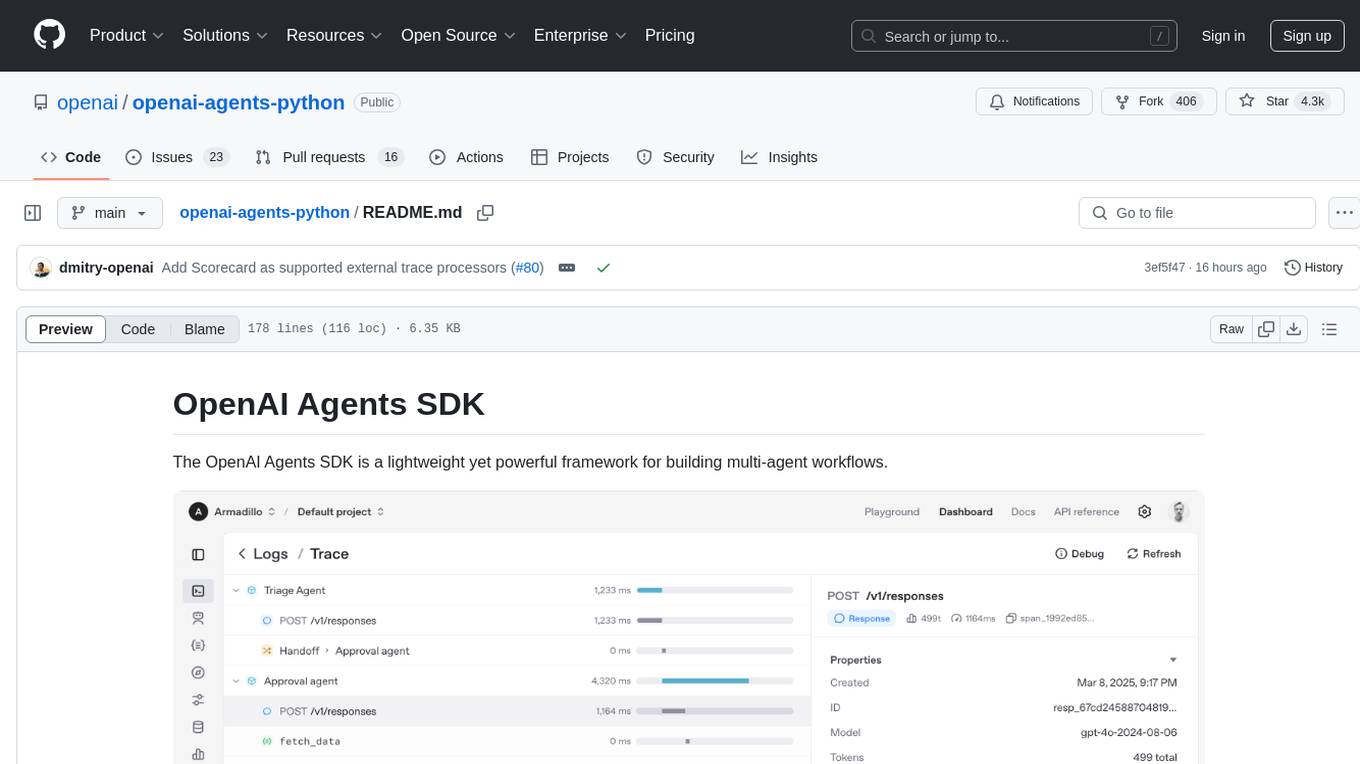

openai-agents-python

The OpenAI Agents SDK is a lightweight framework for building multi-agent workflows. It includes concepts like Agents, Handoffs, Guardrails, and Tracing to facilitate the creation and management of agents. The SDK is compatible with any model providers supporting the OpenAI Chat Completions API format. It offers flexibility in modeling various LLM workflows and provides automatic tracing for easy tracking and debugging of agent behavior. The SDK is designed for developers to create deterministic flows, iterative loops, and more complex workflows.

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

mflux

MFLUX is a line-by-line port of the FLUX implementation in the Huggingface Diffusers library to Apple MLX. It aims to run powerful FLUX models from Black Forest Labs locally on Mac machines. The codebase is minimal and explicit, prioritizing readability over generality and performance. Models are implemented from scratch in MLX, with tokenizers from the Huggingface Transformers library. Dependencies include Numpy and Pillow for image post-processing. Installation can be done using `uv tool` or classic virtual environment setup. Command-line arguments allow for image generation with specified models, prompts, and optional parameters. Quantization options for speed and memory reduction are available. LoRA adapters can be loaded for fine-tuning image generation. Controlnet support provides more control over image generation with reference images. Current limitations include generating images one by one, lack of support for negative prompts, and some LoRA adapters not working.

For similar tasks

basdonax-ai-rag

Basdonax AI RAG v1.0 is a repository that contains all the necessary resources to create your own AI-powered secretary using the RAG from Basdonax AI. It leverages open-source models from Meta and Microsoft, namely 'Llama3-7b' and 'Phi3-4b', allowing users to upload documents and make queries. This tool aims to simplify life for individuals by harnessing the power of AI. The installation process involves choosing between different data models based on GPU capabilities, setting up Docker, pulling the desired model, and customizing the assistant prompt file. Once installed, users can access the RAG through a local link and enjoy its functionalities.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

awesome-agents

Awesome Agents is a curated list of open source AI agents designed for various tasks such as private interactions with documents, chat implementations, autonomous research, human-behavior simulation, code generation, HR queries, domain-specific research, and more. The agents leverage Large Language Models (LLMs) and other generative AI technologies to provide solutions for complex tasks and projects. The repository includes a diverse range of agents for different use cases, from conversational chatbots to AI coding engines, and from autonomous HR assistants to vision task solvers.

Lumi-AI

Lumi AI is a friendly AI sidekick with a human-like personality that offers features like file upload and analysis, web search, local chat storage, custom instructions, changeable conversational style, enhanced context retention, voice query input, and various tools. The project has been developed with contributions from a team of developers, designers, and testers, and is licensed under Apache 2.0 and MIT licenses.

awesome-rag

Awesome RAG is a curated list of retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to retrieval-augmented generation. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field of RAG.


baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

For similar jobs

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

MMMU

MMMU is a benchmark designed to evaluate multimodal models on college-level subject knowledge tasks, covering 30 subjects and 183 subfields with 11.5K questions. It focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. The evaluation of various models highlights substantial challenges, with room for improvement to stimulate the community towards expert artificial general intelligence (AGI).

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.

ChatTTS

ChatTTS is a generative speech model optimized for dialogue scenarios, providing natural and expressive speech synthesis with fine-grained control over prosodic features. It supports multiple speakers and surpasses most open-source TTS models in terms of prosody. The model is trained with 100,000+ hours of Chinese and English audio data, and the open-source version on HuggingFace is a 40,000-hour pre-trained model without SFT. The roadmap includes open-sourcing additional features like VQ encoder, multi-emotion control, and streaming audio generation. The tool is intended for academic and research use only, with precautions taken to limit potential misuse.

HebTTS

HebTTS is a language modeling approach to diacritic-free Hebrew text-to-speech (TTS) system. It addresses the challenge of accurately mapping text to speech in Hebrew by proposing a language model that operates on discrete speech representations and is conditioned on a word-piece tokenizer. The system is optimized using weakly supervised recordings and outperforms diacritic-based Hebrew TTS systems in terms of content preservation and naturalness of generated speech.

do-research-in-AI

This repository is a collection of research lectures and experience sharing posts from frontline researchers in the field of AI. It aims to help individuals upgrade their research skills and knowledge through insightful talks and experiences shared by experts. The content covers various topics such as evaluating research papers, choosing research directions, research methodologies, and tips for writing high-quality scientific papers. The repository also includes discussions on academic career paths, research ethics, and the emotional aspects of research work. Overall, it serves as a valuable resource for individuals interested in advancing their research capabilities in the field of AI.