LongRAG

Official repo for "LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs".

Stars: 103

This repository contains the code for LongRAG, a framework that enhances retrieval-augmented generation with long-context LLMs. LongRAG introduces a 'long retriever' and a 'long reader' to improve performance by using a 4K-token retrieval unit, offering insights into combining RAG with long-context LLMs. The repo provides instructions for installation, quick start, corpus preparation, long retriever, and long reader.

README:

This repo contains the code for "LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs". We are still polishing the repo.

- Introduction

- Installation

- Quick Start

- Corpus Preparation (Optional)

- Long Retriever

- Long Reader

- License

- Citation

In the traditional RAG framework, the basic retrieval units are normally short. Such a design forces the retriever to search over a large corpus to find the "needle" unit, while the reader only needs to extract answers from the short retrieved units. This imbalanced design, with a heavy retriever and a light reader, can lead to sub-optimal performance. We propose a new framework, LongRAG, consisting of a "long retriever" and a "long reader". Our framework uses a 4K-token retrieval unit, which is 30x longer than before. Our study offers insights into the future roadmap for combining RAG with long-context LLMs.

Clone this repository and install the required packages:

git clone https://github.com/TIGER-AI-Lab/LongRAG.git

cd LongRAG

pip install -r requirements.txt

Please go to the "Long Reader" section and follow the instructions. This will help you get the final prediction for 100 examples.

The output will be similar to our sample files in the exp/ directory.

This is an optional step. You can use our processed corpus directly. We have released two versions of the retrieval corpus for NQ and HotpotQA on Hugging Face.

from datasets import load_dataset

corpus_nq = load_dataset("TIGER-Lab/LongRAG", "nq_corpus")

corpus_hotpotqa = load_dataset("TIGER-Lab/LongRAG", "hotpot_qa_corpus")

If you are still interested in how we craft the corpus, you can start reading here.

Clean the raw Wikipedia data: We first clean the raw Wikipedia data following the standard process, using WikiExtractor, a widely used Python script that extracts and cleans text from a Wikipedia database backup dump. Please ensure you use the required Python environment. A sample script is:

sh scripts/extract_and_clean_wiki_dump.sh

Preprocess Wikipedia data: After cleaning the Wikipedia raw data, run the following script to gather more information.

sh scripts/process_wiki_page.sh

- dir_path: The directory path of the cleaned Wikipedia dump, which is the output of the previous step.

- output_path_dir: The output directory will contain several pickle files, each representing a dictionary for the Wikipedia page.
  - degree.pickle: The key is the Wikipedia page title, and the value is the number of hyperlinks.
  - abs_adj.pickle: The key is the Wikipedia page title, and the value is the linked page in the abstract paragraph.
  - full_adj.pickle: The key is the Wikipedia page title, and the value is the linked page in the entire page.
  - doc_size.pickle: The key is the Wikipedia page title, and the value is the number of tokens on that page.
  - doc_dict.pickle: The key is the Wikipedia page title, and the value is the text of the page.

- corpus_title_path: The key is used to filter the NQ dataset. In the original DPR paper, certain Wikipedia pages, such as list pages and disambiguation pages, were removed, reducing the total number of Wikipedia pages from 5 million to 3 million. For a fair comparison, we also chose to exclude these pages. (For HotpotQA, we did not remove any pages, so the number of Wikipedia pages remains at 5 million.) You can download the DPR's titles from this link.
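To make the layout of these pickle files concrete, here is a minimal sketch that builds the five title-keyed dictionaries from a few toy page records and writes them to disk. The record fields (`title`, `text`, `abstract_links`, `all_links`), the helper names, and the whitespace token count are assumptions for illustration only; they are not taken from scripts/process_wiki_page.sh.

```python
import pickle
from pathlib import Path

# Hypothetical page records; the field names are assumptions for illustration.
pages = [
    {"title": "Alpha", "text": "Alpha is linked to Beta and Gamma.",
     "abstract_links": ["Beta"], "all_links": ["Beta", "Gamma"]},
    {"title": "Beta", "text": "Beta is a short page.",
     "abstract_links": [], "all_links": ["Alpha"]},
    {"title": "List of things", "text": "A list page.",
     "abstract_links": [], "all_links": []},
]


def build_page_dicts(pages, allowed_titles=None):
    """Build the five title-keyed dictionaries described above.

    allowed_titles plays the role of corpus_title_path: when given, pages
    whose title is not in the set are dropped (as is done for NQ).
    """
    if allowed_titles is not None:
        pages = [p for p in pages if p["title"] in allowed_titles]
    return {
        "degree": {p["title"]: len(p["all_links"]) for p in pages},
        "abs_adj": {p["title"]: p["abstract_links"] for p in pages},
        "full_adj": {p["title"]: p["all_links"] for p in pages},
        # Whitespace splitting stands in for a real tokenizer here.
        "doc_size": {p["title"]: len(p["text"].split()) for p in pages},
        "doc_dict": {p["title"]: p["text"] for p in pages},
    }


def save_page_dicts(dicts, output_dir):
    """Write each dictionary to <output_dir>/<name>.pickle."""
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    for name, mapping in dicts.items():
        with open(output_dir / f"{name}.pickle", "wb") as f:
            pickle.dump(mapping, f)


# Keep only the titles in the allowed set, mimicking the NQ filtering step.
page_dicts = build_page_dicts(pages, allowed_titles={"Alpha", "Beta"})
```

Calling `save_page_dicts(page_dicts, "some_output_dir")` would then produce one pickle file per dictionary; pass `allowed_titles=None` to keep every page, as described for HotpotQA.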

We have provided the processed Wikipedia in our Hugging Face repo. Please check out the nq_wiki and hotpot_qa_wiki subsets for more information. You could easily derive these pickle files from these two datasets.

Retrieval Corpus: By grouping multiple related documents, we can construct long retrieval units with more than 4K tokens. This design could also significantly reduce the corpus size (number of retrieval units in the corpus). Then, the retriever’s task becomes much easier. Additionally, the long retrieval unit will also improve the information completeness to avoid ambiguity or confusion.

sh scripts/group_documents.sh

- processed_wiki_dir: The output directory of the above step.

- mode: abs is for the HotpotQA corpus, full is for the NQ corpus.

- output_dir: The output directory. It will contain several pickle files, each representing a dictionary for the retrieval corpus. The most important one is group_text.pickle, which maps the corpus ID to the corpus text. For more details, please refer to our released corpus on Hugging Face.
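The grouping idea can be sketched as a simple greedy pass over the dictionaries from the preprocessing step. This is a simplified illustration, not the algorithm in scripts/group_documents.sh: the function names, the `max_tokens` budget, and the choice to visit high-degree pages first are all assumptions.

```python
def group_documents(doc_size, adjacency, degree, max_tokens=4096):
    """Greedily pack each page with its linked pages into one retrieval unit.

    doc_size, adjacency and degree mirror doc_size.pickle, abs_adj.pickle or
    full_adj.pickle, and degree.pickle. max_tokens is a hypothetical budget.
    """
    assigned = set()
    groups = []
    # Visit well-connected pages first so they seed the groups (an assumption).
    for title in sorted(doc_size, key=lambda t: (-degree.get(t, 0), t)):
        if title in assigned:
            continue
        group, size = [title], doc_size[title]
        assigned.add(title)
        for neighbor in adjacency.get(title, []):
            # Skip pages already placed elsewhere or missing from the corpus.
            if neighbor in assigned or neighbor not in doc_size:
                continue
            # Skip neighbors that would push the unit over the budget.
            if size + doc_size[neighbor] > max_tokens:
                continue
            group.append(neighbor)
            size += doc_size[neighbor]
            assigned.add(neighbor)
        groups.append(group)
    return groups


def build_group_text(groups, doc_dict):
    """Map a corpus ID to the concatenated text of the pages in that group."""
    return {
        str(i): "\n\n".join(doc_dict[title] for title in group)
        for i, group in enumerate(groups)
    }


# Toy example with a tiny budget so the effect is visible.
doc_size = {"A": 3, "B": 2, "C": 4, "D": 1}
adjacency = {"A": ["B", "C"], "B": ["A"], "C": [], "D": []}
degree = {"A": 2, "B": 1, "C": 0, "D": 0}
doc_dict = {"A": "a text", "B": "b text", "C": "c text", "D": "d text"}

groups = group_documents(doc_size, adjacency, degree, max_tokens=5)
group_text = build_group_text(groups, doc_dict)
```

In this toy run, four pages collapse into three retrieval units, which illustrates how grouping shrinks the number of units the retriever has to search.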

We use the open-source dense retrieval toolkit Tevatron for all our retrieval experiments. The base embedding model is bge-large-en-v1.5. We have provided a sample script; make sure to update the parameters with your own local dataset path. Additionally, our script uses 4 GPUs to encode the corpus to save time; please adjust this to your own use case.

sh scripts/run_retrieve_tevatron.sh

We select Gemini-1.5-Pro and GPT-4o as our long reader given their strong ability to handle long context input. (We also plan to test other LLMs capable of handling long contexts in the future.)

The input of the reader is a concatenation of all the long retrieval units from the long retriever. We have provided the input file in our Hugging Face repo.
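As a rough illustration of how such a reader input could be assembled, the sketch below picks the highest-scoring retrieval units and concatenates their text ahead of the question. The helper names, the score dictionary, and the prompt wording are hypothetical; the repo's actual prompt template may differ.

```python
def top_k_units(scores, k):
    """Return the IDs of the k highest-scoring retrieval units."""
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [unit_id for unit_id, _ in ranked[:k]]


def build_reader_input(question, unit_ids, group_text):
    """Concatenate the retrieved long units and append the question."""
    context = "\n\n".join(group_text[unit_id] for unit_id in unit_ids)
    # The prompt wording below is an assumption, not the repo's template.
    return f"{context}\n\nQuestion: {question}\nAnswer:"


# Toy corpus and similarity scores (hypothetical values).
toy_group_text = {"0": "Unit zero.", "1": "Unit one.", "2": "Unit two."}
toy_scores = {"0": 0.2, "1": 0.9, "2": 0.5}

selected = top_k_units(toy_scores, k=2)
reader_input = build_reader_input(
    "how many episodes of touching evil are there", selected, toy_group_text
)
```

Because each unit is long, only a handful of units is needed to fill the reader's context, which is why a long-context model is used as the reader.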

mkdir -p exp/

sh scripts/run_eval_qa.sh

- test_data_name: Test set name, nq (NQ) or hotpot_qa (HotpotQA).

- test_data_split: For each test set, there are three splits: full, subset_1000, subset_100. We suggest starting with subset_100 for a quick start or debugging and using subset_1000 to obtain relatively stable results.

- output_file_path: The output file, here it's placed in the exp/ directory.

- reader_model: The long-context reader model. Our code currently supports GPT-4o, GPT-4-Turbo, Gemini-1.5-Pro, and Claude-3-Opus. Please note that you need to update the related API key and API configuration in the code. For example, if you are using the GPT-4 series, you need to configure the code in utils/gpt_inference.py; if you are using the Gemini series, you need to configure the code in utils/gemini_inference.py. We will continue to support more models in the future.
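The four parameters above can be summarized as a small argument parser. This is only a sketch of how the documented flags and their allowed values fit together; it is not the parser used by eval/eval_qa.py, and the defaults chosen here are assumptions.

```python
import argparse


def build_parser():
    """Argument parser mirroring the documented flags (a sketch only)."""
    parser = argparse.ArgumentParser(description="LongRAG reader evaluation (sketch)")
    parser.add_argument("--test_data_name", choices=["nq", "hotpot_qa"], required=True)
    parser.add_argument(
        "--test_data_split",
        choices=["full", "subset_1000", "subset_100"],
        default="subset_100",  # assumed default: the smallest split
    )
    parser.add_argument("--output_file_path", required=True)
    parser.add_argument(
        "--reader_model",
        choices=["GPT-4o", "GPT-4-Turbo", "Gemini-1.5-Pro", "Claude-3-Opus"],
        default="GPT-4o",  # assumed default
    )
    return parser


# Parse an explicit argument list so the sketch runs without a command line.
args = build_parser().parse_args([
    "--test_data_name", "nq",
    "--test_data_split", "subset_100",
    "--output_file_path", "./exp/nq_gpt4o_100.json",
    "--reader_model", "GPT-4o",
])
```

Restricting each flag to the documented values means a typo in a dataset or model name fails immediately rather than after a long evaluation run.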

The output file contains one test case per row. The short_ans field is our final prediction.

{

"query_id": "383",

"question": "how many episodes of touching evil are there",

"answers": ["16"],

"long_ans": "16 episodes.",

"short_ans": "16",

"is_exact_match": 1,

"is_substring_match": 1,

"is_retrieval": 1

}

We have provided some sample output files in our exp/ directory. For example, exp/nq_gpt4o_100.json contains the result of running:

python eval/eval_qa.py \

--test_data_name "nq" \

--test_data_split "subset_100" \

--output_file_path "./exp/nq_gpt4o_100.json" \

--reader_model "GPT-4o"

The top-1 retrieval accuracy is 88%, and the exact match rate is 64%.
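To show how per-row flags like these could be computed and aggregated, here is a minimal sketch. The normalization (lowercasing, removing punctuation and articles) is a common QA convention and is assumed here. Checking the substring match against `long_ans` and reading the mean of `is_retrieval` as retrieval accuracy are also assumptions, as are the function names; eval/eval_qa.py may do this differently.

```python
import re
import string


def normalize_answer(text):
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def score_row(row):
    """Compute match flags for one output row (assumed definitions)."""
    short_pred = normalize_answer(row["short_ans"])
    long_pred = normalize_answer(row["long_ans"])
    golds = [normalize_answer(answer) for answer in row["answers"]]
    return {
        "is_exact_match": int(short_pred in golds),
        "is_substring_match": int(any(gold in long_pred for gold in golds)),
    }


def summarize(rows):
    """Average the per-row flags into overall rates."""
    total = len(rows)
    return {
        "exact_match": sum(row["is_exact_match"] for row in rows) / total,
        "retrieval_accuracy": sum(row["is_retrieval"] for row in rows) / total,
    }


# The first row is the sample from above; the second is a made-up miss.
rows = [
    {"answers": ["16"], "long_ans": "16 episodes.", "short_ans": "16", "is_retrieval": 1},
    {"answers": ["Paris"], "long_ans": "It is Lyon.", "short_ans": "Lyon", "is_retrieval": 0},
]
for row in rows:
    row.update(score_row(row))

summary = summarize(rows)
```

Applied to a real output file, `summarize` would give the kind of rates quoted above, provided the flags in that file follow the same definitions.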

Please check out the license of each subset we use in our work.

| Dataset Name | License Type |

|---|---|

| NQ | Apache License 2.0 |

| HotpotQA | CC BY-SA 4.0 License |

Please cite our paper if you find our project useful:

@article{jiang2024longrag,

title={LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs},

author={Ziyan Jiang and Xueguang Ma and Wenhu Chen},

journal={arXiv preprint arXiv:2406.15319},

year={2024},

url={https://arxiv.org/abs/2406.15319}

}

Alternative AI tools for LongRAG

Similar Open Source Tools

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

LLM-Merging

LLM-Merging is a repository containing starter code for the LLM-Merging competition. It provides a platform for efficiently building LLMs through merging methods. Users can develop new merging methods by creating new files in the specified directory and extending existing classes. The repository includes instructions for setting up the environment, developing new merging methods, testing the methods on specific datasets, and submitting solutions for evaluation. It aims to facilitate the development and evaluation of merging methods for LLMs.

OlympicArena

OlympicArena is a comprehensive benchmark designed to evaluate advanced AI capabilities across various disciplines. It aims to push AI towards superintelligence by tackling complex challenges in science and beyond. The repository provides detailed data for different disciplines, allows users to run inference and evaluation locally, and offers a submission platform for testing models on the test set. Additionally, it includes an annotation interface and encourages users to cite their paper if they find the code or dataset helpful.

eval-dev-quality

DevQualityEval is an evaluation benchmark and framework designed to compare and improve the quality of code generation of Large Language Models (LLMs). It provides developers with a standardized benchmark to enhance real-world usage in software development and offers users metrics and comparisons to assess the usefulness of LLMs for their tasks. The tool evaluates LLMs' performance in solving software development tasks and measures the quality of their results through a point-based system. Users can run specific tasks, such as test generation, across different programming languages to evaluate LLMs' language understanding and code generation capabilities.

aisuite

Aisuite is a simple, unified interface to multiple Generative AI providers. It allows developers to easily interact with various large language model (LLM) providers like OpenAI, Anthropic, Azure, Google, AWS, and more through a standardized interface. The library focuses on chat completions and provides a thin wrapper around Python client libraries, enabling creators to test responses from different LLM providers without changing their code. Aisuite maximizes stability by using HTTP endpoints or SDKs for making calls to the providers. Users can install the base package or specific provider packages, set up API keys, and utilize the library to generate chat completion responses from different models.

strwythura

Strwythura is a library and tutorial focused on constructing a knowledge graph from unstructured data sources using state-of-the-art models for named entity recognition. It implements an enhanced GraphRAG approach and curates semantics for optimizing AI application outcomes within a specific domain. The tutorial emphasizes the use of sophisticated NLP pipelines based on spaCy, GLiNER, TextRank, and related libraries to provide better/faster/cheaper results with more control over the intentional arrangement of the knowledge graph. It leverages neurosymbolic AI methods and combines practices from natural language processing, graph data science, entity resolution, ontology pipeline, context engineering, and human-in-the-loop processes.

BTGenBot

BTGenBot is a tool that generates behavior trees for robots using lightweight large language models (LLMs) with a maximum of 7 billion parameters. It fine-tunes on a specific dataset, compares multiple LLMs, and evaluates generated behavior trees using various methods. The tool demonstrates the potential of LLMs with a limited number of parameters in creating effective and efficient robot behaviors.

curategpt

CurateGPT is a prototype web application and framework designed for general purpose AI-guided curation and curation-related operations over collections of objects. It provides functionalities for loading example data, building indexes, interacting with knowledge bases, and performing tasks such as chatting with a knowledge base, querying Pubmed, interacting with a GitHub issue tracker, term autocompletion, and all-by-all comparisons. The tool is built to work best with the OpenAI gpt-4 model and OpenAI ada-text-embedding-002 for embedding, but also supports alternative models through a plugin architecture.

curate-gpt

CurateGPT is a prototype web application and framework for performing general purpose AI-guided curation and curation-related operations over collections of objects. It allows users to load JSON, YAML, or CSV data, build vector database indexes for ontologies, and interact with various data sources like GitHub, Google Drives, Google Sheets, and more. The tool supports ontology curation, knowledge base querying, term autocompletion, and all-by-all comparisons for objects in a collection.

knowledge-graph-of-thoughts

Knowledge Graph of Thoughts (KGoT) is an innovative AI assistant architecture that integrates LLM reasoning with dynamically constructed knowledge graphs (KGs). KGoT extracts and structures task-relevant knowledge into a dynamic KG representation, iteratively enhanced through external tools such as math solvers, web crawlers, and Python scripts. Such structured representation of task-relevant knowledge enables low-cost models to solve complex tasks effectively. The KGoT system consists of three main components: the Controller, the Graph Store, and the Integrated Tools, each playing a critical role in the task-solving process.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

ReasonablePlanningAI

Reasonable Planning AI is a robust design and data-driven AI solution for game developers. It provides an AI Editor that allows creating AI without Blueprints or C++. The AI can think for itself, plan actions, adapt to the game environment, and act dynamically. It consists of Core components like RpaiGoalBase, RpaiActionBase, RpaiPlannerBase, RpaiReasonerBase, and RpaiBrainComponent, as well as Composer components for easier integration by Game Designers. The tool is extensible, cross-compatible with Behavior Trees, and offers debugging features like visual logging and heuristics testing. It follows a simple path of execution and supports versioning for stability and compatibility with Unreal Engine versions.

MultiPL-E

MultiPL-E is a system for translating unit test-driven neural code generation benchmarks to new languages. It is part of the BigCode Code Generation LM Harness and allows for evaluating Code LLMs using various benchmarks. The tool supports multiple versions with improvements and new language additions, providing a scalable and polyglot approach to benchmarking neural code generation. Users can access a tutorial for direct usage and explore the dataset of translated prompts on the Hugging Face Hub.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

For similar tasks

RAGFoundry

RAG Foundry is a library designed to enhance Large Language Models (LLMs) by fine-tuning models on RAG-augmented datasets. It helps create training data, train models using parameter-efficient finetuning (PEFT), and measure performance using RAG-specific metrics. The library is modular, customizable using configuration files, and facilitates prototyping with various RAG settings and configurations for tasks like data processing, retrieval, training, inference, and evaluation.

RAG-FiT

RAG-FiT is a library designed to improve Language Models' ability to use external information by fine-tuning models on specially created RAG-augmented datasets. The library assists in creating training data, training models using parameter-efficient finetuning (PEFT), and evaluating performance using RAG-specific metrics. It is modular, customizable via configuration files, and facilitates fast prototyping and experimentation with various RAG settings and configurations.

LotteryAi

LotteryAi is a lottery prediction artificial intelligence that uses machine learning to predict the winning numbers of any lottery game. It requires Python 3.x and specific libraries like numpy, tensorflow, keras, and art for installation. Users need a data file with past lottery results in a comma-separated format to train the model and generate predictions. The tool comes with no guarantee of accuracy in predicting lottery numbers and is meant for educational and research purposes only.

llm_processes

This repository contains code for LLM Processes, which focuses on generating numerical predictive distributions conditioned on natural language. It supports various LLMs through Hugging Face transformer APIs and includes experiments on prompt engineering, 1D synthetic data, comparison to LLMTime, Fashion MNIST, black-box optimization, weather regression, in-context learning, and text conditioning. The code requires Python 3.9+, PyTorch 2.3.0+, and other dependencies for running experiments and reproducing results.

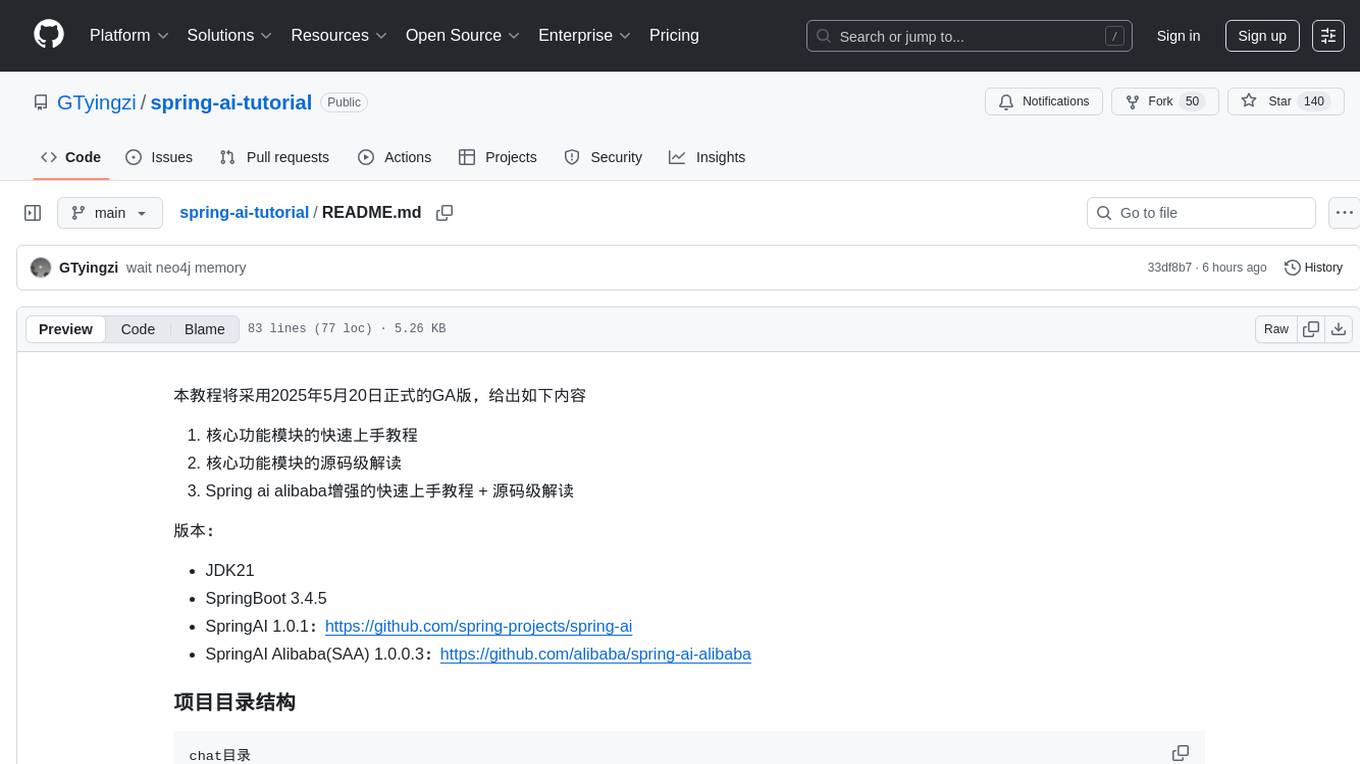

spring-ai-tutorial

Spring AI Tutorial is a comprehensive guide for beginners to learn about integrating artificial intelligence capabilities into Spring Boot applications. The tutorial covers various AI concepts such as machine learning, natural language processing, and computer vision, and demonstrates how to implement them using popular AI libraries and tools within the Spring framework. By following this tutorial, users will gain a solid understanding of how to leverage AI technologies to enhance the functionality and intelligence of their Spring applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.