LiveBench

LiveBench: A Challenging, Contamination-Free LLM Benchmark

Stars: 1035

LiveBench is a benchmark for large language models (LLMs) that limits contamination by releasing new questions monthly, drawing on recent datasets, arXiv papers, news articles, and IMDb movie synopses. Every question has a verifiable, objective ground-truth answer, so responses are scored automatically without an LLM judge. It offers 18 diverse tasks across 6 categories, with harder tasks to be released over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

README:

🏆 Leaderboard • 💻 Data • 📝 Paper

LiveBench appeared as a Spotlight Paper in ICLR 2025.

Top models as of 30th September 2024 (for a full up-to-date leaderboard, see here):

Please see the changelog for details about each LiveBench release.

- Introduction

- Installation Quickstart

- Usage

- Data

- Adding New Questions

- Evaluating New Models and Configuring API Parameters

- Documentation

- Citation

Introducing LiveBench: a benchmark for LLMs designed with test set contamination and objective evaluation in mind.

LiveBench has the following properties:

- LiveBench is designed to limit potential contamination by releasing new questions monthly, as well as having questions based on recently-released datasets, arXiv papers, news articles, and IMDb movie synopses.

- Each question has verifiable, objective ground-truth answers, allowing hard questions to be scored accurately and automatically, without the use of an LLM judge.

- LiveBench currently contains a set of 18 diverse tasks across 6 categories, and we will release new, harder tasks over time.
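To make the idea of judge-free scoring concrete, here is a minimal sketch of comparing a response against a ground-truth string. It is modeled on the web_of_lies_v2 example record shown later in this README, whose prompt asks for the final answer in **bold**. The function names and the normalization rules are assumptions for illustration; this is not LiveBench's actual scoring code.

```python
import re
from typing import Optional


def extract_bold_answer(response: str) -> Optional[str]:
    """Return the text of the last **bold** span in a response, if any."""
    matches = re.findall(r"\*\*(.+?)\*\*", response)
    return matches[-1] if matches else None


def normalize(answer: str) -> list:
    """Lowercase a comma-separated answer and strip whitespace around items."""
    return [item.strip() for item in answer.lower().split(",")]


def score(response: str, ground_truth: str) -> int:
    """Return 1 if the final bold answer matches the ground truth, else 0."""
    answer = extract_bold_answer(response)
    if answer is None:
        return 0  # no bold answer found counts as incorrect
    return int(normalize(answer) == normalize(ground_truth))


print(score("Step by step... so the answer is **no, yes, yes**", "no, yes, yes"))
```

Because the comparison is a plain string match after normalization, the same response always receives the same score.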

We will evaluate your model! Open an issue or email us at [email protected]!

We recommend using a virtual environment to install LiveBench.

python -m venv .venv

source .venv/bin/activate

To generate answers with API models (i.e. with gen_api_answer.py), conduct judgments, and show results:

cd LiveBench

pip install -e .

To score results on the coding tasks (code_completion and code_generation), you will also need to install the required dependencies:

cd livebench/code_runner

pip install -r requirements_eval.txt

Note that, to evaluate the agentic coding questions, you will need docker installed and available (i.e. the command docker --version should work).

This will be checked prior to such tasks being run.
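If you want to run the same kind of check yourself before starting a long evaluation, the sketch below tests whether a command such as docker --version runs successfully. The helper name command_works is hypothetical, and this is not the check LiveBench itself performs.

```python
import subprocess


def command_works(argv):
    """Return True if the command runs and exits with status 0."""
    try:
        result = subprocess.run(argv, capture_output=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        # OSError covers a missing or non-executable binary.
        return False
    return result.returncode == 0


print("docker available:", command_works(["docker", "--version"]))
```

The same helper can be pointed at tmux (for example command_works(["tmux", "-V"])) before using --mode parallel.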

Note about local models: Local model inference is unmaintained. We highly recommend serving your model on an OpenAI compatible API using vllm and performing inference using run_livebench.py.

Our repo contains code from LiveCodeBench and IFEval.

cd livebench

The simplest way to run LiveBench inference and scoring is using the run_livebench.py script, which handles the entire evaluation pipeline including generating answers, scoring them, and showing results.

Basic usage:

python run_livebench.py --model gpt-4o --bench-name live_bench/coding --livebench-release-option 2024-11-25

Some common options:

- --bench-name: Specify which subset(s) of questions to use (e.g. live_bench for all questions, live_bench/coding for coding tasks only)

- --model: The model to evaluate

- --max-tokens: Maximum number of tokens in model responses (defaults to 4096 unless overridden for specific models)

- --api-base: Custom API endpoint for OpenAI-compatible servers

- --api-key-name: Environment variable name containing the API key (defaults to OPENAI_API_KEY for OpenAI models)

- --api-key: Raw API key value

- --parallel-requests: Number of concurrent API requests (for models with high rate limits)

- --resume: Continue from a previous interrupted run

- --retry-failures: Retry questions that failed in previous runs

- --livebench-release-option: Evaluate questions from a specific LiveBench release

Run python run_livebench.py --help to see all available options.
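When launching runs from a script, it can help to assemble the argument list in one place. The sketch below builds a run_livebench.py command using only the flags listed above; the helper build_run_command and its parameter names are hypothetical, and passing several values after a single --bench-name is an assumption based on the "subset(s)" wording.

```python
import shlex


def build_run_command(model, bench_names, release=None,
                      parallel_requests=None, resume=False,
                      retry_failures=False):
    """Return the argv list for one run_livebench.py invocation."""
    cmd = ["python", "run_livebench.py", "--model", model,
           "--bench-name", *bench_names]
    if release is not None:
        cmd += ["--livebench-release-option", release]
    if parallel_requests is not None:
        cmd += ["--parallel-requests", str(parallel_requests)]
    if resume:
        cmd.append("--resume")
    if retry_failures:
        cmd.append("--retry-failures")
    return cmd


cmd = build_run_command("gpt-4o", ["live_bench/coding"], release="2024-11-25")
print(shlex.join(cmd))
```

The resulting list can be passed to subprocess.run from inside the livebench directory.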

When this is finished, follow along with Viewing Results to view results.

Note: The current LiveBench release is 2025-04-25; however, not all questions for this release are public on Huggingface. In order to evaluate all categories, you will need to pass --livebench-release-option 2024-11-25 to all scripts to use the most recent public questions.

Note: Evaluation of the agentic coding tasks requires building task-specific Docker images. Storing all of these images may take up to 150GB. Images are needed both for inference and evaluation. In the future we will work on optimizing the evaluation process for this task to minimize storage requirements.

LiveBench provides two different arguments for parallelizing evaluations, which can be used independently or together:

- --mode parallel: Runs separate tasks/categories in parallel by creating multiple tmux sessions. Each category or task runs in its own terminal session, allowing simultaneous evaluation across different benchmark subsets. This also parallelizes the ground truth evaluation phase. By default, this will create one session for each category; if --bench-name is supplied, there will be one session for each value of --bench-name.

- --parallel-requests: Sets the number of concurrent questions to be answered within a single task evaluation instance. This controls how many API requests are made simultaneously for a specific task.

When to use which option:

- For high rate limits (e.g., commercial APIs with high throughput):

  - Use both options together for maximum throughput when evaluating the full benchmark.

  - For example:

python run_livebench.py --model gpt-4o --bench-name live_bench --mode parallel --parallel-requests 10

- For lower rate limits:

  - When running the entire LiveBench suite, --mode parallel is recommended to parallelize across categories, even if --parallel-requests must be kept low.

  - For small subsets of tasks, --parallel-requests may be more efficient as the overhead of creating multiple tmux sessions provides less benefit.

  - Example for lower rate limits on full benchmark:

python run_livebench.py --model claude-3-5-sonnet --bench-name live_bench --mode parallel --parallel-requests 2

- For single task evaluation:

  - When running just one or two tasks, use only --parallel-requests:

python run_livebench.py --model gpt-4o --bench-name live_bench/coding --parallel-requests 10

Note that --mode parallel requires tmux to be installed on your system. The number of tmux sessions created will depend on the number of categories or tasks being evaluated.
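As a rough mental model of what --parallel-requests controls, the sketch below answers a list of questions with a bounded number of concurrent calls. It is a conceptual illustration using Python's standard thread pool, not LiveBench's implementation; answer_questions and ask_model are hypothetical names, and ask_model stands in for a real API call.

```python
from concurrent.futures import ThreadPoolExecutor


def answer_questions(questions, ask_model, parallel_requests=1):
    """Call ask_model on every question, at most parallel_requests at a time.

    Results are returned in the same order as the input questions.
    """
    with ThreadPoolExecutor(max_workers=parallel_requests) as pool:
        return list(pool.map(ask_model, questions))


# Stand-in for an API call: upper-case the prompt.
answers = answer_questions(["a", "b", "c"], str.upper, parallel_requests=2)
print(answers)
```

A higher limit finishes sooner only while the provider's rate limit allows it, which is why the guidance above keeps the value low for rate-limited APIs.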

You can view the results of your evaluations using the show_livebench_result.py script:

python show_livebench_result.py --bench-name <bench-name> --model-list <model-list> --question-source <question-source> --livebench-release-option 2024-11-25

<model-list> is a space-separated list of model IDs to show. For example, to show the results of gpt-4o and claude-3-5-sonnet on coding tasks, run:

python show_livebench_result.py --bench-name live_bench/coding --model-list gpt-4o claude-3-5-sonnet

Multiple --bench-name values can be provided to see scores on specific subsets of benchmarks:

python show_livebench_result.py --bench-name live_bench/coding live_bench/math --model-list gpt-4o

If no --model-list argument is provided, all models will be shown. The --question-source argument defaults to huggingface but should match what was used during evaluation, as should --livebench-release-option.

The leaderboard will be displayed in the terminal. You can also find the breakdown by category in all_groups.csv and by task in all_tasks.csv.
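The CSV files can be post-processed with the standard library. The sketch below parses a score table into one dictionary per row. It assumes the file has a header row with a model column followed by per-category score columns; the column names and values in the sample are hypothetical placeholders, so check the header of your own all_groups.csv first.

```python
import csv
import io


def read_score_table(file_obj):
    """Parse a CSV with a header row into a list of dicts, one per row."""
    return list(csv.DictReader(file_obj))


# Hypothetical stand-in for all_groups.csv; real columns may differ.
sample = io.StringIO("model,coding,math\nmodel-a,51.2,48.0\nmodel-b,47.5,52.3\n")
rows = read_score_table(sample)

# Pick the row with the highest value in one (assumed) category column.
best = max(rows, key=lambda row: float(row["coding"]))
print(best["model"])
```

For a real file, pass an open file handle instead of the StringIO object, for example open("all_groups.csv", newline="").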

The scripts/error_check.py script will print out questions for which a model's output is $ERROR$, which indicates repeated API call failures.

You can use the scripts/rerun_failed_questions.py script to rerun the failed questions, or run run_livebench.py as normal with the --resume and --retry-failures arguments.

By default, LiveBench will retry API calls three times and will include a delay in between attempts to account for rate limits. If the errors seen during evaluation are due to rate limits, you may need to switch to --mode single or --mode sequential and decrease the value of --parallel-requests. If after multiple attempts, the model's output is still $ERROR$, it's likely that the question is triggering some content filter from the model's provider (Gemini models are particularly prone to this, with an error of RECITATION). In this case, there is not much that can be done. We consider such failures to be incorrect responses.
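The retry behavior described above can be pictured with the following sketch: a call is attempted a fixed number of times with a pause between attempts, and a sentinel is returned if every attempt fails. This is an illustration, not LiveBench's code; the function name, the delay value, and the reading of "three times" as three attempts in total are assumptions.

```python
import time

ERROR_SENTINEL = "$ERROR$"  # marker for repeated failures, as described above


def call_with_retries(call, max_attempts=3, delay_seconds=1.0):
    """Run call() up to max_attempts times, sleeping between failed attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except Exception:
            if attempt < max_attempts:
                time.sleep(delay_seconds)  # back off before the next attempt
    return ERROR_SENTINEL


def always_fails():
    raise RuntimeError("simulated rate limit")


print(call_with_retries(always_fails, delay_seconds=0))
```

A content-filter rejection fails the same way on every attempt, which is why retrying does not help in that case.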

The questions for each of the categories can be found below:

Also available are the model answers and the model judgments.

To download the question.jsonl files (for inspection) and answer/judgment files from the leaderboard, use

python download_questions.py

python download_leaderboard.py

Questions will be downloaded to livebench/data/<category>/question.jsonl.
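For a quick inspection of a downloaded question.jsonl file, a sketch along these lines reads one JSON record per line and counts questions per task. The key names are taken from the web_of_lies_v2 example record in the Adding New Questions steps of this README; other tasks may carry different or additional fields, so treat the required-key check as an assumption. The function names are hypothetical.

```python
import json
from collections import Counter

# Keys seen in the example record in this README; other tasks may differ.
EXPECTED_KEYS = {"question_id", "category", "ground_truth", "turns", "task"}


def read_questions(lines, expected_keys=EXPECTED_KEYS):
    """Parse JSONL lines into dicts, raising if an expected key is missing."""
    questions = []
    for number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        record = json.loads(line)
        missing = set(expected_keys) - record.keys()
        if missing:
            raise ValueError(f"line {number}: missing keys {sorted(missing)}")
        questions.append(record)
    return questions


def count_by_task(questions):
    """Return a Counter mapping task name to number of questions."""
    return Counter(question["task"] for question in questions)


sample_lines = [
    '{"question_id": "abc", "category": "reasoning", "ground_truth": "yes", '
    '"turns": ["Is this a sample?"], "task": "web_of_lies_v2"}',
]
print(count_by_task(read_questions(sample_lines)))
```

An open file handle can be passed in place of sample_lines, since iterating over a text file yields its lines.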

If you want to create your own set of questions, or try out different prompts, etc, follow these steps:

- Create a question.jsonl file with the following path (or, run python download_questions.py and update the downloaded file): livebench/data/live_bench/<category>/<task>/question.jsonl. For example, livebench/data/reasoning/web_of_lies_new_prompt/question.jsonl. Here is an example of the format for question.jsonl (it's the first few questions from web_of_lies_v2):

{"question_id": "0daa7ca38beec4441b9d5c04d0b98912322926f0a3ac28a5097889d4ed83506f", "category": "reasoning", "ground_truth": "no, yes, yes", "turns": ["In this question, assume each person either always tells the truth or always lies. Tala is at the movie theater. The person at the restaurant says the person at the aquarium lies. Ayaan is at the aquarium. Ryan is at the botanical garden. The person at the park says the person at the art gallery lies. The person at the museum tells the truth. Zara is at the museum. Jake is at the art gallery. The person at the art gallery says the person at the theater lies. Beatriz is at the park. The person at the movie theater says the person at the train station lies. Nadia is at the campground. The person at the campground says the person at the art gallery tells the truth. The person at the theater lies. The person at the amusement park says the person at the aquarium tells the truth. Grace is at the restaurant. The person at the aquarium thinks their friend is lying. Nia is at the theater. Kehinde is at the train station. The person at the theater thinks their friend is lying. The person at the botanical garden says the person at the train station tells the truth. The person at the aquarium says the person at the campground tells the truth. The person at the aquarium saw a firetruck. The person at the train station says the person at the amusement park lies. Mateo is at the amusement park. Does the person at the train station tell the truth? Does the person at the amusement park tell the truth? Does the person at the aquarium tell the truth? Think step by step, and then put your answer in **bold** as a list of three words, yes or no (for example, **yes, no, yes**). If you don't know, guess."], "task": "web_of_lies_v2"}

- If adding a new task, create a new scoring method in the process_results folder. If it is similar to an existing task, you can copy that task's scoring function. For example, livebench/process_results/reasoning/web_of_lies_new_prompt/utils.py can be a copy of the web_of_lies_v2 scoring method.

- Add the scoring function to gen_ground_truth_judgment.py here.

- Run and score models using --question-source jsonl and specifying your task. For example:

python gen_api_answer.py --bench-name live_bench/reasoning/web_of_lies_new_prompt --model claude-3-5-sonnet --question-source jsonl

python gen_ground_truth_judgment.py --bench-name live_bench/reasoning/web_of_lies_new_prompt --question-source jsonl

python show_livebench_result.py --bench-name live_bench/reasoning/web_of_lies_new_prompt

Any API-based model for which there is an OpenAI compatible endpoint should work out of the box using the --api-base and --api-key (or --api-key-name) arguments. If you'd like to override the name of the model for local files (e.g. saving it as deepseek-v3 instead of deepseek-chat), use the --model-display-name argument. You can also override values for temperature and max tokens using the --force-temperature and --max-tokens arguments, respectively.

If you'd like to have persistent model configuration without needing to specify command-line arguments, you can create a model configuration document in a yaml file in livebench/model/model_configs. See the other files there for examples of the necessary format. Important values are model_display_name, which determines the answer .jsonl file name and model ID used for other scripts, and api_name, which provides a mapping between API providers and names for the model in that API. For instance, Deepseek R1 can be evaluated using the Deepseek API with a name of deepseek-reasoner and the Together API with a name of deepseek-ai/deepseek-r1. api_kwargs allows you to set overrides for parameters such as temperature, max tokens, and top p, for all providers or for specific ones. Once this is set, you can use --model <model_name> with the model_display_name value you put in the yaml document when running run_livebench.py.

When performing inference, use the --model-provider-override argument to override the provider you'd like to use for the model.
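The relationship between model_display_name, api_name, and a provider override can be sketched as a simple lookup. In the example below a Python dict stands in for the yaml document; the provider keys ("deepseek", "together"), the display name "deepseek-r1", and the nesting are assumptions for illustration, so consult the existing files in livebench/model/model_configs for the real format. The function resolve_api_name is hypothetical.

```python
def resolve_api_name(config, provider):
    """Return the provider-specific model name from a config mapping."""
    api_names = config["api_name"]
    if provider not in api_names:
        known = ", ".join(sorted(api_names))
        raise KeyError(f"no api_name for provider {provider!r}; known: {known}")
    return api_names[provider]


# Hypothetical stand-in for a yaml model configuration document.
example_config = {
    "model_display_name": "deepseek-r1",  # assumed display name
    "api_name": {
        "deepseek": "deepseek-reasoner",        # name on the Deepseek API
        "together": "deepseek-ai/deepseek-r1",  # name on the Together API
    },
}

print(resolve_api_name(example_config, "together"))
```

In this picture, --model selects the configuration by its display name and --model-provider-override selects which entry of api_name is sent to the API.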

We have also implemented inference for Anthropic, Cohere, Mistral, Together, and Google models, so those should also all work immediately either by using --model-provider-override or adding a new entry to the appropriate configuration file.

If you'd like to use a model with a new provider that is not OpenAI-compatible, you will need to implement a new completions function in completions.py and add it to get_api_function in that file; then, you can use it in your model configuration.

Here, we describe our dataset documentation. This information is also available in our paper.

@inproceedings{livebench,

title={LiveBench: A Challenging, Contamination-Free {LLM} Benchmark},

author={Colin White and Samuel Dooley and Manley Roberts and Arka Pal and Benjamin Feuer and Siddhartha Jain and Ravid Shwartz-Ziv and Neel Jain and Khalid Saifullah and Sreemanti Dey and Shubh-Agrawal and Sandeep Singh Sandha and Siddartha Venkat Naidu and Chinmay Hegde and Yann LeCun and Tom Goldstein and Willie Neiswanger and Micah Goldblum},

booktitle={The Thirteenth International Conference on Learning Representations},

year={2025},

}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LiveBench

Similar Open Source Tools

LiveBench

LiveBench is a benchmark tool designed for Language Model Models (LLMs) with a focus on limiting contamination through monthly new questions based on recent datasets, arXiv papers, news articles, and IMDb movie synopses. It provides verifiable, objective ground-truth answers for accurate scoring without an LLM judge. The tool offers 18 diverse tasks across 6 categories and promises to release more challenging tasks over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

eval-dev-quality

DevQualityEval is an evaluation benchmark and framework designed to compare and improve the quality of code generation of Language Model Models (LLMs). It provides developers with a standardized benchmark to enhance real-world usage in software development and offers users metrics and comparisons to assess the usefulness of LLMs for their tasks. The tool evaluates LLMs' performance in solving software development tasks and measures the quality of their results through a point-based system. Users can run specific tasks, such as test generation, across different programming languages to evaluate LLMs' language understanding and code generation capabilities.

MultiPL-E

MultiPL-E is a system for translating unit test-driven neural code generation benchmarks to new languages. It is part of the BigCode Code Generation LM Harness and allows for evaluating Code LLMs using various benchmarks. The tool supports multiple versions with improvements and new language additions, providing a scalable and polyglot approach to benchmarking neural code generation. Users can access a tutorial for direct usage and explore the dataset of translated prompts on the Hugging Face Hub.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

ai-rag-chat-evaluator

This repository contains scripts and tools for evaluating a chat app that uses the RAG architecture. It provides parameters to assess the quality and style of answers generated by the chat app, including system prompt, search parameters, and GPT model parameters. The tools facilitate running evaluations, with examples of evaluations on a sample chat app. The repo also offers guidance on cost estimation, setting up the project, deploying a GPT-4 model, generating ground truth data, running evaluations, and measuring the app's ability to say 'I don't know'. Users can customize evaluations, view results, and compare runs using provided tools.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

OlympicArena

OlympicArena is a comprehensive benchmark designed to evaluate advanced AI capabilities across various disciplines. It aims to push AI towards superintelligence by tackling complex challenges in science and beyond. The repository provides detailed data for different disciplines, allows users to run inference and evaluation locally, and offers a submission platform for testing models on the test set. Additionally, it includes an annotation interface and encourages users to cite their paper if they find the code or dataset helpful.

LayerSkip

LayerSkip is an implementation enabling early exit inference and self-speculative decoding. It provides a code base for running models trained using the LayerSkip recipe, offering speedup through self-speculative decoding. The tool integrates with Hugging Face transformers and provides checkpoints for various LLMs. Users can generate tokens, benchmark on datasets, evaluate tasks, and sweep over hyperparameters to optimize inference speed. The tool also includes correctness verification scripts and Docker setup instructions. Additionally, other implementations like gpt-fast and Native HuggingFace are available. Training implementation is a work-in-progress, and contributions are welcome under the CC BY-NC license.

curategpt

CurateGPT is a prototype web application and framework designed for general purpose AI-guided curation and curation-related operations over collections of objects. It provides functionalities for loading example data, building indexes, interacting with knowledge bases, and performing tasks such as chatting with a knowledge base, querying Pubmed, interacting with a GitHub issue tracker, term autocompletion, and all-by-all comparisons. The tool is built to work best with the OpenAI gpt-4 model and OpenAI ada-text-embedding-002 for embedding, but also supports alternative models through a plugin architecture.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

HackBot

HackBot is an AI-powered cybersecurity chatbot designed to provide accurate answers to cybersecurity-related queries, conduct code analysis, and scan analysis. It utilizes the Meta-LLama2 AI model through the 'LlamaCpp' library to respond coherently. The chatbot offers features like local AI/Runpod deployment support, cybersecurity chat assistance, interactive interface, clear output presentation, static code analysis, and vulnerability analysis. Users can interact with HackBot through a command-line interface and utilize it for various cybersecurity tasks.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

LongRAG

This repository contains the code for LongRAG, a framework that enhances retrieval-augmented generation with long-context LLMs. LongRAG introduces a 'long retriever' and a 'long reader' to improve performance by using a 4K-token retrieval unit, offering insights into combining RAG with long-context LLMs. The repo provides instructions for installation, quick start, corpus preparation, long retriever, and long reader.

ReasonablePlanningAI

Reasonable Planning AI is a robust design and data-driven AI solution for game developers. It provides an AI Editor that allows creating AI without Blueprints or C++. The AI can think for itself, plan actions, adapt to the game environment, and act dynamically. It consists of Core components like RpaiGoalBase, RpaiActionBase, RpaiPlannerBase, RpaiReasonerBase, and RpaiBrainComponent, as well as Composer components for easier integration by Game Designers. The tool is extensible, cross-compatible with Behavior Trees, and offers debugging features like visual logging and heuristics testing. It follows a simple path of execution and supports versioning for stability and compatibility with Unreal Engine versions.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

Tiny-Predictive-Text

Tiny-Predictive-Text is a demonstration of predictive text without an LLM, using permy.link. It provides a detailed description of the tool, including its features, benefits, and how to use it. The tool is suitable for a variety of jobs, including content writers, editors, and researchers. It can be used to perform a variety of tasks, such as generating text, completing sentences, and correcting errors.

For similar tasks

Efficient-Multimodal-LLMs-Survey

Efficient Multimodal Large Language Models: A Survey provides a comprehensive review of efficient and lightweight Multimodal Large Language Models (MLLMs), focusing on model size reduction and cost efficiency for edge computing scenarios. The survey covers the timeline of efficient MLLMs, research on efficient structures and strategies, and applications. It discusses current limitations and future directions in efficient MLLM research.

uvadlc_notebooks

The UvA Deep Learning Tutorials repository contains a series of Jupyter notebooks designed to help understand theoretical concepts from lectures by providing corresponding implementations. The notebooks cover topics such as optimization techniques, transformers, graph neural networks, and more. They aim to teach details of the PyTorch framework, including PyTorch Lightning, with alternative translations to JAX+Flax. The tutorials are integrated as official tutorials of PyTorch Lightning and are relevant for graded assignments and exams.

LiveBench

LiveBench is a benchmark tool designed for Language Model Models (LLMs) with a focus on limiting contamination through monthly new questions based on recent datasets, arXiv papers, news articles, and IMDb movie synopses. It provides verifiable, objective ground-truth answers for accurate scoring without an LLM judge. The tool offers 18 diverse tasks across 6 categories and promises to release more challenging tasks over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

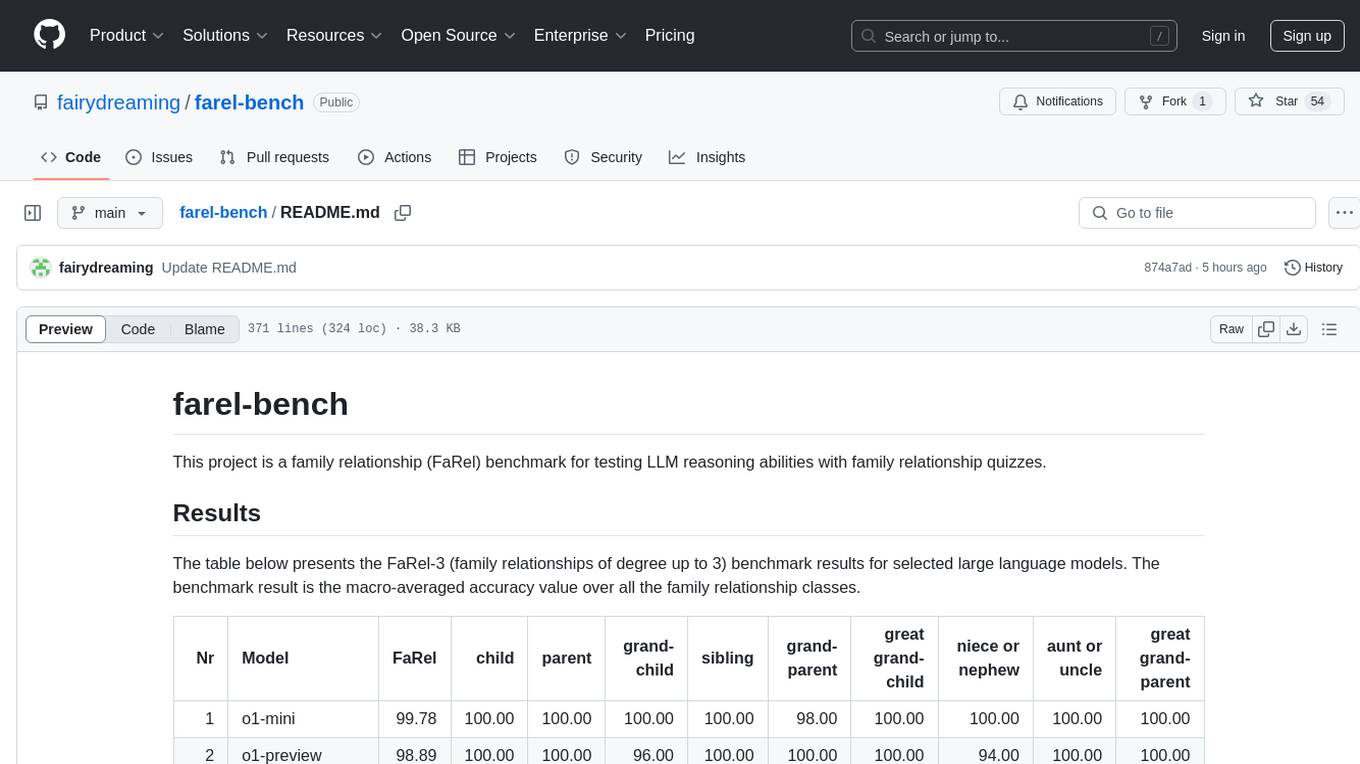

farel-bench

The 'farel-bench' project is a benchmark tool for testing LLM reasoning abilities with family relationship quizzes. It generates quizzes based on family relationships of varying degrees and measures the accuracy of large language models in solving these quizzes. The project provides scripts for generating quizzes, running models locally or via APIs, and calculating benchmark metrics. The quizzes are designed to test logical reasoning skills using family relationship concepts, with the goal of evaluating the performance of language models in this specific domain.

LLMcalc

LLM Calculator is a script that estimates the memory requirements and performance of Hugging Face models based on quantization levels. It fetches model parameters, calculates required memory, and analyzes performance with different RAM/VRAM configurations. The tool supports Windows and Linux, AMD, Intel, and Nvidia GPUs. Users can input a Hugging Face model ID to get its parameter count and analyze memory requirements for various quantization schemes. The tool provides estimates for GPU offload percentage and throughput in tk/s. It requires dependencies like python, uv, pciutils for AMD + Linux, and drivers for Nvidia. The tool is designed for rough estimates and may not work with MultiGPU setups.

ai-chat-protocol

The Microsoft AI Chat Protocol SDK is a library for easily building AI Chat interfaces from services that follow the AI Chat Protocol API Specification. By agreeing on a standard API contract, AI backend consumption and evaluation can be performed easily and consistently across different services. It allows developers to develop AI chat interfaces, consume and evaluate AI inference backends, and incorporate HTTP middleware for logging and authentication.

gen-ai-experiments

Gen-AI-Experiments is a structured collection of Jupyter notebooks and AI experiments designed to guide users through various AI tools, frameworks, and models. It offers valuable resources for both beginners and experienced practitioners, covering topics such as AI agents, model testing, RAG systems, real-world applications, and open-source tools. The repository includes folders with curated libraries, AI agents, experiments, LLM testing, open-source libraries, RAG experiments, and educhain experiments, each focusing on different aspects of AI development and application.

verifywise

VerifyWise is an open-source AI governance platform designed to help businesses harness the power of AI safely and responsibly. The platform ensures compliance and robust AI management without compromising on security. It offers additional products like MaskWise for data redaction, EvalWise for AI model evaluation, and FlagWise for security threat monitoring. VerifyWise simplifies AI governance for organizations, aiding in risk management, regulatory compliance, and promoting responsible AI practices. It features options for on-premises or private cloud hosting, open-source with AGPLv3 license, AI-generated answers for compliance audits, source code transparency, Docker deployment, user registration, role-based access control, and various AI governance tools like risk management, bias & fairness checks, evidence center, AI trust center, and more.

For similar jobs

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

AlignBench

AlignBench is the first comprehensive evaluation benchmark for assessing the alignment level of Chinese large models across multiple dimensions. It includes introduction information, data, and code related to AlignBench. The benchmark aims to evaluate the alignment performance of Chinese large language models through a multi-dimensional and rule-calibrated evaluation method, enhancing reliability and interpretability.

LiveBench

LiveBench is a benchmark tool designed for Language Model Models (LLMs) with a focus on limiting contamination through monthly new questions based on recent datasets, arXiv papers, news articles, and IMDb movie synopses. It provides verifiable, objective ground-truth answers for accurate scoring without an LLM judge. The tool offers 18 diverse tasks across 6 categories and promises to release more challenging tasks over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

home-assistant-datasets

This package provides a collection of datasets for evaluating AI Models in the context of Home Assistant. It includes synthetic data generation, loading data into Home Assistant, model evaluation with different conversation agents, human annotation of results, and visualization of improvements over time. The datasets cover home descriptions, area descriptions, device descriptions, and summaries that can be performed on a home. The tool aims to build datasets for future training purposes.

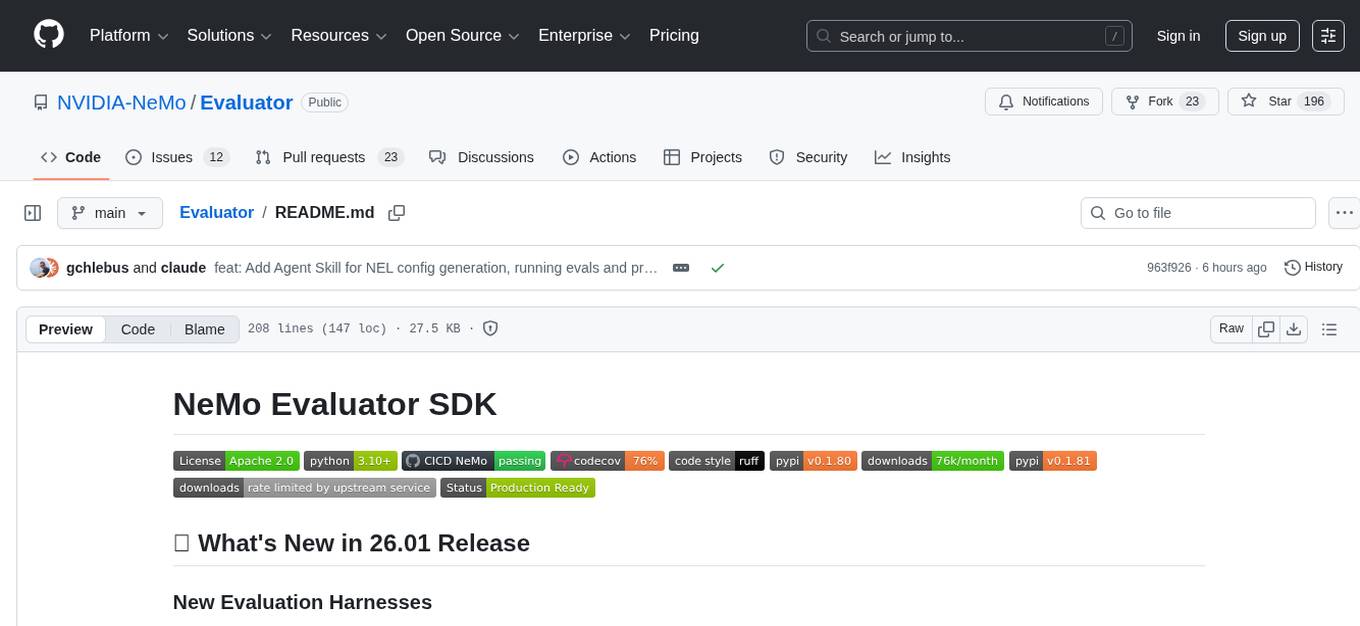

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.