llm-jp-eval


Stars: 137

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It converts existing Japanese evaluation data into text-generation evaluation datasets, runs evaluations of large language models across multiple datasets, and generates instruction data (jaster) in the same format as the evaluation prompts. Evaluation settings are managed through a config file, with configurable values and defaults defined by EvaluationConfig. Evaluation results and logs can be saved to wandb. New evaluation datasets can be added by following the steps in the documentation. Note that instruction tuning with jaster can inflate evaluation scores, so interpret results with caution.

README:


This tool automatically evaluates Japanese large language models across multiple datasets. It provides the following features:

- Converts existing Japanese evaluation data into text-generation evaluation datasets

- Runs evaluations of large language models across multiple datasets

- Generates instruction data (jaster) in the same format as the prompts, for some of the evaluation data

For details on the data format, the list of supported datasets, and jaster, see DATASET.md.

- Use uv (recommended) or pip

- With uv

# See the documentation for details: https://docs.astral.sh/uv/getting-started/installation/
# install uv
$ curl -LsSf https://astral.sh/uv/install.sh | sh
$ uv sync

- With pip

$ cd llm-jp-eval
$ pip install .

- Install Taskfile

- With pipx

$ pipx install go-task-bin

- With uv

$ uv tool install go-task-bin

- Copy the config file and .env from their templates

$ cp configs/config_template.yaml configs/config.yaml

$ cp env.sample .env

- Log in to the HuggingFace Hub if needed

# after setting HF_TOKEN in .env

$ task hf_login

Two simple ways to run an evaluation are shown below.

- Running inference and evaluation end to end with Taskfile

- Evaluating synchronously against a vLLM server

Commands are run through Taskfile.

With Taskfile's CLI_ARGS feature, you can also pass settings from config.yaml directly as command-line arguments by appending them after a double dash, e.g. -- --optional_args.

# setup eval & inference configs

$ cat << _EOF_ >> configs/config.yaml
exporters:
  local:
    export_output_table: true # whether to save the outputs as a table
    output_top_n: 5 # how many of the top outputs to save
_EOF_

$ cat << _EOF_ >> configs/vllm_inference.yaml
model:
  model: llm-jp/llm-jp-3-3.7b-instruct
tokenizer:
  pretrained_model_name_or_path: llm-jp/llm-jp-3-3.7b-instruct
_EOF_

# download llm-jp-eval-inference repository & build container for evaluation

$ task install

$ task eval_inference inference_config=configs/vllm_inference.yaml eval_config=configs/config.yaml

# to also evaluate non-commercial datasets, append "-- --include_non_commercial" or edit config.yaml directly

$ task eval_inference inference_config=configs/vllm_inference.yaml eval_config=configs/config.yaml -- --include_non_commercial

# download llm-jp-eval-inference repository & build container for evaluation

$ task install

$ cd llm-jp-eval-inference/inference-modules/vllm && uv run vllm serve llm-jp/llm-jp-3-3.7b-instruct &

# evaluation setup

$ cat << _EOF_ >> configs/config.yaml
exporters:
  local:
    export_output_table: true # whether to save the outputs as a table
    output_top_n: 5 # how many of the top outputs to save
online_inference_config:
  provider: vllm-openai
  # number of parallel requests
  max_concurrent: 4
  hostname: localhost:8000
  model_name: llm-jp/llm-jp-3-3.7b-instruct
  generation_config:
    temperature: 0.0
_EOF_

# to evaluate code generation (default runtime: Docker)

$ task run_sandbox

# to use Singularity instead

$ task run_sandbox CONTAINER_RUNTIME=singularity

# run the evaluation

$ uv run scripts/evaluate_llm.py eval --config configs/config.yaml
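The online_inference_config above directs evaluation at the OpenAI-compatible server that vllm serve starts on localhost:8000. This README does not show the requests llm-jp-eval actually sends. The sketch below is therefore a minimal illustration under stated assumptions: it uses the chat completions route /v1/chat/completions, which vLLM's OpenAI-compatible server exposes, and it approximates max_concurrent with a thread pool. The helper names build_chat_request, send, and run_all are hypothetical.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def build_chat_request(hostname: str, model_name: str, prompt: str,
                       temperature: float = 0.0) -> urllib.request.Request:
    """Build (without sending) a POST to an OpenAI-compatible chat endpoint.

    Assumption: the chat completions route; llm-jp-eval's own client may differ.
    """
    payload = {
        "model": model_name,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # mirrors generation_config.temperature
    }
    return urllib.request.Request(
        f"http://{hostname}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send one request and return the text of the first choice."""
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


def run_all(prompts, hostname, model_name, max_concurrent=4, sender=send):
    """Keep at most max_concurrent requests in flight; results keep prompt order."""
    reqs = [build_chat_request(hostname, model_name, p) for p in prompts]
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        return list(pool.map(sender, reqs))
```

With the vLLM server from the previous step running, run_all(["日本の首都は？"], "localhost:8000", "llm-jp/llm-jp-3-3.7b-instruct") sends one request. The sender argument exists so the request logic can be exercised without a live server.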

If you already have preprocessed datasets, this step is not needed.

output-dir serves as the output directory for all of llm-jp-eval; its default value is local_files.

$ uv run python scripts/preprocess_dataset.py \
  --dataset-name example_data \
  --output-dir /path/to/dataset_dir \
  --version-name dataset_version_name

This creates both the evaluation data and the instruction data (jaster) in /path/to/dataset_dir.

The task prepare command lets you check which datasets are installed and reinstall any that failed:

# list datasets that are not installed

$ task prepare

# reinstall a specific dataset

$ task prepare dataset=example_data

# install every dataset that is not yet installed

$ task prepare dataset=all

--version-name is optional and defaults to the llm-jp-eval version.

Set output_dir in configs/config.yaml to the location where the preprocessed datasets were written, then run the script below.

The suffix is a hash of some of the parameters used for evaluation (see EvaluationConfig for details).

Output is written under <output_dir>/datasets/<version>/evaluation/<split>.

If inference_input_dir is null in the config file, a subdirectory prompts_<suffix> is created directly under that directory.
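The directory layout just described can be sketched as follows. This is not llm-jp-eval's code. The hash algorithm (SHA-256 over sorted-key JSON, truncated to 8 hex characters) is an assumption, and so is the choice of which parameters feed into it; the real selection lives in EvaluationConfig. Another assumption is that a non-null inference_input_dir is used as-is. params_suffix and prompt_dump_dir are hypothetical names.

```python
import hashlib
import json
from pathlib import Path
from typing import Optional


def params_suffix(params: dict, length: int = 8) -> str:
    """Hash evaluation parameters into a short suffix that ignores key order.

    Assumption: SHA-256 over sorted-key JSON; llm-jp-eval may hash differently.
    """
    canonical = json.dumps(params, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:length]


def prompt_dump_dir(output_dir: str, version: str, split: str, params: dict,
                    inference_input_dir: Optional[str] = None) -> Path:
    """Directory that would receive the dumped prompts for one split."""
    if inference_input_dir is not None:
        # Assumption: an explicit inference_input_dir is used as-is.
        return Path(inference_input_dir)
    base = Path(output_dir) / "datasets" / version / "evaluation" / split
    return base / f"prompts_{params_suffix(params)}"
```

Hashing a canonical, key-sorted form means two configs with the same parameter values map to the same prompts_<suffix> directory, and any change to those values yields a new one.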

$ uv run python scripts/evaluate_llm.py dump --config path/to/config.yaml

The datasets to evaluate and the settings for computing statistics must be provided in a separate evaluation dataset config file.

If a dataset listed under datasets is not found during evaluation, an error is raised.
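The config format that follows assigns each category a default_metric, optional per-dataset metrics overrides, and a list of datasets. The sketch below shows one plausible way such a block could be turned into per-category statistics. Several things here are assumptions: the category score is a plain mean, the missing-dataset error is a KeyError, and aggregate_categories is a hypothetical name. llm-jp-eval's own aggregation may differ.

```python
from typing import Dict, List


def aggregate_categories(scores: Dict[str, Dict[str, float]],
                         categories: Dict[str, dict]) -> Dict[str, float]:
    """Average each category's per-dataset scores (assumption: a plain mean).

    scores: {dataset: {metric: value}}
    categories: the parsed `categories` block (default_metric, metrics, datasets)
    """
    summary: Dict[str, float] = {}
    for name, cat in categories.items():
        values: List[float] = []
        for dataset in cat["datasets"]:
            if dataset not in scores:
                # Mirrors the rule that a listed but missing dataset is an error.
                raise KeyError(f"dataset {dataset!r} in category {name!r} has no results")
            # A per-dataset entry under `metrics` overrides `default_metric`.
            metric = (cat.get("metrics") or {}).get(dataset, cat["default_metric"])
            values.append(scores[dataset][metric])
        summary[name] = sum(values) / len(values)
    return summary
```

For example, a category with datasets jamp and janli, both scored by exact_match at 0.25 and 0.75, would summarize to 0.5. Adding wiki_reading: char_f1 under metrics would make that dataset contribute its char_f1 score instead.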

Config format

datasets:
  - jamp
  - janli
  ...
categories: # per-category statistics settings
  NLI:
    description: "Natural Language Inference"
    default_metric: exact_match
    # set per-dataset metrics here if needed
    metrics: {}
    # metrics:
    #   wiki_reading: char_f1
    datasets: # datasets in this category (each dataset must support the metric)
      - jamp
      - janli
      - jnli
      - jsem
      - jsick
dataset_info_overrides: # per-dataset overrides
  dataset_key: # any dataset identifier
    # for the attributes you can set, see OutputInfo in src/llm_jp_eval/jaster/base.py
    attributes: override_value

Pointing to the evaluation dataset config file

# in configs/config.yaml
eval_dataset_config_path: /path/to/dataset_config.yaml # default: './eval_configs/all_datasets.yaml'
# whether to include non-commercial datasets among those listed in datasets (false excludes them)
include_non_commercial: false

With llm-jp/llm-jp-eval-inference you can run inference on the following engines. For how to install the libraries and run inference, see each engine's README.

- vLLM

- Transformers (TextGenerationPipeline)

- TensorRT-LLM - experimental

Installation example:

$ git clone https://github.com/llm-jp/llm-jp-eval-inference

$ cd llm-jp-eval-inference/inference-modules/vllm && uv sync

# run inference

$ cd llm-jp-eval-inference/inference-modules/vllm && uv run inference.py --config path/to/inference_config.yaml

# example: serving the model with vllm serve

$ uv run vllm serve organization/model_name

Settings are managed in a config file; the configurable values and their defaults are defined by EvaluationConfig. For the settings llm-jp-eval assumes by default, see the default configuration file.

To save results to wandb, set your WANDB API key in the WANDB_API_KEY environment variable beforehand.

$ CUDA_VISIBLE_DEVICES=0 uv run scripts/evaluate_llm.py eval --config config.yaml \
  model.pretrained_model_name_or_path=/path/to/model_dir \
  tokenizer.pretrained_model_name_or_path=/path/to/tokenizer_dir \
  dataset_dir=/path/to/dataset_dir

To evaluate saved inference results, run scripts/evaluate_llm.py with the offline_dir option pointing at the directory where those results were saved.

If inference logs were saved to wandb, the evaluation resumes that run and stores its results in the same run.

Example that reads previously generated inference results:

$ CUDA_VISIBLE_DEVICES=0 uv run python scripts/evaluate_llm.py eval \
  --inference_result_dir=./llm-jp-eval-inference/inference-modules/vllm/outputs/llm-jp--llm-jp-13b-v2.0_vllm_yyyyMMdd_hhmmss/

Example of evaluating against an OpenAI-compatible HTTP server:

Add the following to the config file:

online_inference_config:
  provider: vllm-openai
  # number of parallel requests
  max_concurrent: 4
  hostname: localhost:8000
  model_name: llm-jp/llm-jp-3-3.7b-instruct
  generation_config:
    temperature: 0.0

Run the evaluation:

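$ uv run scripts/evaluate_llm.py eval --config path/to/config.yaml

llm-jp-eval uses Dify-sandbox as the environment for executing arbitrary code in code generation tasks, with Docker or Singularity as the container runtime. Where no container runtime is available, you can configure code-execution evaluation to bypass Dify-sandbox, but only after weighing the security risks carefully. Executing generated code in the same environment that runs the evaluation is a very high security risk.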

Write the evaluation dataset config as shown below, using eval_configs/all_datasets.yaml as a reference.

dataset_info_overrides:
  mbpp:
    # use code_exec instead of code_exec_sandbox
    metrics: ["code_exec", "pylint_check"]

How evaluation scores and outputs are exported is defined by the exporters settings in the config file.

See src/llm_jp_eval/exporter for details.

exporters requires at least one output setting.

exporters:
  # example: write a JSON file under output_dir/results
  local:
    filename_format: result_{run_name}_{timestamp}.json
    export_output_table: true # whether to export the inference outputs
    output_top_n: 5
  # example: export to WandB
  # evaluation results and generation logs are synced to W&B automatically
  wandb:
    export_output_table: true
    project: project_name
    entity: entity_name
    output_top_n: null # null exports all rows

For details on the configurable items, see the README, the config template, or the EvaluationConfig class.

- Using the existing datasets as a reference, add a dataset (e.g. example_data) under src/llm_jp_eval/jaster. The files to edit are:
  - src/llm_jp_eval/jaster/example_data.py: the dataset class, which mainly handles download and preprocessing. This file must be newly created.
  - If needed, edit or copy eval_configs/all_datasets.yaml and add eval_dataset_config_path: path/to/eval_dataset_config.yaml to your config file.
- Run the dataset creation script:

$ uv run scripts/preprocess_dataset.py \
  --dataset-name example_data \
  --output-dir /path/to/dataset_dir \
  --version-name dataset_version_name

This creates the evaluation datasets and the tuning datasets in /path/to/dataset_dir.

- Datasets that already have a train/dev/test split keep that split.
- Datasets split only into train and test: train is shuffled and then split into train:dev = 9:1.
- Datasets without a fixed split are used for evaluation only.
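The train:dev = 9:1 rule for datasets that ship only train and test can be sketched as below. The fixed seed, the round-down boundary, and the name split_train_dev are assumptions for illustration. The actual preprocessing under src/llm_jp_eval/jaster may shuffle and round differently.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_dev(train: Sequence[T], train_parts: int = 9, dev_parts: int = 1,
                    seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle a train split and cut it into train:dev = train_parts:dev_parts."""
    items = list(train)
    random.Random(seed).shuffle(items)  # assumption: fixed seed for reproducibility
    # Integer arithmetic avoids float rounding surprises at the boundary.
    n_train = len(items) * train_parts // (train_parts + dev_parts)
    return items[:n_train], items[n_train:]
```

A local random.Random instance is used so the split stays reproducible without touching the global random state that other preprocessing code might rely on.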

Models instruction-tuned with jaster have been shown to reach very high llm-jp-eval scores even when the test data was not used for instruction tuning. Such models have also been reported to cause various problems, such as generating abnormal strings, in evaluations other than llm-jp-eval and in practical use. A high llm-jp-eval score therefore does not by itself justify claiming that a model outperforms other LLMs, and jaster should be used for training or tuning only with great care.

This tool is distributed under the Apache License 2.0. For the license of each dataset, see DATASET.md.

- Please report problems or suggestions via Issues.
- We use pre-commit to manage code formatters.
  - Running pre-commit run --all-files or uv run pre-commit run --all-files formats the code automatically and lists the places that need fixing. Fix all of them before committing.
- To contribute fixes or additions, open a Pull Request as follows:
  - Create your branch from dev and open the Pull Request against dev.
  - Pull Requests are merged after review.
  - dev is merged into main when the timing is appropriate.


Alternative AI tools for llm-jp-eval

Similar Open Source Tools


Mirror-Flowers

Mirror Flowers is an out-of-the-box code security auditing tool that integrates local static scanning (line-level taint tracking + AST) with AI verification to help quickly discover and locate high-risk issues, providing repair suggestions. It supports multiple languages such as PHP, Python, JavaScript/TypeScript, and Java. The tool offers both single-file and project modes, with features like concurrent acceleration, integrated UI for visual results, and compatibility with multiple OpenAI interface providers. Users can configure the tool through environment variables or API, and can utilize it through a web UI or HTTP API for tasks like single-file auditing or project auditing.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, OpenAI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

astrbot_plugin_qq_group_daily_analysis

AstrBot Plugin QQ Group Daily Analysis is an intelligent chat analysis plugin based on AstrBot. It provides comprehensive statistics on group chat activity and participation, extracts hot topics and discussion points, analyzes user behavior to assign personalized titles, and identifies notable messages in the chat. The plugin generates visually appealing daily chat analysis reports in various formats including images and PDFs. Users can customize analysis parameters, manage specific groups, and schedule automatic daily analysis. The plugin requires configuration of an LLM provider for intelligent analysis and adaptation to the QQ platform adapter.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

For similar tasks

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

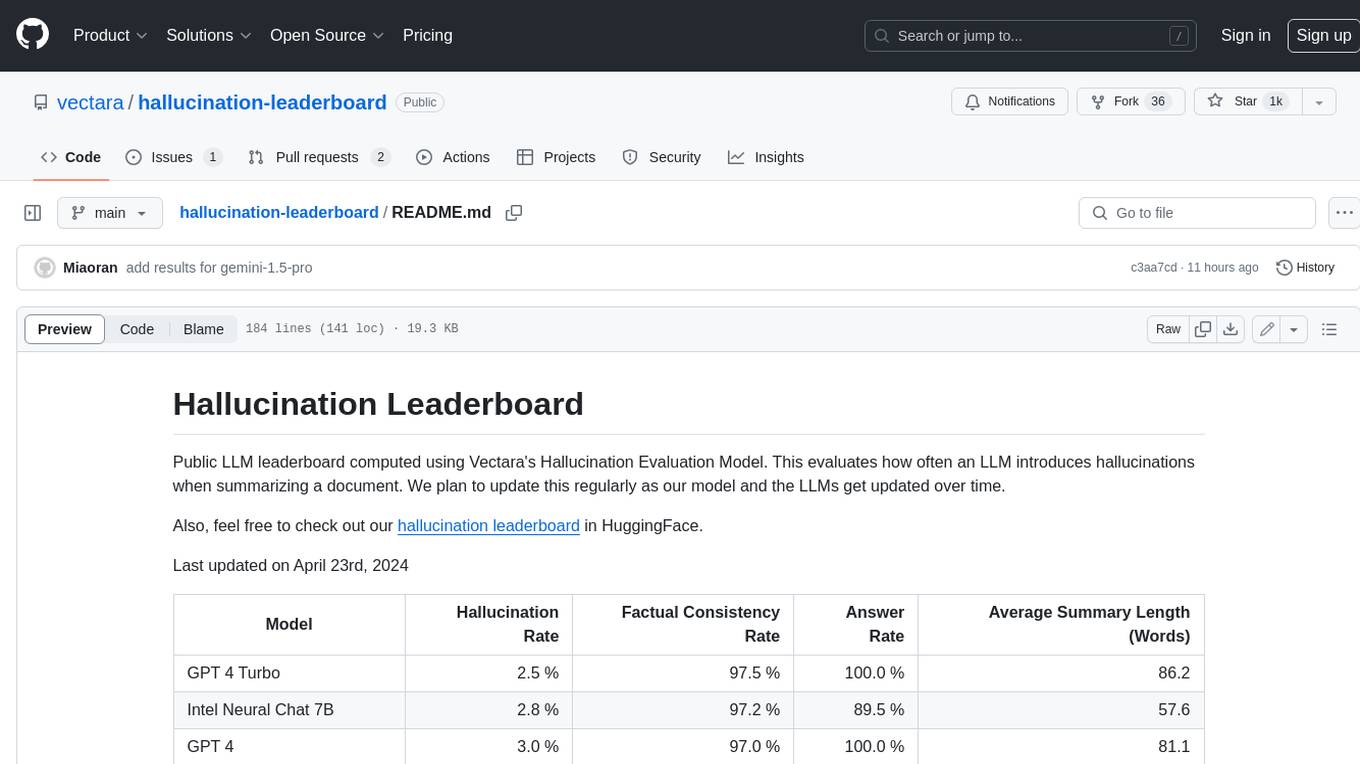

hallucination-leaderboard

This leaderboard evaluates the hallucination rate of various Large Language Models (LLMs) when summarizing documents. It uses a model trained by Vectara to detect hallucinations in LLM outputs. The leaderboard includes models from OpenAI, Anthropic, Google, Microsoft, Amazon, and others. The evaluation is based on 831 documents that were summarized by all the models. The leaderboard shows the hallucination rate, factual consistency rate, answer rate, and average summary length for each model.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

bocoel

BoCoEL is a tool that leverages Bayesian Optimization to efficiently evaluate large language models by selecting a subset of the corpus for evaluation. It encodes individual entries into embeddings, uses Bayesian optimization to select queries, retrieves from the corpus, and provides easily managed evaluations. The tool aims to reduce computation costs during evaluation with a dynamic budget, supporting models like GPT2, Pythia, and LLAMA through integration with Hugging Face transformers and datasets. BoCoEL offers a modular design and efficient representation of the corpus to enhance evaluation quality.

cladder

CLadder is a repository containing the CLadder dataset for evaluating causal reasoning in language models. The dataset consists of yes/no questions in natural language that require statistical and causal inference to answer. It includes fields such as question_id, given_info, question, answer, reasoning, and metadata like query_type and rung. The dataset also provides prompts for evaluating language models and example questions with associated reasoning steps. Additionally, it offers dataset statistics, data variants, and code setup instructions for using the repository.

uncheatable_eval

Uncheatable Eval is a tool designed to assess the language modeling capabilities of LLMs on real-time, newly generated data from the internet. It aims to provide a reliable evaluation method that is immune to data leaks and cannot be gamed. The tool supports the evaluation of Hugging Face AutoModelForCausalLM models and RWKV models by calculating the sum of negative log probabilities on new texts from various sources such as recent papers on arXiv, new projects on GitHub, news articles, and more. Uncheatable Eval ensures that the evaluation data is not included in the training sets of publicly released models, thus offering a fair assessment of the models' performance.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

For similar jobs


AlignBench

AlignBench is the first comprehensive evaluation benchmark for assessing the alignment level of Chinese large models across multiple dimensions. It includes introduction information, data, and code related to AlignBench. The benchmark aims to evaluate the alignment performance of Chinese large language models through a multi-dimensional and rule-calibrated evaluation method, enhancing reliability and interpretability.

LiveBench

LiveBench is a benchmark for Large Language Models (LLMs) that limits contamination by releasing new questions monthly, based on recent datasets, arXiv papers, news articles, and IMDb movie synopses. It provides verifiable, objective ground-truth answers for accurate scoring without an LLM judge. The tool offers 18 diverse tasks across 6 categories and promises to release more challenging tasks over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

home-assistant-datasets

This package provides a collection of datasets for evaluating AI Models in the context of Home Assistant. It includes synthetic data generation, loading data into Home Assistant, model evaluation with different conversation agents, human annotation of results, and visualization of improvements over time. The datasets cover home descriptions, area descriptions, device descriptions, and summaries that can be performed on a home. The tool aims to build datasets for future training purposes.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.