new-api

AI模型接口管理与分发系统,支持将多种大模型转为统一格式调用,支持OpenAI、Claude等格式,可供个人或者企业内部管理与分发渠道使用,本项目基于One API二次开发。🍥 The next-generation LLM gateway and AI asset management system supports multiple languages.

Stars: 6490

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

README:

中文 | English

[!NOTE]

本项目为开源项目,在One API的基础上进行二次开发

[!IMPORTANT]

- 本项目仅供个人学习使用,不保证稳定性,且不提供任何技术支持。

- 使用者必须在遵循 OpenAI 的使用条款以及法律法规的情况下使用,不得用于非法用途。

- 根据《生成式人工智能服务管理暂行办法》的要求,请勿对中国地区公众提供一切未经备案的生成式人工智能服务。

详细文档请访问我们的官方Wiki:https://docs.newapi.pro/

New API提供了丰富的功能,详细特性请参考特性说明:

- 🎨 全新的UI界面

- 🌍 多语言支持

- 💰 支持在线充值功能(易支付)

- 🔍 支持用key查询使用额度(配合neko-api-key-tool)

- 🔄 兼容原版One API的数据库

- 💵 支持模型按次数收费

- ⚖️ 支持渠道加权随机

- 📈 数据看板(控制台)

- 🔒 令牌分组、模型限制

- 🤖 支持更多授权登陆方式(LinuxDO,Telegram、OIDC)

- 🔄 支持Rerank模型(Cohere和Jina),接口文档

- ⚡ 支持OpenAI Realtime API(包括Azure渠道),接口文档

- ⚡ 支持Claude Messages 格式,接口文档

- 支持使用路由/chat2link进入聊天界面

- 🧠 支持通过模型名称后缀设置 reasoning effort:

- OpenAI o系列模型

- 添加后缀

-high设置为 high reasoning effort (例如:o3-mini-high) - 添加后缀

-medium设置为 medium reasoning effort (例如:o3-mini-medium) - 添加后缀

-low设置为 low reasoning effort (例如:o3-mini-low)

- 添加后缀

- Claude 思考模型

- 添加后缀

-thinking启用思考模式 (例如:claude-3-7-sonnet-20250219-thinking)

- 添加后缀

- OpenAI o系列模型

- 🔄 思考转内容功能

- 🔄 针对用户的模型限流功能

- 💰 缓存计费支持,开启后可以在缓存命中时按照设定的比例计费:

- 在

系统设置-运营设置中设置提示缓存倍率选项 - 在渠道中设置

提示缓存倍率,范围 0-1,例如设置为 0.5 表示缓存命中时按照 50% 计费 - 支持的渠道:

- [x] OpenAI

- [x] Azure

- [x] DeepSeek

- [x] Claude

- 在

此版本支持多种模型,详情请参考接口文档-中继接口:

- 第三方模型 gpts (gpt-4-gizmo-*)

- 第三方渠道Midjourney-Proxy(Plus)接口,接口文档

- 第三方渠道Suno API接口,接口文档

- 自定义渠道,支持填入完整调用地址

- Rerank模型(Cohere和Jina),接口文档

- Claude Messages 格式,接口文档

- Dify,当前仅支持chatflow

详细配置说明请参考安装指南-环境变量配置:

-

GENERATE_DEFAULT_TOKEN:是否为新注册用户生成初始令牌,默认为false -

STREAMING_TIMEOUT:流式回复超时时间,默认60秒 -

DIFY_DEBUG:Dify渠道是否输出工作流和节点信息,默认true -

FORCE_STREAM_OPTION:是否覆盖客户端stream_options参数,默认true -

GET_MEDIA_TOKEN:是否统计图片token,默认true -

GET_MEDIA_TOKEN_NOT_STREAM:非流情况下是否统计图片token,默认true -

UPDATE_TASK:是否更新异步任务(Midjourney、Suno),默认true -

COHERE_SAFETY_SETTING:Cohere模型安全设置,可选值为NONE,CONTEXTUAL,STRICT,默认NONE -

GEMINI_VISION_MAX_IMAGE_NUM:Gemini模型最大图片数量,默认16 -

MAX_FILE_DOWNLOAD_MB: 最大文件下载大小,单位MB,默认20 -

CRYPTO_SECRET:加密密钥,用于加密数据库内容 -

AZURE_DEFAULT_API_VERSION:Azure渠道默认API版本,默认2024-12-01-preview -

NOTIFICATION_LIMIT_DURATION_MINUTE:通知限制持续时间,默认10分钟 -

NOTIFY_LIMIT_COUNT:用户通知在指定持续时间内的最大数量,默认2

详细部署指南请参考安装指南-部署方式:

[!TIP] 最新版Docker镜像:

calciumion/new-api:latest

- 必须设置环境变量

SESSION_SECRET,否则会导致多机部署时登录状态不一致 - 如果公用Redis,必须设置

CRYPTO_SECRET,否则会导致多机部署时Redis内容无法获取

- 本地数据库(默认):SQLite(Docker部署必须挂载

/data目录) - 远程数据库:MySQL版本 >= 5.7.8,PgSQL版本 >= 9.6

安装宝塔面板(9.2.0版本及以上),在应用商店中找到New-API安装即可。 图文教程

# 下载项目

git clone https://github.com/Calcium-Ion/new-api.git

cd new-api

# 按需编辑docker-compose.yml

# 启动

docker-compose up -d# 使用SQLite

docker run --name new-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/new-api:/data calciumion/new-api:latest

# 使用MySQL

docker run --name new-api -d --restart always -p 3000:3000 -e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi" -e TZ=Asia/Shanghai -v /home/ubuntu/data/new-api:/data calciumion/new-api:latest渠道重试功能已经实现,可以在设置->运营设置->通用设置设置重试次数,建议开启缓存功能。

-

REDIS_CONN_STRING:设置Redis作为缓存 -

MEMORY_CACHE_ENABLED:启用内存缓存(设置了Redis则无需手动设置)

详细接口文档请参考接口文档:

- One API:原版项目

- Midjourney-Proxy:Midjourney接口支持

- chatnio:下一代AI一站式B/C端解决方案

- neko-api-key-tool:用key查询使用额度

其他基于New API的项目:

- new-api-horizon:New API高性能优化版

- VoAPI:基于New API的前端美化版本

如有问题,请参考帮助支持:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for new-api

Similar Open Source Tools

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

astrbot_plugin_qq_group_daily_analysis

AstrBot Plugin QQ Group Daily Analysis is an intelligent chat analysis plugin based on AstrBot. It provides comprehensive statistics on group chat activity and participation, extracts hot topics and discussion points, analyzes user behavior to assign personalized titles, and identifies notable messages in the chat. The plugin generates visually appealing daily chat analysis reports in various formats including images and PDFs. Users can customize analysis parameters, manage specific groups, and schedule automatic daily analysis. The plugin requires configuration of an LLM provider for intelligent analysis and adaptation to the QQ platform adapter.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

EduChat

EduChat is a large-scale language model-based chatbot system designed for intelligent education by the EduNLP team at East China Normal University. The project focuses on developing a dialogue-based language model for the education vertical domain, integrating diverse education vertical domain data, and providing functions such as automatic question generation, homework correction, emotional support, course guidance, and college entrance examination consultation. The tool aims to serve teachers, students, and parents to achieve personalized, fair, and warm intelligent education.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

chatgpt-wechat

ChatGPT-WeChat is a personal assistant application that can be safely used on WeChat through enterprise WeChat without the risk of being banned. The project is open source and free, with no paid sections or external traffic operations except for advertising on the author's public account '积木成楼'. It supports various features such as secure usage on WeChat, multi-channel customer service message integration, proxy support, session management, rapid message response, voice and image messaging, drawing capabilities, private data storage, plugin support, and more. Users can also develop their own capabilities following the rules provided. The project is currently in development with stable versions available for use.

ZcChat

ZcChat is an AI desktop pet suitable for Galgame characters, featuring long-term memory, expressive actions, control over the computer, and voice functions. It utilizes Letta for AI long-term memory, Galgame-style character illustrations for more actions and expressions, and voice interaction with support for various voice synthesis tools like Vits. Users can configure characters, install Letta, set up voice synthesis and input, and control the pet to interact with the computer. The tool enhances visual and auditory experiences for users interested in AI desktop pets.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

For similar tasks

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

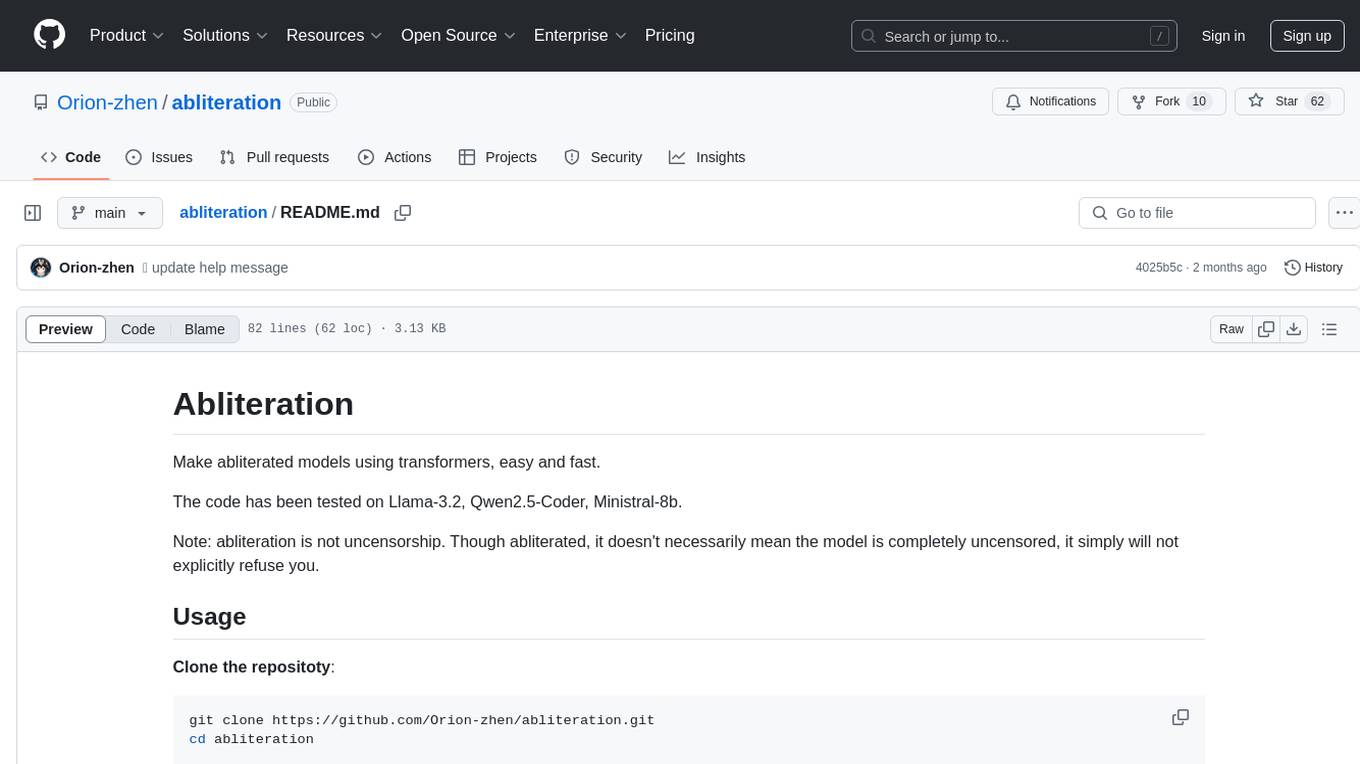

abliteration

Abliteration is a tool that allows users to create abliterated models using transformers quickly and easily. It is not a tool for uncensorship, but rather for making models that will not explicitly refuse users. Users can clone the repository, install dependencies, and make abliterations using the provided commands. The tool supports adjusting parameters for stubborn models and offers various options for customization. Abliteration can be used for creating modified models for specific tasks or topics.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.