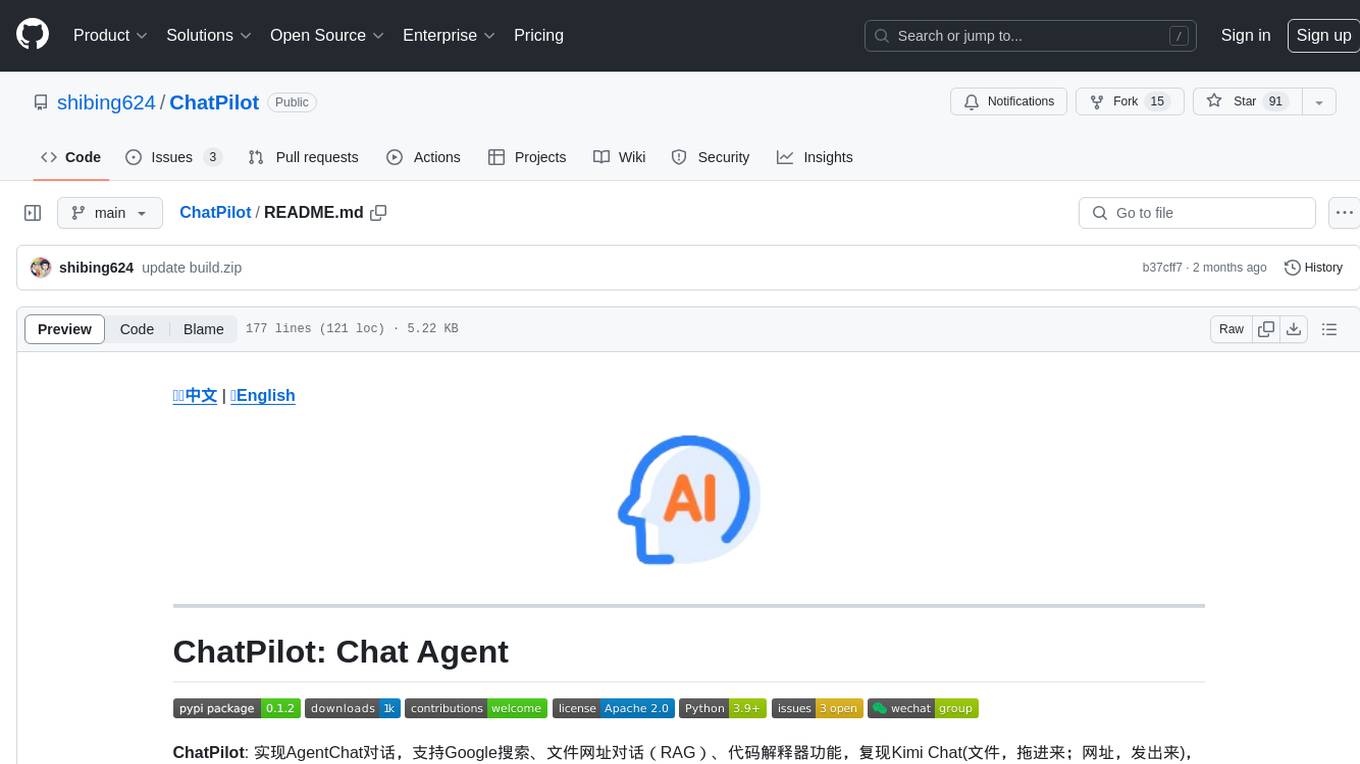

ChatPilot

ChatPilot: Chat Agent Web UI,实现Chat对话前端,支持Google搜索、文件网址对话(RAG)、代码解释器功能,复现了Kimi Chat(文件,拖进来;网址,发出来)。

Stars: 523

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

README:

ChatPilot: Chat Agent WebUI, 实现了AgentChat对话,支持Google搜索、文件网址对话(RAG)、代码解释器功能,复现Kimi Chat(文件,拖进来;网址,发出来),支持OpenAI/Azure API。

- 本项目基于Agentica实现了Agent Assistant调用,支持如下功能:

- 工具调用:支持Agent调用外部工具

- 联网搜索工具:Google Search API(Serper/DuckDuckGo)

- URL自动解析工具:复现了Kimi Chat网址发出来功能

- Python代码解释器:支持E2B虚拟环境和本地python编译器环境运行代码

- 多种LLM接入:支持多种LLM模型以多方式接入,包括使用Ollama Api接入各种本地开源模型;使用litellm Api接入各云服务部署模型;使用OpenAI Api接入GPT系列模型

- RAG:支持Agent调用RAG文件问答

- 工具调用:支持Agent调用外部工具

- 支持前后端服务分离,前端使用Svelte,后端使用FastAPI

- 支持语音输入输出,支持图像生成

- 支持用户管理,权限控制,支持聊天记录导入导出

Official Demo: https://chat.mulanai.com

export OPENAI_API_KEY=sk-xxx

export OPENAI_BASE_URL=https://xxx/v1

docker run -it \

-e OPENAI_API_KEY=$WORKSPACE_BASE \

-e OPENAI_BASE_URL=$OPENAI_BASE_URL \

-e RAG_EMBEDDING_MODEL="text-embedding-ada-002" \

-p 8080:8080 --name chatpilot-$(date +%Y%m%d%H%M%S) shibing624/chatpilot:0.0.1You'll find ChatPilot running at http://0.0.0.0:8080 Enjoy! 😄

git clone https://github.com/shibing624/ChatPilot.git

cd ChatPilot

pip install -r requirements.txt

# Copying required .env file, and fill in the LLM api key

cp .env.example .env

bash start.sh好了,现在你的应用正在运行:http://0.0.0.0:8080 Enjoy! 😄

两种方法构建前端:

- 下载打包并编译好的前端 buid.zip 解压到项目web目录下。

- 如果修改了web前端代码,需要自己使用npm重新构建前端:

git clone https://github.com/shibing624/ChatPilot.git

cd ChatPilot/

# Building Frontend Using Node.js >= 20.10

cd web

npm install

npm run build输出:项目web目录产出build文件夹,包含了前端编译输出文件。

- 使用OpenAI API,配置环境变量:

export OPENAI_API_KEY=xxx

export OPENAI_BASE_URL=https://api.openai.com/v1

export MODEL_TYPE="openai"- 如果使用Azure OpenAI API,需要配置如下环境变量:

export AZURE_OPENAI_API_KEY=

export AZURE_OPENAI_API_VERSION=

export AZURE_OPENAI_ENDPOINT=

export MODEL_TYPE="azure"以ollama serve启动ollama服务,然后配置OLLAMA_API_URL:export OLLAMA_API_URL=http://localhost:11413

- 安装

litellm包:

pip install litellm -U- 修改配置文件

chatpilot默认的litellm config文件在~/.cache/chatpilot/data/litellm/config.yaml

修改其内容如下:

model_list:

# - model_name: moonshot-v1-auto # show model name in the UI

# litellm_params: # all params accepted by litellm.completion() - https://docs.litellm.ai/docs/completion/input

# model: openai/moonshot-v1-auto # MODEL NAME sent to `litellm.completion()` #

# api_base: https://api.moonshot.cn/v1

# api_key: sk-xx

# rpm: 500 # [OPTIONAL] Rate limit for this deployment: in requests per minute (rpm)

- model_name: deepseek-ai/DeepSeek-Coder # show model name in the UI

litellm_params: # all params accepted by litellm.completion() - https://docs.litellm.ai/docs/completion/input

model: openai/deepseek-coder # MODEL NAME sent to `litellm.completion()` #

api_base: https://api.deepseek.com/v1

api_key: sk-xx

rpm: 500

- model_name: openai/o1-mini # show model name in the UI

litellm_params: # all params accepted by litellm.completion() - https://docs.litellm.ai/docs/completion/input

model: o1-mini # MODEL NAME sent to `litellm.completion()` #

api_base: https://api.61798.cn/v1

api_key: sk-xxx

rpm: 500

litellm_settings: # module level litellm settings - https://github.com/BerriAI/litellm/blob/main/litellm/__init__.py

drop_params: True

set_verbose: False- Issue(建议):

- 邮件我:xuming: [email protected]

- 微信我:加我微信号:xuming624, 备注:姓名-公司-NLP 进NLP交流群。

如果你在研究中使用了ChatPilot,请按如下格式引用:

APA:

Xu, M. ChatPilot: LLM agent toolkit (Version 0.0.2) [Computer software]. https://github.com/shibing624/ChatPilotBibTeX:

@misc{ChatPilot,

author = {Ming Xu},

title = {ChatPilot: llm agent},

year = {2024},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/shibing624/ChatPilot}},

}授权协议为 The Apache License 2.0,可免费用做商业用途。请在产品说明中附加ChatPilot的链接和授权协议。

项目代码还很粗糙,如果大家对代码有所改进,欢迎提交回本项目,在提交之前,注意以下两点:

- 在

tests添加相应的单元测试 - 使用

python -m pytest -v来运行所有单元测试,确保所有单测都是通过的

之后即可提交PR。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ChatPilot

Similar Open Source Tools

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

aiotieba

Aiotieba is an asynchronous Python library for interacting with the Tieba API. It provides a comprehensive set of features for working with Tieba, including support for authentication, thread and post management, and image and file uploading. Aiotieba is well-documented and easy to use, making it a great choice for developers who want to build applications that interact with Tieba.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

ERNIE-SDK

ERNIE SDK repository contains two projects: ERNIE Bot Agent and ERNIE Bot. ERNIE Bot Agent is a large model intelligent agent development framework based on the Wenxin large model orchestration capability introduced by Baidu PaddlePaddle, combined with the rich preset platform functions of the PaddlePaddle Star River community. ERNIE Bot provides developers with convenient interfaces to easily call the Wenxin large model for text creation, general conversation, semantic vectors, and AI drawing basic functions.

QFurina

QFurina is a powerful and easily extensible Python QQ robot backend service that provides a range of automation and interactive features. It supports multiple messaging platforms and has a robust plugin system, allowing users to easily expand and customize functionality.

AI-Codereview-Gitlab

AI-Codereview-Gitlab is an automated code review tool based on large models, designed to help development teams conduct intelligent code reviews quickly during code merging or submission. It supports multiple large models including DeepSeek, ZhipuAI, OpenAI, and Ollama. The tool can automatically push review results to DingTalk, WeChat Work, and Feishu, generate daily reports based on GitLab commit records, and provide a visual dashboard to display code review records. The tool works by triggering webhook events on GitLab when users submit code, calling third-party large models to review the code, and recording the review results in corresponding Merge Requests or Commit Notes.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

xiaozhi-client

Xiaozhi Client is a tool that supports integration with Xiaozhi official servers, acts as a regular MCP Server integrated into various clients, allows configuration of multiple Xiaozhi access points for shared MCP configuration, aggregates multiple MCP Servers in a standard way, dynamically controls MCP Server tool visibility, supports local deployment of open-source server integration, provides web-based visual configuration allowing customization of IP and port, integrates ModelScope remote MCP services, creates Xiaozhi Client projects through templates, and supports running in the background.

chatgpt-adapter

ChatGPT-Adapter is an interface service that integrates various free services together. It provides a unified interface specification and integrates services like Bing, Claude-2, Gemini. Users can start the service by running the linux-server script and set proxies if needed. The tool offers model lists for different adapters, completion dialogues, authorization methods for different services like Claude, Bing, Gemini, Coze, and Lmsys. Additionally, it provides a free drawing interface with options like coze.dall-e-3, sd.dall-e-3, xl.dall-e-3, pg.dall-e-3 based on user-provided Authorization keys. The tool also supports special flags for enhanced functionality.

siliconflow-plugin

SiliconFlow-PLUGIN (SF-PLUGIN) is a versatile AI integration plugin for the Yunzai robot framework, supporting multiple AI services and models. It includes features such as AI drawing, intelligent conversations, real-time search, text-to-speech synthesis, resource management, link handling, video parsing, group functions, WebSocket support, and Jimeng-Api interface. The plugin offers functionalities for drawing, conversation, search, image link retrieval, video parsing, group interactions, and more, enhancing the capabilities of the Yunzai framework.

For similar tasks

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.