CortexTheseus

Cortex - AI on Blockchain, Official Golang implementation

Stars: 134

CortexTheseus is a full node implementation of the Cortex blockchain, written in Go. It provides a complete set of features for interacting with the Cortex network, including the ability to create and manage accounts, send and receive transactions, and participate in consensus. CortexTheseus is designed to be scalable, secure, and easy to use for developers building applications on the Cortex blockchain.

README:

https://github.com/CortexFoundation/cvm-runtime

Stop your Cortex full node daemon before running this test.

https://github.com/CortexFoundation/torrentfs

git clone https://github.com/CortexFoundation/torrentfs.git

cd torrentfs

make

./build/bin/torrent download 'infohash:6b75cc1354495ec763a6b295ee407ea864a0c292'

./build/bin/torrent download 'infohash:b2f5b0036877be22c6101bdfa5f2c7927fc35ef8'

./build/bin/torrent download 'infohash:5a49fed84aaf368cbf472cc06e42f93a93d92db5'

./build/bin/torrent download 'infohash:1f1706fa53ce0723ba1c577418b222acbfa5a200'

./build/bin/torrent download 'infohash:3f1f6c007e8da3e16f7c3378a20a746e70f1c2b0'

downloaded ALL the torrents !!!!!!!!!!!!!!!!!!!
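The download commands above can be wrapped in a small loop. This is a minimal sketch, not part of the torrentfs repository: the function name download_all and the TORRENT_BIN variable are hypothetical, and it assumes the torrent binary was built at ./build/bin/torrent as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "<torrent binary> download infohash:<hash>" for each hash.
# TORRENT_BIN is an assumed variable name; it defaults to the path used above.
TORRENT_BIN="${TORRENT_BIN:-./build/bin/torrent}"

download_all() {
    local hash
    for hash in "$@"; do
        # Stop at the first failure so a broken download is not silently skipped.
        "$TORRENT_BIN" download "infohash:${hash}" || return 1
    done
}
```

For example, download_all 6b75cc1354495ec763a6b295ee407ea864a0c292 b2f5b0036877be22c6101bdfa5f2c7927fc35ef8 runs the first two downloads in order and returns non-zero as soon as one of them fails.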

https://github.com/CortexFoundation/inference

https://github.com/CortexFoundation/solution

https://github.com/CortexFoundation/rosetta-cortex

https://github.com/CortexFoundation/docker

https://github.com/CortexFoundation/robot

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm invpcid_single pti ibrs ibpb stibp fsgsbase bmi1 avx2 smep bmi2 erms invpcid xsaveopt

For example

cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz

stepping : 2

microcode : 0x1

cpu MHz : 2494.224

cache size : 30720 KB

physical id : 0

siblings : 2

core id : 0

cpu cores : 1

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm invpcid_single pti ibrs ibpb stibp fsgsbase bmi1 avx2 smep bmi2 erms invpcid xsaveopt

bugs : cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf mds swapgs itlb_multihit

bogomips : 4988.44

clflush size : 64

cache_alignment : 64

address sizes : 46 bits physical, 48 bits virtual

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 32

On-line CPU(s) list: 0-31

Thread(s) per core: 2

Core(s) per socket: 16

Socket(s): 1

NUMA node(s): 2

Vendor ID: AuthenticAMD

CPU family: 23

Model: 1

Model name: AMD EPYC 7571

Stepping: 2

CPU MHz: 2534.021

BogoMIPS: 4399.86

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32K

L1i cache: 64K

L2 cache: 512K

L3 cache: 8192K

NUMA node0 CPU(s): 0-7,16-23

NUMA node1 CPU(s): 8-15,24-31

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid amd_dcm aperfmperf tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy cr8_legacy abm sse4a misalignsse 3dnowprefetch topoext perfctr_core vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero xsaveerptr arat npt nrip_save
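The flag lists above can be compared against the local machine with a short script. This is only a sketch: the function names missing_flags and cpu_flags are hypothetical, and the set of flags to check is left to the caller because it depends on which instruction sets your build needs.

```shell
#!/usr/bin/env bash
# Print each flag from $1 (space-separated, flags to look for) that is absent
# from $2 (space-separated, flags the CPU reports). Prints nothing if all are present.
missing_flags() {
    local required="$1" available=" $2 " flag
    for flag in $required; do
        case "$available" in
            *" $flag "*) ;;                  # whole-word match, so "avx" does not match "avx2"
            *) printf '%s\n' "$flag" ;;
        esac
    done
}

# Print the flags of the first processor entry in a cpuinfo-style file
# (defaults to /proc/cpuinfo).
cpu_flags() {
    sed -n 's/^flags[[:space:]]*:[[:space:]]*//p' "${1:-/proc/cpuinfo}" | head -n 1
}
```

A call such as missing_flags "avx2 fma" "$(cpu_flags)" prints any of the named flags that the CPU does not report. The two flags here are illustrative picks from the list above, not a statement of the exact requirement.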

The Cortex node is developed on Ubuntu 18.04 x64 with CUDA 9.2 and NVIDIA driver 396.37, and requires CUDA compute capability >= 6.1. Newer Ubuntu distributions should also be compatible but are not fully tested. Recommended:

- cmake 3.11.0+

wget https://cmake.org/files/v3.11/cmake-3.11.0-rc4-Linux-x86_64.tar.gz

tar zxvf cmake-3.11.0-rc4-Linux-x86_64.tar.gz

sudo mv cmake-3.11.0-rc4-Linux-x86_64 /opt/cmake-3.11

sudo ln -sf /opt/cmake-3.11/bin/* /usr/bin/

sudo apt-get install make

- go 1.20+

wget https://go.dev/dl/go1.20.2.linux-amd64.tar.gz

sudo tar -C /usr/local -xzf go1.20.2.linux-amd64.tar.gz

echo 'export PATH="$PATH:/usr/local/go/bin"' >> ~/.bashrc

source ~/.bashrc

- gcc/g++ 5.4+

sudo apt install gcc

sudo apt install g++

- cuda 9.2+ (if you have a GPU)

export LD_LIBRARY_PATH=/usr/local/cuda/lib64/:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

export LIBRARY_PATH=/usr/local/cuda/lib64/:/usr/local/cuda/lib64/stubs:$LIBRARY_PATH

- nvidia driver 396.37+ (reference: https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html#major-components)

- ubuntu 18.04+
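The minimum versions listed above can be checked with a small comparison helper. This is a sketch under one assumption: it relies on sort -V (version sort from GNU coreutils) being available on the host. The function name version_ge is hypothetical.

```shell
#!/usr/bin/env bash
# Return success when version $1 is greater than or equal to version $2.
# Assumes GNU "sort -V" is available for version-aware ordering.
version_ge() {
    # After version sorting, the smaller version comes first; $1 >= $2
    # exactly when that smallest entry is $2.
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

For example, version_ge "$(gcc -dumpversion)" 5.4 && echo "gcc is new enough" checks the installed compiler against the gcc minimum from the list.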

Recommended (CentOS):

- cmake 3.11.0+

yum install cmake3

- go 1.20+

- gcc/g++ 5.4+ (reference: https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#system-requirements)

sudo yum install centos-release-scl

sudo yum install devtoolset-7-gcc*

scl enable devtoolset-7 bash

which gcc

gcc --version

- cuda 10.1+ (if you have a GPU)

export LD_LIBRARY_PATH=/usr/local/cuda/lib64/:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

export LIBRARY_PATH=/usr/local/cuda/lib64/:/usr/local/cuda/lib64/stubs:$LIBRARY_PATH

- nvidia driver 418.67+

- centos 7.6

- git clone --recursive https://github.com/CortexFoundation/CortexTheseus.git

- cd CortexTheseus

- make clean && make -j$(nproc)

ldd plugins/libcvm_runtime.so

linux-vdso.so.1 => (0x00007ffe107fa000)

libstdc++.so.6 => /lib64/libstdc++.so.6 (0x00007f250e6a8000)

libm.so.6 => /lib64/libm.so.6 (0x00007f250e3a6000)

libgomp.so.1 => /lib64/libgomp.so.1 (0x00007f250e180000)

libgcc_s.so.1 => /lib64/libgcc_s.so.1 (0x00007f250df6a000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f250dd4e000)

libc.so.6 => /lib64/libc.so.6 (0x00007f250d980000)

/lib64/ld-linux-x86-64.so.2 (0x00007f250ed35000)

(If the build fails, run rm -rf cvm-runtime && git submodule init && git submodule update, then try again.)
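In the ldd output above every library resolves to a path. When a dependency is missing, ldd prints "not found" for it instead. The sketch below filters ldd output for such lines. The function name unresolved_libs is hypothetical.

```shell
#!/usr/bin/env bash
# Read ldd output on stdin and print the name of every library reported
# as "not found". Returns non-zero if at least one library is unresolved.
unresolved_libs() {
    awk '/=> not found/ { print $1; missing = 1 } END { exit (missing ? 1 : 0) }'
}
```

It can be used as ldd plugins/libcvm_runtime.so | unresolved_libs, which prints nothing and succeeds when all dependencies resolve.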

Then run the following commands to start a Cortex full node:

1. cd CortexTheseus

2. export LD_LIBRARY_PATH=$PWD:$PWD/plugins:$LD_LIBRARY_PATH

3. ./build/bin/cortex
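Step 2 above can be expressed as a helper that builds the library path without leaving a trailing colon when LD_LIBRARY_PATH is unset (the dynamic linker treats an empty entry as the current directory). This is a sketch, and the function name cortex_ld_path is hypothetical.

```shell
#!/usr/bin/env bash
# Print an LD_LIBRARY_PATH value with <repo> and <repo>/plugins placed in front
# of the current value. $1 is the repository root (defaults to $PWD).
cortex_ld_path() {
    local repo="${1:-$PWD}"
    # ${VAR:+:$VAR} appends ":<old value>" only when the old value is non-empty.
    printf '%s:%s/plugins%s\n' "$repo" "$repo" "${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
}
```

From the CortexTheseus directory, LD_LIBRARY_PATH="$(cortex_ld_path "$PWD")" ./build/bin/cortex then starts the node with the plugin directory on the library path for that one command only.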

To view the help text, run ./build/bin/cortex --help

./cortex --bernard

Alternative AI tools for CortexTheseus

Similar Open Source Tools

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

KuiperLLama

KuiperLLama is a custom large model inference framework that guides users in building a LLama-supported inference framework with Cuda acceleration from scratch. The framework includes modules for architecture design, LLama2 model support, model quantization, Cuda basics, operator implementation, and fun tasks like text generation and storytelling. It also covers learning other commercial inference frameworks for comprehensive understanding. The project provides detailed tutorials and resources for developing and optimizing large models for efficient inference.

open-lx01

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

ai-real-estate-assistant

AI Real Estate Assistant is a modern platform that uses AI to assist real estate agencies in helping buyers and renters find their ideal properties. It features multiple AI model providers, intelligent query processing, advanced search and retrieval capabilities, and enhanced user experience. The tool is built with a FastAPI backend and Next.js frontend, offering semantic search, hybrid agent routing, and real-time analytics.

airdrop-tools

Airdrop-tools is a repository containing tools for all Telegram bots. Users can join the Telegram group for support and access various bot apps like Moonbix, Blum, Major, Memefi, and more. The setup requires Node.js and Python, with instructions on creating data directories and installing extensions. Users can run different tools like Blum, Major, Moonbix, Yescoin, Matchain, Fintopio, Agent301, IAMDOG, Banana, Cats, Wonton, and Xkucoin by following specific commands. The repository also provides contact information and options for supporting the creator.

flyte-sdk

Flyte 2 SDK is a pure Python tool for type-safe, distributed orchestration of agents, ML pipelines, and more. It allows users to write data pipelines, ML training jobs, and distributed compute in Python without any DSL constraints. With features like async-first parallelism and fine-grained observability, Flyte 2 offers a seamless workflow experience. Users can leverage core concepts like TaskEnvironments for container configuration, pure Python workflows for flexibility, and async parallelism for distributed execution. Advanced features include sub-task observability with tracing and remote task execution. The tool also provides native Jupyter integration for running and monitoring workflows directly from notebooks. Configuration and deployment are made easy with configuration files and commands for deploying and running workflows. Flyte 2 is licensed under the Apache 2.0 License.

xiaozhi-client

Xiaozhi Client is a tool that supports integration with Xiaozhi official servers, acts as a regular MCP Server integrated into various clients, allows configuration of multiple Xiaozhi access points for shared MCP configuration, aggregates multiple MCP Servers in a standard way, dynamically controls MCP Server tool visibility, supports local deployment of open-source server integration, provides web-based visual configuration allowing customization of IP and port, integrates ModelScope remote MCP services, creates Xiaozhi Client projects through templates, and supports running in the background.

lm-engine

LM Engine is a research-grade, production-ready library for training large language models at scale. It provides support for multiple accelerators including NVIDIA GPUs, Google TPUs, and AWS Trainiums. Key features include multi-accelerator support, advanced distributed training, flexible model architectures, HuggingFace integration, training modes like pretraining and finetuning, custom kernels for high performance, experiment tracking, and efficient checkpointing.

cipher

Cipher is a versatile encryption and decryption tool designed to secure sensitive information. It offers a user-friendly interface with various encryption algorithms to choose from, ensuring data confidentiality and integrity. With Cipher, users can easily encrypt text or files using strong encryption methods, making it suitable for protecting personal data, confidential documents, and communication. The tool also supports decryption of encrypted data, providing a seamless experience for users to access their secured information. Cipher is a reliable solution for individuals and organizations looking to enhance their data security measures.

openbrowser-ai

OpenBrowser is a framework for intelligent browser automation that combines direct CDP communication with a CodeAgent architecture. It allows users to navigate, interact with, and extract information from web pages autonomously. The tool supports various LLM providers, offers vision support for screenshot analysis, and includes a MCP server for Model Context Protocol support. Users can record browser sessions as video files and benefit from features like video recording and full documentation available at docs.openbrowser.me.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

ai-commit

ai-commit is a tool that automagically generates conventional git commit messages using AI. It supports various generators like Bito Cli, ERNIE-Bot-turbo, ERNIE-Bot, Moonshot, and OpenAI Chat. The tool requires PHP version 7.3 or higher for installation. Users can configure generators, set API keys, and easily generate and commit messages with customizable options. Additionally, ai-commit provides commands for managing configurations, self-updating, and shell completion scripts.

For similar tasks

Tinder_Automation_Bot

Tinder Automation Bot is an Appium-based tool designed for automated Tinder account creation and swiping on real devices. It offers functionalities such as automated account creation and swiping, along with integrations like Crane tweak and SMSPool service. The tool also provides features like device and automation management system, anti-bot system for human behavior modeling, IP rotation system for different IP addresses, and GPS location spoofing for different GPS coordinates. It is part of a series of automation bots including TikTok, Bumble, and Badoo automation bots.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

awesome-algorand

Awesome Algorand is a curated list of resources related to the Algorand Blockchain, including official resources, wallets, blockchain explorers, portfolio trackers, learning resources, development tools, DeFi platforms, nodes & consensus participation, subscription management, security auditing services, blockchain bridges, oracles, name services, community resources, Algorand Request for Comments, metrics and analytics services, decentralized voting tools, and NFT marketplaces. The repository provides a comprehensive collection of tools, tutorials, protocols, and platforms for developers, users, and enthusiasts interested in the Algorand ecosystem.

For similar jobs

ethereum-etl-airflow

This repository contains Airflow DAGs for extracting, transforming, and loading (ETL) data from the Ethereum blockchain into BigQuery. The DAGs use the Google Cloud Platform (GCP) services, including BigQuery, Cloud Storage, and Cloud Composer, to automate the ETL process. The repository also includes scripts for setting up the GCP environment and running the DAGs locally.

airnode

Airnode is a fully-serverless oracle node that is designed specifically for API providers to operate their own oracles.

CHATPGT-MEV-BOT

The 𝓜𝓔𝓥-𝓑𝓞𝓣 is a revolutionary tool that empowers users to maximize their ETH earnings through advanced slippage techniques within the Ethereum ecosystem. Its user-centric design, optimized earning mechanism, and comprehensive security measures make it an indispensable tool for traders seeking to enhance their crypto trading strategies. With its current free access, there's no better time to explore the 𝓜𝓔𝓥-𝓑𝓞𝓣's capabilities and witness the transformative impact it can have on your crypto trading journey.

CHATPGT-MEV-BOT-ETH

This tool is a bot that monitors the performance of MEV transactions on the Ethereum blockchain. It provides real-time data on MEV profitability, transaction volume, and network congestion. The bot can be used to identify profitable MEV opportunities and to track the performance of MEV strategies.

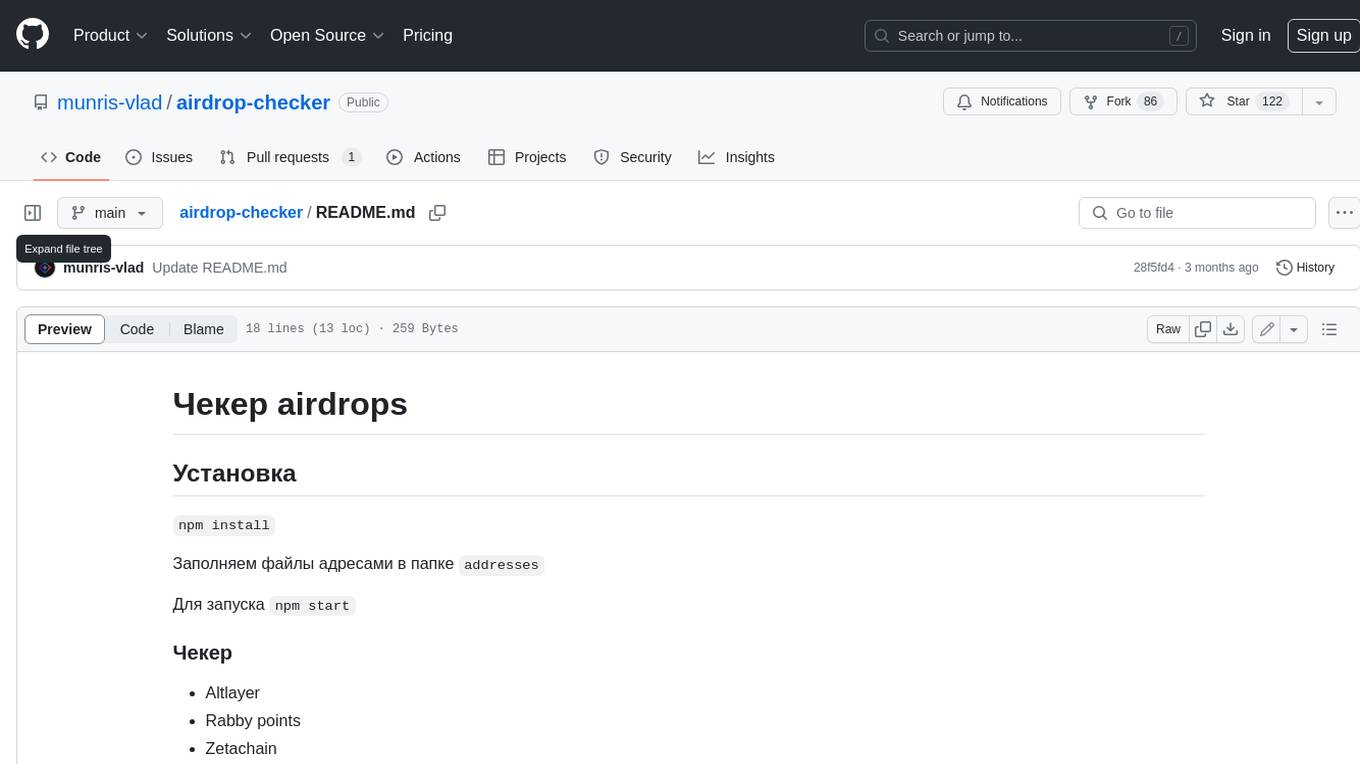

airdrop-checker

Airdrop-checker is a tool that helps you to check if you are eligible for any airdrops. It supports multiple airdrops, including Altlayer, Rabby points, Zetachain, Frame, Anoma, Dymension, and MEME. To use the tool, you need to install it using npm and then fill the addresses files in the addresses folder with your wallet addresses. Once you have done this, you can run the tool using npm start.

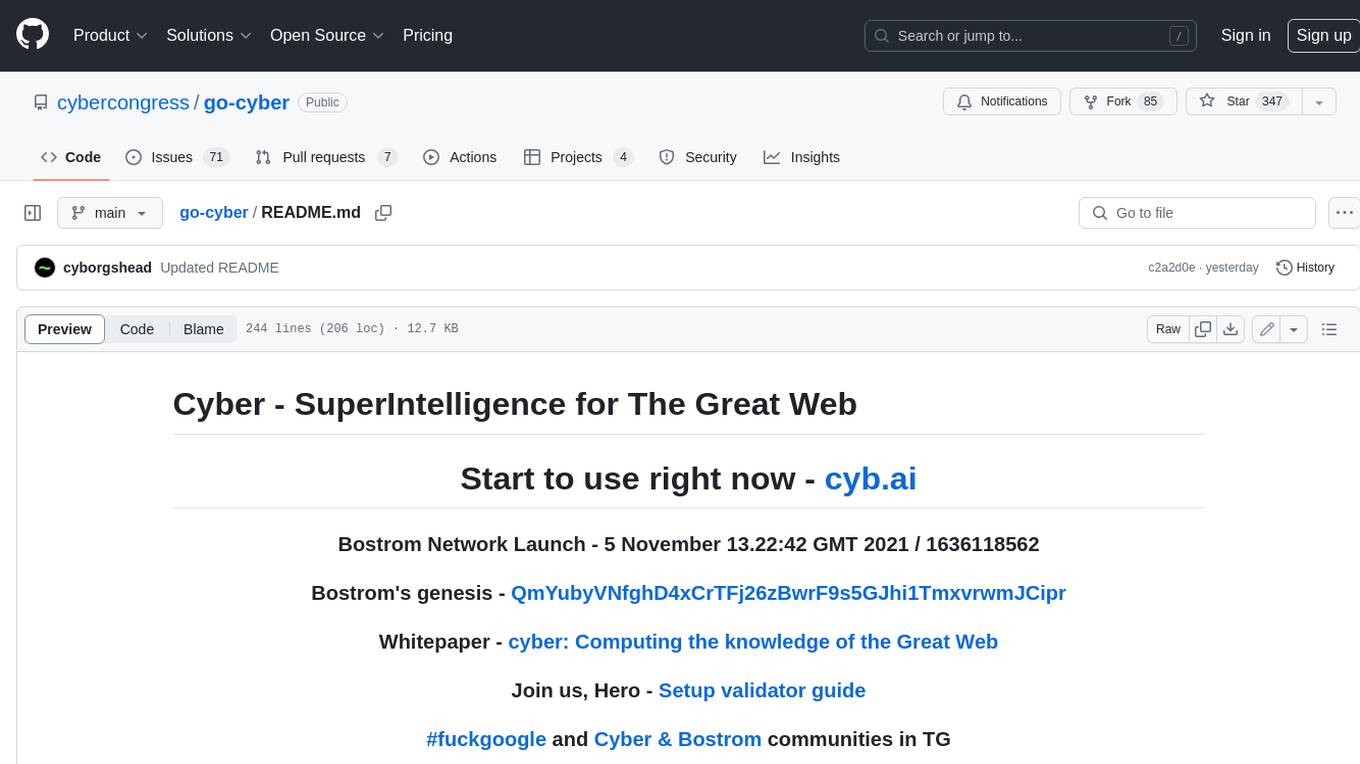

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

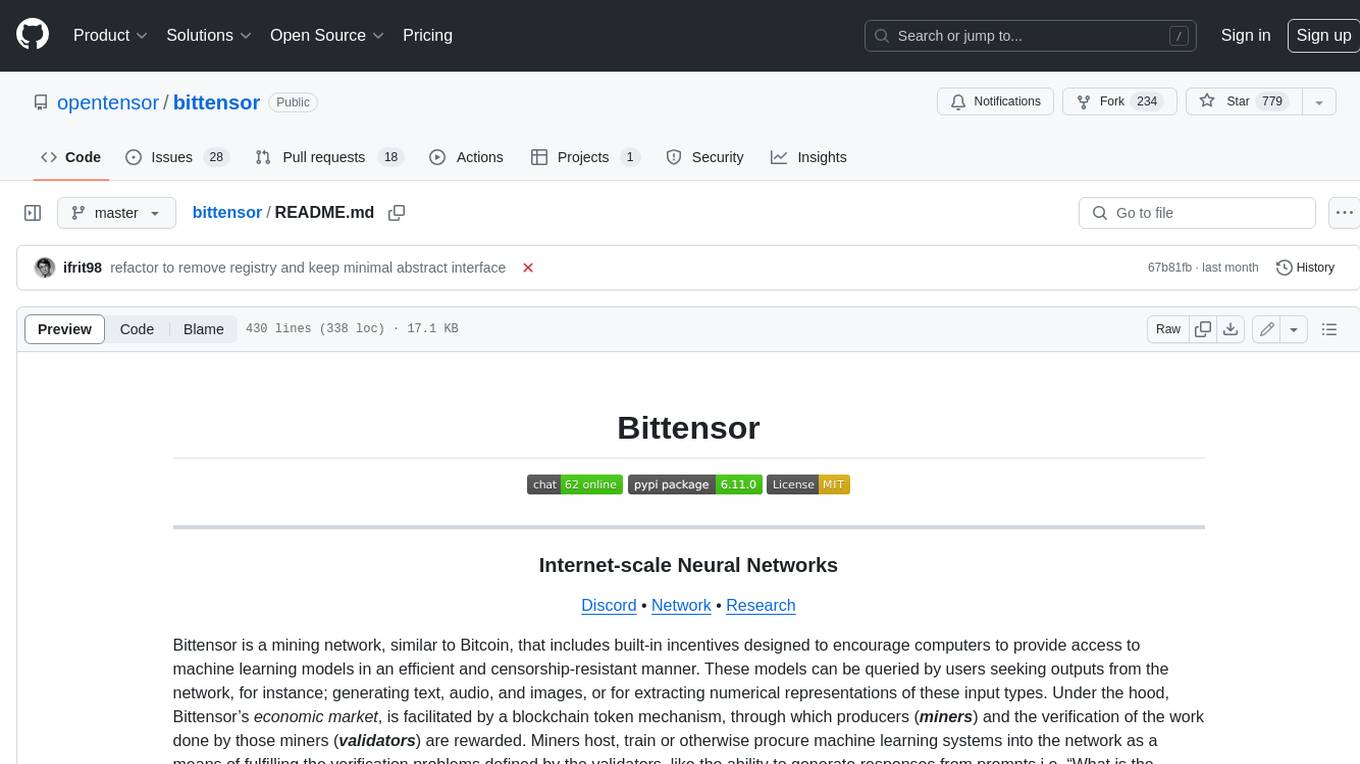

bittensor

Bittensor is an internet-scale neural network that incentivizes computers to provide access to machine learning models in a decentralized and censorship-resistant manner. It operates through a token-based mechanism where miners host, train, and procure machine learning systems to fulfill verification problems defined by validators. The network rewards miners and validators for their contributions, ensuring continuous improvement in knowledge output. Bittensor allows anyone to participate, extract value, and govern the network without centralized control. It supports tasks such as generating text, audio, images, and extracting numerical representations.