MAVIS

Math Visual Intelligent System (Strongest calculator in the world)

Stars: 85

MAVIS (Math Visual Intelligent System) is an AI-driven application that allows users to analyze visual data such as images and generate interactive answers based on them. It can perform complex mathematical calculations, solve programming tasks, and create professional graphics. MAVIS supports Python for coding and frameworks like Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, and Pandas. It is designed to make projects more efficient and professional.

README:

MAth Visual Intelligent System (Strongest calculator in the world).

Do you think you're better at math than an AI? Prove it! Show that there are infinitely many pairs of prime numbers that are exactly 2 apart. No proof? No answer? Well – maybe it can't be done without AI after all! What are you waiting for? Ask MAVIS - install!

Deutsch

Denkst du, du bist besser in Mathe als eine KI? Beweise es! Zeig, dass es unendlich viele Primzahlpaare gibt, die genau 2 auseinander liegen. Kein Beweis? Keine Antwort? Tja – vielleicht geht’s doch nicht ohne KI! Worauf wartest du? Frag MAVIS![!WARNING]

Beware of fake accounts!

There is evidence that fake accounts may attempt to misrepresent this project. Please do not share personal information with anyone you do not know and only rely on content coming directly from this repository. Immediately report large activities or accounts to GitHub or the project team.

Deutsch

[!WARNING]

Wichtiger Hinweis: Vorsicht vor Fake-Accounts!

Es gibt Hinweise darauf, dass Fake-Accounts versuchen könnten, dieses Projekt falsch darzustellen. Bitte geben Sie keine persönlichen Daten an Unbekannte weiter und verlassen Sie sich nur auf Inhalte, die direkt aus diesem Repository stammen. Melden Sie umfangreiche Aktivitäten oder Accounts umgehend an GitHub oder das Projektteam.

[!TIP] MAVIS is an AI-driven application that allows you to analyze visual data such as images formats: PNG, JPG, JPEG, GIF, Text, PNG, Py, doc and docx and generate interactive answers based on them. With MAVIS you can perform complex mathematical calculations, solve programming tasks and create professional graphics.

To achieve optimal results, please note the following:

Always display formulas in LaTeX to ensure precise and attractive formatting.

Ask MAVIS to always write code in Python, as this is the only language supported by the user interface.

Ask MAVIS to create graphics using Matplotlib, Plotly, Seaborn and Altair, as the user interface only supports HTML, LaTeX and Python (version 3.13 with the frameworks Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, Pandas, AstroPy, QuantLib, OpenMDAO, pybullet, MONAI (if needed soon: PyTorch, TensorFlow, Keras, Scikit-Learn and Hugging Face Transformers etc. (maybe JAX)).

Use the powerful features of MAVIS to make your projects more efficient and professional.

Deutsch

MAVIS ist eine KI-basierte Anwendung, mit der Sie visuelle Daten wie Bildformate wie PNG, JPG, JPEG, GIF, Text, PNG, Py, doc und docx analysieren und daraus interaktive Antworten generieren kann. Mit MAVIS können Sie komplexe mathematische Berechnungen durchführen, Programmieraufgaben lösen und professionelle Grafiken erstellen.

Für optimale Ergebnisse beachten Sie bitte Folgendes:

Formeln sollten immer in LaTeX dargestellt werden, um eine präzise und ansprechende Formatierung zu gewährleisten.

Mavis soll Code immer in Python schreiben, da die Benutzeroberfläche nur Python unterstützt.

MAVIS erstellt Grafiken mit Matplotlib, Plotly, Seaborn und Altair da die Benutzeroberfläche nur HTML, LaTeX und Python unterstützt (Version 3.13 mit den Frameworks Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, Pandas, AstroPy, QuantLib, OpenMDAO, Pybullet, MONAI (bei Bedarf auch PyTorch, TensorFlow, Keras, Scikit-Learn und Hugging Face Transformers etc. (ggf. JAX)).

Nutzen Sie die leistungsstarken Funktionen von MAVIS, um Ihre Projekte effizienter und professioneller zu gestalten.

- [09.11.2024] Start ;-)

- [10.11.2024] Available with Llama3.2 + Demo with Xc++ 2

- [13.11.2024] Demo UI

- [21.11.2024] MAVIS with Python (Version 3.11/12/13 with the frameworks Matplotlib, NumPy, Math, SymPy and Pandas) Demo

- [28.11.2024] Available with Plotly but still bugs that need to be resolved

- [30.11.2024] MAVIS can write PyTorch, TensorFlow, Keras, Scikit-Learn and Hugging Face Transformers (maybe JAX) code and run it side-by-side without the need for an IDE. But it is only intended for experimentation.

- [01.12.2024] MAVIS EAP release

- [03.12.2024] Available with Altair

- [24.12.2024] MAVIS 1.3 EAP release: new Plotly functions Demo + Stronger adaptability through Transformer (Huggingface) + Bigger Input Box

- [01.02.2025] MAVIS 1.5 EAP release: new functions

- [27.02.2025] MAVIS 1.5 release

- [27.02.2025] MAVIS 1.n and MAVIS 2.n was terminated!!!

- [27.02.2025] Start of development of MAVIS 3

- [27.02.2025] MAVIS 3 EAP release

- [01.03.2025] MAVIS Terminal 3 EAP release

- [01.03.2025] MAVIS Installer 3 EAP release

- [15.03.2025] MAVIS 3 release !!!

- [16.03.2025] MAVIS 3.3 release !!!

- [19.03.2025] Development of MAVIS 4 has begun – featuring new Vision Models, a more powerful and faster MAVIS Terminal, and access to over 200 models. No separate main, math, or code versions – ensuring greater usability.

- [31.03.2025] MAVIS 4 EAP release !!!

[!IMPORTANT] Release: 15.03.2025

[!IMPORTANT] Release: 15.03.2025

[!IMPORTANT] Release: 01.05.2025

MAVIS Terminal 4

MAVIS Terminal: Harness the power of MAVIS, Python, PIP, Ollama, Git, vLLM, PowerShell, and a vast array of Linux distributions—including Ubuntu, Debian, Arch Linux, Kali Linux, openSUSE, Mint, Fedora, and Red Hat—all within a single terminal on Windows!

MAVIS Installer 4

[!WARNING] Still in progress

Old MAVIS versions

| Model | Description | Requirements | Parameters |

|---|---|---|---|

| Mavis 1.2 main | With Xc++ 2 11B or Llama3.2 11B +16GB RAM | +23GB storage (Works with one CPU) | 22B |

| Mavis 1.2 math | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.2 code | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 Coder 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.2 math pro | With Xc++ 2 90B or Llama3.2 90B + QwQ +64GB RAM | +53GB storage (Works with one CPU) | 122B |

| Mavis 1.2 code pro | With Xc++ 2 90B or Llama3.2 90B + Qwen 2.5 Coder 32B | +64GB RAM +53GB storage (Works with one CPU) | 122B |

| Mavis 1.2 mini | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 0.5B | +16GB RAM +13GB storage (Works with one CPU) | 11.5B |

| Mavis 1.2 mini mini | With Xc++ 2 11B or Llama3.2 11B + smollm:135m | +16GB RAM +33GB storage (Works with one CPU) | 11.0135B |

| Mavis 1.2.2 main | With Xc++ 2 11B or Llama3.2 11B + Phi4 +16GB RAM | +23GB storage (Works with one CPU) | 25B |

| Mavis 1.2.2 math | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R1 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.2.2 math pro | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R 32B | +32GB RAM +33GB storage (Works with one CPU) | 43B |

| Mavis 1.2.2 math ultra | With Xc++ 2 90B or Llama3.2 90B + DeepSeek R1 671B | +256GB RAM +469GB storage (Works with one CPU) | 761B |

| Mavis 1.3 main | With Xc++ 2 11B or Qwen2 VL 7B + Llama 3.3 | +64GB RAM +53GB storage | 77B |

| Mavis 1.3 math | With Xc++ 2 11B or Qwen2 VL 7B + Qwen 2.5 14B | +16GB RAM +33GB storage | 21B |

| Mavis 1.3 code | With Xc++ 2 11B or Qwen2 VL 7B + Qwen 2.5 Coder 14B | +16B RAM +33GB storage | 21B |

| Mavis 1.3 math pro | With Xc++ 2 90 or Qwen2 VL 72B + QwQ 32B | +64GB RAM +53GB storage | 104B |

| Mavis 1.3 math pro | With Xc++ 2 90B or Qwen2 VL 72B + Qwen 2.5 Coder 32B | +64GB RAM +53GB storage | 104B |

| Mavis 1.4 math | With Xc++ 2 90B or QvQ + QwQ 32B | +64GB RAM +53GB storage | 104B |

| Mavis 1.5 main | With Xc++ 2 11B or Llama3.2 11B + Phi 4 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.5 math | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R1 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.5 math pro | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R 32B | +32GB RAM +33GB storage (Works with one CPU) | 43B |

| Mavis 1.5 math ultra | With Xc++ 2 90B or Llama3.2 90B + DeepSeek R1 671B | +512GB RAM +469GB storage (Works with one CPU) | 761B |

| Mavis 1.5 math mini | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R1 7B | +16GB RAM +18GB storage (Works with one CPU) | 18B |

| Mavis 1.5 math mini mini | With Xc++ 2 11B or Llama3.2 11B + DeepSeek R1 1.5B | +16GB RAM +14GB storage (Works with one CPU) | 12.5B |

| Mavis 1.5 code | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 Coder 14B | +16GB RAM +23GB storage (Works with one CPU) | 25B |

| Mavis 1.5 code pro | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 Coder 32B | +32GB RAM +33GB storage (Works with one CPU) | 43B |

| Mavis 1.5 code mini | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 Coder 7B | +16GB RAM +18GB storage (Works with one CPU) | 18B |

| Mavis 1.5 code mini mini | With Xc++ 2 11B or Llama3.2 11B + Qwen 2.5 Coder 1.5B | +16GB RAM +14GB storage (Works with one CPU) | 12.5B |

| Mavis 1.5.3 math mini mini | With Xc++ 2 11B or Llama3.2 11B + DeepScaleR 1.5B | +16GB RAM +14GB storage (Works with one CPU) | 12.5B |

| Model | Description | Requirements (Works with one CPU) | Parameters |

|---|---|---|---|

| MAVIS 3 main | With Xc++ 3 11B or Llama3.2 11B + Phi4 14b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +23GB storage | 28.5B |

| MAVIS 3 main mini | With Xc++ 3 11B or Llama3.2 11B + Phi4-mini 3.8b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +18GB storage | 18.3B |

| MAVIS 3 math | With Xc++ 3 11B or Llama3.2 11B + DeepSeek R1 14b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +23GB storage | 28.5B |

| MAVIS 3 math pro | With Xc++ 3 11B or Llama3.2 11B + DeepSeek R1 32b + Qwen 2.5 1.5b + granite3.2-vision 2b | +32GB RAM +33GB storage | 46.5B |

| MAVIS 3 math ultra | With Xc++ 3 11B or Llama3.2 90B + DeepSeek R1 671b + Qwen 2.5 1.5b + granite3.2-vision 2b | +512GB RAM +469GB storage | 764.5B |

| MAVIS 3 math mini | With Xc++ 3 11B or Llama3.2 11B + DeepSeek R1 7b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +20GB storage | 21.5B |

| MAVIS 3 math mini mini | With Xc++ 3 11B or Llama3.2 11B + DeepSeek R1 1.5b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +17GB storage | 16B |

| MAVIS 3 code | With Xc++ 3 11B or Llama3.2 11B + Qwen 2.5 Coder 14B +Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +23GB storage | 28.5B |

| MAVIS 3 code pro | With Xc++ 3 11B or Llama3.2 11B + Qwen 2.5 Coder 32B +Qwen 2.5 1.5b + granite3.2-vision 2b | +32GB RAM +33GB storage | 46.5B |

| MAVIS 3 code mini | With Xc++ 3 11B or Llama3.2 11B + Qwen 2.5 Coder 7B +Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +20GB storage | 21.5B |

| MAVIS 3 code mini | With Xc++ 3 11B or Llama3.2 11B + Qwen 2.5 Coder 1.5B +Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +17GB storage | 16B |

| MAVIS 3.3 main | With Xc++ 3 11B or Llama3.2 11B + Gemma3 12B + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +23GB storage | 26.5B |

| MAVIS 3.3 main pro | With Xc++ 3 11B or Llama3.2 11B + Gemma3 27B + Qwen 2.5 1.5b + granite3.2-vision 2b | +32GB RAM +23GB storage | 41.5B |

| MAVIS 3.3 main mini | With Xc++ 3 11B or Llama3.2 11B + Gemma3 4B + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +18GB storage | 18.5B |

| MAVIS 3.3 main mini | With Xc++ 3 11B or Llama3.2 11B + Gemma3 1B + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +17GB storage | 15.5B |

| MAVIS 3.3 math | With Xc++ 3 11B or Llama3.2 11B + QwQ 32b + Qwen 2.5 1.5b + granite3.2-vision 2b | +32GB RAM +33GB storage | 46.5B |

| MAVIS 3.3 math mini | With Xc++ 3 11B or Llama3.2 11B + DeepScaleR 1.5b + Qwen 2.5 1.5b + granite3.2-vision 2b | +16GB RAM +18GB storage | 16B |

[!WARNING] Still in progress

| Modell | GPQA | AIME | MATH-500 | LiveCodeBench | Codeforces | HumanEval |

|---|---|---|---|---|---|---|

| Xc++ III | ... | ... | ... | ... | ... | ... |

| Gemma 3 12b | 40.9 | ... | 83.8 | 24.6 | ... | ... |

| Gemma 3 27b | 42.4 | ... | 89.0 | 29.7 | ... | ... |

| DeepSeek R1 14b | 59.1 | 69.7 | 93.9 | ... | 90.6 | ... |

| DeepSeek R1 32b | 62.1 | 72.6 | 94.3 | ... | ... | ... |

| DeepSeek R1 671b | 71.5 | 79.8 | 97.3 | ... | 96.3 | ... |

| QwQ 32B Preview | 65.2 | 50.0 | 90.6 | 50.0 | ... | ... |

| QwQ 32B | ... | ... | ... | ... | ... | ... |

| Qwen 2.5 Coder 14B | ... | ... | ... | ... | ... | 89.6 |

| Qwen 2.5 Coder 32B | ... | ... | ... | 31.4 | ... | 92.7 |

| Open AI o1 | 75.7 | 79.2 | 96.4 | ... | 96.6 | ... |

| Open AI o1-preview | 72.3 | 44.6 | 85.5 | 53.6 | 92.4 | ... |

| Open AI o1 mini | 60.0 | 56.7 | 90.0 | 58.0 | 92.4 | ... |

| GPT-4o | 53.6 | 59.4 | 76.6 | 33.4 | 90.2 | 92.1 |

| Claude3.5 Sonnet | 49.0 | 53.6 | 82.6 | 30.4 | 92.0 | 92.1 |

[!WARNING] Still in progress

Installation MAVIS 1.1 - 1.5

MAVIS (aka Xc++ III) is currently under development and is not fully available in this repository. Cloning the repository will only give you the README file, some images and already released code, including the user interface (UI) compatible with Qwen 2.5 Code and Llama 3.2 Vision.

Note: Although manual installation is not much more complicated, it is significantly more stable and secure, so we recommend preferring this method.

The automatic installation installs Git, Python and Ollama, creates a folder and sets up a virtual Python environment. It also installs the required Python frameworks and AI models - but this step is also done automatically with the manual installation.

Automatic Installation (experimental)

If you still prefer the automatic installation, please follow the instructions below:

Automatic Installation on Windows

-

Download the installation file:

- Download the file

mavis-installer.batfrom GitHub.

-

Run the installation file:

- Run the downloaded file by double-clicking it.

-

Accept security warnings:

- If Windows issues a security warning, accept it and allow execution.

-

Follow the installation instructions:

- Follow the on-screen instructions to install MAVIS.

Start the UI

After installation, you can start MAVIS. There are several start options that vary depending on the version and intended use:

- All MAVIS versions:

-

run-mavis-all.bat(experimental)

-

- With a batch file for MAVIS 1.2:

-

run-mavis-1-2-main.bat(recommended) -

run-mavis-1-2-code.bat(recommended) -

run-mavis-1-2-code-pro.bat(recommended) -

run-mavis-1-2-math.bat(recommended) -

run-mavis-1-2-math-pro.bat(recommended) -

run-mavis-1-2-mini.bat(recommended) -

run-mavis-1-2-mini-mini.bat(recommended) -

run-mavis-1-2-3-main.bat(recommended) -

run-mavis-1-2-3-math.bat(recommended) -

run-mavis-1-2-3-math-pro.bat(recommended) -

run-mavis-1-2-3-math-ultra.bat(recommended)

-

- for MAVIS 1.3 EAP:

-

run-mavis-1-3-main.bat(experimental) -

run-mavis-1-3-code.bat(experimental) -

run-mavis-1-3-code-pro.bat(experimental) -

run-mavis-1-3-math.bat(experimental) -

run-mavis-1-3-math-pro.bat(experimental)

-

- for MAVIS 1.4 EAP:

-

run-mavis-1-4-math.bat(experimental)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.bat(experimental) -

run-mavis-1-5-math.bat(experimental) -

run-mavis-1-5-math-pro.bat(experimental) -

run-mavis-1-5-math-ultra.bat(experimental) -

run-mavis-1-5-math-mini.bat(experimental) -

run-mavis-1-5-math-mini-mini.bat(experimental) -

run-mavis-1-5-code.bat(experimental) -

run-mavis-1-5-code-pro.bat(experimental) -

run-mavis-1-5-code-mini.bat(experimental) -

run-mavis-1-5-code-mini-mini.bat(experimental)

-

Automatic Installation on macOS/Linux

-

Download the installation file:

- Download the file

mavis-installer.shfrom GitHub.

-

Make the file executable:

- Open a terminal and navigate to the directory where the downloaded file is located.

- Enter the following command:

chmod +x mavis-installer.sh-

Run the installation file:

- Start the installation with:

./mavis-installer.sh-

Follow the installation instructions:

- Follow the instructions in the terminal to install MAVIS.

Start the UI

After installation, you can start MAVIS. There are several start options that vary depending on the version and intended use:

- All MAVIS versions:

-

run-mavis-all.sh(experimental)

-

- With a shell file for MAVIS 1.2:

-

run-mavis-1-2-main.sh(recommended) -

run-mavis-1-2-code.sh(recommended) -

run-mavis-1-2-code-pro.sh(recommended) -

run-mavis-1-2-math.sh(recommended) -

run-mavis-1-2-math-pro.sh(recommended) -

run-mavis-1-2-mini.sh(recommended) -

run-mavis-1-2-mini-mini.sh(recommended) -

run-mavis-1-2-3-main.sh(recommended) -

run-mavis-1-2-3-math.sh(recommended) -

run-mavis-1-2-3-math-pro.sh(recommended) -

run-mavis-1-2-3-math-ultra.sh(recommended)

-

- for MAVIS 1.3 EAP:

-

run-mavis-1-3-main.sh(experimental) -

run-mavis-1-3-code.sh(experimental) -

run-mavis-1-3-code-pro.sh(experimental) -

run-mavis-1-3-math.sh(experimental) -

run-mavis-1-3-math-pro.sh(experimental)

-

- for MAVIS 1.4 EAP:

-

run-mavis-1-4-math.sh(experimental)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.sh(experimental) -

run-mavis-1-5-math.sh(experimental) -

run-mavis-1-5-math-pro.sh(experimental) -

run-mavis-1-5-math-ultra.sh(experimental) -

run-mavis-1-5-math-mini.sh(experimental) -

run-mavis-1-5-math-mini-mini.sh(experimental) -

run-mavis-1-5-code.sh(experimental) -

run-mavis-1-5-code-pro.sh(experimental) -

run-mavis-1-5-code-mini.sh(experimental) -

run-mavis-1-5-code-mini-mini.sh(experimental)

-

Manual Installation (recommended)

To successfully install MAVIS, you need the following programs:

-

Git

Download Git from the official website: https://git-scm.com/downloads -

Python

Recommended: Python 3.12 (3.11 is also supported - not Python 3.13 yet). Download Python from the https://www.python.org/downloads/ or from the Microsoft Store. -

Ollama

Ollama is a tool required for MAVIS. Install Ollama from the official website: https://ollama.com/download

The necessary Python frameworks and AI models are automatically installed during manual installation.

Windows

-

Create folder

Create a folder namedPycharmProjects(C:\Users\DeinBenutzername\PycharmProjects) if it doesn't already exist. The location and method vary depending on your operating system:-

Option 1: Via File Explorer

- Open File Explorer.

- Navigate to

C:\Users\YourUsername\. - Create a folder there called

PycharmProjects.

-

Option 2: Using the Command Prompt

Open the command prompt and run the following commands:mkdir C:\Users\%USERNAME%\PycharmProjects cd C:\Users\%USERNAME%\PycharmProjects

-

-

Clone repository

Clone the repository to a local directory:git clone https://github.com/Peharge/MAVIS

-

Change directory

Navigate to the project directory:cd MAVIS -

Create Python virtual environment

Set up a virtual environment to install dependencies in isolation:python -m venv env

(You can Do not replace

envwith another name!)

Start the UI

After installation, you can start MAVIS. There are several start options that vary depending on the version and intended use:

- All MAVIS versions:

-

run-mavis-all.bat(experimental)

-

- With a batch file for MAVIS 1.2:

-

run-mavis-1-2-main.bat(recommended) -

run-mavis-1-2-code.bat(recommended) -

run-mavis-1-2-code-pro.bat(recommended) -

run-mavis-1-2-math.bat(recommended) -

run-mavis-1-2-math-pro.bat(recommended) -

run-mavis-1-2-mini.bat(recommended) -

run-mavis-1-2-mini-mini.bat(recommended) -

run-mavis-1-2-3-main.bat(recommended) -

run-mavis-1-2-3-math.bat(recommended) -

run-mavis-1-2-3-math-pro.bat(recommended) -

run-mavis-1-2-3-math-ultra.bat(recommended)

-

- for MAVIS 1.3 EAP:

-

run-mavis-1-3-main.bat(experimental) -

run-mavis-1-3-code.bat(experimental) -

run-mavis-1-3-code-pro.bat(experimental) -

run-mavis-1-3-math.bat(experimental) -

run-mavis-1-3-math-pro.bat(experimental)

-

- for MAVIS 1.4 EAP:

-

run-mavis-1-4-math.bat(experimental)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.bat(experimental) -

run-mavis-1-5-math.bat(experimental) -

run-mavis-1-5-math-pro.bat(experimental) -

run-mavis-1-5-math-ultra.bat(experimental) -

run-mavis-1-5-math-mini.bat(experimental) -

run-mavis-1-5-math-mini-mini.bat(experimental) -

run-mavis-1-5-code.bat(experimental) -

run-mavis-1-5-code-pro.bat(experimental) -

run-mavis-1-5-code-mini.bat(experimental) -

run-mavis-1-5-code-mini-mini.bat(experimental)

-

macOS/Linux

-

Create a folder

Create a folder calledPycharmProjects(~/PycharmProjects) if it doesn't already exist. The location and method vary depending on your operating system:-

Option 1: Via the File Manager

- Open the File Manager.

- Navigate to your home directory (

~/). - Create a folder called

PycharmProjectsthere.

-

Option 2: Via Terminal

Open Terminal and run the following commands:mkdir -p ~/PycharmProjects cd ~/PycharmProjects

-

-

Clone repository

Clone the repository to a local directory:git clone https://github.com/Peharge/MAVIS

-

Change directory

Navigate to the project directory:cd MAVIS -

Create Python virtual environment

Set up a virtual environment to install dependencies in isolation:python -m venv env

(You cannot replace

envwith any other name!)

Start the UI

After installation, you can start MAVIS. There are several start options that vary depending on the version and intended use:

- All MAVIS versions:

-

run-mavis-all.sh(experimental)

-

- With a shell file for MAVIS 1.2:

-

run-mavis-1-2-main.sh(recommended) -

run-mavis-1-2-code.sh(recommended) -

run-mavis-1-2-code-pro.sh(recommended) -

run-mavis-1-2-math.sh(recommended) -

run-mavis-1-2-math-pro.sh(recommended) -

run-mavis-1-2-mini.sh(recommended) -

run-mavis-1-2-mini-mini.sh(recommended) -

run-mavis-1-2-3-main.sh(recommended) -

run-mavis-1-2-3-math.sh(recommended) -

run-mavis-1-2-3-math-pro.sh(recommended) -

run-mavis-1-2-3-math-ultra.sh(recommended)

-

- for MAVIS 1.3 EAP:

-

run-mavis-1-3-main.sh(experimental) -

run-mavis-1-3-code.sh(experimental) -

run-mavis-1-3-code-pro.sh(experimental) -

run-mavis-1-3-math.sh(experimental) -

run-mavis-1-3-math-pro.sh(experimental)

-

- for MAVIS 1.4 EAP:

-

run-mavis-1-4-math.sh(experimental)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.sh(experimental) -

run-mavis-1-5-math.sh(experimental) -

run-mavis-1-5-math-pro.sh(experimental) -

run-mavis-1-5-math-ultra.sh(experimental) -

run-mavis-1-5-math-mini.sh(experimental) -

run-mavis-1-5-math-mini-mini.sh(experimental) -

run-mavis-1-5-code.sh(experimental) -

run-mavis-1-5-code-pro.sh(experimental) -

run-mavis-1-5-code-mini.sh(experimental) -

run-mavis-1-5-code-mini-mini.sh(experimental)

-

Installation MAVIS 1.1 - 1.5

MAVIS (alias Xc++ III) befindet sich derzeit in der Entwicklung und ist in diesem Repository nicht vollständig verfügbar. Durch das Klonen des Repositories erhalten Sie lediglich die README-Datei, einige Bilder und bereits veröffentlichte Codes, einschließlich der Benutzeroberfläche (UI), die mit Qwen 2.5 Code und Llama 3.2 Vision kompatibel ist.

Hinweis: Die manuelle Installation ist zwar nicht wesentlich komplizierter, jedoch erheblich stabiler und sicherer. Deshalb empfehlen wir, diese Methode zu bevorzugen.

Bei der automatischen Installation werden Git, Python und Ollama installiert, ein Ordner erstellt und eine virtuelle Python-Umgebung eingerichtet. Außerdem werden die erforderlichen Python-Frameworks und KI-Modelle installiert – dieser Schritt erfolgt jedoch auch bei der manuellen Installation automatisch.

Automatische Installation (experimentell)

Solltest du dennoch die automatische Installation bevorzugen, folge bitte den untenstehenden Anweisungen:

Automatische Installation auf Windows

-

Herunterladen der Installationsdatei:

- Laden Sie die Datei

mavis-installer.batvon GitHub herunter.

- Laden Sie die Datei

-

Installationsdatei ausführen:

- Führen Sie die heruntergeladene Datei durch Doppelklick aus.

-

Sicherheitswarnungen akzeptieren:

- Falls Windows eine Sicherheitswarnung ausgibt, bestätigen Sie diese und erlauben Sie die Ausführung.

-

Installationsanweisungen befolgen:

- Folgen Sie den Anweisungen auf dem Bildschirm, um MAVIS zu installieren.

Starten der UI

Nach der Installation können Sie MAVIS starten. Es gibt mehrere Startoptionen, die je nach Version und Verwendungszweck variieren:

- Alle MAVIS Versionen:

-

run-mavis-all.bat(experimentell)

-

- Mit einer Batch-Datei für MAVIS 1.2:

-

run-mavis-1-2-main.bat(empfohlen) -

run-mavis-1-2-code.bat(empfohlen) -

run-mavis-1-2-code-pro.bat(empfohlen) -

run-mavis-1-2-math.bat(empfohlen) -

run-mavis-1-2-math-pro.bat(empfohlen) -

run-mavis-1-2-mini.bat(empfohlen) -

run-mavis-1-2-mini-mini.bat(empfohlen) -

run-mavis-1-2-3-main.bat(empfohlen) -

run-mavis-1-2-3-math.bat(empfohlen) -

run-mavis-1-2-3-math-pro.bat(empfohlen) -

run-mavis-1-2-3-math-ultra.bat(empfohlen)

-

- für MAVIS 1.3 EAP:

-

run-mavis-1-3-main.bat(experimentell) -

run-mavis-1-3-code.bat(experimentell) -

run-mavis-1-3-code-pro.bat(experimentell) -

run-mavis-1-3-math.bat(experimentell) -

run-mavis-1-3-math-pro.bat(experimentell)

-

- für MAVIS 1.4 EAP:

-

run-mavis-1-4-math.bat(experimentell)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.bat(experimentell) -

run-mavis-1-5-math.bat(experimentell) -

run-mavis-1-5-math-pro.bat(experimentell) -

run-mavis-1-5-math-ultra.bat(experimentell) -

run-mavis-1-5-math-mini.bat(experimentell) -

run-mavis-1-5-math-mini-mini.bat(experimentell) -

run-mavis-1-5-code.bat(experimentell) -

run-mavis-1-5-code-pro.bat(experimentell) -

run-mavis-1-5-code-mini.bat(experimentell) -

run-mavis-1-5-code-mini-mini.bat(experimentell)

-

Automatische Installation auf macOS/Linux

-

Herunterladen der Installationsdatei:

- Laden Sie die Datei

mavis-installer.shvon GitHub herunter.

- Laden Sie die Datei

-

Datei ausführbar machen:

- Öffnen Sie ein Terminal und navigieren Sie in das Verzeichnis, in dem sich die heruntergeladene Datei befindet.

- Geben Sie folgenden Befehl ein:

chmod +x mavis-installer.sh

-

Installationsdatei ausführen:

- Starten Sie die Installation mit:

./mavis-installer.sh

- Starten Sie die Installation mit:

-

Installationsanweisungen befolgen:

- Folgen Sie den Anweisungen im Terminal, um MAVIS zu installieren.

Starten der UI

Nach der Installation können Sie MAVIS starten. Es gibt mehrere Startoptionen, die je nach Version und Verwendungszweck variieren:

- Alle MAVIS Versionen:

-

run-mavis-all.sh(experimentell)

-

- Mit einer shell-Datei für MAVIS 1.2:

-

run-mavis-1-2-main.sh(empfohlen) -

run-mavis-1-2-code.sh(empfohlen) -

run-mavis-1-2-code-pro.sh(empfohlen) -

run-mavis-1-2-math.sh(empfohlen) -

run-mavis-1-2-math-pro.sh(empfohlen) -

run-mavis-1-2-3-main.sh(empfohlen) -

run-mavis-1-2-3-math.sh(empfohlen) -

run-mavis-1-2-3-math-pro.sh(empfohlen) -

run-mavis-1-2-3-math-ultra.sh(empfohlen)

-

- für MAVIS 1.3 EAP:

-

run-mavis-1-3-main.sh(experimentell) -

run-mavis-1-3-code.sh(experimentell) -

run-mavis-1-3-code-pro.sh(experimentell) -

run-mavis-1-3-math.sh(experimentell) -

run-mavis-1-3-math-pro.sh(experimentell)

-

- für MAVIS 1.4 EAP:

-

run-mavis-1-4-math.sh(experimentell)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.sh(experimentell) -

run-mavis-1-5-math.sh(experimentell) -

run-mavis-1-5-math-pro.sh(experimentell) -

run-mavis-1-5-math-ultra.sh(experimentell) -

run-mavis-1-5-math-mini.sh(experimentell) -

run-mavis-1-5-math-mini-mini.sh(experimentell) -

run-mavis-1-5-code.sh(experimentell) -

run-mavis-1-5-code-pro.sh(experimentell) -

run-mavis-1-5-code-mini.sh(experimentell) -

run-mavis-1-5-code-mini-mini.sh(experimentell)

-

Manuelle Installation (empfohlen)

Um MAVIS erfolgreich zu installieren, benötigen Sie die folgenden Programme:

-

Git

Laden Sie Git von der offiziellen Website herunter:

https://git-scm.com/downloads -

Python

Empfohlen: Python 3.12 (auch 3.11 wird unterstützt - noch nicht Python 3.13).

Laden Sie Python von der https://www.python.org/downloads/ oder über den Microsoft Store herunter. -

Ollama

Ollama ist ein Tool, das für MAVIS erforderlich ist.

Installieren Sie Ollama von der offiziellen Website:

https://ollama.com/download

Die erforderlichen Python-Frameworks und KI-Modelle werden bei der manuellen Installation automatisch mitinstalliert.

Windows

-

Ordner erstellen

Erstelle einen Ordner mit dem NamenPycharmProjects(C:\Users\DeinBenutzername\PycharmProjects), falls dieser noch nicht existiert. Der Speicherort und die Methode variieren je nach Betriebssystem:-

Option 1: Über den Datei-Explorer

- Öffne den Datei-Explorer.

- Navigiere zu

C:\Users\DeinBenutzername\. - Erstelle dort einen Ordner namens

PycharmProjects.

-

Option 2: Über die Eingabeaufforderung (Command Prompt)

Öffne die Eingabeaufforderung und führe die folgenden Befehle aus:mkdir C:\Users\%USERNAME%\PycharmProjects cd C:\Users\%USERNAME%\PycharmProjects

-

-

Repository klonen

Klonen Sie das Repository in ein lokales Verzeichnis:git clone https://github.com/Peharge/MAVIS

-

In das Verzeichnis wechseln

Navigieren Sie in das Projektverzeichnis:cd MAVIS -

Virtuelle Python-Umgebung erstellen

Richten Sie eine virtuelle Umgebung ein, um Abhängigkeiten isoliert zu installieren:python -m venv env

(Sie können

envnicht durch einen anderen Namen ersetzen!)

Starten der UI

Nach der Installation können Sie MAVIS starten. Es gibt mehrere Startoptionen, die je nach Version und Verwendungszweck variieren:

- Alle MAVIS Versionen:

-

run-mavis-all.bat(experimentell)

-

- Mit einer Batch-Datei für MAVIS 1.2:

-

run-mavis-1-2-main.bat(empfohlen) -

run-mavis-1-2-code.bat(empfohlen) -

run-mavis-1-2-code-pro.bat(empfohlen) -

run-mavis-1-2-math.bat(empfohlen) -

run-mavis-1-2-math-pro.bat(empfohlen) -

run-mavis-1-2-mini.bat(empfohlen) -

run-mavis-1-2-mini-mini.bat(empfohlen) -

run-mavis-1-2-3-main.bat(empfohlen) -

run-mavis-1-2-3-math.bat(empfohlen) -

run-mavis-1-2-3-math-pro.bat(empfohlen) -

run-mavis-1-2-3-math-ultra.bat(empfohlen)

-

- für MAVIS 1.3 EAP:

-

run-mavis-1-3-main.bat(experimentell) -

run-mavis-1-3-code.bat(experimentell) -

run-mavis-1-3-code-pro.bat(experimentell) -

run-mavis-1-3-math.bat(experimentell) -

run-mavis-1-3-math-pro.bat(experimentell)

-

- für MAVIS 1.4 EAP:

-

run-mavis-1-4-math.bat(experimentell)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.bat(experimentell) -

run-mavis-1-5-math.bat(experimentell) -

run-mavis-1-5-math-pro.bat(experimentell) -

run-mavis-1-5-math-ultra.bat(experimentell) -

run-mavis-1-5-math-mini.bat(experimentell) -

run-mavis-1-5-math-mini-mini.bat(experimentell) -

run-mavis-1-5-code.bat(experimentell) -

run-mavis-1-5-code-pro.bat(experimentell) -

run-mavis-1-5-code-mini.bat(experimentell) -

run-mavis-1-5-code-mini-mini.bat(experimentell)

-

macOS/Linux

-

Ordner erstellen

Erstelle einen Ordner mit dem NamenPycharmProjects(~/PycharmProjects), falls dieser noch nicht existiert. Der Speicherort und die Methode variieren je nach Betriebssystem:-

Option 1: Über den Datei-Manager

- Öffne den Datei-Manager.

- Navigiere zu deinem Home-Verzeichnis (

~/). - Erstelle dort einen Ordner namens

PycharmProjects.

-

Option 2: Über das Terminal

Öffne das Terminal und führe die folgenden Befehle aus:mkdir -p ~/PycharmProjects cd ~/PycharmProjects

-

-

Repository klonen

Klonen Sie das Repository in ein lokales Verzeichnis:git clone https://github.com/Peharge/MAVIS

-

In das Verzeichnis wechseln

Navigieren Sie in das Projektverzeichnis:cd MAVIS -

Virtuelle Python-Umgebung erstellen

Richten Sie eine virtuelle Umgebung ein, um Abhängigkeiten isoliert zu installieren:python -m venv env

(Sie können

envnicht durch einen anderen Namen ersetzen!)

Starten der UI

Nach der Installation können Sie MAVIS starten. Es gibt mehrere Startoptionen, die je nach Version und Verwendungszweck variieren:

- Alle MAVIS Versionen:

-

run-mavis-all.sh(experimentell)

-

- Mit einer shell-Datei für MAVIS 1.2:

-

run-mavis-1-2-main.sh(empfohlen) -

run-mavis-1-2-code.sh(empfohlen) -

run-mavis-1-2-code-pro.sh(empfohlen) -

run-mavis-1-2-math.sh(empfohlen) -

run-mavis-1-2-math-pro.sh(empfohlen) -

run-mavis-1-2-mini.sh(empfohlen) -

run-mavis-1-2-mini-mini.sh(empfohlen) -

run-mavis-1-2-3-main.sh(empfohlen) -

run-mavis-1-2-3-math.sh(empfohlen) -

run-mavis-1-2-3-math-pro.sh(empfohlen) -

run-mavis-1-2-3-math-ultra.sh(empfohlen)

-

- für MAVIS 1.3 EAP:

-

run-mavis-1-3-main.sh(experimentell) -

run-mavis-1-3-code.sh(experimentell) -

run-mavis-1-3-code-pro.sh(experimentell) -

run-mavis-1-3-math.sh(experimentell) -

run-mavis-1-3-math-pro.sh(experimentell)

-

- für MAVIS 1.4 EAP:

-

run-mavis-1-4-math.sh(experimentell)

-

- for MAVIS 1.5 EAP:

-

run-mavis-1-5-main.sh(experimentell) -

run-mavis-1-5-math.sh(experimentell) -

run-mavis-1-5-math-pro.sh(experimentell) -

run-mavis-1-5-math-ultra.sh(experimentell) -

run-mavis-1-5-math-mini.sh(experimentell) -

run-mavis-1-5-math-mini-mini.sh(experimentell) -

run-mavis-1-5-code.sh(experimentell) -

run-mavis-1-5-code-pro.sh(experimentell) -

run-mavis-1-5-code-mini.sh(experimentell) -

run-mavis-1-5-code-mini-mini.sh(experimentell)

-

To install MAVIS 3 EAP on a Windows computer, please follow these steps:

-

Download the installation file Download mavis-launcher-4.bat.

-

Navigate to the folder where you saved the file.

-

Important: Make sure you have administrator rights, as the installation process may require changes to your system.

-

Double-click the

mavis-launcher-4.batfile to begin the installation. -

Follow the on-screen instructions provided by the installation script. This may involve confirming multiple steps, such as installing dependencies or adjusting environment variables.

-

Once the installation process is complete, MAVIS 4 EAP will be installed on your system and can be launched from running

mavis-installer.batagain.

-

Issue: The script does not run.

Solution: Ensure that you are running the script with administrator rights. Right-click onmavis-installer.batand select "Run as Administrator". -

Issue: Missing permissions or errors during installation.

Solution: Check your user rights and ensure that you have the necessary permissions to install programs.

If you need further assistance, refer to the Dokumentation - Using MAVIS or ask a question in the Issues section of the repository.

Um MAVIS 3 EAP auf einem Windows-Computer zu installieren, folge bitte diesen Schritten:

-

Lade die Installationsdatei Download mavis-launcher-4.bat herunter.

-

Navigiere zu dem Ordner, in dem du die Datei gespeichert hast.

-

Wichtiger Hinweis: Stelle sicher, dass du über Administratorrechte verfügst, da der Installationsprozess Änderungen an deinem System vornehmen kann.

-

Doppelklicke auf die Datei

mavis-launcher-4.bat, um die Installation zu starten. -

Folge den Anweisungen im Installationsskript. Dies kann die Bestätigung mehrerer Schritte umfassen, wie z.B. die Installation von Abhängigkeiten oder das Anpassen von Umgebungsvariablen.

-

Wenn der Installationsprozess abgeschlossen ist, wird MAVIS 4 EAP auf deinem System installiert und kann über das Ausführen von

mavis-installer.batimmer gestartet werden.

-

Problem: Das Skript lässt sich nicht ausführen.

Lösung: Stelle sicher, dass du das Skript mit Administratorrechten ausführst. Klicke mit der rechten Maustaste aufmavis-installer.batund wähle "Als Administrator ausführen". -

Problem: Fehlende Berechtigungen oder Fehler während der Installation.

Lösung: Überprüfe deine Benutzerrechte und stelle sicher, dass du über die erforderlichen Rechte zum Installieren von Programmen verfügst.

Falls du weitere Hilfe benötigst, schau in die Dokumentation - Using MAVIS oder stelle eine Frage im Issues-Bereich des Repositories.

Testing (Don't work!!!): Download mavis-launcher-4.sh.

[!WARNING] Still in progress

[!WARNING] Still in progress

got to: Github/Xc++-II

- Make sure Mavis is always told to use fig.update_layout(mapbox_style="open-street-map") in Python code!

- Stellen Sie sicher, dass Mavis stets darauf hingewiesen wird, im Python-Code fig.update_layout(mapbox_style="open-street-map") zu verwenden!

more Demo

Aufgabe: Du bist ein Professioneller Thermodynamik Lehrer. Fasse mir die Übersicht zusammen in einer Tabelle (Formelsammlung) und lege dich ins Zeug!

Grafik: https://www.ulrich-rapp.de/stoff/thermodynamik/Gasgesetz_AB.pdf

Task: In this task, students are to create a phase diagram for a binary mixed system that shows the phase transitions between the liquid and vapor phases. They use thermodynamic models and learn how to visualize complex phase diagrams using Python and the Matplotlib and Seaborn libraries.

Use the Antoine equation to calculate the vapor pressure for each of the two components at a given temperature. The vapor pressure formula is:

$$ P_A = P_A^0 \cdot x_A \quad \text{and} \quad P_B = P_B^0 \cdot x_B $$

Where $P_A^0$ and $P_B^0$ are the vapor pressures of the pure components at a given temperature $T$, and $x_A$ and $x_B$ are the mole fractions of the two components in the liquid phase.

Given data: The vapor pressure parameters for the two components A and B at different temperatures are described by the Antoine equation. The corresponding constants for each component are:

For component A:

-

$A_A = 8.07131$

-

$B_A = 1730.63$

-

$C_A = 233.426$

For component B:

-

$A_B = 8.14019$

-

$B_B = 1810.94$

-

$C_B = 244.485 $

Good luck!

Learn to install \ use MAVIS Installer; use MAVIS UI; use Latex; use Matplotlib

Soon: Use Plotly; Seaborn; Numpy; Sympy; PyTorch; Tensorflow; Sikit-Learn; etc.

Authors: Jacob Devlin, Ming-Wei Chang, Kenton Lee ...

Link: arXiv:1810.04805v2

Abstract: We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. As a result, the pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.

BERT is conceptually simple and empirically powerful. It obtains new state-of-the-art results on eleven natural language processing tasks, including pushing the GLUE score to 80.5% (7.7% point absolute improvement), MultiNLI accuracy to 86.7% (4.6% absolute improvement), SQuAD v1.1 question answering Test F1 to 93.2 (1.5 point absolute improvement) and SQuAD v2.0 Test F1 to 83.1 (5.1 point absolute improvement).

Authors: Mark Chen, Jerry Tworek, Heewoo Jun ...

Link: arXiv:2107.03374

Abstract: We introduce Codex, a GPT language model fine-tuned on publicly available code from GitHub, and study its Python code-writing capabilities. A distinct production version of Codex powers GitHub Copilot. On HumanEval, a new evaluation set we release to measure functional correctness for synthesizing programs from docstrings, our model solves 28.8% of the problems, while GPT-3 solves 0% and GPT-J solves 11.4%. Furthermore, we find that repeated sampling from the model is a surprisingly effective strategy for producing working solutions to difficult prompts. Using this method, we solve 70.2% of our problems with 100 samples per problem. Careful investigation of our model reveals its limitations, including difficulty with docstrings describing long chains of operations and with binding operations to variables. Finally, we discuss the potential broader impacts of deploying powerful code generation technologies, covering safety, security, and economics.

Authors: Long Ouyang, Jeff Wu, Xu Jiang ...

Link: arXiv:2203.02155

Abstract: Making language models bigger does not inherently make them better at following a user's intent. For example, large language models can generate outputs that are untruthful, toxic, or simply not helpful to the user. In other words, these models are not aligned with their users. In this paper, we show an avenue for aligning language models with user intent on a wide range of tasks by fine-tuning with human feedback. Starting with a set of labeler-written prompts and prompts submitted through the OpenAI API, we collect a dataset of labeler demonstrations of the desired model behavior, which we use to fine-tune GPT-3 using supervised learning. We then collect a dataset of rankings of model outputs, which we use to further fine-tune this supervised model using reinforcement learning from human feedback. We call the resulting models InstructGPT. In human evaluations on our prompt distribution, outputs from the 1.3B parameter InstructGPT model are preferred to outputs from the 175B GPT-3, despite having 100x fewer parameters. Moreover, InstructGPT models show improvements in truthfulness and reductions in toxic output generation while having minimal performance regressions on public NLP datasets. Even though InstructGPT still makes simple mistakes, our results show that fine-tuning with human feedback is a promising direction for aligning language models with human intent.

more Paper

4. Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books (2015)

Authors: Yukun Zhu, Ryan Kiros, Richard Zemel ...

Link: arXiv:1506.06724

Abstract: This work presents a Neural Architecture Search (NAS) method using reinforcement learning to automatically generate neural network architectures. NAS demonstrates the ability to discover novel architectures that outperform human-designed models on standard benchmarks.

Authors: Tom B. Brown, Benjamin Mann, Nick Ryder ...

Link: arXiv:2005.14165v4

Abstract: Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions - something which current NLP systems still largely struggle to do. Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches. Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10x more than any previous non-sparse language model, and test its performance in the few-shot setting. For all tasks, GPT-3 is applied without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. At the same time, we also identify some datasets where GPT-3's few-shot learning still struggles, as well as some datasets where GPT-3 faces methodological issues related to training on large web corpora. Finally, we find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans. We discuss broader societal impacts of this finding and of GPT-3 in general.

Authors: Chenfei Wu, Shengming Yin, Weizhen Qi ...

Link: arXiv:2303.04671

Abstract: ChatGPT is attracting a cross-field interest as it provides a language interface with remarkable conversational competency and reasoning capabilities across many domains. However, since ChatGPT is trained with languages, it is currently not capable of processing or generating images from the visual world. At the same time, Visual Foundation Models, such as Visual Transformers or Stable Diffusion, although showing great visual understanding and generation capabilities, they are only experts on specific tasks with one-round fixed inputs and outputs. To this end, We build a system called \textbf{Visual ChatGPT}, incorporating different Visual Foundation Models, to enable the user to interact with ChatGPT by 1) sending and receiving not only languages but also images 2) providing complex visual questions or visual editing instructions that require the collaboration of multiple AI models with multi-steps. 3) providing feedback and asking for corrected results. We design a series of prompts to inject the visual model information into ChatGPT, considering models of multiple inputs/outputs and models that require visual feedback. Experiments show that Visual ChatGPT opens the door to investigating the visual roles of ChatGPT with the help of Visual Foundation Models.

Authors: Rishi Bommasani, Drew A. Hudson, Ehsan Adeli, Russ Altman ...

Link: arXiv:2108.07258

Abstract: AI is undergoing a paradigm shift with the rise of models (e.g., BERT, DALL-E, GPT-3) that are trained on broad data at scale and are adaptable to a wide range of downstream tasks. We call these models foundation models to underscore their critically central yet incomplete character. This report provides a thorough account of the opportunities and risks of foundation models, ranging from their capabilities (e.g., language, vision, robotics, reasoning, human interaction) and technical principles(e.g., model architectures, training procedures, data, systems, security, evaluation, theory) to their applications (e.g., law, healthcare, education) and societal impact (e.g., inequity, misuse, economic and environmental impact, legal and ethical considerations). Though foundation models are based on standard deep learning and transfer learning, their scale results in new emergent capabilities,and their effectiveness across so many tasks incentivizes homogenization. Homogenization provides powerful leverage but demands caution, as the defects of the foundation model are inherited by all the adapted models downstream. Despite the impending widespread deployment of foundation models, we currently lack a clear understanding of how they work, when they fail, and what they are even capable of due to their emergent properties. To tackle these questions, we believe much of the critical research on foundation models will require deep interdisciplinary collaboration commensurate with their fundamentally sociotechnical nature.

Authors: Long Ouyang, Jeff Wu, Xu Jiang ...

Link: arXiv:2203.02155

Abstract: Making language models bigger does not inherently make them better at following a user's intent. For example, large language models can generate outputs that are untruthful, toxic, or simply not helpful to the user. In other words, these models are not aligned with their users. In this paper, we show an avenue for aligning language models with user intent on a wide range of tasks by fine-tuning with human feedback. Starting with a set of labeler-written prompts and prompts submitted through the OpenAI API, we collect a dataset of labeler demonstrations of the desired model behavior, which we use to fine-tune GPT-3 using supervised learning. We then collect a dataset of rankings of model outputs, which we use to further fine-tune this supervised model using reinforcement learning from human feedback. We call the resulting models InstructGPT. In human evaluations on our prompt distribution, outputs from the 1.3B parameter InstructGPT model are preferred to outputs from the 175B GPT-3, despite having 100x fewer parameters. Moreover, InstructGPT models show improvements in truthfulness and reductions in toxic output generation while having minimal performance regressions on public NLP datasets. Even though InstructGPT still makes simple mistakes, our results show that fine-tuning with human feedback is a promising direction for aligning language models with human intent.

Authors: Renqian Luo, Liai Sun, Yingce Xia ...

Link: arXiv:2210.10341

Abstract: Pre-trained language models have attracted increasing attention in the biomedical domain, inspired by their great success in the general natural language domain. Among the two main branches of pre-trained language models in the general language domain, i.e., BERT (and its variants) and GPT (and its variants), the first one has been extensively studied in the biomedical domain, such as BioBERT and PubMedBERT. While they have achieved great success on a variety of discriminative downstream biomedical tasks, the lack of generation ability constrains their application scope. In this paper, we propose BioGPT, a domain-specific generative Transformer language model pre-trained on large scale biomedical literature. We evaluate BioGPT on six biomedical NLP tasks and demonstrate that our model outperforms previous models on most tasks. Especially, we get 44.98%, 38.42% and 40.76% F1 score on BC5CDR, KD-DTI and DDI end-to-end relation extraction tasks respectively, and 78.2% accuracy on PubMedQA, creating a new record. Our case study on text generation further demonstrates the advantage of BioGPT on biomedical literature to generate fluent descriptions for biomedical terms.

Authors: Irene Solaiman, Miles Brundage, Jack Clark ...

Link: arXiv:1908.09203

Abstract: Large language models have a range of beneficial uses: they can assist in prose, poetry, and programming; analyze dataset biases; and more. However, their flexibility and generative capabilities also raise misuse concerns. This report discusses OpenAI's work related to the release of its GPT-2 language model. It discusses staged release, which allows time between model releases to conduct risk and benefit analyses as model sizes increased. It also discusses ongoing partnership-based research and provides recommendations for better coordination and responsible publication in AI.

**Authors:**Reiichiro Nakano, Jacob Hilton, Suchir Balaji ...

Link: arXiv:2112.09332

Abstract: We fine-tune GPT-3 to answer long-form questions using a text-based web-browsing environment, which allows the model to search and navigate the web. By setting up the task so that it can be performed by humans, we are able to train models on the task using imitation learning, and then optimize answer quality with human feedback. To make human evaluation of factual accuracy easier, models must collect references while browsing in support of their answers. We train and evaluate our models on ELI5, a dataset of questions asked by Reddit users. Our best model is obtained by fine-tuning GPT-3 using behavior cloning, and then performing rejection sampling against a reward model trained to predict human preferences. This model's answers are preferred by humans 56% of the time to those of our human demonstrators, and 69% of the time to the highest-voted answer from Reddit.

Authors: OpenAI, Josh Achiam, Steven Adler ...

Link: arXiv:2303.08774

Abstract: We report the development of GPT-4, a large-scale, multimodal model which can accept image and text inputs and produce text outputs. While less capable than humans in many real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks, including passing a simulated bar exam with a score around the top 10% of test takers. GPT-4 is a Transformer-based model pre-trained to predict the next token in a document. The post-training alignment process results in improved performance on measures of factuality and adherence to desired behavior. A core component of this project was developing infrastructure and optimization methods that behave predictably across a wide range of scales. This allowed us to accurately predict some aspects of GPT-4's performance based on models trained with no more than 1/1,000th the compute of GPT-4.

Authors: Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan ...

Link: arXiv:2303.12712

Abstract: Artificial intelligence (AI) researchers have been developing and refining large language models (LLMs) that exhibit remarkable capabilities across a variety of domains and tasks, challenging our understanding of learning and cognition. The latest model developed by OpenAI, GPT-4, was trained using an unprecedented scale of compute and data. In this paper, we report on our investigation of an early version of GPT-4, when it was still in active development by OpenAI. We contend that (this early version of) GPT-4 is part of a new cohort of LLMs (along with ChatGPT and Google's PaLM for example) that exhibit more general intelligence than previous AI models. We discuss the rising capabilities and implications of these models. We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4's performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT. Given the breadth and depth of GPT-4's capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system. In our exploration of GPT-4, we put special emphasis on discovering its limitations, and we discuss the challenges ahead for advancing towards deeper and more comprehensive versions of AGI, including the possible need for pursuing a new paradigm that moves beyond next-word prediction. We conclude with reflections on societal influences of the recent technological leap and future research directions.

soon more...

This project is licensed under the MIT license – see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MAVIS

Similar Open Source Tools

MAVIS

MAVIS (Math Visual Intelligent System) is an AI-driven application that allows users to analyze visual data such as images and generate interactive answers based on them. It can perform complex mathematical calculations, solve programming tasks, and create professional graphics. MAVIS supports Python for coding and frameworks like Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, and Pandas. It is designed to make projects more efficient and professional.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

Pake

Pake is a tool that allows users to turn any webpage into a desktop app with ease. It is lightweight, fast, and supports Mac, Windows, and Linux. Pake provides a battery-included package with shortcut pass-through, immersive windows, and minimalist customization. Users can explore popular packages like WeRead, Twitter, Grok, DeepSeek, ChatGPT, Gemini, YouTube Music, YouTube, LiZhi, ProgramMusic, Excalidraw, and XiaoHongShu. The tool is suitable for beginners, developers, and hackers, offering command-line packaging and advanced usage options. Pake is developed by a community of contributors and offers support through various channels like GitHub, Twitter, and Telegram.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP. * Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from Pypi, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

candle-vllm

Candle-vllm is an efficient and easy-to-use platform designed for inference and serving local LLMs, featuring an OpenAI compatible API server. It offers a highly extensible trait-based system for rapid implementation of new module pipelines, streaming support in generation, efficient management of key-value cache with PagedAttention, and continuous batching. The tool supports chat serving for various models and provides a seamless experience for users to interact with LLMs through different interfaces.

celeste-python

Celeste AI is a type-safe, modality/provider-agnostic tool that offers unified interface for various providers like OpenAI, Anthropic, Gemini, Mistral, and more. It supports multiple modalities including text, image, audio, video, and embeddings, with full Pydantic validation and IDE autocomplete. Users can switch providers instantly, ensuring zero lock-in and a lightweight architecture. The tool provides primitives, not frameworks, for clean I/O operations.

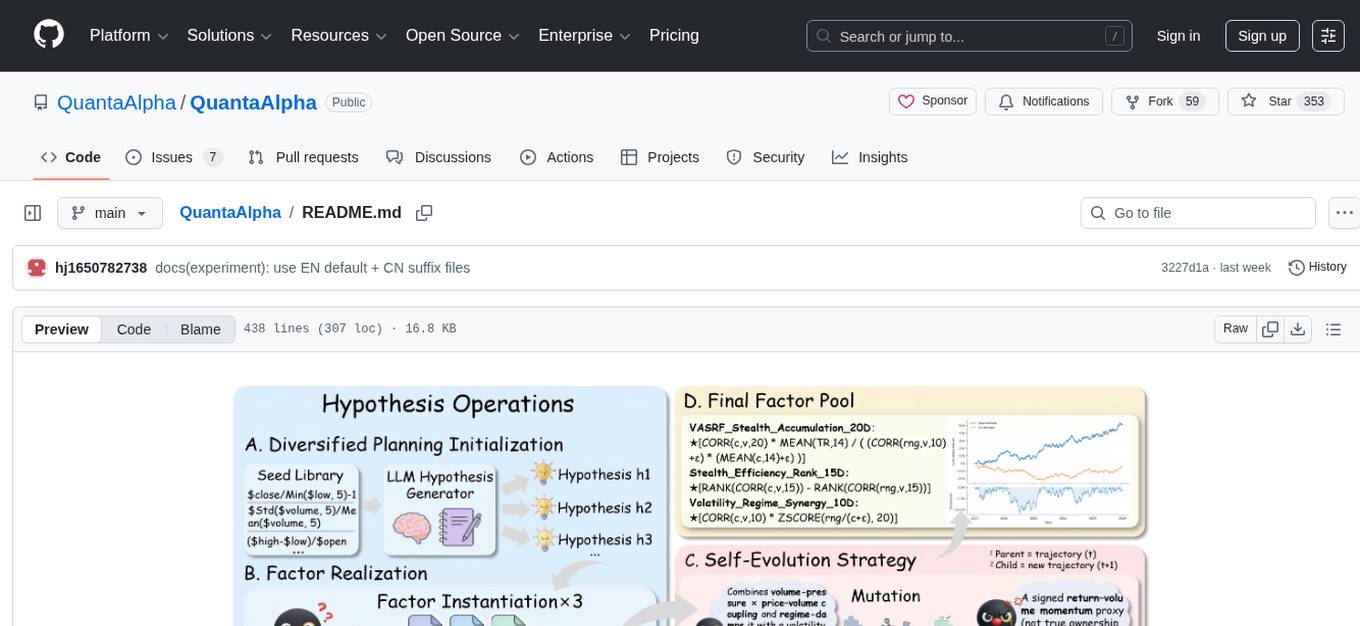

QuantaAlpha

QuantaAlpha is a framework designed for factor mining in quantitative alpha research. It combines LLM intelligence with evolutionary strategies to automatically mine, evolve, and validate alpha factors through self-evolving trajectories. The framework provides a trajectory-based approach with diversified planning initialization and structured hypothesis-code constraint. Users can describe their research direction and observe the automatic factor mining process. QuantaAlpha aims to transform how quantitative alpha factors are discovered by leveraging advanced technologies and self-evolving methodologies.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

tokscale

Tokscale is a high-performance CLI tool and visualization dashboard for tracking token usage and costs across multiple AI coding agents. It helps monitor and analyze token consumption from various AI coding tools, providing real-time pricing calculations using LiteLLM's pricing data. Inspired by the Kardashev scale, Tokscale measures token consumption as users scale the ranks of AI-augmented development. It offers interactive TUI mode, multi-platform support, real-time pricing, detailed breakdowns, web visualization, flexible filtering, and social platform features.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

For similar tasks

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

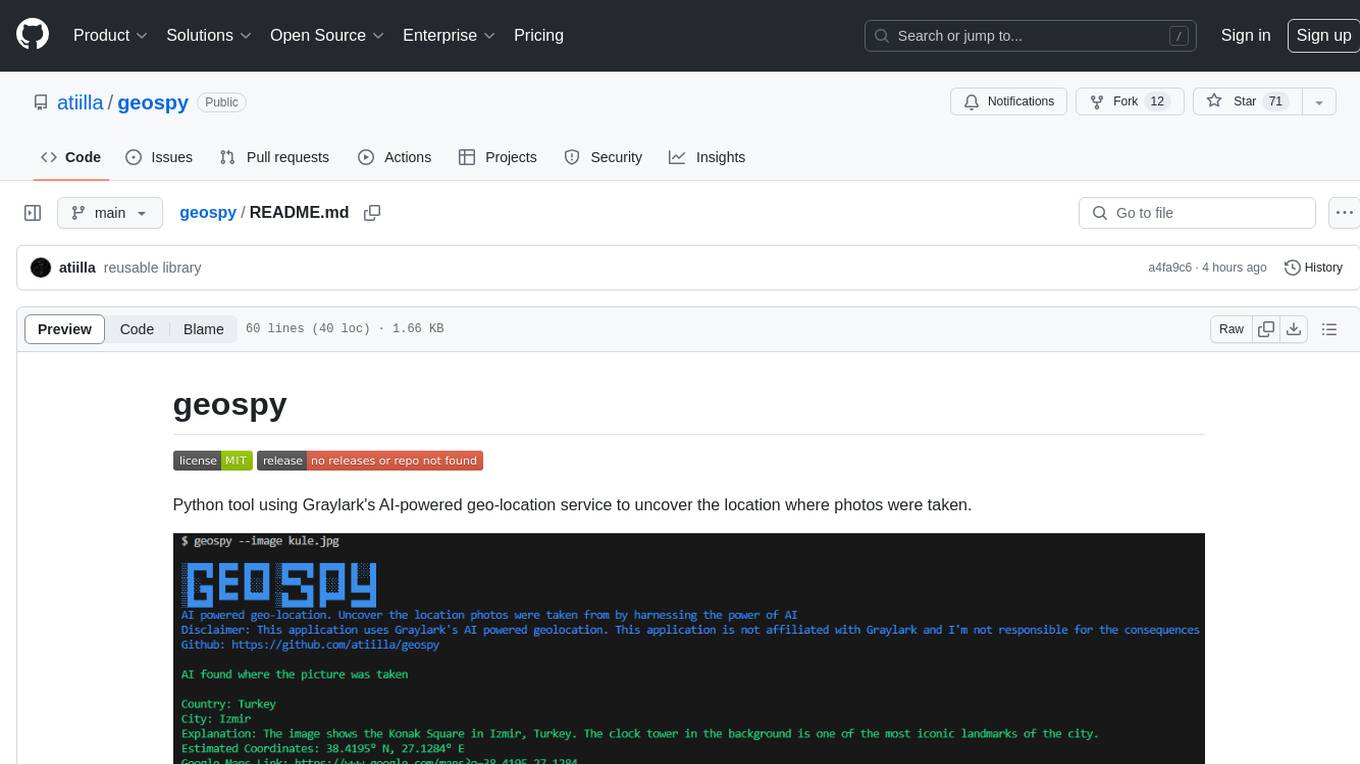

geospy

Geospy is a Python tool that utilizes Graylark's AI-powered geolocation service to determine the location where photos were taken. It allows users to analyze images and retrieve information such as country, city, explanation, coordinates, and Google Maps links. The tool provides a seamless way to integrate geolocation services into various projects and applications.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

-blue?&style=flat&logo=Python&logoColor=white%5BPython)