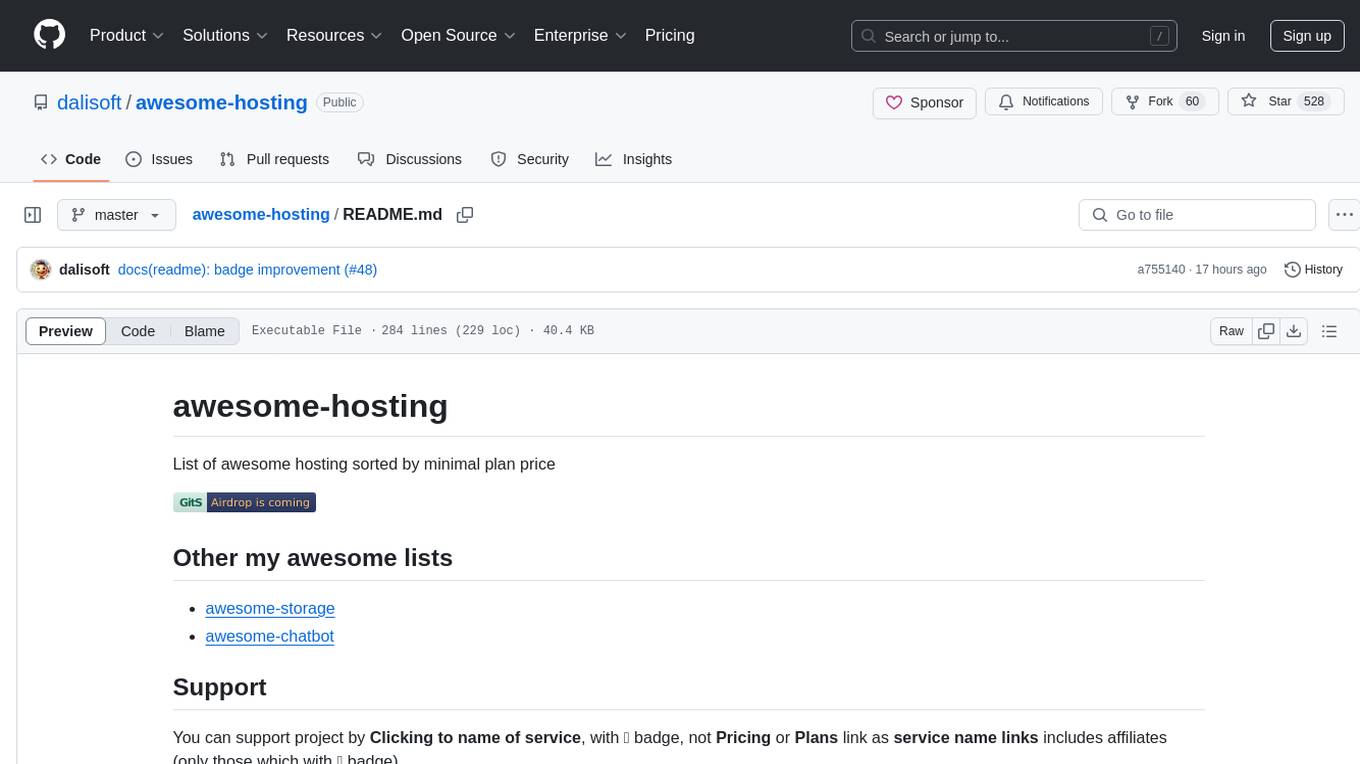

cb-tumblebug

Cloud-Barista Multi-Cloud Infra Management Framework


CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.


CB-Tumblebug (CB-TB) is an advanced multi-cloud infrastructure management system that enables seamless provisioning, management, and orchestration of resources across multiple cloud service providers. Part of the Cloud-Barista project, CB-TB abstracts the complexity of multi-cloud environments into a unified, intuitive interface.

- 🌐 Multi-Cloud Orchestration: Manage AWS, Azure, GCP, Alibaba Cloud, and more from a single platform

- ⚡ Auto-provisioning: Intelligent resource recommendations and automated deployment

- 🔐 Secure Operations: Encrypted credential management and hybrid encryption protocols

- 🗺️ Visual Infrastructure Map: Interactive GUI for infrastructure visualization and management

- 🤖 AI-Powered Management: NEW! Control infrastructure using natural language via our MCP Server


☁️ Supported Cloud Providers & Resources

📌 Note: Reference only - functionality not guaranteed. Regular updates are made.

Kubernetes support is currently WIP with limited features available.

📋 Development Status & Contributing Notes

CB-TB has not reached version 1.0 yet. We welcome any new suggestions, issues, opinions, and contributors! Please note that the functionalities of Cloud-Barista are not yet stable or secure. Be cautious if you plan to use the current release in a production environment. If you encounter any difficulties using Cloud-Barista, please let us know by opening an issue or joining the Cloud-Barista Slack.

As an open-source project initiated by Korean members, we aim to encourage participation from Korean contributors during the initial stages of this project. Therefore, the CB-TB repository will accept the use of the Korean language in its early stages. However, we hope this project will thrive regardless of contributors' countries in the long run. To facilitate this, the maintainers recommend using English at least for the titles of Issues, Pull Requests, and Commits, while accommodating local languages in the contents.

🤖 NEW: AI-Powered Multi-Cloud Management

- Control CB-Tumblebug through AI assistants like Claude and VS Code

- Natural language interface for infrastructure provisioning and management using MCP (Model Context Protocol)

- Streamable HTTP transport for modern MCP compatibility

- 📖 MCP Server Guide | 🚀 Quick Start

🎮 GPU-Powered Multi-Cloud LLM Deployment

- Deploy GPU instances across multiple clouds for AI/ML workloads

- 🧠 LLM Scripts & Examples

Get CB-Tumblebug running in under 5 minutes:

```bash
# 1. Automated setup (recommended for new users)
curl -sSL https://raw.githubusercontent.com/cloud-barista/cb-tumblebug/main/scripts/set-tb.sh | bash

# 2. Start all services
cd ~/go/src/github.com/cloud-barista/cb-tumblebug
make up

# 3. Configure credentials (see detailed setup below)
./init/genCredential.sh
# Edit ~/.cloud-barista/credentials.yaml with your cloud credentials
./init/encCredential.sh
make init

# 4. Access services
# - API: http://localhost:1323/tumblebug/api
# - MapUI: http://localhost:1324
# - MCP Server: http://localhost:8000/mcp (if enabled)
```

💡 New to CB-Tumblebug? Follow the detailed setup guide below for comprehensive instructions.

| Component | Minimum Specification | Recommended |
|---|---|---|
| OS | Linux (Ubuntu 22.04+) | Ubuntu 22.04 LTS |
| CPU | 4 cores | 8+ cores |
| Memory | 6 GiB | 16+ GiB |
| Storage | 20 GiB free space | 50+ GiB SSD |
| Example | AWS c5a.xlarge | AWS c5a.2xlarge |

⚠️ Performance Note: Lower specifications may cause initialization failures or performance degradation.
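As a quick pre-install check, the minimums in the table can be compared against the host. The sketch below is hypothetical (it is not shipped with CB-Tumblebug): it hard-codes the minimum column (4 cores, 6 GiB memory, 20 GiB free disk), relies on Linux-specific sources (`nproc`, `/proc/meminfo`, `df`), and measures free space on the filesystem holding `$HOME`, which may differ from where Docker stores its images.

```bash
# Hypothetical preflight check against the minimum specification; not part of CB-Tumblebug.
MIN_CORES=4
MIN_MEM_GIB=6
MIN_DISK_GIB=20

# meets_min <cores> <mem_gib> <free_disk_gib>: succeeds only if every value meets its minimum.
meets_min() {
  local cores=$1 mem=$2 disk=$3
  (( cores >= MIN_CORES && mem >= MIN_MEM_GIB && disk >= MIN_DISK_GIB ))
}

# detect_resources: prints "<cores> <mem_gib> <free_disk_gib>" for the current Linux host.
# Memory is rounded to the nearest GiB because MemTotal usually sits a little below the nominal size.
detect_resources() {
  local cores mem_kib disk_kib
  cores=$(nproc)
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  disk_kib=$(df -Pk "$HOME" | awk 'NR==2 {print $4}')
  echo "$cores $(( (mem_kib + 524288) / 1048576 )) $(( disk_kib / 1048576 ))"
}
```

After sourcing it, `meets_min $(detect_resources) && echo OK || echo "below minimum"` reports the result; the unquoted substitution is intentional so the three numbers become three arguments.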

- Docker & Docker Compose (latest stable)

- Go 1.25.0+ (for building from source)

- Git (for cloning repository)
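A minimal sketch for confirming these prerequisites before continuing. The function names are hypothetical; it assumes GNU `sort -V` for version comparison and `go env GOVERSION` (available since Go 1.16) to read the installed Go version. The Go check only matters if you plan to build from source.

```bash
# Hypothetical prerequisite check; not part of CB-Tumblebug.

# version_ge <have> <want>: true if version <have> >= <want> (relies on GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# check_prereqs: reports missing tools or an old Go toolchain; returns non-zero on any problem.
check_prereqs() {
  local ok=0 cmd go_ver
  for cmd in docker git go; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      ok=1
    fi
  done
  if ! docker compose version >/dev/null 2>&1; then
    echo "missing: docker compose plugin" >&2
    ok=1
  fi
  if command -v go >/dev/null 2>&1; then
    # GOVERSION looks like "go1.25.0"; drop the "go" prefix before comparing.
    go_ver=$(go env GOVERSION)
    go_ver=${go_ver#go}
    if ! version_ge "$go_ver" "1.25.0"; then
      echo "go $go_ver is older than 1.25.0" >&2
      ok=1
    fi
  fi
  return $ok
}
```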

For new users on clean Linux systems:

```bash
# Download and run automated setup script
curl -sSL https://raw.githubusercontent.com/cloud-barista/cb-tumblebug/main/scripts/set-tb.sh | bash
```

ℹ️ After the script finishes, you may need to log out and back in to activate Docker permissions and aliases. If you'd prefer to install dependencies and clone the repository manually, follow the steps below. 👇

Clone the CB-Tumblebug repository:

```bash
git clone https://github.com/cloud-barista/cb-tumblebug.git $HOME/go/src/github.com/cloud-barista/cb-tumblebug
cd ~/go/src/github.com/cloud-barista/cb-tumblebug
```

Optionally, you can register aliases for the CB-Tumblebug directory to simplify navigation:

```bash
echo "alias cdtb='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug'" >> ~/.bashrc
echo "alias cdtbsrc='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug/src'" >> ~/.bashrc
echo "alias cdtbtest='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug/src/testclient/scripts'" >> ~/.bashrc
source ~/.bashrc
```
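Running the `echo ... >> ~/.bashrc` lines more than once appends duplicate aliases. A hypothetical idempotent variant, assuming the same repository path and alias names as above (the helper names are illustrative, not part of CB-TB):

```bash
# Hypothetical helper, not part of CB-Tumblebug: same aliases as above, but each one is
# appended only if an alias with that name is not already defined in the rc file.
TB_HOME="$HOME/go/src/github.com/cloud-barista/cb-tumblebug"

# add_alias_once <rcfile> <name> <command>
add_alias_once() {
  local rc=$1 name=$2 cmd=$3
  touch "$rc"
  if ! grep -q "^alias ${name}=" "$rc"; then
    printf "alias %s='%s'\n" "$name" "$cmd" >> "$rc"
  fi
}

# register_tb_aliases [rcfile]: defaults to ~/.bashrc.
register_tb_aliases() {
  local rc=${1:-$HOME/.bashrc}
  add_alias_once "$rc" cdtb "cd $TB_HOME"
  add_alias_once "$rc" cdtbsrc "cd $TB_HOME/src"
  add_alias_once "$rc" cdtbtest "cd $TB_HOME/src/testclient/scripts"
}
```

Run `register_tb_aliases && source ~/.bashrc`; a second run leaves the file unchanged.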

Check Docker Compose Installation:

Ensure that Docker Engine and Docker Compose are installed on your system. If not, you can use the following script to install them (note: this script is not intended for production environments):

```bash
# download and install docker with docker compose
curl -sSL get.docker.com | sh

# optional: add user to docker group
sudo groupadd docker
sudo usermod -aG docker ${USER}
newgrp docker

# test that docker works
docker run hello-world
```

Start All Components Using Docker Compose:

To run all components, use the following command:

```bash
cd ~/go/src/github.com/cloud-barista/cb-tumblebug
docker compose up
```

This command will start all components as defined in the preconfigured docker-compose.yaml file. For configuration customization, please refer to the guide.

The following components will be started:

- ETCD: CB-Tumblebug KeyValue DB

- CB-Spider: a Cloud API controller

- CB-MapUI: a simple Map-based GUI web server

- CB-Tumblebug: the system with API server

- CB-Tumblebug MCP Server: AI assistant interface (if enabled)

- PostgreSQL: Specs and Images storage

- Traefik: Reverse proxy for secure access

Container Architecture Overview:

```mermaid
graph TB
    subgraph "External Access"
        User[👤 User]
        AI[🤖 AI Assistant<br/>Claude/VS Code]
    end

    subgraph "Docker Compose Environment"
        subgraph "Frontend & Interfaces"
            UI[CB-MapUI<br/>:1324]
            MCP[TB-MCP Server<br/>:8000]
            Proxy[Traefik Proxy<br/>:80/:443]
        end

        subgraph "Backend Services"
            TB[CB-Tumblebug<br/>:1323<br/>Multi-Cloud Management]
            Spider[CB-Spider<br/>:1024<br/>Cloud API Abstraction]
            ETCD[ETCD<br/>:2379<br/>Metadata Store]
            PG[PostgreSQL<br/>:5432<br/>Specs/Images DB]
        end
    end

    subgraph "Cloud Providers"
        AWS[AWS]
        Azure[Azure]
        GCP[GCP]
        Others[Others...]
    end

    %% User connections
    User -->|HTTP/HTTPS| Proxy
    User -->|HTTP| UI
    User -->|HTTP| TB
    AI -->|MCP HTTP| MCP

    %% Proxy routing
    Proxy -->|Route| UI

    %% Internal service connections
    UI -.->|API calls| TB
    MCP -->|REST API| TB
    TB -->|REST API| Spider
    TB -->|gRPC| ETCD
    TB -->|SQL| PG

    %% Cloud connections
    Spider -->|Cloud APIs| AWS
    Spider -->|Cloud APIs| Azure
    Spider -->|Cloud APIs| GCP
    Spider -->|Cloud APIs| Others

    %% Styling
    classDef frontend fill:#e3f2fd,stroke:#1976d2
    classDef backend fill:#f3e5f5,stroke:#7b1fa2
    classDef storage fill:#e8f5e8,stroke:#388e3c
    classDef cloud fill:#fff3e0,stroke:#f57c00

    class UI,MCP,Proxy frontend
    class TB,Spider,ETCD,PG backend
    class AWS,Azure,GCP,Others cloud
```

After running the command, you should see output similar to the following:

Service Endpoints:

- CB-Tumblebug API: http://localhost:1323/tumblebug/api

- CB-MapUI: http://localhost:1324 (direct) or https://cb-mapui.localhost (via Traefik with SSL)

- MCP Server: http://localhost:8000/mcp (if enabled)

- Traefik Dashboard: http://localhost:8080 (reverse proxy monitoring)
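Once the containers are up, a quick way to see which endpoints answer is to probe them with `curl`. This is a rough, hypothetical smoke test: any HTTP status counts as "up" (the API URL may answer with a redirect), `000` means nothing answered within the timeout, and the optional MCP endpoint is left out.

```bash
# Hypothetical smoke test for the service endpoints; not part of CB-Tumblebug.

# probe <url>: prints the HTTP status code; returns non-zero if nothing answered.
probe() {
  local code
  # curl prints "000" and exits non-zero when the connection fails; keep the code anyway.
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 3 "$1") || true
  echo "$code"
  [ -n "$code" ] && [ "$code" != "000" ]
}

# check_endpoints: probes the default endpoints and returns non-zero if any is down.
check_endpoints() {
  local url rc=0
  for url in \
    http://localhost:1323/tumblebug/api \
    http://localhost:1324 \
    http://localhost:8080; do
    if probe "$url" >/dev/null; then
      echo "up:   $url"
    else
      echo "down: $url"
      rc=1
    fi
  done
  return $rc
}
```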

Note: Before using CB-Tumblebug, you need to initialize it.

To provision multi-cloud infrastructure with CB-TB, you must register the connection information (credentials) for each cloud, as well as commonly used images and specifications.

Create the `credentials.yaml` file and input your cloud credentials

- Overview
  - `credentials.yaml` is a file that holds multiple credentials for the APIs of the clouds supported by CB-TB (AWS, GCP, Azure, Alibaba, etc.).
  - It should be located in the `~/.cloud-barista/` directory and managed securely.
  - Refer to `template.credentials.yaml` for the template.

- Create the `credentials.yaml` file

  Automatically generate the `credentials.yaml` file in the `~/.cloud-barista/` directory using the CB-TB script:

  ```bash
  cd ~/go/src/github.com/cloud-barista/cb-tumblebug
  ./init/genCredential.sh
  ```

- Input credential data

  Put your credential data into `~/.cloud-barista/credentials.yaml` (Reference: How to obtain a credential for each CSP).

  ```yaml
  ### Cloud credentials for credential holders (default: admin)
  credentialholder:
    admin:
      alibaba:
        # ClientId(ClientId): client ID of the EIAM application
        # Example: app_mkv7rgt4d7i4u7zqtzev2mxxxx
        ClientId:
        # ClientSecret(ClientSecret): client secret of the EIAM application
        # Example: CSEHDcHcrUKHw1CuxkJEHPveWRXBGqVqRsxxxx
        ClientSecret:
      aws:
        # ClientId(aws_access_key_id)
        # ex: AKIASSSSSSSSSSS56DJH
        ClientId:
        # ClientSecret(aws_secret_access_key)
        # ex: jrcy9y0Psejjfeosifj3/yxYcgadklwihjdljMIQ0
        ClientSecret:
  ...
  ```
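Because `~/.cloud-barista/` holds secrets, restricting it to its owner is a cheap precaution while the plain-text `credentials.yaml` exists. The helper below is a hypothetical sketch, not a CB-TB script; it assumes the default directory and file names above and only tightens permissions on files that already exist.

```bash
# Hypothetical helper, not part of CB-Tumblebug: owner-only access for the credentials directory.

# secure_credentials [dir]: chmod 700 on the directory and 600 on the credential files in it.
secure_credentials() {
  local dir=${1:-$HOME/.cloud-barista} f
  [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
  chmod 700 "$dir"
  for f in "$dir/credentials.yaml" "$dir/credentials.yaml.enc"; do
    # Skip files that have not been created yet.
    [ -e "$f" ] && chmod 600 "$f"
  done
  return 0
}
```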

Encrypt `credentials.yaml` into `credentials.yaml.enc`

To protect sensitive information, `credentials.yaml` is not used directly. Instead, it must be encrypted using `encCredential.sh`. The encrypted file `credentials.yaml.enc` is then used by `init.py`. This approach ensures that sensitive credentials are not stored in plain text.

- Encrypting credentials

  ```bash
  init/encCredential.sh
  ```

  When executing the script, you have two options: 1) enter your password or 2) let the system generate a random passkey.

  - Option 1: Entering your password
  - Option 2: Letting the system generate a random passkey, which MUST be stored securely in a safe location

- If you need to update your credentials, decrypt the encrypted file using `decCredential.sh`, make the necessary changes to `credentials.yaml`, and then re-encrypt it.

(INIT) Register all multi-cloud connection information and common resources

- How to register

  Refer to README.md for init.py, and execute the `init.py` script through `init.sh` (enter 'y' at the confirmation prompts). `init.sh --help` shows the available options.

  You can also simply use the `make init` command as follows:

  ```bash
  cd ~/go/src/github.com/cloud-barista/cb-tumblebug
  make init
  ```

- The credentials in `~/.cloud-barista/credentials.yaml.enc` (the file encrypted from `credentials.yaml`) will be registered automatically, and all CSP and region information recorded in `cloudinfo.yaml` will be registered in the system automatically.
  - Note: You can check the latest regions and zones of each CSP using `update-cloudinfo.py` and review the file for updates (contributions to updates are welcome).
- Common images and specifications recorded in the `cloudimage.csv` and `cloudspec.csv` files in the `assets` directory will be registered automatically.
- `init.py` applies hybrid encryption for secure transmission of credentials:
  1. Retrieve RSA Public Key: Use the `/credential/publicKey` API to get the public key.
  2. Encrypt Credentials: Encrypt the credentials with a randomly generated `AES` key, then encrypt the `AES` key with the `RSA public key`.
  3. Transmit Encrypted Data: Send the encrypted credentials and the encrypted `AES` key to the server. The server decrypts the AES key and uses it to decrypt the credentials.

  This method ensures your credentials are transmitted securely and protected during registration. See init.py for a Python implementation. For details, check out the Secure Credential Registration Guide (How to use the credential APIs).
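To make the three steps concrete, here is an illustrative round trip with the `openssl` CLI. It is not `init.py`'s implementation: the cipher (AES-256-CBC), the padding (RSA-OAEP), and the hex key encoding are assumptions chosen for this sketch, and a locally generated key pair stands in for the one the server would expose through `/credential/publicKey`.

```bash
# Illustration only: NOT init.py's implementation. AES-256-CBC, RSA-OAEP and hex-encoded
# keys are assumptions made for this sketch.
hybrid_roundtrip() {
  local payload=$1 work aes_key iv recovered_key
  work=$(mktemp -d)

  # Stand-in for the server's key pair. A real client would instead fetch the
  # public key from the /credential/publicKey API (step 1).
  openssl genpkey -algorithm RSA -pkeyopt rsa_keygen_bits:2048 -out "$work/server.key" 2>/dev/null
  openssl pkey -in "$work/server.key" -pubout -out "$work/server.pub"

  # Step 2 (client): random 256-bit AES key and 128-bit IV as hex; encrypt the payload
  # with AES, then encrypt the AES key with the RSA public key.
  aes_key=$(openssl rand -hex 32)
  iv=$(openssl rand -hex 16)
  printf '%s' "$payload" | openssl enc -aes-256-cbc -K "$aes_key" -iv "$iv" -out "$work/cred.enc"
  printf '%s' "$aes_key" | openssl pkeyutl -encrypt -pubin -inkey "$work/server.pub" \
    -pkeyopt rsa_padding_mode:oaep -out "$work/key.enc"

  # Step 3 (server): cred.enc, key.enc and the non-secret IV would travel over the wire.
  # The server recovers the AES key with its private key, then decrypts the payload.
  recovered_key=$(openssl pkeyutl -decrypt -inkey "$work/server.key" \
    -pkeyopt rsa_padding_mode:oaep -in "$work/key.enc")
  openssl enc -d -aes-256-cbc -K "$recovered_key" -iv "$iv" -in "$work/cred.enc"

  rm -rf "$work"
}
```

`hybrid_roundtrip 'ClientSecret: example'` should print the input unchanged, showing that only the RSA private-key holder can recover the AES key and therefore the credentials.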


Shutting down CB-TB and related components

- Stop all containers with `ctrl+c`, or run `docker compose stop` / `docker compose down` / `make down`. (When a shutdown event occurs, CB-TB shuts down gracefully: API requests that can be processed within 10 seconds will be completed.)
- If cleanup is needed due to internal system errors:
  - Check and delete resources created through CB-TB
  - Delete CB-TB & CB-Spider metadata using the provided script

    ```bash
    cd ~/go/src/github.com/cloud-barista/cb-tumblebug
    make clean-db
    ```

Upgrading the CB-TB & CB-Spider versions

The following cleanup steps are unnecessary if you clearly understand the impact of the upgrade.

- Check and delete resources created through CB-TB
- Delete CB-TB & CB-Spider metadata

  ```bash
  cd ~/go/src/github.com/cloud-barista/cb-tumblebug
  make clean-db
  ```

- Restart with the upgraded version

- 🤖 Using CB-TB MCP Server (AI Assistant Interface) (NEW!)

- Using CB-TB MapUI (recommended)

- Using CB-TB REST API (recommended)

🚀 NEW: Control CB-Tumblebug with AI assistants like Claude!

The Model Context Protocol (MCP) Server enables natural language interaction with CB-Tumblebug through AI assistants:

- 🧠 AI-Powered Infrastructure Management: Deploy and manage multi-cloud resources using natural language commands

- 🔗 Seamless Integration: Works with Claude Desktop (via proxy), VS Code (direct), and other MCP-compatible clients

- 📡 Modern Protocol: Uses Streamable HTTP transport (current MCP standard)
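For a sense of what Streamable HTTP looks like on the wire: an MCP client opens a session by POSTing a JSON-RPC `initialize` request to the single MCP endpoint, accepting either JSON or an SSE stream in response. The sketch below assumes the endpoint `http://localhost:8000/mcp` from this guide and protocol version `2025-03-26`; the client name and helper names are made up for illustration.

```bash
# Sketch of an MCP Streamable HTTP "initialize" call; helper names and clientInfo are hypothetical.
MCP_URL=${MCP_URL:-http://localhost:8000/mcp}

# mcp_initialize_payload: prints the JSON-RPC initialize request body.
mcp_initialize_payload() {
  printf '%s\n' '{"jsonrpc":"2.0","id":1,"method":"initialize","params":{"protocolVersion":"2025-03-26","capabilities":{},"clientInfo":{"name":"curl-sketch","version":"0.0.1"}}}'
}

# mcp_initialize: POSTs the request; -i prints response headers (e.g. Mcp-Session-Id).
mcp_initialize() {
  mcp_initialize_payload | curl -sS -i -X POST "$MCP_URL" \
    -H "Content-Type: application/json" \
    -H "Accept: application/json, text/event-stream" \
    --data-binary @-
}
```

If the server returns an `Mcp-Session-Id` header, the MCP specification expects later requests in that session to send it back; MCP-compatible clients such as VS Code handle this automatically.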

⚡ Quick Start: Uncomment the MCP service in `docker-compose.yaml`, then launch with `make up`.

```bash
# Enable MCP Server (Proof of Concept)
# 1. Uncomment cb-tumblebug-mcp-server in docker-compose.yaml
# 2. Launch with Docker Compose
make up
# Access MCP server at http://localhost:8000/mcp
```

Visual Infrastructure Management with Interactive Maps

CB-MapUI provides an intuitive, map-based interface for managing multi-cloud infrastructure:

- 🗺️ Geographic Visualization: See your infrastructure deployed across the globe

- 📊 Real-time Monitoring: Monitor resource status and performance

- 🎮 Interactive Control: Create, manage, and control resources visually

- 🌐 Multi-Cloud View: Unified view across all cloud providers

```bash
# Access CB-MapUI (auto-started with Docker Compose)
xdg-open http://localhost:1324   # on Linux; use `open` on macOS

# Or run standalone MapUI container
./scripts/runMapUI.sh
```

Features:

- Drag-and-drop resource creation

- Real-time infrastructure mapping

- Cross-cloud resource relationships

- Performance metrics overlay

📖 Learn More: CB-MapUI Repository

Programmatic Multi-Cloud Infrastructure Management

CB-Tumblebug provides a comprehensive REST API for automated infrastructure management:

🌐 API Dashboard & Documentation

- Interactive API Explorer: http://localhost:1323/tumblebug/api

- Live Documentation:

🔐 Authentication

CB-TB uses Basic Authentication (development phase, not production-ready):

```
# Include base64-encoded credentials in request headers
Authorization: Basic <base64(username:password)>
```

🚀 Quick Infrastructure Creation

Following the Quick MCI Creation Guide:

```bash
# 1. Create VM specification
curl -X POST "http://localhost:1323/tumblebug/ns/default/resources/spec" \
  -H "Authorization: Basic <credentials>" \
  -H "Content-Type: application/json" \
  -d '{"name": "web-spec", "connectionName": "aws-ap-northeast-2"}'

# 2. Create VM image
curl -X POST "http://localhost:1323/tumblebug/ns/default/resources/image" \
  -H "Authorization: Basic <credentials>" \
  -H "Content-Type: application/json" \
  -d '{"name": "ubuntu-image", "connectionName": "aws-ap-northeast-2"}'

# 3. Create Multi-Cloud Infrastructure
curl -X POST "http://localhost:1323/tumblebug/ns/default/mci" \
  -H "Authorization: Basic <credentials>" \
  -H "Content-Type: application/json" \
  -d @mci-config.json
```

🛠️ Core API Categories

- Infrastructure Resources: VM specs, images, networks, security groups

- Multi-Cloud Infrastructure (MCI): Provision and manage distributed infrastructure

- Monitoring & Control: Performance metrics, scaling, lifecycle management

- Credentials & Connections: Secure cloud provider configuration

- Create access key object

- Create, view, control, execute remote commands, shut down, and delete MCI using the MCI(multi-cloud infrastructure service) management APIs

- CB-TB optimal and dynamic provisioning
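The `<credentials>` placeholder in the requests above is the base64 encoding of `username:password`. A minimal sketch that builds the header and wraps the common flags; `TB_API`, `TB_API_USERNAME`, and `TB_API_PASSWORD` are names chosen for this sketch, and their values must match however your CB-TB instance is configured (`curl -u user:password` produces the same header).

```bash
# Sketch only: TB_API, TB_API_USERNAME and TB_API_PASSWORD are names assumed for this example.
TB_API=${TB_API:-http://localhost:1323/tumblebug}

# basic_auth_header <user> <password>: prints "Authorization: Basic <base64(user:password)>".
basic_auth_header() {
  local encoded
  # GNU base64 wraps long output at 76 columns, so strip any newlines it adds.
  encoded=$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')
  printf 'Authorization: Basic %s\n' "$encoded"
}

# tb_post <api-path> <json-or-@file>: POSTs a JSON body to the CB-TB REST API.
tb_post() {
  if [ -z "${TB_API_USERNAME:-}" ] || [ -z "${TB_API_PASSWORD:-}" ]; then
    echo "set TB_API_USERNAME and TB_API_PASSWORD first" >&2
    return 1
  fi
  curl -sS -X POST "${TB_API}$1" \
    -H "$(basic_auth_header "$TB_API_USERNAME" "$TB_API_PASSWORD")" \
    -H "Content-Type: application/json" \
    -d "$2"
}
```

For example, with both variables exported, `tb_post /ns/default/mci @mci-config.json` sends the same request as step 3 above.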

Setup required tools

- Install: git, gcc, make

  ```bash
  sudo apt update
  sudo apt install make gcc git
  ```

- Install: Golang
  - Check https://golang.org/dl/ and set up Go
  - Download

    ```bash
    wget https://go.dev/dl/go1.25.0.linux-amd64.tar.gz
    sudo rm -rf /usr/local/go && sudo tar -C /usr/local -xzf go1.25.0.linux-amd64.tar.gz
    ```

  - Set up the environment

    ```bash
    echo 'export PATH=$PATH:/usr/local/go/bin:$HOME/go/bin' >> ~/.bashrc
    echo 'export GOPATH=$HOME/go' >> ~/.bashrc
    source ~/.bashrc
    echo $GOPATH
    go env
    go version
    ```
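The download command above is specific to `linux-amd64`. A hypothetical helper that picks the release file from `uname -m`, assuming Go's usual `go<version>.linux-<arch>.tar.gz` naming and that only amd64 and arm64 hosts need handling:

```bash
# Hypothetical helper, not part of CB-Tumblebug.

# go_tarball <uname-m> <version>: prints the Go release file name, or fails for unknown arches.
go_tarball() {
  local arch
  case "$1" in
    x86_64)        arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
  printf 'go%s.linux-%s.tar.gz\n' "$2" "$arch"
}

# install_go [version]: downloads and unpacks Go into /usr/local/go (requires sudo).
install_go() {
  local version=${1:-1.25.0} file
  file=$(go_tarball "$(uname -m)" "$version") || return 1
  wget -q "https://go.dev/dl/$file" -O "/tmp/$file" || return 1
  sudo rm -rf /usr/local/go && sudo tar -C /usr/local -xzf "/tmp/$file"
}
```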


Run Docker Compose with the build option

To build the current CB-Tumblebug source code into a container image and run it along with the other containers, use the following command:

```bash
cd ~/go/src/github.com/cloud-barista/cb-tumblebug
sudo DOCKER_BUILDKIT=1 docker compose up --build
```

This command automatically builds CB-Tumblebug from the local source code and starts it in a Docker container, along with the other services defined in the `docker-compose.yaml` file. The `DOCKER_BUILDKIT=1` setting speeds up the build by reusing the Go build cache.

Build the Golang source code using the Makefile

```bash
cd ~/go/src/github.com/cloud-barista/cb-tumblebug/src
make
```

All dependencies will be downloaded automatically by Go.

The initial build will take some time, but subsequent builds will be faster thanks to the Go build cache.

Note: To update the Swagger API documentation, run `make swag`.

- The API documentation file will be generated at `cb-tumblebug/src/interface/rest/docs/swagger.yaml`
- The API documentation can be viewed in a web browser at http://localhost:1323/tumblebug/api (provided when CB-TB is running)
- Detailed information on how to update the API

Set environment variables required to run CB-TB (in another tab)

- Check and configure the contents of `cb-tumblebug/conf/setup.env` (CB-TB environment variables; modify as needed)
- Apply the environment variables to the system

  ```bash
  cd ~/go/src/github.com/cloud-barista/cb-tumblebug
  source conf/setup.env
  ```

- (Optional) Automatically set the `TB_SELF_ENDPOINT` environment variable (an externally accessible address) using a script if needed
  - This is necessary if you want to access and control the Swagger API Dashboard from outside when CB-TB is running

  ```bash
  cd ~/go/src/github.com/cloud-barista/cb-tumblebug
  source ./scripts/setPublicIP.sh
  ```
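After `source conf/setup.env`, a short check can catch variables that ended up empty. This is a hypothetical helper; which variables your setup actually requires is an assumption, and `TB_SELF_ENDPOINT` appears in the usage below only because this step mentions it.

```bash
# Hypothetical helper, not part of CB-Tumblebug.

# require_env <VAR>...: prints each unset or empty variable and returns non-zero if any.
require_env() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable whose name is in $name.
    if [ -z "${!name:-}" ]; then
      echo "not set: $name" >&2
      missing=1
    fi
  done
  return $missing
}
```

Usage: `source conf/setup.env && require_env TB_SELF_ENDPOINT`.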


Execute the built cb-tumblebug binary by using `make run`

```bash
cd ~/go/src/github.com/cloud-barista/cb-tumblebug/src
make run
```

CB-TB welcomes improvements from both new and experienced contributors!

Check out CONTRIBUTING.

Thanks go to these wonderful people (emoji key):

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cb-tumblebug

Similar Open Source Tools

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

awesome-alt-clouds

Awesome Alt Clouds is a curated list of non-hyperscale cloud providers offering specialized infrastructure and services catering to specific workloads, compliance requirements, and developer needs. The list includes various categories such as Infrastructure Clouds, Sovereign Clouds, Unikernels & WebAssembly, Data Clouds, Workflow and Operations Clouds, Network, Connectivity and Security Clouds, Vibe Clouds, Developer Happiness Clouds, Authorization, Identity, Fraud and Abuse Clouds, Monetization, Finance and Legal Clouds, Customer, Marketing and eCommerce Clouds, IoT, Communications, and Media Clouds, Blockchain Clouds, Source Code Control, Cloud Adjacent, and Future Clouds.

mcp-fundamentals

The mcp-fundamentals repository is a collection of fundamental concepts and examples related to microservices, cloud computing, and DevOps. It covers topics such as containerization, orchestration, CI/CD pipelines, and infrastructure as code. The repository provides hands-on exercises and code samples to help users understand and apply these concepts in real-world scenarios. Whether you are a beginner looking to learn the basics or an experienced professional seeking to refresh your knowledge, mcp-fundamentals has something for everyone.

TuyaOpen

TuyaOpen is an open source AI+IoT development framework supporting cross-chip platforms and operating systems. It provides core functionalities for AI+IoT development, including pairing, activation, control, and upgrading. The SDK offers robust security and compliance capabilities, meeting data compliance requirements globally. TuyaOpen enables the development of AI+IoT products that can leverage the Tuya APP ecosystem and cloud services. It continues to expand with more cloud platform integration features and capabilities like voice, video, and facial recognition.

Acontext

Acontext is a context data platform designed for production AI agents, offering unified storage, built-in context management, and observability features. It helps agents scale from local demos to production without the need to rebuild context infrastructure. The platform provides solutions for challenges like scattered context data, long-running agents requiring context management, and tracking states from multi-modal agents. Acontext offers core features such as context storage, session management, disk storage, agent skills management, and sandbox for code execution and analysis. Users can connect to Acontext, install SDKs, initialize clients, store and retrieve messages, perform context engineering, and utilize agent storage tools. The platform also supports building agents using end-to-end scripts in Python and Typescript, with various templates available. Acontext's architecture includes client layer, backend with API and core components, infrastructure with PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

apex

Apex is a powerful and flexible programming language for building cloud-based applications on the Salesforce platform. It allows developers to create custom business logic, automate processes, and integrate with external systems. With its robust features and easy-to-use syntax, Apex empowers developers to extend the capabilities of Salesforce and deliver tailored solutions to meet business requirements.

redb-open

reDB Node is a distributed, policy-driven data mesh platform that enables True Data Portability across various databases, warehouses, clouds, and environments. It unifies data access, data mobility, and schema transformation into one open platform. Built for developers, architects, and AI systems, reDB addresses the challenges of fragmented data ecosystems in modern enterprises by providing multi-database interoperability, automated schema versioning, zero-downtime migration, real-time developer data environments with obfuscation, quantum-resistant encryption, and policy-based access control. The project aims to build a foundation for future-proof data infrastructure.

kalavai-client

Kalavai is an open-source platform that transforms everyday devices into an AI supercomputer by aggregating resources from multiple machines. It facilitates matchmaking of resources for large AI projects, making AI hardware accessible and affordable. Users can create local and public pools, connect with the community's resources, and share computing power. The platform aims to be a management layer for research groups and organizations, enabling users to unlock the power of existing hardware without needing a devops team. Kalavai CLI tool helps manage both versions of the platform.

aimeos-core

Aimeos is an Open Source e-commerce framework for online shops consisting of the e-commerce library, the administration interface and different front-ends. It offers a modular stack that provides flexibility and speed. Unlike other shop systems, Aimeos allows users to choose from several user front-ends and customize them according to their needs or create their own. It is suitable for medium to large businesses requiring seamless integration into existing systems like content management, customer relationship management, or enterprise resource planning systems. Aimeos also serves as a base for portals or marketplaces.

azure-openai-landing-zone

The Azure Open AI Application Landing Zone Solution Accelerator aims to assist in setting up development and production environments for Generative AI solutions using Azure Open AI and Azure Services. It provides deployment templates for common Gen AI solution patterns and offers customization options. The solution accelerator also offers best practices for technology usage in various scenarios.

agent-o-rama

Agent-O-Rama is a powerful open-source tool designed for automating repetitive tasks in the field of software development. It provides a user-friendly interface to create and manage automated agents that can perform various tasks such as code deployment, testing, and monitoring. With Agent-O-Rama, developers can save time and effort by automating routine processes and focusing on more critical aspects of their projects. The tool is highly customizable and extensible, allowing users to tailor it to their specific needs and integrate it with other tools and services. Agent-O-Rama is suitable for both individual developers and teams working on projects of any size, providing a scalable solution for improving productivity and efficiency in software development.

holmesgpt

HolmesGPT is an open-source DevOps assistant powered by OpenAI or any tool-calling LLM of your choice. It helps in troubleshooting Kubernetes, incident response, ticket management, automated investigation, and runbook automation in plain English. The tool connects to existing observability data, is compliance-friendly, provides transparent results, supports extensible data sources, runbook automation, and integrates with existing workflows. Users can install HolmesGPT using Brew, prebuilt Docker container, Python Poetry, or Docker. The tool requires an API key for functioning and supports OpenAI, Azure AI, and self-hosted LLMs.

robustmq

RobustMQ is a next-generation, high-performance, multi-protocol message queue built in Rust. It aims to create a unified messaging infrastructure tailored for modern cloud-native and AI systems. With features like high performance, distributed architecture, multi-protocol support, pluggable storage, cloud-native readiness, multi-tenancy, security features, observability, and user-friendliness, RobustMQ is designed to be production-ready and become a top-level Apache project in the message queue ecosystem by the second half of 2025.

aws-genai-llm-chatbot

This repository provides code to deploy a chatbot powered by Multi-Model and Multi-RAG using AWS CDK on AWS. Users can experiment with various Large Language Models and Multimodal Language Models from different providers. The solution supports Amazon Bedrock, Amazon SageMaker self-hosted models, and third-party providers via API. It also offers additional resources like AWS Generative AI CDK Constructs and Project Lakechain for building generative AI solutions and document processing. The roadmap and authors are listed, along with contributors. The library is licensed under the MIT-0 License with information on changelog, code of conduct, and contributing guidelines. A legal disclaimer advises users to conduct their own assessment before using the content for production purposes.

rover

Rover is a command-line tool for managing and deploying Docker containers. It provides a simple and intuitive interface to interact with Docker images and containers, allowing users to easily build, run, and manage their containerized applications. With Rover, users can streamline their development workflow by automating container deployment and management tasks. The tool is designed to be lightweight and easy to use, making it ideal for developers and DevOps professionals looking to simplify their container management processes.

For similar tasks

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

dataengineering-roadmap

A repository providing basic concepts, technical challenges, and resources on data engineering in Spanish. It is a curated list of free, Spanish-language materials found on the internet to facilitate the study of data engineering enthusiasts. The repository covers programming fundamentals, programming languages like Python, version control with Git, database fundamentals, SQL, design concepts, Big Data, analytics, cloud computing, data processing, and job search tips in the IT field.

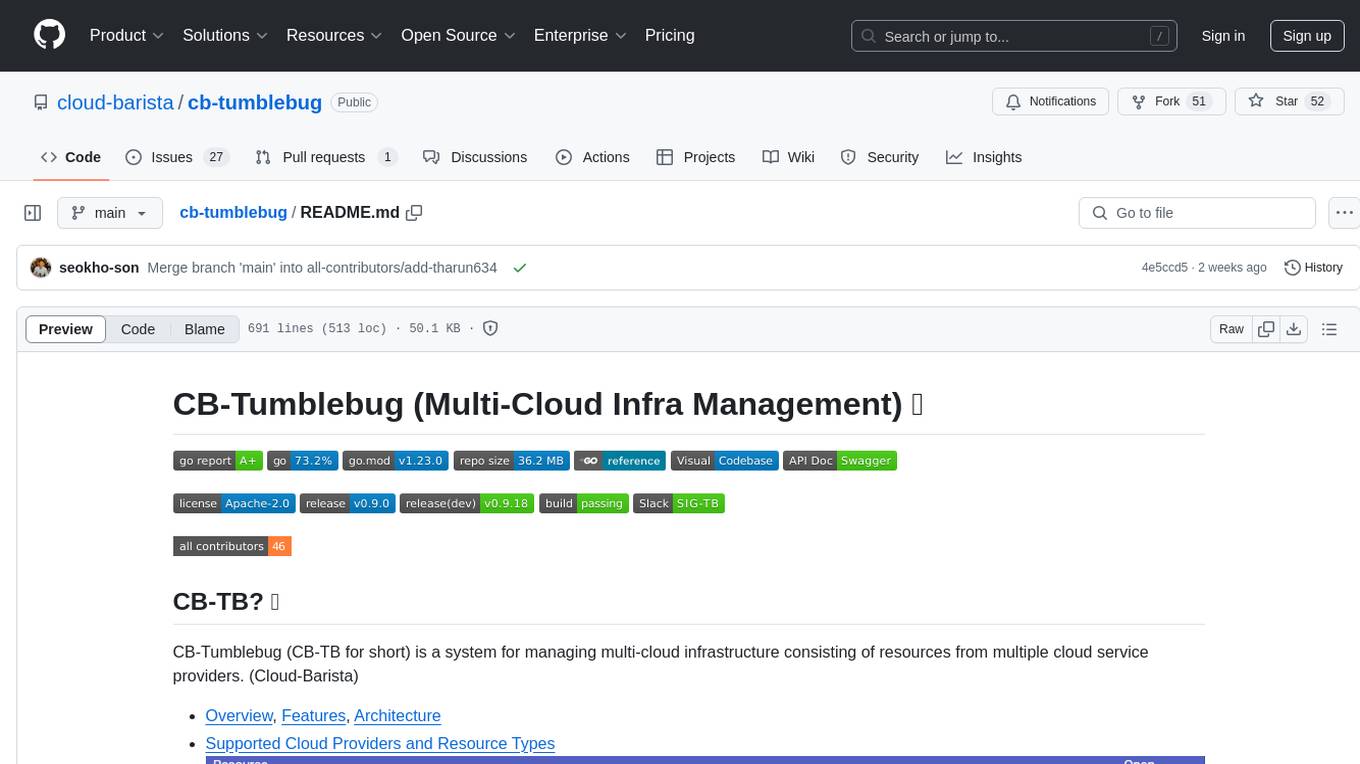

awesome-hosting

awesome-hosting is a curated list of hosting services sorted by minimal plan price. It includes various categories such as Web Services Platform, Backend-as-a-Service, Lambda, Node.js, Static site hosting, WordPress hosting, VPS providers, managed databases, GPU cloud services, and LLM/Inference API providers. Each category lists multiple service providers along with details on their minimal plan, trial options, free tier availability, open-source support, and specific features. The repository aims to help users find suitable hosting solutions based on their budget and requirements.

ubicloud

Ubicloud is an open source cloud platform that provides Infrastructure as a Service (IaaS) features on bare metal providers like Hetzner, Leaseweb, and AWS Bare Metal. Users can either set it up themselves on these providers or use the managed service offered by Ubicloud. The platform allows users to cloudify bare metal Linux machines, provision and manage cloud resources, and offers an open source alternative to traditional cloud providers, reducing costs and returning control of infrastructure to the users.

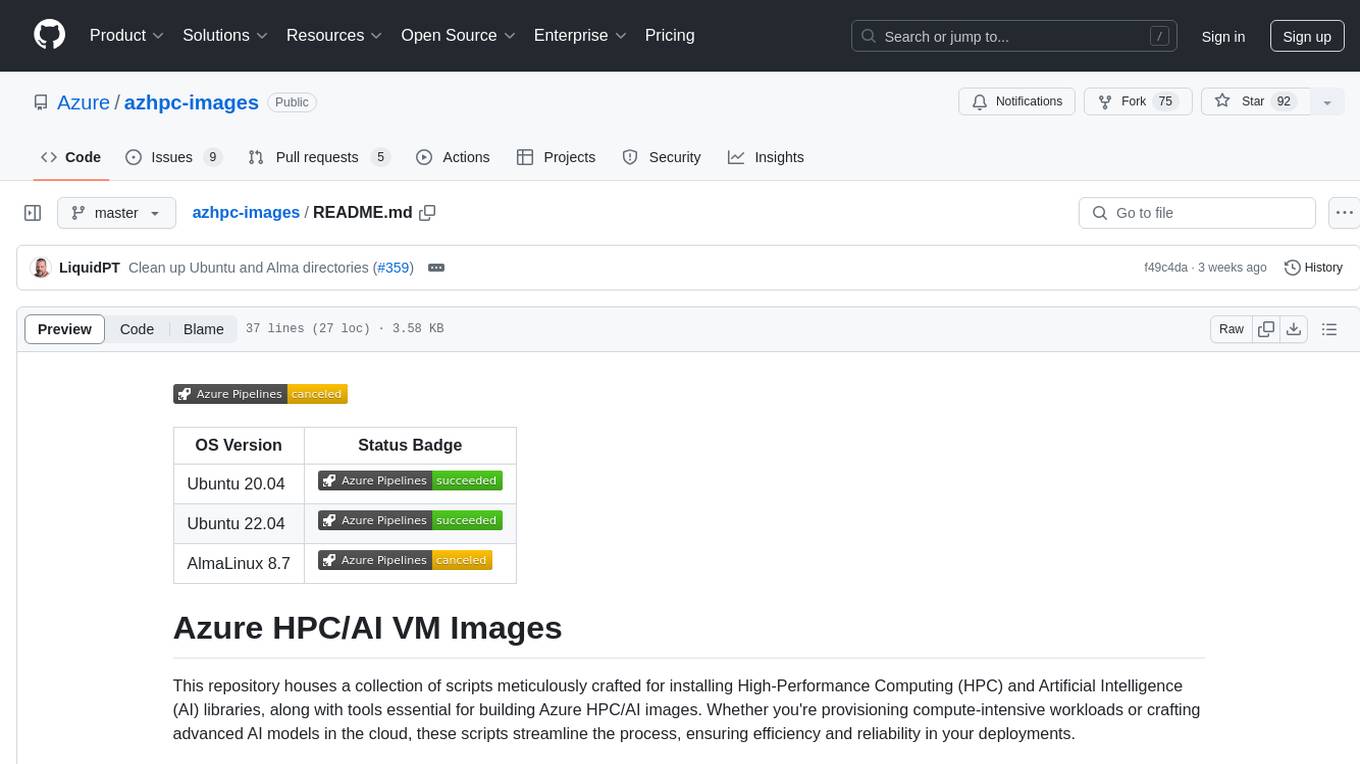

azhpc-images

This repository contains scripts for installing HPC and AI libraries and tools to build Azure HPC/AI images. It streamlines the process of provisioning compute-intensive workloads and crafting advanced AI models in the cloud, ensuring efficiency and reliability in deployments.

For similar jobs

awesome-devops-mcp-servers

A curated list of awesome Model Context Protocol (MCP) servers focused on DevOps tools and capabilities. MCP is an open protocol that enables AI models to securely interact with local and remote resources through standardized server implementations. This list focuses on DevOps-related MCP servers that extend AI capabilities through cloud infrastructure management, CLI operations, version control, security scanning, and other DevOps-related services.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence with cloud-native practices to significantly improve the efficiency and quality of operations work. The platform offers features such as AIOps for monitoring-data analysis and alerting, multi-dimensional permission management, a visual CMDB for resource management, an efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

awesome-mcp-servers-devops

This repository, 'awesome-mcp-servers-devops', is a curated list of Model Context Protocol servers for DevOps workflows, covering infrastructure, CI/CD, monitoring, security, and cloud operations. It lists MCP servers for tools such as GitHub, GitLab, Azure DevOps, Gitea, Terraform, Vault, Pulumi, Kubernetes, Docker Hub, Portainer, and Qovery. It also includes command-line and local operation tools, browser automation tools, code execution tools, coding agents, and aggregators; CI/CD tools such as Argo CD, Jenkins, GitHub Actions, and Codemagic; DevOps visibility tools and build tools; cloud platforms such as AWS, Azure, Cloudflare, and Alibaba Cloud; observability tools such as Grafana, Datadog, Prometheus, VictoriaMetrics, and Alertmanager; APM and monitoring tools; security tools such as Snyk, Semgrep, and community security servers; and collaboration tools such as Atlassian, Jira, project management tools, service desks, Notion, and more.

sre-agent

SRE Agent is an open-source AI agent designed to help Site Reliability Engineers (SREs) debug, maintain healthy Kubernetes systems, and simplify DevOps tasks. Through a command-line interface (CLI), users can interact directly with the agent to diagnose issues, report diagnostics, and streamline operations. The agent supports root cause debugging, Kubernetes log querying, GitHub codebase search, and CLI-powered interactions. It is powered by the Model Context Protocol (MCP) for seamless connectivity. Users can configure AWS credentials, GitHub integration, and an Anthropic API key to start monitoring deployments and diagnosing issues. The codebase is organized into Python services and TypeScript MCP servers for development and maintenance.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that lets users ask natural language questions about any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. In this architecture, a PDF document is uploaded to an S3 bucket, its metadata is extracted, its text is converted to vectors, and LangChain is used to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.
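The retrieval step (turning text into vectors and finding the passages most related to a prompt) can be illustrated with a small Python sketch. It is a simplified stand-in, not the sample application's code: the application uses Amazon Bedrock and LangChain, whereas here a hypothetical bag-of-words `embed` function replaces a real embedding model, and document chunks are ranked by cosine similarity to the question.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_k(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


chunks = [
    "the invoice total is 42 dollars",
    "the meeting is scheduled for monday",
    "shipping takes five business days",
]
print(top_k("when is the meeting", chunks))  # ['the meeting is scheduled for monday']
```

In a full pipeline the selected chunks would be passed to a text generation model together with the question; a learned embedding model would also match paraphrases that simple word overlap misses.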

generative-bi-using-rag

Generative BI using RAG on AWS is a comprehensive framework designed to enable Generative BI capabilities on customized data sources hosted on AWS. It offers features such as Text-to-SQL functionality for querying data sources using natural language, a user-friendly interface for managing data sources, performance enhancement through ranking of historical question-answer pairs, and entity recognition. It also allows customization of business information, handles complex attribution analysis problems, and provides an intuitive question-answering UI with a conversational approach for complex queries.