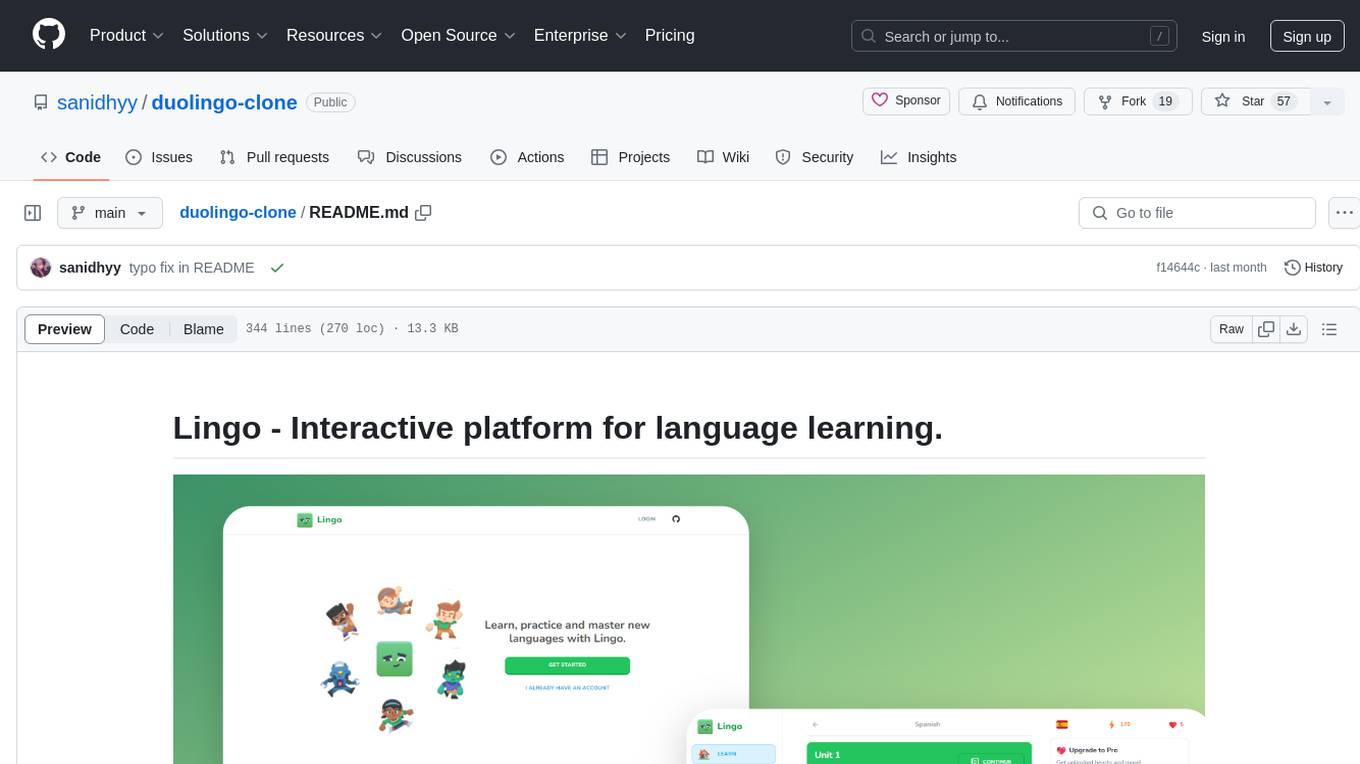

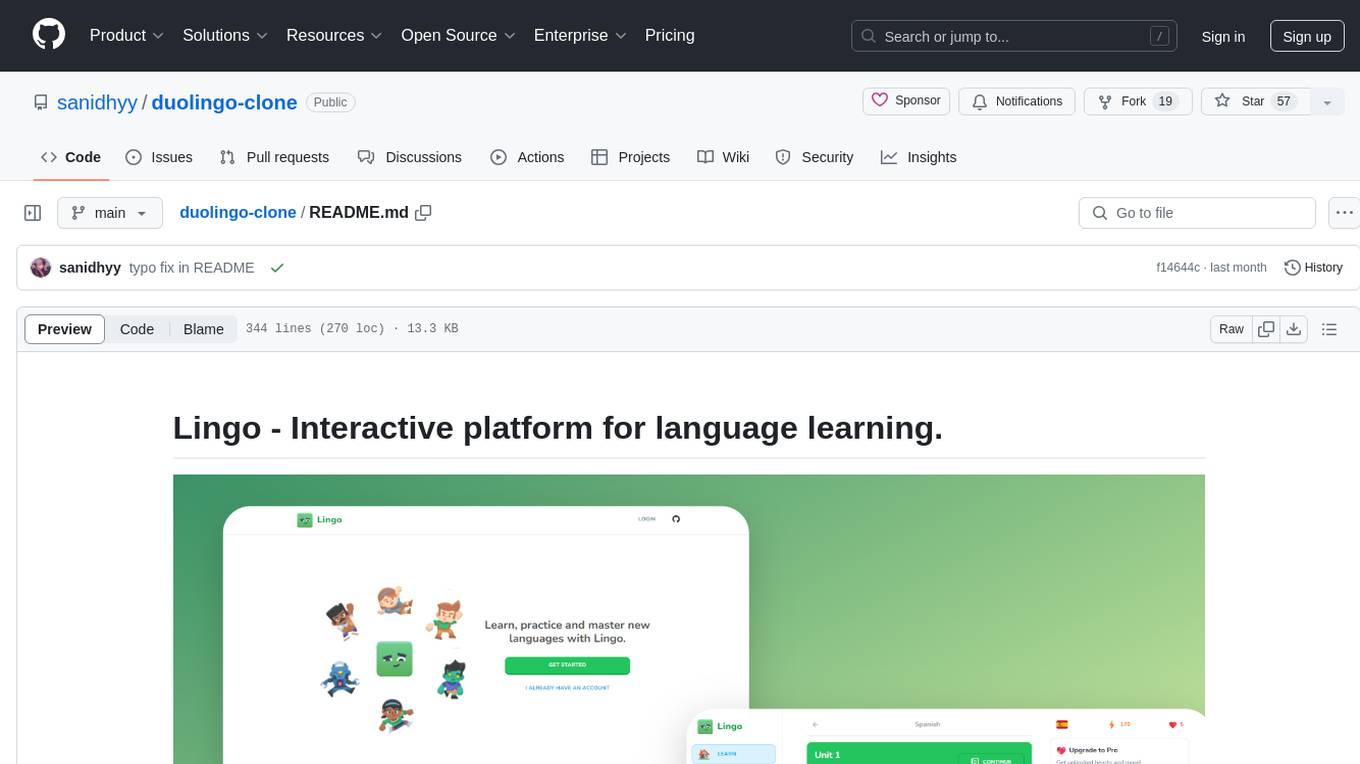

duolingo-clone

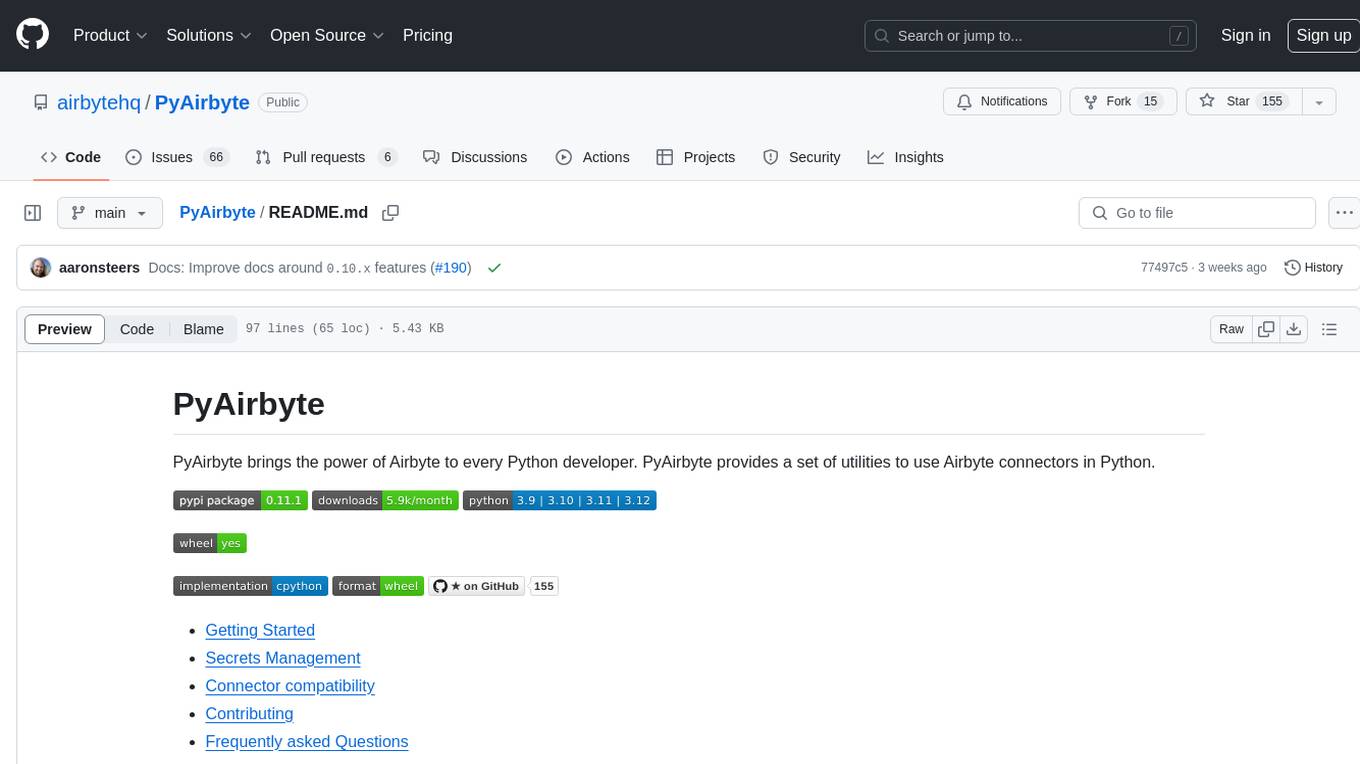

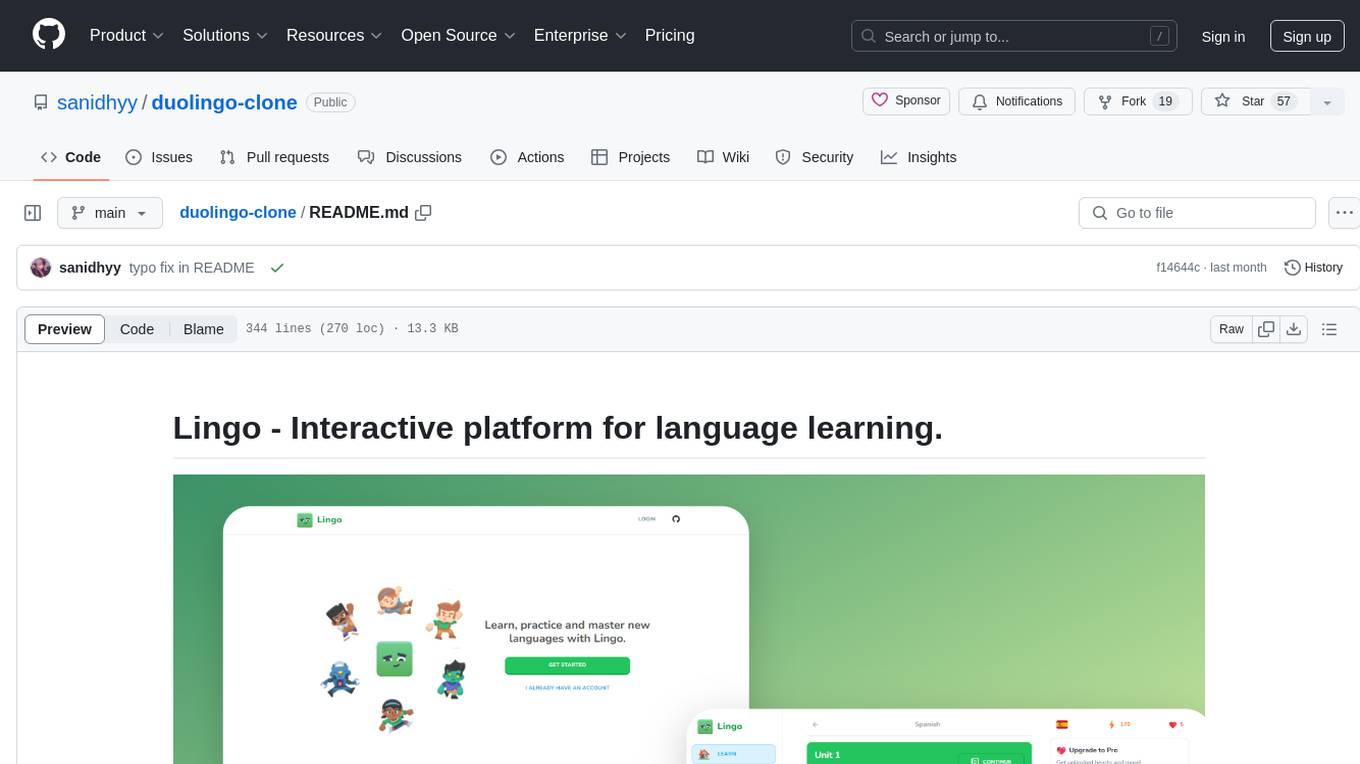

Interactive platform for language learning with lessons, quizzes, and progress tracking.

Stars: 104

Lingo is an interactive language-learning platform with a modern UI/UX. It offers courses, quests, and a shop. The tech stack includes React, Next.js, TypeScript, Tailwind CSS, Vercel, and PostgreSQL. Contributions are welcome via pull requests. The project draws on resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, and @radix-ui/react-avatar. The project creator can be followed on GitHub and Twitter, and their YouTube channel carries updates. The Next.js documentation and interactive tutorial cover the framework itself.

README:

Here is the folder structure of this app.

duolingo-clone/

|- actions/

|-- challenge-progress.ts

|-- user-progress.ts

|-- user-subscription.ts

|- app/

|-- (main)/

|--- courses/

|--- leaderboard/

|--- learn/

|--- quests/

|--- shop/

|--- layout.tsx

|-- (marketing)/

|--- footer.tsx

|--- header.tsx

|--- layout.tsx

|--- page.tsx

|-- admin/

|--- challenge/

|--- challengeOption/

|--- course/

|--- lesson/

|--- unit/

|--- app.tsx

|--- page.tsx

|-- api/

|--- challengeOptions/

|--- challenges/

|--- courses/

|--- lessons/

|--- units/

|--- webhooks/stripe/

|-- lesson/

|--- [lessonId]/

|--- card.tsx

|--- challenge.tsx

|--- footer.tsx

|--- header.tsx

|--- layout.tsx

|--- page.tsx

|--- question-bubble.tsx

|--- quiz.tsx

|--- result-card.tsx

|-- apple-icon.png

|-- favicon.ico

|-- globals.css

|-- icon1.png

|-- icon2.png

|-- layout.tsx

|- components/

|-- modals/

|-- ui/

|-- feed-wrapper.tsx

|-- mobile-wrapper.tsx

|-- mobile-sidebar.tsx

|-- promo.tsx

|-- quests.tsx

|-- sidebar-item.tsx

|-- sidebar.tsx

|-- sticky-wrapper.tsx

|-- user-progress.tsx

|- config/

|-- index.ts

|- db/

|-- drizzle.ts

|-- queries.ts

|-- schema.ts

|- lib/

|-- admin.ts

|-- stripe.ts

|-- utils.ts

|- public/

|- scripts/

|-- prod.ts

|-- reset.ts

|-- seed.ts

|- store/

|-- use-exit-modal.ts

|-- use-hearts-modal.ts

|-- use-practice-modal.ts

|- types/

|-- canvas.ts

|- .env

|- .env.example

|- .eslintrc.js

|- .gitignore

|- .prettierrc.json

|- components.json

|- constants.ts

|- drizzle.config.ts

|- environment.d.ts

|- middleware.ts

|- next.config.mjs

|- package-lock.json

|- package.json

|- postcss.config.js

|- tailwind.config.ts

|- tsconfig.json

- Make sure Git and Node.js are installed.

- Clone this repository to your local computer.

- Create a `.env` file in the root directory.

- Contents of `.env`:

# .env

# disabled next.js telemetry

NEXT_TELEMETRY_DISABLED=1

# clerk auth keys

NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY=pk_test_XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

CLERK_SECRET_KEY=sk_test_XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

# neon db uri

DATABASE_URL="postgresql://<user>:<password>@<host>:<port>/lingo?sslmode=require"

# stripe api key and webhook

STRIPE_API_SECRET_KEY=sk_test_XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

STRIPE_WEBHOOK_SECRET=whsec_XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

# public app url

NEXT_PUBLIC_APP_URL=http://localhost:3000

# clerk admin user id(s) separated by comma and space (, )

CLERK_ADMIN_IDS="user_xxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# or CLERK_ADMIN_IDS="user_xxxxxxxxxxxxxxxxxxxxxxxxxxxxx, user_xxxxxxxxxxxxxxxxxxxxxx" for multiple admins.

- Obtain Clerk Authentication Keys
  - Source: Clerk Dashboard or Settings Page
  - Procedure:
    - Log in to your Clerk account.
    - Navigate to the dashboard or settings page.
    - Look for the section related to authentication keys.
    - Copy the `NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY` and `CLERK_SECRET_KEY` provided in that section.

- Retrieve Neon Database URI
  - Source: Database Provider (e.g., Neon, PostgreSQL)
  - Procedure:
    - Access your database provider's platform or configuration.
    - Locate the database connection details.
    - Replace the `<user>`, `<password>`, `<host>`, and `<port>` placeholders in the URI with your actual database credentials.
    - Include `?sslmode=require` at the end of the URI so that the connection requires SSL.

- Fetch Stripe API Key and Webhook Secret
  - Source: Stripe Dashboard
  - Procedure:
    - Log in to your Stripe account.
    - Navigate to the dashboard or API settings.
    - Find the section related to API keys and webhook secrets.
    - Copy the `STRIPE_API_SECRET_KEY` and `STRIPE_WEBHOOK_SECRET`.

- Specify Public App URL
  - Procedure:
    - Replace `http://localhost:3000` with the URL of your deployed application.

- Identify Clerk Admin User IDs
  - Source: Clerk Dashboard or Settings Page
  - Procedure:
    - Log in to your Clerk account.
    - Navigate to the dashboard or settings page.
    - Find the section related to admin user IDs.
    - Copy the user IDs provided, ensuring they are separated by commas and spaces.

- Save and Secure:
  - Save the changes to the `.env` file.

- Install project dependencies using `npm install --legacy-peer-deps` or `yarn install --legacy-peer-deps`.

- Run the Seed Script:

  In the same terminal, run the following command to execute the seed script:

  `npm run db:push && npm run db:prod`

  This command uses npm to run the TypeScript file (`scripts/prod.ts`), which writes the challenges data to the database.

- Verify Data in Database:

  Once the script completes, check your database to ensure that the challenges data has been successfully seeded.

- The app is now fully configured 👍. Start it with either `npm run dev` or `yarn dev`.
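Two of the `.env` values described in the steps above have formatting rules that are easy to get wrong: `DATABASE_URL` should end with `?sslmode=require`, and `CLERK_ADMIN_IDS` is a list separated by a comma and a space. The sketch below shows one possible way to check both. The helper names `parseAdminIds`, `isAdmin`, and `hasSslModeRequire` are hypothetical and are not taken from the repository's `lib/admin.ts` or `db/drizzle.ts`.

```typescript
// Hypothetical helpers for illustration; not code from this repository.

/** Splits a CLERK_ADMIN_IDS value such as "user_a, user_b" into individual IDs. */
function parseAdminIds(raw: string | undefined): string[] {
  if (!raw) return [];
  return raw
    .split(",")
    .map((id) => id.trim()) // tolerates "a,b" as well as "a, b"
    .filter((id) => id.length > 0);
}

/** Returns true when userId appears in the configured admin list. */
function isAdmin(
  userId: string | null | undefined,
  rawAdminIds: string | undefined
): boolean {
  if (!userId) return false;
  return parseAdminIds(rawAdminIds).indexOf(userId) !== -1;
}

/** Returns true when the value is a PostgreSQL URL that sets sslmode=require. */
function hasSslModeRequire(databaseUrl: string): boolean {
  try {
    const url = new URL(databaseUrl);
    const isPostgres =
      url.protocol === "postgresql:" || url.protocol === "postgres:";
    return isPostgres && url.searchParams.get("sslmode") === "require";
  } catch {
    return false; // the string could not be parsed as a URL
  }
}
```

Trimming each ID means a value written without the space after the comma is still accepted, which is a deliberate leniency in this sketch rather than documented behavior of the app.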

NOTE: Please make sure to keep your API keys and configuration values secure and do not expose them publicly.
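One way to act on this note is to fail fast when a required variable is missing and to mask secret values before they reach a log. The following is a minimal sketch under those assumptions. `REQUIRED_ENV_KEYS` mirrors the variable names in the `.env` example above, while `findMissingEnv` and `maskSecret` are hypothetical helpers that do not exist in the repository.

```typescript
// Hypothetical helpers for illustration; not code from this repository.

// Variable names copied from the .env example in this README.
const REQUIRED_ENV_KEYS = [
  "NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY",
  "CLERK_SECRET_KEY",
  "DATABASE_URL",
  "STRIPE_API_SECRET_KEY",
  "STRIPE_WEBHOOK_SECRET",
  "NEXT_PUBLIC_APP_URL",
  "CLERK_ADMIN_IDS",
];

type Env = Record<string, string | undefined>;

/** Returns the names of required variables that are unset or blank. */
function findMissingEnv(env: Env): string[] {
  return REQUIRED_ENV_KEYS.filter((key) => {
    const value = env[key];
    return value === undefined || value.trim() === "";
  });
}

/** Keeps a short prefix (for example "sk_test_") and hides the rest. */
function maskSecret(value: string, visible: number = 8): string {
  if (value.length <= visible) return "***";
  return value.slice(0, visible) + "***";
}
```

In a Node.js script, `findMissingEnv(process.env)` could be called before the app connects to the database, with the script exiting if the returned array is not empty.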

You might encounter some bugs while using this app. You are more than welcome to contribute. Just submit changes via pull request and I will review them before merging. Make sure you follow community guidelines.

Useful resources and dependencies that are used in Lingo.

- Special Thanks to Kenney Assets: https://kenney.nl/
- Freesound: https://freesound.org/
- Elevenlabs AI: https://elevenlabs.io/
- Flagpack: https://flagpack.xyz/
- @clerk/nextjs: ^4.29.9
- @neondatabase/serverless: ^0.9.0
- @radix-ui/react-avatar: ^1.0.4
- @radix-ui/react-dialog: ^1.0.5
- @radix-ui/react-progress: ^1.0.3
- @radix-ui/react-separator: ^1.0.3
- @radix-ui/react-slot: ^1.0.2
- class-variance-authority: ^0.7.0
- clsx: ^2.1.0
- dotenv: ^16.4.5
- drizzle-orm: ^0.30.4
- lucide-react: ^0.359.0
- next: 14.1.4
- next-themes: ^0.3.0
- ra-data-simple-rest: ^4.16.12
- react: ^18
- react-admin: ^4.16.13
- react-circular-progressbar: ^2.1.0
- react-confetti: ^6.1.0
- react-dom: ^18
- react-use: ^17.5.0
- sonner: ^1.4.32
- stripe: ^14.22.0
- tailwind-merge: ^2.2.2
- tailwindcss-animate: ^1.0.7
- zustand: ^4.5.2

To learn more about Next.js, take a look at the following resources:

- Next.js Documentation - learn about Next.js features and API.

- Learn Next.js - an interactive Next.js tutorial.

You can check out the Next.js GitHub repository - your feedback and contributions are welcome!

The easiest way to deploy your Next.js app is to use the Vercel Platform from the creators of Next.js.

Check out Next.js deployment documentation for more details.

You can also give this repository a star so that more people can find and use it.


Similar Open Source Tools

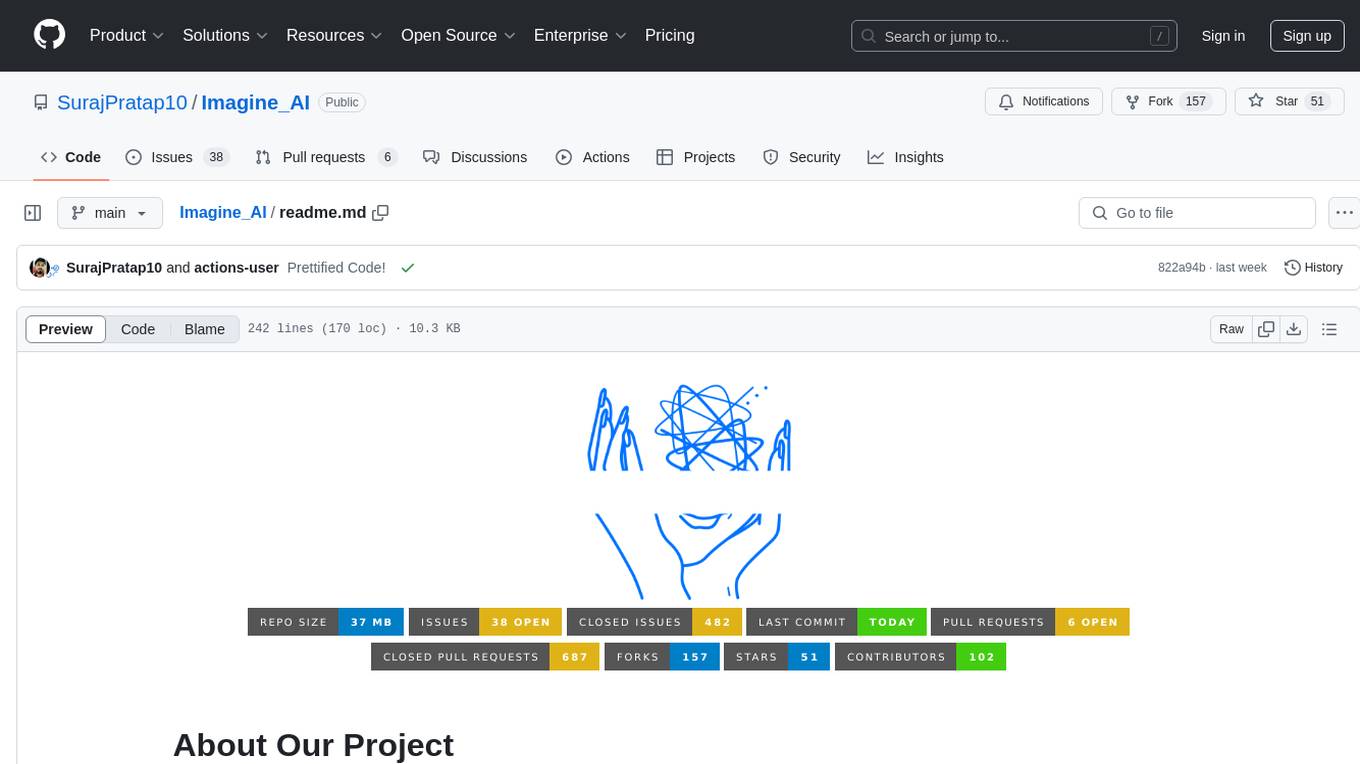


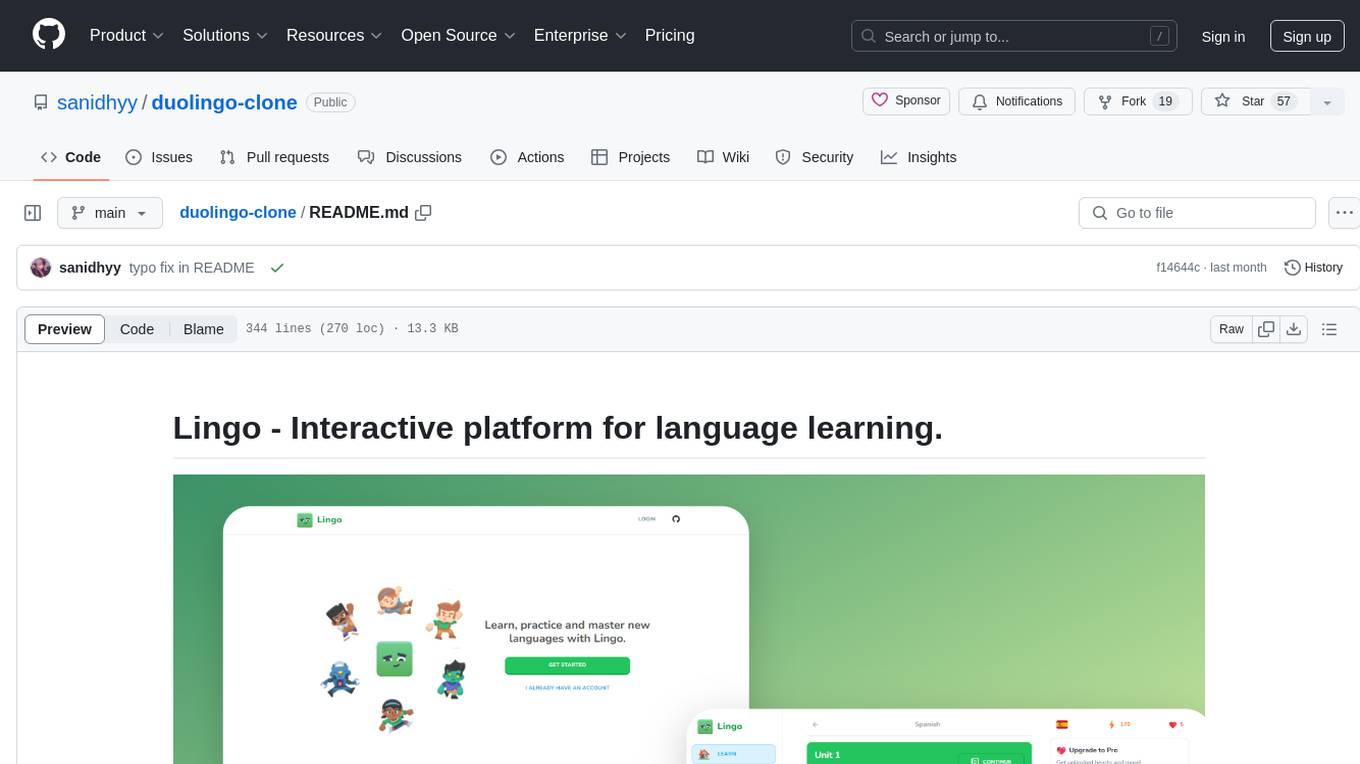

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

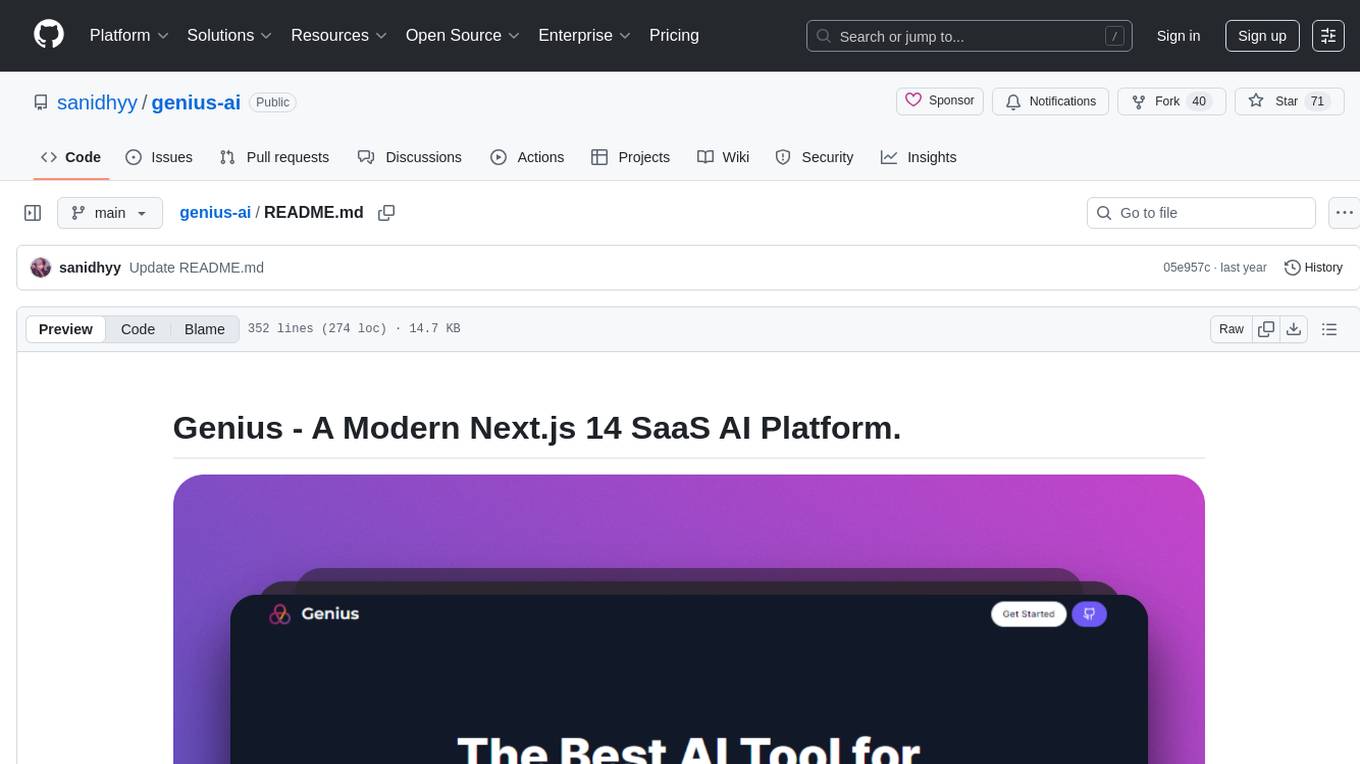

Imagine_AI

IMAGINE - AI is a groundbreaking image generator tool that leverages the power of OpenAI's DALL-E 2 API library to create extraordinary visuals. Developed using Node.js and Express, this tool offers a transformative way to unleash artistic creativity and imagination by generating unique and captivating images through simple prompts or keywords.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

Free-GPT4-WEB-API

FreeGPT4-WEB-API is a Python server that allows you to have a self-hosted GPT-4 Unlimited and Free WEB API, via the latest Bing's AI. It uses Flask and GPT4Free libraries. GPT4Free provides an interface to the Bing's GPT-4. The server can be configured by editing the `FreeGPT4_Server.py` file. You can change the server's port, host, and other settings. The only cookie needed for the Bing model is `_U`.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

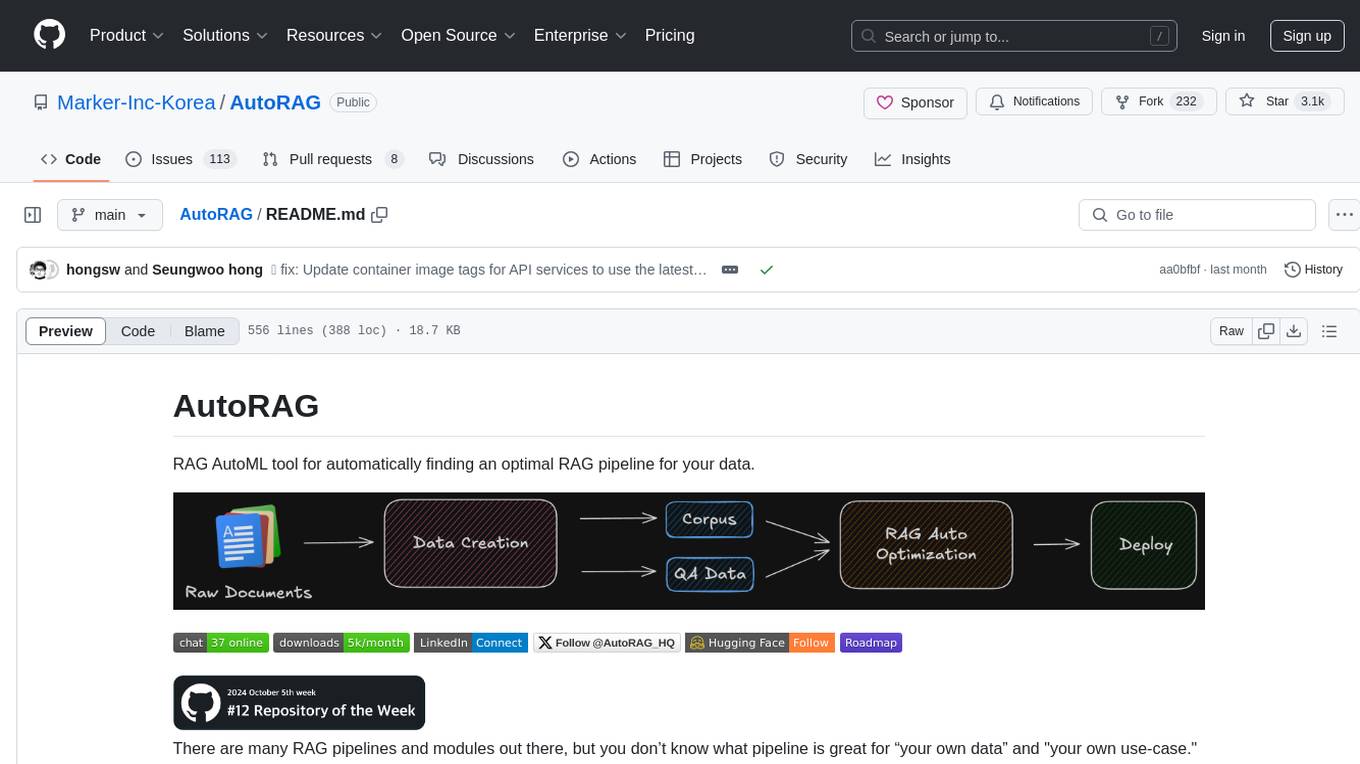

AutoRAG

AutoRAG is an AutoML tool designed to automatically find the optimal RAG pipeline for your data. It simplifies the process of evaluating various RAG modules to identify the best pipeline for your specific use-case. The tool supports easy evaluation of different module combinations, making it efficient to find the most suitable RAG pipeline for your needs. AutoRAG also offers a cloud beta version to assist users in running and optimizing the tool, along with building RAG evaluation datasets for a starting price of $9.99 per optimization.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

unity-mcp

MCP for Unity is a tool that acts as a bridge, enabling AI assistants to interact with the Unity Editor via a local MCP Client. Users can instruct their LLM to manage assets, scenes, scripts, and automate tasks within Unity. The tool offers natural language control, powerful tools for asset management, scene manipulation, and automation of workflows. It is extensible and designed to work with various MCP Clients, providing a range of functions for precise text edits, script management, GameObject operations, and more.

For similar tasks


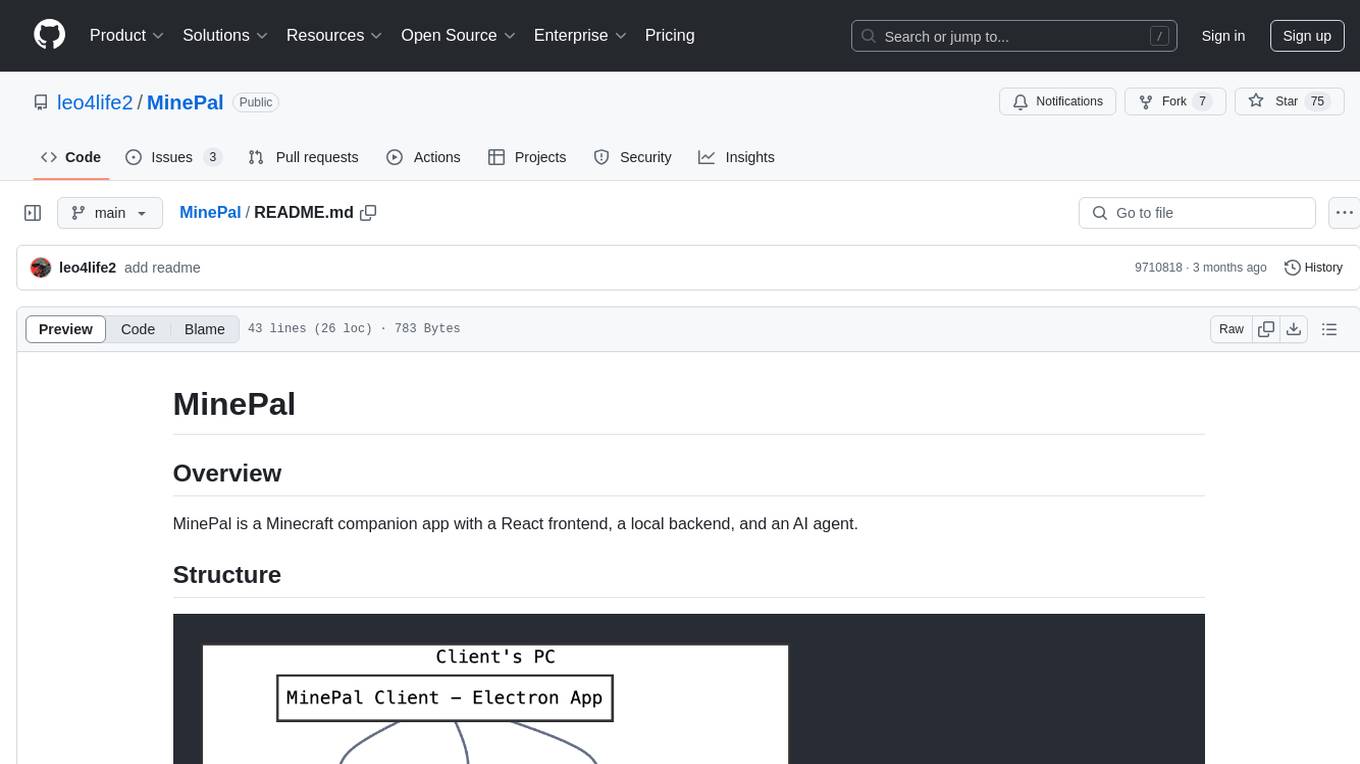

MinePal

MinePal is a Minecraft companion app with a React frontend, a local backend, and an AI agent. The frontend is built with React and Vite, the local backend APIs are in server.js, and the Minecraft agent logic is in src/agent/. Users can set up the frontend by installing dependencies and building it, refer to the backend repository for backend setup, and navigate to src/agent/ to access actions that the bot can take.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, CodeBlitz web IDE Framework, Lite Web IDE on the Browser, and Mini-App liked IDE. The framework also offers documentation for users to refer to and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

yolo-ios-app

The Ultralytics YOLO iOS App GitHub repository offers an advanced object detection tool leveraging YOLOv8 models for iOS devices. Users can transform their devices into intelligent detection tools to explore the world in a new and exciting way. The app provides real-time detection capabilities with multiple AI models to choose from, ranging from 'nano' to 'x-large'. Contributors are welcome to participate in this open-source project, and licensing options include AGPL-3.0 for open-source use and an Enterprise License for commercial integration. Users can easily set up the app by following the provided steps, including cloning the repository, adding YOLOv8 models, and running the app on their iOS devices.

PyAirbyte

PyAirbyte brings the power of Airbyte to every Python developer by providing a set of utilities to use Airbyte connectors in Python. It enables users to easily manage secrets, work with various connectors like GitHub, Shopify, and Postgres, and contribute to the project. PyAirbyte is not a replacement for Airbyte but complements it, supporting data orchestration frameworks like Airflow and Snowpark. Users can develop ETL pipelines and import connectors from local directories. The tool simplifies data integration tasks for Python developers.

For similar jobs

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.


Verbiverse

Verbiverse is a tool that uses a large language model to assist in reading PDFs and watching videos, aimed at improving language proficiency. It provides a more convenient and efficient way to use large models through predefined prompts, designed for those looking to enhance their language skills. The tool analyzes unfamiliar words and sentences in foreign language PDFs or video subtitles, providing better contextual understanding compared to traditional dictionary translations or ambiguous meanings. It offers features such as automatic loading of subtitles, word analysis by clicking or double-clicking, and a word database for collecting words. Users can run the tool on Windows x86_64 or ubuntu_22.04 x86_64 platforms by downloading the precompiled packages or by cloning the source code and setting up a virtual environment with Python. It is recommended to use a local model or smaller PDF files for testing due to potential token consumption issues with large files.

AnnA_Anki_neuronal_Appendix

AnnA is a Python script designed to create filtered decks in optimal review order for Anki flashcards. It uses Machine Learning / AI to ensure semantically linked cards are reviewed far apart. The script helps users manage their daily reviews by creating special filtered decks that prioritize reviewing cards that are most different from the rest. It also allows users to reduce the number of daily reviews while increasing retention and automatically identifies semantic neighbors for each note.

EngAce

EngAce is a cutting-edge, generative AI-powered application revolutionizing Vietnamese English learning. It offers personalized learning experiences combining AI with comprehensive features. The repository contains source code, documentation, and resources for the app.

TheoremExplainAgent

TheoremExplainAgent is an AI system that generates long-form Manim videos to visually explain theorems, proving its deep understanding while uncovering reasoning flaws that text alone often hides. The codebase for the paper 'TheoremExplainAgent: Towards Multimodal Explanations for LLM Theorem Understanding' is available in this repository. It provides a tool for creating multimodal explanations for theorem understanding using AI technology.

vocabulary-book-by-deepseek

Vocabulary Book by DeepSeek is a manual for CET-4, postgraduate entrance examination, and TOEFL vocabulary, providing word meanings, roots, example sentences, mnemonic aids, and mnemonic images. The project uses Cline + DeepSeek-R1-16b for over 80% of the code to automatically encode the vocabulary manual. The generated manual includes vocabulary from A to Z for CET-4, CET-6, postgraduate entrance examination, and TOEFL, along with features to generate Anki cards and PDFs. The tool also allows for the creation of mnemonic images for each word and articles.

awesome-ai-llm4education

The 'awesome-ai-llm4education' repository is a curated list of papers related to artificial intelligence (AI) and large language models (LLM) for education. It collects papers from top conferences, journals, and specialized domain-specific conferences, categorizing them based on specific tasks for better organization. The repository covers a wide range of topics including tutoring, personalized learning, assessment, material preparation, specific scenarios like computer science, language, math, and medicine, aided teaching, as well as datasets and benchmarks for educational research.