airflow-code-editor

A plugin for Apache Airflow that allows you to edit DAGs in the browser

Stars: 416

The Airflow Code Editor Plugin is a tool designed for Apache Airflow users to edit Directed Acyclic Graphs (DAGs) directly within their browser. It offers a user-friendly file management interface for effortless editing, uploading, and downloading of files. With Git support enabled, users can store DAGs in a Git repository, explore Git history, review local modifications, and commit changes. The plugin enhances workflow efficiency by providing seamless DAG management capabilities.

README:

This plugin for Apache Airflow allows you to edit DAGs directly within your browser, providing a seamless and efficient workflow for managing your pipelines. Offering a user-friendly file management interface within designated directories, it facilitates effortless editing, uploading, and downloading of files. With Git support enabled, DAGs are stored in a Git repository, enabling users to explore Git history, review local modifications, and commit changes.

- Airflow Versions

  - 1.10.3 or newer

- git Versions (git is not required if git support is disabled)

  - 2.0 or newer

For ease of deployment, use the production-ready reference container image. The image is based on the reference images for Apache Airflow.

The following images are available:

- andreax79/airflow-code-editor:latest - the latest released Airflow Code Editor image with the latest Apache Airflow version

- andreax79/airflow-code-editor:2.10.5 - the latest released Airflow Code Editor with a specific Airflow version

- andreax79/airflow-code-editor:2.10.5-7.8.0 - a specific version of Airflow and of Airflow Code Editor
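The tags listed above appear to follow the pattern "Airflow version", optionally followed by "-" and the Airflow Code Editor version. The sketch below splits a tag on that assumption; parse_image_tag and ImageTag are hypothetical names used only for illustration and are not part of the plugin.

```python
from typing import NamedTuple, Optional


class ImageTag(NamedTuple):
    airflow_version: Optional[str]
    editor_version: Optional[str]


def parse_image_tag(tag: str) -> ImageTag:
    """Split a tag such as '2.10.5-7.8.0' into its two version parts.

    Assumes the '<airflow>-<editor>' layout seen in the tags listed above.
    """
    if tag == "latest":
        # 'latest' pins neither version.
        return ImageTag(None, None)
    airflow_version, _, editor_version = tag.partition("-")
    return ImageTag(airflow_version, editor_version or None)


for tag in ("latest", "2.10.5", "2.10.5-7.8.0"):
    print(tag, "->", parse_image_tag(tag))
```

Pinning both versions (the third form) is the most reproducible choice for a deployment, since neither component changes on the next pull.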

- Install the plugin

pip install airflow-code-editor

- Install optional dependencies

  - black - Black Python code formatter

  - isort - A Python utility/library to sort imports

  - fs-s3fs - S3FS Amazon S3 Filesystem

  - fs-gcsfs - Google Cloud Storage Filesystem

  - ... other filesystems supported by PyFilesystem - see https://www.pyfilesystem.org/page/index-of-filesystems/

pip install black isort fs-s3fs fs-gcsfs

- Restart the Airflow Web Server

- Open Admin - DAGs Code Editor
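If the menu entry does not show up after the restart, it can help to confirm that the packages were installed into the same Python environment that runs the web server. The following is a minimal sketch using only the standard library; installed_version is a hypothetical helper name, and the package names are the ones from the pip commands above.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# The plugin itself plus the optional dependencies listed above.
for name in ("airflow-code-editor", "black", "isort", "fs-s3fs", "fs-gcsfs"):
    version = installed_version(name)
    print(f"{name}: {version or 'not installed'}")
```

Run it with the interpreter that Airflow uses; a "not installed" line for airflow-code-editor usually means pip targeted a different environment.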

You can set options by editing Airflow's configuration file or by setting environment variables. To use the configuration file, add any of the following settings to the [code_editor] section of your airflow.cfg. All the settings are optional.

- enabled: enable this plugin (default: True).

- git_enabled: enable git support (default: True). If git is not installed, disable this option.

- git_cmd: git command (path)

- git_default_args: git arguments added to each call (default: -c color.ui=true)

- git_author_name: human-readable name in the author/committer (default: logged user first and last names)

- git_author_email: email for the author/committer (default: logged user email)

- git_init_repo: initialize a git repo in DAGs folder (default: True)

- root_directory: root folder (default: Airflow DAGs folder)

- line_length: Python code formatter - max line length (default: 88)

- string_normalization: Python code formatter - if true normalize string quotes and prefixes (default: False)

- mount, mount1, ...: configure additional folder (mount point) - format: name=xxx,path=yyy

- ignored_entries: comma-separated list of entries to be excluded from file/directory list (default: .*,__pycache__)

[code_editor]

enabled = True

git_enabled = True

git_cmd = /usr/bin/git

git_default_args = -c color.ui=true

git_init_repo = False

root_directory = /home/airflow/dags

line_length = 88

string_normalization = False

mount = name=data,path=/home/airflow/data

mount1 = name=logs,path=/home/airflow/logs

mount2 = name=data,path=s3://example
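To illustrate how these values and their documented defaults fit together, here is a minimal sketch that reads a [code_editor] section with Python's standard configparser. This is a stand-in for illustration only: Airflow loads airflow.cfg through its own configuration machinery, and the plugin's internal parsing may differ. The SAMPLE text and the settings and mounts names are assumptions made for this example.

```python
import configparser

# A shortened copy of the example section above.
SAMPLE = """
[code_editor]
enabled = True
git_enabled = True
git_init_repo = False
root_directory = /home/airflow/dags
line_length = 88
string_normalization = False
mount = name=data,path=/home/airflow/data
mount1 = name=logs,path=/home/airflow/logs
"""

parser = configparser.ConfigParser()
parser.read_string(SAMPLE)
section = parser["code_editor"]

# Fallbacks mirror the defaults documented in the option list.
settings = {
    "enabled": section.getboolean("enabled", fallback=True),
    "git_enabled": section.getboolean("git_enabled", fallback=True),
    "git_init_repo": section.getboolean("git_init_repo", fallback=True),
    "line_length": section.getint("line_length", fallback=88),
    "string_normalization": section.getboolean("string_normalization", fallback=False),
    "ignored_entries": section.get("ignored_entries", fallback=".*,__pycache__").split(","),
}

# Collect mount, mount1, mount2, ... as raw strings.
mounts = {key: value for key, value in section.items() if key.startswith("mount")}

print(settings)
print(mounts)
```

Because ignored_entries is missing from the sample, the sketch falls back to the documented default, which is the behaviour "All the settings are optional" implies.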

Mount Options:

- name: mount name (destination)

- path: local path or PyFilesystem FS URLs - see https://docs.pyfilesystem.org/en/latest/openers.html

Example:

- name=ftp_server,path=ftp://user:[email protected]/private

- name=data,path=s3://example

- name=tmp,path=/tmp
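The name=xxx,path=yyy format can be split into its two options with a few lines of Python. This is a minimal sketch under the assumption that neither value contains a comma; parse_mount is a hypothetical helper and does not reflect how the plugin itself parses the setting.

```python
from typing import Dict


def parse_mount(value: str) -> Dict[str, str]:
    """Parse a mount string such as 'name=data,path=s3://example'."""
    options: Dict[str, str] = {}
    for part in value.split(","):
        # Split on the first '=' only, so '=' inside a path or URL is preserved.
        key, sep, val = part.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {part!r}")
        options[key.strip()] = val.strip()
    missing = {"name", "path"} - options.keys()
    if missing:
        raise ValueError(f"missing mount option(s): {sorted(missing)}")
    return options


print(parse_mount("name=data,path=s3://example"))
print(parse_mount("name=tmp,path=/tmp"))
```

The path value is returned untouched, so a local path and a PyFilesystem FS URL are handled the same way at this stage.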

You can also set options with the following environment variables:

- AIRFLOW__CODE_EDITOR__ENABLED

- AIRFLOW__CODE_EDITOR__GIT_ENABLED

- AIRFLOW__CODE_EDITOR__GIT_CMD

- AIRFLOW__CODE_EDITOR__GIT_DEFAULT_ARGS

- AIRFLOW__CODE_EDITOR__GIT_AUTHOR_NAME

- AIRFLOW__CODE_EDITOR__GIT_AUTHOR_EMAIL

- AIRFLOW__CODE_EDITOR__GIT_INIT_REPO

- AIRFLOW__CODE_EDITOR__ROOT_DIRECTORY

- AIRFLOW__CODE_EDITOR__LINE_LENGTH

- AIRFLOW__CODE_EDITOR__STRING_NORMALIZATION

- AIRFLOW__CODE_EDITOR__MOUNT, AIRFLOW__CODE_EDITOR__MOUNT1, AIRFLOW__CODE_EDITOR__MOUNT2, ...

- AIRFLOW__CODE_EDITOR__IGNORED_ENTRIES

Example:

export AIRFLOW__CODE_EDITOR__STRING_NORMALIZATION=True

export AIRFLOW__CODE_EDITOR__MOUNT='name=data,path=/home/airflow/data'

export AIRFLOW__CODE_EDITOR__MOUNT1='name=logs,path=/home/airflow/logs'

export AIRFLOW__CODE_EDITOR__MOUNT2='name=tmp,path=/tmp'
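The variable names above follow Airflow's general AIRFLOW__{SECTION}__{KEY} convention, with the section and the option name upper-cased. A minimal sketch of that mapping follows; env_var_name and read_option are hypothetical helpers for illustration and are not part of Airflow or the plugin.

```python
import os
from typing import Mapping, Optional


def env_var_name(option: str, section: str = "code_editor") -> str:
    """Build the environment variable name for a configuration option."""
    return f"AIRFLOW__{section.upper()}__{option.upper()}"


def read_option(
    option: str,
    default: Optional[str] = None,
    environ: Mapping[str, str] = os.environ,
) -> Optional[str]:
    """Look up an option in the given environment, returning default if unset."""
    return environ.get(env_var_name(option), default)


print(env_var_name("string_normalization"))
print(read_option("mount1", environ={"AIRFLOW__CODE_EDITOR__MOUNT1": "name=logs,path=/home/airflow/logs"}))
```

Passing the environment as a parameter keeps the helper easy to test without modifying the real process environment.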

Airflow Code Editor provides a REST API. Through this API, users can interact with the application programmatically, enabling automation, data retrieval, and integration with other software.

For detailed information on how to use each endpoint, refer to the API documentation.

API authentication is inherited from Apache Airflow.

If you want to check which auth backend is currently set, you can use the airflow config get-value api auth_backends command, as in the example below.

$ airflow config get-value api auth_backends

airflow.api.auth.backend.basic_auth

For details on configuring the authentication, see API Authorization.
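With the basic_auth backend shown above, a client authenticates by sending an HTTP Basic Authorization header. The sketch below only builds such a request with the standard library and does not send it. The base URL, the credentials, and in particular the endpoint path /code_editor/api/version are assumptions made for illustration; check the API documentation for the actual routes.

```python
import base64
import urllib.request


def build_request(base_url: str, path: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request carrying an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


# Hypothetical host, endpoint, and credentials.
request = build_request("http://localhost:8080", "/code_editor/api/version", "admin", "admin")
print(request.full_url)
print(request.get_header("Authorization"))
```

To send it, pass the request to urllib.request.urlopen against a running web server. Other auth backends need a different header or a session instead.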

- Fork the repo

- Clone it on the local machine

git clone https://github.com/andreax79/airflow-code-editor.git

cd airflow-code-editor

- Create dev image

make dev-image

- Switch node version

nvm use

- Make changes you need. Build npm package with:

make npm-build

- You can start Airflow webserver with:

make webserver

- Run tests

make test

- Commit and push changes

- Create pull request to the original repo

- Apache Airflow

- Codemirror, In-browser code editor

- codemirror-theme-bundle, custom themes for CodeMirror 6

- codemirror-vim, Vim keybindings for CodeMirror 6

- codemirror-emacs, Emacs keybindings for CodeMirror 6

- Git WebUI, A standalone local web based user interface for git repositories

- Black, The Uncompromising Code Formatter

- isort, A Python utility/library to sort imports

- pss, power-tool for searching source files

- Vue.js

- Vue-good-table, data table for VueJS

- Vue-tree, TreeView control for VueJS

- Vue-universal-modal, Universal modal plugin for Vue@3

- Vue-simple-context-menu

- vue3-notification, Vue.js notifications

- Splitpanes

- Axios, Promise based HTTP client for the browser and node.js

- PyFilesystem2, Python's Filesystem abstraction layer

- Amazon S3 PyFilesystem

- Google Cloud Storage PyFilesystem

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for airflow-code-editor

Similar Open Source Tools

airflow-code-editor

The Airflow Code Editor Plugin is a tool designed for Apache Airflow users to edit Directed Acyclic Graphs (DAGs) directly within their browser. It offers a user-friendly file management interface for effortless editing, uploading, and downloading of files. With Git support enabled, users can store DAGs in a Git repository, explore Git history, review local modifications, and commit changes. The plugin enhances workflow efficiency by providing seamless DAG management capabilities.

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.

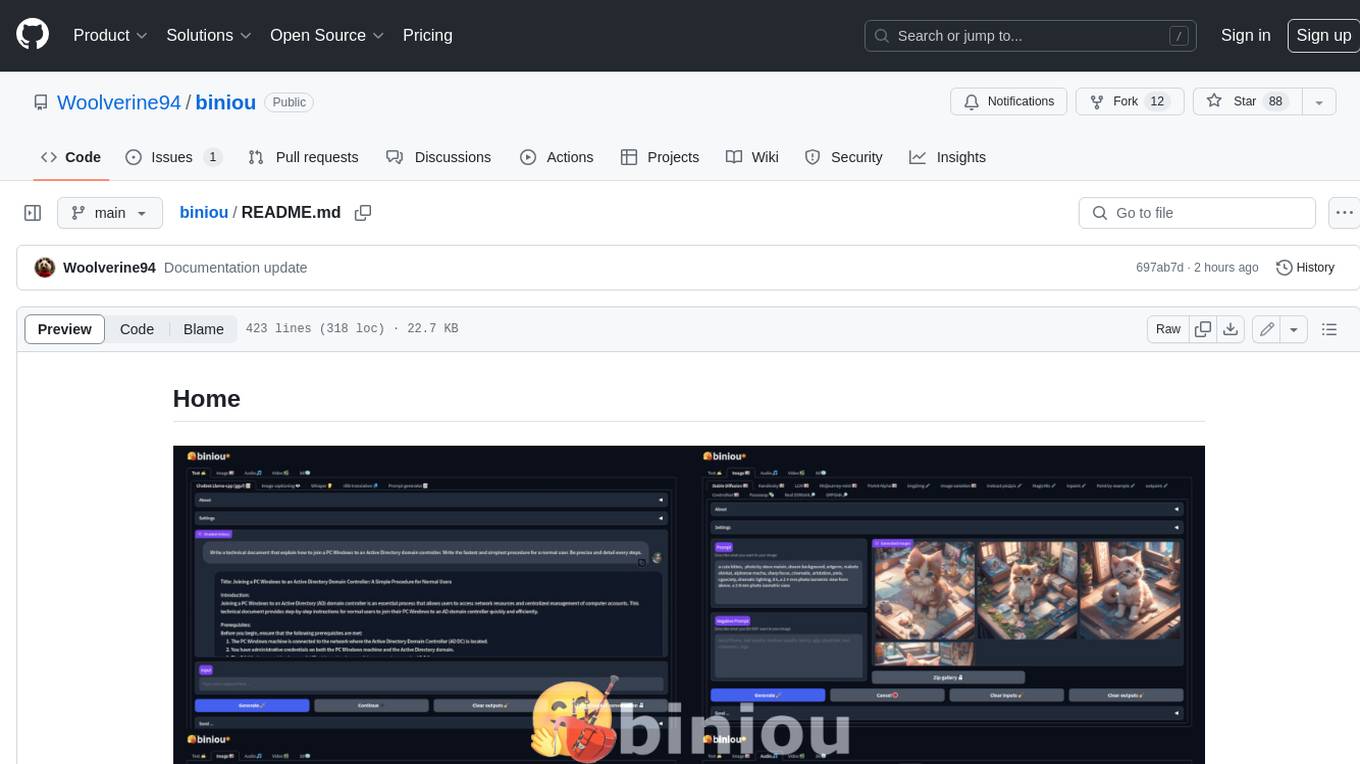

biniou

biniou is a self-hosted webui for various GenAI (generative artificial intelligence) tasks. It allows users to generate multimedia content using AI models and chatbots on their own computer, even without a dedicated GPU. The tool can work offline once deployed and required models are downloaded. It offers a wide range of features for text, image, audio, video, and 3D object generation and modification. Users can easily manage the tool through a control panel within the webui, with support for various operating systems and CUDA optimization. biniou is powered by Huggingface and Gradio, providing a cross-platform solution for AI content generation.

panko-gpt

PankoGPT is an AI companion platform that allows users to easily create and deploy custom AI companions on messaging platforms like WhatsApp, Discord, and Telegram. Users can customize companion behavior, configure settings, and equip companions with various tools without the need for coding. The platform aims to provide contextual understanding and user-friendly interface for creating companions that respond based on context and offer configurable tools for enhanced capabilities. Planned features include expanded functionality, pre-built skills, and optimization for better performance.

databend

Databend is an open-source cloud data warehouse built in Rust, offering fast query execution and data ingestion for complex analysis of large datasets. It integrates with major cloud platforms, provides high performance with AI-powered analytics, supports multiple data formats, ensures data integrity with ACID transactions, offers flexible indexing options, and features community-driven development. Users can try Databend through a serverless cloud or Docker installation, and perform tasks such as data import/export, querying semi-structured data, managing users/databases/tables, and utilizing AI functions.

deepchat

DeepChat is a versatile chat tool that supports multiple model cloud services and local model deployment. It offers multi-channel chat concurrency support, platform compatibility, complete Markdown rendering, and easy usability with a comprehensive guide. The tool aims to enhance chat experiences by leveraging various AI models and ensuring efficient conversation management.

fast-llm-security-guardrails

ZenGuard AI enables AI developers to integrate production-level, low-code LLM (Large Language Model) guardrails into their generative AI applications effortlessly. With ZenGuard AI, ensure your application operates within trusted boundaries, is protected from prompt injections, and maintains user privacy without compromising on performance.

archestra

Archestra is an enterprise-grade platform that enables non-technical users to safely leverage AI agents and MCP servers. It provides a secure runtime environment for AI interactions with sandboxing, resource controls, and prompt injection prevention. The platform supports MCP Protocol and is designed with a local-first architecture, enterprise-level security, and extensible tool system.

aide

Aide is a Visual Studio Code extension that offers AI-powered features to help users master any code. It provides functionalities such as code conversion between languages, code annotation for readability, quick copying of files/folders as AI prompts, executing custom AI commands, defining prompt templates, multi-file support, setting keyboard shortcuts, and more. Users can enhance their productivity and coding experience by leveraging Aide's intelligent capabilities.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

mcp-router

MCP Router is a desktop application that simplifies the management of Model Context Protocol (MCP) servers. It is a universal tool that allows users to connect to any MCP server, supports remote or local servers, and provides data portability by enabling easy export and import of MCP configurations. The application prioritizes privacy and security by storing all data locally, ensuring secure credentials, and offering complete control over server connections and data. Transparency is maintained through auditable source code, verifiable privacy practices, and community-driven security improvements and audits.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

pebble

Pebbling is an open-source protocol for agent-to-agent communication, enabling AI agents to collaborate securely using Decentralised Identifiers (DIDs) and mutual TLS (mTLS). It provides a lightweight communication protocol built on JSON-RPC 2.0, ensuring reliable and secure conversations between agents. Pebbling allows agents to exchange messages safely, connect seamlessly regardless of programming language, and communicate quickly and efficiently. It is designed to pave the way for the next generation of collaborative AI systems, promoting secure and effortless communication between agents across different environments.

axolotl

Axolotl is a lightweight and efficient tool for managing and analyzing large datasets. It provides a user-friendly interface for data manipulation, visualization, and statistical analysis. With Axolotl, users can easily import, clean, and explore data to gain valuable insights and make informed decisions. The tool supports various data formats and offers a wide range of functions for data processing and modeling. Whether you are a data scientist, researcher, or business analyst, Axolotl can help streamline your data workflows and enhance your data analysis capabilities.

UI-TARS-desktop

UI-TARS-desktop is a desktop application that provides a native GUI Agent based on the UI-TARS model. It offers features such as natural language control powered by Vision-Language Model, screenshot and visual recognition support, precise mouse and keyboard control, cross-platform support (Windows/MacOS/Browser), real-time feedback and status display, and private and secure fully local processing. The application aims to enhance the user's computer experience, introduce new browser operation features, and support the advanced UI-TARS-1.5 model for improved performance and precise control.

For similar tasks

airflow-code-editor

The Airflow Code Editor Plugin is a tool designed for Apache Airflow users to edit Directed Acyclic Graphs (DAGs) directly within their browser. It offers a user-friendly file management interface for effortless editing, uploading, and downloading of files. With Git support enabled, users can store DAGs in a Git repository, explore Git history, review local modifications, and commit changes. The plugin enhances workflow efficiency by providing seamless DAG management capabilities.

ai-commit

ai-commit is a tool that automagically generates conventional git commit messages using AI. It supports various generators like Bito Cli, ERNIE-Bot-turbo, ERNIE-Bot, Moonshot, and OpenAI Chat. The tool requires PHP version 7.3 or higher for installation. Users can configure generators, set API keys, and easily generate and commit messages with customizable options. Additionally, ai-commit provides commands for managing configurations, self-updating, and shell completion scripts.

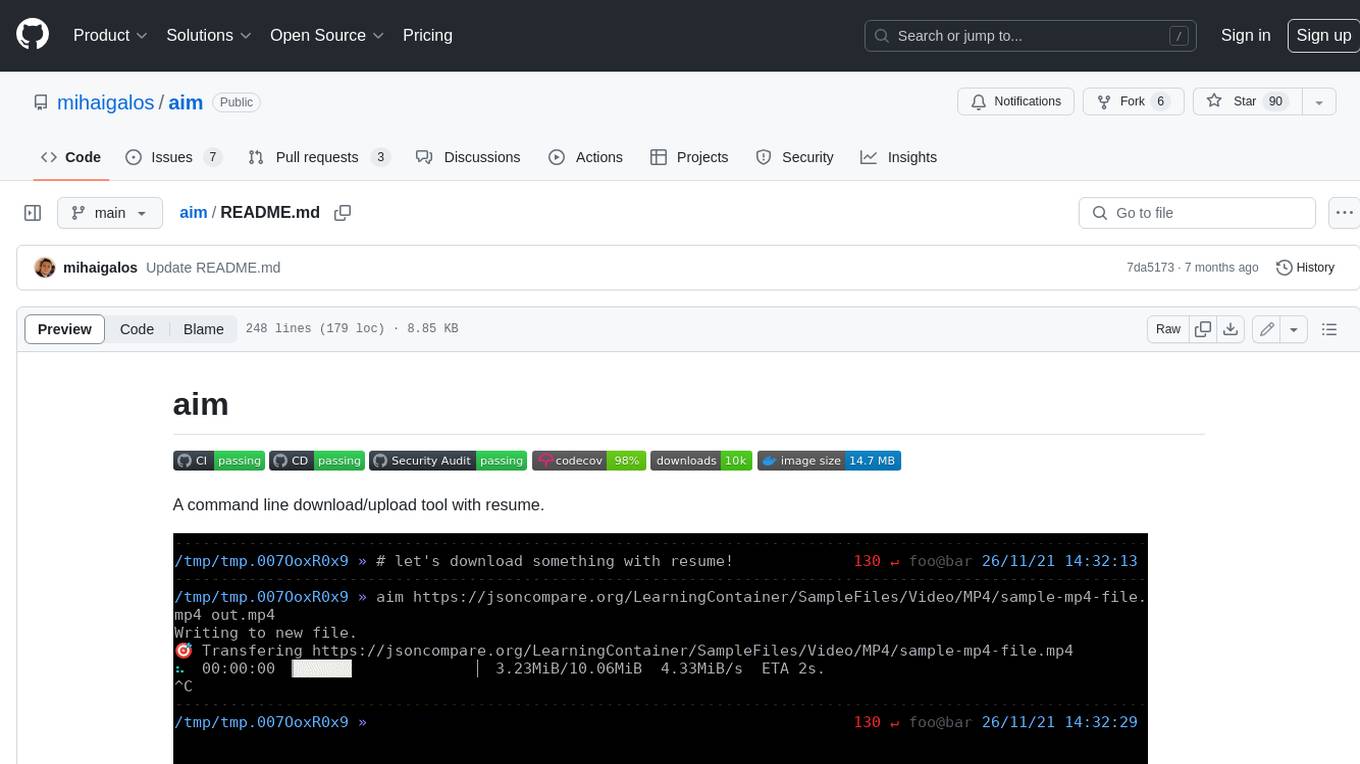

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

aiotieba

Aiotieba is an asynchronous Python library for interacting with the Tieba API. It provides a comprehensive set of features for working with Tieba, including support for authentication, thread and post management, and image and file uploading. Aiotieba is well-documented and easy to use, making it a great choice for developers who want to build applications that interact with Tieba.

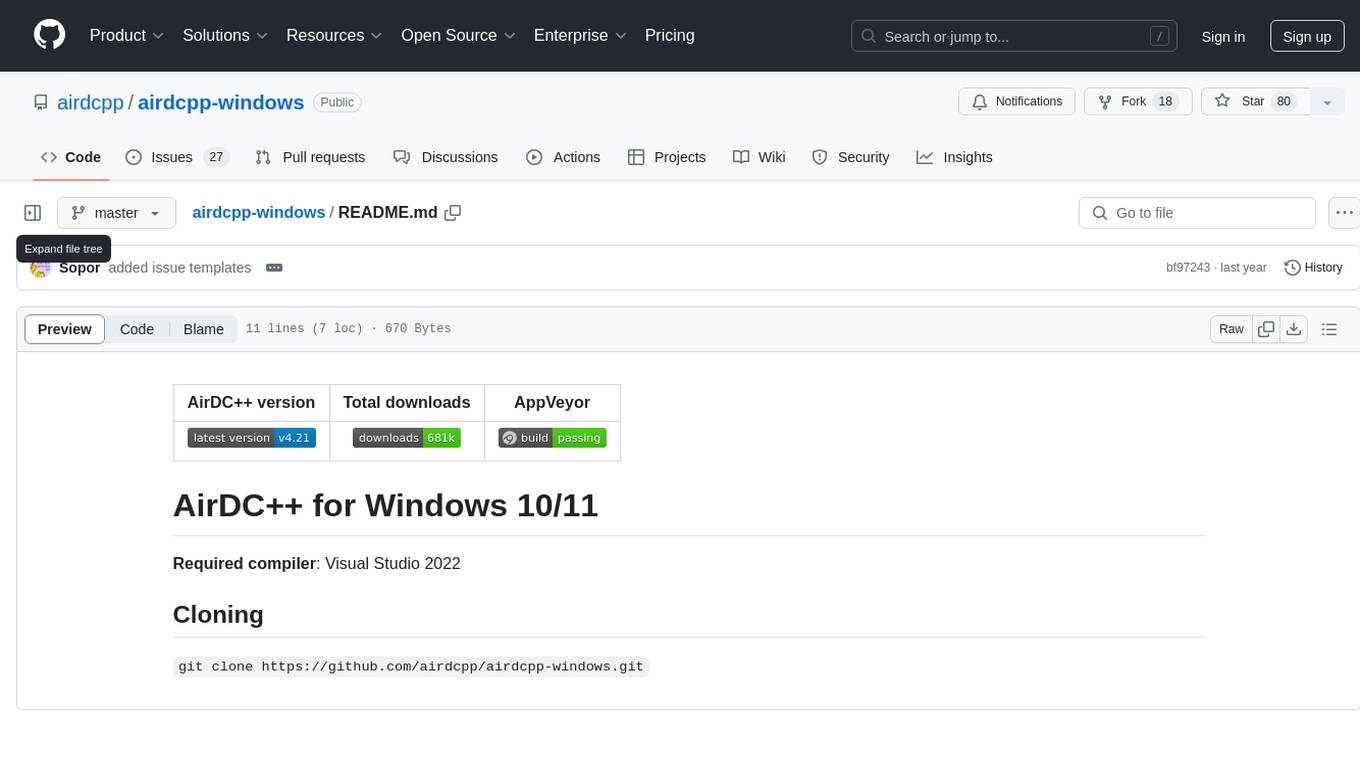

airdcpp-windows

AirDC++ for Windows 10/11 is a file sharing client with a focus on ease of use and performance. It is designed to provide a seamless experience for users looking to share and download files over the internet. The tool is built using Visual Studio 2022 and offers a range of features to enhance the file sharing process. Users can easily clone the repository to access the latest version and contribute to the development of the tool.

KEITH-MD

KEITH-MD is a versatile bot updated and working for all downloaders fixed and are working. Overall performance improvements. Fork the repository to get the latest updates. Get your session code for pair programming. Deploy on Heroku with a single tap. Host on Discord. Download files and deploy on Scalingo. Join the WhatsApp group for support. Enjoy the diverse features of KEITH-MD to enhance your WhatsApp experience.

allchat

ALLCHAT is a Node.js backend and React MUI frontend for an application that interacts with the Gemini Pro 1.5 (and others), with history, image generating/recognition, PDF/Word/Excel upload, code run, model function calls and markdown support. It is a comprehensive tool that allows users to connect models to the world with Web Tools, run locally, deploy using Docker, configure Nginx, and monitor the application using a dockerized monitoring solution (Loki+Grafana).

chat-xiuliu

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.