aiges

AI Serving framework loader

Stars: 275

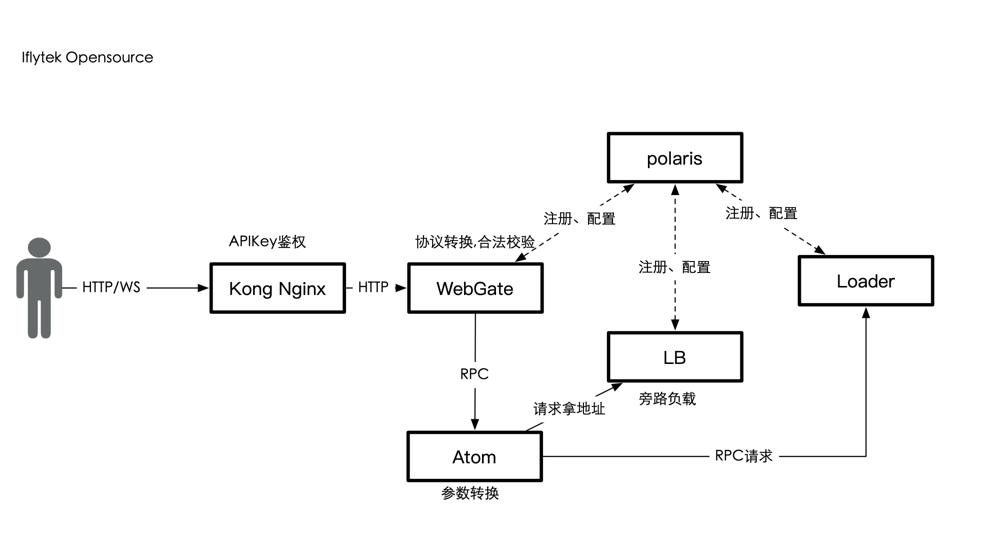

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

README:

AIGES是 Athena Serving Framework中的核心组件,它是一个个专为AI能力开发者打造的AI算法模型、引擎的通用封装工具。 您可以通过集成AIGES,快速部署AI算法模型、引擎,并托管于Athena Serving Framework,即可使用网络、分发策略、数据处理等配套辅助系统。 Athena Serving Framework 致力于加速AI算法模型、引擎云服务化,并借助云原生架构,为云服务的稳定提供多重保障。 您无需关注底层基础设施及服务化相关的开发、治理和运维,即可高效、安全地对模型、引擎进行部署、升级、扩缩、运营和监控。

☑ 支持模型推理成RPC服务(Serving框架会转成HTTP服务)

☑ 支持C代码推理

☑ 支持Python代码推理

☑ 支持once(非流式)推理、流式推理

☑ 支持配置中心,服务发现

☑ 支持负载均衡配置

☑ 支持HTTP/GRPC服务

☑ Cgo模式/GRPC模式切换 [go 和 python通信方式]

参见: 👉👉👉ase-proto

python 版本请选用 3.9+ 也可以下载我们的docker镜像

如下流程可在容器环境中进行 (无需gpu):

docker run -itd --name mnist2 -p 1889:1888 public.ecr.aws/iflytek-open/aiges-gpu:10.1-1.17-3.9.13-ubuntu1804-v3.0-alpha11 bash您也可以自己准备环境, 直接下载二进制在您自己的任何环境上运行aiges.

pip3 install aiges==0.5.0 -i https://pypi.python.org/simple

wget https://github.com/iflytek/aiges/releases/download/v3.0-alpha11/aiges_3.0-alpha11_linux_amd64.tar.gz

通过aiges创建一个名为mnist的项目

python3 -m aiges create -n mnistroot# tree mnist/

mnist/

├── Dockerfile

├── README.md

├── requirements.txt

└── wrapper

├── test_data

│ └── test.png

└── wrapper.pytar zxvf aiges_3.0-alpha11_linux_amd64.tar.gz -C mnist

首次执行:

root@505a3a0e670c:/home/aiges# ./AIservice

加载器运行方法:

- 本地模式运行

1: ./AIservice -init , 初始化配置文件 aiges.toml (若存在,则不会替换)

2: ./AIservice -m=0 , 仅用于本地模式运行

3: ./AIservice -mnist , 下载mnistdemo

- 配置中心模式 (开源计划删除)

- 更多参数选项: 请执行 ./AIservice -h此时项目结构如下

➜ mnist git:(master) ✗ tree -L 3 .

.

├── AIservice

├── Dockerfile

├── include

│ ├── type.h

│ └── wrapper.h

├── library

│ ├── libahsc.so

│ ├── libIce.so.34

│ └── libIceUtil.so.34

├── README.md

├── requirements.txt

└── wrapper

├── test_data

│ └── test.png

└── wrapper.py

4 directories, 11 files顺序执行如下:

-

export AIGES_PLUGIN_MODE=python -

./AIservice -init【会在当前目录下生成一个 aiges.toml】 -

./AIservice -m 0 -c aiges.toml -s svcName

启动引擎,此时结果如下:【注意svcName必须和aiges 的section对应,当前默认就是 svcName】

root@012d31456c50:/home/aiges/mnist# ./AIservice -m 0 -c aiges.toml -s svcName

2022/11/15 18:22:01 widgetpy.go:26: Starting Using Python :

config.toml version:

2022/11/15 18:22:01 utils.NewLocalLog success. -> LOGLEVEL:debug, FILENAME:./log/aiges.log, MAXSIZE:3, MAXBACKUPS:3, MAXAGE:3

2022/11/15 18:22:01 host2ip->ip:0.0.0.0,port:5090

2022/11/15 18:22:01 finderSwitch:0,finderSwitchErr:<nil>

2022/11/15 18:22:01 about to deal with hermes.

2022/11/15 18:22:02 NewSessionManager success.

2022/11/15 18:22:02 NewSidGenerator success.

2022/11/15 18:22:02 fn:AbleTrace,able:false

2022/11/15 18:22:02 about to deal finder.

2022/11/15 18:22:02 about to deal metrics.

2022/11/15 18:22:02 metrics is disable

2022/11/15 18:22:02 about to deal rateLimiter.

2022/11/15 18:22:02 about to deal vCpuManager.

2022/11/15 18:22:02 about to deal bvtVerifier.

2022/11/15 18:22:02 namespace not set, use default

2022/11/15 18:22:02 bvt is disable

header pass list: []

2022-11-15T18:22:02.476+0800 [WARN] python-plugin: plugin configured with a nil SecureConfig

2022-11-15T18:22:02.477+0800 [DEBUG] python-plugin: starting plugin: path=/bin/sh args=[sh, -c, "/usr/bin/env python -m aiges.serve"]

2022-11-15T18:22:02.478+0800 [DEBUG] python-plugin: plugin started: path=/bin/sh pid=126

2022-11-15T18:22:02.478+0800 [DEBUG] python-plugin: waiting for RPC address: path=/bin/sh

2022-11-15T18:22:02.653+0800 [DEBUG] python-plugin: using plugin: version=1

2022-11-15T18:22:02.655+0800 [DEBUG] python-plugin.stdio: received EOF, stopping recv loop: err="rpc error: code = Unimplemented desc = Method not found!"

2022-11-15T18:22:02.656+0800 [DEBUG] python-plugin.sh: root:wrapperInit:107 - INFO: Importing module from wrapper.py: wrapper

2022-11-15T18:22:02.657+0800 [DEBUG] python-plugin.sh: root:wrapperInit:119 - ERROR: module 'wrapper' has no attribute 'Wrapper'

2022/11/15 18:22:02 grpc.go:20: Call WrapperInit Failed...ret: 30001这是因为我们的 wrapper还未准备好

下载 mnist demo:

./AIservice -mnist

默认会下载 https://github.com/iflytek/aiges_demo.git 项目,并解压到当前目录 aiges_demo

如果此命令长时间没有反应,可能是因为GFW问题, 可手动下载 https://github.com/iflytek/aiges_demo/archive/refs/tags/v1.0.0.zip

unzip 解压到当 aiges_demo目录中即可【注意手动解压可能嵌套了一层 aiges_demo_1.0.0目录】。

删除 当前mnist下默认生成的wrapper目录,替换上述的demo

rm -r wrappercp -ra aiges_demo/mnist/wrapper/ ./cp -ra aiges_demo/mnist/requirements.txt mnist/pip install -r requirements.txtexport AIGES_PLUGIN_MODE=pythonexport PYTHONPATH=/home/aiges/mnist/wrapper再次运行引擎 ./AIservice -m 0 -c aiges.toml -s svcName

标准输出如下:

2022/11/15 21:26:29 widgetpy.go:26: Starting Using Python :

config.toml version:

2022/11/15 21:26:29 utils.NewLocalLog success. -> LOGLEVEL:debug, FILENAME:./log/aiges.log, MAXSIZE:3, MAXBACKUPS:3, MAXAGE:3

2022/11/15 21:26:29 host2ip->ip:0.0.0.0,port:5090

2022/11/15 21:26:29 finderSwitch:0,finderSwitchErr:<nil>

2022/11/15 21:26:29 about to deal with hermes.

2022/11/15 21:26:30 NewSessionManager success.

2022/11/15 21:26:30 NewSidGenerator success.

2022/11/15 21:26:30 fn:AbleTrace,able:false

2022/11/15 21:26:30 about to deal finder.

2022/11/15 21:26:30 about to deal metrics.

2022/11/15 21:26:30 metrics is disable

2022/11/15 21:26:30 about to deal rateLimiter.

2022/11/15 21:26:30 about to deal vCpuManager.

2022/11/15 21:26:30 about to deal bvtVerifier.

2022/11/15 21:26:30 namespace not set, use default

2022/11/15 21:26:30 bvt is disable

header pass list: []

[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.

[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.

- using env: export GIN_MODE=release

- using code: gin.SetMode(gin.ReleaseMode)

[GIN-debug] GET /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] POST /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] PUT /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] PATCH /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] HEAD /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

2022-11-15T21:26:30.116+0800 [WARN] python-plugin: plugin configured with a nil SecureConfig

2022-11-15T21:26:30.116+0800 [DEBUG] python-plugin: starting plugin: path=/bin/sh args=[sh, -c, "/usr/bin/env python -m aiges.serve"]

[GIN-debug] OPTIONS /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] DELETE /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] CONNECT /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] TRACE /v1/svcName --> github.com/xfyun/aiges/httproto.(*Server).ginHandler.func1 (3 handlers)

[GIN-debug] GET /test.json --> github.com/xfyun/aiges/httproto.getDemo (3 handlers)

2022-11-15T21:26:30.116+0800 [DEBUG] python-plugin: plugin started: path=/bin/sh pid=1081

2022-11-15T21:26:30.116+0800 [DEBUG] python-plugin: waiting for RPC address: path=/bin/sh

[GIN-debug] GET /swagger/*any --> github.com/swaggo/gin-swagger.CustomWrapHandler.func1 (3 handlers)

2022-11-15T21:26:30.242+0800 [DEBUG] python-plugin: using plugin: version=1

2022-11-15T21:26:30.244+0800 [DEBUG] python-plugin.stdio: received EOF, stopping recv loop: err="rpc error: code = Unimplemented desc = Method not found!"

2022-11-15T21:26:30.245+0800 [DEBUG] python-plugin.sh: root:wrapperInit:107 - INFO: Importing module from wrapper.py: wrapper

2022-11-15T21:26:31.642+0800 [DEBUG] python-plugin.sh: root:_check_path:151 - WARNING: <class 'FileNotFoundError'>

2022-11-15T21:26:31.643+0800 [DEBUG] python-plugin.sh: root:wrapperInit:112 - INFO: User Wrapper newed Success.. starting call user init functions...

2022-11-15T21:26:31.643+0800 [DEBUG] python-plugin.sh: root:wrapperInit:85 - INFO: Initializing ...

2022-11-15T21:26:31.666+0800 [DEBUG] python-plugin.sh: root:wrapperSchema:141 - INFO: Entering warpperSchema ...

2022-11-15T21:26:31.672+0800 [DEBUG] python-plugin.sh: root:test_value:233 - WARNING: test_data/0.png not exist.. check

2022-11-15T21:26:31.672+0800 [DEBUG] python-plugin.sh: root:schema:434 - INFO: Genrating Schema...

aiService.Init: init success!

2022/11/15 21:26:31 about to x.run

2022/11/15 21:26:31 about init interceptor

2022/11/15 21:26:31 success init interceptor

2022/11/15 21:26:31 about to call grpc.NewServer(opts...),maxRecv:4194304,maxSend:4194304

2022/11/15 21:26:31 about to call utils.RegisterXsfCallServer(x.grpcserver, srv)

2022/11/15 21:26:31 about to call reflection.Register(x.grpcserver)

2022/11/15 21:26:31 about to exec userCallback

2022/11/15 21:26:31 deal with UserHighPriority

2022/11/15 21:26:31 deal with UserNormalPriority

2022/11/15 21:26:31 deal with UserLowPriority

2022/11/15 21:26:31 about to call x.grpcserver.Serve

2022/11/15 21:26:31 about to check if the grpc service([::]:5090) is started

2022/11/15 21:26:31 grpc server([::]:5090) started successfully

2022/11/15 21:26:31 bvtVerifierInst is disable,ignore...

2022/11/15 21:26:31 about to call finderadapter.Register([::]:5090)

2022/11/15 21:26:31 about to exec fcDelayInst

2022/11/15 21:26:31 about to call fc delay task

2022/11/15 21:26:31 blocking for grpcserver.Serve默认监听 http端口是: 1888, 可从aiges.toml文件中看到

-

api地址: http://youIP:1888/v1/svcName [具体地址可以访问 http://youIP:1888 swagger进行查看]

-

post方式请求: body 部分

{

"header":{

"appid":"123456",

"uid":"39769795890",

"did":"SR082321940000200",

"imei":"8664020318693660",

"imsi":"4600264952729100",

"mac":"6c:92:bf:65:c6:14",

"net_type":"wifi",

"net_isp":"CMCC",

"status":3,

"res_id":""

},

"parameter":{

"svcName":{

"result":{

"encoding":"utf8",

"compress":"raw",

"format":"plain",

"data_type": "text"

}

}

},

"payload":{

"img":{

"encoding":"jpg",

"status":3,

"image":"iVBORw0KGgoAAAANSUhEUgAAAOEAAADgCAYAAAD17wHfAAANa0lEQVR4Xu3dr29USxyG8VpUExQOhcGRIAkGU1OFh/+gCZI/AEdSWdMEj0A2QW+CQyFRyAYFloS9eTf5hsm7c2a295w9c3484hPgnrvdbbvPzrYzc87J5eXl9uLiYvvmzRsAI3r9+vV2s9lsTxTgyckJgAZubm62JyrSDwAYx+fPn4kQaGkX4ePHj/cOABgHIyHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0BgRAo0RIdAYEQKNESHQGBECjREh0NguwsePH+8dADAORkKgMSIEGiNCoDEiBBojQqAxIgQaI0KgMSIEGiNCoDEiBBojQqCxRUT49OnT7YsXL7KeP3++1ed3fX29vbq6ynr37l3V27dvs3Ts/Px8++TJk05am/vw4cNOp6ene58T1mMRESq2ly9fdrq8vNxuNpvdJ5vz8ePH7YcPHzpFwGnI6X97/fr19tmzZ7vgcxTio0ePOt2/f3/vc8J6LD5CjVKK0MP7PxHmpBHmECFqiHCACF+9erUXX4oIUUKEA0TISIg+iHCACEsjIRGihgiJEI0tIkI92c/OzrIUqKYRbm5uOik0j8sp5BwdU+yaavC4iAyHWESEGsm+ffuW9fXr1+3t7e32z58/29+/fx/Fr1+/tj9//uyk+//x40cnTZ/4C8Na6AmodxNd9AKpF1PNtfr8a/Dnw9wsIkJ9M/2JnVIkf//+bab2AqAXiy9fvqySXiT1Itrl06dPu3caik2LMnL8+TA3i4/w+/fvk4hQjyFn7RGKh0eEM0SE8+bhEeEMEeG8eXhEOENEOG8eHhHOEBHOm4dHhDOkCEvTAHqiexhjSiOMKY30T0WoaYoSf+Km/P+dE49QUxIeok9R+HSFPx/mZhERXlxc7O3/S2lC3b+xqdJkvGi+ym+T0hfRn1wpjcZ6keji84opharbl9Q+xrHFO44u/v+n9Pn518O/Nu/fv9/tCc3Rns579+7tPSfmZBER6tXQ9/AFrabR2xmF2kVL29LbxGbgoGVpfpuUQvWlbimFWBqpNVL66Jkq3XaMkV6Pr0SheDzhkM+vRi90CtFfHGPFEhFOgH4u8B31KUXkr6ApRerL3VLaJeG3CYpQTwQPL6W3XKWQahGVbnvI7fvy6FyMhl2Ps2+E+hoqwhyFSIQTQITl2/fl0bk0QkeEdUR4QISl2xPhvwgdER6GCA+IkJFwP7xchOnox0h4OCIkwiqPzqUjob8VFSIsW0SEsZ/Q4wuKyE9VmFKkur1+Syr+99Lt9bWrTWFop4D/aj7lTzpX+u2j+P8/Nr0I+OeU6hth/HY0ogv6N78dnQhtmn3w4EGn0oZb0XG/zV1un67m0AtC+meM0ulu/5RCL420MVLHi0JO7fZ9+ZSM04uYf15Bn7vmCn2lUMqjcwpO9+OPS5gnxMFKT5SY5/RTY8TpMWo780vzpEPwZWJOL1L6/HL0+LQiSLH5CHnoOwGNeHqh8fhD6Ws7B0Q4Aj1JFFKOzr5d+5lWo7H+vxx97Nrt+/IXBhen8MjR50+EZUQ4AiIkwpJdhFoQ6wcwHCIkwhJGwhEQIRGWEOEIiJAIS4hwBGNEWJon7ct/W+oUoT+uoM9fEXp4d4kwpihy9NwlQlTpSVJSm4eM2LrUbt+XPn6JXgQUYy5Y/b4hVs108RVAKUWqUVAjrn/s4F/vuSFC9KYQfJI+nayvrerRiqJUeuJmTfRrlNf9+IuPxGg7Z0SI3vpGGNHlRIQKzt/Kpz93zhkRordjR6ileUQIFBw7QkZCoIII+yFC9EaE/RAheot5ypirTP+uY5pq8PBKEcapEvV33ZafCbF6/qT3ALTzXcH4VEOobeqNzdEh/h0bp2Nts09PBH+8c0OEqFJsvtE56LjOgF5allaL0Dcpp/SWNu5nqYgQVYdEqNj8tBuhFqH/HOmIEKtHhMdFhKgiwuMiQlQR4XERIaqI8LiIEFU+JeDTA8eOUNul/DEtCRGiSiFoT1/QeUZFf9exmAvUSYDvShH6/sCUJvyXMCFfQoSo0oS5X3g16JhWt/joloo5xDjZb/p3xavJ+JIl7BksIUJU6fnh8d01wi61CHViYyLE6h07wrgOfQ4RAifHj9DDc0SI1WsZISMhcHL8CHk7SoSo0Pk9/bqMQc+dWoQ+LeFTFBGh/swhQiyezi3qT/yg1Sq60rA218ZmWxcxddGFPD3eoNHU9yi6JewZLCFC7GLTxLif3j5OcR874/XWMcejcxpJdR/aZe90H/541oYIsQvE4/MIPTzn4XmESz6Ddl9ECCJsjAjRNEKtDfXHszZEiN4RenSOCMuIEL0jrIVIhGVEiN0cnf/WMmgvXy1Cj87p+UWE3YgQu32BPn+XTsbXLuSpucLNZpOlvYYKbckn7+2LCLF7u+jL0dJlabUIdeJfPZFyFKJGPL9P/EOE2I2EHt8xIvRTYyzlDNp9ESGIsLFdhPrB3A9gPYiwLUZCEGFjRAgibIwIMViEPj0h2gZFhGVEuAL+pPcALi8v90awEJc98wn6dKL+48ePu2mOHD23tOrGHxP+IcIV8PA8wtpFPrVp1+NLI3z//n1x1Q0T8mVEuAIe3l0jrI2EGklL60+JsIwIV8DDu2uEh4yERPj/EeEKeHhDR1gaCfVLGSIsI8IV8PDGjJCRsI4IV8DDI8JpIUJsr6+v98ILmuc7JMI4c5rj7WgdEa6AJuP1Pc7RXJ5GQQXlJ+aN+BSjT8Knk/H6GLof3ycYYsRFHhGugL6/vhImXRFTO4O2YtOkfY6eQLpgqN8nDkeEKxARpjvm038TYVtEuAK5CNMQibAtIlyBrggZCaeBCFeACKeNCFeACKeNCFegdH1BHatFqGkIPVFyFKimQPw+cTgiXABtI/JJ8qBjCkVzgV20adfnB9N5Qi3Qjr2BTiHrfvwx4XBEuACK4Pz8PEvHbm9v90a3lGLzy1inl7PWSNd1JV2tlNGFRP0x4XBEuACHRuhLzsIhEXYtSxOdKt8fEw5HhAtAhPNGhAtAhPNGhAtAhPNGhAtAhPNGhAug31L6df+Cfqup6wvWIuyi45qeIMLjIcIZOD097aTjV1dXuwl13+sXFJNPS6Q0VxirX5xur+sL6r40FZHDfsF+iHAGFIBvlA06/unTp91ol6PVMBrNPLyUAtYpLnQS3xyNgv6YMBwinIFDI1RwOX0i1McmwuMiwhkgwmUjwhkgwmUjwhkgwmUjwhkgwmUjwgVQLH0j9PjSCDUX6PeJ4RDhDGhXvOYCdZJdp71+mucrrXrRfsEuOq6T/2pVjF9bMHB9weMiwhnQN6l0mnqF5KNbqjRK6pi+/5pw90l40WoYJuOPiwhnQN8kDy89TX3fCLU7XsFpxMshwuMiwhkgwmXbRagFwH4A00GEy8ZIOANEuGxEOANEuGxEOAPaZhTB5WiqwcNLaVOvYsvRMSJsiwgnIKYCcnRcI1ZpHrA2Ga85RoXmZ+GOy6Npv6BC872KgQiPiwgnQLH56BN0vHbe0Bp9fxXa2dnZHp0CI+4HbRDhBIwRYQTnXr58SYSNEeEEtI5Q56Hxx4TxEOEEtI6QkbAtIpwAIlw3IpwAIlw3IpyBvhFqOiKCy+FnwraIcATaAR/zfk7HtXm2tFWpNg+oyXzN92lvodOeQ60NLu3OZx6wLSIcQS1CRVRa1VKLUBGXLuLJ281pI8IRHBKhh0eE60GEIyBClBDhCIgQJUQ4AiJECRGOgAhRQoQjiMuK+dSA6HjfqyopwgguhwinjQhHoNUqfi7PoPN9xlygX6AzeHRO+wW1VclXwwS9APhjwnQQ4Qi0KkUT57GJNqWRSqOdh3UX+tga7TQp73QK+7iYKKaJCEegCD2+oSPU0jMFl0OE00aEIyBClBDhCIgQJUQ4AiJECRGOgAhRQoQjiAh9/i4Q4boR4QBKAeiYrv9X2qp0yFxgCRHOGxEOQPNxz58/39FVbdM/dUwrWkqT8bUVMTVEOG9EOICIUOG5WoSHnEG7hgjnjQgHQITogwgHQITogwgHQITogwgHcNcIFV76JxGuGxEOQAF4fEHHFGFEF9J/E+G6EeEAtGdP+wJzXr16tfsiKxbFluNROV0EVPOMOYo4Nu4qRKeRmAinjQgHoNh8FUzQ11ZX0/Ww7iJ22OfomO5Hp8pQdBFjGiUn9502IhxALcLNZrMX1l3cJcIcIpw2IhwAEaKPXYT6ucEP4HBTijD3syERThsj4QCIEH0Q4QCIEH0Q4QCOHWFpG5SOpRHmMEUxbUQ4gL4RKiQf4dKRTh9DG4M155ijn+k12ik2FycYxnQR4QD6RqjQ/MKgQRcP1ZI4v08sBxEO4NgR6uzaGun8FPqBn/nmjQgHQITogwgHQITogwgHQITogwgHQITogwgHUIpQarsoFKFiy9E0BREuGxEOQJt3dQ3CHAWkr6+uIdildGJg0SoYhVbijwnzQYRAY0QINEaEQGNECDRGhEBjRAg0RoRAY4rwP1Ov0CXSgGK4AAAAAElFTkSuQmCC"

}

}

}返回响应

{

"header": {

"code": 0,

"sid": "0d9115af-0c6b-4526-a539-05b3c8aa9cfa",

"status": 3

},

"payload": {

"result": {

"compress": "raw",

"encoding": "utf8",

"format": "plain",

"seq": "0",

"status": "3",

"text":"{\"result\": 7, \"msg\": \"\识\别\结\果\为\数\字: 7\"}"

}

}

}当前默认集成了 swagger2.0 for openapi3.0.

启动后访问:

http://<yourip>:1888

如下图:

Try it out ! 可以复制上述 postman部分的 body进行请求。

可以看到识别结果返回

- 至此,单独的aiges加载器完成基本运行

由于alpha 是裁剪后,并刚刚合并了 http接口部分,很多功能还不完善,但是基本可以托管能力

目前已知问题:

-

python进程退出未做处理,需要跟随父进程自动退出

-

部分运行异常暂时没时间处理

- focus on:

- contact:

注意备注来源: 开源

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiges

Similar Open Source Tools

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

EduChat

EduChat is a large-scale language model-based chatbot system designed for intelligent education by the EduNLP team at East China Normal University. The project focuses on developing a dialogue-based language model for the education vertical domain, integrating diverse education vertical domain data, and providing functions such as automatic question generation, homework correction, emotional support, course guidance, and college entrance examination consultation. The tool aims to serve teachers, students, and parents to achieve personalized, fair, and warm intelligent education.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

goodsKill

The 'goodsKill' project aims to build a complete project framework integrating good technologies and development techniques, mainly focusing on backend technologies. It provides a simulated flash sale project with unified flash sale simulation request interface. The project uses SpringMVC + Mybatis for the overall technology stack, Dubbo3.x for service intercommunication, Nacos for service registration and discovery, and Spring State Machine for data state transitions. It also integrates Spring AI service for simulating flash sale actions.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

siliconflow-plugin

SiliconFlow-PLUGIN (SF-PLUGIN) is a versatile AI integration plugin for the Yunzai robot framework, supporting multiple AI services and models. It includes features such as AI drawing, intelligent conversations, real-time search, text-to-speech synthesis, resource management, link handling, video parsing, group functions, WebSocket support, and Jimeng-Api interface. The plugin offers functionalities for drawing, conversation, search, image link retrieval, video parsing, group interactions, and more, enhancing the capabilities of the Yunzai framework.

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

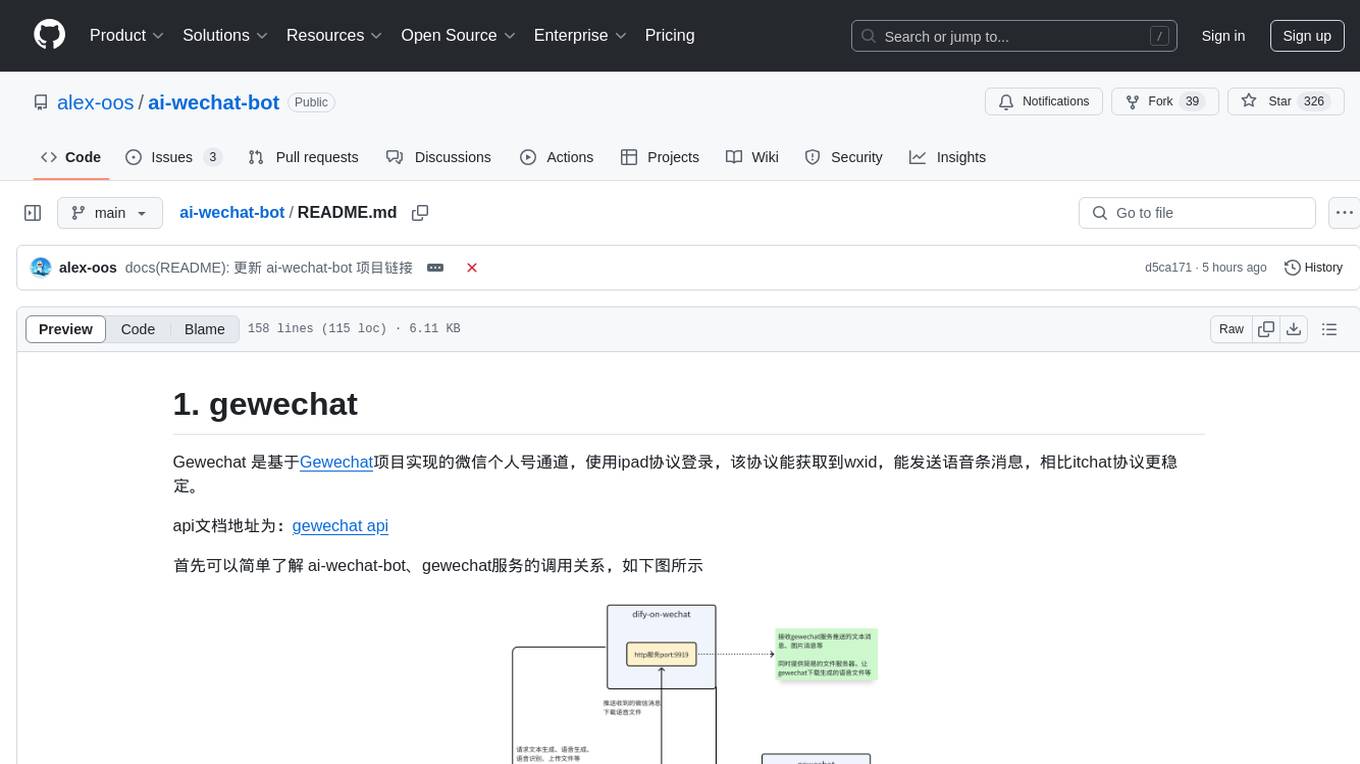

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

For similar tasks

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.