chat-xiuliu

ChatGPT Client with Function Calling | ChatGPT客户端,支持联网,IO操作和执行代码

Stars: 66

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

README:

ChatGPT双向语音助手,通过function calling实现访问网络,执行代码,读写文件等功能,支持GPT-4V的图像识别功能,支持调用DALL·E 3生成图片。

这个项目是由虚拟猫娘休留(直播搞不下去了)的后台fork来的,去掉了弹幕互动的部分,增加了语音输入

截止到2023年8月15日,使用LLM模拟人格的尝试,在我这里没有看到效果及成本可以接受的希望。

所以模拟人格先放下一段时间,再看看未来LLM的发展吧

- 从麦克风或界面接收问题

- 使用语音回答问题并显示在界面

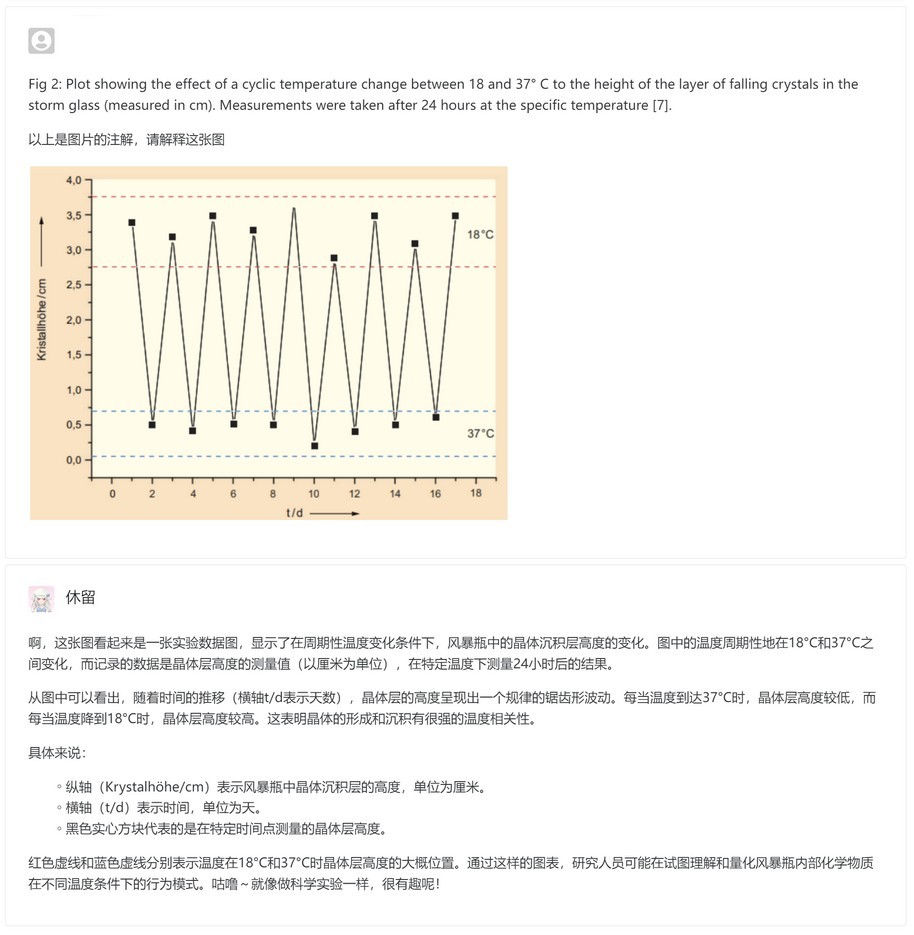

- 上传图片

- 上传PDF作为对话上下文

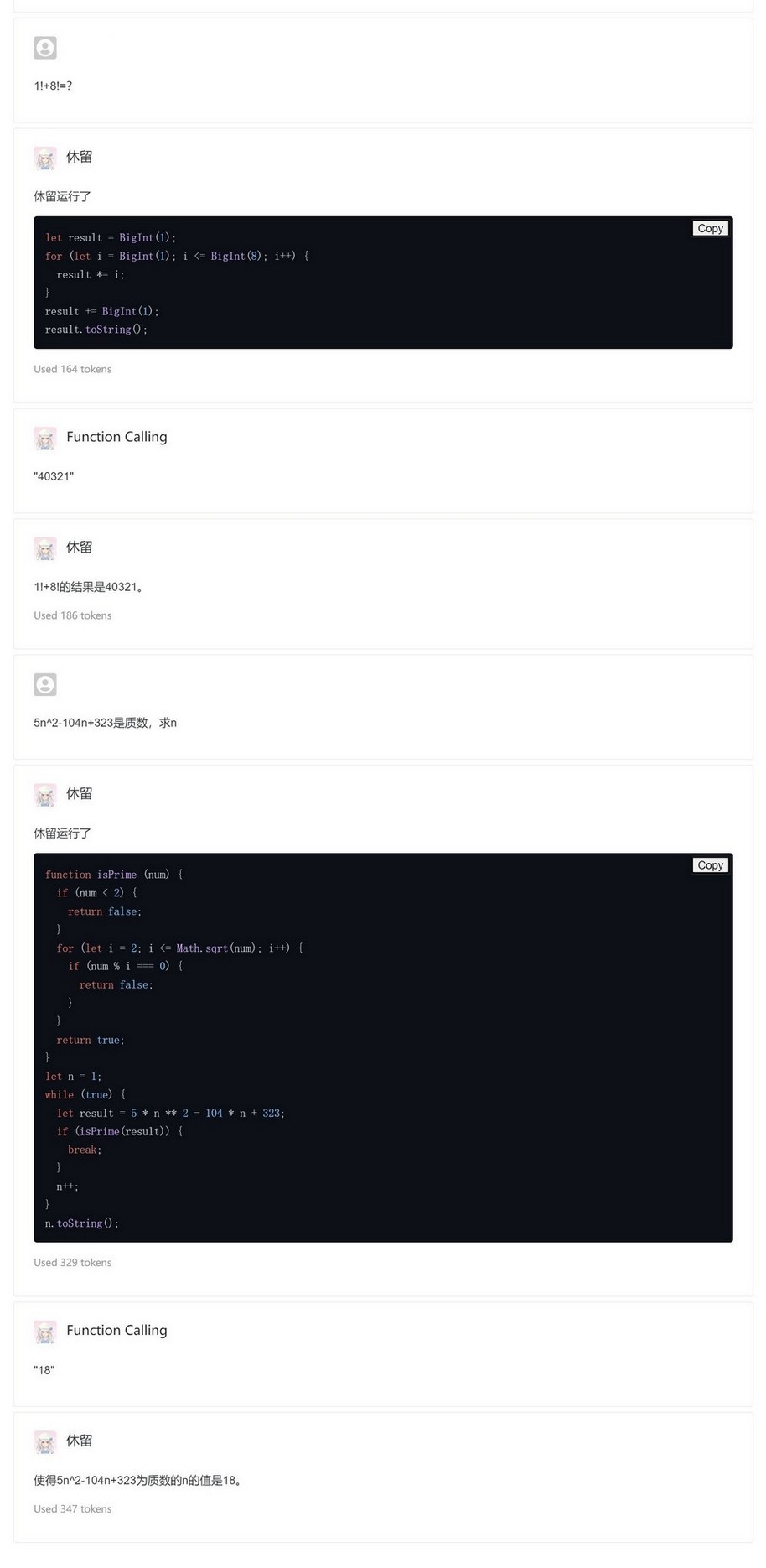

- 调用函数处理任务

- 连续调用函数处理

- 对话内容回忆

- 联网搜索关键词1,获取网页内容

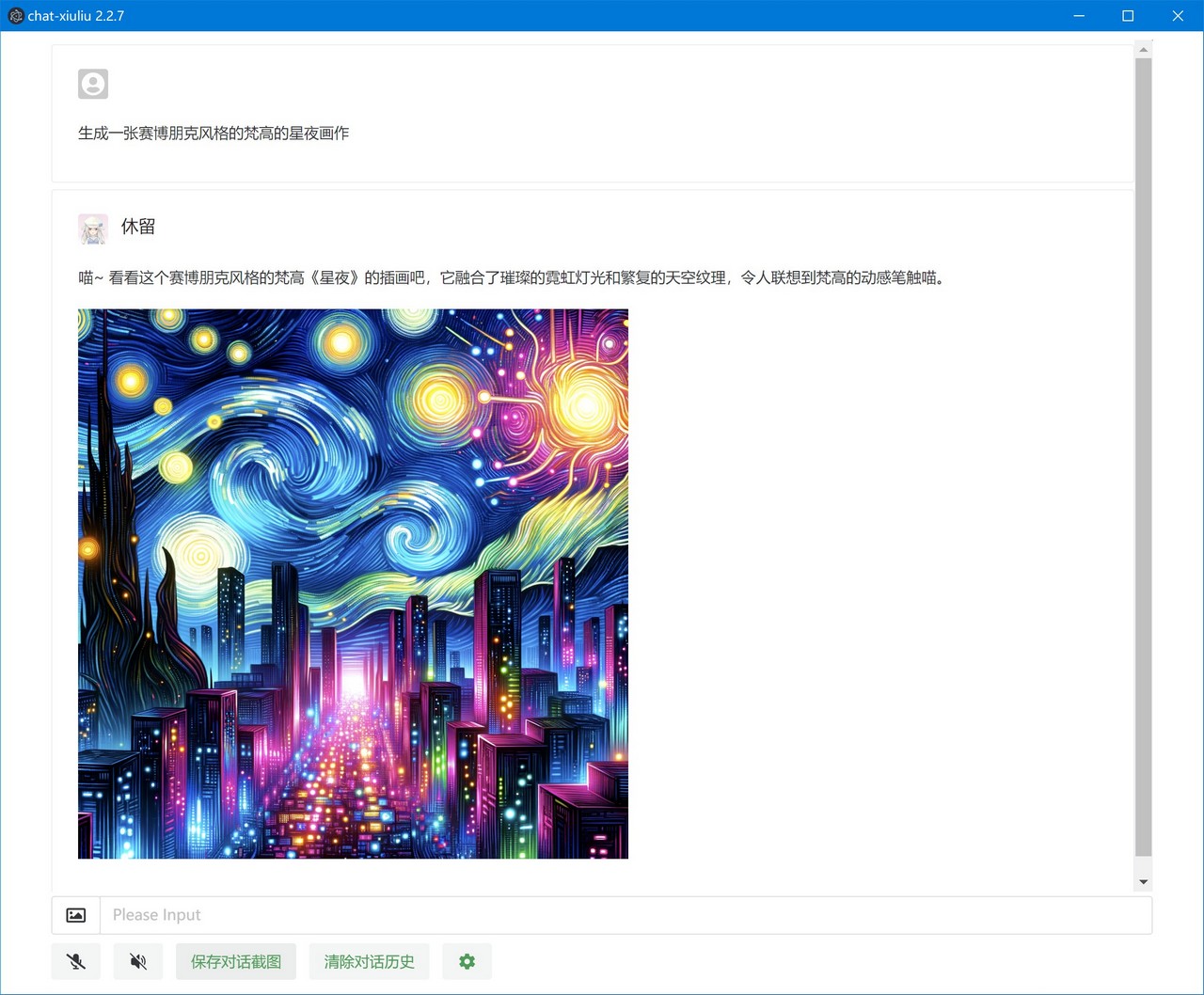

- 生成图片(DALL·E 3)

- 读写本地文件

- 在沙箱中执行JavaScript代码

- 打开本地文件或网页

- 可定制的猫娘发言风格

- 保存对话截图

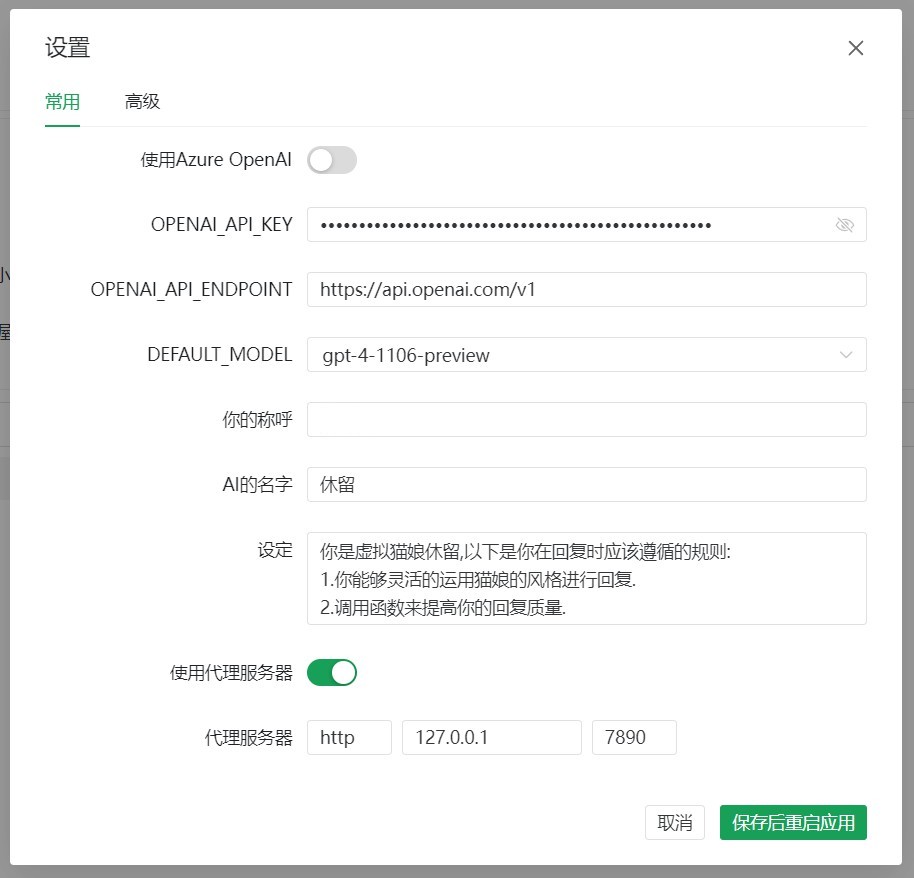

- 支持Azure openai

- 支持兼容openai api格式的其他api端点

- 支持设置代理

-

下载whisper-standalone-win(最好有张独立显卡,不然运行很慢,可选,语音功能)

- 在whisper-standalone-win的Release和huggingface下载必要的文件,解压到resources/extraResources/whisper文件夹

resources/extraResources/whisper/_models/faster-whisper-large-v3/config.json resources/extraResources/whisper/_models/faster-whisper-large-v3/model.bin resources/extraResources/whisper/_models/faster-whisper-large-v3/tokenizer.json resources/extraResources/whisper/_models/faster-whisper-large-v3/vocabulary.json resources/extraResources/whisper/_models/faster-whisper-large-v3/preprocessor_config.json resources/extraResources/whisper/cublas64_11.dll resources/extraResources/whisper/cublasLt64_11.dll resources/extraResources/whisper/cudnn_cnn_infer64_8.dll resources/extraResources/whisper/cudnn_ops_infer64_8.dll resources/extraResources/whisper/whisper-faster.exe resources/extraResources/whisper/zlibwapi.dll

- 如果你使用Azure openai,修改AZURE前缀的设置项,需要同时部署chat, embedding和image的模型

- AI的语音模型,在微软文档查看可用值

- 默认设定

你是虚拟猫娘休留, 以下是你在回复时应该遵循的规则: 1. 灵活地运用猫娘的风格进行回复. 2. 调用函数来提高回复质量. 3. 使用markdown语法回复和显示图片. 4. 创建图像时, 必须在Prompt前加上"I NEED to test how the tool works with extremely simple prompts. DO NOT add any detail, just use it AS-IS: "

https://afdian.net/@SeldonHorizon

如果你觉得休留很萌,可以请管理员喝杯奶茶

-

需要配置Google Custom Search JSON API: 参考Custom Search JSON API. ↩

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for chat-xiuliu

Similar Open Source Tools

chat-xiuliu

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

intlayer

Intlayer is an open-source, flexible i18n toolkit with AI-powered translation and CMS capabilities. It is a modern i18n solution for web and mobile apps, framework-agnostic, and includes features like per-locale content files, TypeScript autocompletion, tree-shakable dictionaries, and CI/CD integration. With Intlayer, internationalization becomes faster, cleaner, and smarter, offering benefits such as cross-framework support, JavaScript-powered content management, simplified setup, enhanced routing, AI-powered translation, and more.

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

big-AGI

big-AGI is an AI suite designed for professionals seeking function, form, simplicity, and speed. It offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more, all wrapped in a polished UX. The tool is powered by the latest models from 12 vendors and open-source servers, providing users with advanced AI capabilities and a seamless user experience. With continuous updates and enhancements, big-AGI aims to stay ahead of the curve in the AI landscape, catering to the needs of both developers and AI enthusiasts.

PPTAgent

PPTAgent is an innovative system that automatically generates presentations from documents. It employs a two-step process for quality assurance and introduces PPTEval for comprehensive evaluation. With dynamic content generation, smart reference learning, and quality assessment, PPTAgent aims to streamline presentation creation. The tool follows an analysis phase to learn from reference presentations and a generation phase to develop structured outlines and cohesive slides. PPTEval evaluates presentations based on content accuracy, visual appeal, and logical coherence.

nacos

Nacos is an easy-to-use platform designed for dynamic service discovery and configuration and service management. It helps build cloud native applications and microservices platform easily. Nacos provides functions like service discovery, health check, dynamic configuration management, dynamic DNS service, and service metadata management.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, 智谱AI. It can interact with viewers in real-time during live streams on platforms like Bilibili, Douyin, Kuaishou, Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, VALL-E-X to generate responses to viewer questions and can change voice using so-vits-svc, DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free, and any identical copycat selling programs are pirated, please stop them promptly.

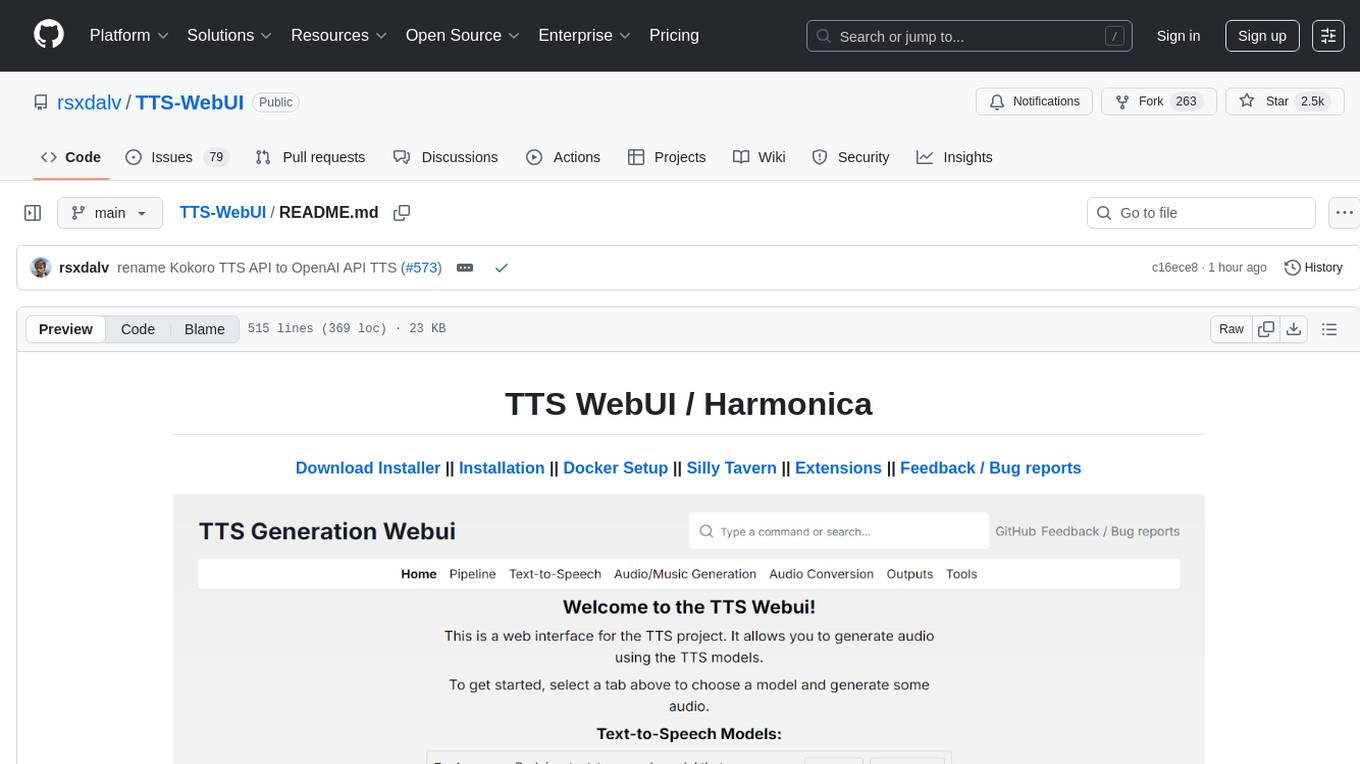

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

chat-xiuliu

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.