LLM-Powered-RAG-System

A collection of RAG systems powered by LLM.

Stars: 162

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

README:

-

langchain - ⚡ Building applications with LLMs through composability ⚡

-

llama_index - LlamaIndex (formerly GPT Index) is a data framework for your LLM applications

-

graphrag - A modular graph-based Retrieval-Augmented Generation (RAG) system

-

embedchain - Embedchain is an Open Source RAG Framework that makes it easy to create and deploy AI apps. -

-

FlagEmbedding - Dense Retrieval and Retrieval-augmented LLMs -

-

fastRAG - Efficient Retrieval Augmentation and Generation Framework -

-

llmware - Providing enterprise-grade LLM-based development framework, tools, and fine-tuned models. -

-

llm-applications - A comprehensive guide to building RAG-based LLM applications for production. -

-

DB-GPT - Revolutionizing Database Interactions with Private LLM Technology -

- pandas-ai - Chat with your data (SQL, CSV, pandas, polars, noSQL, etc). PandasAI makes data analysis conversational using LLMs (GPT 3.5 / 4, Anthropic, VertexAI) and RAG.

-

canopy - Retrieval Augmented Generation (RAG) framework and context engine powered by Pinecone -

-

autollm - Ship RAG based LLM web apps in seconds. -

-

quivr - Your GenAI Second Brain 🧠 A personal productivity assistant (RAG) ⚡️🤖 Chat with your docs (PDF, CSV, ...) & apps using Langchain, GPT 3.5 / 4 turbo, Private, Anthropic, VertexAI, Ollama, LLMs, that you can share with users ! - Ship RAG based LLM web apps in seconds. -

-

fastGPT - FastGPT is a knowledge-based platform built on the LLM, offers out-of-the-box data processing and model invocation capabilities, allows for workflow orchestration through Flow visualization! -

-

Langchain-Chatchat - Langchain-Chatchat(原Langchain-ChatGLM)基于 Langchain 与 ChatGLM 等语言模型的本地知识库问答 -

-

LangChain-ChatGLM-Webui - 基于LangChain和ChatGLM-6B等系列LLM的针对本地知识库的自动问答 -

-

anything-llm - Open-source multi-user ChatGPT for all LLMs, embedders, and vector databases. Unlimited documents, messages, and users in one privacy-focused app. -

- QAnything - Question and Answer based on Anything.

-

danswer - Ask Questions in natural language and get Answers backed by private sources. Connects to tools like Slack, GitHub, Confluence, etc. -

-

rags - Build ChatGPT over your data, all with natural language -

-

khoj - A copilot to search and chat (using RAG) with your knowledge base (pdf, markdown, org). Use powerful, online (e.g gpt4) or private, offline (e.g mistral) LLMs. -

-

Verba - Retrieval Augmented Generation (RAG) chatbot powered by Weaviate -

-

llm-app - LLM App templates for RAG, knowledge mining, and stream analytics. Ready to run with Docker,⚡in sync with your data sources. -

-

casibase - ⚡️Open-source AI LangChain-like RAG (Retrieval-Augmented Generation) knowledge database with web UI and Enterprise SSO⚡️ -

-

trt-llm-rag-windows - A developer reference project for creating Retrieval Augmented Generation (RAG) chatbots on Windows using TensorRT-LLM -

-

GPT-RAG - GPT-RAG core is a Retrieval-Augmented Generation pattern running in Azure, using Azure Cognitive Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences. -

-

rag-demystified - An LLM-powered advanced RAG pipeline built from scratch -

-

cody - Cody is an AI code assistant that uses advanced search and codebase context to help you write and fix code. -

-

adrenaline - Instant answers to any programming question -

-

repo-chat - Use AI to ask questions about any GitHub repo. -

-

LARS - An application for running LLMs locally on your device, with your documents, facilitating detailed citations in generated responses. -

- privateGPT - Interact with your documents using the power of GPT, 100% privately, no data leaks

- localGPT - Chat with your documents on your local device using GPT models. No data leaves your device and 100% private.

- ChatFiles - Document Chatbot

- pdfGPT - PDF GPT allows you to chat with the contents of your PDF file by using GPT capabilities. The most effective open source solution to turn your pdf files in a chatbot!

-

chatd - Chat with your documents using local AI -

- IncarnaMind - Connect and chat with your multiple documents (pdf and txt) through GPT 3.5, GPT-4 Turbo, Claude and Local Open-Source LLMs

-

ArXivChatGuru - Use ArXiv ChatGuru to talk to research papers. This app uses LangChain, OpenAI, Streamlit, and Redis as a vector database/semantic cache. -

-

vanna - 🤖 Chat with your SQL database 📊. Accurate Text-to-SQL Generation via LLMs using RAG 🔄. -

-

txtai - 💡 All-in-one open-source embeddings database for semantic search, LLM orchestration and language model workflows -

-

infinity - The AI-native database built for LLM applications, providing incredibly fast vector and full-text search -

-

postgresml - The GPU-powered AI application database. -

- lancedb - Developer-friendly, serverless vector database for AI applications. Easily add long-term memory to your LLM apps!

-

sparrow - Data extraction with ML and LLM -

-

fastembed - Fast, Accurate, Lightweight Python library to make State of the Art Embedding -

-

self-rag - SELF-RAG: Learning to Retrieve, Generate and Critique through Self-reflection -

- instructor - Your Gateway to Structured Outputs with OpenAI

-

swirl-search - Swirl is open source software that simultaneously searches multiple content sources and returns AI ranked results. -

-

kernel-memory - Index and query any data using LLM and natural language, tracking sources and showing citations. -

-

RAGFoundry - Framework for specializing LLMs for retrieval-augmented-generation tasks using fine-tuning. -

- chatgpt-retrieval-plugin - The ChatGPT Retrieval Plugin lets you easily find personal or work documents by asking questions in natural language.

-

RAGxplorer - Open-source tool to visualise your RAG 🔮 -

-

swiftide - Fast, streaming indexing and query library for AI (RAG) applications, written in Rust -

-

ragas - Evaluation framework for your Retrieval Augmented Generation (RAG) pipelines -

- Awesome-LLM-RAG - This repo aims to record advanced papers of Retrieval Agumented Generation (RAG) in LLMs.

- Building RAG-based LLM Applications for Production

- A-Guide-to-Retrieval-Augmented-LLM

- 一文详谈20多种RAG优化方法

- rag-resources - A collection of curated RAG (Retrieval Augmented Generation) resources.

- RAG-Survey

- Awesome-LLM-RAG-Application - the resources about the application based on LLM with RAG pattern

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Powered-RAG-System

Similar Open Source Tools

LLM-Powered-RAG-System

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

awesome-agents

Awesome Agents is a curated list of open source AI agents designed for various tasks such as private interactions with documents, chat implementations, autonomous research, human-behavior simulation, code generation, HR queries, domain-specific research, and more. The agents leverage Large Language Models (LLMs) and other generative AI technologies to provide solutions for complex tasks and projects. The repository includes a diverse range of agents for different use cases, from conversational chatbots to AI coding engines, and from autonomous HR assistants to vision task solvers.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

postiz-app

Postiz is an ultimate AI social media scheduling tool that offers everything you need to manage your social media posts, build an audience, capture leads, and grow your business. It allows you to schedule posts with AI features, measure work with analytics, collaborate with team members, and invite others to comment and schedule posts. The tech stack includes NX (Monorepo), NextJS (React), NestJS, Prisma, Redis, and Resend for email notifications.

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

awesome-langchain

LangChain is an amazing framework to get LLM projects done in a matter of no time, and the ecosystem is growing fast. Here is an attempt to keep track of the initiatives around LangChain. Subscribe to the newsletter to stay informed about the Awesome LangChain. We send a couple of emails per month about the articles, videos, projects, and tools that grabbed our attention Contributions welcome. Add links through pull requests or create an issue to start a discussion. Please read the contribution guidelines before contributing.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

complexity

Complexity is a community-driven, open-source, and free third-party extension that enhances the features of Perplexity.ai. It provides various UI/UX/QoL tweaks, LLM/Image gen model selectors, a customizable theme, and a prompts library. The tool intercepts network traffic to alter the behavior of the host page, offering a solution to the limitations of Perplexity.ai. Users can install Complexity from Chrome Web Store, Mozilla Add-on, or build it from the source code.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

TEN-Agent

TEN Agent is an open-source multimodal agent powered by the world’s first real-time multimodal framework, TEN Framework. It offers high-performance real-time multimodal interactions, multi-language and multi-platform support, edge-cloud integration, flexibility beyond model limitations, and real-time agent state management. Users can easily build complex AI applications through drag-and-drop programming, integrating audio-visual tools, databases, RAG, and more.

Folo

Folo is a content organization tool that creates a noise-free timeline for users. It allows sharing lists, exploring collections, and distraction-free browsing. Users can subscribe to feeds, curate favorites, and utilize AI-powered features like translation and summaries. Folo supports various content types such as articles, videos, images, and audio. It introduces an ownership economy with $POWER tipping for creators and fosters a community-driven experience. The tool is under active development, welcoming feedback from users and developers.

stable-pi-core

Stable-Pi-Core is a next-generation decentralized ecosystem integrating blockchain, quantum AI, IoT, edge computing, and AR/VR for secure, scalable, and personalized solutions in payments, governance, and real-world applications. It features a Dual-Value System, cross-chain interoperability, AI-powered security, and a self-healing network. The platform empowers seamless payments, decentralized governance via DAO, and real-world applications across industries, bridging digital and physical worlds with innovative features like robotic process automation, machine learning personalization, and a dynamic cross-chain bridge framework.

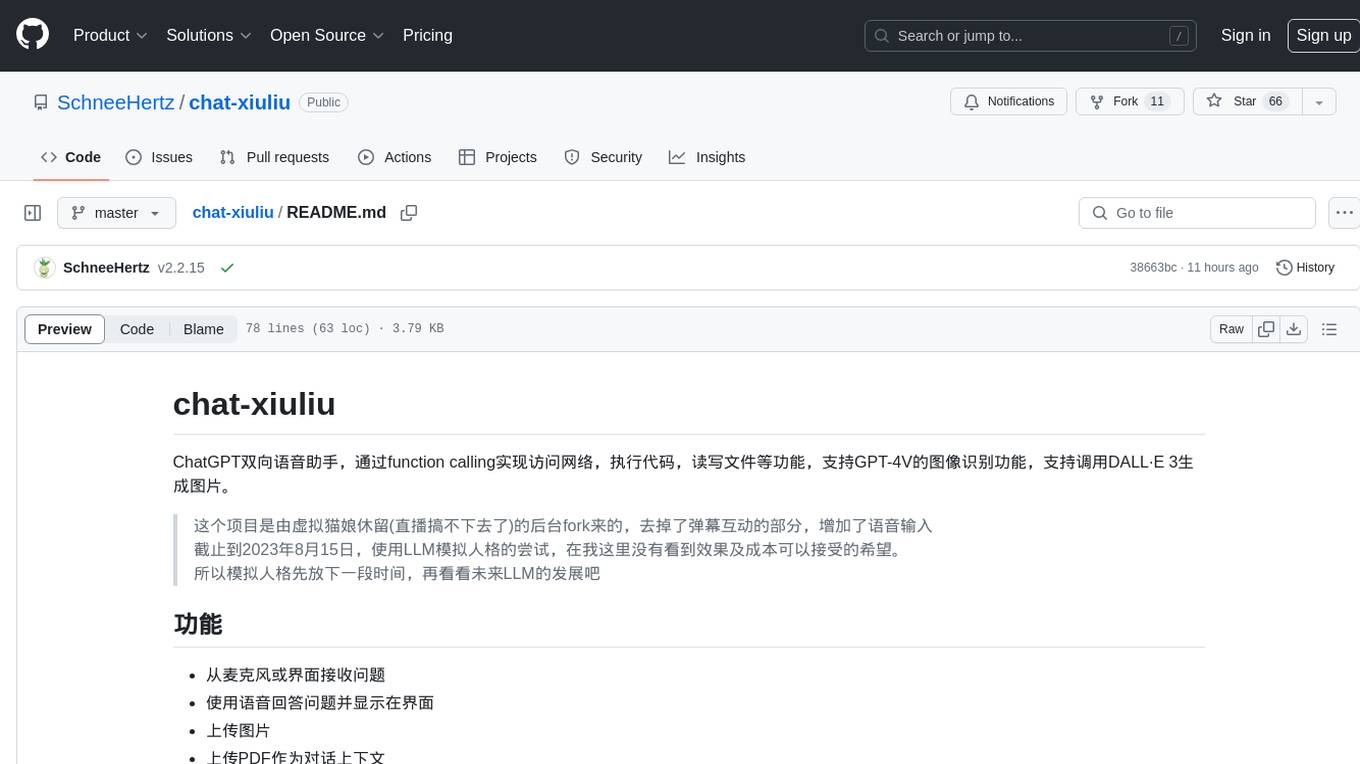

chat-xiuliu

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

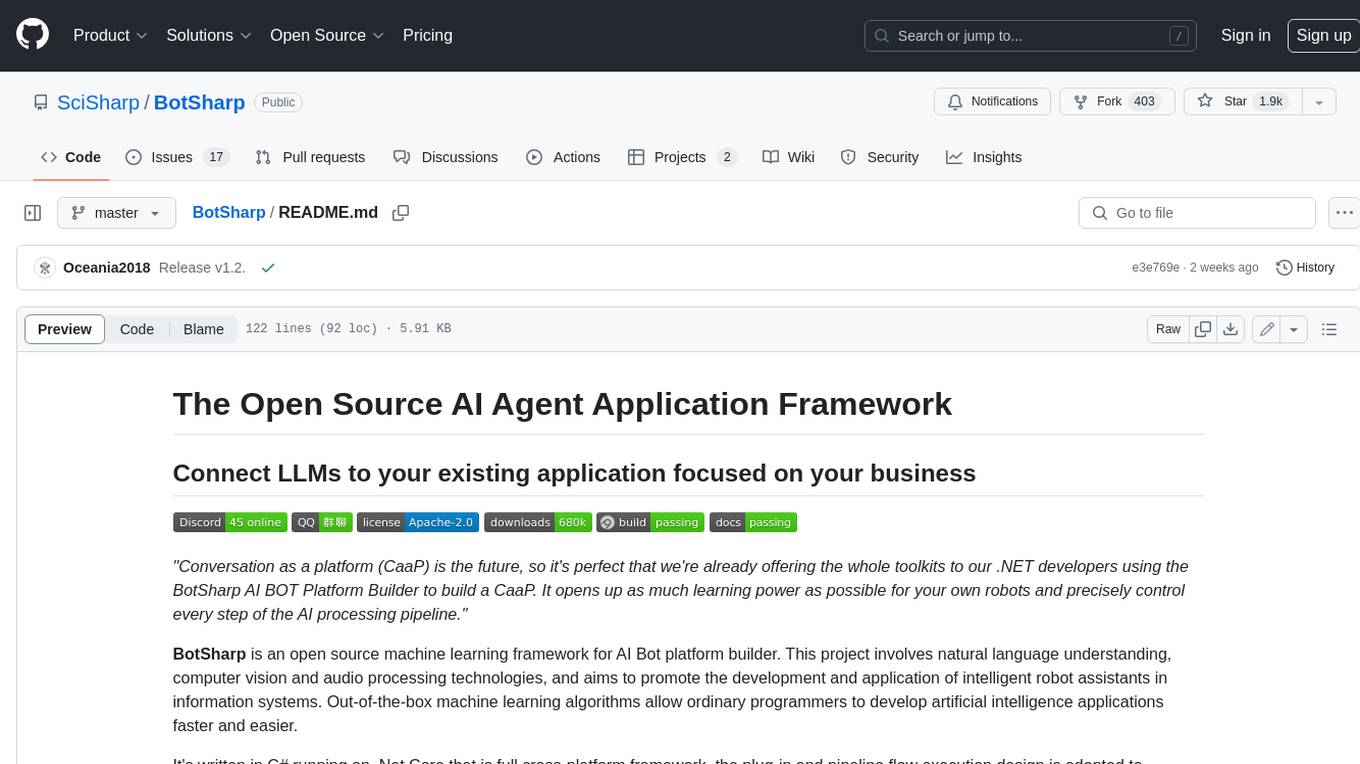

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.