complexity

⚡ Supercharge your favourite AI Chat web apps. Currently supports Perplexity AI.

Stars: 917

Complexity is a community-driven, open-source, and free third-party extension that enhances the features of Perplexity.ai. It provides various UI/UX/QoL tweaks, LLM/Image gen model selectors, a customizable theme, and a prompts library. The tool intercepts network traffic to alter the behavior of the host page, offering a solution to the limitations of Perplexity.ai. Users can install Complexity from Chrome Web Store, Mozilla Add-on, or build it from the source code.

README:

[!NOTE] Originally a Perplexity AI extension, this repository has now been restructured into a suite of enhancements for multiple platforms and services.

- Provides a comprehensive set of added features and UI/UX improvements with excellent modularity and customization

- Supports 22 languages

- Runs flawlessly on Firefox Android

- Navigate to

./perplexity/extension/for more information

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for complexity

Similar Open Source Tools

complexity

Complexity is a community-driven, open-source, and free third-party extension that enhances the features of Perplexity.ai. It provides various UI/UX/QoL tweaks, LLM/Image gen model selectors, a customizable theme, and a prompts library. The tool intercepts network traffic to alter the behavior of the host page, offering a solution to the limitations of Perplexity.ai. Users can install Complexity from Chrome Web Store, Mozilla Add-on, or build it from the source code.

autoflow

AutoFlow is an open source graph rag based knowledge base tool built on top of TiDB Vector and LlamaIndex and DSPy. It features a Perplexity-style Conversational Search page and an Embeddable JavaScript Snippet for easy integration into websites. The tool allows for comprehensive coverage and streamlined search processes through sitemap URL scraping.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

rikkahub

RikkaHub is a native Android LLM chat client that supports switching between different providers for conversations. It features a modern Android app design with dark mode, support for multiple provider types, multimodal input support, Markdown rendering, search capabilities, prompt variables, QR code export/import, agent customization, ChatGPT-like memory feature, AI translation, and custom HTTP request headers and bodies. The project is developed using Kotlin, Koin, Jetpack Compose, DataStore, Room, Coil, Material You, Navigation Compose, Okhttp, kotlinx.serialization, and compose-icons/lucide.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

pipeshub-ai

Pipeshub-ai is a versatile tool for automating data pipelines in AI projects. It provides a user-friendly interface to design, deploy, and monitor complex data workflows, enabling seamless integration of various AI models and data sources. With Pipeshub-ai, users can easily create end-to-end pipelines for tasks such as data preprocessing, model training, and inference, streamlining the AI development process and improving productivity. The tool supports integration with popular AI frameworks and cloud services, making it suitable for both beginners and experienced AI practitioners.

assistant-ui

assistant-ui is a set of React components for AI chat, providing wide model provider support out of the box and the ability to integrate custom APIs. It includes integrations with Langchain, Vercel AI SDK, TailwindCSS, shadcn-ui, react-markdown, react-syntax-highlighter, React Hook Form, and more. The tool allows users to quickly create AI chat applications with pre-configured templates and easy setup steps.

deepchat

DeepChat is a versatile chat tool that supports multiple model cloud services and local model deployment. It offers multi-channel chat concurrency support, platform compatibility, complete Markdown rendering, and easy usability with a comprehensive guide. The tool aims to enhance chat experiences by leveraging various AI models and ensuring efficient conversation management.

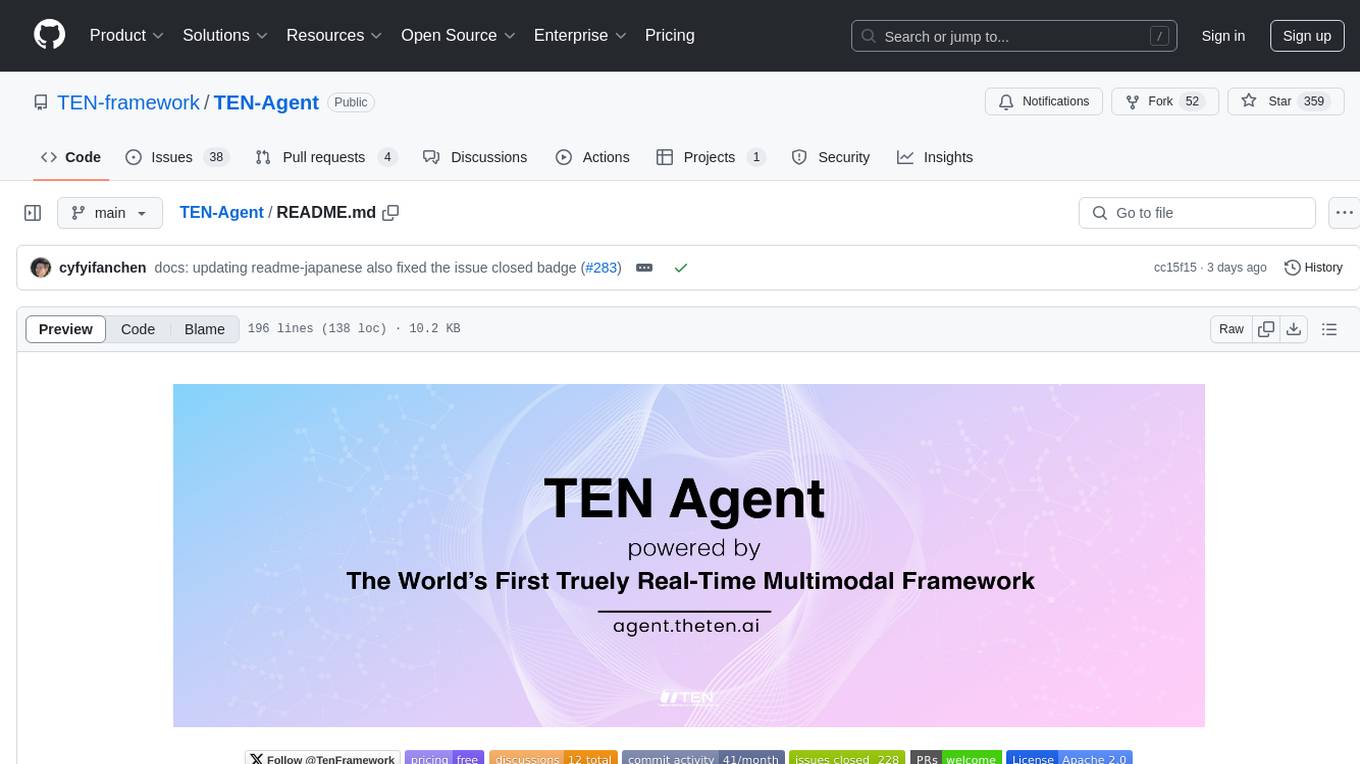

TEN-Agent

TEN Agent is an open-source multimodal agent powered by the world’s first real-time multimodal framework, TEN Framework. It offers high-performance real-time multimodal interactions, multi-language and multi-platform support, edge-cloud integration, flexibility beyond model limitations, and real-time agent state management. Users can easily build complex AI applications through drag-and-drop programming, integrating audio-visual tools, databases, RAG, and more.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

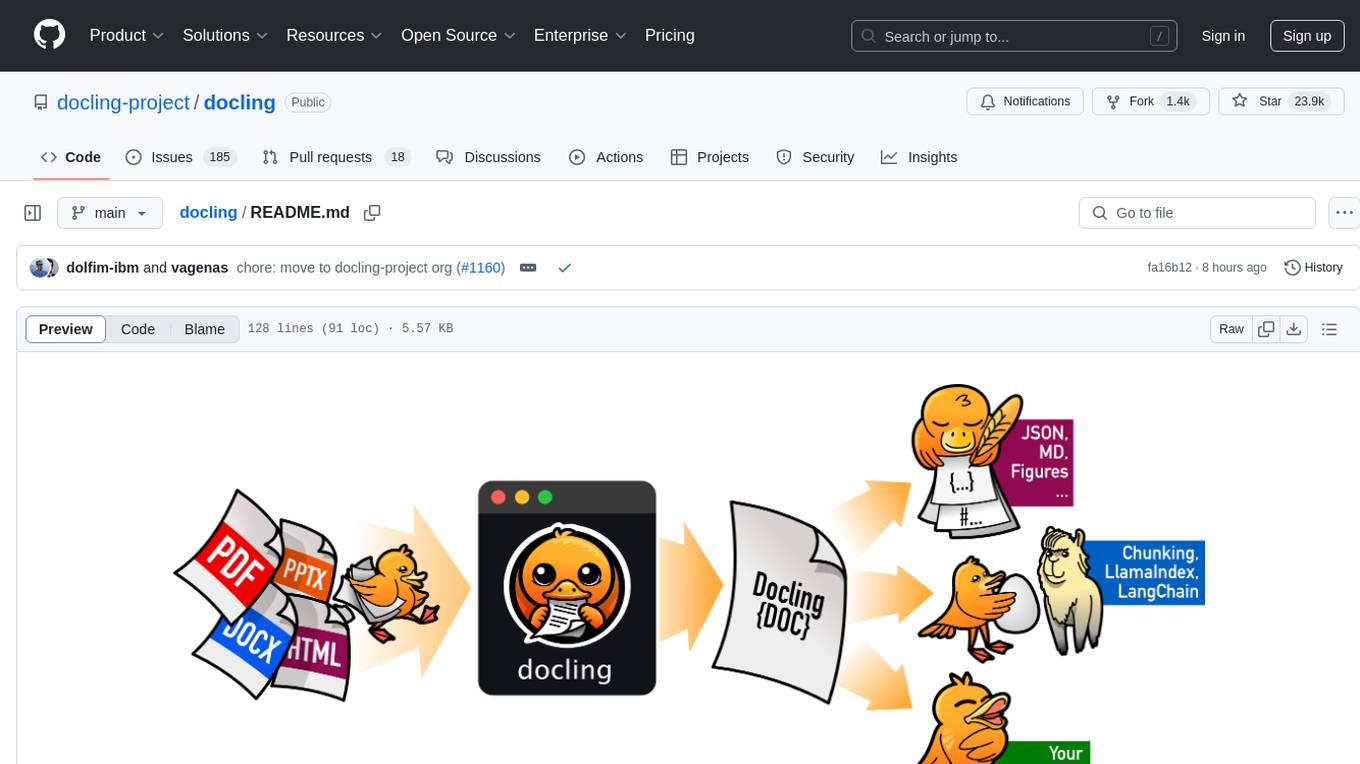

docling

Docling simplifies document processing, parsing diverse formats including advanced PDF understanding, and providing seamless integrations with the general AI ecosystem. It offers features such as parsing multiple document formats, advanced PDF understanding, unified DoclingDocument representation format, various export formats, local execution capabilities, plug-and-play integrations with agentic AI tools, extensive OCR support, and a simple CLI. Coming soon features include metadata extraction, visual language models, chart understanding, and complex chemistry understanding. Docling is installed via pip and works on macOS, Linux, and Windows environments. It provides detailed documentation, examples, integrations with popular frameworks, and support through the discussion section. The codebase is under the MIT license and has been developed by IBM.

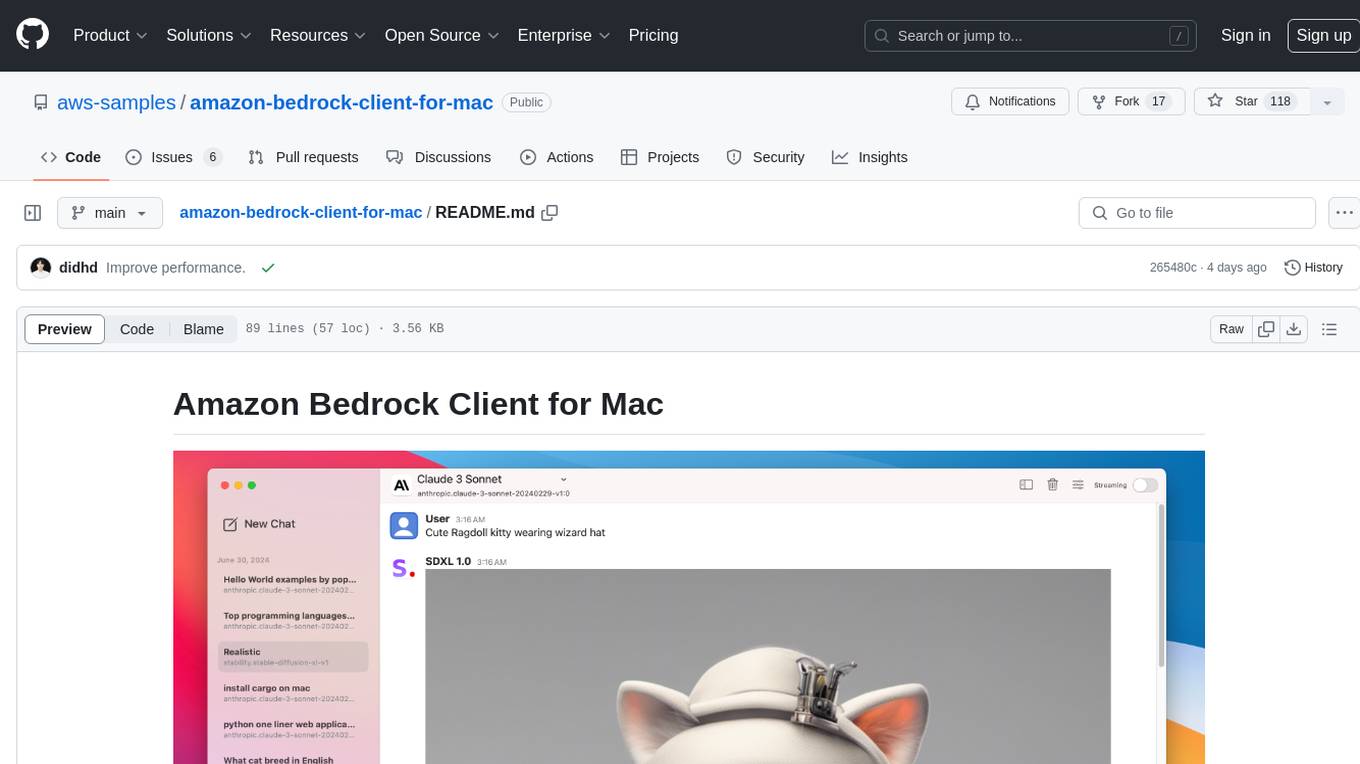

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

ragstack-ai

RAGStack is an out-of-the-box solution simplifying Retrieval Augmented Generation (RAG) in GenAI apps. RAGStack includes the best open-source for implementing RAG, giving developers a comprehensive Gen AI Stack leveraging LangChain, CassIO, and more. RAGStack leverages the LangChain ecosystem and is fully compatible with LangSmith for monitoring your AI deployments.

For similar tasks

complexity

Complexity is a community-driven, open-source, and free third-party extension that enhances the features of Perplexity.ai. It provides various UI/UX/QoL tweaks, LLM/Image gen model selectors, a customizable theme, and a prompts library. The tool intercepts network traffic to alter the behavior of the host page, offering a solution to the limitations of Perplexity.ai. Users can install Complexity from Chrome Web Store, Mozilla Add-on, or build it from the source code.

Noi

Noi is an AI-enhanced customizable browser designed to streamline digital experiences. It includes curated AI websites, allows adding any URL, offers prompts management, Noi Ask for batch messaging, various themes, Noi Cache Mode for quick link access, cookie data isolation, and more. Users can explore, extend, and empower their browsing experience with Noi.

svelte-commerce

Svelte Commerce is an open-source frontend for eCommerce, utilizing a PWA and headless approach with a modern JS stack. It supports integration with various eCommerce backends like MedusaJS, Woocommerce, Bigcommerce, and Shopify. The API flexibility allows seamless connection with third-party tools such as payment gateways, POS systems, and AI services. Svelte Commerce offers essential eCommerce features, is both SSR and SPA, superfast, and free to download and modify. Users can easily deploy it on Netlify or Vercel with zero configuration. The tool provides features like headless commerce, authentication, cart & checkout, TailwindCSS styling, server-side rendering, proxy + API integration, animations, lazy loading, search functionality, faceted filters, and more.

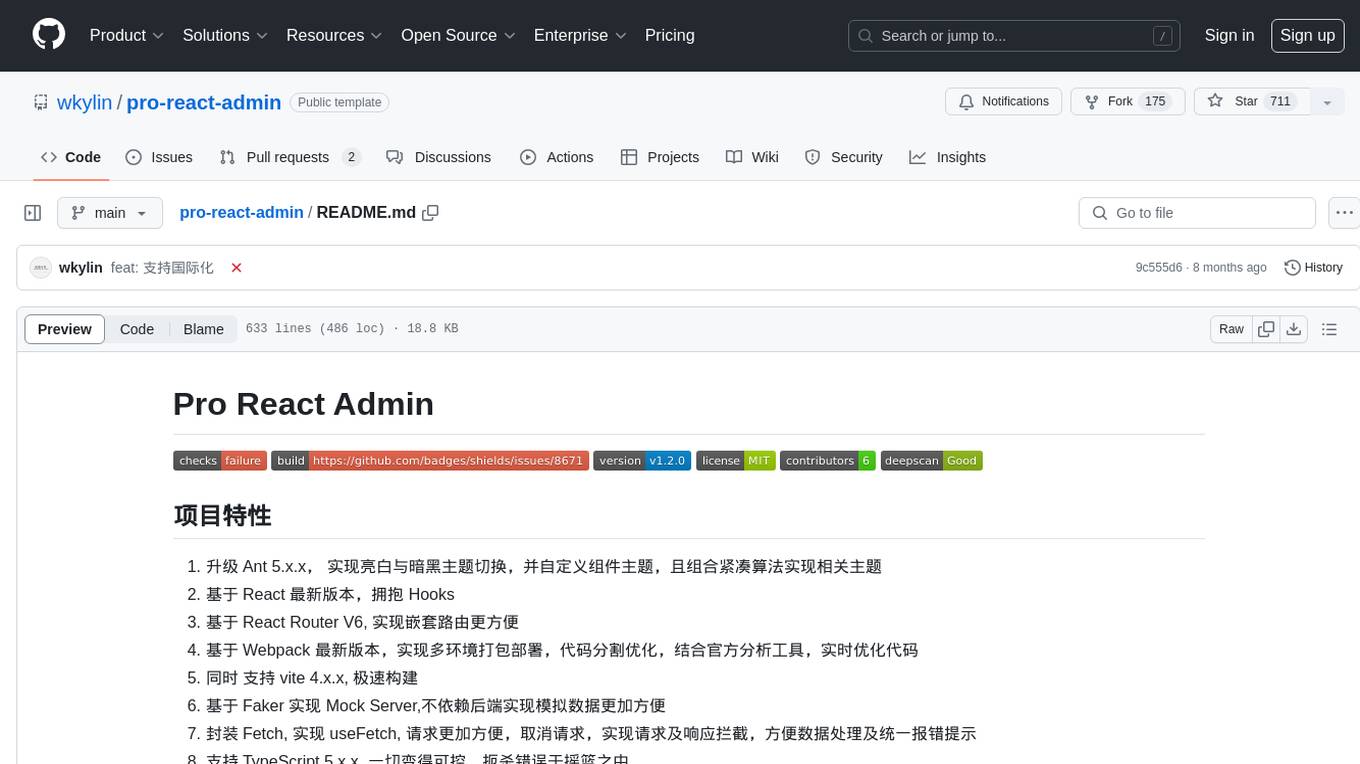

pro-react-admin

Pro React Admin is a comprehensive React admin template that includes features such as theme switching, custom component theming, nested routing, webpack optimization, TypeScript support, multi-tabs, internationalization, code styling, commit message configuration, error handling, code splitting, component documentation generation, and more. It also provides tools for mock server implementation, deployment, linting, formatting, and continuous code review. The template supports various technologies like React, React Router, Webpack, Babel, Ant Design, TypeScript, and Vite, making it suitable for building efficient and scalable React admin applications.

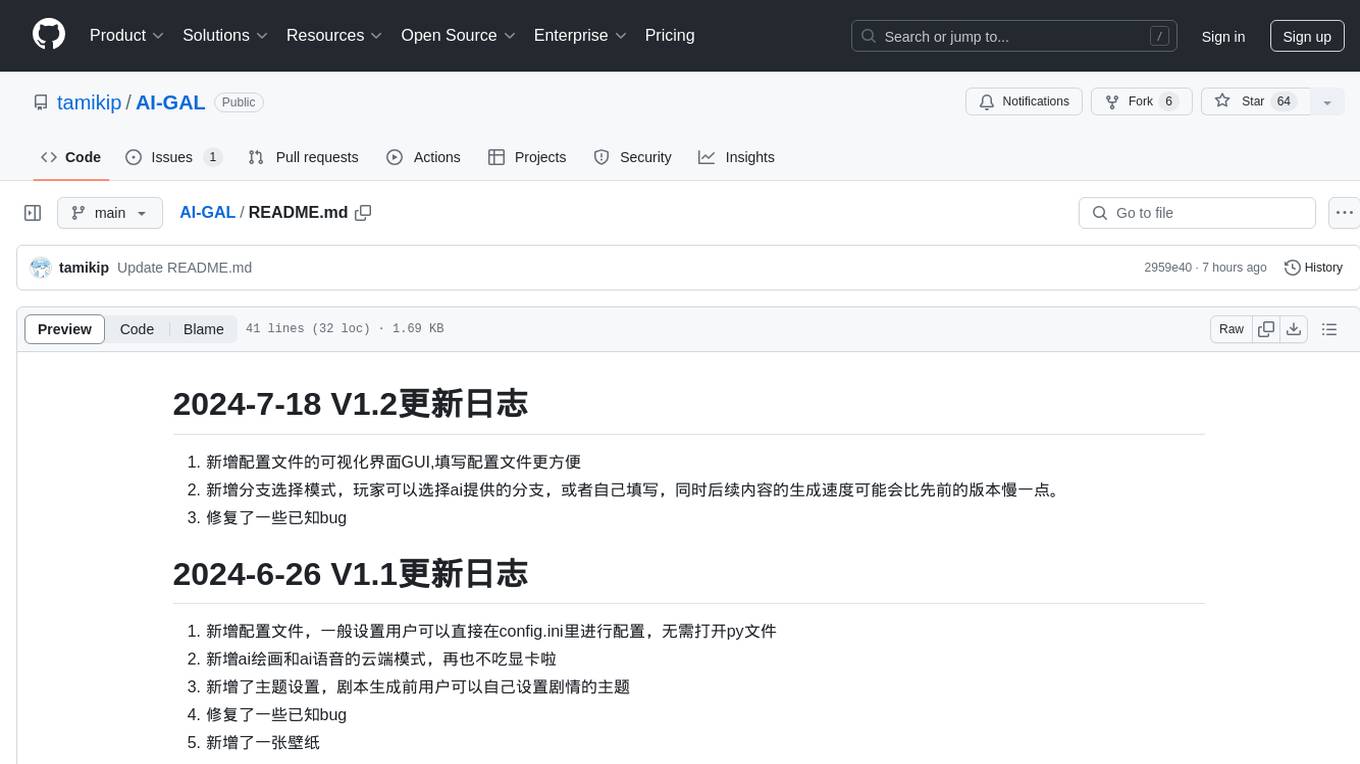

AI-GAL

AI-GAL is a tool that offers a visual GUI for easier configuration file editing, branch selection mode for content generation, and bug fixes. Users can configure settings in config.ini, utilize cloud-based AI drawing and voice modes, set themes for script generation, and enjoy a wallpaper. Prior to usage, ensure a 4GB+ GPU, chatgpt key or local LLM deployment, and installation of stable diffusion, gpt-sovits, and rembg. To start, fill out the config.ini file and run necessary APIs. Restart a storyline by clearing story.txt in the game directory. Encounter errors? Copy the log.txt details and send them for assistance.

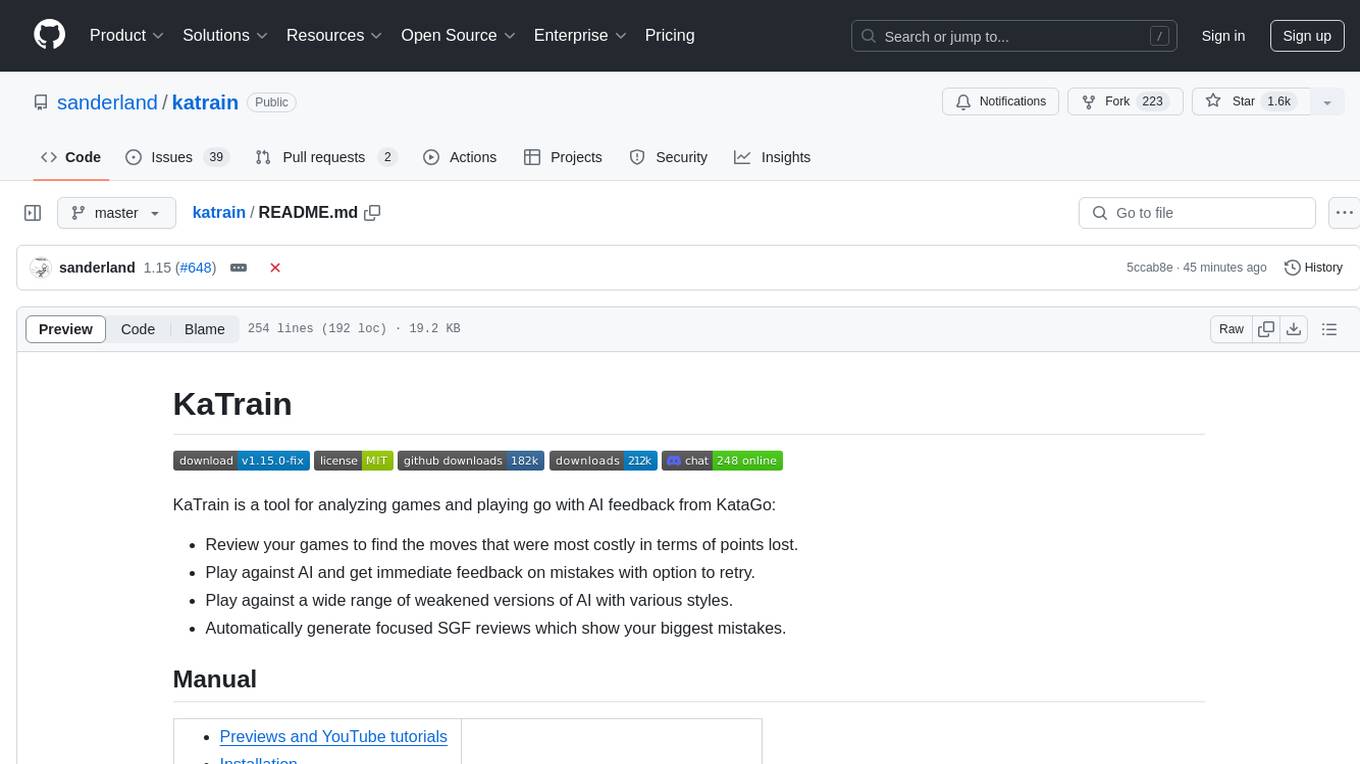

katrain

KaTrain is a tool designed for analyzing games and playing go with AI feedback from KataGo. Users can review their games to find costly moves, play against AI with immediate feedback, play against weakened AI versions, and generate focused SGF reviews. The tool provides various features such as previews, tutorials, installation instructions, and configuration options for KataGo. Users can play against AI, receive instant feedback on moves, explore variations, and request in-depth analysis. KaTrain also supports distributed training for contributing to KataGo's strength and training bigger models. The tool offers themes customization, FAQ section, and opportunities for support and contribution through GitHub issues and Discord community.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

x

Ant Design X is a tool for crafting AI-driven interfaces effortlessly. It is built on the best practices of enterprise-level AI products, offering flexible and diverse atomic components for various AI dialogue scenarios. The tool provides out-of-the-box model integration with inference services compatible with OpenAI standards. It also enables efficient management of conversation data flows, supports rich template options, complete TypeScript support, and advanced theme customization. Ant Design X is designed to enhance development efficiency and deliver exceptional AI interaction experiences.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.