AI-GAL

AI GAL is a program designed specifically for galgames, intended to give every user a unique storyline. It is built on the Ren'Py framework.

Stars: 179

AI-GAL is a tool that offers a visual GUI for easier configuration file editing, branch selection mode for content generation, and bug fixes. Users can configure settings in config.ini, utilize cloud-based AI drawing and voice modes, set themes for script generation, and enjoy a wallpaper. Prior to usage, ensure a 4GB+ GPU, chatgpt key or local LLM deployment, and installation of stable diffusion, gpt-sovits, and rembg. To start, fill out the config.ini file and run necessary APIs. Restart a storyline by clearing story.txt in the game directory. Encounter errors? Copy the log.txt details and send them for assistance.
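Restarting a storyline comes down to emptying one file. A minimal sketch of that step, assuming the game directory is a folder named `game` next to the script (the folder name and the `reset_story` helper are illustrative, not part of the project):

```python
from pathlib import Path


def reset_story(game_dir: str = "game") -> Path:
    """Empty story.txt so the next launch starts a new storyline.

    `game_dir` is an assumed location; point it at your actual game directory.
    """
    story = Path(game_dir) / "story.txt"
    # Truncate instead of deleting: the instructions say to clear the file.
    story.write_text("", encoding="utf-8")
    return story
```

Calling `reset_story("path/to/game")` leaves an empty `story.txt` in place.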

README:

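- Added multilingual support; English and Japanese are currently supported (both subtitles and voice)

- Added snapshots: the current story can be saved and restored later, or shared with others

- Added a dialogue mode (beta) for one-on-one chat with story characters

- Refactored and optimized the game code and improved the GUI

- Thinking models such as deepseek-r1 are now supported

- Fixed errors in cloud voice mode

- rembg has been dropped in favor of an SD plugin mode

- Rebuilt the GUI, now renamed the AI GAL Launcher, to be better looking and easier to use

- Improved the AI music logic

- Refactored parts of the code for better readability

- The galgame now supports transition effects

- gpt-sovits V2 is now supported

- Fixed some bugs

- Added an updater, so users can update directly from within the software

- Added a release feature: users can export a finished game script. No further story content can be generated after export.

- Bundled the rembg installer for easier deployment

- Added AI music

- Fixed some known bugs

- Added a visual GUI for the configuration file, making it easier to fill in

- Added branch selection mode: players can pick a branch offered by the AI or write their own. Generating subsequent content may be slightly slower than in earlier versions.

- Fixed some known bugs

- Added a configuration file: general settings can be changed directly in config.ini without opening the .py files

- Added cloud modes for AI drawing and AI voice, so a local GPU is no longer required

- Added theme settings: users can set the story theme before the script is generated

- Fixed some known bugs

- Added a wallpaper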

- An API key for a large language model (or a locally deployed LLM)

- The following programs installed locally:

  - stable diffusion

  - gpt-sovits

- Project documentation: https://tamikip.github.io/AI-GAL-doc/

- QQ group: 982330586


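Generated with a GPT model:

- The galgame's title, outline, background, and character settings.

- Detailed chapter content based on the chosen theme and requirements, output in dialogue format.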
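Dialogue-format output has to be split into speaker and line before a game engine can display it. The sketch below shows one way to do that. It assumes each line looks like `Speaker: text` (ASCII or full-width colon) and treats everything else as narration; the actual format AI-GAL asks the model for is not documented here, and `parse_dialogue` is a hypothetical helper.

```python
import re
from typing import List, Tuple

# Assumed line shape: "Speaker: text", with an ASCII or full-width colon.
LINE_RE = re.compile(r"^\s*([^::]+?)\s*[::]\s*(.+?)\s*$")


def parse_dialogue(chapter: str) -> List[Tuple[str, str]]:
    """Split generated chapter text into (speaker, text) pairs."""
    pairs = []
    for line in chapter.splitlines():
        match = LINE_RE.match(line)
        if match:
            pairs.append((match.group(1), match.group(2)))
        elif line.strip():
            # Non-empty lines without a speaker prefix are kept as narration.
            pairs.append(("narrator", line.strip()))
    return pairs
```

Each pair can then be mapped to a character sprite and a voice line.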


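Image generation:

- Generates character and background images through an AI drawing API.

- Supports local and cloud drawing modes.

- Synthesizes character voice lines with AI.

- Supports different versions of the SoVITS model for speech synthesis.

- Offers online and local audio generation options.

- Generates background music based on emotion tags.

- Lets you download the generated music files.

- Automatically generates story branch options so users can choose different story directions.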
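For the local drawing mode, a request to a Stable Diffusion API might look like the sketch below. It assumes the AUTOMATIC1111 web UI started with `--api`, which exposes `POST /sdapi/v1/txt2img` and returns base64-encoded images. Whether AI-GAL uses this exact frontend, and the default values chosen here, are assumptions; `build_txt2img_payload` and `txt2img` are illustrative names.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, negative_prompt="", width=512, height=768, steps=20):
    """Build the JSON body for a txt2img request (defaults are illustrative)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "width": width,
        "height": height,
        "steps": steps,
    }


def txt2img(base_url, payload, timeout=300):
    """Send the payload to a running web UI and return the first image as PNG bytes."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # The response carries a list of base64-encoded images.
    return base64.b64decode(body["images"][0])
```

With the web UI listening on its usual local address, `txt2img("http://127.0.0.1:7860", build_txt2img_payload("a quiet classroom at dusk"))` would return image bytes that can be written to a `.png` file.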

- Install stable-diffusion and gpt-sovits

- Fill in the configuration parameters

- Run the stable diffusion API

- Run the gpt-sovits API
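Before launching, it can help to confirm that the configuration file parses and that the values you rely on are filled in. A minimal sketch using Python's standard `configparser` follows. The section and key names (`llm`, `api_key`, `draw`, `cloud`) are hypothetical placeholders; check config.ini or the launcher for the real ones.

```python
import configparser

# Hypothetical example content; the real config.ini keys may differ.
SAMPLE = """
[llm]
api_key = sk-example

[draw]
cloud = false
"""


def load_settings(text: str) -> dict:
    """Parse INI text and return the settings this sketch cares about."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {
        # fallback values keep a missing section or key from raising.
        "api_key": parser.get("llm", "api_key", fallback=""),
        "draw_cloud": parser.getboolean("draw", "cloud", fallback=False),
    }


def missing_fields(settings: dict) -> list:
    """Return the names of string settings that were left empty."""
    return [key for key, value in settings.items() if value == ""]
```

`missing_fields(load_settings(SAMPLE))` returns an empty list when every required value is present.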

If you hit an error, copy the error message from log.txt and send it to me in a private message.
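Log files can grow long, and the most recent lines are usually the relevant ones. A small sketch for pulling the tail of the log, assuming log.txt is UTF-8 text in the current directory (`tail_log` is an illustrative helper, not part of the project):

```python
from collections import deque
from pathlib import Path


def tail_log(path: str = "log.txt", max_lines: int = 50) -> str:
    """Return the last `max_lines` lines of the log as one string."""
    with Path(path).open(encoding="utf-8", errors="replace") as handle:
        # A bounded deque keeps only the newest lines while reading.
        return "".join(deque(handle, maxlen=max_lines))
```

Printing `tail_log()` gives a block of text that can be pasted into a message.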

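This project is a derivative work based on the Ren'Py project and is subject to the following license terms:

- The main part of this project is licensed under the MIT License.

- Some components and libraries included in this project are under other licenses, including but not limited to:

  - GNU Lesser General Public License (LGPL)

  - Zlib License

- For other applicable licenses, see their respective license files.

When distributing this project, make sure to include all relevant license files.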

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-GAL

Similar Open Source Tools

AI-GAL

AI-GAL is a tool that offers a visual GUI for easier configuration file editing, branch selection mode for content generation, and bug fixes. Users can configure settings in config.ini, utilize cloud-based AI drawing and voice modes, set themes for script generation, and enjoy a wallpaper. Prior to usage, ensure a 4GB+ GPU, chatgpt key or local LLM deployment, and installation of stable diffusion, gpt-sovits, and rembg. To start, fill out the config.ini file and run necessary APIs. Restart a storyline by clearing story.txt in the game directory. Encounter errors? Copy the log.txt details and send them for assistance.

eisonAI

EisonAI is an intelligent Safari browser plugin that uses advanced Large Language Models (LLM) technology to automatically summarize web content. It intelligently extracts the main content of web pages and generates accurate summaries to help users quickly understand the key points of the web page. The plugin features smart content extraction, AI-powered summarization, interactive dialogue with AI, elegant interface design, flexible display modes, and advanced functionalities like keyboard shortcuts, message deduplication, and intelligent text length limitation. The system architecture includes core modules for content extraction, UI elements injection, message handling, GPT integration, event listening, user control panel, API status management, mode switching interface, and cross-page communication. It utilizes Readability.js for web content parsing, modular event handling system, CSP security control, responsive design, and dark mode support. EisonAI requires macOS 12.0 or higher, iOS 15.0 or higher, and Safari 15.0 or higher for installation and usage.

writing-helper

A Next.js-based AI writing assistant that helps users organize writing style prompts and sends them to large language models (LLMs) to generate content. The tool aims to help writers, content creators, and copywriters improve writing efficiency and quality through AI technology. It features rich writing style customization, support for multiple LLM APIs, flexible API settings, user-friendly interface, real-time content editing, export function, detailed debugging information, dark/light mode support, and more.

Yi-Ai

Yi-Ai is a project based on the development of nineai 2.4.2. It is for learning and reference purposes only, not for commercial use. The project includes updates to popular models like gpt-4o and claude3.5, as well as new features such as model image recognition. It also supports various functionalities like model sorting, file type extensions, and bug fixes. The project provides deployment tutorials for both integrated and compiled packages, with instructions for environment setup, configuration, dependency installation, and project startup. Additionally, it offers a management platform with different access levels and emphasizes the importance of following the steps for proper system operation.

jd_scripts

jd_scripts is a repository containing scripts for automating various tasks on the JD platform. The scripts provide instructions for setting up and using the tools to enhance user experience and efficiency in managing JD accounts and assets. Users can automate processes such as receiving notifications, redeeming rewards, participating in group purchases, and monitoring ticket availability. The repository also includes resources for optimizing performance and security measures to safeguard user accounts. With a focus on simplifying interactions with the JD platform, jd_scripts offers a comprehensive solution for maximizing benefits and convenience for JD users.

jd_scripts

jd_scripts is a repository containing scripts for automating tasks related to JD (Jingdong) platform. The scripts provide functionalities such as asset notifications, account management, and task automation for various JD services. Users can utilize these scripts to streamline their interactions with the JD platform, receive asset notifications, and perform tasks efficiently.

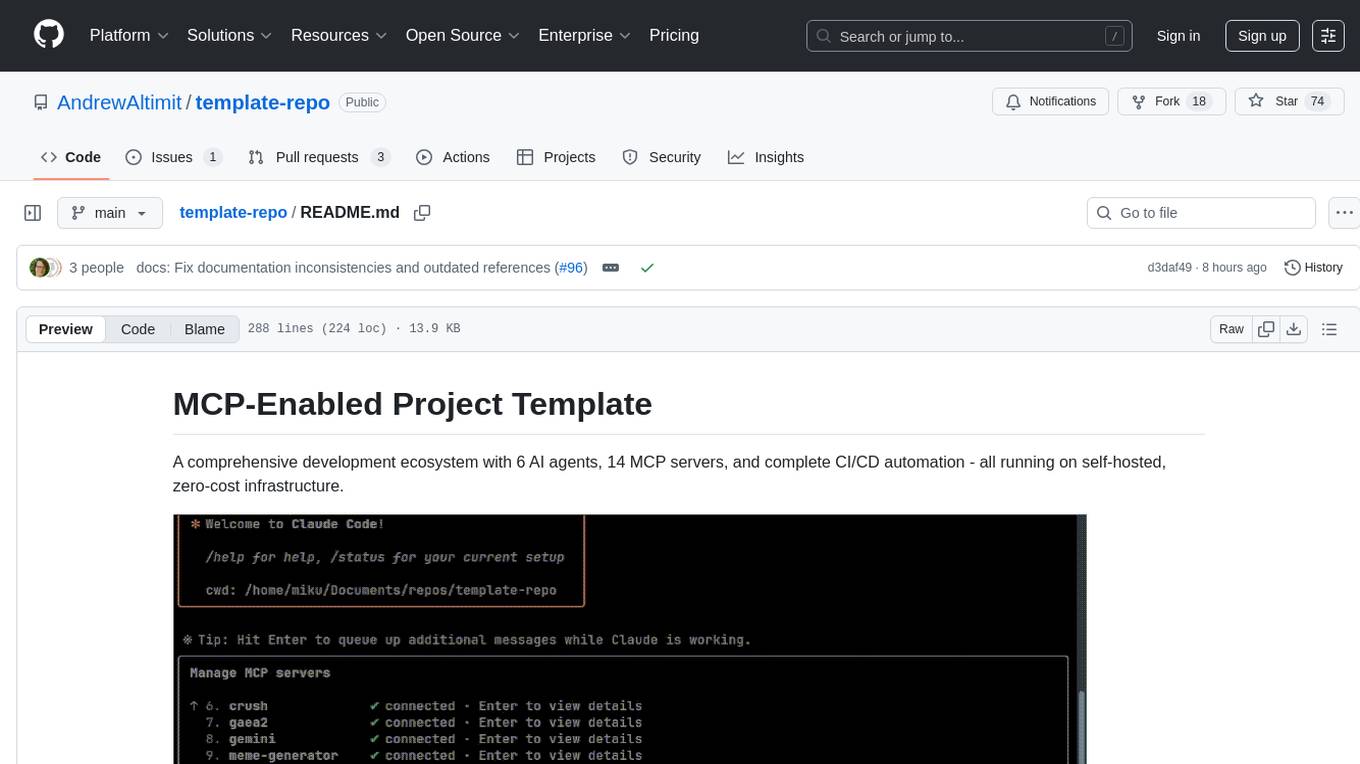

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

ai-dj

OBSIDIAN-Neural is a real-time AI music generation VST3 plugin designed for live performance. It allows users to type words and instantly receive musical loops, enhancing creative flow. The plugin features an 8-track sampler with MIDI triggering, 4 pages per track for easy variation switching, perfect DAW sync, real-time generation without pre-recorded samples, and stems separation for isolated drums, bass, and vocals. Users can generate music by typing specific keywords and trigger loops with MIDI while jamming. The tool offers different setups for server + GPU, local models for offline use, and a free API option with no setup required. OBSIDIAN-Neural is actively developed and has received over 110 GitHub stars, with ongoing updates and bug fixes. It is dual licensed under GNU Affero General Public License v3.0 and offers a commercial license option for interested parties.

FisherAI

FisherAI is a Chrome extension designed to improve learning efficiency. It supports automatic summarization, web and video translation, multi-turn dialogue, and various large language models such as gpt/azure/gemini/deepseek/mistral/groq/yi/moonshot. Users can enjoy flexible and powerful AI tools with FisherAI.

ai-assisted-devops

AI-Assisted DevOps is a 10-day course focusing on integrating artificial intelligence (AI) technologies into DevOps practices. The course covers various topics such as AI for observability, incident response, CI/CD pipeline optimization, security, and FinOps. Participants will learn about running large language models (LLMs) locally, making API calls, AI-powered shell scripting, and using AI agents for self-healing infrastructure. Hands-on activities include creating GitHub repositories with bash scripts, generating Docker manifests, predicting server failures using AI, and building AI agents to monitor deployments. The course culminates in a capstone project where learners implement AI-assisted DevOps automation and receive peer feedback on their projects.

ai-performance-engineering

This repository is a comprehensive resource for AI Systems Performance Engineering, providing code examples, tools, and resources for GPU optimization, distributed training, inference scaling, and performance tuning. It covers a wide range of topics such as performance tuning mindset, system architecture, GPU programming, memory optimization, and the latest profiling tools. The focus areas include GPU architecture, PyTorch, CUDA programming, distributed training, memory optimization, and multi-node scaling strategies.

TypeTale

TypeTale is an AIGC creation software designed specifically for content creators, primarily used for novel promotion. It offers a wide range of AI capabilities such as image, video, and audio generation, as well as text processing and story extraction. The tool also provides workflow customization, AI assistant support, and a vast library of creative materials. With a user-friendly interface and system requirements compatible with Windows operating systems, TypeTale aims to streamline the content creation process for writers and creators.

llm_note

LLM notes repository contains detailed analysis on transformer models, language model compression, inference and deployment, high-performance computing, and system optimization methods. It includes discussions on various algorithms, frameworks, and performance analysis related to large language models and high-performance computing. The repository serves as a comprehensive resource for understanding and optimizing language models and computing systems.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

MachineLearning

MachineLearning is a repository focused on practical applications in various algorithm scenarios such as ship, education, and enterprise development. It covers a wide range of topics from basic machine learning and deep learning to object detection and the latest large models. The project utilizes mature third-party libraries, open-source pre-trained models, and the latest technologies from related papers to document the learning process and facilitate direct usage by a wider audience.

For similar tasks

Noi

Noi is an AI-enhanced customizable browser designed to streamline digital experiences. It includes curated AI websites, allows adding any URL, offers prompts management, Noi Ask for batch messaging, various themes, Noi Cache Mode for quick link access, cookie data isolation, and more. Users can explore, extend, and empower their browsing experience with Noi.

svelte-commerce

Svelte Commerce is an open-source frontend for eCommerce, utilizing a PWA and headless approach with a modern JS stack. It supports integration with various eCommerce backends like MedusaJS, Woocommerce, Bigcommerce, and Shopify. The API flexibility allows seamless connection with third-party tools such as payment gateways, POS systems, and AI services. Svelte Commerce offers essential eCommerce features, is both SSR and SPA, superfast, and free to download and modify. Users can easily deploy it on Netlify or Vercel with zero configuration. The tool provides features like headless commerce, authentication, cart & checkout, TailwindCSS styling, server-side rendering, proxy + API integration, animations, lazy loading, search functionality, faceted filters, and more.

pro-react-admin

Pro React Admin is a comprehensive React admin template that includes features such as theme switching, custom component theming, nested routing, webpack optimization, TypeScript support, multi-tabs, internationalization, code styling, commit message configuration, error handling, code splitting, component documentation generation, and more. It also provides tools for mock server implementation, deployment, linting, formatting, and continuous code review. The template supports various technologies like React, React Router, Webpack, Babel, Ant Design, TypeScript, and Vite, making it suitable for building efficient and scalable React admin applications.

AI-GAL

AI-GAL is a tool that offers a visual GUI for easier configuration file editing, branch selection mode for content generation, and bug fixes. Users can configure settings in config.ini, utilize cloud-based AI drawing and voice modes, set themes for script generation, and enjoy a wallpaper. Prior to usage, ensure a 4GB+ GPU, chatgpt key or local LLM deployment, and installation of stable diffusion, gpt-sovits, and rembg. To start, fill out the config.ini file and run necessary APIs. Restart a storyline by clearing story.txt in the game directory. Encounter errors? Copy the log.txt details and send them for assistance.

katrain

KaTrain is a tool designed for analyzing games and playing go with AI feedback from KataGo. Users can review their games to find costly moves, play against AI with immediate feedback, play against weakened AI versions, and generate focused SGF reviews. The tool provides various features such as previews, tutorials, installation instructions, and configuration options for KataGo. Users can play against AI, receive instant feedback on moves, explore variations, and request in-depth analysis. KaTrain also supports distributed training for contributing to KataGo's strength and training bigger models. The tool offers themes customization, FAQ section, and opportunities for support and contribution through GitHub issues and Discord community.

complexity

Complexity is a community-driven, open-source, and free third-party extension that enhances the features of Perplexity.ai. It provides various UI/UX/QoL tweaks, LLM/Image gen model selectors, a customizable theme, and a prompts library. The tool intercepts network traffic to alter the behavior of the host page, offering a solution to the limitations of Perplexity.ai. Users can install Complexity from Chrome Web Store, Mozilla Add-on, or build it from the source code.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

x

Ant Design X is a tool for crafting AI-driven interfaces effortlessly. It is built on the best practices of enterprise-level AI products, offering flexible and diverse atomic components for various AI dialogue scenarios. The tool provides out-of-the-box model integration with inference services compatible with OpenAI standards. It also enables efficient management of conversation data flows, supports rich template options, complete TypeScript support, and advanced theme customization. Ant Design X is designed to enhance development efficiency and deliver exceptional AI interaction experiences.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.