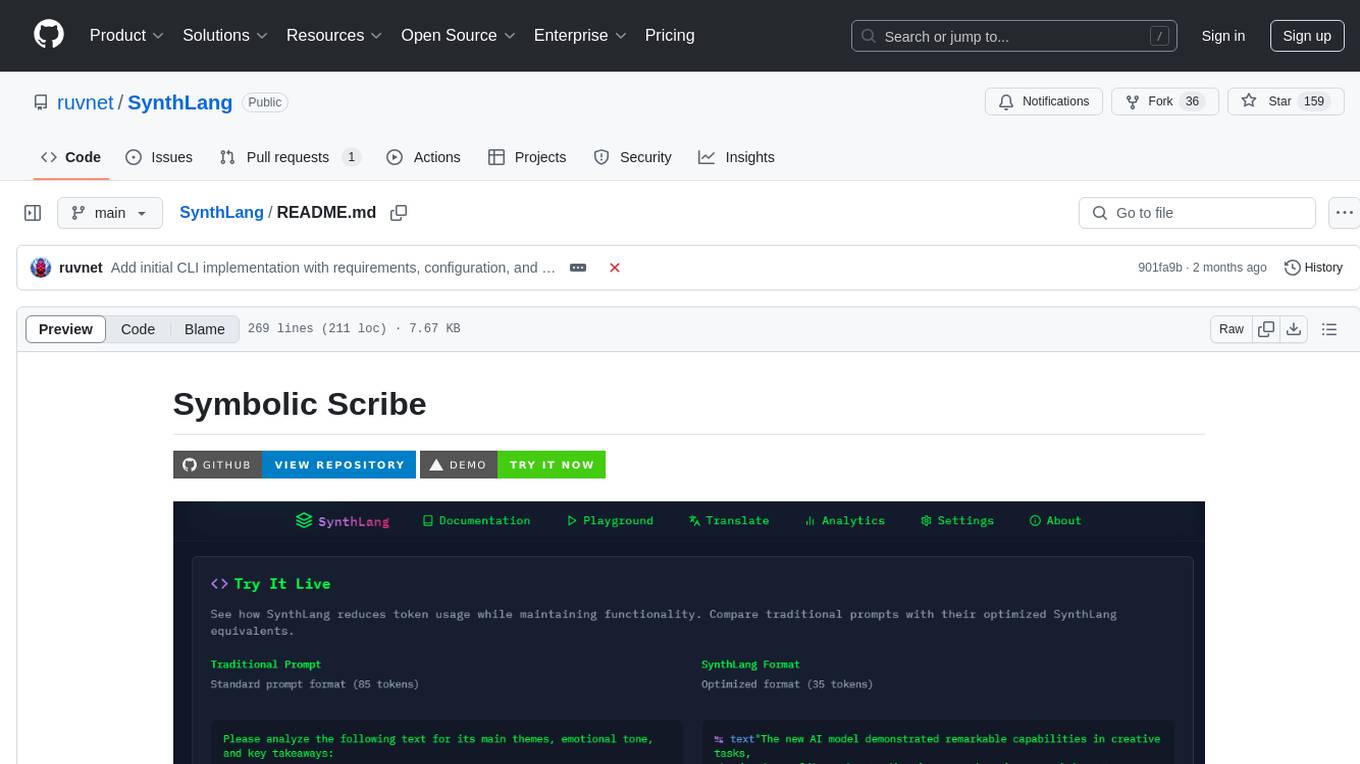

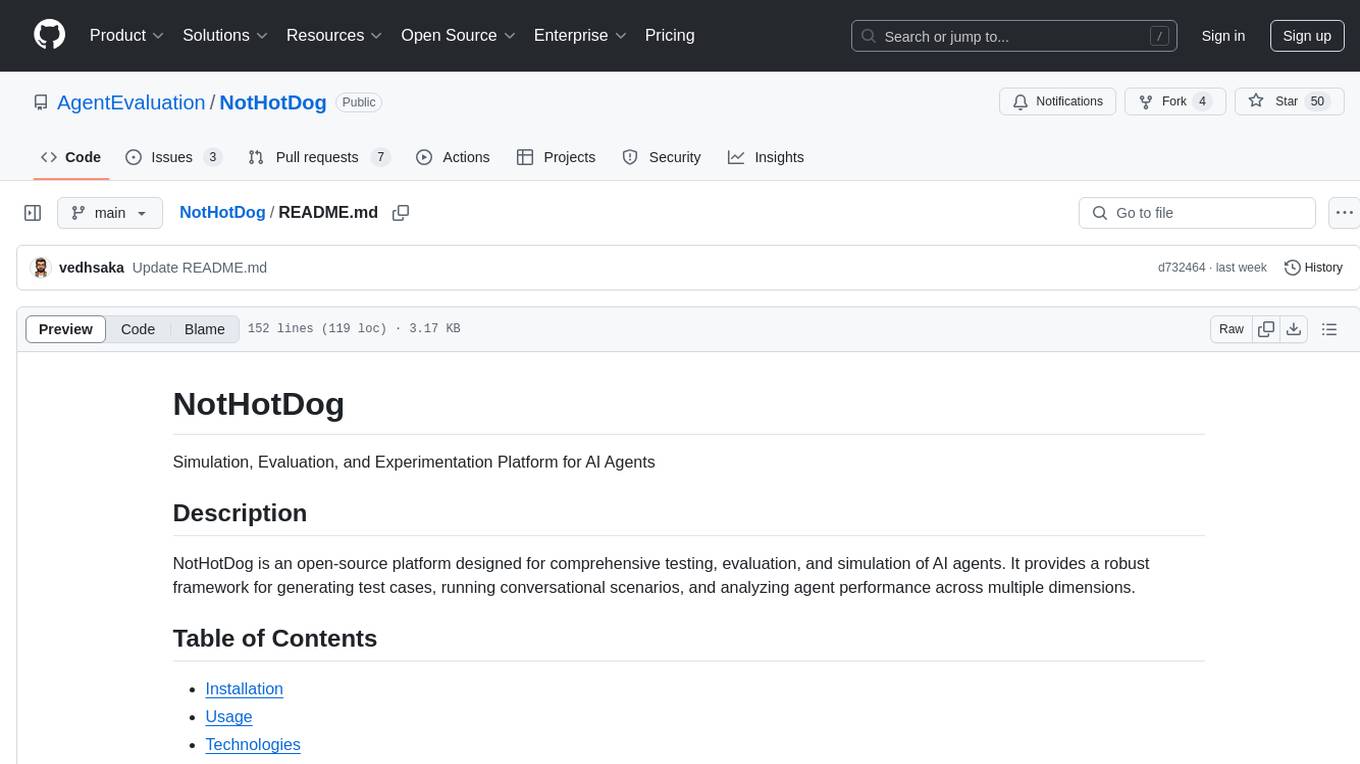

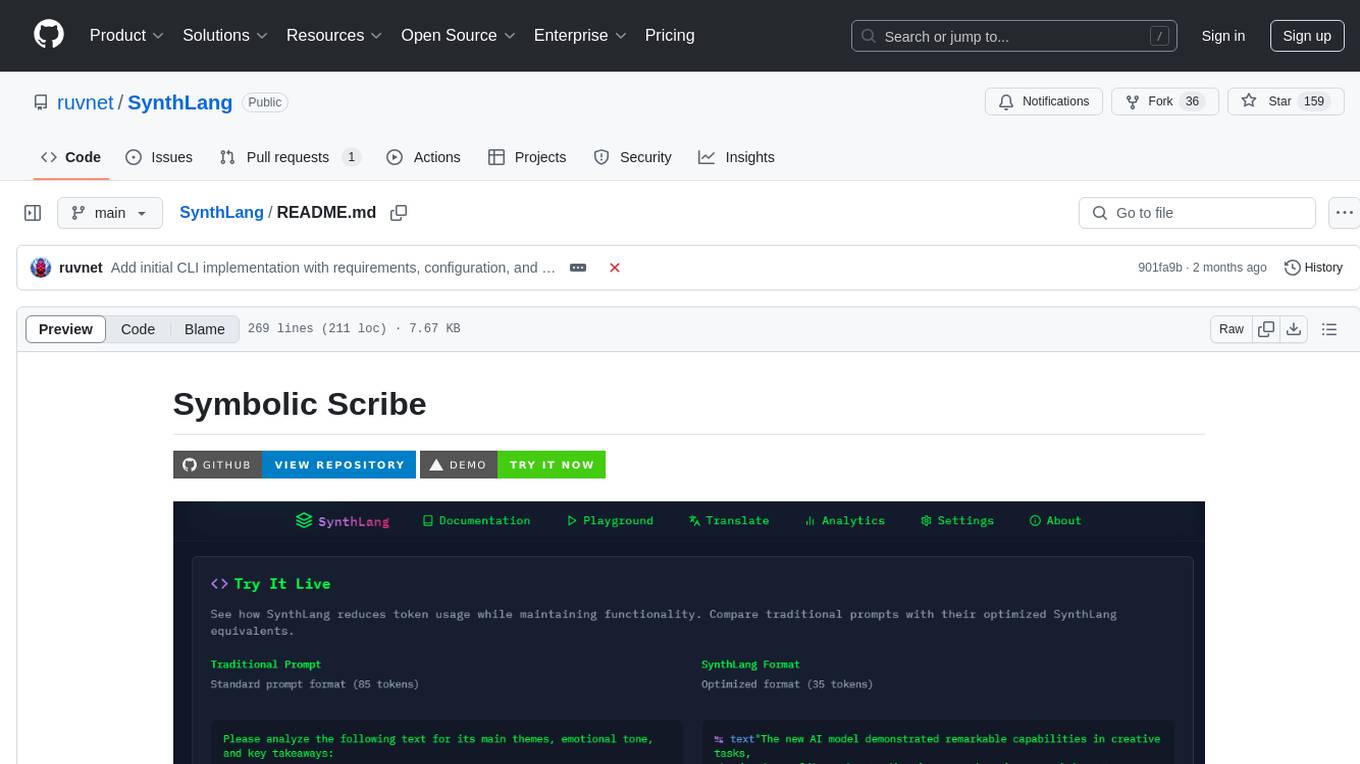

SynthLang

SynthLang is a hyper-efficient prompt language designed to optimize interactions with Large Language Models (LLMs) like GPT-4o by leveraging logographical scripts and symbolic constructs.

Stars: 157

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

README:

Reduce AI costs by up to 70% with SynthLang's efficient prompt optimization. Experience up to 233% faster processing while maintaining effectiveness.

Transform your AI interactions with mathematically-structured prompts. Symbolic Scribe brings academic rigor to prompt engineering, helping you create more precise, reliable, and powerful AI interactions.

🔄 Translator Engine

- Advanced prompt parsing and tokenization

- Intelligent structure analysis and context identification

- Pattern recognition and syntax transformation

- Real-time format validation and error detection

- Metadata extraction and processing

⚡️ Performance Optimization

- Token reduction up to 70% through advanced compression

- Processing speed improvements up to 233%

- Real-time token counting and model-specific calculations

- Semantic analysis and duplicate detection

- Context merging and density optimization

🧪 Testing Framework

- Comprehensive OpenRouter integration

- Response quality validation

- Performance monitoring (<500ms translation time)

- Success rate tracking and error management

- Usage pattern analysis

🔧 Technical Architecture

- React + TypeScript frontend with Vite

- Tailwind CSS for responsive design

- OpenRouter API integration

- Local-first architecture for privacy

- WebAssembly modules for performance

- Horizontal scaling capability

- Advanced caching strategies

🎯 System Requirements

- Response time < 500ms for translations

- 99.9% uptime for API services

- < 100ms latency for token counting

- Real-time cost calculation

- Concurrent request handling

- Load balancing and request queuing

🔒 Security Features

- Encrypted API key storage

- Request validation and access control

- Comprehensive audit logging

- Data encryption at rest and in transit

- Automated security testing

✨ Mathematical Precision - Use formal frameworks for structured prompts

🧮 Academic Rigor - Leverage set theory, topology, and abstract algebra

🔒 Enhanced Security - Built-in threat modeling and safety constraints

📱 Modern Interface - Sleek, responsive design that works everywhere

🚀 Instant Testing - Real-time preview with multiple AI models

- Set Theory Templates: Model complex relationships and hierarchies

- Category Theory: Define abstract transformations and mappings

- Abstract Algebra: Structure group operations and symmetries

- Topology: Explore continuous transformations and invariants

- Complex Analysis: Handle multi-dimensional relationships

- Information Security: Model threat vectors and attack surfaces

- Ethical Analysis: Structure moral frameworks and constraints

- AI Safety: Define system boundaries and safety properties

- Domain Adaptation: Apply mathematical rigor to any field

- Interactive Console: Terminal-style interface with modern aesthetics

- Real-time Preview: Test prompts with multiple AI models

- Template Library: Pre-built frameworks for common use cases

- Mobile Responsive: Full functionality on all device sizes

- Local Storage: Secure saving of prompts and preferences

- Encrypted local storage of API keys

- Optional environment variable configuration

- No server-side key storage

- Automatic key validation

- Client-side only processing

- No external data transmission except to OpenRouter API

- No tracking or analytics

- Configurable model selection

- Installation

git clone https://github.com/ruvnet/SynthLang.git

cd SynthLang

npm install- Configuration

cp .env.sample .env

# Edit .env with your OpenRouter API key- Development

npm run dev- Production Build

npm run build

npm run previewSynthLang includes a powerful command-line interface for prompt engineering, framework translation, and optimization capabilities.

pip install synthlang- Translate - Convert natural language to SynthLang format:

synthlang translate --source "your prompt" --framework synthlang- Optimize - Improve prompt efficiency:

synthlang optimize "path/to/prompt.txt"- Evolve - Use genetic algorithms to improve prompts:

synthlang evolve "initial_prompt"- Classify - Analyze and categorize prompts:

synthlang classify "prompt_text"For detailed documentation on CLI usage and features, see:

- Select a mathematical framework template

- Choose your target domain

- Define your variables and relationships

- Generate structured prompts

- Navigate to Templates page

- Select a base template

- Modify variables and relationships

- Save for future use

- Use the Preview function to test prompts

- Select different models for comparison

- Refine based on responses

- Export final versions

- Client-side only architecture

- No persistent server storage

- Encrypted API key storage

- Input sanitization

- Regular API key rotation

- Use environment variables in production

- Monitor API usage

- Review generated prompts for sensitive data

We welcome contributions! Please see our Contributing Guide for details.

- Fork the repository

- Create a feature branch

- Install dependencies

- Make your changes

- Run tests

- Submit a PR

- Documentation:

/docspage in app - Issues: GitHub issue tracker

- Community: Discord server (coming soon)

src/

├── core/

│ ├── translator/ # Prompt translation engine

│ ├── optimizer/ # Token optimization system

│ └── tester/ # Testing framework

├── services/

│ ├── openRouter/ # OpenRouter integration

│ ├── storage/ # State management

│ └── analytics/ # Performance metrics

└── interfaces/

├── web/ # Web interface

└── api/ # API endpoints

🔨 Code Organization

- Modular architecture with clear separation of concerns

- Consistent naming conventions and comprehensive documentation

- Type safety and robust error handling

- Extensive test coverage (unit, integration, performance)

- CI/CD pipeline with automated testing and deployment

- Comprehensive monitoring and logging

🚀 Planned Enhancements

- Advanced optimization algorithms

- Extended model support

- Enhanced analytics capabilities

- Automated optimization suggestions

- Custom testing scenarios

- Batch processing improvements

- Community features and integrations

MIT License - see LICENSE file for details

- OpenRouter for AI model access

- shadcn/ui for component library

- Tailwind CSS for styling

- Vite for build tooling

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for SynthLang

Similar Open Source Tools

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

lyraios

LYRAIOS (LLM-based Your Reliable AI Operating System) is an advanced AI assistant platform built with FastAPI and Streamlit, designed to serve as an operating system for AI applications. It offers core features such as AI process management, memory system, and I/O system. The platform includes built-in tools like Calculator, Web Search, Financial Analysis, File Management, and Research Tools. It also provides specialized assistant teams for Python and research tasks. LYRAIOS is built on a technical architecture comprising FastAPI backend, Streamlit frontend, Vector Database, PostgreSQL storage, and Docker support. It offers features like knowledge management, process control, and security & access control. The roadmap includes enhancements in core platform, AI process management, memory system, tools & integrations, security & access control, open protocol architecture, multi-agent collaboration, and cross-platform support.

LLM-FuzzX

LLM-FuzzX is an open-source user-friendly fuzz testing tool for large language models (e.g., GPT, Claude, LLaMA), equipped with advanced task-aware mutation strategies, fine-grained evaluation, and jailbreak detection capabilities. It helps researchers and developers quickly discover potential security vulnerabilities and enhance model robustness. The tool features a user-friendly web interface for visual configuration and real-time monitoring, supports various advanced mutation methods, integrates RoBERTa model for real-time jailbreak detection and evaluation, supports multiple language models like GPT, Claude, LLaMA, provides visualization analysis with seed flowcharts and experiment data statistics, and offers detailed logging support for main, mutation, and jailbreak logs.

WeKnora

WeKnora is a document understanding and semantic retrieval framework based on large language models (LLM), designed specifically for scenarios with complex structures and heterogeneous content. The framework adopts a modular architecture, integrating multimodal preprocessing, semantic vector indexing, intelligent recall, and large model generation reasoning to build an efficient and controllable document question-answering process. The core retrieval process is based on the RAG (Retrieval-Augmented Generation) mechanism, combining context-relevant segments with language models to achieve higher-quality semantic answers. It supports various document formats, intelligent inference, flexible extension, efficient retrieval, ease of use, and security and control. Suitable for enterprise knowledge management, scientific literature analysis, product technical support, legal compliance review, and medical knowledge assistance.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

fastapi-langgraph-agent-production-ready-template

A production-ready FastAPI template for building AI agent applications with LangGraph integration. This template provides a robust foundation for building scalable, secure, and maintainable AI agent services. It includes features like FastAPI for high-performance async API endpoints, LangGraph integration, structured logging, rate limiting, PostgreSQL for data persistence, Docker support, security measures like JWT-based authentication and input sanitization, developer-friendly features like environment-specific configuration and type hints, a model evaluation framework with automated metric-based evaluation and detailed JSON reports, and a configuration system with environment-specific settings.

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

CortexON

CortexON is an open-source, multi-agent AI system designed to automate and simplify everyday tasks. It integrates specialized agents like Web Agent, File Agent, Coder Agent, Executor Agent, and API Agent to accomplish user-defined objectives. CortexON excels at executing complex workflows, research tasks, technical operations, and business process automations by dynamically coordinating the agents' unique capabilities. It offers advanced research automation, multi-agent orchestration, integration with third-party APIs, code generation and execution, efficient file and data management, and personalized task execution for travel planning, market analysis, educational content creation, and business intelligence.

paelladoc

PAELLADOC is an intelligent documentation system that uses AI to analyze code repositories and generate comprehensive technical documentation. It offers a modular architecture with MECE principles, interactive documentation process, key features like Orchestrator and Commands, and a focus on context for successful AI programming. The tool aims to streamline documentation creation, code generation, and product management tasks for software development teams, providing a definitive standard for AI-assisted development documentation.

ai-doc-gen

An AI-powered code documentation generator that automatically analyzes repositories and creates comprehensive documentation using advanced language models. The system employs a multi-agent architecture to perform specialized code analysis and generate structured documentation.

aiogram-django-template

Aiogram & Django API Template is a robust and secure Django template with advanced features like Docker integration, Celery for asynchronous tasks, Sentry for error tracking, Django Rest Framework for building APIs, and more. It provides scalability options, up-to-date dependencies, and integration with AWS S3 for storage. The template includes configuration guides for secrets, ports, performance tuning, application settings, CORS and CSRF settings, and database configuration. Security, scalability, and monitoring are emphasized for efficient Django API development.

MARBLE

MARBLE (Multi-Agent Coordination Backbone with LLM Engine) is a modular framework for developing, testing, and evaluating multi-agent systems leveraging Large Language Models. It provides a structured environment for agents to interact in simulated environments, utilizing cognitive abilities and communication mechanisms for collaborative or competitive tasks. The framework features modular design, multi-agent support, LLM integration, shared memory, flexible environments, metrics and evaluation, industrial coding standards, and Docker support.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

NotHotDog

NotHotDog is an open-source platform for testing, evaluating, and simulating AI agents. It offers a robust framework for generating test cases, running conversational scenarios, and analyzing agent performance.

For similar tasks

Awesome-AI-GPTs

Awesome AI GPTs is an open repository that collects resources and fun ways to use OpenAI GPTs. It includes databases, search tools, open-source projects, articles, attack and defense strategies, installation of custom plugins, knowledge bases, and community interactions related to GPTs. Users can find curated lists, leaked prompts, and various GPT applications in this repository. The project aims to empower users with AI capabilities and foster collaboration in the AI community.

jailbreak_llms

This is the official repository for the ACM CCS 2024 paper 'Do Anything Now': Characterizing and Evaluating In-The-Wild Jailbreak Prompts on Large Language Models. The project employs a new framework called JailbreakHub to conduct the first measurement study on jailbreak prompts in the wild, collecting 15,140 prompts from December 2022 to December 2023, including 1,405 jailbreak prompts. The dataset serves as the largest collection of in-the-wild jailbreak prompts. The repository contains examples of harmful language and is intended for research purposes only.

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

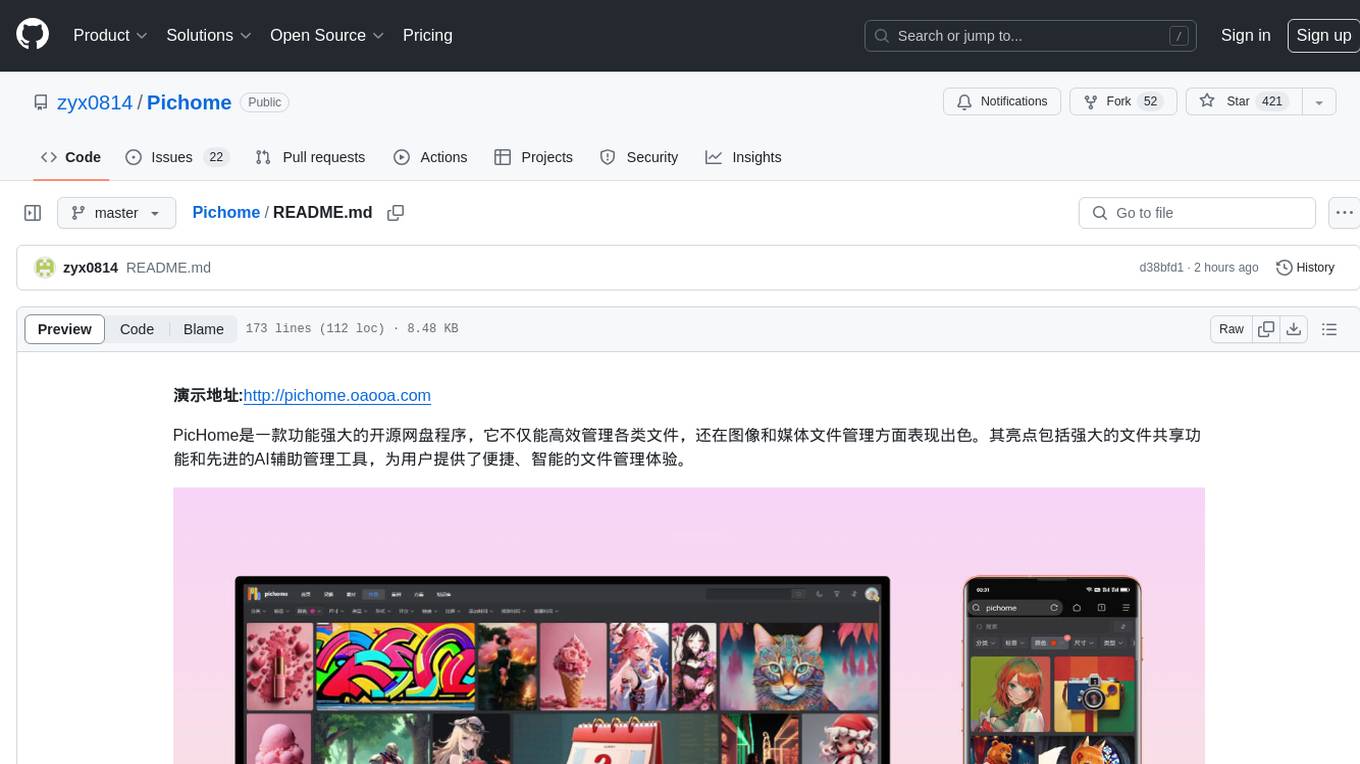

Pichome

PicHome is a powerful open-source cloud storage program that efficiently manages various types of files and excels in image and media file management. Its highlights include robust file sharing features and advanced AI-assisted management tools, providing users with a convenient and intelligent file management experience. The program offers diverse list modes, customizable file information display, enhanced quick file preview, advanced tagging, custom cover and preview images, multiple preview images, and multi-library management. Additionally, PicHome features strong file sharing capabilities, allowing users to share entire libraries, create personalized showcase web pages, and build complete data sharing websites. The AI-assisted management aspect includes AI file renaming, tagging, description writing, batch annotation, and file Q&A services, all aimed at improving file management efficiency. PicHome supports a wide range of file formats and can be applied in various scenarios such as e-commerce, gaming, design, development, enterprises, schools, labs, media, and entertainment institutions.

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

LLMSpeculativeSampling

This repository implements speculative sampling for large language model (LLM) decoding, utilizing two models - a target model and an approximation model. The approximation model generates token guesses, corrected by the target model, resulting in improved efficiency. It includes implementations of Google's and Deepmind's versions of speculative sampling, supporting models like llama-7B and llama-1B. The tool is designed for fast inference from transformers via speculative decoding.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.