meeting-minutes

Privacy-first AI meeting assistant with 4x faster Parakeet/Whisper live transcription, speaker diarization, and Ollama summarization, built on Rust. 100% local processing, no cloud required. Meetily (Meetily AI - https://meetily.ai) is the #1 self-hosted, open-source AI meeting note taker for macOS & Windows.

Stars: 9772

An open-source AI assistant for taking meeting notes that captures live meeting audio, transcribes it in real-time, and generates summaries while ensuring user privacy. Perfect for teams to focus on discussions while automatically capturing and organizing meeting content without external servers or complex infrastructure. Features include modern UI, real-time audio capture, speaker diarization, local processing for privacy, and more. The tool also offers a Rust-based implementation for better performance and native integration, with features like live transcription, speaker diarization, and a rich text editor for notes. Future plans include database connection for saving meeting minutes, improving summarization quality, and adding download options for meeting transcriptions and summaries. The backend supports multiple LLM providers through a unified interface, with configurations for Anthropic, Groq, and Ollama models. System architecture includes core components like audio capture service, transcription engine, LLM orchestrator, data services, and API layer. Prerequisites for setup include Node.js, Python, FFmpeg, and Rust. Development guidelines emphasize project structure, testing, documentation, type hints, and ESLint configuration. Contributions are welcome under the MIT License.

README:

A privacy-first AI meeting assistant that captures, transcribes, and summarizes meetings entirely on your infrastructure. Built by expert AI engineers passionate about data sovereignty and open source solutions. Perfect for enterprises that need advanced meeting intelligence without compromising on privacy, compliance, or control.

🎉 New: Meetily PRO Available - Looking for enhanced accuracy and advanced features? Check out our professional-grade solution with custom summary templates, advanced exports (PDF, DOCX), auto-meeting detection, built-in GDPR compliance, and many more. This Community Edition remains forever free & open source. Learn more about PRO →

Meetily is a privacy-first AI meeting assistant that runs entirely on your local machine. It captures your meetings, transcribes them in real-time, and generates summaries, all without sending any data to the cloud. This makes it the perfect solution for professionals and enterprises who need to maintain complete control over their sensitive information.

While there are many meeting transcription tools available, this solution stands out by offering:

- Privacy First: All processing happens locally on your device.

- Cost-Effective: Uses open-source AI models instead of expensive APIs.

- Flexible: Works offline and supports multiple meeting platforms.

- Customizable: Self-host and modify for your specific needs.

The Privacy Problem

Meeting AI tools create significant privacy and compliance risks across all sectors:

- $4.4M average cost per data breach (IBM 2024)

- €5.88 billion in GDPR fines issued by 2025

- 400+ unlawful recording cases filed in California this year

Whether you're a defense consultant, enterprise executive, legal professional, or healthcare provider, your sensitive discussions shouldn't live on servers you don't control. Cloud meeting tools promise convenience but deliver privacy nightmares with unclear data storage practices and potential unauthorized access.

Meetily solves this: Complete data sovereignty on your infrastructure, zero vendor lock-in, and full control over your sensitive conversations.

- Local First: All processing is done on your machine. No data ever leaves your computer.

- Real-time Transcription: Get a live transcript of your meeting as it happens.

- AI-Powered Summaries: Generate summaries of your meetings using powerful language models.

- Multi-Platform: Works on macOS, Windows, and Linux.

- Open Source: Meetily is open source and free to use.

- Flexible AI Provider Support: Choose from Ollama (local), Claude, Groq, OpenRouter, or use your own OpenAI-compatible endpoint.

Windows:

- Download the latest x64-setup.exe from Releases

- Run the installer

macOS:

- Download meetily_0.2.1_aarch64.dmg from Releases

- Open the downloaded .dmg file

- Drag Meetily to your Applications folder

- Open Meetily from Applications folder

Build from source following our detailed guides:

Quick start:

git clone https://github.com/Zackriya-Solutions/meeting-minutes

cd meeting-minutes/frontend

pnpm install

./build-gpu.sh

Transcribe meetings entirely on your device using Whisper or Parakeet models. No cloud required.

Generate meeting summaries with your choice of AI provider. Ollama (local) is recommended, with support for Claude, Groq, OpenRouter, and OpenAI.
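As a rough illustration of what a local summarization call can look like, the sketch below builds a request for Ollama's HTTP API on its default local address (`http://localhost:11434/api/generate`). This is not Meetily's own code: the function names, the prompt wording, and the model name `llama3.2` are assumptions made for the example, and Meetily may talk to Ollama differently.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(model, transcript):
    """Build (but do not send) a summarization request for a local Ollama server."""
    # Hypothetical prompt; adjust the wording to taste.
    prompt = (
        "Summarize the following meeting transcript as short bullet points:\n\n"
        + transcript
    )
    # stream=False asks Ollama for a single JSON object instead of a token stream.
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(model, transcript):
    """Send the request. Needs a running Ollama server with the model already pulled."""
    with urllib.request.urlopen(build_ollama_request(model, transcript)) as resp:
        return json.load(resp)["response"]
```

With Ollama running locally, `summarize("llama3.2", transcript_text)` would return the summary text; the request never leaves the machine.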

All data stays on your machine. Transcription models, recordings, and transcripts are stored locally.

Use your own OpenAI-compatible endpoint for AI summaries. Perfect for organizations with custom AI infrastructure or preferred providers.
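To make "OpenAI-compatible endpoint" concrete, here is a minimal sketch of the request such an endpoint expects: a POST to `/chat/completions` under the base URL, with a bearer token and a `messages` array. The helper names, the system prompt, and the example base URL are hypothetical and are not taken from Meetily's codebase.

```python
import json
import urllib.request


def build_summary_request(base_url, api_key, model, transcript):
    """Build (but do not send) a chat-completions request asking for a summary."""
    body = {
        "model": model,
        "messages": [
            # Hypothetical system prompt for the example.
            {"role": "system",
             "content": "Summarize this meeting transcript as concise bullet points."},
            {"role": "user", "content": transcript},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_summary(response_json):
    """Pull the assistant's text out of a chat-completions response body."""
    return response_json["choices"][0]["message"]["content"]
```

A caller would send the request with `urllib.request.urlopen(req)`, parse the body with `json.load`, and pass the result to `extract_summary`. Because only the base URL and key change, the same code can target any server that implements this request shape.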

Capture microphone and system audio simultaneously with intelligent ducking and clipping prevention.
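The README does not describe how ducking and clipping prevention are implemented, so the following is only a simplified sketch of the general idea. It assumes two mono streams of float samples in [-1.0, 1.0] at the same sample rate; the function name, `duck_gain`, and `threshold` are illustrative. A production mixer would typically smooth the ducking gain with an envelope follower rather than switch it per sample.

```python
import math


def mix_with_ducking(mic, system, duck_gain=0.4, threshold=0.05):
    """Mix microphone and system audio sample by sample.

    While the microphone sample is louder than `threshold`, the system
    audio is attenuated by `duck_gain` (ducking). The sum is then passed
    through tanh, a soft clipper that keeps every output inside (-1, 1).
    """
    mixed = []
    for m, s in zip(mic, system):
        # Duck the system audio only while the microphone is active.
        gain = duck_gain if abs(m) > threshold else 1.0
        total = m + s * gain
        # Soft clipping: compresses peaks smoothly instead of hard-limiting them.
        mixed.append(math.tanh(total))
    return mixed
```

The tanh stage slightly compresses quiet passages as well; that is the trade-off for never producing a sample outside the valid range, even when both inputs peak at once.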

Built-in support for hardware acceleration across platforms:

- macOS: Apple Silicon (Metal) + CoreML

- Windows/Linux: NVIDIA (CUDA), AMD/Intel (Vulkan)

Automatically enabled at build time - no configuration needed.

Meetily is a single, self-contained application built with Tauri. It uses a Rust-based backend to handle all the core logic, and a Next.js frontend for the user interface.

For more details, see the Architecture documentation.

If you want to contribute to Meetily or build it from source, you'll need to have Rust and Node.js installed. For detailed build instructions, please see the Building from Source guide.

Meetily PRO is a professional-grade solution with enhanced accuracy and advanced features for serious users and teams. It is built on a different codebase, with superior transcription models and enterprise-ready capabilities.

- Enhanced Accuracy: Superior transcription models for professional-grade accuracy

- Custom Summary Templates: Tailor summaries to your specific workflow and needs

- Advanced Export Options: PDF, DOCX, and Markdown exports with formatting

- Auto-detect and Join Meetings: Automatic meeting detection and joining

- Speaker Identification: Distinguish between speakers automatically (Coming Soon)

- Chat with Meetings: AI-powered meeting insights and queries (Coming Soon)

- Calendar Integration: Seamless integration with your calendar (Coming Soon)

- Self-Hosted Deployment: Deploy on your own infrastructure for teams

- GDPR Compliance Built-In: Privacy by design architecture with complete audit trails

- Priority Support: Dedicated support for PRO users

- Professionals who need the highest accuracy for critical meetings

- Teams and organizations (2-100 users) requiring self-hosted deployment

- Power users who need advanced export formats and custom workflows

- Compliance-focused organizations requiring GDPR readiness

Note: Meetily Community Edition remains free & open source forever with local transcription, AI summaries, and core features. PRO is a separate professional solution for users who need enhanced accuracy and advanced capabilities.

For organizations needing 100+ users or managed compliance solutions, explore Meetily Enterprise.

Learn more about pricing and features: https://meetily.ai/pro/

We welcome contributions from the community! If you have any questions or suggestions, please open an issue or submit a pull request. Please follow the established project structure and guidelines. For more details, refer to the CONTRIBUTING.md file.

Thanks for all the contributions. Our community is what makes this project possible.

MIT License - Feel free to use this project for your own purposes.

- We borrowed some code from Whisper.cpp.

- We borrowed some code from Screenpipe.

- We borrowed some code from transcribe-rs.

- Thanks to NVIDIA for developing the Parakeet model.

- Thanks to istupakov for providing the ONNX conversion of the Parakeet model.

Alternative AI tools for meeting-minutes

Similar Open Source Tools

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies, 24/7 support, and is specialized for AI companions. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, interconnected knowledge graph, continuous self-improvement, and adaptive forgetting mechanism. It boasts high memory accuracy, fast retrieval, and low cost, making it suitable for building intelligent agents with persistent memory capabilities.

lotti

Lotti is an open-source personal assistant that helps users capture, organize, and understand their work and life through AI-enhanced task management, audio recordings, and intelligent summaries. It ensures complete data ownership, configurable AI providers, privacy-first design, and no vendor lock-in. Users can pick up tasks, record voice notes, and ask for summaries. Core features include AI-powered intelligence, comprehensive tracking, and privacy & control. Lotti supports multiple AI providers, offers installation guides, beta testing options, and development instructions. It is built on Flutter with a focus on privacy, local AI, and user data ownership.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload LoRAs, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT-models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

Linguflex

Linguflex is a project that aims to simulate engaging, authentic, human-like interaction with AI personalities. It offers voice-based conversation with custom characters, alongside an array of practical features such as controlling smart home devices, playing music, searching the internet, fetching emails, displaying current weather information and news, assisting in scheduling, and searching or generating images.

obsidian-llmsider

LLMSider is an AI assistant plugin for Obsidian that offers flexible multi-model support, deep workflow integration, privacy-first design, and a professional tool ecosystem. It provides comprehensive AI capabilities for personal knowledge management, from intelligent writing assistance to complex task automation, making AI a capable assistant for thinking and creating while ensuring data privacy.

SAM

SAM is a native macOS AI assistant built with Swift and SwiftUI, designed for non-developers who want powerful tools in their everyday life. It provides real assistance, smart memory, voice control, image generation, and custom AI model training. SAM keeps your data on your Mac, supports multiple AI providers, and offers features for documents, creativity, writing, organization, learning, and more. It is privacy-focused, user-friendly, and accessible from various devices. SAM stands out with its privacy-first approach, intelligent memory, task execution capabilities, powerful tools, image generation features, custom AI model training, and flexible AI provider support.

talkcody

TalkCody is a free, open-source AI coding agent designed for developers who value speed, cost, control, and privacy. It offers true freedom to use any AI model without vendor lock-in, maximum speed through unique four-level parallelism, and complete privacy as everything runs locally without leaving the user's machine. With professional-grade features like multimodal input support, MCP server compatibility, and a marketplace for agents and skills, TalkCody aims to enhance development productivity and flexibility.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

refact-vscode

Refact.ai is an open-source AI coding assistant that boosts developer productivity. It supports 25+ programming languages and offers features like code completion, AI Toolbox for code explanation and refactoring, integrated in-IDE chat, and self-hosting or cloud version. The Enterprise plan provides enhanced customization, security, fine-tuning, user statistics, efficient inference, priority support, and access to 20+ LLMs for up to 50 engineers per GPU.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

BMAD-METHOD

BMAD-METHOD™ is a universal AI agent framework that revolutionizes Agile AI-Driven Development. It offers specialized AI expertise across various domains, including software development, entertainment, creative writing, business strategy, and personal wellness. The framework introduces two key innovations: Agentic Planning, where dedicated agents collaborate to create detailed specifications, and Context-Engineered Development, which ensures complete understanding and guidance for developers. BMAD-METHOD™ simplifies the development process by eliminating planning inconsistency and context loss, providing a seamless workflow for creating AI agents and expanding functionality through expansion packs.

RWKV_APP

RWKV App is an experimental application that enables users to run Large Language Models (LLMs) offline on their edge devices. It offers a privacy-first, on-device LLM experience for everyday devices. Users can engage in multi-turn conversations, text-to-speech, visual understanding, and more, all without requiring an internet connection. The app supports switching between different models, running locally without internet, and exploring various AI tasks such as chat, speech generation, and visual understanding. It is built using Flutter and Dart FFI for cross-platform compatibility and efficient communication with the C++ inference engine. The roadmap includes integrating features into the RWKV Chat app, supporting more model weights, hardware, operating systems, and devices.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

Skills-Manager

Skills Manager is a unified desktop application designed to centralize and manage AI coding assistant skills for tools like Claude Code, Codex, and Opencode. It offers smart synchronization, granular control, high performance, cross-platform support, multi-tool compatibility, custom tools integration, and a modern UI. Users can easily organize, sync, and share their skills across different AI tools, enhancing their coding experience and productivity.

For similar tasks

amurex

Amurex is a powerful AI meeting assistant that integrates seamlessly into your workflow. It ensures you never miss details, stay on top of action items, and make meetings more productive. With real-time suggestions, smart summaries, and follow-up emails, Amurex acts as your personal copilot. It is open-source, transparent, secure, and privacy-focused, providing a seamless AI-driven experience to take control of your meetings and focus on what truly matters.

hyprnote

Hyprnote is a local-first AI notepad designed for people in back-to-back meetings. It listens to your meetings while you write, crafts smart summaries based on your quick notes, and runs completely offline using open-source models like Whisper or HyprLLM. With Hyprnote, users can have full control over their notes as not a single byte of data leaves their laptop/server.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

For similar jobs

amurex

Amurex is a powerful AI meeting assistant that integrates seamlessly into your workflow. It ensures you never miss details, stay on top of action items, and make meetings more productive. With real-time suggestions, smart summaries, and follow-up emails, Amurex acts as your personal copilot. It is open-source, transparent, secure, and privacy-focused, providing a seamless AI-driven experience to take control of your meetings and focus on what truly matters.

hyprnote

Hyprnote is a local-first AI notepad designed for people in back-to-back meetings. It listens to your meetings while you write, crafts smart summaries based on your quick notes, and runs completely offline using open-source models like Whisper or HyprLLM. With Hyprnote, users can have full control over their notes as not a single byte of data leaves their laptop/server.

char

Char is an AI notetaking app designed for private meetings. It listens to meetings, crafts summaries, and can be used offline. Char captures details in real-time, offers customizable note templates, supports local language models, and integrates with various tools. It is suitable for taking notes during meetings, lectures, or organizing thoughts.

Omi

Omi is an open-source AI wearable that transforms the way conversations are captured and managed. By connecting Omi to your mobile device, you can effortlessly obtain high-quality transcriptions of meetings, chats, and voice memos on the go.

omi

Omi is an open-source AI wearable that provides automatic, high-quality transcriptions of meetings, chats, and voice memos. It revolutionizes how conversations are captured and managed by connecting to mobile devices. The tool offers features for seamless documentation and integration with third-party services.

stenoai

StenoAI is an AI-powered meeting intelligence tool that allows users to record, transcribe, summarize, and query meetings using local AI models. It prioritizes privacy by processing data entirely on the user's device. The tool offers multiple AI models optimized for different use cases, making it ideal for healthcare, legal, and finance professionals with confidential data needs. StenoAI also features a macOS desktop app with a user-friendly interface, making it convenient for users to access its functionalities. The project is open-source and not affiliated with any specific company, emphasizing its focus on meeting-notes productivity and community collaboration.

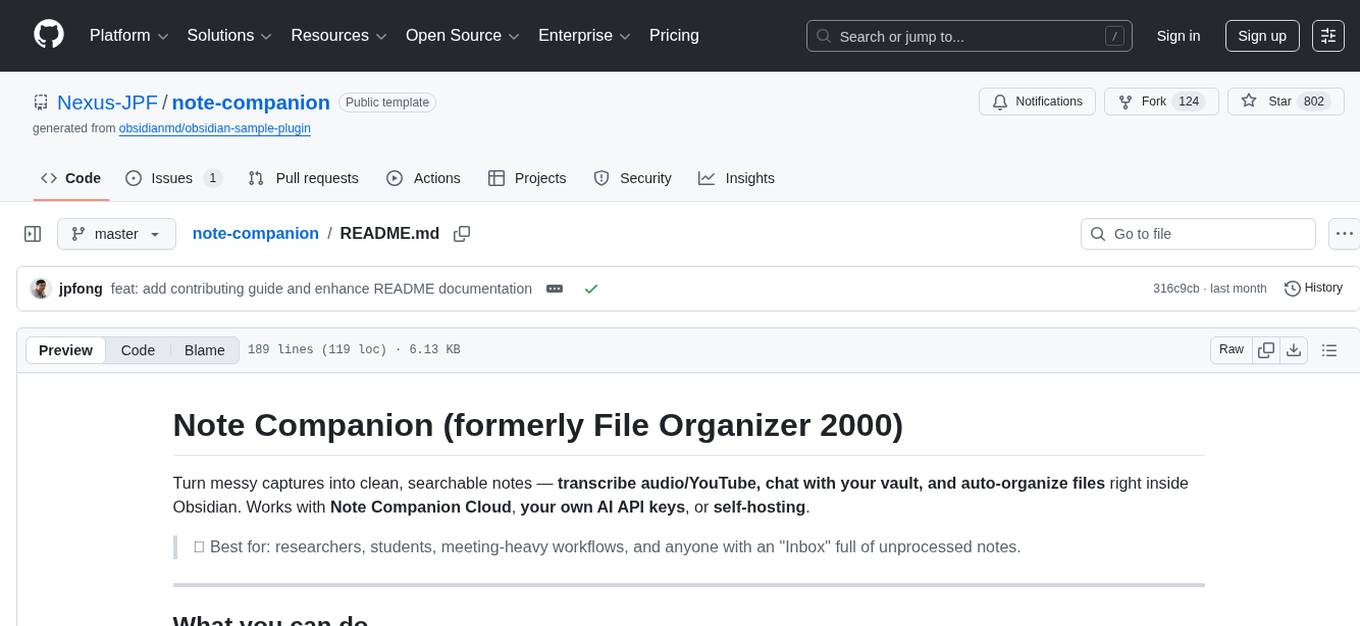

note-companion

Note Companion (formerly File Organizer 2000) helps users turn messy captures into clean, searchable notes by transcribing audio/YouTube, chatting with their vault, and auto-organizing files inside Obsidian. It works with Note Companion Cloud, user's own AI API keys, or self-hosting. The tool is best suited for researchers, students, meeting-heavy workflows, and individuals with an 'Inbox' full of unprocessed notes. Users can transcribe YouTube videos, transcribe audio & video files, chat with their notes using vault context, auto-organize & format notes, enhance meeting notes, work with multiple AI providers, and self-host the tool with Docker + service examples.