gateway

An MCP server for your database, optimized for LLMs and AI agents.

Stars: 210

CentralMind Gateway is an AI-first data gateway that securely connects any data source and automatically generates secure, LLM-optimized APIs. It filters out sensitive data, adds traceability, and optimizes for AI workloads. Suitable for companies deploying AI agents for customer support and analytics.

README:

🚀 Interactive Demo via GitHub Codespaces

A simple way to expose your database to AI agents via the MCP or OpenAPI 3.1 protocols.

docker run --platform linux/amd64 -p 9090:9090 \
  ghcr.io/centralmind/gateway:v0.2.6 start \
  --connection-string "postgres://db-user:db-password@db-host/db-name?sslmode=require"

This will run an API for you:

INFO Gateway server started successfully!

INFO MCP SSE server for AI agents is running at: http://localhost:9090/sse

INFO REST API with Swagger UI is available at: http://localhost:9090/

You can use these endpoints inside your AI agent.

Gateway generates an AI-optimized API.
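Passwords containing characters such as `@` or `/` must be percent-encoded before they go into a connection URI. The following is a minimal sketch, not part of Gateway itself, that builds the connection string and the quick-start `docker run` arguments; the helper names `build_connection_string` and `build_docker_command` are hypothetical, and the container port is assumed to stay at 9090 as in the command above.

```python
import shlex
from urllib.parse import quote


def build_connection_string(user, password, host, database, sslmode="require"):
    """Return a postgres:// URI with user and password percent-encoded."""
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}/{database}?sslmode={sslmode}"
    )


def build_docker_command(connection_string, host_port=9090, version="v0.2.6"):
    """Return the quick-start docker invocation as an argument list.

    Only flags that appear in the README command are used; the container
    side of the port mapping is assumed to be 9090.
    """
    return [
        "docker", "run", "--platform", "linux/amd64",
        "-p", f"{host_port}:9090",
        f"ghcr.io/centralmind/gateway:{version}", "start",
        "--connection-string", connection_string,
    ]


if __name__ == "__main__":
    conn = build_connection_string("db-user", "p@ss/word", "db-host", "db-name")
    # shlex.join quotes each argument so the line can be pasted into a shell.
    print(shlex.join(build_docker_command(conn)))
```

Passing the arguments as a list (for example to `subprocess.run`) avoids shell-quoting problems with the connection string.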

AI agents and LLM-powered applications need fast, secure access to data, but traditional APIs and databases aren't built for this purpose. We're building an API layer that automatically generates secure, LLM-optimized APIs for your structured data.

Our solution:

- Filters out PII and sensitive data to ensure compliance with GDPR, CPRA, SOC 2, and other regulations

- Adds traceability and auditing capabilities, ensuring AI applications aren't black boxes and security teams maintain control

- Optimizes for AI workloads, supporting Model Context Protocol (MCP) with enhanced meta information to help AI agents understand APIs, along with built-in caching and security features
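To make the PII filtering idea concrete, here is a minimal sketch of regex-based redaction applied to result rows before they reach a model. It is an illustration only and not Gateway's plugin code: the patterns, the `[REDACTED:...]` placeholder format, and the function names are all assumptions.

```python
import re

# Hypothetical patterns for illustration; real deployments need tuned rules.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_value(value):
    """Replace every PII match in a string; leave non-string values untouched."""
    if not isinstance(value, str):
        return value
    for label, pattern in PII_PATTERNS.items():
        value = pattern.sub(f"[REDACTED:{label}]", value)
    return value


def redact_row(row):
    """Return a copy of a result row (column -> value) with PII redacted."""
    return {column: redact_value(value) for column, value in row.items()}


if __name__ == "__main__":
    print(redact_row({"id": 7, "note": "Contact jane@example.com for details"}))
```

Regex rules are cheap and predictable but miss free-form PII such as names, which is where an NLP-based detector is the better fit.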

Our primary users are companies deploying AI agents for customer support and analytics, where models need to access data without direct SQL access to databases, eliminating security, compliance, and performance risks.

- ⚡ Automatic API Generation – Creates APIs automatically using LLM based on table schema and sampled data

- 🗄️ Structured Database Support – Supports PostgreSQL, MySQL, ClickHouse, Snowflake, MSSQL, BigQuery, Oracle Database, SQLite, ElasticSearch

- 🌍 Multiple Protocol Support – Provides APIs as REST or MCP Server including SSE mode

- 📜 API Documentation – Auto-generated Swagger documentation and OpenAPI 3.1.0 specification

- 🔒 PII Protection – Implements regex plugin or Microsoft Presidio plugin for PII and sensitive data redaction

- ⚡ Flexible Configuration – Easily extensible via YAML configuration and plugin system

- 🐳 Deployment Options – Run as a binary or Docker container with ready-to-use Helm chart

- 🤖 Multiple AI Providers Support - Support for OpenAI, Anthropic, Amazon Bedrock, Google Gemini & Google VertexAI

- 📦 Local & On-Premises – Support for self-hosted LLMs through configurable AI endpoints and models

- 🔑 Row-Level Security (RLS) – Fine-grained data access control using Lua scripts

- 🔐 Authentication Options – Built-in support for API keys and OAuth

- 👀 Comprehensive Monitoring – Integration with OpenTelemetry (OTel) for request tracking and audit trails

- 🏎️ Performance Optimization – Implements time-based and LRU caching strategies
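As a rough illustration of how time-based and LRU caching can be combined, the sketch below keeps at most `max_entries` results and drops any entry older than `ttl_seconds`. It is not Gateway's implementation; the class name and parameters are hypothetical.

```python
import time
from collections import OrderedDict


class TTLLRUCache:
    """Small cache with a time-to-live per entry and LRU eviction when full."""

    def __init__(self, max_entries=128, ttl_seconds=60.0, clock=time.monotonic):
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        """Return the cached value, or None on a miss or an expired entry."""
        item = self._entries.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        """Store a value and evict least recently used entries if over capacity."""
        self._entries[key] = (self._clock() + self._ttl, value)
        self._entries.move_to_end(key)
        while len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)
```

A query result could be cached under a key built from the endpoint path and its parameters. Note that this sketch cannot distinguish a cached `None` from a miss.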

Gateway connects to your structured databases, such as PostgreSQL, and automatically analyzes the schema and data samples to generate an optimized API structure based on your prompt. The LLM is used only during the discovery stage to produce the API configuration. The tool uses AI providers to generate the API configuration while ensuring security through PII detection.
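The discovery idea described above (read the schema, sample a few rows, hand both to an LLM) can be sketched as follows. This is an assumption-laden illustration using SQLite from the Python standard library, not Gateway's actual discovery code; `describe_tables` and `build_discovery_prompt` are hypothetical helpers, and no LLM is called.

```python
import sqlite3


def describe_tables(conn, sample_rows=3):
    """Collect column names, row counts, and a few sample rows per table."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' "
            "AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    summary = []
    for table in tables:
        # Quote the identifier; table names cannot be bound as parameters.
        quoted = '"' + table.replace('"', '""') + '"'
        columns = [row[1] for row in conn.execute(f"PRAGMA table_info({quoted})")]
        row_count = conn.execute(f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
        samples = conn.execute(
            f"SELECT * FROM {quoted} LIMIT ?", (sample_rows,)
        ).fetchall()
        summary.append(
            {"table": table, "columns": columns, "rows": row_count, "samples": samples}
        )
    return summary


def build_discovery_prompt(summary, user_prompt):
    """Render the schema summary as text that could accompany the user's prompt."""
    lines = [user_prompt, "", "Tables:"]
    for entry in summary:
        lines.append(
            f"- {entry['table']}: {len(entry['columns'])} columns, {entry['rows']} rows"
        )
        lines.append(f"  columns: {', '.join(entry['columns'])}")
        for sample in entry["samples"]:
            lines.append(f"  sample: {sample!r}")
    return "\n".join(lines)
```

In a setup like this, sampled rows would normally pass through PII redaction before being placed in a prompt.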

Gateway supports multiple deployment options, including a standalone binary, Docker, and Kubernetes. Check our launching guide for detailed instructions. The system uses YAML configuration and plugins for easy customization.

Access your data through REST APIs or Model Context Protocol (MCP) with built-in security features. Gateway integrates with AI models and applications such as LangChain, OpenAI, and Claude Desktop using function calling, or with Cursor through MCP. You can also set up telemetry to a local or remote destination in OpenTelemetry (OTel) format.
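For function calling, an agent framework needs each API method described as a tool with a JSON Schema for its arguments. The sketch below converts an endpoint description into the tool format used by OpenAI-style chat APIs. The endpoint fields `mcp_method` and `summary` mirror names from the generated configuration, while the shape of each entry under `params` (`name`, `type`, `required`) is an assumption made for this example.

```python
import json


def endpoint_to_tool(endpoint):
    """Convert an endpoint description into an OpenAI-style tool definition."""
    properties = {}
    required = []
    for param in endpoint.get("params", []):
        # The param layout here is hypothetical, chosen for illustration.
        properties[param["name"]] = {
            "type": param.get("type", "string"),
            "description": param.get("description", ""),
        }
        if param.get("required", False):
            required.append(param["name"])
    return {
        "type": "function",
        "function": {
            "name": endpoint["mcp_method"],
            "description": endpoint.get("summary", ""),
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


if __name__ == "__main__":
    example = {
        "mcp_method": "get_payment",
        "summary": "Fetch one payment method by id",
        "params": [{"name": "id", "type": "integer", "required": True}],
    }
    print(json.dumps(endpoint_to_tool(example), indent=2))
```

When the agent speaks MCP directly, this conversion is unnecessary because the server advertises its tools itself.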

# Clone the repository

git clone https://github.com/centralmind/gateway.git

# Navigate to project directory

cd gateway

# Install dependencies

go mod download

# Build the project

go build .

Gateway uses LLM models to generate your API configuration. Follow these steps:

- Choose one of our supported AI providers:

- OpenAI and all OpenAI-compatible providers

- Anthropic

- Amazon Bedrock

- Google Vertex AI (Anthropic)

- Google Gemini

Google Gemini provides a generous free tier. You can obtain an API key by visiting Google AI Studio:

Once logged in, you can create an API key in the API section of AI Studio. The free tier includes a generous monthly token allocation, making it accessible for development and testing purposes.

Configure AI provider authorization. For Google Gemini, set an API key.

export GEMINI_API_KEY='yourkey'

- Run the discovery command:

./gateway discover \
  --ai-provider gemini \
  --connection-string "postgresql://neondb_owner:MY_PASSWORD@MY_HOST.neon.tech/neondb?sslmode=require" \
  --prompt "Generate for me awesome readonly API"

- Monitor the generation process:

INFO 🚀 API Discovery Process

INFO Step 1: Read configs

INFO ✅ Step 1 completed. Done.

INFO Step 2: Discover data

INFO Discovered Tables:

INFO - payment_dim: 3 columns, 39 rows

INFO - fact_table: 9 columns, 1000000 rows

INFO ✅ Step 2 completed. Done.

# Additional steps and output...

INFO ✅ All steps completed. Done.

INFO --- Execution Statistics ---

INFO Total time taken: 1m10s

INFO Tokens used: 16543 (Estimated cost: $0.0616)

INFO Tables processed: 6

INFO API methods created: 18

INFO Total number of columns with PII data: 2

- Review the generated configuration in gateway.yaml:

api:
  name: Awesome Readonly API
  description: ''
  version: '1.0'
database:
  type: postgres
  connection: YOUR_CONNECTION_INFO
  tables:
    - name: payment_dim
      columns: # Table columns
      endpoints:
        - http_method: GET
          http_path: /some_path
          mcp_method: some_method
          summary: Some readable summary
          description: 'Some description'
          query: SQL Query with params
          params: # Query parameters

./gateway start --config gateway.yaml rest

docker compose -f ./example/simple/docker-compose.yml up

Gateway implements the MCP protocol for seamless integration with Claude and other tools. For detailed setup instructions, see our Claude integration guide.
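The Claude Desktop entry used in the steps that follow can also be generated by a small script, which makes it easy to emit absolute paths. This is a sketch under assumptions: the function name is hypothetical, and resolving to absolute paths is a precaution chosen here rather than a documented requirement.

```python
import json
from pathlib import Path


def claude_desktop_entry(gateway_binary, gateway_config, server_name="gateway"):
    """Build the mcpServers entry that launches Gateway in mcp-stdio mode."""
    binary = Path(gateway_binary).expanduser().resolve()
    config = Path(gateway_config).expanduser().resolve()
    return {
        "mcpServers": {
            server_name: {
                "command": str(binary),
                # Same arguments as in the README's configuration example.
                "args": ["start", "--config", str(config), "mcp-stdio"],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(claude_desktop_entry("./gateway", "./gateway.yaml"), indent=2))
```

The printed JSON can be merged into an existing Claude Desktop configuration file by hand.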

- Build the gateway binary:

go build .

- Add Gateway to the Claude Desktop tool configuration:

{
  "mcpServers": {
    "gateway": {
      "command": "PATH_TO_GATEWAY_BINARY",
      "args": ["start", "--config", "PATH_TO_GATEWAY_YAML_CONFIG", "mcp-stdio"]
    }
  }
}

The roadmap is always subject to change and will depend heavily on user feedback. At this moment, we are planning the following features:

- 🗄️ Extended Database Integrations - Redshift, S3 (Iceberg and Parquet), Oracle DB, Microsoft SQL Server, Elasticsearch

- 🔑 SSH tunneling - ability to use jumphost or ssh bastion to tunnel connections

- 🔍 Advanced Query Capabilities - Complex filtering syntax and Aggregation functions as parameters

- 🔐 Enhanced MCP Security - API key and OAuth authentication

- 📦 Schema Management - Automated schema evolution and API versioning

- 🚦 Advanced Traffic Management - Intelligent rate limiting, Request throttling

- ✍️ Write Operations Support - Insert, Update operations

Similar Open Source Tools

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

qwery-core

Qwery is a platform for querying and visualizing data using natural language without technical knowledge. It seamlessly integrates with various datasources, generates optimized queries, and delivers outcomes like result sets, dashboards, and APIs. Features include natural language querying, multi-database support, AI-powered agents, visual data apps, desktop & cloud options, template library, and extensibility through plugins. The project is under active development and not yet suitable for production use.

llmgateway

The llmgateway repository provides a gateway for interacting with various large language models (LLMs). It allows users to easily access and utilize pre-trained language models for tasks such as text generation, sentiment analysis, and language translation. The tool simplifies the process of integrating LLMs into applications and workflows, enabling developers to leverage state-of-the-art language models for various natural language processing tasks.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

fastapi-langgraph-agent-production-ready-template

A production-ready FastAPI template for building AI agent applications with LangGraph integration. This template provides a robust foundation for building scalable, secure, and maintainable AI agent services. It includes features like FastAPI for high-performance async API endpoints, LangGraph integration, structured logging, rate limiting, PostgreSQL for data persistence, Docker support, security measures like JWT-based authentication and input sanitization, developer-friendly features like environment-specific configuration and type hints, a model evaluation framework with automated metric-based evaluation and detailed JSON reports, and a configuration system with environment-specific settings.

chat-vue

Full-featured AI Chatbot Vue application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support, authentication via GitHub OAuth, chat history persistence, markdown rendering, and easy deployment to Vercel with zero configuration.

chat

Full-featured AI Chatbot Nuxt application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support via various AI providers, authentication via nuxt-auth-utils, chat history persistence using PostgreSQL database and Drizzle ORM, easy deploy to Vercel with zero configuration. The application is configured to use Vercel AI Gateway providing a unified API to access hundreds of AI models through a single endpoint with features like high reliability, spend monitoring, load balancing, and automatic retries and fallbacks between providers.

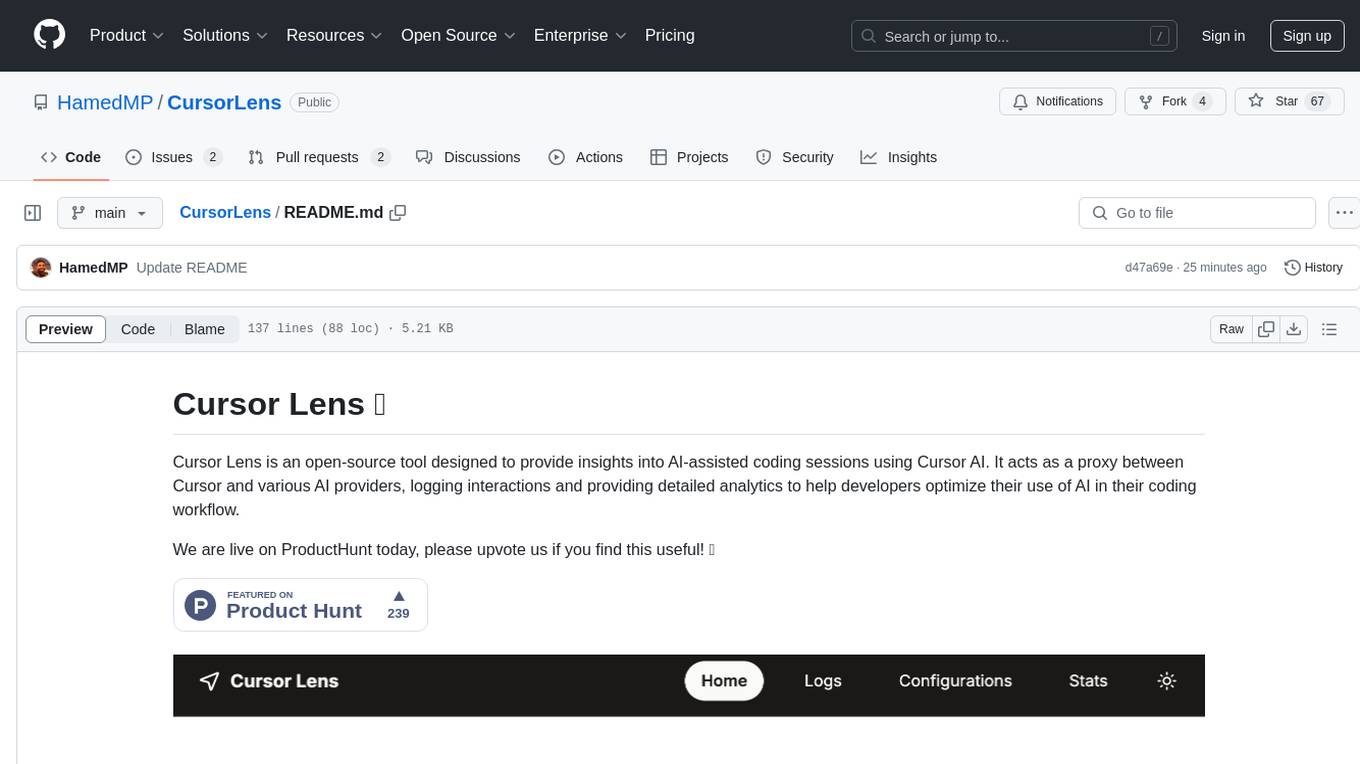

CursorLens

Cursor Lens is an open-source tool that acts as a proxy between Cursor and various AI providers, logging interactions and providing detailed analytics to help developers optimize their use of AI in their coding workflow. It supports multiple AI providers, captures and logs all requests, provides visual analytics on AI usage, allows users to set up and switch between different AI configurations, offers real-time monitoring of AI interactions, tracks token usage, estimates costs based on token usage and model pricing. Built with Next.js, React, PostgreSQL, Prisma ORM, Vercel AI SDK, Tailwind CSS, and shadcn/ui components.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

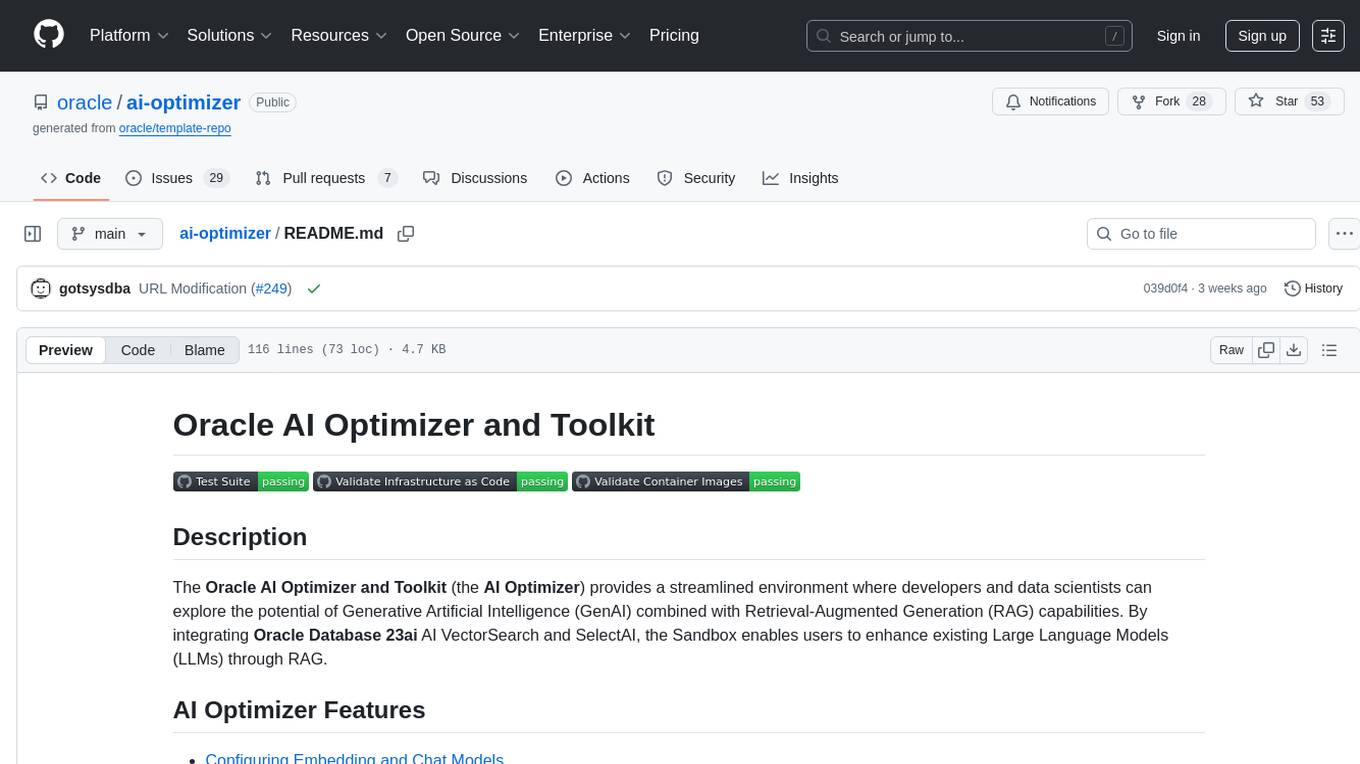

ai-optimizer

The Oracle AI Optimizer and Toolkit provides a streamlined environment for developers and data scientists to explore Generative Artificial Intelligence (GenAI) and Retrieval-Augmented Generation (RAG) capabilities. It integrates Oracle Database 23ai AI VectorSearch and SelectAI to enhance Large Language Models (LLMs) through RAG.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

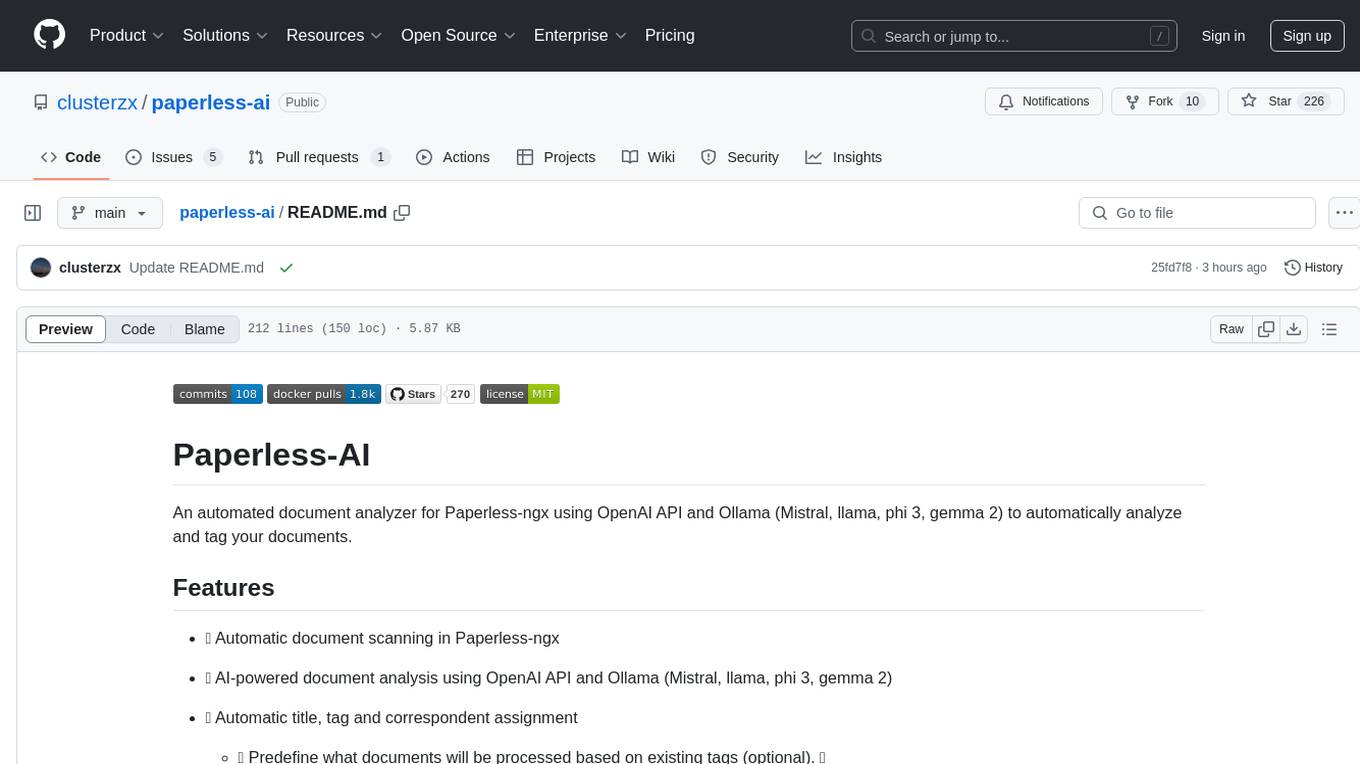

paperless-ai

Paperless-AI is an automated document analyzer tool designed for Paperless-ngx users. It utilizes the OpenAI API and Ollama (Mistral, llama, phi 3, gemma 2) to automatically scan, analyze, and tag documents. The tool offers features such as automatic document scanning, AI-powered document analysis, automatic title and tag assignment, manual mode for analyzing documents, easy setup through a web interface, document processing dashboard, error handling, and Docker support. Users can configure the tool through a web interface and access a debug interface for monitoring and troubleshooting. Paperless-AI aims to streamline document organization and analysis processes for users with access to Paperless-ngx and AI capabilities.

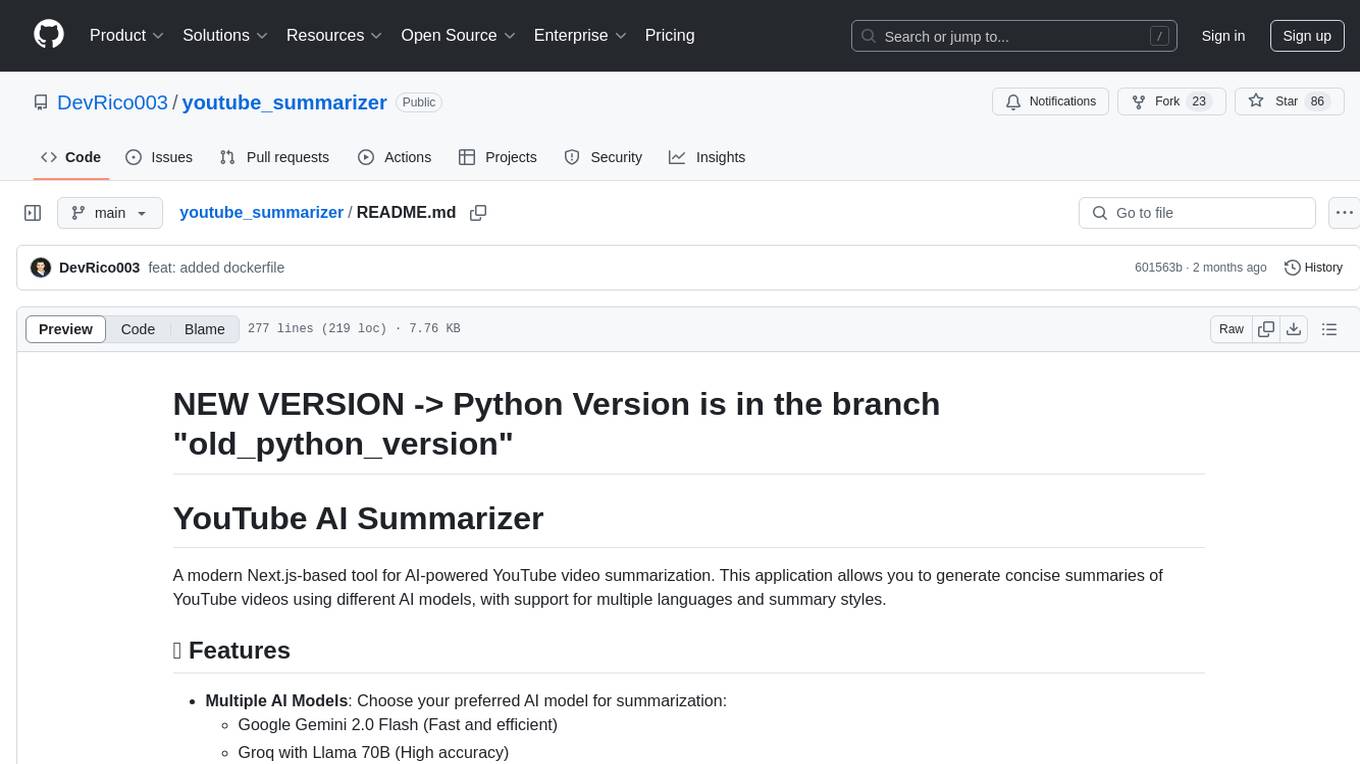

youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

enferno

Enferno is a modern Flask framework optimized for AI-assisted development workflows. It combines carefully crafted development patterns, smart Cursor Rules, and modern libraries to enable developers to build sophisticated web applications with unprecedented speed. Enferno's intelligent patterns and contextual guides help create production-ready SAAS applications faster than ever. It includes features like modern stack, authentication, OAuth integration, database support, task queue, frontend components, security measures, Docker readiness, and more.

For similar tasks

db2rest

DB2Rest is a modern low-code REST data API platform that enables the rapid development of intelligent applications by combining databases, language models, and vector stores. It facilitates context-aware, reasoning applications without vendor lock-in. The tool accelerates application delivery, fosters faster innovation with AI, serves as a secure database gateway, and simplifies integration. It supports various databases like PostgreSQL, MySQL, MS SQL Server, Oracle, MongoDB, and more, with planned support for additional databases. Users can connect on Discord for support or contact the maintainers by email for inquiries.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

dreamfactory

DreamFactory is a self-hosted platform that provides governed API access to any data source for enterprise apps and local LLMs. It is a secure enterprise data access platform built on top of the Laravel framework, offering role-based access control, identity passthrough, and customization of API behavior using PHP, Python, and NodeJS scripting languages. DreamFactory allows users to generate powerful APIs for SQL and NoSQL databases, files, email, and push notifications in seconds, while ensuring security with features like user management, SSO authentication, OAuth, and Active Directory integration.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.