Archon

Beta release of Archon OS - the knowledge and task management backbone for AI coding assistants.

Stars: 12193

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

README:

Power up your AI coding assistants with your own custom knowledge base and task management as an MCP server


Archon is currently in beta! Expect some things not to work perfectly, and please share feedback and contribute fixes or new features. Thank you to everyone for the excitement around Archon so far, as well as the bug reports, PRs, and discussions. It's a lot for our small team to get through, but we're committed to addressing everything and making Archon the best tool it can be!

Archon is the command center for AI coding assistants. For you, it's a sleek interface to manage knowledge, context, and tasks for your projects. For the AI coding assistant(s), it's a Model Context Protocol (MCP) server to collaborate on and leverage the same knowledge, context, and tasks. Connect Claude Code, Kiro, Cursor, Windsurf, etc. to give your AI agents access to:

- Your documentation (crawled websites, uploaded PDFs/docs)

- Smart search capabilities with advanced RAG strategies

- Task management integrated with your knowledge base

- Real-time updates as you add new content and collaborate with your coding assistant on tasks

- Much more coming soon to build Archon into an integrated environment for all context engineering

This new vision for Archon replaces the old one (the agenteer). Archon used to be the AI agent that builds other agents, and now you can use Archon to do that and more.

It doesn't matter what you're building or whether it's a new or existing codebase - Archon's knowledge and task management capabilities will improve the output of any AI-driven coding.

- GitHub Discussions - Join the conversation and share ideas about Archon

- Contributing Guide - How to get involved and contribute to Archon

- Introduction Video - Getting started guide and vision for Archon

- Archon Kanban Board - Where maintainers are managing issues/features

- Dynamous AI Mastery - The birthplace of Archon - come join a vibrant community of other early AI adopters all helping each other transform their careers and businesses!

- Docker Desktop

- Node.js 18+ (for hybrid development mode)

- Supabase account (free tier or local Supabase both work)

- OpenAI API key (Gemini and Ollama are supported too!)

- (OPTIONAL) Make (see Installing Make below)

- Clone Repository:

git clone -b stable https://github.com/coleam00/archon.git

cd archon

Note: The stable branch is recommended for using Archon. If you want to contribute or try the latest features, use the main branch with git clone https://github.com/coleam00/archon.git

- Environment Configuration:

cp .env.example .env

# Edit .env and add your Supabase credentials:
# SUPABASE_URL=https://your-project.supabase.co
# SUPABASE_SERVICE_KEY=your-service-key-here

IMPORTANT NOTES:

- For cloud Supabase: Supabase recently introduced a new type of service role key; use the legacy key (the longer one) instead.

- For local Supabase: set SUPABASE_URL to http://host.docker.internal:8000 (unless you have an IP address set up).

- Database Setup: In your Supabase project SQL Editor, copy, paste, and execute the contents of migration/complete_setup.sql

- Start Services (choose one):

Full Docker Mode (Recommended for Normal Archon Usage)

docker compose up --build -d

This starts all core microservices in Docker:

- Server: Core API and business logic (Port: 8181)

- MCP Server: Protocol interface for AI clients (Port: 8051)

- UI: Web interface (Port: 3737)

Ports are configurable in your .env as well!

- Configure API Keys:

- Open http://localhost:3737

- You'll automatically be brought through an onboarding flow to set your API key (OpenAI is default)
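Before starting the services, the .env values from the Environment Configuration step can be sanity-checked. The following is a minimal sketch and not part of Archon: the helper names `parse_env` and `missing_supabase_settings` are hypothetical, the parser only understands simple KEY=VALUE lines, and it treats the placeholder values from the example above as not filled in.

```python
REQUIRED_KEYS = ("SUPABASE_URL", "SUPABASE_SERVICE_KEY")

# Placeholder values copied from the setup example; treated as "not filled in".
PLACEHOLDERS = {"https://your-project.supabase.co", "your-service-key-here"}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop a trailing " # comment" and surrounding quotes, if any.
        value = value.split(" #", 1)[0].strip().strip('"').strip("'")
        values[key.strip()] = value
    return values


def missing_supabase_settings(text: str) -> list[str]:
    """Return the required keys that are absent, empty, or still placeholders."""
    env = parse_env(text)
    return [
        key for key in REQUIRED_KEYS
        if not env.get(key) or env[key] in PLACEHOLDERS
    ]
```

For example, `missing_supabase_settings(open(".env").read())` returns an empty list once both values are set. It does not check whether the key is the legacy service role key.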

Once everything is running:

- Test Web Crawling: Go to http://localhost:3737 → Knowledge Base → "Crawl Website" → Enter a doc URL (such as https://ai.pydantic.dev/llms-full.txt)

- Test Document Upload: Knowledge Base → Upload a PDF

- Test Projects: Projects → Create a new project and add tasks

- Integrate with your AI coding assistant: MCP Dashboard → Copy connection config for your AI coding assistant

🛠️ Make installation (OPTIONAL - For Dev Workflows)

Windows:

# Option 1: Using Chocolatey
choco install make

# Option 2: Using Scoop
scoop install make

# Option 3: Using WSL2
wsl --install
# Then in WSL: sudo apt-get install make

macOS:

# Make comes pre-installed on macOS
# If needed: brew install make

Linux:

# Debian/Ubuntu
sudo apt-get install make

# RHEL/CentOS/Fedora
sudo yum install make

🚀 Quick Command Reference for Make

| Command | Description |
|---|---|
| make dev | Start hybrid dev (backend in Docker, frontend local) ⭐ |
| make dev-docker | Everything in Docker |
| make stop | Stop all services |
| make test | Run all tests |
| make lint | Run linters |
| make install | Install dependencies |
| make check | Check environment setup |
| make clean | Remove containers and volumes (with confirmation) |

If you need to completely reset your database and start fresh:

⚠️ Reset Database - This will delete ALL data for Archon!

- Run Reset Script: In your Supabase SQL Editor, run the contents of migration/RESET_DB.sql

⚠️ WARNING: This will delete all Archon specific tables and data! Nothing else will be touched in your DB though.

- Rebuild Database: After reset, run migration/complete_setup.sql to create all the tables again.

- Restart Services:

docker compose --profile full up -d

- Reconfigure:

- Select your LLM/embedding provider and set the API key again

- Re-upload any documents or re-crawl websites

The reset script safely removes all tables, functions, triggers, and policies with proper dependency handling.

| Service | Container Name | Default URL | Purpose |

|---|---|---|---|

| Web Interface | archon-ui | http://localhost:3737 | Main dashboard and controls |

| API Service | archon-server | http://localhost:8181 | Web crawling, document processing |

| MCP Server | archon-mcp | http://localhost:8051 | Model Context Protocol interface |

| Agents Service | archon-agents | http://localhost:8052 | AI/ML operations, reranking |

To upgrade Archon to the latest version:

- Pull latest changes:

git pull

- Check for migrations: Look in the migration/ folder for any SQL files newer than your last update. Check the file creation dates to determine whether you need to run them. You can run these in the SQL editor just like you did when you first set up Archon. We are also working on a way to make handling these migrations automatic!

- Rebuild and restart:

docker compose up -d --build

This is the same command used for initial setup - it rebuilds containers with the latest code and restarts services.
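The migration check in the upgrade steps can be partly scripted. The sketch below is an illustration under stated assumptions, not an Archon tool: the function name `migrations_since` is hypothetical, and it compares file modification times, which may not match the "created" date on every filesystem. After a git pull, modification times usually reflect when the pull wrote the file, so the cutoff should be the time of your previous update.

```python
from datetime import datetime
from pathlib import Path


def migrations_since(folder: Path, last_update: datetime) -> list[Path]:
    """Return .sql files in `folder` modified after `last_update`, oldest first."""
    cutoff = last_update.timestamp()
    newer = [p for p in folder.glob("*.sql") if p.stat().st_mtime > cutoff]
    return sorted(newer, key=lambda p: p.stat().st_mtime)
```

For example, `migrations_since(Path("migration"), datetime(2025, 1, 1))` lists the SQL files touched since that (arbitrary, illustrative) date; each one still has to be reviewed and run manually in the SQL editor.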

- Smart Web Crawling: Automatically detects and crawls entire documentation sites, sitemaps, and individual pages

- Document Processing: Upload and process PDFs, Word docs, markdown files, and text documents with intelligent chunking

- Code Example Extraction: Automatically identifies and indexes code examples from documentation for enhanced search

- Vector Search: Advanced semantic search with contextual embeddings for precise knowledge retrieval

- Source Management: Organize knowledge by source, type, and tags for easy filtering

- Model Context Protocol (MCP): Connect any MCP-compatible client (Claude Code, Cursor, even AI assistants that are not coding-focused, like Claude Desktop)

- MCP Tools: Comprehensive yet simple set of tools for RAG queries, task management, and project operations

- Multi-LLM Support: Works with OpenAI, Ollama, and Google Gemini models

- RAG Strategies: Hybrid search, contextual embeddings, and result reranking for optimal AI responses

- Real-time Streaming: Live responses from AI agents with progress tracking

- Hierarchical Projects: Organize work with projects, features, and tasks in a structured workflow

- AI-Assisted Creation: Generate project requirements and tasks using integrated AI agents

- Document Management: Version-controlled documents with collaborative editing capabilities

- Progress Tracking: Real-time updates and status management across all project activities

- WebSocket Updates: Live progress tracking for crawling, processing, and AI operations

- Multi-user Support: Collaborative knowledge building and project management

- Background Processing: Asynchronous operations that don't block the user interface

- Health Monitoring: Built-in service health checks and automatic reconnection

Archon uses a true microservices architecture with clear separation of concerns:

┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐
│   Frontend UI   │    │  Server (API)   │    │   MCP Server    │    │ Agents Service  │
│                 │    │                 │    │                 │    │                 │
│  React + Vite   │◄──►│    FastAPI +    │◄──►│   Lightweight   │◄──►│   PydanticAI    │
│  Port 3737      │    │    SocketIO     │    │  HTTP Wrapper   │    │   Port 8052     │
│                 │    │    Port 8181    │    │    Port 8051    │    │                 │
└─────────────────┘    └─────────────────┘    └─────────────────┘    └─────────────────┘
         │                        │                        │                        │
         └────────────────────────┼────────────────────────┼────────────────────────┘
                                  │                        │
                         ┌─────────────────┐               │
                         │    Database     │               │
                         │                 │               │
                         │    Supabase     │◄──────────────┘
                         │   PostgreSQL    │
                         │    PGVector     │
                         └─────────────────┘

| Service | Location | Purpose | Key Features |
|---|---|---|---|
| Frontend | archon-ui-main/ | Web interface and dashboard | React, TypeScript, TailwindCSS, Socket.IO client |
| Server | python/src/server/ | Core business logic and APIs | FastAPI, service layer, Socket.IO broadcasts, all ML/AI operations |
| MCP Server | python/src/mcp/ | MCP protocol interface | Lightweight HTTP wrapper, MCP tools, session management |
| Agents | python/src/agents/ | PydanticAI agent hosting | Document and RAG agents, streaming responses |

- HTTP-based: All inter-service communication uses HTTP APIs

- Socket.IO: Real-time updates from Server to Frontend

- MCP Protocol: AI clients connect to MCP Server via SSE or stdio

- No Direct Imports: Services are truly independent with no shared code dependencies

- Lightweight Containers: Each service contains only required dependencies

- Independent Scaling: Services can be scaled independently based on load

- Development Flexibility: Teams can work on different services without conflicts

- Technology Diversity: Each service uses the best tools for its specific purpose

By default, Archon services run on the following ports:

- archon-ui: 3737

- archon-server: 8181

- archon-mcp: 8051

- archon-agents: 8052

- archon-docs: 3838 (optional)
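If you are unsure whether these ports are free before starting Archon, a quick local check can help. This is a minimal sketch rather than anything shipped with Archon: `port_in_use` and `busy_ports` are hypothetical helper names, and the check only attempts a TCP connection on 127.0.0.1, so it will not notice a service bound to another interface.

```python
import socket

# Default ports from the list above.
DEFAULT_PORTS = {
    "archon-ui": 3737,
    "archon-server": 8181,
    "archon-mcp": 8051,
    "archon-agents": 8052,
    "archon-docs": 3838,
}


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


def busy_ports(ports: dict[str, int]) -> dict[str, int]:
    """Return the subset of `ports` that is already occupied."""
    return {name: port for name, port in ports.items() if port_in_use(port)}
```

Calling `busy_ports(DEFAULT_PORTS)` before the first start returns the services whose default port would collide; once Archon is running, its own containers will show up as busy.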

To use custom ports, add these variables to your .env file:

# Service Ports Configuration

ARCHON_UI_PORT=3737

ARCHON_SERVER_PORT=8181

ARCHON_MCP_PORT=8051

ARCHON_AGENTS_PORT=8052

ARCHON_DOCS_PORT=3838

Example: Running on different ports:

ARCHON_SERVER_PORT=8282

ARCHON_MCP_PORT=8151

By default, Archon uses localhost as the hostname. You can configure a custom hostname or IP address by setting the HOST variable in your .env file:

# Hostname Configuration

HOST=localhost # Default

# Examples of custom hostnames:

HOST=192.168.1.100 # Use specific IP address

HOST=archon.local # Use custom domain

HOST=myserver.com # Use public domain

This is useful when:

- Running Archon on a different machine and accessing it remotely

- Using a custom domain name for your installation

- Deploying in a network environment where localhost isn't accessible

After changing hostname or ports:

- Restart Docker containers: docker compose down && docker compose --profile full up -d

- Access the UI at: http://${HOST}:${ARCHON_UI_PORT}

- Update your AI client configuration with the new hostname and MCP port
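To see which URLs a given HOST and port configuration produces, the substitution above can be sketched in a few lines. This is an illustrative helper, not Archon code: `service_urls` is a hypothetical name, the defaults are the ones listed in this README, and the `http://` scheme is assumed throughout.

```python
from collections.abc import Mapping

# Defaults documented above; keys are the .env variable names.
DEFAULT_PORTS = {
    "ARCHON_UI_PORT": 3737,
    "ARCHON_SERVER_PORT": 8181,
    "ARCHON_MCP_PORT": 8051,
    "ARCHON_AGENTS_PORT": 8052,
}


def service_urls(env: Mapping[str, str]) -> dict[str, str]:
    """Build one URL per service from HOST and the ARCHON_*_PORT variables."""
    host = env.get("HOST", "localhost")
    return {
        name: f"http://{host}:{int(env.get(name, default))}"
        for name, default in DEFAULT_PORTS.items()
    }
```

Passing `os.environ` (or a parsed .env) gives the addresses to open in the browser and to put into your AI client configuration, for example `service_urls({"HOST": "archon.local"})["ARCHON_UI_PORT"]`.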

# Install dependencies

make install

# Start development (recommended)

make dev # Backend in Docker, frontend local with hot reload

# Alternative: Everything in Docker

make dev-docker # All services in Docker

# Stop everything (local FE needs to be stopped manually)

make stop

Hybrid mode (make dev) is best for active development with instant frontend updates:

- Backend services run in Docker (isolated, consistent)

- Frontend runs locally with hot module replacement

- Instant UI updates without Docker rebuilds

Full Docker mode (make dev-docker) runs all services in a Docker environment:

- All services run in Docker containers

- Better for integration testing

- Slower frontend updates

# Run tests

make test # Run all tests

make test-fe # Run frontend tests

make test-be # Run backend tests

# Run linters

make lint # Lint all code

make lint-fe # Lint frontend code

make lint-be # Lint backend code

# Check environment

make check # Verify environment setup

# Clean up

make clean # Remove containers and volumes (asks for confirmation)

# View logs using Docker Compose directly

docker compose logs -f # All services

docker compose logs -f archon-server # API server

docker compose logs -f archon-mcp # MCP server

docker compose logs -f archon-ui # Frontend

Note: The backend services are configured with the --reload flag in their uvicorn commands and have source code mounted as volumes for automatic hot reloading when you make changes.

If you see "Port already in use" errors:

# Check what's using a port (e.g., 3737)

lsof -i :3737

# Stop all containers and local services

make stop

# Change the port in .env

If you encounter permission errors with Docker:

# Add your user to the docker group

sudo usermod -aG docker $USER

# Log out and back in, or run

newgrp docker

- Make not found: Install Make via Chocolatey, Scoop, or WSL2 (see Installing Make)

- Line ending issues: Configure Git to use LF endings:

git config --global core.autocrlf false

- Check backend is running: curl http://localhost:8181/health

- Verify port configuration in .env

- For custom ports, ensure both ARCHON_SERVER_PORT and VITE_ARCHON_SERVER_PORT are set
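The curl check above can also be scripted, for example to wait for the backend in a setup script. The following is a rough sketch using only the standard library: `backend_is_healthy` is a hypothetical name, and it only looks at the HTTP status code because the format of the /health response body is not described here.

```python
import http.client
import urllib.request


def backend_is_healthy(
    url: str = "http://localhost:8181/health", timeout: float = 3.0
) -> bool:
    """Return True if `url` answers with an HTTP 2xx status within `timeout`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers connection refused, timeouts, and non-2xx responses
        # (urllib raises HTTPError, a subclass of OSError, for 4xx/5xx).
        return False
```

If you changed ARCHON_SERVER_PORT, pass the adjusted URL explicitly, for example `backend_is_healthy("http://localhost:8282/health")`.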

If docker compose commands hang:

# Reset Docker Compose

docker compose down --remove-orphans

docker system prune -f

# Restart Docker Desktop (if applicable)

- Frontend: Ensure you're running in hybrid mode (make dev) for best HMR experience

- Backend: Check that volumes are mounted correctly in docker-compose.yml

- File permissions: On some systems, mounted volumes may have permission issues

Archon Community License (ACL) v1.2 - see LICENSE file for details.

TL;DR: Archon is free, open, and hackable. Run it, fork it, share it - just don't sell it as-a-service without permission.


Similar Open Source Tools


tandem

Tandem is a local-first, privacy-focused AI workspace that runs entirely on your machine. It is inspired by early AI coworking research previews, open source, and provider-agnostic. Tandem offers privacy-first operation, provider agnosticism, zero trust model, true cross-platform support, open-source licensing, modern stack, and developer superpowers for everyone. It provides folder-wide intelligence, multi-step automation, visual change review, complete undo, zero telemetry, provider freedom, secure design, cross-platform support, visual permissions, full undo, long-term memory, skills system, document text extraction, workspace Python venv, rich themes, execution planning, auto-updates, multiple specialized agent modes, multi-agent orchestration, project management, and various artifacts and outputs.

tambourine-voice

Tambourine is a personal voice interface tool that allows users to speak naturally and have their words appear wherever the cursor is. It is powered by customizable AI voice dictation, providing a universal voice-to-text interface for emails, messages, documents, code editors, and terminals. Users can capture ideas quickly, type at the speed of thought, and benefit from AI formatting that cleans up speech, adds punctuation, and applies personal dictionaries. Tambourine offers full control and transparency, with the ability to customize AI providers, formatting, and extensions. The tool supports dual-mode recording, real-time speech-to-text, LLM text formatting, context-aware formatting, customizable prompts, and more, making it a versatile solution for dictation and transcription tasks.

vibe-remote

Vibe Remote is a tool that allows developers to code using AI agents through Slack or Discord, eliminating the need for a laptop or IDE. It provides a seamless experience for coding tasks, enabling users to interact with AI agents in real-time, delegate tasks, and monitor progress. The tool supports multiple coding agents, offers a setup wizard for easy installation, and ensures security by running locally on the user's machine. Vibe Remote enhances productivity by reducing context-switching and enabling parallel task execution within isolated workspaces.

multi-agent-shogun

multi-agent-shogun is a system that runs multiple AI coding CLI instances simultaneously, orchestrating them like a feudal Japanese army. It supports Claude Code, OpenAI Codex, GitHub Copilot, and Kimi Code. The system allows you to command your AI army with zero coordination cost, enabling parallel execution, non-blocking workflow, cross-session memory, event-driven communication, and full transparency. It also features skills discovery, phone notifications, pane border task display, shout mode, and multi-CLI support.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

specweave

SpecWeave is a spec-driven Skill Fabric for AI coding agents that allows programming AI in English. It provides first-class support for Claude Code and offers reusable logic for controlling AI behavior. With over 100 skills out of the box, SpecWeave eliminates the need to learn Claude Code docs and handles various aspects of feature development. The tool enables users to describe what they want, and SpecWeave autonomously executes tasks, including writing code, running tests, and syncing to GitHub/JIRA. It supports solo developers, agent teams working in parallel, and brownfield projects, offering file-based coordination, autonomous teams, and enterprise-ready features. SpecWeave also integrates LSP Code Intelligence for semantic understanding of codebases and allows for extensible skills without forking.

mxcp

MXCP is an enterprise-grade MCP framework for building production-ready AI applications. It provides a structured methodology for data modeling, service design, smart implementation, quality assurance, and production operations. With built-in enterprise features like security, audit trail, type safety, testing framework, performance optimization, and drift detection, MXCP ensures comprehensive security, quality, and operations. The tool supports SQL for data queries and Python for complex logic, ML models, and integrations, allowing users to choose the right tool for each job while maintaining security and governance. MXCP's architecture includes LLM client, MXCP framework, implementations, security & policies, SQL endpoints, Python tools, type system, audit engine, validation & tests, data sources, and APIs. The tool enforces an organized project structure and offers CLI commands for initialization, quality assurance, data management, operations & monitoring, and LLM integration. MXCP is compatible with Claude Desktop, OpenAI-compatible tools, and custom integrations through the Model Context Protocol (MCP) specification. The tool is developed by RAW Labs for production data-to-AI workflows and is released under the Business Source License 1.1 (BSL), with commercial licensing required for certain production scenarios.

OpenOutreach

OpenOutreach is a self-hosted, open-source LinkedIn automation tool designed for B2B lead generation. It automates the entire outreach process in a stealthy, human-like way by discovering and enriching target profiles, ranking profiles using ML for smart prioritization, sending personalized connection requests, following up with custom messages after acceptance, and tracking everything in a built-in CRM with web UI. It offers features like undetectable behavior, fully customizable Python-based campaigns, local execution with CRM, easy deployment with Docker, and AI-ready templating for hyper-personalized messages.

kiss_ai

KISS AI is a lightweight and powerful multi-agent evolutionary framework that simplifies building AI agents. It uses native function calling for efficiency and accuracy, making building AI agents as straightforward as possible. The framework includes features like multi-agent orchestration, agent evolution and optimization, relentless coding agent for long-running tasks, output formatting, trajectory saving and visualization, GEPA for prompt optimization, KISSEvolve for algorithm discovery, self-evolving multi-agent, Docker integration, multiprocessing support, and support for various models from OpenAI, Anthropic, Gemini, Together AI, and OpenRouter.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

Project-AI-MemoryCore

AI MemoryCore is a universal AI memory architecture that helps create AI companions maintaining memory across conversations. It offers persistent memory, personal learning, time intelligence, simple setup, markdown database, session continuity, and self-maintaining features. The system uses markdown files as a database and includes core components like Master Memory, Identity Core, Relationship Memory, Current Session, Daily Diary, and Save Protocol. Users can set up, configure, activate, and use the AI for learning and growth through conversation. The tool supports basic commands, custom protocols, effective AI training, memory management, and customization tips. Common use cases include professional, educational, creative, personal, and technical tasks. Advanced features include auto-archive, session RAM, protocol system, self-update, and modular design. Available feature extensions cover time-based aware system, LRU project management system, memory consolidation system, and skill plugin system.

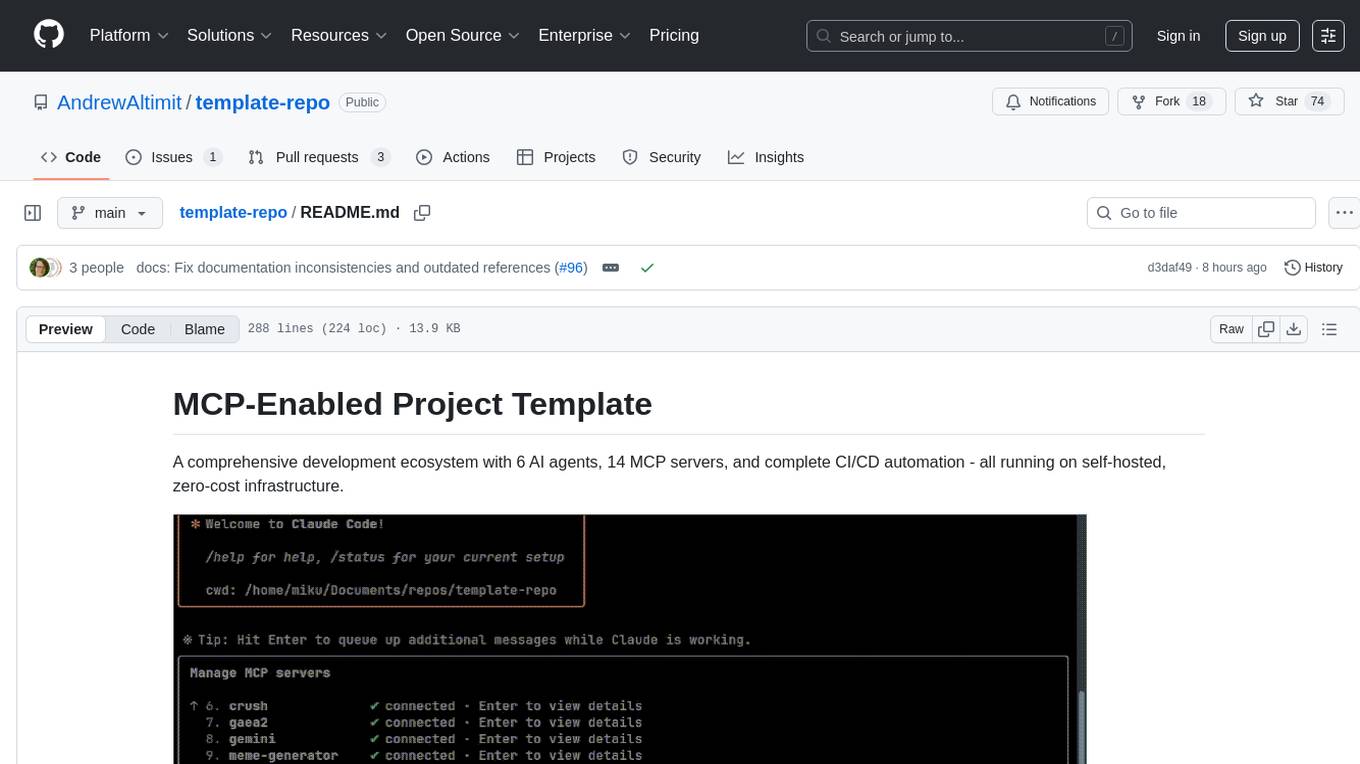

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

BioAgents

BioAgents AgentKit is an advanced AI agent framework tailored for biological and scientific research. It offers powerful conversational AI capabilities with specialized knowledge in biology, life sciences, and scientific research methodologies. The framework includes state-of-the-art analysis agents, configurable research agents, and a variety of specialized agents for tasks such as file parsing, research planning, literature search, data analysis, hypothesis generation, research reflection, and user-facing responses. BioAgents also provides support for LLM libraries, multiple search backends for literature agents, and two backends for data analysis. The project structure includes backend source code, services for chat, job queue system, real-time notifications, and JWT authentication, as well as a frontend UI built with Preact.

Call

Call is an open-source AI-native alternative to Google Meet and Zoom, offering video calling, team collaboration, contact management, meeting scheduling, AI-powered features, security, and privacy. It is cross-platform, web-based, mobile responsive, and supports offline capabilities. The tech stack includes Next.js, TypeScript, Tailwind CSS, Mediasoup-SFU, React Query, Zustand, Hono, PostgreSQL, Drizzle ORM, Better Auth, Turborepo, Docker, Vercel, and Rate Limiting.

simili-bot

Simili Bot is an AI-powered tool designed for GitHub repositories to automatically detect duplicate issues, find similar issues using semantic search, and intelligently route issues across repositories. It offers features such as semantic duplicate detection, cross-repository search, intelligent routing, smart triage, modular pipeline customization, and multi-repo support. The tool follows a 'Lego with Blueprints' architecture, with Lego Blocks representing independent pipeline steps and Blueprints providing pre-defined workflows. Users can configure AI providers like Gemini and OpenAI, set default models for embeddings, and specify workflows in a 'simili.yaml' file. Simili Bot also offers CLI commands for bulk indexing, processing single issues, and batch operations, enabling local development, testing, and analysis of historical data.

For similar tasks

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or utilize tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM model. The framework has been accepted by ICML2024 and offers advanced features for experimentation and customization.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

LongRoPE

LongRoPE is a method to extend the context window of large language models (LLMs) beyond 2 million tokens. It identifies and exploits non-uniformities in positional embeddings to enable 8x context extension without fine-tuning. The method utilizes a progressive extension strategy with 256k fine-tuning to reach a 2048k context. It adjusts embeddings for shorter contexts to maintain performance within the original window size. LongRoPE has been shown to be effective in maintaining performance across various tasks from 4k to 2048k context lengths.

ax

Ax is a Typescript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in Typescript, and has zero dependencies.

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (Vtubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt and provides a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. It integrates with bilibili live streams to reply to bullet comments and to greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expression control through Vtuber Studio. For images, it supports drawing with stable-diffusion-webui with output to the OBS live room, NSFW detection on generated pictures through public-NSFW-y-distinguish, web and image search through duckduckgo (requires a VPN or proxy), and image search through Baidu image search (no VPN or proxy required). It also supports an AI reply chat box [html plug-in], AI singing through Auto-Convert-Music, and a playlist [html plug-in]. Avatar actions include dancing, expression video playback, head touching, gift smashing, automatic dancing when singing starts, and an automatic looping sway during chat and singing. Scene features include multi-scene switching, background music switching, and automatic day and night scene switching. Open-ended singing and drawing requests are supported, with the AI judging the content automatically.