langmanus

A community-driven AI automation framework that builds upon the incredible work of the open source community. Our goal is to combine language models with specialized tools for tasks like web search, crawling, and Python code execution, while giving back to the community that made this possible.

Stars: 4900

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

README:

Come From Open Source, Back to Open Source

Task: Calculate the influence index of DeepSeek R1 on HuggingFace. This index can be designed using a weighted sum of factors such as followers, downloads, and likes.

LangManus's Fully Automated Plan and Solution:

- Gather the latest information about "DeepSeek R1", "HuggingFace", and related topics through online searches.

- Interact with a Chromium instance to visit the HuggingFace official website, search for "DeepSeek R1" and retrieve the latest data, including followers, likes, downloads, and other relevant metrics.

- Find formulas for calculating model influence using search engines and web scraping.

- Use Python to compute the influence index of DeepSeek R1 based on the collected data.

- Present a comprehensive report to the user.
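The "weighted sum" mentioned in the task can be sketched in a few lines of Python. This is only an illustration of the idea: the `ModelStats` class, the `influence_index` function, the weights, and the sample numbers are all hypothetical, since the README does not say which formula or data LangManus actually ends up with.

```python
from dataclasses import dataclass


@dataclass
class ModelStats:
    followers: int
    downloads: int
    likes: int


# Hypothetical weights; the README only says "a weighted sum", not which weights.
WEIGHTS = {"followers": 0.3, "downloads": 0.5, "likes": 0.2}


def influence_index(stats: ModelStats, weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted sum of the raw metrics named in `weights`."""
    return sum(weights[name] * getattr(stats, name) for name in weights)


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not real HuggingFace data.
    example = ModelStats(followers=1_000, downloads=50_000, likes=2_000)
    print(influence_index(example))
```

In practice the raw metrics differ by orders of magnitude, so a real index would probably normalize or log-scale each factor before weighting it.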

- Quick Start

- Project Statement

- Architecture

- Features

- Why LangManus?

- Setup

- Usage

- Docker

- Web UI

- Development

- FAQ

- Contributing

- License

- Acknowledgments

# Clone the repository

git clone https://github.com/langmanus/langmanus.git

cd langmanus

# Install dependencies, uv will take care of the python interpreter and venv creation

uv sync

# Playwright install to use Chromium for browser-use by default

uv run playwright install

# Configure environment

# Windows: copy .env.example .env

cp .env.example .env

# Edit .env with your API keys

# Run the project

uv run main.py

This is an academically driven open-source project, developed by a group of former colleagues in our spare time. It aims to explore and exchange ideas in the fields of Multi-Agent and DeepResearch.

- Purpose: The primary purpose of this project is academic research, participation in the GAIA leaderboard, and the future publication of related papers.

- Independence Statement: This project is entirely independent and unrelated to our primary job responsibilities. It does not represent the views or positions of our employers or any organizations.

- No Association: This project has no association with Manus (whether it refers to a company, organization, or any other entity).

- Clarification Statement: We have not promoted this project on any social media platforms. Any inaccurate reports related to this project are not aligned with its academic spirit.

- Contribution Management: Issues and PRs will be addressed during our free time and may experience delays. We appreciate your understanding.

- Disclaimer: This project is open-sourced under the MIT License. Users assume all risks associated with its use. We disclaim any responsibility for any direct or indirect consequences arising from the use of this project.

LangManus implements a hierarchical multi-agent system where a supervisor coordinates specialized agents to accomplish complex tasks.

The system consists of the following agents working together:

- Coordinator - The entry point that handles initial interactions and routes tasks

- Planner - Analyzes tasks and creates execution strategies

- Supervisor - Oversees and manages the execution of other agents

- Researcher - Gathers and analyzes information

- Coder - Handles code generation and modifications

- Browser - Performs web browsing and information retrieval

- Reporter - Generates reports and summaries of the workflow results
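The supervisor-and-workers pattern described above can be sketched in plain Python. This is a simplified illustration, not LangManus's implementation: the project builds on LangGraph, whereas the sketch below uses no LangGraph calls, replaces the LLM-driven Planner with a fixed list of steps, and uses hypothetical names (`WORKERS`, `supervisor`, the `log` key) throughout.

```python
from typing import Callable

# Shared state passed between agents; here it is just a log of what happened.
State = dict[str, list[str]]


def researcher(state: State) -> State:
    state["log"].append("researcher: gathered information")
    return state


def coder(state: State) -> State:
    state["log"].append("coder: ran Python code")
    return state


def reporter(state: State) -> State:
    state["log"].append("reporter: wrote report")
    return state


# Hypothetical registry mapping agent names to their handlers.
WORKERS: dict[str, Callable[[State], State]] = {
    "researcher": researcher,
    "coder": coder,
    "reporter": reporter,
}


def supervisor(plan: list[str]) -> State:
    """Run each planned step by delegating to the named worker, in order."""
    state: State = {"log": []}
    for step in plan:
        worker = WORKERS.get(step)
        if worker is None:
            raise ValueError(f"unknown agent: {step}")
        state = worker(state)
    return state


if __name__ == "__main__":
    for line in supervisor(["researcher", "coder", "reporter"])["log"]:
        print(line)
```

A real supervisor would decide the next agent dynamically from the current state rather than walking a fixed plan.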

- 🤖 LLM Integration
  - It supports the integration of most models through litellm.
  - Support for open source models like Qwen
  - OpenAI-compatible API interface
  - Multi-tier LLM system for different task complexities

- 🔍 Search and Retrieval
  - Web search via Tavily API
  - Neural search with Jina
  - Advanced content extraction

- 🐍 Python Integration
  - Built-in Python REPL
  - Code execution environment
  - Package management with uv

- 📊 Visualization and Control
  - Workflow graph visualization
  - Multi-agent orchestration
  - Task delegation and monitoring

We believe in the power of open source collaboration. This project wouldn't be possible without the amazing work of projects like:

- Qwen for their open source LLMs

- Tavily for search capabilities

- Jina for crawling and search technology

- Browser-use for browser control

- And many other open source contributors

We're committed to giving back to the community and welcome contributions of all kinds - whether it's code, documentation, bug reports, or feature suggestions.

- uv package manager

LangManus leverages uv as its package manager to streamline dependency management. Follow the steps below to set up a virtual environment and install the necessary dependencies:

# Step 1: Create and activate a virtual environment through uv

uv python install 3.12

uv venv --python 3.12

source .venv/bin/activate # On Windows: .venv\Scripts\activate

# Step 2: Install project dependencies

uv sync

By completing these steps, you'll ensure your environment is properly configured and ready for development.

LangManus uses a three-tier LLM system: one model for reasoning, one for basic tasks, and one for vision-language tasks. Configuration lives in the conf.yaml file in the root directory of the project. To get started, copy conf.yaml.example to conf.yaml:

cp conf.yaml.example conf.yaml

# Setting it to true will read the conf.yaml configuration, and setting it to false will use the original .env configuration. The default is false (compatible with existing configurations)
USE_CONF: true

# LLM Config
## Follow the litellm configuration parameters: https://docs.litellm.ai/docs/providers. You can click on the specific provider document to view the completion parameter examples
REASONING_MODEL:
  model: "volcengine/ep-xxxx"
  api_key: $REASONING_API_KEY # Supports referencing the environment variable ENV_KEY in the .env file through $ENV_KEY
  api_base: $REASONING_BASE_URL

BASIC_MODEL:
  model: "azure/gpt-4o-2024-08-06"
  api_base: $AZURE_API_BASE
  api_version: $AZURE_API_VERSION
  api_key: $AZURE_API_KEY

VISION_MODEL:
  model: "azure/gpt-4o-2024-08-06"
  api_base: $AZURE_API_BASE
  api_version: $AZURE_API_VERSION
  api_key: $AZURE_API_KEY

You can create a .env file in the root directory of the project and configure the following environment variables. You can copy the .env.example file as a template to start:

cp .env.example .env

# Tool API Key

TAVILY_API_KEY=your_tavily_api_key

JINA_API_KEY=your_jina_api_key # Optional

# Browser Configuration

CHROME_INSTANCE_PATH=/Applications/Google Chrome.app/Contents/MacOS/Google Chrome # Optional, the path to the Chrome executable file

CHROME_HEADLESS=False # Optional, the default is False

CHROME_PROXY_SERVER=http://127.0.0.1:10809 # Optional, the default is None

CHROME_PROXY_USERNAME= # Optional, the default is None

CHROME_PROXY_PASSWORD= # Optional, the default is None

Note:

- The system uses different models for different types of tasks:
  - The reasoning LLM is used for complex decision-making and analysis.
  - The basic LLM is used for simple text tasks.
  - The vision-language LLM is used for tasks involving image understanding.

- The configuration of all LLMs can be customized independently.

- The Jina API key is optional. Providing your own key gives you a higher rate limit (you can obtain this key at jina.ai).

- Tavily search returns up to 5 results by default (you can obtain a Tavily API key at app.tavily.com).

LangManus includes a pre-commit hook that runs linting and formatting checks before each commit. To set it up:

- Make the pre-commit script executable:

chmod +x pre-commit

- Install the pre-commit hook:

ln -s ../../pre-commit .git/hooks/pre-commit

The pre-commit hook will automatically:

- Run linting checks (make lint)

- Run code formatting (make format)

- Add any reformatted files back to staging

- Prevent commits if there are any linting or formatting errors

To run LangManus with default settings:

uv run main.py

LangManus provides a FastAPI-based API server with streaming support:

# Start the API server

make serve

# Or run directly

uv run server.py

The API server exposes the following endpoints:

- POST /api/chat/stream: Chat endpoint for LangGraph invoke with streaming support
  - Request body:

    { "messages": [{ "role": "user", "content": "Your query here" }], "debug": false }

  - Returns a Server-Sent Events (SSE) stream with the agent's responses
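A client for this endpoint could look roughly like the sketch below, which uses only the Python standard library. The request body matches the one shown above; everything else is an assumption: the host and port (taken from the Docker section's default of 8000), the helper names, and the generic SSE parsing, since the README does not document the event names or the shape of each `data` payload.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Assumed local address; the Docker section maps the API to port 8000.
API_URL = "http://localhost:8000/api/chat/stream"


def build_request(query: str, debug: bool = False) -> urllib.request.Request:
    """Build the POST request carrying the documented JSON body."""
    body = {"messages": [{"role": "user", "content": query}], "debug": debug}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "text/event-stream"},
        method="POST",
    )


def _field_value(line: str, name: str) -> str:
    """Return the text after "name:", dropping one optional leading space."""
    value = line[len(name) + 1:]
    return value[1:] if value.startswith(" ") else value


def parse_sse(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Yield (event, data) pairs from SSE text lines; a blank line ends an event."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith("event:"):
            event = _field_value(line, "event")
        elif line.startswith("data:"):
            data.append(_field_value(line, "data"))
    if data:  # flush a final event that was not followed by a blank line
        yield event, "\n".join(data)


def stream_chat(query: str) -> Iterator[tuple[str, str]]:
    """Send the query and yield events as they arrive (needs a running server)."""
    with urllib.request.urlopen(build_request(query)) as response:
        yield from parse_sse(line.decode("utf-8") for line in response)
```

With the server started via `make serve`, iterating over `stream_chat("Your query here")` would print each event as it is received.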

LangManus can be customized through various configuration files in the src/config directory:

- env.py: Configure LLM models, API keys, and base URLs

- tools.py: Adjust tool-specific settings (e.g., Tavily search results limit)

- agents.py: Modify team composition and agent system prompts

LangManus uses a sophisticated prompting system in the src/prompts directory to define agent behaviors and responsibilities:

- Supervisor (src/prompts/supervisor.md): Coordinates the team and delegates tasks by analyzing requests and determining which specialist should handle them. Makes decisions about task completion and workflow transitions.

- Researcher (src/prompts/researcher.md): Specializes in information gathering through web searches and data collection. Uses Tavily search and web crawling capabilities while avoiding mathematical computations or file operations.

- Coder (src/prompts/coder.md): Professional software engineer role focused on Python and bash scripting. Handles:
  - Python code execution and analysis
  - Shell command execution
  - Technical problem-solving and implementation

- File Manager (src/prompts/file_manager.md): Handles all file system operations with a focus on properly formatting and saving content in markdown format.

- Browser (src/prompts/browser.md): Web interaction specialist that handles:
  - Website navigation
  - Page interaction (clicking, typing, scrolling)
  - Content extraction from web pages

The prompts system uses a template engine (src/prompts/template.py) that:

- Loads role-specific markdown templates

- Handles variable substitution (e.g., current time, team member information)

- Formats system prompts for each agent

Each agent's prompt is defined in a separate markdown file, making it easy to modify behavior and responsibilities without changing the underlying code.
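The load-then-substitute flow described above can be illustrated with the standard library's `string.Template`. This is a sketch under assumptions, not the contents of src/prompts/template.py: the `$NAME` placeholder syntax, the `CURRENT_TIME` variable name, and the function names are all hypothetical.

```python
from datetime import datetime
from pathlib import Path
from string import Template


def render_prompt(template_text: str, **variables: str) -> str:
    """Substitute $NAME placeholders; unknown placeholders are left as-is."""
    return Template(template_text).safe_substitute(variables)


def load_prompt(role: str, prompts_dir: Path = Path("src/prompts"), **variables: str) -> str:
    """Read <role>.md from prompts_dir and fill in its variables."""
    text = (prompts_dir / f"{role}.md").read_text(encoding="utf-8")
    # Hypothetical built-in variable: the current time, unless the caller supplies one.
    variables.setdefault("CURRENT_TIME", datetime.now().isoformat(timespec="seconds"))
    return render_prompt(text, **variables)


if __name__ == "__main__":
    print(render_prompt("You are the $ROLE. Team: $TEAM.", ROLE="supervisor", TEAM="researcher, coder"))
```

Using `safe_substitute` means a markdown prompt that happens to contain a literal dollar sign does not raise an error.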

LangManus can be run in a Docker container. By default, the container serves the API on port 8000.

Before running Docker, prepare your environment variables in the .env file.

docker build -t langmanus .

docker run --name langmanus -d --env-file .env -e CHROME_HEADLESS=True -p 8000:8000 langmanus

You can also run just the CLI with Docker:

docker build -t langmanus .

docker run --rm -it --env-file .env -e CHROME_HEADLESS=True langmanus uv run python main.py

LangManus provides a default web UI.

Please refer to the langmanus/langmanus-web-ui project for more details.

LangManus provides a docker-compose setup to easily run both the backend and frontend together:

# Start both backend and frontend

docker-compose up -d

# The backend will be available at http://localhost:8000

# The frontend will be available at http://localhost:3000, which can be opened in a web browser

This will:

- Build and start the LangManus backend container

- Build and start the LangManus web UI container

- Connect them using a shared network

**Make sure you have your .env file prepared with the necessary API keys before starting the services.**

Run the test suite:

# Run all tests

make test

# Run specific test file

pytest tests/integration/test_workflow.py

# Run with coverage

make coverage

# Run linting

make lint

# Format code

make format

Please refer to the FAQ.md for more details.

We welcome contributions of all kinds! Whether you're fixing a typo, improving documentation, or adding a new feature, your help is appreciated. Please see our Contributing Guide for details on how to get started.

This project is open source and available under the MIT License.

Special thanks to all the open source projects and contributors that make LangManus possible. We stand on the shoulders of giants.

In particular, we want to express our deep appreciation for:

- LangChain for their exceptional framework that powers our LLM interactions and chains

- LangGraph for enabling our sophisticated multi-agent orchestration

- Browser-use for browser control

These amazing projects form the foundation of LangManus and demonstrate the power of open source collaboration.

Alternative AI tools for langmanus

Similar Open Source Tools

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

trip_planner_agent

VacAIgent is an AI tool that automates and enhances trip planning by leveraging the CrewAI framework. It integrates a user-friendly Streamlit interface for interactive travel planning. Users can input preferences and receive tailored travel plans with the help of autonomous AI agents. The tool allows for collaborative decision-making on cities and crafting complete itineraries based on specified preferences, all accessible via a streamlined Streamlit user interface. VacAIgent can be customized to use different AI models like GPT-3.5 or local models like Ollama for enhanced privacy and customization.

pear-landing-page

PearAI Landing Page is an open-source AI-powered code editor managed by Nang and Pan. It is built with Next.js, Vercel, Tailwind CSS, and TypeScript. The project requires setting up environment variables for proper configuration. Users can run the project locally by starting the development server and visiting the specified URL in the browser. Recommended extensions include Prettier, ESLint, and JavaScript and TypeScript Nightly. Contributions to the project are welcomed and appreciated.

cosdata

Cosdata is a cutting-edge AI data platform designed to power the next generation search pipelines. It features immutability, version control, and excels in semantic search, structured knowledge graphs, hybrid search capabilities, real-time search at scale, and ML pipeline integration. The platform is customizable, scalable, efficient, enterprise-grade, easy to use, and can manage multi-modal data. It offers high performance, indexing, low latency, and high requests per second. Cosdata is designed to meet the demands of modern search applications, empowering businesses to harness the full potential of their data.

aisdk-prompt-optimizer

AISDK Prompt Optimizer is an open-source tool designed to transform AI interactions by optimizing prompts. It utilizes the GEPA reflective optimizer to evolve textual components of AI systems, providing features such as reflective prompt mutation, rich textual feedback, and Pareto-based selection. Users can teach their AI desired behaviors, collect ideal samples, run optimization to generate optimized prompts, and deploy the results in their applications. The tool leverages advanced optimization algorithms to guide AI through interactive conversations and refine prompt candidates for improved performance.

next-ai-draw-io

Next AI Draw.io is a next.js web application that integrates AI capabilities with draw.io diagrams. It allows users to create, modify, and enhance diagrams through natural language commands and AI-assisted visualization. Features include LLM-Powered Diagram Creation, Image-Based Diagram Replication, Diagram History, Interactive Chat Interface, and Smart Editing. The application uses Next.js for frontend framework, @ai-sdk/react for chat interface and AI interactions, and react-drawio for diagram representation and manipulation. Diagrams are represented as XML that can be rendered in draw.io, with AI processing commands to generate or modify the XML accordingly.

swark

Swark is a VS Code extension that automatically generates architecture diagrams from code using large language models (LLMs). It is directly integrated with GitHub Copilot, requires no authentication or API key, and supports all languages. Swark helps users learn new codebases, review AI-generated code, improve documentation, understand legacy code, spot design flaws, and gain test coverage insights. It saves output in a 'swark-output' folder with diagram and log files. Source code is only shared with GitHub Copilot for privacy. The extension settings allow customization for file reading, file extensions, exclusion patterns, and language model selection. Swark is open source under the GNU Affero General Public License v3.0.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

Local-File-Organizer

The Local File Organizer is an AI-powered tool designed to help users organize their digital files efficiently and securely on their local device. By leveraging advanced AI models for text and visual content analysis, the tool automatically scans and categorizes files, generates relevant descriptions and filenames, and organizes them into a new directory structure. All AI processing occurs locally using the Nexa SDK, ensuring privacy and security. With support for multiple file types and customizable prompts, this tool aims to simplify file management and bring order to users' digital lives.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

Auto-Analyst

Auto-Analyst is an AI-driven data analytics agentic system designed to simplify and enhance the data science process. By integrating various specialized AI agents, this tool aims to make complex data analysis tasks more accessible and efficient for data analysts and scientists. Auto-Analyst provides a streamlined approach to data preprocessing, statistical analysis, machine learning, and visualization, all within an interactive Streamlit interface. It offers plug and play Streamlit UI, agents with data science speciality, complete automation, LLM agnostic operation, and is built using lightweight frameworks.

LocalAIVoiceChat

LocalAIVoiceChat is an experimental alpha software that enables real-time voice chat with a customizable AI personality and voice on your PC. It integrates Zephyr 7B language model with speech-to-text and text-to-speech libraries. The tool is designed for users interested in state-of-the-art voice solutions and provides an early version of a local real-time chatbot.

transcriptionstream

Transcription Stream is a self-hosted diarization service that works offline, allowing users to easily transcribe and summarize audio files. It includes a web interface for file management, Ollama for complex operations on transcriptions, and Meilisearch for fast full-text search. Users can upload files via SSH or web interface, with output stored in named folders. The tool requires a NVIDIA GPU and provides various scripts for installation and running. Ports for SSH, HTTP, Ollama, and Meilisearch are specified, along with access details for SSH server and web interface. Customization options and troubleshooting tips are provided in the documentation.

For similar tasks

waidrin

Waidrin is a powerful web scraping tool that allows users to easily extract data from websites. It provides a user-friendly interface for creating custom web scraping scripts and supports various data formats for exporting the extracted data. With Waidrin, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and scalable, making it suitable for both beginners and advanced users in the field of web scraping.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT, and the maintainers can be contacted via email.

OrionChat

Orion is a web-based chat interface that simplifies interactions with multiple AI model providers. It provides a unified platform for chatting and exploring various large language models (LLMs) such as Ollama, OpenAI (GPT model), Cohere (Command-r models), Google (Gemini models), Anthropic (Claude models), Groq Inc., Cerebras, and SambaNova. Users can easily navigate and assess different AI models through an intuitive, user-friendly interface. Orion offers features like browser-based access, code execution with Google Gemini, text-to-speech (TTS), speech-to-text (STT), seamless integration with multiple AI models, customizable system prompts, language translation tasks, document uploads for analysis, and more. API keys are stored locally, and requests are sent directly to official providers' APIs without external proxies.

GraphLLM

GraphLLM is a graph-based framework designed to process data using LLMs. It offers a set of tools including a web scraper, PDF parser, YouTube subtitles downloader, Python sandbox, and TTS engine. The framework provides a GUI for building and debugging graphs with advanced features like loops, conditionals, parallel execution, streaming of results, hierarchical graphs, external tool integration, and dynamic scheduling. GraphLLM is a low-level framework that gives users full control over the raw prompt and output of models, with a steeper learning curve. It is tested with llama70b and qwen 32b, under heavy development with breaking changes expected.

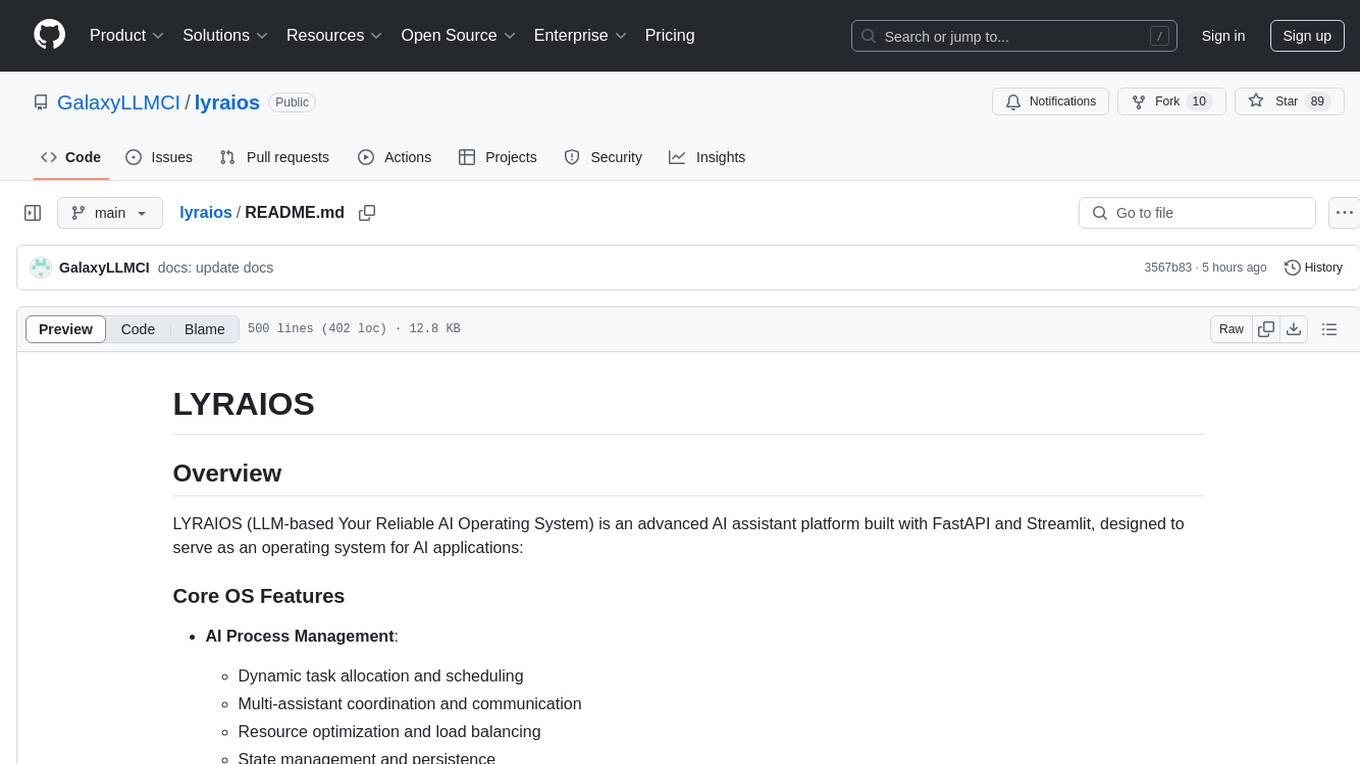

lyraios

LYRAIOS (LLM-based Your Reliable AI Operating System) is an advanced AI assistant platform built with FastAPI and Streamlit, designed to serve as an operating system for AI applications. It offers core features such as AI process management, memory system, and I/O system. The platform includes built-in tools like Calculator, Web Search, Financial Analysis, File Management, and Research Tools. It also provides specialized assistant teams for Python and research tasks. LYRAIOS is built on a technical architecture comprising FastAPI backend, Streamlit frontend, Vector Database, PostgreSQL storage, and Docker support. It offers features like knowledge management, process control, and security & access control. The roadmap includes enhancements in core platform, AI process management, memory system, tools & integrations, security & access control, open protocol architecture, multi-agent collaboration, and cross-platform support.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration:

- Chat BI's Text2SQL gets augmented with context-retrieval from semantic models.

- Headless BI's query interface gets extended with natural language API.

SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI.

The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions:

1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination.

2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity.

With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

For similar jobs

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.