ciso-assistant-community

CISO Assistant is a one-stop-shop for GRC, covering Risk, AppSec, Compliance/Audit Management, and Privacy, and supporting 100+ frameworks worldwide with auto-mapping: NIST CSF, ISO 27001, SOC2, CIS, PCI DSS, NIS2, CMMC, PSPF, GDPR, HIPAA, Essential Eight, NYDFS-500, DORA, NIST AI RMF, 800-53, CyFun, AirCyber, NCSC, ECC, SCF and many more.

Stars: 3235

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

README:

Star the project 🌟 to get release notifications and help grow the community!

intuitem.com · SaaS Free trial · Roadmap · Docs · Languages · Discord · Frameworks

CISO Assistant offers a fresh perspective on Cybersecurity Management and GRC (Governance, Risk, and Compliance) practices:

- Designed as a central hub to connect multiple cybersecurity concepts with smart linking between objects,

- Built as a multi-paradigm tool that adapts to different backgrounds, methodologies, and expectations,

- Explicitly decouples compliance from cybersecurity controls, enabling reusability across the platform,

- Promotes reusability and interlinking instead of redundant work,

- Developed with an API-first approach to support both UI interaction and external automation,

- Comes packed with a wide range of built-in standards, security controls, and threat libraries,

- Offers an open format to customize and reuse your own objects and frameworks,

- Includes built-in risk assessment and remediation tracking workflows,

- Supports custom frameworks via a simple syntax and flexible tooling,

- Provides rich import/export capabilities across various channels and formats (UI, CLI, Kafka, reports, etc.).

Our vision is to create a one-stop-shop for cybersecurity management—modernizing GRC through simplification and interoperability.

As practitioners working with cybersecurity and IT professionals, we've faced the same issues: tool fragmentation, data duplication, and a lack of intuitive, integrated solutions. CISO Assistant was born from those lessons, and we're building a community around pragmatic, common-sense principles.

We’re constantly evolving with input from users and customers. Like an octopus 🐙, CISO Assistant keeps growing extra arms—bringing clarity, automation, and productivity to cybersecurity teams while reducing the effort of data input and output.

Upcoming features are listed on the roadmap.

CISO Assistant is developed and maintained by Intuitem, a company specialized in Cybersecurity, Cloud, and Data/AI.

Here’s an extract of some of the building blocks in CISO Assistant to illustrate the decoupling concept that encourages reusability:

For full details, check the data model documentation.

At the heart of CISO Assistant lies the decoupling principle, which enables powerful use cases and major time savings:

- Reuse past assessments across scopes or frameworks,

- Evaluate a single scope against multiple frameworks simultaneously,

- Let CISO Assistant handle reporting and consistency checks so you can focus on remediation,

- Separate control implementation from compliance tracking.

Here is an illustration of the decoupling principle and its advantages:

https://github.com/user-attachments/assets/87bd4497-5cc2-4221-aeff-396f6b6ebe62
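The decoupling principle can also be pictured in a few lines of Python. This is only a minimal sketch under stated assumptions: the class names, the status values, and the requirement identifiers (`REQ-AUTH`, `REQ-ACCESS`, `REQ-BACKUP`) are illustrative and are not taken from the project's actual data model. It shows one control, implemented once, being linked to requirements from two frameworks.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AppliedControl:
    """A security measure implemented once, independent of any framework."""
    name: str
    status: str  # illustrative values: "active" or "planned"


@dataclass
class RequirementAssessment:
    """Links one framework requirement to the controls that satisfy it."""
    framework: str
    requirement: str  # hypothetical identifier, not a real clause number
    controls: list[AppliedControl] = field(default_factory=list)

    def is_covered(self) -> bool:
        # Covered when at least one linked control is active.
        return any(c.status == "active" for c in self.controls)


# One control, implemented once...
mfa = AppliedControl(name="Enforce MFA for admin accounts", status="active")

# ...reused for requirements that belong to two different frameworks.
assessments = [
    RequirementAssessment("ISO 27001:2022", "REQ-AUTH", [mfa]),
    RequirementAssessment("NIST CSF v2.0", "REQ-ACCESS", [mfa]),
    RequirementAssessment("NIST CSF v2.0", "REQ-BACKUP", []),
]

coverage = {(a.framework, a.requirement): a.is_covered() for a in assessments}
```

Because the control lives outside any single framework, changing its status once updates the coverage of every requirement that references it.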

[!TIP] The easiest way to get started is through the free trial of the cloud instance, available here.

Alternatively, once you have Docker and Docker Compose installed on your workstation or server, clone the repo:

git clone --single-branch -b main https://github.com/intuitem/ciso-assistant-community.git

and run the starter script:

./docker-compose.sh

If you are looking for other installation options for self-hosting, check the config builder and the docs.

[!NOTE] The docker-compose script uses prebuilt Docker images supporting most of the standard hardware architectures. If you're using Windows, make sure to have WSL installed and trigger the script within a WSL command line. It will feed Docker Desktop on your behalf.

The docker compose file can be adjusted to pass extra parameters to suit your setup (e.g. Mailer settings).

[!WARNING] If you're getting warnings or errors about the image's platform not matching the host platform, raise an issue with the details and we'll add it shortly after. You can also use docker-compose-build.sh instead (see below) to build for your specific architecture.

[!CAUTION] Don't use the main branch code directly for production, as it is the upstream merge branch and can contain breaking changes during our development. Use either the tags for stable versions or the prebuilt images.

Check out the online documentation on https://intuitem.gitbook.io/ciso-assistant.

- ISO 27001:2013 & 27001:2022 🌐

- NIST Cyber Security Framework (CSF) v1.1 🇺🇸

- NIST Cyber Security Framework (CSF) v2.0 🇺🇸

- NIS2 🇪🇺

- SOC2 🇺🇸

- PCI DSS 4.0 💳

- CMMC v2 🇺🇸

- PSPF 🇦🇺

- General Data Protection Regulation (GDPR): Full text and checklist from GDPR.EU 🇪🇺

- Essential Eight 🇦🇺

- NYDFS 500 with 2023-11 amendments 🇺🇸

- DORA (Act, RTS, ITS and GL) 🇪🇺

- NIST AI Risk Management Framework 🇺🇸🤖

- NIST SP 800-53 rev5 🇺🇸

- France LPM/OIV rules 🇫🇷

- CCB CyberFundamentals Framework 🇧🇪

- NIST SP-800-66 (HIPAA) 🏥

- HDS/HDH 🇫🇷

- OWASP Application Security Verification Standard (ASVS) 4 🐝🖥️

- RGS v2.0 🇫🇷

- AirCyber ✈️ 🌐

- Cyber Resilience Act (CRA) 🇪🇺

- TIBER-EU 🇪🇺

- NIST Privacy Framework 🇺🇸

- TISAX (VDA ISA) v5.1 and v6.0 🚘

- ANSSI hygiene guide 🇫🇷

- Essential Cybersecurity Controls (ECC) 🇸🇦

- CIS Controls v8* 🌐

- CSA CCM (Cloud Controls Matrix)* ☁️

- FADP (Federal Act on Data Protection) 🇨🇭

- NIST SP 800-171 rev2 (2021) 🇺🇸

- ANSSI : recommandations de sécurité pour un système d'IA générative 🇫🇷🤖

- NIST SP 800-218: Secure Software Development Framework (SSDF) 🖥️

- GSA FedRAMP rev5 ☁️🇺🇸

- Cadre Conformité Cyber France (3CF) v1 (2021) ✈️ 🇫🇷

- ANSSI : SecNumCloud ☁️🇫🇷

- Cadre Conformité Cyber France (3CF) v2 (2024) ✈️ 🇫🇷

- ANSSI : outil d’autoévaluation de gestion de crise cyber 💥🇫🇷

- BSI: IT-Grundschutz-Kompendium 🇩🇪

- NIST SP 800-171 rev3 (2024) 🇺🇸

- ENISA: 5G Security Controls Matrix 🇪🇺

- OWASP Mobile Application Security Verification Standard (MASVS) 🐝📱

- Agile Security Framework (ASF) - baseline - by intuitem 🤗

- ISO 27001:2013 🌐 (For legacy and migration)

- EU AI Act 🇪🇺🤖

- FBI CJIS 🇺🇸👮

- Operational Technology Cybersecurity Controls (OTCC) 🇸🇦

- Secure Controls Framework (SCF) 🇺🇸🌐

- NCSC Cyber Assessment Framework (CAF) 🇬🇧

- California Consumer Privacy Act (CCPA) 🇺🇸

- California Consumer Privacy Act Regulations 🇺🇸

- NCSC Cyber Essentials 🇬🇧

- Directive Nationale de la Sécurité des Systèmes d'Information (DNSSI) Maroc 🇲🇦

- Part-IS ✈️ 🇪🇺

- ENS Esquema Nacional de seguridad 🇪🇸

- Korea ISA ISMS-P 🇰🇷

- Swiss ICT minimum standard 🇨🇭

- Adobe Common Controls Framework (CCF) v5 🌐

- BSI Cloud Computing Compliance Criteria Catalogue (C5) 🇩🇪

- Référentiel d’Audit de la Sécurité des Systèmes d’Information, ANCS Tunisie 🇹🇳

- ECB Cyber resilience oversight expectations for financial market infrastructures 🇪🇺

- Mindeststandard-des-BSI-zur-Nutzung-externer-Cloud-Dienste (Version 2.1) 🇩🇪

- Formulaire d'évaluation de la maturité - niveau fondamental (DGA) 🇫🇷

- NIS2 technical and methodological requirements 2024/2690 🇪🇺

- Saudi Arabian Monetary Authority (SAMA) Cybersecurity Framework 🇸🇦

- Guide de sécurité des données (CNIL) 🇫🇷

- International Traffic in Arms Regulations (ITAR) 🇺🇸

- Federal Trade Commission (FTC) Standards for Safeguarding Customer Information 🇺🇸

- OWASP's checklist for LLM governance and security 🌐

- Recommandations pour les architectures des systèmes d’information sensibles ou à diffusion restreinte (ANSSI) 🇫🇷

- CIS benchmark for Kubernetes v1.10 🌐

- De tekniske minimumskrav for statslige myndigheder 🇩🇰

- Google SAIF framework 🤖

- Recommandations relatives à l'administration sécurisée des SI (ANSSI) 🇫🇷

- Prudential Standard CPS 230 - Operational Risk Management (APRA) 🇦🇺

- Prudential Standard CPS 234 - Information Security (APRA) 🇦🇺

- Vehicle Cyber Security Audit (VCSA) v1.1 🚘

- Cisco Cloud Controls Framework (CCF) v3.0 ☁️🌐

- FINMA - Circular 2023/01 - Operational risks and resilience - Banks 🇨🇭

- Post-Quantum Cryptography (PQC) Migration Roadmap (May 2025) 🔐

- PGSSI-S (Politique Générale de Sécurité des Systèmes d'Information de Santé) 🇫🇷

- ANSSI : Recommandations de configuration d'un système GNU/Linux 🇫🇷

- PSSI-MCAS (Politique de sécurité des systèmes d’information pour les ministères chargés des affaires sociales) 🇫🇷

- ANSSI : Recommandations pour la protection des systèmes d'information essentiels 🇫🇷

- ANSSI : Recommandations de sécurité pour l'architecture d'un système de journalisation 🇫🇷

- ANSSI : Recommandations de sécurité relatives à TLS 🇫🇷

- New Zealand Information Security Manual (NZISM) 🇳🇿

- Clausier de sécurité numérique du Club RSSI Santé 🇫🇷

- Référentiel National de Sécurité de l’Information (RNSI), MPT Algérie 🇩🇿

- Misure minime di sicurezza ICT per le pubbliche amministrazioni, AGID Italia 🇮🇹

- Framework Nazionale CyberSecurity v2, FNCS Italia 🇮🇹

- Framework Nazionale per la Cybersecurity e la Data Protection, ACN Italia 🇮🇹

- PSSIE du Bénin, ANSSI Bénin 🇧🇯

- IGI 1300 / II 901 - Liste des exigences pour la mise en oeuvre d'un SI classifié (ANSSI) 🇫🇷

- Référentiel Général de Sécurité 2.0 - Annexe B2 🇫🇷

- Recommandations sur la sécurisation des systèmes de contrôle d'accès physique et de vidéoprotection 🇫🇷

- Recommandations pour un usage sécurisé d’(Open)SSH 🇫🇷

- Recommandations de sécurité relatives à IPsec pour la protection des flux réseau 🇫🇷

- Recommandations relatives à l'interconnexion d'un système d'information à internet 🇫🇷

- Guides des mécanismes cryptographiques 🇫🇷

- Swift Customer Security Controls Framework (CSCF) v2025 🏦🌐

- OWASP Application Security Verification Standard (ASVS) 5 🐝🖥️

- NIST 800-82 (OT) - appendix 🏭🤖

[!NOTE] Frameworks with * require an extra manual step of getting the latest Excel sheet through their website, as their license prevents direct usage.

Check out the library and tools for the Domain Specific Language used and how you can define your own.

- Indonesia PDP 🇮🇩

- OWASP SAMM

- COBAC R-2024/01

- ICO Data protection self-assessment 🇬🇧

- ASD ISM 🇦🇺

- Baseline informatiebeveiliging Overheid (BIO) 🇳🇱

- and much more: just ask on Discord. If it's an open standard, we'll do it for you, free of charge 😉

A library can be a framework, a catalog of threats or reference controls, and even a custom risk matrix.

Take a look at the tools directory and its dedicated README. The convert_library_v2.py script will help you create your library from a simple Excel file. Once you have structured your items in that format, just run the script and use the resulting YAML file.

You can also find some specific converters in the tools directory (e.g. for CIS or CCM Controls).

There is also a tool to facilitate the creation of mappings, called prepare_mapping_v2.py that will create an Excel file based on two framework libraries in YAML. Once properly filled, this Excel file can be processed by the convert_library_v2.py tool to get the resulting mapping library.

Join our open Discord community to interact with the team and other GRC experts.

The fastest and easiest way to get started is through the free trial of the cloud instance, available here.

To run CISO Assistant locally in a straightforward way, you can use Docker compose.

- Update docker

Make sure you have a recent version of docker (>= 27.0).

- Clone the repository

git clone --single-branch -b main https://github.com/intuitem/ciso-assistant-community.git

cd ciso-assistant-community

- Launch docker-compose script for prebuilt images:

./docker-compose.sh

Alternatively, you can use this variant to build the docker images for your specific architecture:

./docker-compose-build.sh

When asked for, enter your email and password for your superuser.

You can then reach CISO Assistant using your web browser at https://localhost:8443/

For the following executions, use "docker compose up" directly.
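The Docker version requirement mentioned above (>= 27.0) can be checked before launching the script. The following is a minimal sketch, not part of the project's tooling: the helper names are hypothetical, and it assumes the usual `docker --version` output of the form `Docker version 27.3.1, build ...`.

```python
import re


def parse_docker_version(version_output: str) -> tuple[int, int]:
    """Extract (major, minor) from text such as 'Docker version 27.3.1, build ce12230'."""
    match = re.search(r"(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError(f"no version number found in {version_output!r}")
    return int(match.group(1)), int(match.group(2))


def is_recent_enough(version_output: str, minimum: tuple[int, int] = (27, 0)) -> bool:
    """Return True when the reported version meets the minimum (major, minor)."""
    return parse_docker_version(version_output) >= minimum
```

To check a real installation, pass it the text returned by `subprocess.run(["docker", "--version"], capture_output=True, text=True).stdout`.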

- Python 3.12+

- pip 20.3+

- poetry 2.0+

- node 22+

- npm 10.2+

- pnpm 9.0+

- yaml-cpp (brew install yaml-cpp libyaml or apt install libyaml-cpp-dev)

- Clone the repository.

git clone git@github.com:intuitem/ciso-assistant-community.git

cd ciso-assistant-community

- Create a file in the parent folder (e.g. ../myvars) and store your environment variables within it by copying and modifying the following code, replacing "<XXX>" with your private values. Take care not to commit this file to your git repo.

Mandatory variables

All variables in the backend have handy default values.

Recommended variables

export DJANGO_DEBUG=True

# Default url is set to http://localhost:5173 but you can change it, e.g. to use https with a caddy proxy

export CISO_ASSISTANT_URL=https://localhost:8443

# Setup a development mailer with Mailhog for example

export EMAIL_HOST_USER=''

export EMAIL_HOST_PASSWORD=''

export [email protected]

export EMAIL_HOST=localhost

export EMAIL_PORT=1025

export EMAIL_USE_TLS=True

Other variables

# CISO Assistant will use SQLite by default, but you can setup PostgreSQL by declaring these variables

export POSTGRES_NAME=ciso-assistant

export POSTGRES_USER=ciso-assistantuser

export POSTGRES_PASSWORD=<XXX>

export POSTGRES_PASSWORD_FILE=<XXX> # alternative way to specify password

export DB_HOST=localhost

export DB_PORT=5432 # optional, default value is 5432

# CISO Assistant will use filesystem storage backend by default.

# You can use a S3 Bucket by declaring these variables

# The S3 bucket must be created before starting CISO Assistant

export USE_S3=True

export AWS_ACCESS_KEY_ID=<XXX>

export AWS_SECRET_ACCESS_KEY=<XXX>

export AWS_STORAGE_BUCKET_NAME=<your-bucket-name>

export AWS_S3_ENDPOINT_URL=<your-bucket-endpoint>

# Add a second backup mailer (will be deprecated, not recommended anymore)

export EMAIL_HOST_RESCUE=<XXX>

export EMAIL_PORT_RESCUE=587

export EMAIL_HOST_USER_RESCUE=<XXX>

export EMAIL_HOST_PASSWORD_RESCUE=<XXX>

export EMAIL_USE_TLS_RESCUE=True

# You can define the email of the first superuser, useful for automation. A mail is sent to the superuser for password initialization

export CISO_SUPERUSER_EMAIL=<XXX>

# By default, Django secret key is generated randomly at each start of CISO Assistant. This is convenient for quick test,

# but not recommended for production, as it can break the sessions (see

# this [topic](https://stackoverflow.com/questions/15170637/effects-of-changing-djangos-secret-key) for more information).

# To set a fixed secret key, use the environment variable DJANGO_SECRET_KEY.

export DJANGO_SECRET_KEY=...

# Logging configuration

export LOG_LEVEL=INFO # optional, default value is INFO. Available options: DEBUG, INFO, WARNING, ERROR, CRITICAL

export LOG_FORMAT=plain # optional, default value is plain. Available options: json, plain

# Authentication options

export AUTH_TOKEN_TTL=3600 # optional, default value is 3600 seconds (60 minutes). It defines the time to live of the authentication token

export AUTH_TOKEN_AUTO_REFRESH=True # optional, default value is True. It defines if the token TTL should be refreshed automatically after each request authenticated with the token

export AUTH_TOKEN_AUTO_REFRESH_TTL=36000 # optional, default value is 36000 seconds (10 hours). It defines the time to live of the authentication token after auto refresh. You can disable it by setting it to 0.

- Install poetry

Visit the poetry website for instructions: https://python-poetry.org/docs/#installation

- Install required dependencies.

poetry install

- Recommended: Install the pre-commit hooks.

pre-commit install

- If you want to set up Postgres:

- Launch one of these commands to enter in Postgres:

- psql as superadmin

- sudo su postgres

- psql

- Create the database "ciso-assistant"

create database "ciso-assistant";

- Create user "ciso-assistantuser" and grant it access

create user "ciso-assistantuser" with password '<POSTGRES_PASSWORD>';

grant all privileges on database "ciso-assistant" to "ciso-assistantuser";

- If you want to set up an S3 bucket:

- Choose your S3 provider, or try the S3 feature with MinIO using this command:

docker run -p 9000:9000 -p 9001:9001 -e "MINIO_ROOT_USER=XXX" -e "MINIO_ROOT_PASSWORD=XXX" quay.io/minio/minio server /data --console-address ":9001"

- You can now check your bucket on http://localhost:9001

- Log in with the credentials you set in the docker run environment variables

- In the backend directory, export all the environment variables related to S3

- They are listed above, among the other variables

- Apply migrations.

poetry run python manage.py migrate

- Create a Django superuser, which will be the CISO Assistant administrator.

If you have set a mailer and the CISO_SUPERUSER_EMAIL variable, there's no need to create a Django superuser with createsuperuser, as it will be created automatically on first start. You should receive an email with a link to set up your password.

poetry run python manage.py createsuperuser

- Run development server.

poetry run python manage.py runserver

- for Huey (tasks runner)

- prepare a mailer for testing.

- run the following command, or an equivalent, in a separate shell:

python manage.py run_huey -w 2 -k process

- you can use MAIL_DEBUG to have mail on the console for easier debugging
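As a recap of the optional backend variables listed earlier (PostgreSQL and S3), a small preflight check can flag an incomplete configuration before the server starts. This is a hedged sketch, not the project's settings code: the function name is hypothetical, and which variables are strictly required once PostgreSQL or S3 is enabled is an assumption based on the variable list above.

```python
def missing_settings(env: dict[str, str]) -> list[str]:
    """Return the names of variables that look required but are not set."""
    missing = []

    # SQLite is the default; PostgreSQL is only used when its variables are declared.
    if env.get("POSTGRES_NAME"):
        if not env.get("POSTGRES_USER"):
            missing.append("POSTGRES_USER")
        # Either the password itself or a file containing it is accepted.
        if not (env.get("POSTGRES_PASSWORD") or env.get("POSTGRES_PASSWORD_FILE")):
            missing.append("POSTGRES_PASSWORD or POSTGRES_PASSWORD_FILE")

    # Filesystem storage is the default; S3 is opt-in through USE_S3.
    if env.get("USE_S3", "").lower() == "true":
        for name in (
            "AWS_ACCESS_KEY_ID",
            "AWS_SECRET_ACCESS_KEY",
            "AWS_STORAGE_BUCKET_NAME",
            "AWS_S3_ENDPOINT_URL",
        ):
            if not env.get(name):
                missing.append(name)

    return missing
```

Calling `missing_settings(dict(os.environ))` after sourcing your variables file returns an empty list when nothing appears to be missing.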

- cd into the frontend directory

cd frontend

- Install dependencies

npm install -g pnpm

pnpm install

- Start a development server (make sure that the django app is running)

pnpm run dev

- Reach the frontend on http://localhost:5173

[!NOTE] Safari will not properly work in this setup, as it requires https for secure cookies. The simplest solution is to use Chrome or Firefox. An alternative is to use a caddy proxy. Please see the readme file in frontend directory for more information on this.

- Environment variables

All variables in the frontend have handy default values.

If you move the frontend on another host, you should set the following variable: PUBLIC_BACKEND_API_URL. Its default value is http://localhost:8000/api.

The PUBLIC_BACKEND_API_EXPOSED_URL is necessary for proper functioning of the SSO. It points to the URL of the API as seen from the browser. It should be equal to the concatenation of CISO_ASSISTANT_URL (in the backend) with "/api".

When you launch "node server" instead of "pnpm run dev", you need to set the ORIGIN variable to the same value as CISO_ASSISTANT_URL in the backend (e.g. http://localhost:3000).
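The relationship between these frontend variables and the backend's CISO_ASSISTANT_URL can be written down as a small helper. This is a sketch under assumptions: the function name is hypothetical, and stripping a trailing slash before appending "/api" is a convenience choice, not something the project documents.

```python
def frontend_env(
    ciso_assistant_url: str,
    backend_api_url: str = "http://localhost:8000/api",
) -> dict[str, str]:
    """Derive the frontend variables described above from the backend URL."""
    base = ciso_assistant_url.rstrip("/")
    return {
        # Where the frontend reaches the API (default shown in the text above).
        "PUBLIC_BACKEND_API_URL": backend_api_url,
        # API URL as seen from the browser: CISO_ASSISTANT_URL + "/api".
        "PUBLIC_BACKEND_API_EXPOSED_URL": f"{base}/api",
        # Only needed when launching "node server" instead of "pnpm run dev".
        "ORIGIN": base,
    }
```

For example, `frontend_env("https://localhost:8443")` yields an exposed API URL of `https://localhost:8443/api`.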

The migrations are tracked by version control, https://docs.djangoproject.com/en/4.2/topics/migrations/#version-control

For the first version of the product, it is recommended to start from a clean migration.

Note: to clean existing migrations, type:

find . -path "*/migrations/*.py" -not -name "__init__.py" -delete

find . -path "*/migrations/*.pyc" -delete

After a change (or a clean), it is necessary to re-generate migration files:

poetry run python manage.py makemigrations

poetry run python manage.py migrate

These migration files should be tracked by version control.
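Since the clean step deletes files, it can be useful to preview what the two find commands would remove. The sketch below is a dry run only and deletes nothing; the function name is hypothetical, and it mirrors the find patterns as understood here (any `.py` file other than `__init__.py`, and any `.pyc` file, located under a directory named `migrations`).

```python
from pathlib import Path


def migration_files_to_delete(root: Path) -> list[Path]:
    """List the files the two find commands would remove, without deleting them."""
    targets = []
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        # The file must sit somewhere below a directory named "migrations".
        if "migrations" not in path.relative_to(root).parts[:-1]:
            continue
        is_migration_source = path.suffix == ".py" and path.name != "__init__.py"
        if is_migration_source or path.suffix == ".pyc":
            targets.append(path)
    return sorted(targets)
```

Running `migration_files_to_delete(Path("."))` from the backend folder prints nothing by itself; iterate over the result to review the list before running the actual commands.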

To run API tests on the backend, simply type "poetry run pytest" in a shell in the backend folder.

To run functional tests on the frontend, do the following actions:

- in the frontend folder, launch the following command:

tests/e2e-tests.sh

The goal of the test harness is to prevent any regression, i.e. all the tests shall be successful, both for backend and frontend.

- The API is available only in dev mode. To enable it, set the following on the backend, for instance:

export DJANGO_DEBUG=True

- The API documentation will be available on <backend_endpoint>/api/schema/swagger/, for instance http://127.0.0.1:8000/api/schema/swagger/

To interact with it:

- call /api/iam/login/ with your credentials in the body to get the token

- then pass it as a header Authorization: Token {token} for your next calls. Note that it's Token, not Bearer.
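The two calls above can be prepared with the Python standard library. This is a minimal sketch under assumptions: the `username` and `password` field names in the login body and the helper names are hypothetical, so check the Swagger schema for the exact payload and for the key under which the token is returned. The functions only build the requests; nothing is sent until you pass them to `urllib.request.urlopen`.

```python
import json
import urllib.request

API_BASE = "http://127.0.0.1:8000/api"  # example backend endpoint used above


def build_login_request(username: str, password: str) -> urllib.request.Request:
    """Prepare the POST to /api/iam/login/ (body field names are assumed)."""
    body = json.dumps({"username": username, "password": password}).encode()
    return urllib.request.Request(
        f"{API_BASE}/iam/login/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_authenticated_request(path: str, token: str) -> urllib.request.Request:
    """Prepare a GET carrying the token with the 'Token' scheme, not 'Bearer'."""
    return urllib.request.Request(
        f"{API_BASE}/{path.lstrip('/')}",
        headers={"Authorization": f"Token {token}"},
    )
```

Keeping request construction separate from sending makes it easy to inspect the headers before any credentials leave your machine.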

The docker-compose-prod.yml highlights a relevant configuration with a Caddy proxy in front of the frontend. It exposes API calls only for SSO. Note that docker-compose.yml exposes the full API, which is not yet recommended for production.

Set DJANGO_DEBUG=False for security reasons.

[!NOTE] The frontend cannot infer the host automatically, so you need to either set the ORIGIN variable, or the HOST_HEADER and PROTOCOL_HEADER variables. Please see the sveltekit doc on this tricky issue. Beware that this approach does not work with "pnpm run dev", which should not be a worry for production.

[!NOTE] Caddy needs to receive a SNI header. Therefore, for your public URL (the one declared in CISO_ASSISTANT_URL), you need to use a FQDN, not an IP address, as the SNI is not transmitted by a browser if the host is an IP address. Another tricky issue!
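A quick way to catch the IP-address pitfall described above is to inspect the host part of the public URL. The helper below is a hypothetical sketch using only the standard library; it reports whether the host is a literal IP address and does not verify that a name is a resolvable FQDN.

```python
import ipaddress
from urllib.parse import urlsplit


def host_is_ip_address(url: str) -> bool:
    """Return True when the URL's host is a literal IPv4 or IPv6 address."""
    host = urlsplit(url).hostname
    if host is None:
        raise ValueError(f"no host found in {url!r}")
    try:
        ipaddress.ip_address(host)
    except ValueError:
        # Not parseable as an IP address, so it is a host name.
        return False
    return True
```

If `host_is_ip_address(CISO_ASSISTANT_URL)` returns True, switch the public URL to a host name before putting Caddy in front of the application.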

- FR: French

- EN: English

- AR: Arabic

- PT: Portuguese

- ES: Spanish

- DE: German

- NL: Dutch

- IT: Italian

- PL: Polish

- RO: Romanian

- HI: Hindi

- UR: Urdu

- CS: Czech

- SV: Swedish

- ID: Indonesian

- DA: Danish

- HU: Hungarian

- UK: Ukrainian

- EL: Greek

- TR: Turkish

- HR: Croatian

- Django - Python Web Development Framework

- SvelteKit - Frontend Framework

- eCharts - Charting library

- unovis - Complementary charting library

- Gunicorn - Python WSGI HTTP Server for UNIX

- Caddy - The coolest reverse Proxy

- Gitbook - Documentation platform

- PostgreSQL - Open Source RDBMS

- SQLite - Open Source RDBMS

- Docker - Container Engine

- inlang - The ecosystem to globalize your software

- Huey - A lightweight task queue

Great care has been taken to follow security best practices. Please report any issue to [email protected].

This repository contains the source code for both the Open Source edition of CISO Assistant (Community Edition), released under the AGPL v3, as well as the commercial edition of CISO Assistant (Pro and Enterprise Editions), released under the intuitem Commercial Software License. This mono-repository approach is adopted for simplicity.

All the files within the top-level "enterprise" directory are released under the intuitem Commercial Software License.

All the files outside the top-level "enterprise" directory are released under the AGPLv3.

See LICENSE.md for details. For more details about the commercial editions, you can reach us on [email protected].

Unless otherwise noted, all files are © intuitem.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ciso-assistant-community

Similar Open Source Tools

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

Memori

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT and can be contacted via email at [email protected].

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

verifywise

Verifywise is a tool designed to help developers easily verify the correctness of their code. It provides a simple and intuitive interface for running various types of tests and checks on codebases, ensuring that the code meets quality standards and requirements. With Verifywise, developers can automate the verification process, saving time and effort in identifying and fixing potential issues in their code. The tool supports multiple programming languages and frameworks, making it versatile and adaptable to different project requirements. Whether you are working on a small personal project or a large-scale software development initiative, Verifywise can help you ensure the reliability and robustness of your codebase.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

verifywise

VerifyWise is an open-source AI governance platform designed to help businesses harness the power of AI safely and responsibly. The platform ensures compliance and robust AI management without compromising on security. It offers additional products like MaskWise for data redaction, EvalWise for AI model evaluation, and FlagWise for security threat monitoring. VerifyWise simplifies AI governance for organizations, aiding in risk management, regulatory compliance, and promoting responsible AI practices. It features options for on-premises or private cloud hosting, open-source with AGPLv3 license, AI-generated answers for compliance audits, source code transparency, Docker deployment, user registration, role-based access control, and various AI governance tools like risk management, bias & fairness checks, evidence center, AI trust center, and more.

AgentBench

AgentBench is a benchmark designed to evaluate Large Language Models (LLMs) as autonomous agents in various environments. It includes 8 distinct environments such as Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles. The tool provides a comprehensive evaluation of LLMs' ability to operate as agents by offering Dev and Test sets for each environment. Users can quickly start using the tool by following the provided steps, configuring the agent, starting task servers, and assigning tasks. AgentBench aims to bridge the gap between LLMs' proficiency as agents and their practical usability.

gpustack

GPUStack is an open-source GPU cluster manager designed for running large language models (LLMs). It supports a wide variety of hardware, scales with GPU inventory, offers lightweight Python package with minimal dependencies, provides OpenAI-compatible APIs, simplifies user and API key management, enables GPU metrics monitoring, and facilitates token usage and rate metrics tracking. The tool is suitable for managing GPU clusters efficiently and effectively.

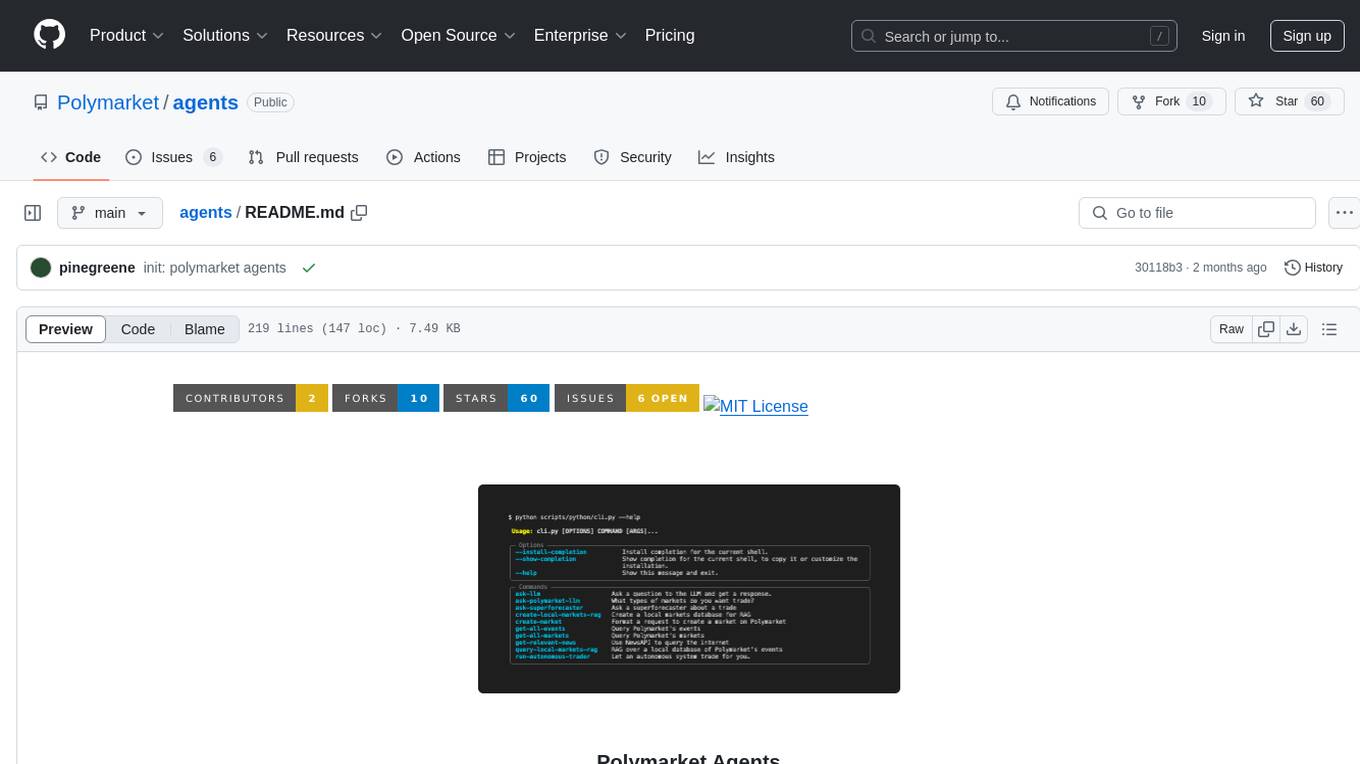

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with the Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components such as APIs, scripts for managing local environments and server setup, and a CLI for end-user commands.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

For similar tasks

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware (see the project's online catalog and source code).

CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers, while keeping backward compatibility. Please report any issues you encounter and contact us via the public Discord server to help this collaborative engineering effort!

CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors:

- must work out of the box with the default options and without the need to edit paths, environment variables and configuration files;

- must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them;

- must have a very simple and human-friendly command line with a Python API and minimal dependencies;

- must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages;

- must have the same interface to run all automations natively, in a cloud or inside containers.

CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they move from development to production.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines the flexibility of script writing, the latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

LLM-Finetuning-Toolkit

LLM Finetuning toolkit is a config-based CLI tool for launching a series of LLM fine-tuning experiments on your data and gathering their results. It allows users to control all elements of a typical experimentation pipeline - prompts, open-source LLMs, optimization strategy, and LLM testing - through a single YAML configuration file. The toolkit supports basic, intermediate, and advanced usage scenarios, enabling users to run custom experiments, conduct ablation studies, and automate fine-tuning workflows. It provides features for data ingestion, model definition, training, inference, quality assurance, and artifact outputs, making it a comprehensive tool for fine-tuning large language models.

For similar jobs

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

gigachad-grc

A comprehensive, modular, containerized Governance, Risk, and Compliance (GRC) platform built with modern technologies. Manage your entire security program from compliance tracking to risk management, third-party assessments, and external audits. The platform includes specialized modules for Compliance, Data Management, Risk Management, Third-Party Risk Management, Trust, Audit, Tools, AI & Automation, and Administration. It offers features like controls management, frameworks assessment, policies lifecycle management, vendor risk management, security questionnaires, knowledge base, audit management, awareness training, phishing simulations, AI-powered risk scoring, and MCP server integration. The tech stack includes Node.js, TypeScript, React, PostgreSQL, Keycloak, Traefik, Redis, and RustFS for storage.

llm-course

The llm-course repository is a collection of resources and materials for a course on Legal and Legislative Drafting. It includes lecture notes, assignments, readings, and other educational materials to help students understand the principles and practices of drafting legal documents. The course covers topics such as statutory interpretation, legal drafting techniques, and the role of legislation in the legal system. Whether you are a law student, legal professional, or someone interested in understanding the intricacies of legal language, this repository provides valuable insights and resources to enhance your knowledge and skills in legal drafting.

non-ai-licenses

This repository provides templates for software and digital work licenses that restrict usage in AI training datasets or AI technologies. It includes various license styles such as Apache, BSD, MIT, UPL, ISC, CC0, and MPL-2.0.

sec-parser

The `sec-parser` project simplifies extracting meaningful information from SEC EDGAR HTML documents by organizing them into semantic elements and a tree structure. It helps in parsing SEC filings for financial and regulatory analysis, analytics and data science, AI and machine learning, causal AI, and large language models. The tool is especially beneficial for AI, ML, and LLM applications by streamlining data pre-processing and feature extraction.

docq

Docq is a private and secure GenAI tool designed to extract knowledge from business documents, enabling users to find answers independently. It allows data to stay within organizational boundaries, supports self-hosting with various cloud vendors, and offers multi-model and multi-modal capabilities. Docq is extensible, open-source (AGPLv3), and provides commercial licensing options. The tool aims to be a turnkey solution for organizations to adopt AI innovation safely, with plans for future features like more data ingestion options and model fine-tuning.

AwesomeResponsibleAI

Awesome Responsible AI is a curated list of academic research, books, codes of ethics, courses, data sets, frameworks, institutes, newsletters, principles, podcasts, reports, tools, regulations, and standards related to Responsible, Trustworthy, and Human-Centered AI. It covers various concepts such as Responsible AI, Trustworthy AI, Human-Centered AI, Responsible AI frameworks, AI Governance, and more. The repository provides a comprehensive collection of resources for individuals interested in ethical, transparent, and accountable AI development and deployment.

verifywise

VerifyWise is an open-source AI governance platform designed to help businesses harness the power of AI safely and responsibly. The platform ensures compliance and robust AI management without compromising on security. It offers additional products like MaskWise for data redaction, EvalWise for AI model evaluation, and FlagWise for security threat monitoring. VerifyWise simplifies AI governance for organizations, aiding in risk management, regulatory compliance, and promoting responsible AI practices. Its features include on-premises or private cloud hosting, open-source licensing under AGPLv3, AI-generated answers for compliance audits, source code transparency, Docker deployment, user registration, role-based access control, and AI governance tools such as risk management, bias and fairness checks, an evidence center, an AI trust center, and more.