gptme

Your agent in your terminal, equipped with local tools: writes code, uses the terminal, browses the web, and uses vision.

Stars: 3458

gptme is a tool for interacting with an LLM assistant directly in your terminal through a chat-style interface. The assistant can run shell commands, execute code, read and write files, and more, which makes it suitable for a range of development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter', offering flexibility, and privacy when used with a local model. gptme supports code execution, file manipulation, context passing, and self-correction, and works with various AI models such as GPT-4. It also includes a GitHub Bot for requesting changes, which runs entirely in GitHub Actions. In-progress features include intelligent handling of long contexts, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

README:

/ʤiː piː tiː miː/

Getting Started • Website • Documentation

📜 Personal AI assistant/agent in your terminal, with tools so it can:

Use the terminal, run code, edit files, browse the web, use vision, and much more.

A great coding agent, but general-purpose enough to assist in all kinds of knowledge work, from a simple but powerful CLI.

An unconstrained local alternative to: ChatGPT with "Code Interpreter", Cursor Agent, etc.

Not limited by lack of software, internet access, timeouts, or privacy concerns (if using local models).

[!NOTE] These demos are very out of date and do not reflect the latest capabilities. We hope to update them soon!

Demos: Fibonacci (old), Snake with curses, Mandelbrot with curses, Answer question from URL.

Screenshots: Terminal UI, Web UI.

You can find more Demos and Examples in the documentation.

- 💻 Code execution

- 🧩 Read, write, and change files

- Makes incremental changes with the patch tool.

- 🌐 Search and browse the web.

- Can use a browser via Playwright with the browser tool.

- 👀 Vision

- Can see images referenced in prompts, screenshots of your desktop, and web pages.

- 🔄 Self-correcting

- Output is fed back to the assistant, allowing it to respond and self-correct.

- 🤖 Support for several LLM providers

- Use OpenAI, Anthropic, OpenRouter, or serve locally with `llama.cpp`

- 🌐 Web UI and REST API

- Modern web interface at chat.gptme.org (gptme-webui)

- Simple built-in web UI included in the Python package

- Server with REST API

- 💻 Computer use tool, as hyped by Anthropic (see #216)

- Give the assistant access to a full desktop, allowing it to interact with GUI applications.

- 🤖 Long-running agents and advanced agent architectures (see #143 and #259)

- Create your own agent with persistence using gptme-agent-template, like Bob.

- ✨ Many smaller features to ensure a great experience

- 🚰 Pipe in context via `stdin` or as arguments.

- Passing a filename as an argument will read the file and include it as context.

- → Smart completion and highlighting:

- Tab completion and highlighting for commands and paths

- 📝 Automatic naming of conversations

- ✅ Detects and integrates pre-commit

- 🗣️ Text-to-Speech support, locally generated using Kokoro

- 🎯 Feature flags for advanced usage, see configuration docs

- 🖥 Development: Write and run code faster with AI assistance.

- 🎯 Shell Expert: Get the right command using natural language (no more memorizing flags!).

- 📊 Data Analysis: Process and analyze data directly in your terminal.

- 🎓 Interactive Learning: Experiment with new technologies or codebases hands-on.

- 🤖 Agents & Tools: Experiment with agents & tools in a local environment.

- 🧰 Easy to extend

- Most functionality is implemented as tools, making it easy to add new features.

- 🧪 Extensive testing, high coverage.

- 🧹 Clean codebase, checked and formatted with `mypy`, `ruff`, and `pyupgrade`.

- 🤖 GitHub Bot to request changes from comments! (see #16)

- Operates in this repo! (see #18 for example)

- Runs entirely in GitHub Actions.

- 📊 Evaluation suite for testing capabilities of different models

- 📝 gptme.vim for easy integration with vim

- 🌳 Tree-based conversation structure (see #17)

- 📜 RAG to automatically include context from local files (see #59)

- 🏆 Advanced evals for testing frontier capabilities
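The self-correcting behaviour listed among the features above (output is fed back to the assistant so it can respond and self-correct) can be pictured as a small loop. What follows is a minimal sketch under stated assumptions, not gptme's actual implementation: `run_code`, `self_correcting_loop`, and `fake_assistant` are hypothetical names, the message format is made up for illustration, and the "assistant" is a stub rather than a real LLM call.

```python
import contextlib
import io
import traceback
from typing import Callable

# Hypothetical type: an "assistant" maps the message history to a code snippet.
Assistant = Callable[[list[dict[str, str]]], str]


def run_code(code: str) -> tuple[bool, str]:
    """Execute a Python snippet; return (succeeded, captured output or traceback)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # no sandboxing here: illustration only
        return True, buffer.getvalue()
    except Exception:
        return False, buffer.getvalue() + traceback.format_exc()


def self_correcting_loop(
    assistant: Assistant, task: str, max_rounds: int = 3
) -> list[dict[str, str]]:
    """Ask for code, run it, and feed the result back until a run succeeds."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_rounds):
        code = assistant(history)
        history.append({"role": "assistant", "content": code})
        ok, output = run_code(code)
        # The execution result becomes a new message the assistant can react to.
        history.append({"role": "system", "content": output})
        if ok:
            break
    return history


def fake_assistant(history: list[dict[str, str]]) -> str:
    """Stand-in for an LLM: buggy code first, a fix once it has seen the error."""
    saw_error = any(
        m["role"] == "system" and "NameError" in m["content"] for m in history
    )
    return "print(1 + 1)" if saw_error else "print(undefined_name)"


if __name__ == "__main__":
    for message in self_correcting_loop(fake_assistant, "print the sum of 1 and 1"):
        print(f"{message['role']}: {message['content'].strip()}")
```

The key design point is that the traceback is appended to the conversation like any other message, so the next request to the model already contains the evidence it needs to correct itself.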

Install with pipx:

# requires Python 3.10+

pipx install gptme

Now, to get started, run:

gptme

Here are some examples:

gptme 'write an impressive and colorful particle effect using three.js to particles.html'

gptme 'render mandelbrot set to mandelbrot.png'

gptme 'suggest improvements to my vimrc'

gptme 'convert to h265 and adjust the volume' video.mp4

git diff | gptme 'complete the TODOs in this diff'

make test | gptme 'fix the failing tests'

For more, see the Getting Started guide and the Examples in the documentation.
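The examples above mix three kinds of input: quoted prompt text, a filename argument (`video.mp4`), and piped output (`git diff | gptme ...`). As a rough illustration of how a CLI could fold these into one prompt, here is a minimal sketch. It is an assumption-laden toy, not gptme's code: `build_prompt` and the `--- name ---` delimiters are hypothetical, and it only handles text files.

```python
import os


def build_prompt(args: list[str], piped: str = "") -> str:
    """Combine CLI arguments and piped text into a single prompt string.

    Arguments that name an existing file are replaced by the file's contents;
    every other argument is treated as plain prompt text.
    """
    parts: list[str] = []
    for arg in args:
        if os.path.isfile(arg):
            # Hypothetical delimiter so the model can tell where a file starts.
            with open(arg, encoding="utf-8") as handle:
                parts.append(f"--- {arg} ---\n{handle.read()}")
        else:
            parts.append(arg)
    if piped:
        # Piped context goes last, after everything given on the command line.
        parts.append(f"--- stdin ---\n{piped}")
    return "\n\n".join(parts)
```

In a real command-line entry point, `piped` would typically be filled from `sys.stdin.read()` when `sys.stdin.isatty()` is false, and `args` from `sys.argv[1:]`.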

$ gptme --help

Usage: gptme [OPTIONS] [PROMPTS]...

gptme is a chat-CLI for LLMs, empowering them with tools to run shell

commands, execute code, read and manipulate files, and more.

If PROMPTS are provided, a new conversation will be started with it. PROMPTS

can be chained with the '-' separator.

The interface provides user commands that can be used to interact with the

system.

Available commands:

/undo Undo the last action

/log Show the conversation log

/tools Show available tools

/edit Edit the conversation in your editor

/rename Rename the conversation

/fork Create a copy of the conversation with a new name

/summarize Summarize the conversation

/replay Re-execute codeblocks in the conversation, wont store output in log

/impersonate Impersonate the assistant

/tokens Show the number of tokens used

/export Export conversation as standalone HTML

/help Show this help message

/exit Exit the program

Options:

-n, --name TEXT Name of conversation. Defaults to generating a random

name.

-m, --model TEXT Model to use, e.g. openai/gpt-4o,

anthropic/claude-3-5-sonnet-20240620. If only

provider given, a default is used.

-w, --workspace TEXT Path to workspace directory. Pass '@log' to create a

workspace in the log directory.

-r, --resume Load last conversation

-y, --no-confirm Skips all confirmation prompts.

-n, --non-interactive Force non-interactive mode. Implies --no-confirm.

--system TEXT System prompt. Can be 'full', 'short', or something

custom.

-t, --tools TEXT Comma-separated list of tools to allow. Available:

read, save, append, patch, shell, subagent, tmux,

browser, gh, chats, screenshot, vision, computer,

python.

--no-stream Don't stream responses

--show-hidden Show hidden system messages.

-v, --verbose Show verbose output.

--version Show version and configuration information

--help Show this message and exit.
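The help text above notes that PROMPTS can be chained with the '-' separator. One plausible way to parse such a chain is sketched below. This is only an illustration under assumptions: `split_prompts` is a hypothetical helper, and joining the arguments of one prompt with spaces is a guess rather than documented gptme behaviour.

```python
def split_prompts(args: list[str], separator: str = "-") -> list[str]:
    """Group CLI arguments into prompts, starting a new prompt at each separator."""
    prompts: list[str] = []
    current: list[str] = []
    for arg in args:
        if arg == separator:
            # A standalone separator closes the prompt collected so far.
            if current:
                prompts.append(" ".join(current))
            current = []
        else:
            current.append(arg)
    if current:
        prompts.append(" ".join(current))
    return prompts
```

Under this sketch, an invocation such as `gptme 'write tests' - 'run them'` would yield two prompts that are handled one after the other in the same conversation.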


Alternative AI tools for gptme

Similar Open Source Tools

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose enough to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

OpenAdapt

OpenAdapt is an open-source software adapter between Large Multimodal Models (LMMs) and traditional desktop and web Graphical User Interfaces (GUIs). It aims to automate repetitive GUI workflows by leveraging the power of LMMs. OpenAdapt records user input and screenshots, converts them into tokenized format, and generates synthetic input via transformer model completions. It also analyzes recordings to generate task trees and replay synthetic input to complete tasks. OpenAdapt is model agnostic and generates prompts automatically by learning from human demonstration, ensuring that agents are grounded in existing processes and mitigating hallucinations. It works with all types of desktop GUIs, including virtualized and web, and is open source under the MIT license.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

Sanmill

Sanmill is a free, powerful UCI-like N men's morris program with CUI, Flutter GUI and Qt GUI. Nine men's morris is a strategy board game for two players dating at least to the Roman Empire. The game is also known as nine-man morris, mill, mills, the mill game, merels, merrills, merelles, marelles, morelles, and ninepenny marl in English.

ha-llmvision

LLM Vision is a Home Assistant integration that allows users to analyze images, videos, and camera feeds using multimodal LLMs. It supports providers such as OpenAI, Anthropic, Google Gemini, LocalAI, and Ollama. Users can input images and videos from camera entities or local files, with the option to downscale images for faster processing. The tool provides detailed instructions on setting up LLM Vision and each supported provider, along with usage examples and service call parameters.

Open-LLM-VTuber

Open-LLM-VTuber is a voice-interactive AI companion supporting real-time voice conversations and featuring a Live2D avatar. It can run offline on Windows, macOS, and Linux, offering web and desktop client modes. Users can customize appearance and persona, with rich LLM inference, text-to-speech, and speech recognition support. The project is highly customizable, extensible, and actively developed with exciting features planned. It provides privacy with offline mode, persistent chat logs, and various interaction features like voice interruption, touch feedback, Live2D expressions, pet mode, and more.

rowboat

Rowboat is a tool that allows users to build AI agents instantly with natural language, connect tools with one-click integrations, power workflows with knowledge by adding documents for RAG, automate workflows by setting up triggers and actions, and deploy anywhere via API or SDK. Users can access a hosted version to start building agents right away. The tool provides features such as native RAG support, custom LLM providers, tools & triggers for automation, and API & SDK integration. Users can refer to the documentation to learn how to start building agents with Rowboat.

For similar tasks

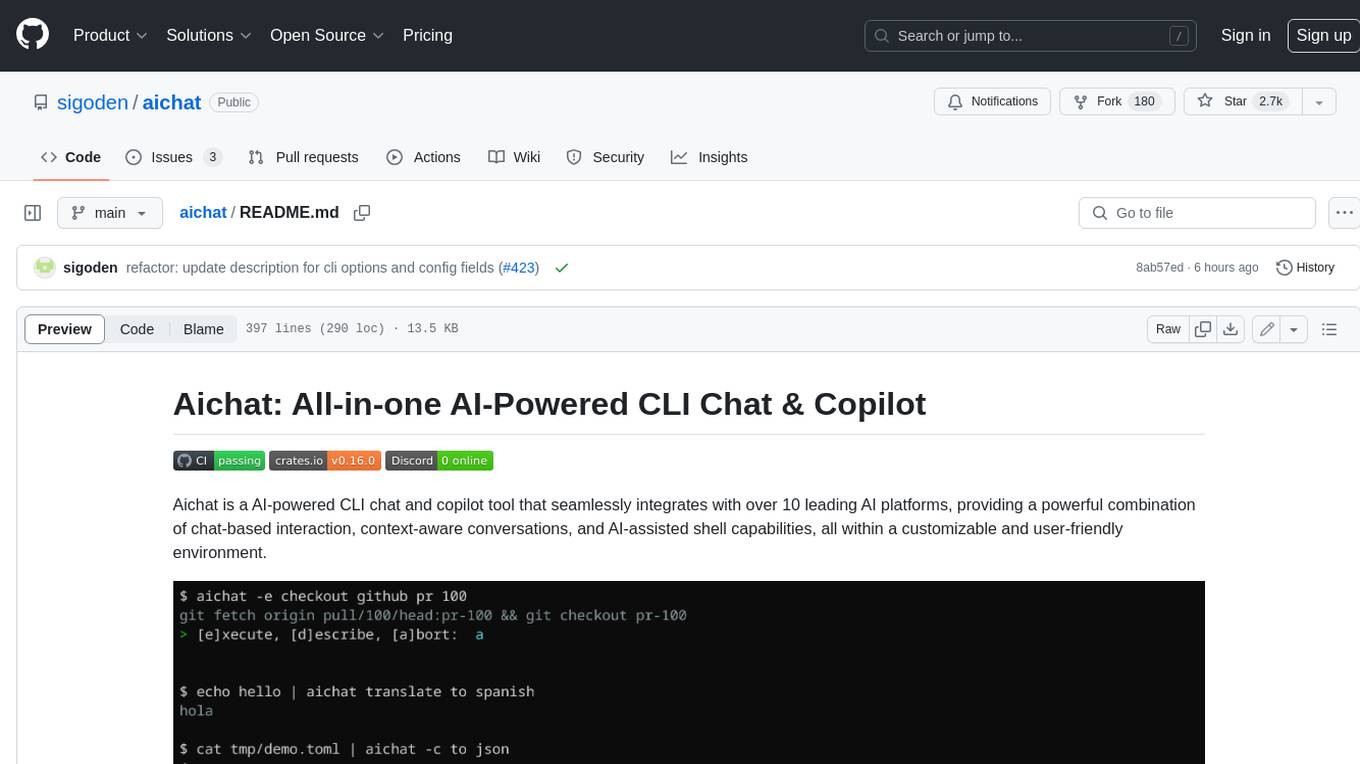

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

- **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.

- Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.

- Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.

- Automate tasks on your computer

- Improve accessibility

- ... and much more

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

AIRAVAT

AIRAVAT is a multifunctional Android Remote Access Tool (RAT) with a GUI-based Web Panel that does not require port forwarding. It allows users to access various features on the victim's device, such as reading files, downloading media, retrieving system information, managing applications, SMS, call logs, contacts, notifications, keylogging, admin permissions, phishing, audio recording, music playback, device control (vibration, torch light, wallpaper), executing shell commands, clipboard text retrieval, URL launching, and background operation. The tool requires a Firebase account and tools like ApkEasy Tool or ApkTool M for building. Users can set up Firebase, host the web panel, modify Instagram.apk for RAT functionality, and connect the victim's device to the web panel. The tool is intended for educational purposes only, and users are solely responsible for its use.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

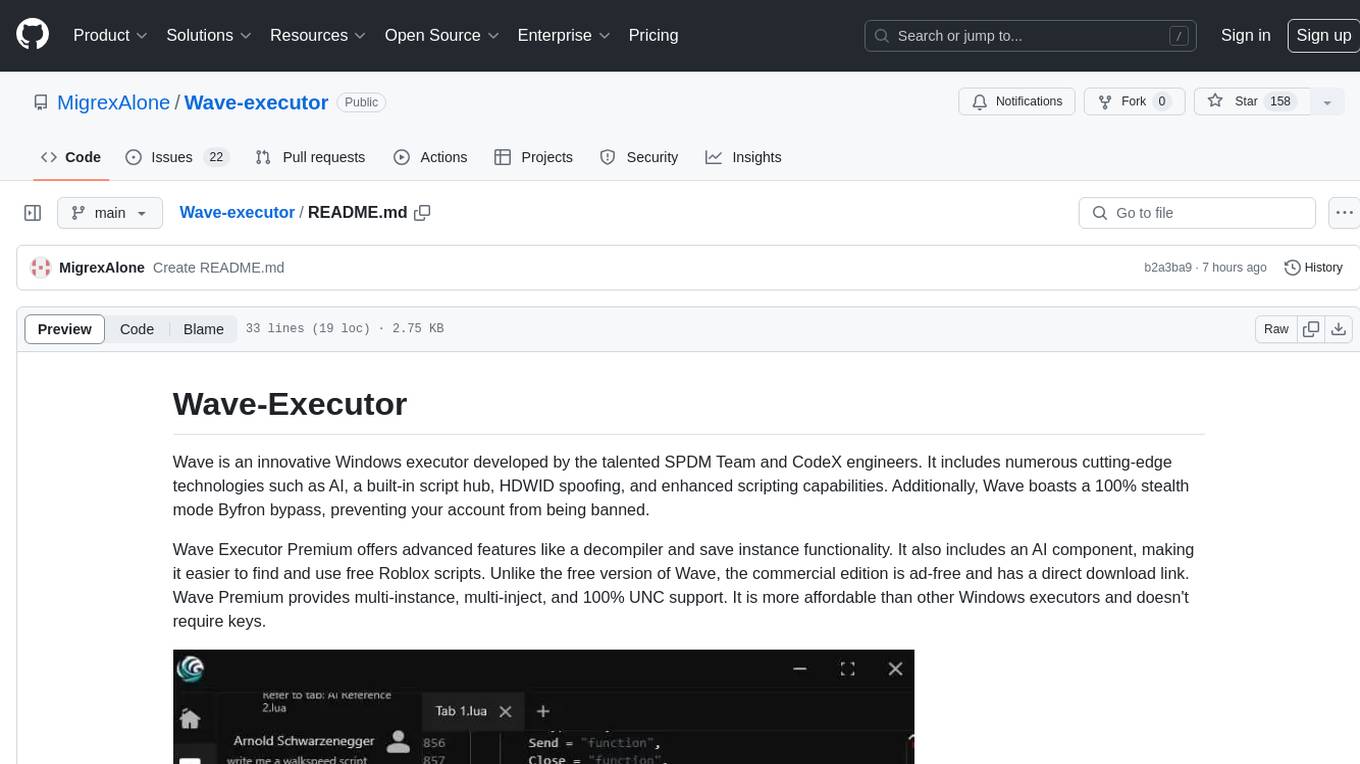

Wave-executor

Wave Executor is an innovative Windows executor developed by SPDM Team and CodeX engineers, featuring cutting-edge technologies like AI, built-in script hub, HDWID spoofing, and enhanced scripting capabilities. It offers a 100% stealth mode Byfron bypass, advanced features like decompiler and save instance functionality, and a commercial edition with ad-free experience and direct download link. Wave Premium provides multi-instance, multi-inject, and 100% UNC support, making it a cost-effective option for executing scripts in popular Roblox games.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.