zenml

ZenML 🙏: One AI Platform from Pipelines to Agents. https://zenml.io.

Stars: 5216

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

README:

Projects •

Roadmap •

Changelog •

Report Bug •

Sign up for ZenML Pro •

Blog •

Docs

🎉 For the latest release, see the changelog.

ZenML is built for ML or AI Engineers working on traditional ML use-cases, LLM workflows, or agents, in a company setting.

At it's core, ZenML allows you to write workflows (pipelines) that run on any infrastructure backend (stacks). You can embed any Pythonic logic within these pipelines, like training a model, or running an agentic loop. ZenML then operationalizes your application by:

- Automatically containerizing and tracking your code.

- Tracking individual runs with metrics, logs, and metadata.

- Abstracting away infrastructure complexity.

- Integrating your existing tools and infrastructure e.g. MLflow, Langgraph, Langfuse, Sagemaker, GCP Vertex, etc.

- Allowing you to quickly iterate on experiments with an observable layer, in development and in production.

...amongst many other features.

ZenML is used by thousands of companies to run their AI workflows. Here are some featured ones:

(please email [email protected] if you want to be featured)

# Install ZenML with server capabilities

pip install "zenml[server]" # pip install zenml will install a slimmer client

# Initialize your ZenML repository

zenml init

# Start local server or connect to a remote one

zenml loginYou can then explore any of the examples in this repo. We recommend starting with the quickstart, which demonstrates core ZenML concepts: pipelines, steps, artifacts, snapshots, and deployments.

ZenML uses a client-server architecture with an integrated web dashboard (zenml-io/zenml-dashboard):

-

Local Development:

pip install "zenml[local]"- runs both client and server locally -

Production: Deploy server separately, connect with

pip install zenml+zenml login <server-url>

Here is a short demo:

The best way to learn about ZenML is through our comprehensive documentation and tutorials:

- Documentation - Complete product documentation

- Your First AI Pipeline - Build and evaluate an AI service in minutes

- Starter Guide - From zero to production in 30 minutes

- LLMOps Guide - Specific patterns for LLM applications

- SDK Reference - Complete SDK reference

- Agent Architecture Comparison - Compare AI agents with LangGraph workflows, LiteLLM integration, and automatic visualizations via custom materializers

- Deploying ML Models - Deploy classical ML models as production endpoints with monitoring and versioning

- Deploying Agents - Document analysis service with pipelines, evaluation, and embedded web UI

- E2E Batch Inference - Complete MLOps pipeline with feature engineering

- LLM RAG Pipeline - Production RAG with evaluation loops

- Agentic Workflow (Deep Research) - Orchestrate your agents with ZenML

- Fine-tuning Pipeline - Fine-tune and deploy LLMs

Stop clicking through dashboards to understand your ML workflows. The ZenML MCP Server lets you query your pipelines, analyze runs, and trigger deployments using natural language through Claude Desktop, Cursor, or any MCP-compatible client.

💬 "Which pipeline runs failed this week and why?"

📊 "Show me accuracy metrics for all my customer churn models"

🚀 "Trigger the latest fraud detection pipeline with production data"

Quick Setup:

- Download the

.dxtfile from zenml-io/mcp-zenml - Drag it into Claude Desktop settings

- Add your ZenML server URL and API key

- Start chatting with your ML infrastructure

The MCP (Model Context Protocol) integration transforms your ZenML metadata into conversational insights, making pipeline debugging and analysis as easy as asking a question. Perfect for teams who want to democratize access to ML operations without requiring dashboard expertise.

ZenML is featured in these comprehensive guides to production AI systems.

Contribute:

- 🌟 Star us on GitHub - Help others discover ZenML

- 🤝 Contributing Guide - Start with

good-first-issue - 💻 Write Integrations - Add your favorite tools

Stay Updated:

- 🗺 Public Roadmap - See what's coming next

- 📰 Blog - Best practices and case studies

- 🎙 Slack - Talk with AI practitioners

Q: "Do I need to rewrite my agents or models to use ZenML?"

A: No. Wrap your existing code in a @step. Keep using scikit-learn, PyTorch, LangGraph, LlamaIndex, or raw API calls. ZenML orchestrates your tools, it doesn't replace them.

Q: "How is this different from LangSmith/Langfuse?"

A: They provide excellent observability for LLM applications. We orchestrate the full MLOps lifecycle for your entire AI stack. With ZenML, you manage both your classical ML models and your AI agents in one unified framework, from development and evaluation all the way to production deployment.

Q: "Can I use my existing MLflow/W&B setup?"

A: Yes! ZenML integrates with both MLflow and Weights & Biases. Your experiments, our pipelines.

Q: "Is this just MLflow with extra steps?"

A: No. MLflow tracks experiments. We orchestrate the entire development process – from training and evaluation to deployment and monitoring – for both models and agents.

Q: "How do I configure ZenML with Kubernetes?"

A: ZenML integrates with Kubernetes through the native Kubernetes orchestrator, Kubeflow, and other K8s-based orchestrators. See our Kubernetes orchestrator guide and Kubeflow guide, plus deployment documentation.

Q: "What about cost? I can't afford another platform."

A: ZenML's open-source version is free forever. You likely already have the required infrastructure (like a Kubernetes cluster and object storage). We just help you make better use of it for MLOps.

Manage pipelines directly from your editor:

Install from VS Code Marketplace.

ZenML is distributed under the terms of the Apache License Version 2.0. See LICENSE for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for zenml

Similar Open Source Tools

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

langgraph

LangGraph is a low-level orchestration framework for building, managing, and deploying long-running, stateful agents. It provides durable execution, human-in-the-loop capabilities, comprehensive memory management, debugging tools, and production-ready deployment infrastructure. LangGraph can be used standalone or integrated with other LangChain products to streamline LLM application development.

bisheng

Bisheng is a leading open-source **large model application development platform** that empowers and accelerates the development and deployment of large model applications, helping users enter the next generation of application development with the best possible experience.

unstract

Unstract is a no-code platform that enables users to launch APIs and ETL pipelines to structure unstructured documents. With Unstract, users can go beyond co-pilots by enabling machine-to-machine automation. Unstract's Prompt Studio provides a simple, no-code approach to creating prompts for LLMs, vector databases, embedding models, and text extractors. Users can then configure Prompt Studio projects as API deployments or ETL pipelines to automate critical business processes that involve complex documents. Unstract supports a wide range of LLM providers, vector databases, embeddings, text extractors, ETL sources, and ETL destinations, providing users with the flexibility to choose the best tools for their needs.

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.

tools

This repository contains a collection of various tools and utilities that can be used for different purposes. It includes scripts, programs, and resources to assist with tasks related to software development, data analysis, automation, and more. The tools are designed to be versatile and easy to use, providing solutions for common challenges faced by developers and users alike.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

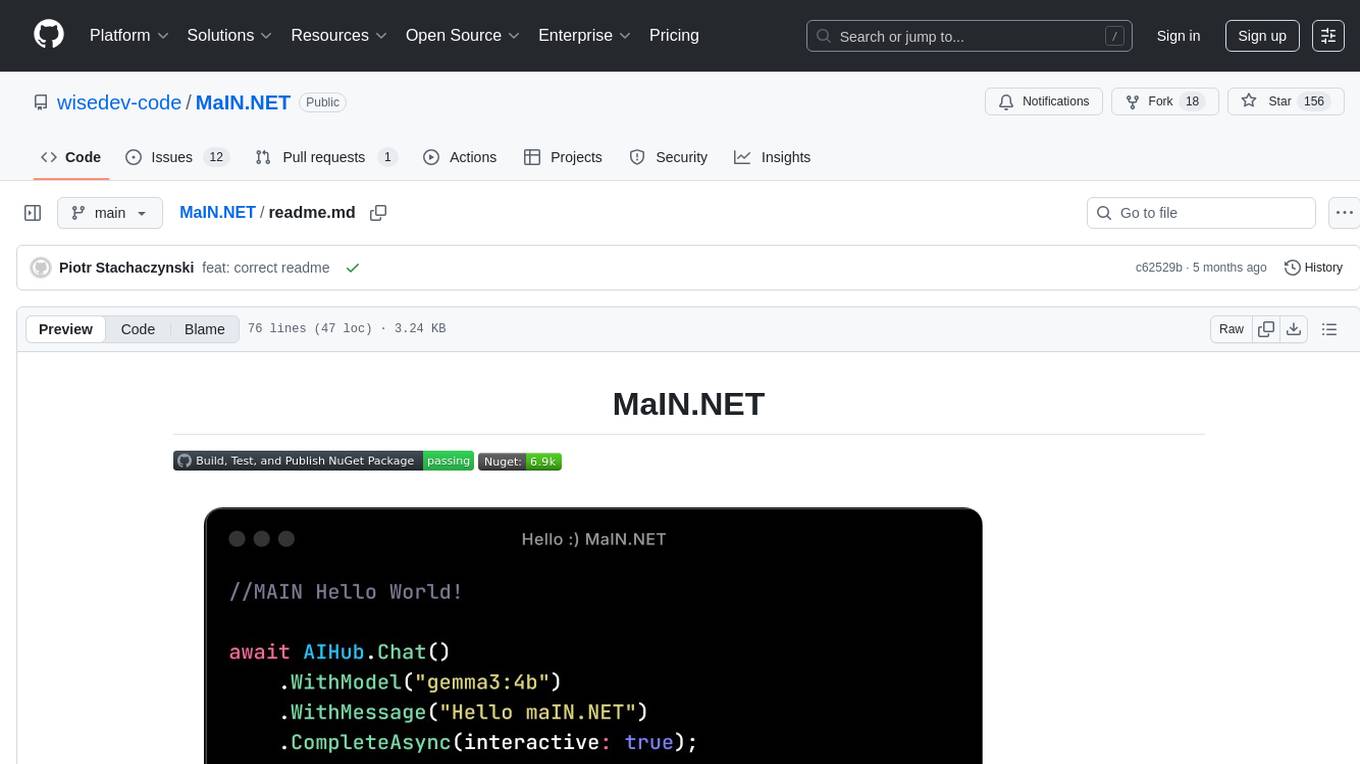

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

sdk-python

Strands Agents is a lightweight and flexible SDK that takes a model-driven approach to building and running AI agents. It supports various model providers, offers advanced capabilities like multi-agent systems and streaming support, and comes with built-in MCP server support. Users can easily create tools using Python decorators, integrate MCP servers seamlessly, and leverage multiple model providers for different AI tasks. The SDK is designed to scale from simple conversational assistants to complex autonomous workflows, making it suitable for a wide range of AI development needs.

redb-open

reDB Node is a distributed, policy-driven data mesh platform that enables True Data Portability across various databases, warehouses, clouds, and environments. It unifies data access, data mobility, and schema transformation into one open platform. Built for developers, architects, and AI systems, reDB addresses the challenges of fragmented data ecosystems in modern enterprises by providing multi-database interoperability, automated schema versioning, zero-downtime migration, real-time developer data environments with obfuscation, quantum-resistant encryption, and policy-based access control. The project aims to build a foundation for future-proof data infrastructure.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

emqx

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, powerful rule engine, flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience with the Business Source License (BSL) 1.1.

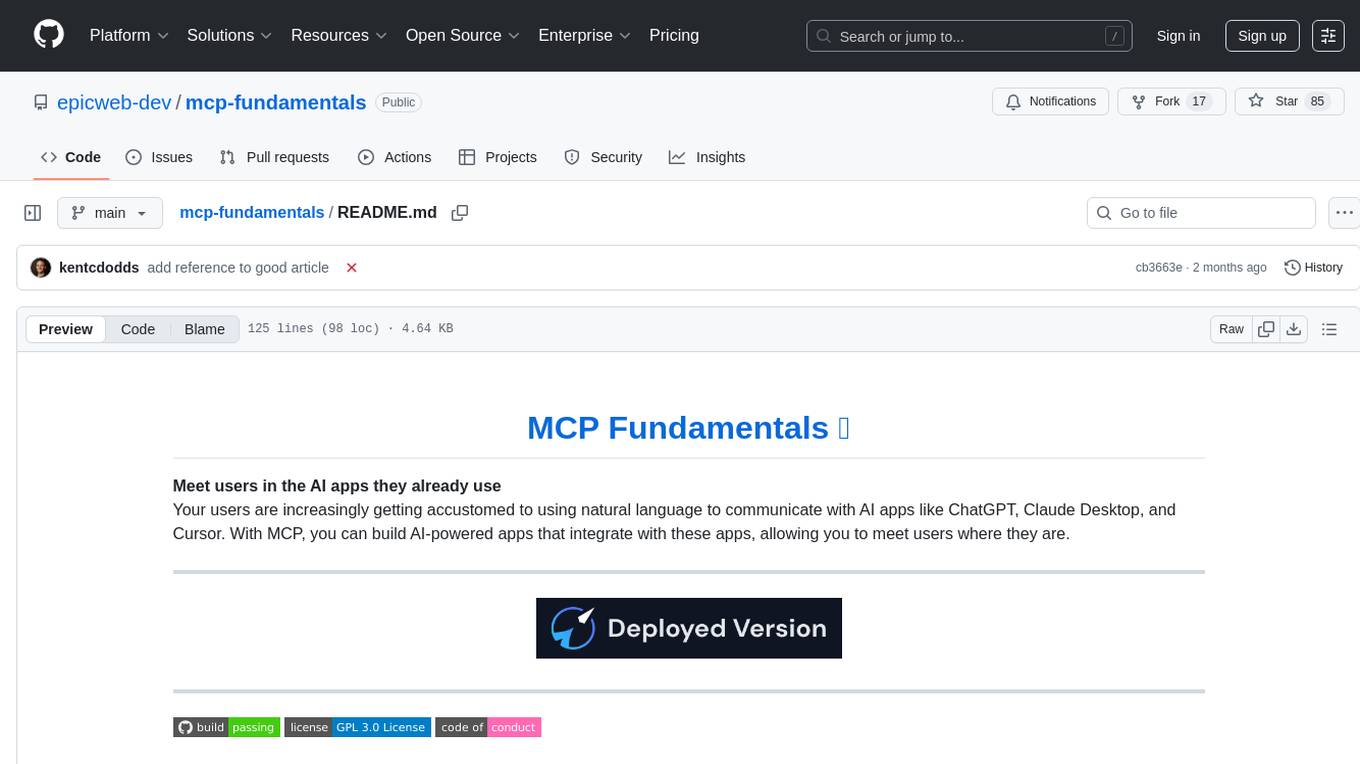

mcp-fundamentals

The mcp-fundamentals repository is a collection of fundamental concepts and examples related to microservices, cloud computing, and DevOps. It covers topics such as containerization, orchestration, CI/CD pipelines, and infrastructure as code. The repository provides hands-on exercises and code samples to help users understand and apply these concepts in real-world scenarios. Whether you are a beginner looking to learn the basics or an experienced professional seeking to refresh your knowledge, mcp-fundamentals has something for everyone.

For similar tasks

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility - please don't hesitate to report encountered issues here and contact us via public Discord Server to help this collaborative engineering effort! CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors: * must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files; * must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them; * must have a very simple and human-friendly command line with a Python API and minimal dependencies; * must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages; * must have the same interface to run all automations natively, in a cloud or inside containers. CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

LLM-Finetuning-Toolkit

LLM Finetuning toolkit is a config-based CLI tool for launching a series of LLM fine-tuning experiments on your data and gathering their results. It allows users to control all elements of a typical experimentation pipeline - prompts, open-source LLMs, optimization strategy, and LLM testing - through a single YAML configuration file. The toolkit supports basic, intermediate, and advanced usage scenarios, enabling users to run custom experiments, conduct ablation studies, and automate fine-tuning workflows. It provides features for data ingestion, model definition, training, inference, quality assurance, and artifact outputs, making it a comprehensive tool for fine-tuning large language models.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.