Best AI tools for: Manage Infrastructure

11 - AI tool Sites

Microsoft Azure

Microsoft Azure is a cloud computing service that offers a wide range of products and solutions for businesses and developers. It provides services such as databases, analytics, compute, containers, hybrid cloud, AI, application development, and more. Azure aims to help organizations innovate, modernize, and scale their operations by leveraging the power of the cloud. With a focus on flexibility, performance, and security, Azure is designed to support a variety of workloads and use cases across different industries.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

Render

Render is a platform that simplifies the deployment and scaling of web applications and services. It provides a seamless experience for developers to launch their applications quickly and efficiently. With Render, users can easily manage their infrastructure, monitor performance, and ensure high availability of their applications. The platform offers a range of features to streamline the deployment process and optimize the performance of web applications.

Heroku

Heroku is a cloud platform that enables developers to build, deliver, monitor, and scale applications quickly and easily. It supports multiple programming languages and provides a seamless deployment process. With Heroku, developers can focus on coding without worrying about infrastructure management.

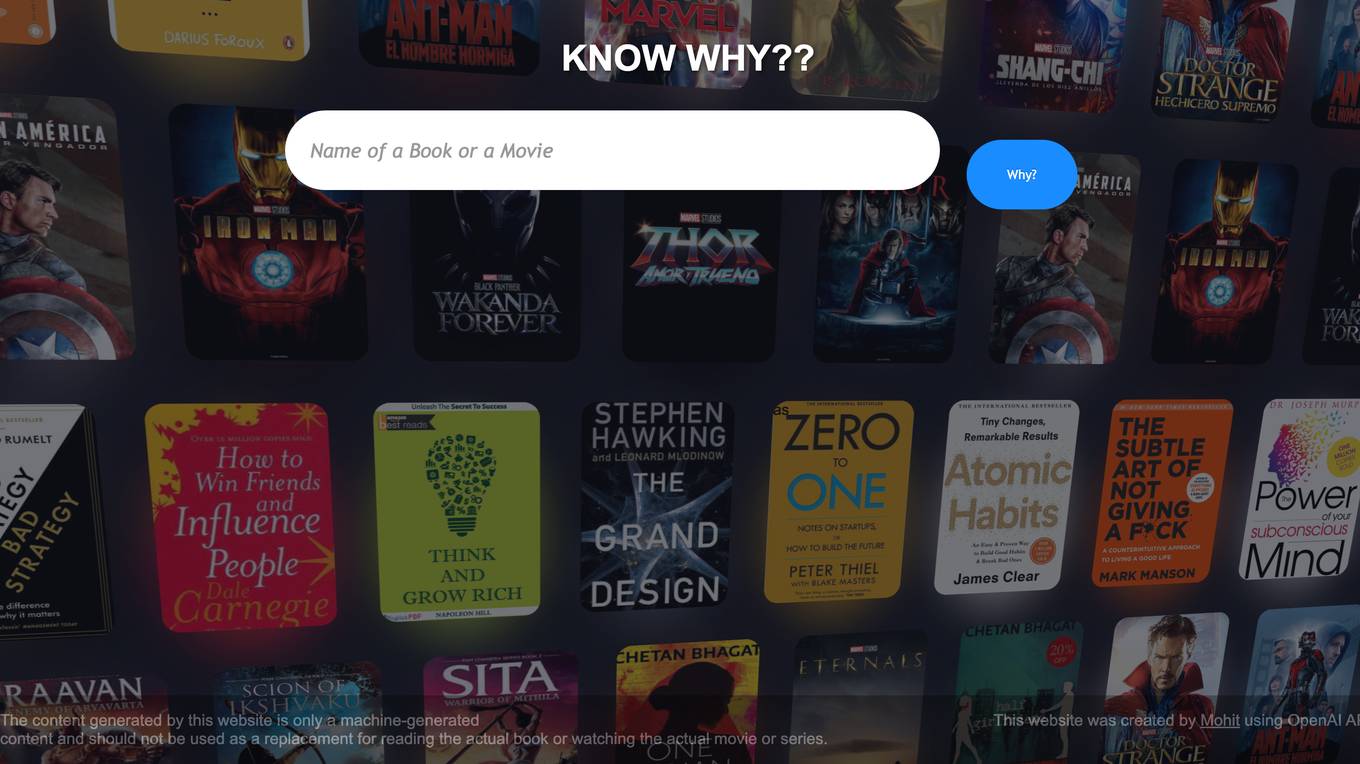

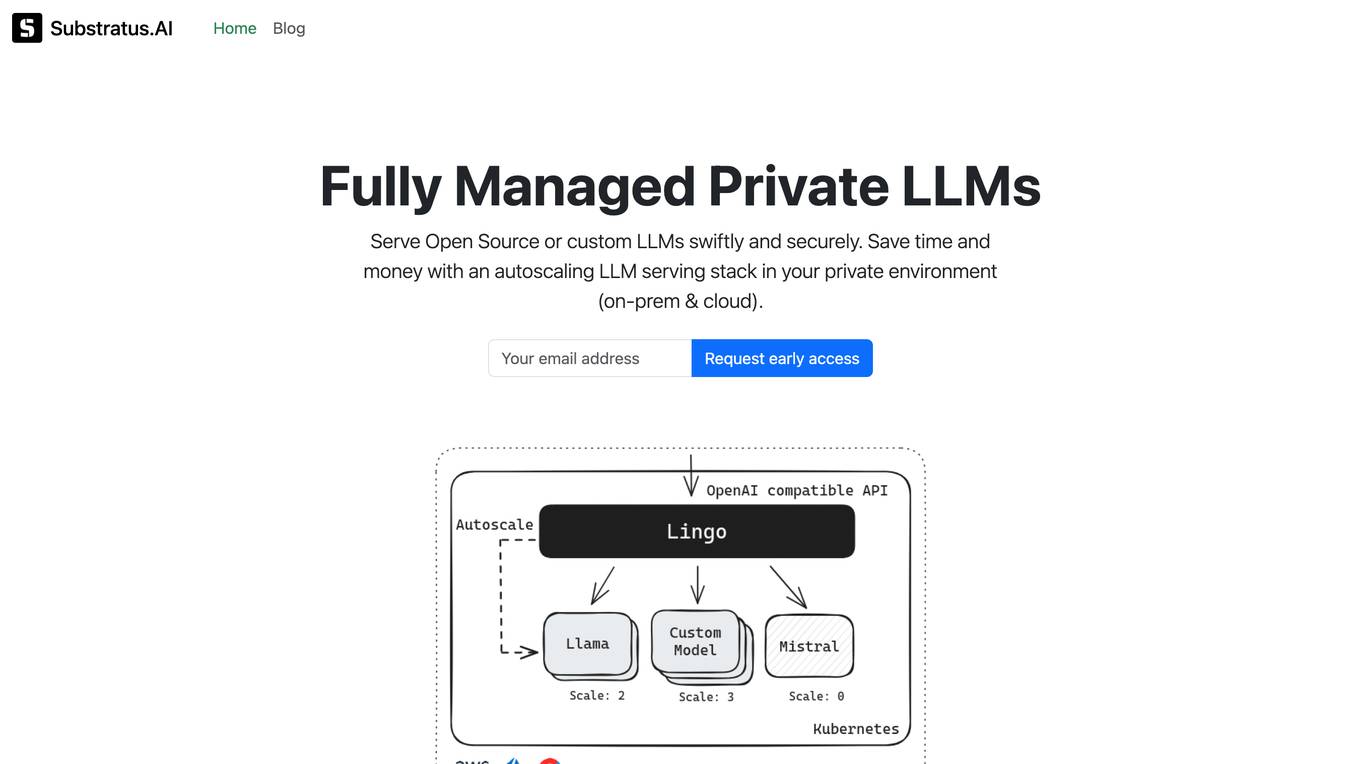

Substratus.AI

Substratus.AI is a fully managed private LLM platform that lets users serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can run LLMs in production in hours instead of weeks, making it a convenient and efficient solution for AI model deployment.

IBM

IBM is a leading technology company that offers a wide range of AI and machine learning solutions to help businesses innovate and grow. From AI models to cloud services, IBM provides cutting-edge technology to address various business challenges. The company also focuses on AI ethics and offers training programs to enhance skills in cybersecurity and data analytics. With a strong emphasis on research and development, IBM continues to push the boundaries of technology to solve real-world problems and drive digital transformation across industries.

AlphaCode

AlphaCode is an AI-powered tool that helps businesses understand and leverage their data. It offers a range of services, including data vision, cloud, and product development. AlphaCode's AI capabilities enable it to analyze data, identify patterns, and make predictions, helping businesses make better decisions and achieve their goals.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

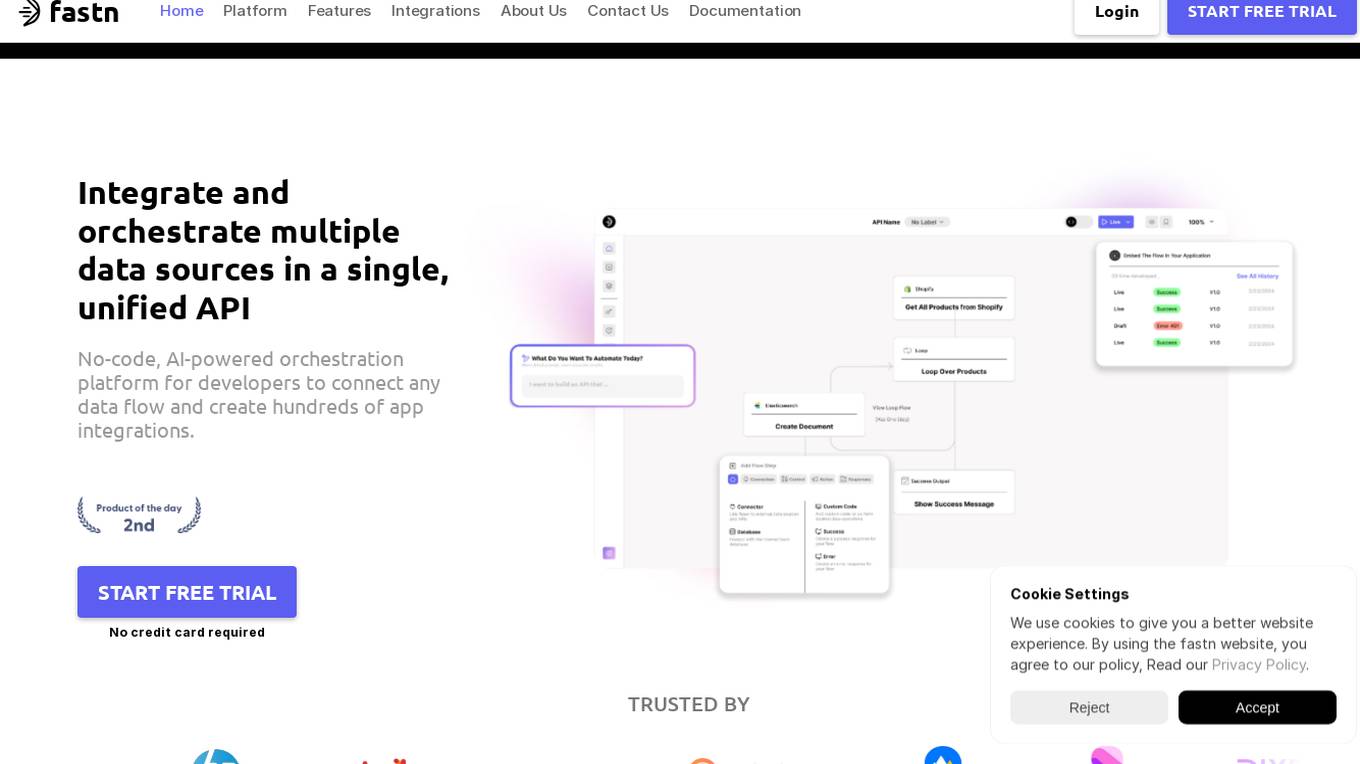

Fastn

Fastn is a no-code, AI-powered orchestration platform for developers to integrate and orchestrate multiple data sources in a single, unified API. It allows users to connect any data flow and create hundreds of app integrations efficiently. Fastn simplifies API integration, ensures API security, and handles data from multiple sources with features like real-time data orchestration, instant API composition, and infrastructure management on autopilot.

IBM Cloud

IBM Cloud is a cloud computing service offered by IBM that provides a suite of infrastructure and platform services. It allows businesses to build, deploy, and manage applications on the cloud, enabling them to scale and innovate rapidly. With a wide range of services including AI, analytics, blockchain, and IoT, IBM Cloud caters to various industries and use cases, empowering organizations to leverage cutting-edge technologies to drive digital transformation.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

7 - Open Source AI Tools

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker HyperPod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (PyTorch DDP/FSDP, Megatron-LM, NeMo Megatron...).

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they move from development to production.

omnia

Omnia is a deployment tool designed to turn servers with RPM-based Linux images into functioning Slurm/Kubernetes clusters. It provides an Ansible playbook-based deployment for Slurm and Kubernetes on servers running an RPM-based Linux OS. The tool simplifies the process of setting up and managing clusters, making it easier for users to deploy and maintain their infrastructure.

devopness

Devopness is a tool that simplifies the management of cloud applications and multi-cloud infrastructure for both AI agents and humans. It provides role-based access control, permission management, cost control, and visibility into DevOps and CI/CD workflows. The tool allows provisioning and deployment to major cloud providers like AWS, Azure, DigitalOcean, and GCP. Devopness aims to make software deployment and cloud infrastructure management accessible and affordable to all involved in software projects.

skyflo

Skyflo.ai is an AI agent designed for Cloud Native operations, providing seamless infrastructure management through natural language interactions. It serves as a safety-first co-pilot with a human-in-the-loop design. The tool offers flexible deployment options for both production and local Kubernetes environments, supporting various LLM providers and self-hosted models. Users can explore the architecture of Skyflo.ai and contribute to its development following the provided guidelines and Code of Conduct. The community engagement includes Discord, Twitter, YouTube, and GitHub Discussions.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

caracal

Caracal is a pre-execution authority enforcement system for AI agents and automated software operating in production environments. It enforces a single rule: no action executes unless there is explicit, valid authority for that action at that moment. Caracal offers two interfaces: Caracal Flow for operators, FinOps, and monitoring teams, and Caracal Core for developers, CI/CD engineers, and system architects. Core capabilities include dynamic identity & access, budget enforcement, secure ledger, and agent-native data model. The infrastructure is designed to scale with environments for local and production setups.
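The rule described above (no action runs without explicit, valid authority at that moment) can be illustrated with a minimal sketch. This is not Caracal's actual API: `Grant`, `AuthorityError`, and `execute` are hypothetical names invented for illustration, and the check covers only identity, expiry, and a simple budget ceiling.

```python
from dataclasses import dataclass
import time


@dataclass(frozen=True)
class Grant:
    """Hypothetical authority record (not Caracal's real data model)."""
    agent: str         # who holds the authority
    action: str        # which action it covers
    expires_at: float  # Unix timestamp after which the grant is invalid
    budget: float      # maximum cost a single action may incur


class AuthorityError(Exception):
    """Raised when no valid grant covers the requested action."""


def execute(agent, action, cost, grants, run, now=None):
    """Call run() only if a matching, unexpired grant covers the cost."""
    now = time.time() if now is None else now
    for grant in grants:
        if (
            grant.agent == agent
            and grant.action == action
            and grant.expires_at > now
            and grant.budget >= cost
        ):
            # Authority is checked before the action, never after.
            return run()
    raise AuthorityError(f"{agent} has no valid authority for {action}")
```

The check happens before `run()` is invoked, so a missing or expired grant means the action never starts. A real system would also record each decision in a ledger and decrement budgets, which this sketch omits.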

13 - OpenAI GPTs

Awesome-Selfhosted

Recommends self-hosted IT solutions, tailored for professionals (from https://awesome-selfhosted.net/)

Infrastructure as Code Advisor

Develops, advises and optimizes infrastructure-as-code practices across the organization.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills. Your DevOps GYM