action_mcp

Rails Engine with MCP compliant Spec.

Stars: 65

Action MCP is a Rails engine and Ruby gem that adds Model Context Protocol (MCP) server capability to Ruby on Rails applications. It provides base classes for prompts, tools, and resource templates, along with session storage, pub/sub adapters, Gateway-based authentication, and generators, so a Rails application can expose its functionality to LLM clients over a network transport.

README:

ActionMCP is a Ruby gem focused on providing Model Context Protocol (MCP) capability to Ruby on Rails applications, specifically as a server.

ActionMCP is designed for production Rails environments and does not support STDIO transport. STDIO is not included because it is not production-ready and is only suitable for desktop or script-based use cases. Instead, ActionMCP is built for robust, network-based deployments.

The client functionality in ActionMCP is intended to connect to remote MCP servers, not to local processes via STDIO.

It offers base classes and helpers for creating MCP applications, making it easier to integrate your Ruby/Rails application with the MCP standard.

With ActionMCP, you can focus on your app's logic while it handles the boilerplate for MCP compliance.

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to large language models (LLMs).

Think of it as a universal interface for connecting AI assistants to external data sources and tools.

MCP allows AI systems to plug into various resources in a consistent, secure way, enabling two-way integration between your data and AI-powered applications.

This means an AI (like an LLM) can request information or actions from your application through a well-defined protocol, and your app can provide context or perform tasks for the AI in return.

ActionMCP is targeted at developers building MCP-enabled Rails applications. It simplifies the process of integrating Ruby and Rails apps with the MCP standard by providing a set of base classes and an easy-to-use server interface.

ActionMCP supports MCP 2025-06-18 (current) with backward compatibility for MCP 2025-03-26. The protocol implementation is fully compliant with the MCP specification, including:

- JSON-RPC 2.0 transport layer

- Capability negotiation during initialization

- Error handling with proper error codes (-32601 for method not found, -32002 for consent required)

- Session management with resumable sessions

- Change notifications for dynamic capability updates
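To make the JSON-RPC 2.0 layer concrete, here is a minimal sketch of the message envelopes involved, written in plain Ruby with only the standard library. The helper names (`tool_call_request`, `method_not_found`) are hypothetical and not part of ActionMCP; `tools/call` is the MCP method for invoking a tool, and the tool name and arguments are illustrative.

```ruby
require "json"

# Build a JSON-RPC 2.0 request asking a server to run a tool.
# The tool name and arguments are illustrative values.
def tool_call_request(id:, tool:, arguments:)
  {
    jsonrpc: "2.0",
    id: id,
    method: "tools/call",
    params: { name: tool, arguments: arguments }
  }
end

# Build the error reply a server could send for an unknown method.
# -32601 is the standard JSON-RPC "method not found" code.
def method_not_found(id:, method:)
  {
    jsonrpc: "2.0",
    id: id,
    error: { code: -32601, message: "Method not found: #{method}" }
  }
end

request = tool_call_request(id: 1, tool: "calculate_sum", arguments: { a: 5, b: 10 })
puts JSON.generate(request)
puts JSON.generate(method_not_found(id: 2, method: "tools/unknown"))
```

ActionMCP builds and parses these envelopes for you; the sketch only shows what travels over the wire.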

For a detailed (and entertaining) breakdown of protocol versions, features, and our design decisions, see The Hitchhiker's Guide to MCP.

Don't Panic: The guide contains everything you need to know about surviving MCP protocol versions.

Note: STDIO transport is not supported in ActionMCP. This gem is focused on production-ready, network-based deployments. STDIO is only suitable for desktop or script-based experimentation and is intentionally excluded.

Instead of implementing MCP support from scratch, you can subclass and configure the provided Prompt, Tool, and ResourceTemplate classes to expose your app's functionality to LLMs.

ActionMCP handles the underlying MCP message format and routing, so you can adhere to the open standard with minimal effort.

In short, ActionMCP helps you build an MCP server (the component that exposes capabilities to AI) more quickly and with fewer mistakes.

Client connections: The client part of ActionMCP is meant to connect to remote MCP servers only. Connecting to local processes (such as via STDIO) is not supported.

To start using ActionMCP, add it to your project:

# Add gem to your Gemfile

bundle add actionmcp

# Install dependencies

bundle install

# Copy migrations from the engine

bin/rails action_mcp:install:migrations

# Generate base classes and configuration

bin/rails generate action_mcp:install

# Create necessary database tables

bin/rails db:migrate

The action_mcp:install generator will:

- Create base application classes (ApplicationGateway, ApplicationMCPTool, etc.)

- Generate the MCP configuration file (config/mcp.yml)

- Set up the basic directory structure for MCP components (app/mcp/)

Database migrations are copied separately using bin/rails action_mcp:install:migrations.

ActionMCP provides three core abstractions to streamline MCP server development:

ActionMCP::Prompt enables you to create reusable prompt templates that can be discovered and used by LLMs. Each prompt is defined as a Ruby class that inherits from ApplicationMCPPrompt.

Key features:

- Define expected arguments with descriptions and validation rules

- Build multi-step conversations with mixed content types

- Support for text, images, audio, and resource attachments

- Add messages with different roles (user/assistant)

Example:

class AnalyzeCodePrompt < ApplicationMCPPrompt

prompt_name "analyze_code"

description "Analyze code for potential improvements"

argument :language, description: "Programming language", default: "Ruby"

argument :code, description: "Code to explain", required: true

validates :language, inclusion: { in: %w[Ruby Python JavaScript] }

def perform

render(text: "Please analyze this #{language} code for improvements:")

render(text: code)

# You can add assistant messages too

render(text: "Here are some things to focus on in your analysis:", role: :assistant)

# Even add resources if needed

render(resource: "file://documentation/#{language.downcase}_style_guide.pdf",

mime_type: "application/pdf",

blob: get_style_guide_pdf(language))

end

private

def get_style_guide_pdf(language)

# Implementation to retrieve style guide as base64

end

end

Prompts can be executed by instantiating them and calling the call method:

analyze_prompt = AnalyzeCodePrompt.new(language: "Ruby", code: "def hello; puts 'Hello, world!'; end")

result = analyze_prompt.call

ActionMCP::Tool allows you to create interactive functions that LLMs can call with arguments to perform specific tasks. Each tool is a Ruby class that inherits from ApplicationMCPTool.

Key features:

- Define input properties with types, descriptions, and validation

- Return multiple response types (text, images, errors)

- Progressive responses with multiple render calls

- Automatic input validation based on property definitions

- Consent management for sensitive operations

Example:

class CalculateSumTool < ApplicationMCPTool

tool_name "calculate_sum"

description "Calculate the sum of two numbers"

property :a, type: "number", description: "The first number", required: true

property :b, type: "number", description: "The second number", required: true

def perform

sum = a + b

render(text: "Calculating #{a} + #{b}...")

render(text: "The sum is #{sum}")

# You can report errors if needed

if sum > 1000

report_error("Warning: Sum exceeds recommended limit")

end

# Or even images

render(image: generate_visualization(a, b), mime_type: "image/png")

end

private

def generate_visualization(a, b)

# Implementation to create a visualization as base64

end

end

For tools that perform sensitive operations (file system access, database modifications, external API calls), you can require explicit user consent:

class FileSystemTool < ApplicationMCPTool

tool_name "read_file"

description "Read contents of a file"

# Require explicit consent before execution

requires_consent!

property :file_path, type: "string", description: "Path to file", required: true

def perform

# This code only runs after user grants consent

content = File.read(file_path)

render(text: "File contents: #{content}")

end

end

Consent Flow:

- When a consent-required tool is called without consent, it returns a JSON-RPC error with code -32002

- The client must explicitly grant consent for the specific tool

- Once granted, the tool can execute normally for that session

- Consent is session-scoped and can be revoked at any time
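The flow above can be sketched in plain Ruby. `SketchSession` and its `call_tool` method are hypothetical stand-ins written for illustration, not ActionMCP's session class; only the three consent method names and the -32002 code come from this README.

```ruby
require "set"

# Hypothetical stand-in for a session that tracks per-tool consent.
class SketchSession
  CONSENT_REQUIRED = -32002

  def initialize
    @granted = Set.new
  end

  def grant_consent(tool_name)
    @granted << tool_name
  end

  def revoke_consent(tool_name)
    @granted.delete(tool_name)
  end

  def consent_granted_for?(tool_name)
    @granted.include?(tool_name)
  end

  # Run the block only when consent exists; otherwise return a
  # JSON-RPC style error hash instead of executing the tool.
  def call_tool(tool_name, id:)
    unless consent_granted_for?(tool_name)
      error = { code: CONSENT_REQUIRED, message: "Consent required for tool '#{tool_name}'" }
      return { jsonrpc: "2.0", id: id, error: error }
    end

    { jsonrpc: "2.0", id: id, result: yield }
  end
end

session = SketchSession.new
first = session.call_tool("read_file", id: 1) { "file contents" }   # rejected
session.grant_consent("read_file")
second = session.call_tool("read_file", id: 2) { "file contents" }  # executed
session.revoke_consent("read_file")
third = session.call_tool("read_file", id: 3) { "file contents" }   # rejected again
```

Because consent lives on the session object, a new session starts with no grants, which matches the session-scoped behavior described above.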

Managing Consent:

# Check if consent is granted

session.consent_granted_for?("read_file")

# Grant consent for a tool

session.grant_consent("read_file")

# Revoke consent

session.revoke_consent("read_file")

Tools can be executed by instantiating them and calling the call method:

sum_tool = CalculateSumTool.new(a: 5, b: 10)

result = sum_tool.call

ActionMCP::ResourceTemplate facilitates the creation of URI templates for dynamic resources that LLMs can access.

This allows models to request specific data using parameterized URIs.

Example:

class ProductResourceTemplate < ApplicationMCPResTemplate

uri_template "product/{id}"

description "Access product information by ID"

parameter :id, description: "Product identifier", required: true

validates :id, format: { with: /\A\d+\z/, message: "must be numeric" }

def resolve

product = Product.find_by(id: id)

return unless product

ActionMCP::Resource.new(

uri: "ecommerce://products/#{product.id}",

name: "Product #{product.id}",

description: "Product information for product #{product.id}",

mime_type: "application/json",

size: product.to_json.length

)

end

end

Resource templates also support before, after, and around callbacks on resolve:

before_resolve do |template|

# Starting to resolve product: #{template.id}

end

after_resolve do |template|

# Finished resolving product resource for product: #{template.id}

end

around_resolve do |template, block|

start_time = Time.current

# Starting resolution for product: #{template.id}

resource = block.call

if resource

# Product #{template.id} resolved successfully in #{Time.current - start_time}s

else

# Product #{template.id} not found

end

resource

end

Resource templates are automatically registered and used when LLMs request resources matching their patterns.
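To illustrate how a parameterized URI can be matched against a template such as "product/{id}", here is a minimal sketch in plain Ruby. The helpers `template_to_regex` and `extract_params` are hypothetical and handle only simple `{name}` placeholders; ActionMCP's own matching may work differently.

```ruby
# Convert a template with {name} placeholders into an anchored regular
# expression with one named capture per placeholder.
def template_to_regex(template)
  pattern = Regexp.escape(template).gsub(/\\\{(\w+)\\\}/) do
    "(?<#{Regexp.last_match(1)}>[^/]+)"
  end
  Regexp.new("\\A#{pattern}\\z")
end

# Return a hash of extracted parameters, or nil when the URI does not match.
def extract_params(template, uri)
  match = template_to_regex(template).match(uri)
  match && match.named_captures
end

p extract_params("product/{id}", "product/42")   # => {"id"=>"42"}
p extract_params("product/{id}", "orders/42")    # => nil
```

Extracted values arrive as strings, which is why the example template above validates `id` with a numeric format check before looking up the record.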

ActionMCP provides comprehensive documentation across multiple specialized guides. Each guide focuses on specific aspects to keep information organized and prevent context overload:

- Installation & Configuration - Initial setup, database migrations, and basic configuration

- Authentication with Gateway - User authentication and authorization patterns

- 📋 TOOLS.MD - Complete guide to developing MCP tools

  - Generator usage and best practices

  - Property definitions, validation, and consent management

  - Output schemas for structured responses

  - Error handling, testing, and security considerations

  - Advanced features like additional properties and authentication context

- 📝 PROMPTS.MD - Prompt template development guide

  - Creating reusable prompt templates

  - Multi-step conversations and mixed content types

  - Argument validation and prompt chaining

- 🔗 RESOURCE_TEMPLATES.md - Resource template implementation

  - URI template patterns and parameter extraction

  - Dynamic resource resolution and collections

  - Callbacks and validation patterns

- 🔌 CLIENTUSAGE.MD - Complete client implementation guide

  - Session management and resumability

  - Transport configuration and connection handling

  - Tool, prompt, and resource collections

  - Production deployment patterns

- 🚀 The Hitchhiker's Guide to MCP - Protocol versions and migration

  - Comprehensive comparison of MCP protocol versions (2024-11-05, 2025-03-26, 2025-06-18)

  - Design decisions and architectural rationale

  - Migration paths and compatibility considerations

  - Feature evolution and technical specifications (Don't Panic!)

- Session Storage - Volatile vs ActiveRecord vs custom session stores

- Thread Pool Management - Performance tuning and graceful shutdown

- Profiles System - Multi-tenant capability filtering

- Production Deployment - Falcon, Unix sockets, and reverse proxy setup

- Generators - Rails generators for scaffolding components

- Testing with TestHelper - Comprehensive testing strategies

- Development Commands - Rake tasks for debugging and inspection

- MCP Inspector Integration - Interactive testing and validation

- Error Handling - JSON-RPC error codes and debugging

- Production Considerations - Security, performance, and monitoring

- Middleware Conflicts - Using mcp_vanilla.ru for production

💡 Pro Tip: Start with the component-specific guides (TOOLS.MD, PROMPTS.MD, RESOURCE_TEMPLATES.md) for hands-on development, then reference the Hitchhiker's Guide for protocol details and CLIENTUSAGE.MD for integration patterns.

ActionMCP is configured via config.action_mcp in your Rails application.

By default, the name is set to your application's name and the version defaults to "0.0.1" unless your app has a version file.

You can override these settings in your configuration (e.g., in config/application.rb):

module Tron

class Application < Rails::Application

config.action_mcp.name = "Friendly MCP (Master Control Program)" # defaults to Rails.application.name

config.action_mcp.version = "1.2.3" # defaults to "0.0.1"

config.action_mcp.logging_enabled = true # defaults to true

config.action_mcp.logging_level = :info # defaults to :info, can be :debug, :info, :warn, :error, :fatal

end

end

For dynamic versioning, consider adding the rails_app_version gem.

ActionMCP uses a pub/sub system for real-time communication. You can choose between several adapters:

- SolidMCP - Database-backed pub/sub (no Redis required)

- Simple - In-memory pub/sub for development and testing

- Redis - Redis-backed pub/sub (if you prefer Redis)

If you were previously using ActionCable with ActionMCP, you will need to migrate to the new PubSub system. Here's how:

- Remove the ActionCable dependency from your Gemfile (if you don't need it for other purposes)

- Install one of the PubSub adapters (SolidMCP recommended)

- Create a configuration file at config/mcp.yml (you can use the generator: bin/rails g action_mcp:config)

- Run your tests to ensure everything works correctly

The new PubSub system maintains the same API as the previous ActionCable-based implementation, so your existing code should continue to work without changes.

Configure your adapter in config/mcp.yml:

development:

  adapter: solid_mcp

  polling_interval: 0.1.seconds

  # Thread pool configuration (optional)

  # min_threads: 5 # Minimum number of threads in the pool

  # max_threads: 10 # Maximum number of threads in the pool

  # max_queue: 100 # Maximum number of tasks that can be queued

test:

  adapter: test # Uses the simple in-memory adapter

production:

  adapter: solid_mcp

  polling_interval: 0.5.seconds

  # Optional: connects_to: cable # If using a separate database

  # Thread pool configuration for high-traffic environments

  min_threads: 10 # Minimum number of threads in the pool

  max_threads: 20 # Maximum number of threads in the pool

  max_queue: 500 # Maximum number of tasks that can be queued

For SolidMCP, add it to your Gemfile:

gem "solid_mcp" # Database-backed adapter optimized for MCP

Then install it:

bundle install

bin/rails solid_mcp:install:migrations

bin/rails db:migrate

The installer will create the necessary database migration for message storage. Configure it in your config/mcp.yml.

If you prefer Redis, add it to your Gemfile:

gem "redis", "~> 5.0"

Then configure the Redis adapter in your config/mcp.yml:

production:

  adapter: redis

  url: <%= ENV.fetch("REDIS_URL") { "redis://localhost:6379/1" } %>

  channel_prefix: your_app_production

  # Thread pool configuration for high-traffic environments

  min_threads: 10 # Minimum number of threads in the pool

  max_threads: 20 # Maximum number of threads in the pool

  max_queue: 500 # Maximum number of tasks that can be queued

ActionMCP provides a pluggable session storage system that allows you to choose how sessions are persisted based on your environment and requirements.

ActionMCP includes three session store implementations:

- :volatile - In-memory storage using Concurrent::Hash

  - Default for development and test environments

  - Sessions are lost on server restart

  - Fast and lightweight for local development

  - No external dependencies

- :active_record - Database-backed storage

  - Default for production environment

  - Sessions persist across server restarts

  - Supports session resumability

  - Requires database migrations

- :test - Special store for testing

  - Tracks notifications and method calls

  - Provides assertion helpers

  - Automatically used in test environment when using TestHelper

You can configure the session store type in your Rails configuration or config/mcp.yml:

# config/application.rb or environment files

Rails.application.configure do

config.action_mcp.session_store_type = :active_record # or :volatile

end

Or in config/mcp.yml:

# Global session store type (used by both client and server)

session_store_type: volatile

# Client-specific session store type (falls back to session_store_type if not specified)

client_session_store_type: volatile

# Server-specific session store type (falls back to session_store_type if not specified)

server_session_store_type: active_record

The defaults are:

- Production: :active_record

- Development: :volatile

- Test: :volatile (or :test when using TestHelper)

You can configure different session store types for client and server operations:

- session_store_type: Global setting used by both client and server when specific types aren't set

- client_session_store_type: Session store used by ActionMCP client connections (falls back to global setting)

- server_session_store_type: Session store used by ActionMCP server sessions (falls back to global setting)

This allows you to optimize each component separately. For example, you might use volatile storage for client sessions (faster, temporary) while using persistent storage for server sessions (maintains state across restarts).
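The fallback order described above can be sketched as a small lookup. The helper `effective_store_type` and the plain hash standing in for the parsed config/mcp.yml are assumptions for illustration; ActionMCP resolves these settings internally.

```ruby
# Resolve the store type for a role (:client or :server): use the
# role-specific key if present, then the global key, then a default.
def effective_store_type(config, role, default: "volatile")
  config["#{role}_session_store_type"] || config["session_store_type"] || default
end

# Hypothetical parsed configuration with a global value and a server override.
config = {
  "session_store_type" => "volatile",
  "server_session_store_type" => "active_record"
}

puts effective_store_type(config, :client)  # prints "volatile"
puts effective_store_type(config, :server)  # prints "active_record"
```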

# The session store is automatically selected based on configuration

# You can access it directly if needed:

session_store = ActionMCP::Server.session_store

# Create a session

session = session_store.create_session(session_id, {

status: "initialized",

protocol_version: "2025-03-26",

# ... other session attributes

})

# Load a session

session = session_store.load_session(session_id)

# Update a session

session_store.update_session(session_id, { status: "active" })

# Delete a session

session_store.delete_session(session_id)

With the :active_record store, clients can resume sessions after disconnection:

# Client includes session ID in request headers

# Server automatically resumes the existing session

headers["Mcp-Session-Id"] = "existing-session-id"

# If the session exists, it will be resumed

# If not, a new session will be created

You can create custom session stores by inheriting from ActionMCP::Server::SessionStore::Base:

class MyCustomSessionStore < ActionMCP::Server::SessionStore::Base

def create_session(session_id, payload = {})

# Implementation

end

def load_session(session_id)

# Implementation

end

def update_session(session_id, updates)

# Implementation

end

def delete_session(session_id)

# Implementation

end

def exists?(session_id)

# Implementation

end

end

# Register your custom store

ActionMCP::Server.session_store = MyCustomSessionStore.new

ActionMCP uses thread pools to efficiently handle message callbacks. This prevents the system from being overwhelmed by too many threads under high load.

You can configure the thread pool in your config/mcp.yml:

production:

  adapter: solid_mcp

  # Thread pool configuration

  min_threads: 10 # Minimum number of threads to keep in the pool

  max_threads: 20 # Maximum number of threads the pool can grow to

  max_queue: 500 # Maximum number of tasks that can be queued

The thread pool will automatically:

- Start with min_threads threads

- Scale up to max_threads as needed

- Queue tasks up to max_queue limit

- Use caller's thread if queue is full (fallback policy)
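The "caller runs" fallback is the least obvious item in that list, so here is a minimal standard-library sketch of it. `SketchPool` is a hypothetical class written for illustration: it uses a fixed number of workers and does not scale between a minimum and a maximum, and it is not the pool ActionMCP uses.

```ruby
# Hypothetical fixed-size pool with a bounded queue. When the queue is
# full, the submitting thread runs the job itself instead of blocking.
class SketchPool
  def initialize(threads:, max_queue:)
    @queue = SizedQueue.new(max_queue)
    @workers = Array.new(threads) do
      Thread.new do
        # Each worker pulls jobs until it receives the :stop marker.
        while (job = @queue.pop) != :stop
          job.call
        end
      end
    end
  end

  # Try a non-blocking enqueue; SizedQueue raises ThreadError when full.
  def post(&job)
    @queue.push(job, true)
    :queued
  rescue ThreadError
    job.call
    :caller_ran
  end

  # Send one stop marker per worker, then wait for queued jobs to drain.
  def shutdown
    @workers.each { @queue.push(:stop) }
    @workers.each(&:join)
  end
end

results = Queue.new
pool = SketchPool.new(threads: 2, max_queue: 1)
5.times { |i| pool.post { results << i * i } }
pool.shutdown
puts "completed #{results.size} jobs"
```

Running the job on the caller's thread slows down the producer under load, which acts as natural back-pressure while still completing every task.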

When your application is shutting down, you should call:

ActionMCP::Server.shutdown

This ensures all thread pools are properly terminated and tasks are completed.

ActionMCP runs as a standalone Rack application. Do not attempt to mount it in your application's routes.rb—it is not designed to be mounted as an engine at a custom path. When you use run ActionMCP::Engine in your mcp.ru, the MCP endpoint is always available at the root path (/).

ActionMCP includes generators to help you set up your project quickly. The install generator creates all necessary base classes and configuration files:

# Install ActionMCP with base classes and configuration

bin/rails generate action_mcp:install

This will create:

- app/mcp/prompts/application_mcp_prompt.rb - Base prompt class

- app/mcp/tools/application_mcp_tool.rb - Base tool class

- app/mcp/resource_templates/application_mcp_res_template.rb - Base resource template class

- app/mcp/application_gateway.rb - Gateway for authentication

- config/mcp.yml - Configuration file with example settings for all environments

Note: Authentication and authorization are not included. You are responsible for securing the endpoint.

ActionMCP provides a Gateway system similar to ActionCable's Connection for handling authentication. The Gateway allows you to authenticate users and make them available throughout your MCP components.

ActionMCP uses a Gateway pattern with pluggable identifiers for authentication. You can implement custom authentication strategies using session-based auth, API keys, bearer tokens, or integrate with existing authentication systems like Warden, Devise, or external OAuth providers.
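For the bearer-token strategy, the first step is pulling the token out of the Authorization header. The sketch below shows one way to do that in plain Ruby; `bearer_token_from` is a hypothetical helper and is not ActionMCP's own extraction method.

```ruby
# Return the token from an "Authorization: Bearer <token>" header value,
# or nil when the header is missing, uses another scheme, or has no token.
def bearer_token_from(header_value)
  return nil unless header_value.is_a?(String)

  scheme, token = header_value.strip.split(/\s+/, 2)
  return nil unless scheme&.casecmp?("Bearer")

  token.nil? || token.empty? ? nil : token
end

p bearer_token_from("Bearer abc.def.ghi")  # => "abc.def.ghi"
p bearer_token_from("Basic dXNlcjpwdw==")  # => nil
p bearer_token_from(nil)                   # => nil
```

Returning nil for every malformed case lets the gateway treat "no usable token" as a single condition and raise one unauthorized error.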

When you run the install generator, it creates an ApplicationGateway class:

# app/mcp/application_gateway.rb

class ApplicationGateway < ActionMCP::Gateway

# Specify what attributes identify a connection

identified_by :user

protected

def authenticate!

token = extract_bearer_token

raise ActionMCP::UnauthorizedError, "Missing token" unless token

payload = ActionMCP::JwtDecoder.decode(token)

user = resolve_user(payload)

raise ActionMCP::UnauthorizedError, "Unauthorized" unless user

# Return a hash with all identified_by attributes

{ user: user }

end

private

def resolve_user(payload)

user_id = payload["user_id"] || payload["sub"]

User.find_by(id: user_id) if user_id

end

end

You can identify connections by multiple attributes:

class ApplicationGateway < ActionMCP::Gateway

identified_by :user, :organization

protected

def authenticate!

# ... authentication logic ...

{

user: user,

organization: user.organization

}

end

end

Once authenticated, the current user (and other identifiers) are available in your tools, prompts, and resource templates:

class MyTool < ApplicationMCPTool

def perform

# Access the authenticated user

if current_user

render text: "Hello, #{current_user.name}!"

else

render text: "Hi Stranger! It's been a while "

end

end

end

ActionMCP uses Rails' CurrentAttributes to store the authenticated context. The ActionMCP::Current class provides:

- ActionMCP::Current.user - The authenticated user

- ActionMCP::Current.gateway - The gateway instance

- Any other attributes you define with identified_by

# Load the full Rails environment to access models, DB, Redis, etc.

require_relative "config/environment"

# No need to set a custom endpoint path. The MCP endpoint is always served at root ("/")

# when using ActionMCP::Engine directly.

run ActionMCP::Engine

bin/rails s -c mcp.ru -p 62770 -P tmp/pids/mcps0.pid

If your Rails application uses middleware that interferes with MCP server operation (like Devise, Warden, Ahoy, Rack::Cors, etc.), use mcp_vanilla.ru instead:

# mcp_vanilla.ru - A minimal Rack app with only essential middleware

# This avoids conflicts with authentication, tracking, and other web-specific middleware

# See the file for detailed documentation on when and why to use it

bundle exec rails s -c mcp_vanilla.ru -p 62770

# Or with Falcon:

bundle exec falcon serve --bind http://0.0.0.0:62770 --config mcp_vanilla.ru

Common middleware that can cause issues:

- Devise/Warden - Expects cookies and sessions, throws Devise::MissingWarden errors

- Ahoy - Analytics tracking that intercepts requests

- Rack::Attack - Rate limiting designed for web traffic

- Rack::Cors - CORS headers meant for browsers

- Any middleware assuming HTML responses or cookie-based authentication

An example of a minimal mcp_vanilla.ru file is located in the dummy app: test/dummy/mcp_vanilla.ru.

This file is a minimal Rack application that only includes the essential middleware needed for MCP server operation, avoiding conflicts with web-specific middleware.

But remember to add any instrumentation or logging middleware you need, as the minimal setup will not include them by default.

## Production Deployment of MCPS0

In production, **MCPS0** (the MCP server) is a standard Rack application. You can run it using any Rack-compatible server (such as Puma, Unicorn, or Passenger).

> **For best performance and concurrency, it is highly recommended to use a modern, asynchronous server like [Falcon](https://github.com/socketry/falcon)**. Falcon is optimized for streaming and concurrent workloads, making it ideal for MCP servers. You can still use Puma, Unicorn, or Passenger, but Falcon will generally provide superior throughput and responsiveness for real-time and streaming use cases.

You have two main options for exposing the server:

### 1. Dedicated Port

Run MCPS0 on its own TCP port (commonly `62770`):

**With Falcon:**

```bash
bundle exec falcon serve --bind http://0.0.0.0:62770 --config mcp.ru
```

**With Puma:**

bundle exec rails s -c mcp.ru -p 62770

Then, use your web server (Nginx, Apache, etc.) to reverse proxy requests to this port.

### 2. Unix Socket

Alternatively, you can run MCPS0 on a Unix socket for improved performance and security (especially when the web server and app server are on the same machine):

With Falcon:

bundle exec falcon serve --bind unix:/tmp/mcps0.sock --config mcp.ru

With Puma:

bundle exec puma -C config/puma.rb -b unix:///tmp/mcps0.sock mcp.ru

And configure your web server to proxy to the socket:

location /mcp/ {

proxy_pass http://unix:/tmp/mcps0.sock:;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

Key Points:

- MCPS0 is a standalone Rack app—run it separately from your main Rails server.

- You can expose it via a TCP port (e.g., 62770) or a Unix socket.

- Use a reverse proxy (Nginx, Apache, etc.) to route requests to MCPS0 as needed.

- This separation ensures reliability and scalability for both your main app and MCP services.

ActionMCP includes Rails generators to help you quickly set up your MCP server components.

First, install ActionMCP to create base classes and configuration:

bin/rails action_mcp:install:migrations # to copy the migrations

bin/rails generate action_mcp:install

This will create the base application classes, configuration file, and authentication gateway in your app directory.

bin/rails generate action_mcp:prompt AnalyzeCode

bin/rails generate action_mcp:tool CalculateSum

ActionMCP provides a TestHelper module to simplify testing of tools and prompts:

require "test_helper"

require "action_mcp/test_helper"

class ToolTest < ActiveSupport::TestCase

include ActionMCP::TestHelper

test "CalculateSumTool returns the correct sum" do

assert_tool_findable("calculate_sum")

result = execute_tool("calculate_sum", a: 5, b: 10)

assert_tool_output(result, "15.0")

end

test "AnalyzeCodePrompt returns the correct analysis" do

assert_prompt_findable("analyze_code")

result = execute_prompt("analyze_code", language: "Ruby", code: "def hello; puts 'Hello, world!'; end")

assert_equal "Analyzing Ruby code: def hello; puts 'Hello, world!'; end", assert_prompt_output(result)

end

end

The TestHelper provides several assertion methods:

- assert_tool_findable(name) - Verifies a tool exists and is registered

- assert_prompt_findable(name) - Verifies a prompt exists and is registered

- execute_tool(name, **args) - Executes a tool with arguments

- execute_prompt(name, **args) - Executes a prompt with arguments

- assert_tool_output(result, expected) - Asserts tool output matches expected text

- assert_prompt_output(result) - Extracts and returns prompt output for assertions

You can use the MCP Inspector to test your server implementation:

# Start your MCP server

bundle exec rails s -c mcp.ru -p 62770

# In another terminal, run the inspector

npx @modelcontextprotocol/inspector --url http://localhost:62770

The MCP Inspector provides an interactive interface to:

- Test tool executions with custom arguments

- Validate prompt responses

- Inspect resource templates and their outputs

- Debug protocol compliance and error handling

ActionMCP includes several rake tasks for development and debugging:

# List all MCP components

bundle exec rails action_mcp:list

# List specific component types

bundle exec rails action_mcp:list_tools

bundle exec rails action_mcp:list_prompts

bundle exec rails action_mcp:list_resources

bundle exec rails action_mcp:list_profiles

# Show configuration and statistics

bundle exec rails action_mcp:info

bundle exec rails action_mcp:stats

# Show profile configuration

bundle exec rails action_mcp:show_profile[profile_name]

ActionMCP provides comprehensive error handling following the JSON-RPC 2.0 specification:

- -32601: Method not found - The requested method doesn't exist

- -32002: Consent required - Tool requires user consent to execute

- -32603: Internal error - Server encountered an unexpected error

- -32600: Invalid request - The request is malformed

Tools should return clear error messages to the LLM using the report_error method:

class MyTool < ApplicationMCPTool

def perform

# Check for error conditions and return clear messages

if some_error_condition?

report_error("Clear error message for the LLM")

return

end

# Normal processing

render(text: "Success message")

end

end

Common issues:

- Session not found: Ensure sessions are properly created and saved in the session store

- Tool not registered: Verify tools are properly defined and inherit from ApplicationMCPTool

- Consent required: Grant consent using session.grant_consent(tool_name)

- Middleware conflicts: Use mcp_vanilla.ru to avoid web-specific middleware

Debugging tips:

- Check server logs for detailed error information

- Use bundle exec rails action_mcp:info to verify configuration

- Test with MCP Inspector to isolate protocol issues

- Ensure proper session management in production environments

ActionMCP supports a flexible profile system that allows you to selectively expose tools, prompts, and resources based on different usage scenarios. This is particularly useful for applications that need different MCP capabilities for different contexts (e.g., public API vs. admin interface).

Profiles are named configurations that define:

- Which tools are available

- Which prompts are accessible

- Which resources can be accessed

- Configuration options like logging level and change notifications

By default, ActionMCP includes two profiles:

- primary: Exposes all tools, prompts, and resources

- minimal: Exposes no tools, prompts, or resources by default

Profiles are configured via a config/mcp.yml file in your Rails application. If this file doesn't exist, ActionMCP will use default settings from the gem.

Example configuration:

default:

  tools:

    - all # Include all tools

  prompts:

    - all # Include all prompts

  resources:

    - all # Include all resources

  options:

    list_changed: false

    logging_enabled: true

    logging_level: info

    resources_subscribe: false

api_only:

  tools:

    - calculator

    - weather

  prompts: [] # No prompts for API

  resources:

    - user_profile

  options:

    list_changed: false

    logging_level: warn

admin:

  tools:

    - all

  options:

    logging_level: debug

    list_changed: true

    resources_subscribe: true

Each profile can specify:

- `tools`: Array of tool names to include (use `all` to include all tools)

- `prompts`: Array of prompt names to include (use `all` to include all prompts)

- `resources`: Array of resource names to include (use `all` to include all resources)

- `options`: Additional configuration options:

  - `list_changed`: Whether to send change notifications

  - `logging_enabled`: Whether to enable logging

  - `logging_level`: The logging level to use

  - `resources_subscribe`: Whether to enable resource subscriptions
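To make the `all` keyword concrete, here is a minimal sketch of how a profile's lists could be expanded against the components an application defines. It is not ActionMCP's implementation: `REGISTERED`, `CONFIG`, and `resolve_profile` are hypothetical names, and treating a missing key as an empty list is an assumption made for the example.

```ruby
require "yaml"

# Hypothetical registry of component names; a real app would derive these
# from its own tools, prompts, and resources.
REGISTERED = {
  "tools" => %w[calculator weather admin_report],
  "prompts" => %w[greeting],
  "resources" => %w[user_profile]
}.freeze

# A trimmed copy of the example configuration, parsed from a string.
CONFIG = YAML.safe_load(<<~YAML)
  api_only:
    tools:
      - calculator
      - weather
    prompts: []
    resources:
      - user_profile
  admin:
    tools:
      - all
YAML

# Illustrative helper (not part of ActionMCP): expand one profile, treating
# the literal entry "all" as "every registered name of that kind".
# Assumption: a key missing from the profile means "expose nothing".
def resolve_profile(config, registered, name)
  profile = config.fetch(name.to_s)
  registered.to_h do |kind, names|
    wanted = profile.fetch(kind, [])
    [kind, wanted.include?("all") ? names : names & wanted]
  end
end

api = resolve_profile(CONFIG, REGISTERED, :api_only)
admin = resolve_profile(CONFIG, REGISTERED, :admin)

puts api.inspect
puts admin.inspect
```

In this sketch the `api_only` profile resolves to exactly the names it lists, while `admin` picks up every registered tool through `all`.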

You can switch between profiles programmatically in your code:

    # Permanently switch to a different profile
    ActionMCP.configuration.use_profile(:only_tools) # Switch to a profile named "only_tools"

    # Temporarily use a profile for a specific operation
    ActionMCP.with_profile(:minimal) do
      # Code here uses the minimal profile
      # After the block, reverts to the previous profile
    end

This makes it easy to control which MCP capabilities are available in different contexts of your application.
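The difference between a permanent and a block-scoped switch can be illustrated with a small stand-alone sketch. `ProfileConfig` below is a hypothetical stand-in written for this example, not ActionMCP's own class. It only shows the usual Ruby pattern of restoring the previous value in an `ensure` clause so the switch is undone even when the block raises.

```ruby
# Hypothetical stand-in for a configuration object; not ActionMCP's own class.
class ProfileConfig
  attr_reader :active_profile

  def initialize(default = :primary)
    @active_profile = default
  end

  # Permanent switch: stays in effect until changed again.
  def use_profile(name)
    @active_profile = name
    self
  end

  # Temporary switch: the previous profile is restored when the block
  # finishes, including when it exits with an exception.
  def with_profile(name)
    previous = @active_profile
    @active_profile = name
    yield self
  ensure
    @active_profile = previous
  end
end

config = ProfileConfig.new
inside = config.with_profile(:minimal) { |c| c.active_profile }

puts inside.inspect                 # prints :minimal
puts config.active_profile.inspect  # prints :primary
```

Restoring the profile in `ensure` rather than after the `yield` keeps a failed operation from leaving the process on the wrong profile.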

ActionMCP includes rake tasks to help you manage and inspect your profiles:

    # List all available profiles with their configurations
    bin/rails action_mcp:list_profiles

    # Show detailed information about a specific profile
    bin/rails action_mcp:show_profile[admin]

    # List all tools, prompts, resources, and profiles
    bin/rails action_mcp:list

The profile inspection tasks will highlight any issues, such as configured tools, prompts, or resources that don't actually exist in your application.
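The kind of mismatch the inspection tasks report can be sketched in a few lines of plain Ruby. This is an illustration of the idea only, not the rake task's actual code: `missing_components` and the sample data are hypothetical.

```ruby
# Illustrative check (not ActionMCP's implementation): for each component
# kind, list configured names that match no registered component.
def missing_components(profile, registered)
  profile.each_with_object({}) do |(kind, names), issues|
    next unless registered.key?(kind) # skip non-component keys such as "options"

    unknown = Array(names) - ["all"] - registered[kind]
    issues[kind] = unknown unless unknown.empty?
  end
end

# Hypothetical profile with a misspelled tool name ("wether").
profile = {
  "tools" => %w[calculator wether],
  "prompts" => ["all"],
  "resources" => %w[user_profile],
  "options" => { "logging_level" => "warn" }
}

# Hypothetical set of components the application actually defines.
registered = {
  "tools" => %w[calculator weather],
  "prompts" => %w[greeting],
  "resources" => %w[user_profile]
}

issues = missing_components(profile, registered)
puts issues.inspect
```

Here only the misspelled tool is reported. The `all` entry is never flagged, because it is a keyword and not a component name.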

Profiles are particularly useful for:

- Multi-tenant applications: Use different profiles for different customer tiers with Dorp or other gems

- Access control: Create profiles for different user roles (admin, staff, public)

- Performance optimization: Use a minimal profile for high-traffic endpoints

- Testing environments: Use specific test profiles in your test environment

- Progressive enhancement: Start with a minimal profile and gradually add capabilities

By leveraging profiles, you can maintain a single ActionMCP codebase while providing tailored MCP capabilities for different contexts.

ActionMCP includes a client for connecting to remote MCP servers. The client handles session management, protocol negotiation, and provides a simple API for interacting with MCP servers.

For comprehensive client documentation, including examples, session management, transport configuration, and API usage, see CLIENTUSAGE.md.

- Never expose sensitive data through MCP components

- Use authentication via Gateway for production deployments

- Implement proper authorization in your tools and prompts

- Validate all inputs using property definitions and Rails validations

- Use consent management for sensitive operations

- Configure appropriate thread pools for high-traffic scenarios

- Use Redis or SolidMCP for production pub/sub

- Choose ActiveRecord session store for session persistence

- Monitor session cleanup to prevent memory leaks

- Use profiles to limit exposed capabilities

- Enable logging and configure appropriate log levels

- Monitor session statistics using `action_mcp:stats`

- Track tool usage and performance metrics

- Set up alerts for error rates and response times

- Use Falcon for optimal performance with streaming workloads

- Deploy on dedicated ports or Unix sockets

- Use reverse proxies (Nginx, Apache) for SSL termination

- Implement health checks for your MCP endpoints

- Use `mcp_vanilla.ru` to avoid middleware conflicts
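As one way to approach the health-check recommendation above, the following is a minimal Rack-compatible sketch. Everything in it is an assumption made for illustration: the `HEALTH_APP` name, the `/health` path, and the JSON body are not defined by ActionMCP, and a real check would probably also verify the session store and pub/sub backend.

```ruby
require "json"

# Hypothetical Rack-compatible health endpoint. The "/health" path and the
# response body are assumptions chosen for this example.
HEALTH_APP = lambda do |env|
  if env["PATH_INFO"] == "/health"
    body = JSON.generate({ "status" => "ok" })
    [200, { "content-type" => "application/json" }, [body]]
  else
    [404, { "content-type" => "text/plain" }, ["not found"]]
  end
end

# Calling the app directly with a minimal Rack-style env hash.
status, headers, body = HEALTH_APP.call({ "PATH_INFO" => "/health" })
puts status
puts body.first
```

Because the app is a plain callable, it can be mounted in a rackup file next to the MCP endpoint or probed by a reverse proxy without going through the rest of the middleware stack.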

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for action_mcp

Similar Open Source Tools

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

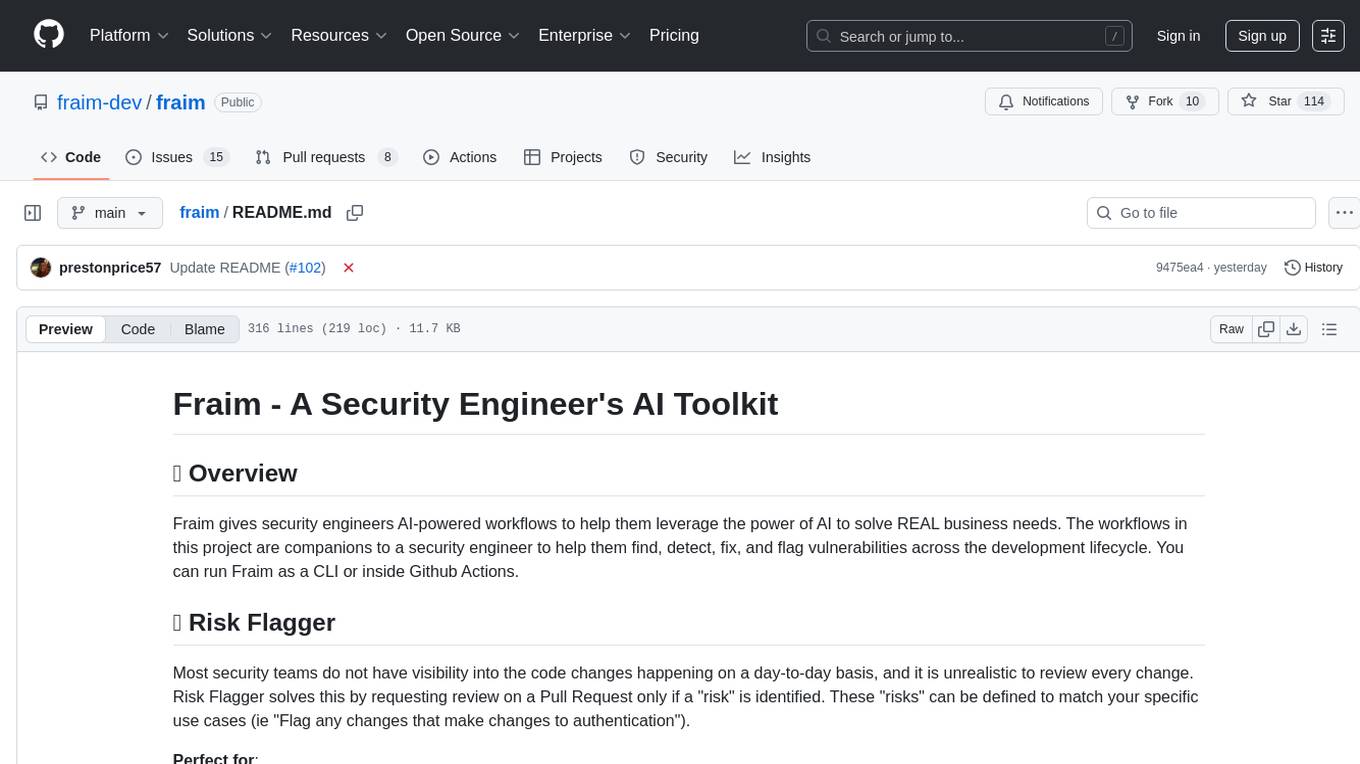

fraim

Fraim is an AI-powered toolkit designed for security engineers to enhance their workflows by leveraging AI capabilities. It offers solutions to find, detect, fix, and flag vulnerabilities throughout the development lifecycle. The toolkit includes features like Risk Flagger for identifying risks in code changes, Code Security Analysis for context-aware vulnerability detection, and Infrastructure as Code Analysis for spotting misconfigurations in cloud environments. Fraim can be run as a CLI tool or integrated into Github Actions, making it a versatile solution for security teams and organizations looking to enhance their security practices with AI technology.

fast-mcp

Fast MCP is a Ruby gem that simplifies the integration of AI models with your Ruby applications. It provides a clean implementation of the Model Context Protocol, eliminating complex communication protocols, integration challenges, and compatibility issues. With Fast MCP, you can easily connect AI models to your servers, share data resources, choose from multiple transports, integrate with frameworks like Rails and Sinatra, and secure your AI-powered endpoints. The gem also offers real-time updates and authentication support, making AI integration a seamless experience for developers.

model-compose

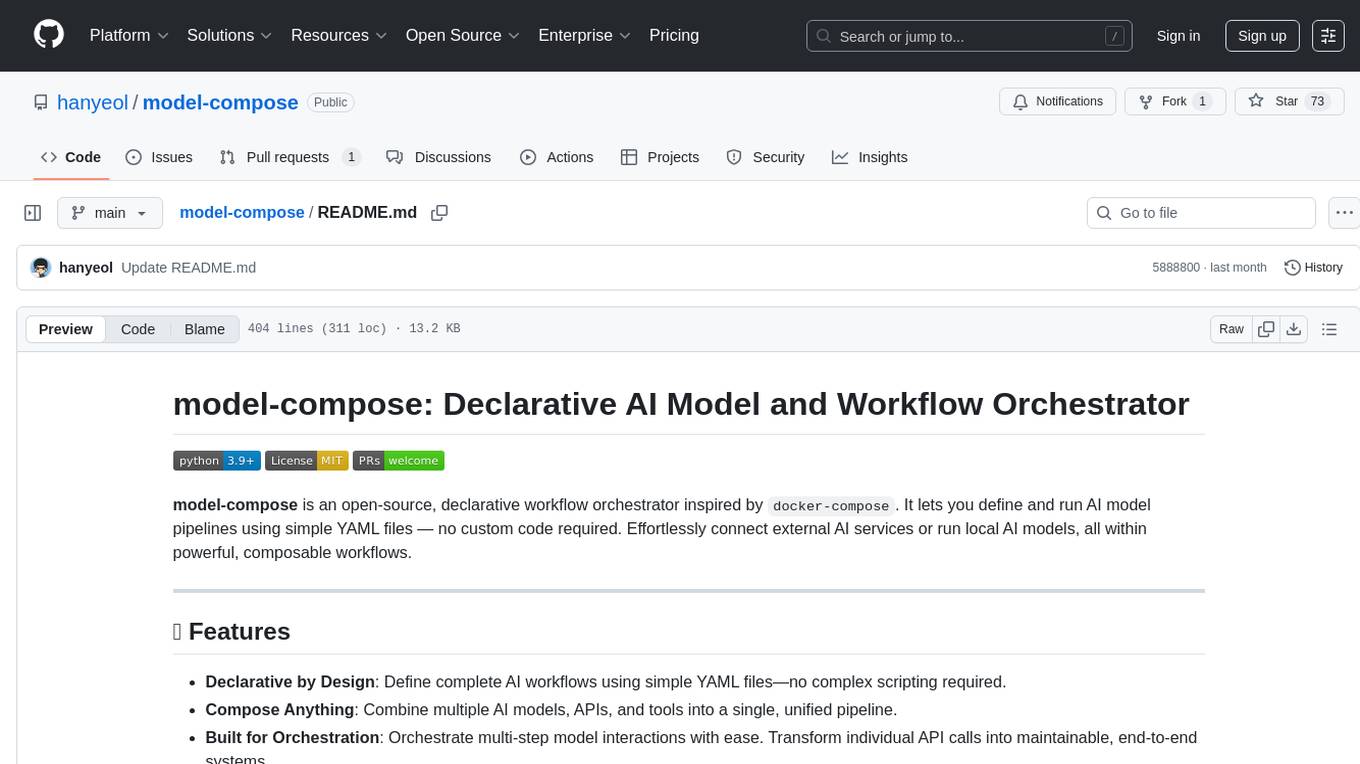

model-compose is an open-source, declarative workflow orchestrator inspired by docker-compose. It lets you define and run AI model pipelines using simple YAML files. Effortlessly connect external AI services or run local AI models within powerful, composable workflows. Features include declarative design, multi-workflow support, modular components, flexible I/O routing, streaming mode support, and more. It supports running workflows locally or serving them remotely, Docker deployment, environment variable support, and provides a CLI interface for managing AI workflows.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

mcp-server

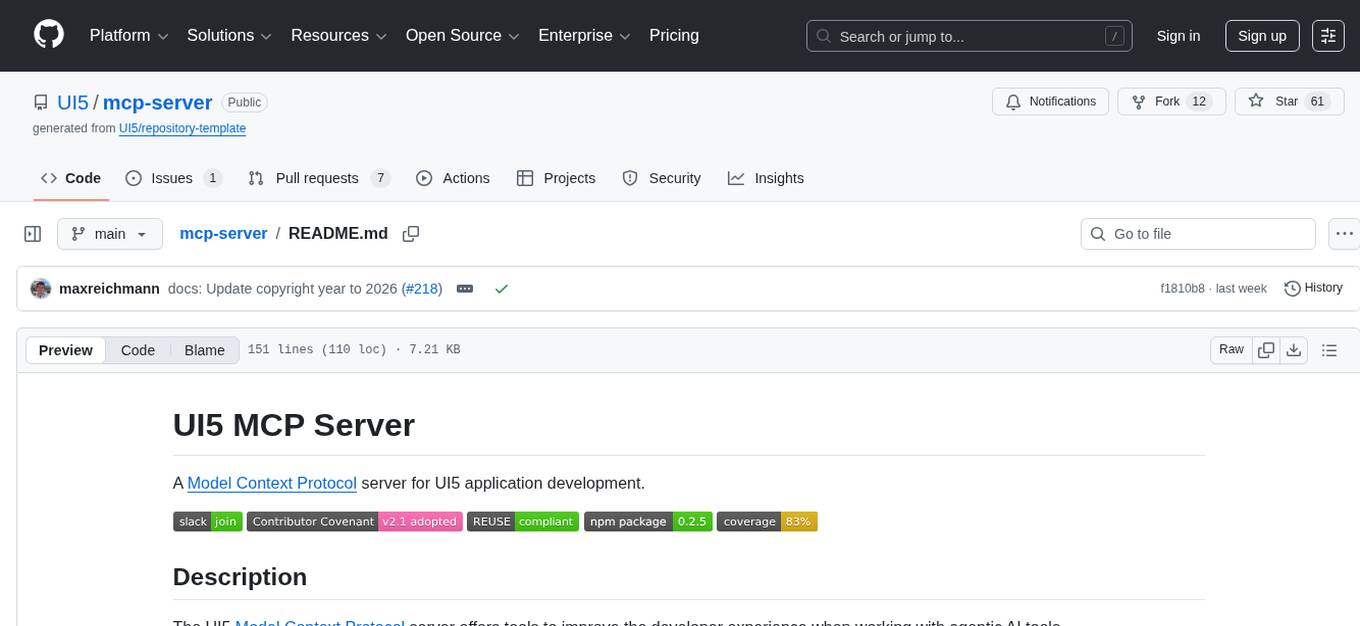

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires Node.js and npm versions specified, along with an MCP client like VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

Toolify

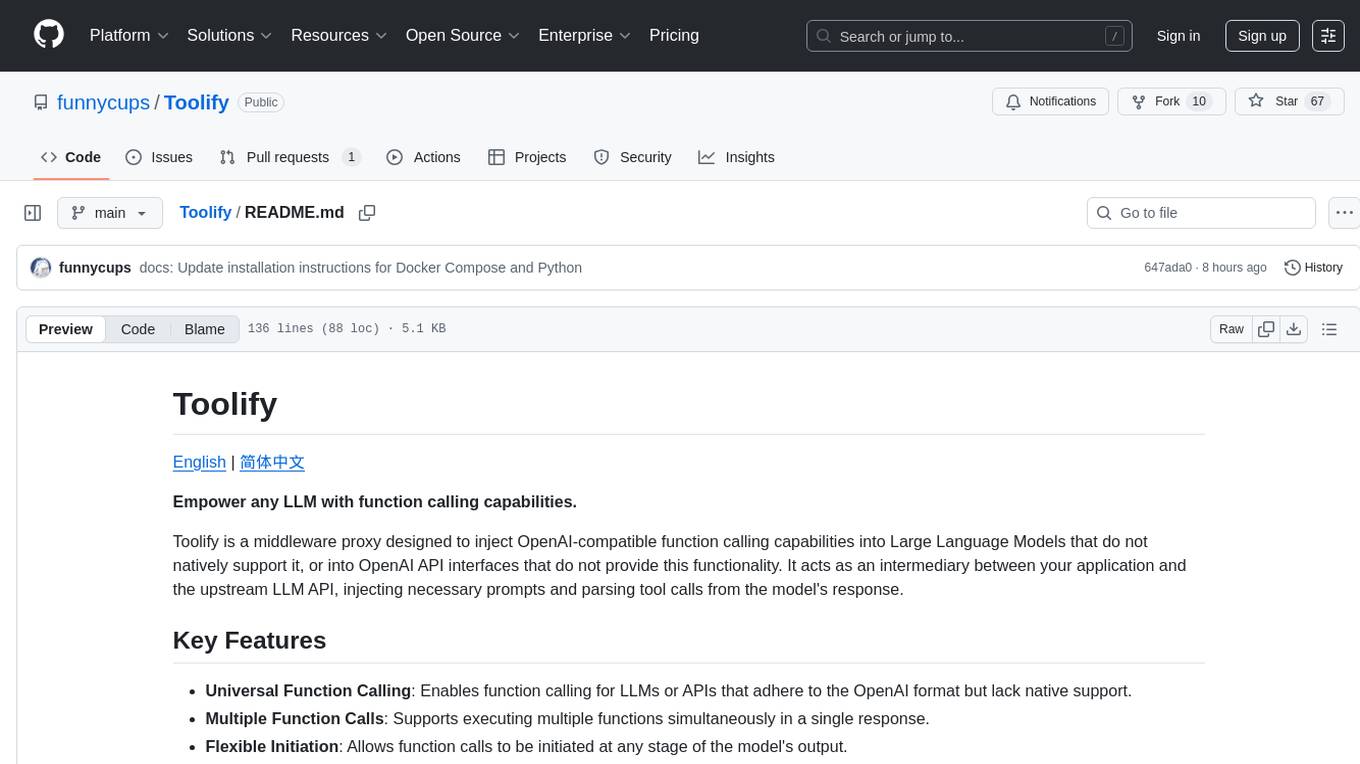

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

golf

Golf is a simple command-line tool for calculating the distance between two geographic coordinates. It uses the Haversine formula to accurately determine the distance between two points on the Earth's surface. This tool is useful for developers working on location-based applications or projects that require distance calculations. With Golf, users can easily input latitude and longitude coordinates and get the precise distance in kilometers or miles. The tool is lightweight, easy to use, and can be integrated into various programming workflows.

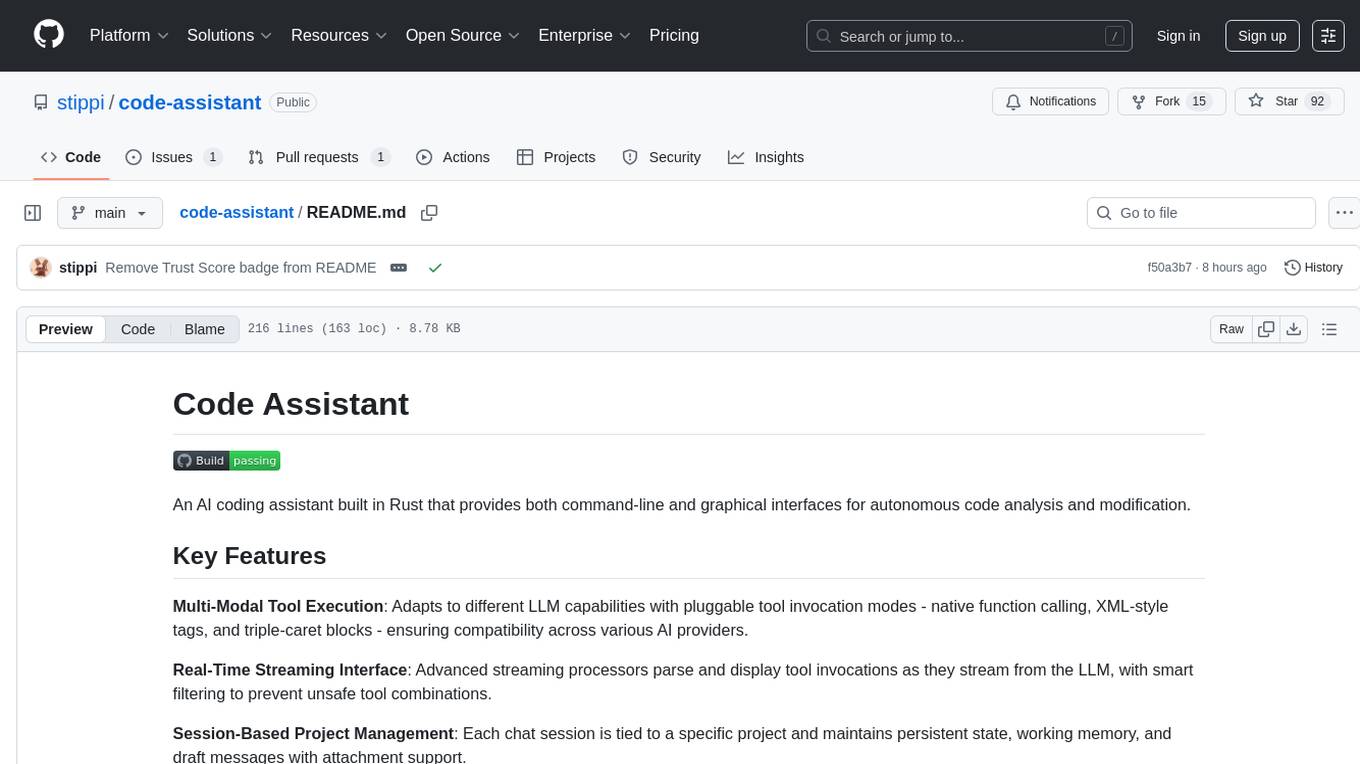

code-assistant

Code Assistant is an AI coding tool built in Rust that offers command-line and graphical interfaces for autonomous code analysis and modification. It supports multi-modal tool execution, real-time streaming interface, session-based project management, multiple interface options, and intelligent project exploration. The tool provides auto-loaded repository guidance and allows for project configuration with format-on-save feature. Users can interact with the tool in GUI, terminal, or MCP server mode, and configure LLM providers for advanced options. The architecture highlights adaptive tool syntax, smart tool filtering, and multi-threaded streaming for efficient performance. Contributions are welcome, and the roadmap includes features like block replacing in changed files, compact tool use failures, UI improvements, memory tools, security enhancements, fuzzy matching search blocks, editing user messages, and selecting in messages.

DesktopCommanderMCP

Desktop Commander MCP is a server that allows the Claude desktop app to execute long-running terminal commands on your computer and manage processes through Model Context Protocol (MCP). It is built on top of MCP Filesystem Server to provide additional search and replace file editing capabilities. The tool enables users to execute terminal commands with output streaming, manage processes, perform full filesystem operations, and edit code with surgical text replacements or full file rewrites. It also supports vscode-ripgrep based recursive code or text search in folders.

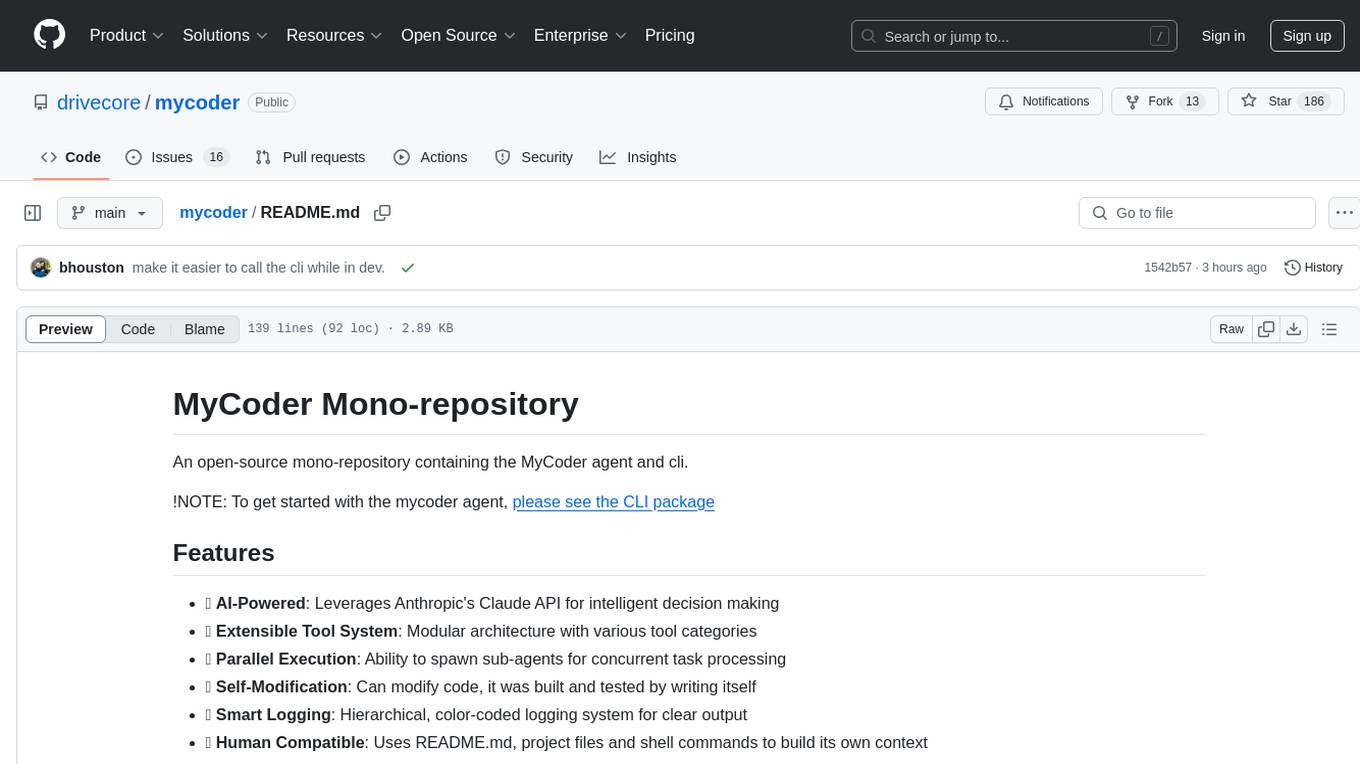

mycoder

An open-source mono-repository containing the MyCoder agent and CLI. It leverages Anthropic's Claude API for intelligent decision making, has a modular architecture with various tool categories, supports parallel execution with sub-agents, can modify code by writing itself, features a smart logging system for clear output, and is human-compatible using README.md, project files, and shell commands to build its own context.

For similar tasks

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility - please don't hesitate to report encountered issues here and contact us via public Discord Server to help this collaborative engineering effort! CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors: * must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files; * must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them; * must have a very simple and human-friendly command line with a Python API and minimal dependencies; * must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages; * must have the same interface to run all automations natively, in a cloud or inside containers. 
CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

LLM-Finetuning-Toolkit

LLM Finetuning toolkit is a config-based CLI tool for launching a series of LLM fine-tuning experiments on your data and gathering their results. It allows users to control all elements of a typical experimentation pipeline - prompts, open-source LLMs, optimization strategy, and LLM testing - through a single YAML configuration file. The toolkit supports basic, intermediate, and advanced usage scenarios, enabling users to run custom experiments, conduct ablation studies, and automate fine-tuning workflows. It provides features for data ingestion, model definition, training, inference, quality assurance, and artifact outputs, making it a comprehensive tool for fine-tuning large language models.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.