DesktopCommanderMCP

This is an MCP server for Claude that gives it terminal control, file system search, and diff file editing capabilities.

Stars: 4524

Desktop Commander MCP is a server that allows the Claude desktop app to execute long-running terminal commands on your computer and manage processes through the Model Context Protocol (MCP). It is built on top of the MCP Filesystem Server to provide additional search and replace file editing capabilities. The tool enables users to execute terminal commands with output streaming, manage processes, perform full filesystem operations, and edit code with surgical text replacements or full file rewrites. It also supports vscode-ripgrep-based recursive code or text search in folders.

README:

Work with code and text, run processes, and automate tasks, going far beyond other AI editors - without API token costs.

- Features

- How to install

- Getting Started

- Usage

- Handling Long-Running Commands

- Work in Progress and TODOs

- Sponsors and Supporters

- Website

- Media

- Testimonials

- Frequently Asked Questions

- Contributing

- License

All of your AI development tools in one place. Desktop Commander puts all dev tools in one chat. Execute long-running terminal commands on your computer and manage processes through the Model Context Protocol (MCP). Built on top of the MCP Filesystem Server to provide additional search and replace file editing capabilities.

- Enhanced terminal commands with interactive process control

- Execute code in memory (Python, Node.js, R) without saving files

- Instant data analysis - just ask to analyze CSV/JSON files

- Interact with running processes (SSH, databases, development servers)

- Execute terminal commands with output streaming

- Command timeout and background execution support

- Process management (list and kill processes)

- Session management for long-running commands

- Server configuration management:

  - Get/set configuration values

  - Update multiple settings at once

  - Dynamic configuration changes without server restart

- Full filesystem operations:

  - Read/write files

  - Create/list directories

  - Move files/directories

  - Search files

  - Get file metadata

  - Negative offset file reading: Read from end of files using negative offset values (like Unix tail)

- Code editing capabilities:

  - Surgical text replacements for small changes

  - Full file rewrites for major changes

  - Multiple file support

  - Pattern-based replacements

  - vscode-ripgrep based recursive code or text search in folders

- Comprehensive audit logging:

  - All tool calls are automatically logged

  - Log rotation with 10MB size limit

  - Detailed timestamps and arguments
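To picture how negative offsets behave, here is a minimal TypeScript sketch that works on an in-memory string rather than a file. It assumes a negative offset counts back from the end (like tail -n) and that length caps the number of returned lines; sliceLines is a hypothetical helper, not part of Desktop Commander's API.

```typescript
// Hypothetical helper (not Desktop Commander's API) illustrating line-based
// pagination. Assumption: a negative offset counts back from the end of the
// text, like `tail -n`, and `length` caps the number of lines returned.
function sliceLines(text: string, offset: number, length?: number): string[] {
  const lines = text.split("\n");
  // Translate a negative offset into a start index, clamped to the valid range.
  const start =
    offset < 0 ? Math.max(lines.length + offset, 0) : Math.min(offset, lines.length);
  const end = length === undefined ? lines.length : Math.min(start + length, lines.length);
  return lines.slice(start, end);
}

const sample = ["line 1", "line 2", "line 3", "line 4", "line 5"].join("\n");
console.log(sliceLines(sample, -2)); // the last two lines, like `tail -n 2`
console.log(sliceLines(sample, 1, 2)); // two lines starting at the second line
```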

Desktop Commander offers multiple installation methods to fit different user needs and technical requirements.

📋 Update & Uninstall Information: Before choosing an installation option, note that Options 1, 2, 3, 4, and 6 update automatically, while Option 5 requires manual updates. See the sections below for update and uninstall instructions for each option.

Option 1 (npx):

Just run this in a terminal:

npx @wonderwhy-er/desktop-commander@latest setup

For debugging mode (allows Node.js inspector connection):

npx @wonderwhy-er/desktop-commander@latest setup --debug

Command line options during setup:

- --debug: Enable debugging mode for Node.js inspector

- --no-onboarding: Disable onboarding prompts for new users

Restart Claude if running.

✅ Auto-Updates: Yes - automatically updates when you restart Claude

🔄 Manual Update: Run the setup command again

🗑️ Uninstall: Run npx @wonderwhy-er/desktop-commander@latest remove

Option 2 (bash installer):

For macOS users, you can use our automated bash installer, which will check your Node.js version, install it if needed, and automatically configure Desktop Commander:

curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install.sh | bash

This script handles all dependencies and configuration automatically for a seamless setup experience.

✅ Auto-Updates: Yes - automatically updates when you restart Claude

🔄 Manual Update: Re-run the bash installer command above

🗑️ Uninstall: Run npx @wonderwhy-er/desktop-commander@latest remove

Option 3 (Smithery):

To install Desktop Commander for Claude Desktop via Smithery:

- Visit the Smithery page: https://smithery.ai/server/@wonderwhy-er/desktop-commander

- Log in to Smithery if you haven't already

- Select your client (Claude Desktop) on the right side

- Install with the provided key that appears after selecting your client

- Restart Claude Desktop

The old command-line installation method is no longer supported. Please use the web interface above for the most reliable installation experience.

✅ Auto-Updates: Yes - automatically updates when you restart Claude

🔄 Manual Update: Visit the Smithery page and reinstall

Option 4 (manual config):

Add this entry to your claude_desktop_config.json:

- On Mac: ~/Library/Application\ Support/Claude/claude_desktop_config.json

- On Windows: %APPDATA%\Claude\claude_desktop_config.json

- On Linux: ~/.config/Claude/claude_desktop_config.json

{
  "mcpServers": {
    "desktop-commander": {
      "command": "npx",
      "args": [
        "-y",
        "@wonderwhy-er/desktop-commander@latest"
      ]
    }
  }
}

Restart Claude if running.

✅ Auto-Updates: Yes - automatically updates when you restart Claude

🔄 Manual Update: Run the setup command again

🗑️ Uninstall: Run npx @wonderwhy-er/desktop-commander@latest remove or remove the "desktop-commander" entry from your claude_desktop_config.json file

Option 5 (local checkout):

- Clone and build:

git clone https://github.com/wonderwhy-er/DesktopCommanderMCP.git

cd DesktopCommanderMCP

npm run setup

Restart Claude if running.

The setup command will:

- Install dependencies

- Build the server

- Configure Claude's desktop app

- Add MCP servers to Claude's config if needed

❌ Auto-Updates: No - requires manual git updates

🔄 Manual Update: cd DesktopCommanderMCP && git pull && npm run setup

🗑️ Uninstall: Run npx @wonderwhy-er/desktop-commander@latest remove, or delete the cloned directory and remove the MCP server entry from your Claude config

Option 6 (Docker):

Perfect for users who want complete or partial isolation or don't have Node.js installed. Desktop Commander runs in a sandboxed Docker container with a persistent work environment.

- Docker Desktop installed and running

- Claude Desktop app installed

Important: Make sure Docker Desktop is fully started before running the installer.

macOS/Linux:

bash <(curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.sh)

Windows PowerShell:

# Download and run the installer (one-liner)

iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.ps1'))

The automated installer will:

- Check Docker installation

- Pull the latest Docker image

- Prompt you to select folders for mounting

- Configure Claude Desktop automatically

- Restart Claude if possible

Desktop Commander creates a persistent work environment that remembers everything between sessions:

- Your development tools: Any software you install (Node.js, Python, databases, etc.) stays installed

- Your configurations: Git settings, SSH keys, shell preferences, and other personal configs are preserved

- Your work files: Projects and files in the workspace area persist across restarts

- Package caches: Downloaded packages and dependencies are cached for faster future installs

Think of it like having your own dedicated development computer that never loses your setup, but runs safely isolated from your main system.

If you prefer manual setup, add this to your claude_desktop_config.json:

Basic setup (no file access):

{
  "mcpServers": {
    "desktop-commander-in-docker": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "mcp/desktop-commander:latest"
      ]
    }
  }
}

With folder mounting:

{
  "mcpServers": {
    "desktop-commander-in-docker": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "-v", "/Users/username/Desktop:/mnt/desktop",
        "-v", "/Users/username/Documents:/mnt/documents",
        "mcp/desktop-commander:latest"
      ]
    }
  }
}

Advanced folder mounting:

{
  "mcpServers": {
    "desktop-commander-in-docker": {
      "command": "docker",
      "args": [
        "run", "-i", "--rm",
        "-v", "dc-system:/usr",
        "-v", "dc-home:/root",
        "-v", "dc-workspace:/workspace",
        "-v", "dc-packages:/var",
        "-v", "/Users/username/Projects:/mnt/Projects",
        "-v", "/Users/username/Downloads:/mnt/Downloads",
        "mcp/desktop-commander:latest"
      ]
    }
  }
}

✅ Controlled Isolation: Runs in a sandboxed environment with persistent development state

✅ No Node.js Required: Everything is included in the container

✅ Cross-Platform: Same experience on all operating systems

✅ Persistent Environment: Your tools, files, configs, and work survive restarts

✅ Auto-Updates: Yes - latest tag automatically gets newer versions

🔄 Manual Update: docker pull mcp/desktop-commander:latest then restart Claude

macOS/Linux:

Check installation status:

bash <(curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.sh) --status

Reset all persistent data (removes all installed tools and configs):

bash <(curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.sh) --reset

Windows PowerShell:

Check status:

$script = (New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.ps1'); & ([ScriptBlock]::Create("$script")) -Status

Reset all data:

$script = (New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.ps1'); & ([ScriptBlock]::Create("$script")) -Reset

Show help:

$script = (New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.ps1'); & ([ScriptBlock]::Create("$script")) -Help

Verbose output:

$script = (New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.ps1'); & ([ScriptBlock]::Create("$script")) -VerboseOutput

If you broke the Docker container or need a fresh start:

# Reset and reinstall from scratch

bash <(curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.sh) --reset && bash <(curl -fsSL https://raw.githubusercontent.com/wonderwhy-er/DesktopCommanderMCP/refs/heads/main/install-docker.sh)

This will completely reset your persistent environment and reinstall everything fresh, without touching mounted folders.

Options 1 (npx), 2 (bash installer), 3 (Smithery), 4 (manual config), and 6 (Docker) automatically update to the latest version whenever you restart Claude. No manual intervention is needed.

Option 5 (local checkout):

cd DesktopCommanderMCP && git pull && npm run setup

The easiest way to completely remove Desktop Commander:

npx @wonderwhy-er/desktop-commander@latest remove

This automatic uninstaller will:

- ✅ Remove Desktop Commander from Claude's MCP server configuration

- ✅ Create a backup of your Claude config before making changes

- ✅ Provide guidance for complete package removal

- ✅ Restore from backup if anything goes wrong
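The config-file part of those steps can be sketched in TypeScript. This is a hypothetical illustration, not the actual uninstaller: it assumes the backup is a sibling file with a .bak suffix, and the usage lines run against a throwaway file in a temporary directory (the other-server entry is a made-up placeholder) rather than your real claude_desktop_config.json.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Hypothetical sketch of the uninstall steps, not the real uninstaller.
// Assumptions: the backup is a sibling file with a ".bak" suffix, and the
// config is rewritten with 2-space indentation.
function removeServerEntry(configPath: string, serverName = "desktop-commander"): boolean {
  const original = fs.readFileSync(configPath, "utf8");
  const config = JSON.parse(original) as { mcpServers?: Record<string, unknown> };
  const servers = config.mcpServers;
  if (!servers || !(serverName in servers)) {
    return false; // nothing to remove
  }
  const backupPath = `${configPath}.bak`;
  fs.writeFileSync(backupPath, original); // back up before changing anything
  delete servers[serverName];
  try {
    fs.writeFileSync(configPath, JSON.stringify(config, null, 2));
  } catch (err) {
    fs.copyFileSync(backupPath, configPath); // restore from backup if the write fails
    throw err;
  }
  return true;
}

// Usage against a throwaway file instead of the real Claude config.
const tmpDir = fs.mkdtempSync(path.join(os.tmpdir(), "dc-config-"));
const configFile = path.join(tmpDir, "claude_desktop_config.json");
fs.writeFileSync(
  configFile,
  JSON.stringify({
    mcpServers: {
      "desktop-commander": { command: "npx", args: ["-y", "@wonderwhy-er/desktop-commander@latest"] },
      "other-server": { command: "node", args: ["server.js"] }, // placeholder entry
    },
  }),
);
console.log(removeServerEntry(configFile));
```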

If the automatic uninstaller doesn't work or you prefer manual removal:

- Locate your Claude Desktop config file:

  - macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

  - Windows: %APPDATA%\Claude\claude_desktop_config.json

  - Linux: ~/.config/Claude/claude_desktop_config.json

- Edit the config file:

  - Open the file in a text editor

  - Find and remove the "desktop-commander" entry from the "mcpServers" section

  - Save the file

Example - Remove this section:

{
  "desktop-commander": {
    "command": "npx",
    "args": ["@wonderwhy-er/desktop-commander@latest"]
  }
}

Close and restart Claude Desktop to complete the removal.

If automatic uninstallation fails:

- Use manual uninstallation as a fallback

If Claude won't start after uninstalling:

- Restore the backup config file created by the uninstaller

- Or manually fix the JSON syntax in your claude_desktop_config.json

Need help?

- Join our Discord community: https://discord.com/invite/kQ27sNnZr7

Once Desktop Commander is installed and Claude Desktop is restarted, you're ready to supercharge your Claude experience!

Desktop Commander includes intelligent onboarding to help you discover what's possible:

For New Users: When you're just getting started (fewer than 10 successful commands), Claude will automatically offer helpful getting-started guidance and practical tutorials after you use Desktop Commander successfully.

Request Help Anytime: You can ask for onboarding assistance at any time by simply saying:

- "Help me get started with Desktop Commander"

- "Show me Desktop Commander examples"

- "What can I do with Desktop Commander?"

Claude will then show you beginner-friendly tutorials and examples, including:

- 📁 Organizing your Downloads folder automatically

- 📊 Analyzing CSV/Excel files with Python

- ⚙️ Setting up GitHub Actions CI/CD

- 🔍 Exploring and understanding codebases

- 🤖 Running interactive development environments

The server provides a comprehensive set of tools organized into several categories:

| Category | Tool | Description |
|---|---|---|
| Configuration | get_config | Get the complete server configuration as JSON (includes blockedCommands, defaultShell, allowedDirectories, fileReadLineLimit, fileWriteLineLimit, telemetryEnabled) |
| | set_config_value | Set a specific configuration value by key. Available settings: • blockedCommands: Array of shell commands that cannot be executed • defaultShell: Shell to use for commands (e.g., bash, zsh, powershell) • allowedDirectories: Array of filesystem paths the server can access for file operations (terminal commands are not restricted by this setting) • fileReadLineLimit: Maximum lines to read at once (default: 1000) • fileWriteLineLimit: Maximum lines to write at once (default: 50) • telemetryEnabled: Enable/disable telemetry (boolean) |
| Terminal | start_process | Start programs with smart detection of when they're ready for input |
| | interact_with_process | Send commands to running programs and get responses |
| | read_process_output | Read output from running processes |
| | force_terminate | Force terminate a running terminal session |
| | list_sessions | List all active terminal sessions |
| | list_processes | List all running processes with detailed information |
| | kill_process | Terminate a running process by PID |
| Filesystem | read_file | Read contents from local filesystem or URLs with line-based pagination (supports positive/negative offset and length parameters) |
| | read_multiple_files | Read multiple files simultaneously |
| | write_file | Write file contents with options for rewrite or append mode (uses configurable line limits) |
| | create_directory | Create a new directory or ensure it exists |
| | list_directory | Get detailed listing of files and directories |
| | move_file | Move or rename files and directories |
| | start_search | Start streaming search for files by name or content patterns (unified ripgrep-based search) |
| | get_more_search_results | Get paginated results from active search with offset support |
| | stop_search | Stop an active search gracefully |
| | list_searches | List all active search sessions |
| | get_file_info | Retrieve detailed metadata about a file or directory |
| Text Editing | edit_block | Apply targeted text replacements with enhanced prompting for smaller edits (includes character-level diff feedback) |
| Analytics | get_usage_stats | Get usage statistics for your own insight |
| | give_feedback_to_desktop_commander | Open feedback form in browser to provide feedback to Desktop Commander Team |
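For context, an MCP client such as Claude Desktop invokes these tools by sending JSON-RPC 2.0 tools/call requests, as defined in the Model Context Protocol specification. The TypeScript sketch below only builds such a message; buildToolCall is a hypothetical helper, and a real client also performs the initialization handshake and reads the responses.

```typescript
// Shape of an MCP `tools/call` request, which is a JSON-RPC 2.0 message.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

let nextRequestId = 1;

// Hypothetical helper: build the request a client would send for one tool.
function buildToolCall(name: string, args: Record<string, unknown> = {}): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id: nextRequestId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// With the stdio transport, each message is written as a single line of JSON.
const setShell = buildToolCall("set_config_value", { key: "defaultShell", value: "/bin/zsh" });
console.log(JSON.stringify(setShell));
```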

Data Analysis:

"Analyze sales.csv and show top customers" → Claude runs Python code in memory

Remote Access:

"SSH to my server and check disk space" → Claude maintains SSH session

Development:

"Start Node.js and test this API" → Claude runs interactive Node session

Search/Replace Block Format:

filepath.ext
<<<<<<< SEARCH
content to find
=======
new content
>>>>>>> REPLACE

Example:

src/main.js
<<<<<<< SEARCH
console.log("old message");
=======
console.log("new message");
>>>>>>> REPLACE
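The following TypeScript sketch parses one block in this format and applies it as an exact, literal replacement. It is an illustration, not Desktop Commander's parser: it assumes each marker sits on its own line exactly as shown, and the expectedReplacements check borrows the idea behind edit_block's expected_replacements parameter. parseBlock and applyBlock are hypothetical names.

```typescript
interface SearchReplaceBlock {
  filePath: string;
  search: string;
  replace: string;
}

// Hypothetical parser for the block format above (not Desktop Commander's own).
// Assumes each marker sits on its own line, exactly as shown.
function parseBlock(block: string): SearchReplaceBlock {
  const lines = block.split("\n");
  const start = lines.indexOf("<<<<<<< SEARCH");
  const middle = lines.indexOf("=======", start);
  const end = lines.indexOf(">>>>>>> REPLACE", middle);
  if (start < 1 || middle < 0 || end < 0) {
    throw new Error("Malformed search/replace block");
  }
  return {
    filePath: lines.slice(0, start).join("\n").trim(),
    search: lines.slice(start + 1, middle).join("\n"),
    replace: lines.slice(middle + 1, end).join("\n"),
  };
}

// Apply a literal (exact-match) replacement and verify the number of matches,
// in the spirit of edit_block's expected_replacements parameter.
function applyBlock(content: string, b: SearchReplaceBlock, expectedReplacements = 1): string {
  if (b.search === "") throw new Error("Search text must not be empty");
  const occurrences = content.split(b.search).length - 1;
  if (occurrences !== expectedReplacements) {
    throw new Error(
      `Expected ${expectedReplacements} match(es) in ${b.filePath}, found ${occurrences}`,
    );
  }
  return content.split(b.search).join(b.replace);
}

const example = [
  "src/main.js",
  "<<<<<<< SEARCH",
  'console.log("old message");',
  "=======",
  'console.log("new message");',
  ">>>>>>> REPLACE",
].join("\n");

const edit = parseBlock(example);
console.log(applyBlock('console.log("old message");\n', edit));
```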

The edit_block tool includes several enhancements for better reliability:

- Improved Prompting: Tool descriptions now emphasize making multiple small, focused edits rather than one large change

- Fuzzy Search Fallback: When exact matches fail, it performs fuzzy search and provides detailed feedback

- Character-level Diffs: Shows exactly what's different using {-removed-}{+added+} format

- Multiple Occurrence Support: Can replace multiple instances with the expected_replacements parameter

- Comprehensive Logging: All fuzzy searches are logged for analysis and debugging

When a search fails, you'll see detailed information about the closest match found, including similarity percentage, execution time, and character differences. All these details are automatically logged for later analysis using the fuzzy search log tools.
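The {-removed-}{+added+} notation can be illustrated with a deliberately simple TypeScript sketch that trims the common prefix and suffix of two strings and marks the single differing region in between. Desktop Commander's own diff may be more granular; charDiff is a hypothetical helper.

```typescript
// Mark the differing middle of two strings using {-removed-}{+added+} notation.
// Simplification: only one contiguous changed region is reported.
function charDiff(expected: string, actual: string): string {
  const shorter = Math.min(expected.length, actual.length);
  let prefix = 0;
  while (prefix < shorter && expected[prefix] === actual[prefix]) prefix++;

  // Walk backwards from the end, never overlapping the common prefix.
  let suffix = 0;
  while (
    suffix < shorter - prefix &&
    expected[expected.length - 1 - suffix] === actual[actual.length - 1 - suffix]
  ) {
    suffix++;
  }

  const removed = expected.slice(prefix, expected.length - suffix);
  const added = actual.slice(prefix, actual.length - suffix);
  const head = expected.slice(0, prefix);
  const tail = expected.slice(expected.length - suffix);
  return head + (removed ? `{-${removed}-}` : "") + (added ? `{+${added}+}` : "") + tail;
}

console.log(charDiff('console.log("old message");', 'console.log("new message");'));
// console.log("{-old-}{+new+} message");
```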

Desktop Commander can be run in Docker containers for complete isolation from your host system, providing zero risk to your computer. This is perfect for testing, development, or when you want complete sandboxing.

- Install Docker for Windows/Mac

  - Download and install Docker Desktop from docker.com

- Get Desktop Commander Docker Configuration

  - Visit: https://hub.docker.com/mcp/server/desktop-commander/manual

  - Option A: Use the provided terminal command for automated setup

  - Option B: Click "Standalone" to get the config JSON and add it manually to your Claude Desktop config

- Mount Your Machine Folders (Coming Soon)

  - Instructions on how to mount your local directories into the Docker container will be provided soon

  - This will allow you to work with your files while maintaining complete isolation

- Complete isolation from your host system

- Consistent environment across different machines

- Easy cleanup - just remove the container when done

- Perfect for testing new features or configurations

- read_file can now fetch content from both local files and URLs

- Example: use read_file with the isUrl: true parameter to read from web resources

- Handles both text and image content from remote sources

- Images (local or from URLs) are displayed visually in Claude's interface, not as text

- Claude can see and analyze the actual image content

- Default 30-second timeout for URL requests

The fuzzy search logging system includes convenient npm scripts for analyzing logs outside of the MCP environment:

# View recent fuzzy search logs

npm run logs:view -- --count 20

# Analyze patterns and performance

npm run logs:analyze -- --threshold 0.8

# Export logs to CSV or JSON

npm run logs:export -- --format json --output analysis.json

# Clear all logs (with confirmation)

npm run logs:clear

For detailed documentation on these scripts, see scripts/README.md.

Desktop Commander includes comprehensive logging for fuzzy search operations in the edit_block tool. When an exact match isn't found, the system performs a fuzzy search and logs detailed information for analysis.

Every fuzzy search operation logs:

- Search and found text: The text you're looking for vs. what was found

- Similarity score: How close the match is (0-100%)

- Execution time: How long the search took

- Character differences: Detailed diff showing exactly what's different

- File metadata: Extension, search/found text lengths

- Character codes: Specific character codes causing differences
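A common way to express a 0-100% similarity score is a normalized Levenshtein (edit) distance. The TypeScript sketch below uses that technique purely as an illustration; it is an assumption, not a description of how Desktop Commander computes its score, and levenshtein and similarity are hypothetical helpers.

```typescript
// Classic dynamic-programming Levenshtein distance, keeping one row at a time.
function levenshtein(a: string, b: string): number {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      // deletion, insertion, substitution
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Similarity in [0, 1]; multiply by 100 for a percentage.
function similarity(a: string, b: string): number {
  const longest = Math.max(a.length, b.length);
  return longest === 0 ? 1 : 1 - levenshtein(a, b) / longest;
}

console.log((similarity("kitten", "sitting") * 100).toFixed(1) + "%"); // 57.1%
```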

Logs are automatically saved to:

- macOS/Linux: ~/.claude-server-commander-logs/fuzzy-search.log

- Windows: %USERPROFILE%\.claude-server-commander-logs\fuzzy-search.log

The fuzzy search logs help you understand:

- Why exact matches fail: Common issues like whitespace differences, line endings, or character encoding

- Performance patterns: How search complexity affects execution time

- File type issues: Which file extensions commonly have matching problems

- Character encoding problems: Specific character codes that cause diffs

Desktop Commander now includes comprehensive logging for all tool calls:

- Every tool call is logged with timestamp, tool name, and arguments (sanitized for privacy)

- Logs are rotated automatically when they reach 10MB in size

Logs are saved to:

- macOS/Linux: ~/.claude-server-commander/claude_tool_call.log

- Windows: %USERPROFILE%\.claude-server-commander\claude_tool_call.log

This audit trail helps with debugging, security monitoring, and understanding how Claude is interacting with your system.
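Size-based rotation like the 10MB limit above can be sketched in TypeScript as follows. This is a hypothetical illustration rather than the project's logger: the line format (ISO timestamp, tool name, JSON arguments), the single .old rotated file, and the omission of argument sanitization are all assumptions. The usage lines force a rotation with a tiny limit in a temporary directory.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

const MAX_LOG_BYTES = 10 * 1024 * 1024; // 10MB, as described above

// Append one audit line, rotating the file first if it would exceed maxBytes.
// Assumed format; argument sanitization is omitted in this sketch.
function appendToolCall(
  logFile: string,
  toolName: string,
  args: Record<string, unknown>,
  maxBytes = MAX_LOG_BYTES,
): void {
  const line = `${new Date().toISOString()} | ${toolName} | ${JSON.stringify(args)}\n`;
  if (fs.existsSync(logFile) && fs.statSync(logFile).size + Buffer.byteLength(line) > maxBytes) {
    // Assumed scheme: keep exactly one previous file with an ".old" suffix.
    fs.renameSync(logFile, `${logFile}.old`);
  }
  fs.appendFileSync(logFile, line);
}

// Usage with a tiny limit in a temporary directory to force a rotation.
const logDir = fs.mkdtempSync(path.join(os.tmpdir(), "dc-log-"));
const logPath = path.join(logDir, "claude_tool_call.log");
appendToolCall(logPath, "read_file", { path: "/tmp/a.txt" }, 100);
appendToolCall(logPath, "list_directory", { path: "/tmp" }, 100);
```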

For commands that may take a while:

For comprehensive security information and vulnerability reporting: See SECURITY.md

- Known security limitations: Directory restrictions and command blocking can be bypassed through various methods, including symlinks, command substitution, absolute paths, and code execution.

- Always change configuration in a separate chat window from where you're doing your actual work. Claude may sometimes attempt to modify configuration settings (like allowedDirectories) if it encounters filesystem access restrictions.

- The allowedDirectories setting currently only restricts filesystem operations, not terminal commands. Terminal commands can still access files outside allowed directories.

- For production security: Use the Docker installation, which provides complete isolation from your host system.

You can manage server configuration using the provided tools:

// Get the entire config

get_config({})

// Set a specific config value

set_config_value({ "key": "defaultShell", "value": "/bin/zsh" })

// Set multiple config values using separate calls

set_config_value({ "key": "defaultShell", "value": "/bin/bash" })

set_config_value({ "key": "allowedDirectories", "value": ["/Users/username/projects"] })

The configuration is saved to config.json in the server's working directory and persists between server restarts.
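Conceptually, set_config_value checks the key and the value type before persisting the result. The TypeScript sketch below illustrates that for the settings listed in the tool table; the validation rules, the example starting values, and the setConfigValue name are assumptions, not the server's implementation.

```typescript
// Settings documented for get_config / set_config_value.
interface ServerConfig {
  blockedCommands: string[];
  defaultShell: string;
  allowedDirectories: string[];
  fileReadLineLimit: number;
  fileWriteLineLimit: number;
  telemetryEnabled: boolean;
}

const isStringArray = (v: unknown): boolean =>
  Array.isArray(v) && v.every((x) => typeof x === "string");
const isPositiveInt = (v: unknown): boolean =>
  typeof v === "number" && Number.isInteger(v) && v > 0;

// Assumed validation rules, one per known key (illustrative only).
const validators: Record<keyof ServerConfig, (v: unknown) => boolean> = {
  blockedCommands: isStringArray,
  defaultShell: (v) => typeof v === "string" && v.length > 0,
  allowedDirectories: isStringArray,
  fileReadLineLimit: isPositiveInt,
  fileWriteLineLimit: isPositiveInt,
  telemetryEnabled: (v) => typeof v === "boolean",
};

// Return an updated copy, rejecting unknown keys and values of the wrong type.
function setConfigValue(config: ServerConfig, key: string, value: unknown): ServerConfig {
  if (!Object.prototype.hasOwnProperty.call(validators, key)) {
    throw new Error(`Unknown config key: ${key}`);
  }
  const k = key as keyof ServerConfig;
  if (!validators[k](value)) {
    throw new Error(`Invalid value for ${key}`);
  }
  return { ...config, [k]: value } as ServerConfig;
}

// Example starting values; the two line-limit defaults come from the tool table.
const exampleConfig: ServerConfig = {
  blockedCommands: [],
  defaultShell: "/bin/bash",
  allowedDirectories: ["/Users/username/projects"],
  fileReadLineLimit: 1000,
  fileWriteLineLimit: 50,
  telemetryEnabled: true,
};

// Persisting could then be JSON.stringify(updated, null, 2) written to config.json.
const updated = setConfigValue(exampleConfig, "fileWriteLineLimit", 25);
console.log(updated.fileWriteLineLimit);
```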

The fileWriteLineLimit setting controls how many lines can be written in a single write_file operation (default: 50 lines). This limit exists for several important reasons:

Why the limit exists:

- AIs are wasteful with tokens: Instead of making two small edits to a file, an AI may decide to rewrite the whole thing. We're trying to force AIs to work in smaller changes, as that saves time and tokens

- Claude UX message limits: There are limits within one message, and hitting "Continue" does not really work. What we're trying here is to make AI work in smaller chunks, so that when you hit that limit, multiple chunks have already succeeded and that work is not lost; it just needs to restart from the last chunk

Setting the limit:

// You can set it to thousands if you want

set_config_value({ "key": "fileWriteLineLimit", "value": 1000 })

// Or keep it smaller to force more efficient behavior

set_config_value({ "key": "fileWriteLineLimit", "value": 25 })

Maximum value: You can set it to thousands if you want - there's no technical restriction.
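Writing a file longer than the limit means splitting it: the first chunk is written in rewrite mode and the remaining chunks are appended, matching the two write_file modes listed in the tool table. Here is a hypothetical TypeScript sketch of the splitting step; chunkForWrite is an illustrative name, not a Desktop Commander function.

```typescript
type WriteMode = "rewrite" | "append";

interface WriteChunk {
  mode: WriteMode;
  text: string;
}

// Split content into chunks of at most `lineLimit` lines. Every chunk except
// the last keeps its trailing newline, so writing the first chunk and then
// appending the rest in order reproduces the original text exactly.
function chunkForWrite(content: string, lineLimit = 50): WriteChunk[] {
  if (lineLimit < 1) throw new Error("lineLimit must be at least 1");
  const lines = content.split("\n");
  const chunks: WriteChunk[] = [];
  for (let i = 0; i < lines.length; i += lineLimit) {
    const isLast = i + lineLimit >= lines.length;
    const text = lines.slice(i, i + lineLimit).join("\n") + (isLast ? "" : "\n");
    chunks.push({ mode: i === 0 ? "rewrite" : "append", text });
  }
  return chunks;
}

const bigFile = Array.from({ length: 120 }, (_, n) => `line ${n + 1}`).join("\n");
const plan = chunkForWrite(bigFile, 50);
console.log(plan.map((c) => c.mode)); // [ 'rewrite', 'append', 'append' ]
```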

Best practices:

- Keep the default (50) to encourage efficient AI behavior and avoid token waste

- The system automatically suggests chunking when limits are exceeded

- Smaller chunks mean less work lost when Claude hits message limits

- Create a dedicated chat for configuration changes: Make all your config changes in one chat, then start a new chat for your actual work.

- Be careful with empty allowedDirectories: Setting this to an empty array ([]) grants access to your entire filesystem for file operations.

- Use specific paths: Instead of using broad paths like /, specify the exact directories you want to access.

- Always verify configuration after changes: Use get_config({}) to confirm your changes were applied correctly.
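The containment check that allowedDirectories implies can be sketched in TypeScript. This hypothetical helper resolves the target path and accepts it only if it lies inside one of the allowed roots, treating an empty list as unrestricted as described above. It compares path strings only, so it shares the symlink weakness mentioned in the security notes, and it has no effect on terminal commands.

```typescript
import * as path from "node:path";

// Hypothetical containment check in the spirit of allowedDirectories.
// It compares path strings only: symlinks are NOT resolved (fs.realpathSync
// would be needed for that), and it does nothing to restrict terminal commands.
function isPathAllowed(target: string, allowedDirs: string[]): boolean {
  if (allowedDirs.length === 0) return true; // empty list = unrestricted, as documented
  const resolved = path.resolve(target);
  return allowedDirs.some((dir) => {
    const rel = path.relative(path.resolve(dir), resolved);
    // ".." or "../..." means the target escapes dir; an absolute result means another drive.
    const outside = rel === ".." || rel.startsWith(".." + path.sep) || path.isAbsolute(rel);
    return !outside;
  });
}

console.log(isPathAllowed("/Users/username/projects/app/index.ts", ["/Users/username/projects"]));
console.log(isPathAllowed("/Users/username/projects/../secrets.txt", ["/Users/username/projects"]));
```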

Desktop Commander supports several command line options for customizing behavior:

By default, Desktop Commander shows helpful onboarding prompts to new users (those with fewer than 10 tool calls). You can disable this behavior:

# Disable onboarding for this session

node dist/index.js --no-onboarding

# Or if using npm scripts

npm run start:no-onboarding

# For npx installations, modify your claude_desktop_config.json:

{
  "mcpServers": {
    "desktop-commander": {
      "command": "npx",
      "args": [
        "-y",
        "@wonderwhy-er/desktop-commander@latest",
        "--no-onboarding"
      ]
    }
  }
}

When onboarding is automatically disabled:

- When the MCP client name is set to "desktop-commander"

- When using the --no-onboarding flag

- After users have used onboarding prompts or made 10+ tool calls

Debug information:

The server will log when onboarding is disabled: "Onboarding disabled via --no-onboarding flag"
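Taken together, the conditions above amount to a simple predicate. The TypeScript sketch below restates them; the field names and the example-client value are hypothetical, while the threshold of 10 tool calls comes from the description above.

```typescript
// Hypothetical restatement of the documented onboarding rules.
interface OnboardingState {
  clientName: string;
  noOnboardingFlag: boolean; // --no-onboarding was passed
  hasUsedOnboarding: boolean; // onboarding prompts were already used
  toolCallCount: number;
}

function shouldOfferOnboarding(state: OnboardingState): boolean {
  if (state.clientName === "desktop-commander") return false;
  if (state.noOnboardingFlag) return false;
  if (state.hasUsedOnboarding || state.toolCallCount >= 10) return false;
  return true;
}

const newUser: OnboardingState = {
  clientName: "example-client", // placeholder client name
  noOnboardingFlag: false,
  hasUsedOnboarding: false,
  toolCallCount: 3,
};
console.log(shouldOfferOnboarding(newUser)); // true
```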

You can specify which shell to use for command execution:

// Using default shell (bash or system default)

execute_command({ "command": "echo $SHELL" })

// Using zsh specifically

execute_command({ "command": "echo $SHELL", "shell": "/bin/zsh" })

// Using bash specifically

execute_command({ "command": "echo $SHELL", "shell": "/bin/bash" })

This allows you to use shell-specific features or maintain consistent environments across commands.
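Selecting a shell usually comes down to invoking that shell with its -c option. The TypeScript sketch below shows the idea with Node's child_process.execFileSync on a POSIX system; runInShell is a hypothetical helper, and falling back to $SHELL and then /bin/sh is an assumption rather than Desktop Commander's documented behavior.

```typescript
import { execFileSync } from "node:child_process";

// Run `command` through the given shell; POSIX shells accept `-c <command>`.
// Assumed fallback order: explicit shell, then $SHELL, then /bin/sh.
function runInShell(command: string, shell: string = process.env.SHELL ?? "/bin/sh"): string {
  return execFileSync(shell, ["-c", command], { encoding: "utf8" });
}

console.log(runInShell("echo hello from sh", "/bin/sh"));
```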

- execute_command returns after timeout with initial output

- Command continues in background

- Use read_output with PID to get new output

- Use force_terminate to stop if needed

If you need to debug the server, you can install it in debug mode:

# Using npx

npx @wonderwhy-er/desktop-commander@latest setup --debug

# Or if installed locally

npm run setup:debug

This will:

- Configure Claude to use a separate "desktop-commander" server

- Enable the Node.js inspector protocol with the --inspect-brk=9229 flag

- Pause execution at the start until a debugger connects

- Enable additional debugging environment variables

To connect a debugger:

- In Chrome, visit chrome://inspect and look for the Node.js instance

- In VS Code, use the "Attach to Node Process" debug configuration

- Other IDEs/tools may have similar "attach" options for Node.js debugging

Important debugging notes:

- The server will pause on startup until a debugger connects (due to the --inspect-brk flag)

- If you don't see activity during debugging, ensure you're connected to the correct Node.js process

- Multiple Node processes may be running; connect to the one on port 9229

- The debug server is identified as "desktop-commander-debug" in Claude's MCP server list

Troubleshooting:

- If Claude times out while trying to use the debug server, your debugger might not be properly connected

- When properly connected, the process will continue execution after hitting the first breakpoint

- You can add additional breakpoints in your IDE once connected

This project extends the MCP Filesystem Server to enable:

- Local server support in Claude Desktop

- Full system command execution

- Process management

- File operations

- Code editing with search/replace blocks

Created as part of exploring Claude MCPs: https://youtube.com/live/TlbjFDbl5Us

- 20-05-2025 v0.1.40 Release - Added audit logging for all tool calls, improved line-based file operations, enhanced edit_block with better prompting for smaller edits, added explicit telemetry opt-out prompting

- 05-05-2025 Fuzzy Search Logging - Added comprehensive logging system for fuzzy search operations with detailed analysis tools, character-level diffs, and performance metrics to help debug edit_block failures

- 29-04-2025 Telemetry Opt Out through configuration - There is now a setting to disable telemetry in config; ask in chat

- 23-04-2025 Enhanced edit functionality - Improved format, added fuzzy search and multi-occurrence replacements, should fail less and use edit block more often

- 16-04-2025 Better configurations - Improved settings for allowed paths, commands and shell environments

- 14-04-2025 Windows environment fixes - Resolved issues specific to Windows platforms

- 14-04-2025 Linux improvements - Enhanced compatibility with various Linux distributions

- 12-04-2025 Better allowed directories and blocked commands - Improved security and path validation for file read/write and terminal command restrictions. Terminal still can access files ignoring allowed directories.

- 11-04-2025 Shell configuration - Added ability to configure preferred shell for command execution

- 07-04-2025 Added URL support - read_file command can now fetch content from URLs

- 28-03-2025 Fixed "Watching /" JSON error - Implemented custom stdio transport to handle non-JSON messages and prevent server crashes

- 25-03-2025 Better code search (merged) - Enhanced code exploration with context-aware results

The following features are currently being explored:

- Support for WSL - Windows Subsystem for Linux integration

- Support for SSH - Remote server command execution

- Better file support for formats like CSV/PDF

- Terminal sandboxing for Mac/Linux/Windows for better security

- File reading modes - For example, allow reading HTML as plain text or markdown

- Interactive shell support - ssh, node/python repl

- Improve large file reading and writing

Desktop Commander MCP is free and open source, but needs your support to thrive!

Our philosophy is simple: we don't want you to pay for it if you're not successful. But if Desktop Commander contributes to your success, please consider contributing to ours.

Ways to support:

- 🌟 GitHub Sponsors - Recurring support

- ☕ Buy Me A Coffee - One-time contributions

- 💖 Patreon - Become a patron and support us monthly

- ⭐ Star on GitHub - Help others discover the project

Generous supporters are featured here. Thank you for helping make this project possible!

Why your support matters

Your support allows us to:

- Continue active development and maintenance

- Add new features and integrations

- Improve compatibility across platforms

- Provide better documentation and examples

- Build a stronger community around the project

Visit our official website at https://desktopcommander.app/ for the latest information, documentation, and updates.

Learn more about this project through these resources:

Claude with MCPs replaced Cursor & Windsurf. How did that happen? - A detailed exploration of how Claude with Model Context Protocol capabilities is changing developer workflows.

Claude Desktop Commander Video Tutorial - Watch how to set up and use the Commander effectively.

This Developer Ditched Windsurf, Cursor Using Claude with MCPs


Join our Discord server to get help, share feedback, and connect with other users.

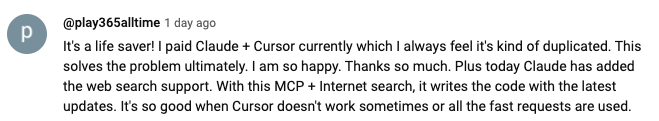

https://www.youtube.com/watch?v=ly3bed99Dy8&lc=UgyyBt6_ShdDX_rIOad4AaABAg


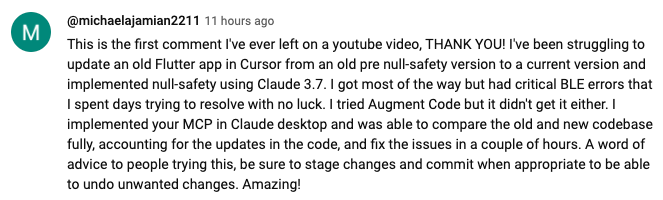

https://www.youtube.com/watch?v=ly3bed99Dy8&lc=UgztdHvDMqTb9jiqnf54AaABAg


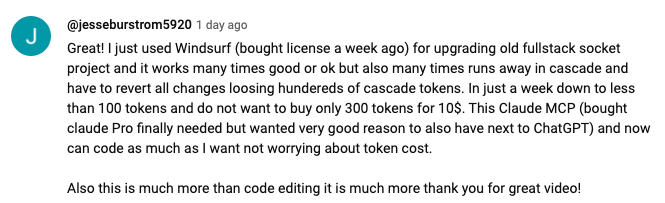

https://www.youtube.com/watch?v=ly3bed99Dy8&lc=UgyQFTmYLJ4VBwIlmql4AaABAg


https://www.youtube.com/watch?v=ly3bed99Dy8&lc=Ugy4-exy166_Ma7TH-h4AaABAg


https://medium.com/@pharmx/you-sir-are-my-hero-62cff5836a3e


If you find this project useful, please consider giving it a ⭐ star on GitHub! This helps others discover the project and encourages further development.

We welcome contributions from the community! Whether you've found a bug, have a feature request, or want to contribute code, here's how you can help:

- Found a bug? Open an issue at github.com/wonderwhy-er/DesktopCommanderMCP/issues

- Have a feature idea? Submit a feature request in the issues section

- Want to contribute code? Fork the repository, create a branch, and submit a pull request

- Questions or discussions? Start a discussion in the GitHub Discussions tab

All contributions, big or small, are greatly appreciated!

If you find this tool valuable for your workflow, please consider supporting the project.

Here are answers to some common questions. For a more comprehensive FAQ, see our detailed FAQ document.

Desktop Commander is an MCP tool that enables Claude Desktop to access your file system and terminal, turning Claude into a versatile assistant for coding, automation, codebase exploration, and more.

Unlike IDE-focused tools, Claude Desktop Commander provides a solution-centric approach that works with your entire OS, not just within a coding environment. Claude reads files in full rather than chunking them, can work across multiple projects simultaneously, and executes changes in one go rather than requiring constant review.

Desktop Commander does not add API charges. It works with Claude Desktop's standard Pro subscription ($20/month) rather than API calls, so you won't incur costs beyond the subscription fee.

When installed through npx or Smithery, Desktop Commander automatically updates to the latest version when you restart Claude; no manual update process is needed.
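For context, an npx-based install usually means Claude Desktop's `claude_desktop_config.json` lists the server under `mcpServers` with `npx` as the launch command, so Claude launches the package through npx each time it starts the server. The minimal sketch below assumes the npm package name `@wonderwhy-er/desktop-commander` and the entry key `desktop-commander`; treat both as assumptions and confirm them against the installation instructions. It adds the entry to an existing config without disturbing other servers.

```python
import copy
import json

# Assumed values: confirm against the project's installation instructions.
SERVER_KEY = "desktop-commander"
NPM_PACKAGE = "@wonderwhy-er/desktop-commander"


def add_desktop_commander(config: dict) -> dict:
    """Return a copy of a Claude Desktop config with an npx-launched server entry added."""
    updated = copy.deepcopy(config)  # leave the caller's dict untouched
    servers = updated.setdefault("mcpServers", {})
    servers[SERVER_KEY] = {
        "command": "npx",
        # -y lets npx install the package without an interactive prompt
        "args": ["-y", NPM_PACKAGE],
    }
    return updated


if __name__ == "__main__":
    existing = {"mcpServers": {"other-server": {"command": "node", "args": ["server.js"]}}}
    print(json.dumps(add_desktop_commander(existing), indent=2))
```

Working on a copy keeps any other configured MCP servers intact and makes it easy to diff the result before writing it back to disk.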

Common use cases include:

- Exploring and understanding complex codebases

- Generating diagrams and documentation

- Automating tasks across your system

- Working with multiple projects simultaneously

- Making surgical code changes with precise control

Join our Discord server for community support, check the GitHub issues for known problems, or review the full FAQ for troubleshooting tips. You can also visit our website FAQ section for a more user-friendly experience. If you encounter a new issue, please consider opening a GitHub issue with details about your problem.

Please create a GitHub Issue with detailed information about any security vulnerabilities you discover. See our Security Policy for complete guidelines on responsible disclosure.

Desktop Commander collects limited anonymous telemetry data to help improve the tool. No personal information, file contents, file paths, or command arguments are collected.

- Local usage statistics are always collected and stored locally on your machine to support functionality and the `get_usage_stats` tool. Use `get_usage_stats` to view your personal usage patterns, success rates, and performance metrics. This data is NOT sent anywhere; it remains on your computer for your personal insights.

- Use the `give_feedback_to_desktop_commander` tool to provide feedback about Desktop Commander. It opens a browser-based feedback form for sending suggestions and feedback to the development team.

- Only basic usage statistics (tool call count, days of use, platform) are pre-filled to provide context, but you can remove them.
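Both `get_usage_stats` and `give_feedback_to_desktop_commander` are invoked like any other MCP tool: the client sends a JSON-RPC 2.0 `tools/call` request that names the tool and passes its arguments. The sketch below only builds that request message; Claude Desktop normally constructs and sends it for you. Calling `get_usage_stats` with an empty `arguments` object is an assumption made for illustration.

```python
import json
from itertools import count
from typing import Optional

# Each JSON-RPC request carries an id so its response can be matched to it.
_request_ids = count(1)


def tools_call_request(tool_name: str, arguments: Optional[dict] = None) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments or {}},
    }
    return json.dumps(message)


# Assumption: get_usage_stats is called without arguments.
print(tools_call_request("get_usage_stats"))
```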

External telemetry (sent to analytics services) is enabled by default but can be disabled:

- Open the chat and simply ask: "Disable telemetry"

- The chatbot will update your settings automatically.

Note: This only disables external telemetry. Local usage analytics remain active for tool functionality but are not shared externally.
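Asking the chat is the documented route; behind it, the assistant changes a server setting. To make concrete what such a change amounts to, here is a minimal sketch that flips one flag in a JSON config file. The key name `telemetryEnabled` is a hypothetical stand-in, not confirmed here, and the file location is left to the caller rather than guessed.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical key name: check the server's actual configuration before relying on it.
TELEMETRY_KEY = "telemetryEnabled"


def disable_external_telemetry(config_path: Path) -> dict:
    """Set the assumed telemetry flag to False in a JSON config file and return the result."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    # Only this one flag changes; any other settings in the file are kept.
    config[TELEMETRY_KEY] = False
    config_path.write_text(json.dumps(config, indent=2))
    return config


if __name__ == "__main__":
    # Demo against a throwaway file rather than a real config location.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "config.json"
        path.write_text(json.dumps({"exampleSetting": 1}))  # hypothetical existing setting
        print(disable_external_telemetry(path))
```

Per the note above, turning off external telemetry leaves local usage statistics in place, so a change like this should touch only the single flag.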

For complete details about data collection, please see our Privacy Policy.

MIT

Alternative AI tools for DesktopCommanderMCP

Similar Open Source Tools

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

RooFlow

RooFlow is a VS Code extension that enhances AI-assisted development by providing persistent project context and optimized mode interactions. It reduces token consumption and streamlines workflow by integrating Architect, Code, Test, Debug, and Ask modes. The tool simplifies setup, offers real-time updates, and provides clearer instructions through YAML-based rule files. It includes components like Memory Bank, System Prompts, VS Code Integration, and Real-time Updates. Users can install RooFlow by downloading specific files, placing them in the project structure, and running an insert-variables script. They can then start a chat, select a mode, interact with Roo, and use the 'Update Memory Bank' command for synchronization. The Memory Bank structure includes files for active context, decision log, product context, progress tracking, and system patterns. RooFlow features persistent context, real-time updates, mode collaboration, and reduced token consumption.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

sd-webui-agent-scheduler

AgentScheduler is an Automatic/Vladmandic Stable Diffusion Web UI extension designed to enhance image generation workflows. It allows users to enqueue prompts, settings, and controlnets, manage queued tasks, prioritize, pause, resume, and delete tasks, view generation results, and more. The extension offers hidden features like queuing checkpoints, editing queued tasks, and custom checkpoint selection. Users can access the functionality through HTTP APIs and API callbacks. Troubleshooting steps are provided for common errors. The extension is compatible with the latest versions of A1111 and Vladmandic. It is licensed under Apache License 2.0.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

recommendarr

Recommendarr is a tool that generates personalized TV show and movie recommendations based on your Sonarr, Radarr, Plex, and Jellyfin libraries using AI. It offers AI-powered recommendations, media server integration, flexible AI support, watch history analysis, customization options, and dark/light mode toggle. Users can connect their media libraries and watch history services, configure AI service settings, and get personalized recommendations based on genre, language, and mood/vibe preferences. The tool works with any OpenAI-compatible API and offers various recommended models for different cost options and performance levels. It provides personalized suggestions, detailed information, filter options, watch history analysis, and one-click adding of recommended content to Sonarr/Radarr.

AiR

AiR is an AI tool built entirely in Rust that delivers blazing speed and efficiency. It features accurate translation and seamless text rewriting to supercharge productivity. AiR is designed to assist non-native speakers by automatically fixing errors and polishing language to sound like a native speaker. The tool is under heavy development with more features on the horizon.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

bagel

Bagel is a tool that allows users to chat with their robotics and drone data similar to using ChatGPT. It generates deterministic and auditable DuckDB SQL queries to analyze data, supporting various robotics and sensor log formats. Users can interact with Bagel through a Discord server, and it can be integrated with different language models. Bagel provides tutorials, Docker images for easy deployment, and a roadmap for upcoming features like Computer Vision Module, Anomaly Detection, and more.

preswald

Preswald is a full-stack platform for building, deploying, and managing interactive data applications in Python. It simplifies the process by combining ingestion, storage, transformation, and visualization into one lightweight SDK. With Preswald, users can connect to various data sources, customize app themes, and easily deploy apps locally. The platform focuses on code-first simplicity, end-to-end coverage, and efficiency by design, making it suitable for prototyping internal tools or deploying production-grade apps with reduced complexity and cost.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

UCAgent

UCAgent is an AI-powered automated UT verification agent for chip design. It automates chip verification workflow, supports functional and code coverage analysis, ensures consistency among documentation, code, and reports, and collaborates with mainstream Code Agents via MCP protocol. It offers three intelligent interaction modes and requires Python 3.11+, Linux or macOS, 4GB+ of memory, and access to an AI model API. Users can clone the repository, install dependencies, configure qwen, and start verification. UCAgent supports various verification quality improvement options and basic operations through TUI shortcuts and stage color indicators. It also provides documentation build and preview using MkDocs, PDF manual build using Pandoc + XeLaTeX, and resources for further help and contribution.

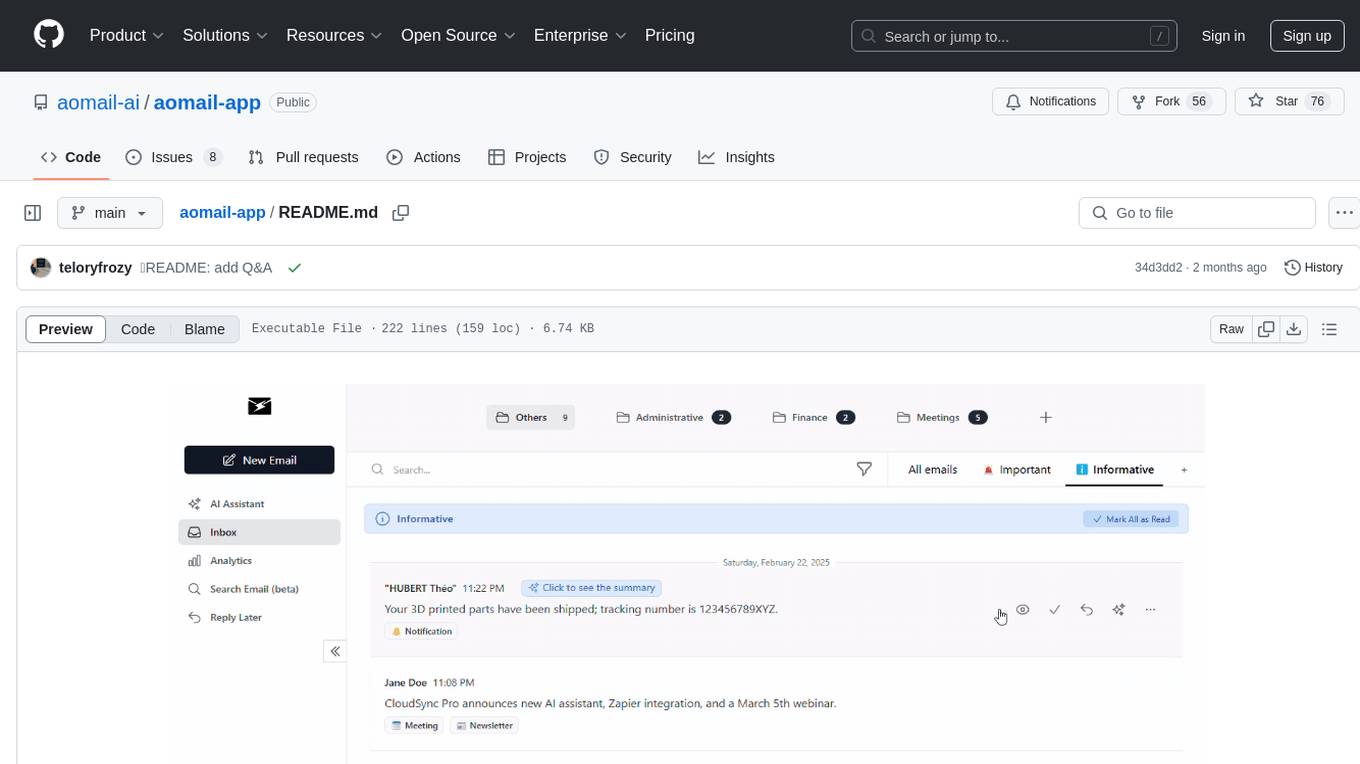

aomail-app

Aomail is an intelligent, open-source email management platform with AI capabilities. It offers email provider integration, AI-powered tools for smart categorization and assistance, analytics and management features. Users can self-host for complete control. Coming soon features include AI custom rules, platform integration with Discord & Slack, and support for various AI providers. The tool is designed to revolutionize email management by providing advanced AI features and analytics.

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

For similar tasks

mentat

Mentat is an AI tool designed to assist with coding tasks directly from the command line. It combines human creativity with computer-like processing to help users understand new codebases, add new features, and refactor existing code. Unlike other tools, Mentat coordinates edits across multiple locations and files, with the context of the project already in mind. The tool aims to enhance the coding experience by providing seamless assistance and improving edit quality.

mandark

Mandark is a lightweight AI tool that can perform various tasks, such as answering questions about codebases, editing files, verifying diffs, estimating token and cost before execution, and working with any codebase. It supports multiple AI models like Claude-3.5 Sonnet, Haiku, GPT-4o-mini, and GPT-4-turbo. Users can run Mandark without installation and easily interact with it through command line options. It offers flexibility in processing individual files or folders and allows for customization with optional AI model selection and output preferences.

wcgw

wcgw is a shell and coding agent designed for Claude and ChatGPT. It provides full shell access with no restrictions, desktop control on Claude for screen capture and control, interactive command handling, large file editing, and REPL support. Users can use wcgw to create, execute, and iterate on tasks, such as solving problems with Python, finding code instances, setting up projects, creating web apps, editing large files, and running server commands. Additionally, wcgw supports computer use on Docker containers for desktop control. The tool can be extended with a VS Code extension for pasting context into the Claude app and integrates with ChatGPT for custom GPT interactions.

k8m

k8m is an AI-driven, lightweight mini Kubernetes dashboard and console tool designed to simplify cluster management. It is built on AMIS and uses 'kom' as its Kubernetes API client. k8m has built-in Qwen2.5-Coder-7B model interaction and supports integration with your own private large models. Key features include a miniaturized design for easy deployment; a user-friendly interface for intuitive operation; efficient performance, with a Golang backend and a frontend based on Baidu AMIS; pod file management for browsing, editing, uploading, downloading, and deleting files; pod runtime management for real-time log viewing, log downloading, and executing shell commands within pods; CRD management for automatic discovery and management of CRD resources; and ChatGPT-based intelligent translation and diagnosis, covering YAML property translation, Describe output interpretation, AI log diagnosis, and command recommendations. It is cross-platform (Linux, macOS, and Windows) and supports multiple architectures such as x86 and ARM. k8m's design philosophy is 'AI-driven, lightweight and efficient, simplifying complexity,' helping developers and operators quickly get started and easily manage Kubernetes clusters.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

codexia

Codexia is a powerful GUI and Toolkit for Codex CLI, offering features like fork chat, file-tree integration, notepad, git diff, built-in pdf/csv/xlsx viewer, and more. It provides multi-file format support, flexible configuration with multiple AI providers, professional UX with responsive UI, security features like sandbox execution modes, and prioritizes privacy. The tool supports interactive chat, code generation/editing, file operations with sandbox, command execution with approval, multiple AI providers, project-aware assistance, streaming responses, and built-in web search. The roadmap includes plans for MCP tool call, more file format support, better UI customization, plugin system, real-time collaboration, performance optimizations, and token count.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine-tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with the GitHub repository, support for different personalities, and features like thumbs up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a high-performance object storage system released under the GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high-performance infrastructure for machine learning, analytics, and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.