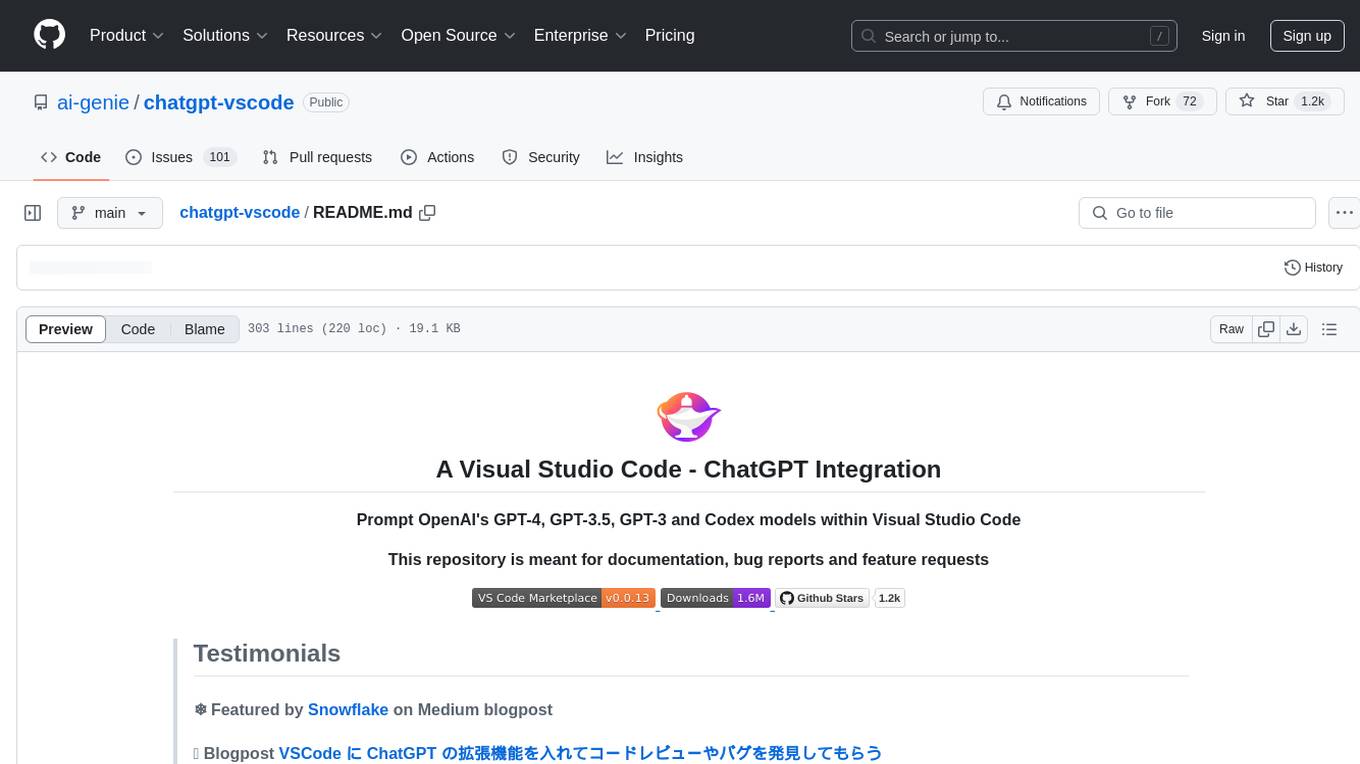

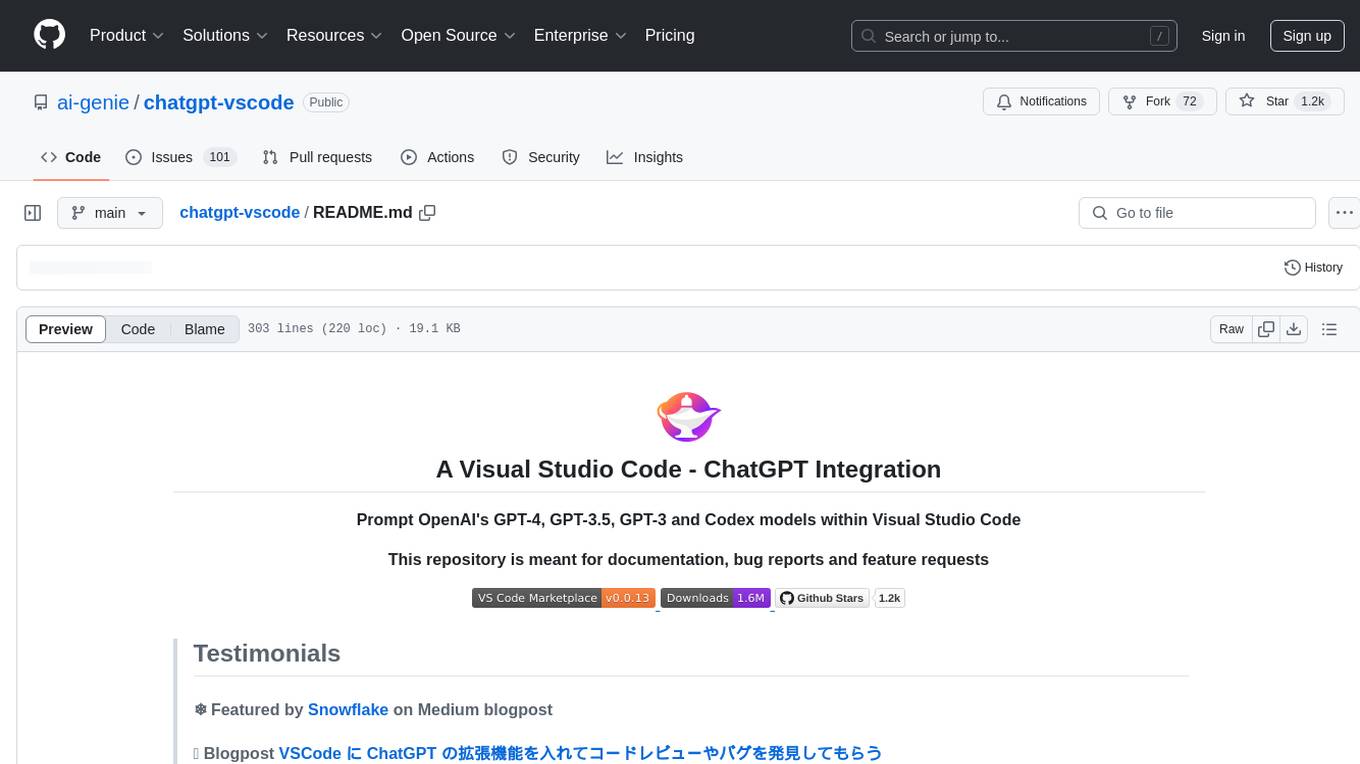

chatgpt-vscode

Your best AI pair programmer in VS Code

Stars: 1161

ChatGPT-VSCode is a Visual Studio Code integration that allows users to prompt OpenAI's GPT-4, GPT-3.5, GPT-3, and Codex models within the editor. It offers features like using improved models via OpenAI API Key, Azure OpenAI Service deployments, generating commit messages, storing conversation history, explaining and suggesting fixes for compile-time errors, viewing code differences, and more. Users can customize prompts, quick fix problems, save conversations, and export conversation history. The extension is designed to enhance developer experience by providing AI-powered assistance directly within VS Code.

README:

Prompt OpenAI's GPT-4, GPT-3.5, GPT-3 and Codex models within Visual Studio Code

This repository is meant for documentation, bug reports and feature requests

❄️ Featured by Snowflake on Medium blogpost

🎌 Blogpost (in Japanese): Installing the ChatGPT extension in VS Code to have it review code and find bugs

💙 Reviews on Twitter

❤️ ChatGPT the pair programmer - VS Code on Youtube

💙 Generative AI on LinkedIn

- ➕ Use GPT-4o and other improved models via your own OpenAI API Key.

- ✨ Use your own Azure OpenAI Service deployments

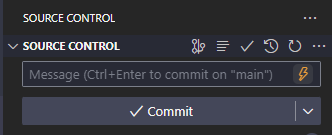

- ⚡ Generate commit messages from your git changes

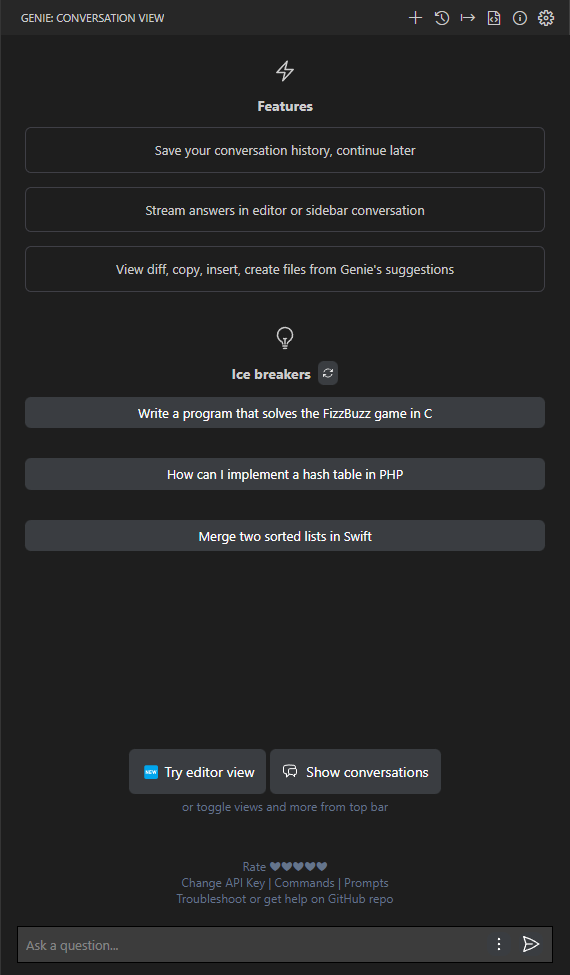

- 💬 Store your conversation history on your disk and continue at any time.

- 💡 Use Genie in the Problems window to explain and suggest fixes for compile-time errors.

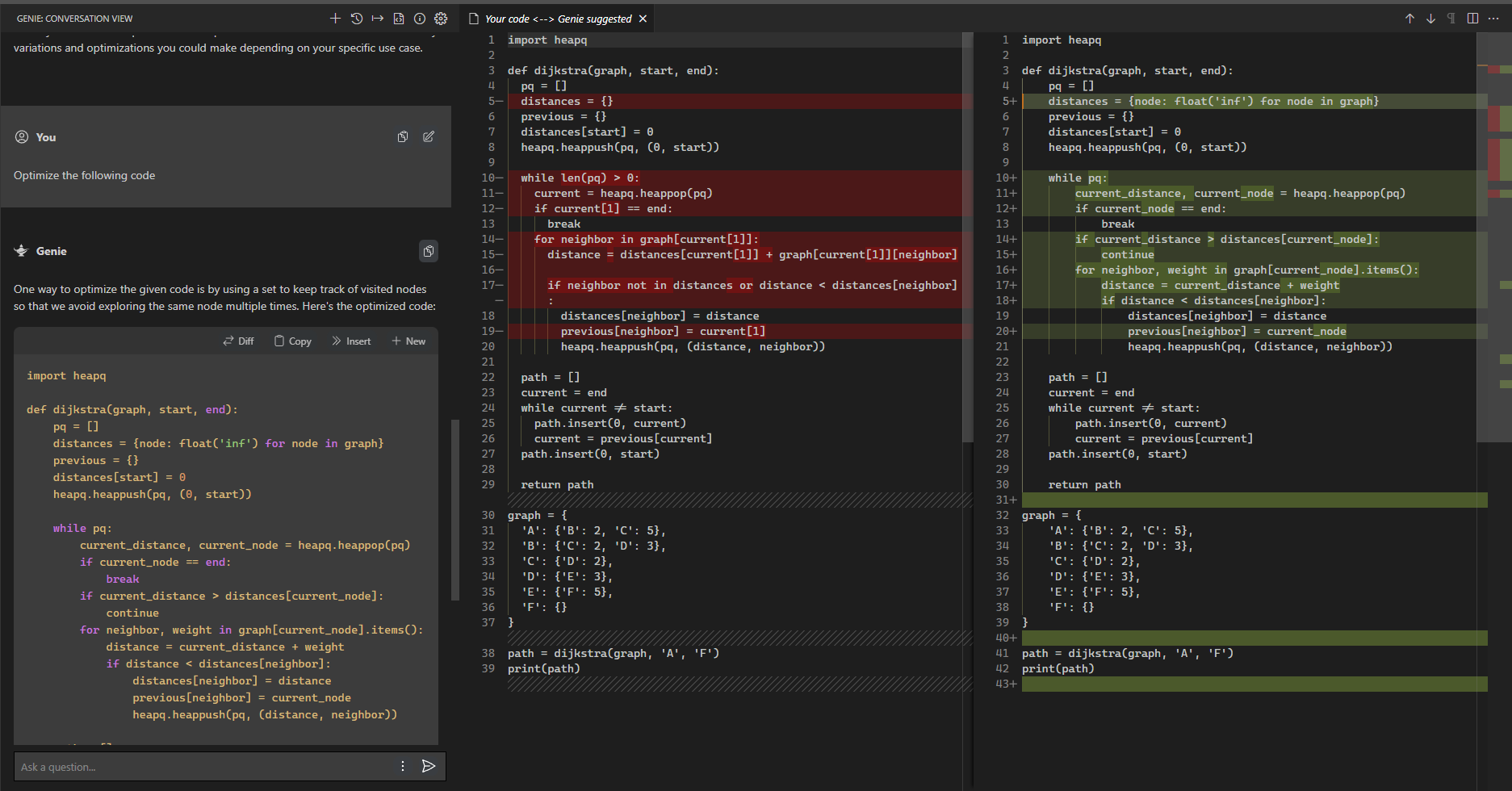

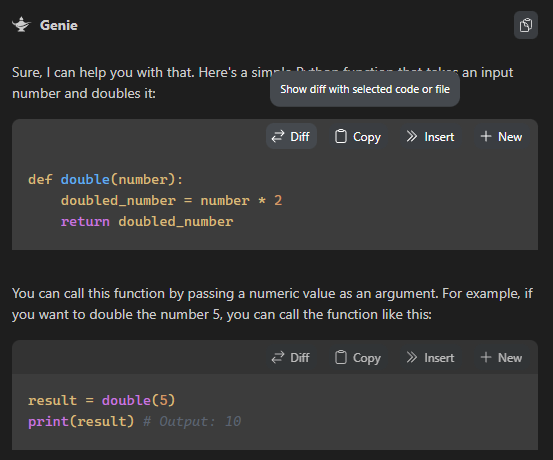

- 🔁 See diff between your code and Genie's suggestion right within editor with one click.

- 👤 Rename and personalize your assistant.

- 📃 Get streaming answers to your prompt in editor or sidebar conversation.

- 🔥 Streaming conversation support: stop the response at any time to save your tokens.

- 📝 Create files or fix your code with one click or with keyboard shortcuts.

- ➡️ Export all your conversation history at once in Markdown format.

🌞 Custom system message & o1-mini and o1-preview models

- Added a new setting to customize the system message / context that starts your conversation with the AI.

- Update the `genieai.systemMessage` setting to customize your system message.

- You can now use o1-mini and o1-preview models. Please note that these new models have usage tier limitations. See Usage tiers.
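As a minimal sketch of the system message setting mentioned above, an entry in your `settings.json` might look like the following. The setting name comes from the release note; the message text is only an illustrative value, not a recommended default.

```jsonc
{
  // Illustrative value only: write whatever context suits your workflow.
  "genieai.systemMessage": "You are a concise coding assistant. Prefer short answers with code."
}
```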

⏫ GPT-4o & 2024 Models available

- You can now use gpt-4o and other 2024 models with improved maxTokens.

- New models include: `gpt-4o`, `gpt-4o-2024-05-13`, `gpt-4-turbo`, `gpt-4-turbo-2024`, `gpt-4-turbo-preview`, `gpt-4-0125-preview`

- Editor View is now fixed and uses your selected model instead of legacy models.

- Fixed Genie: Generate commit message problems due to vscode updating its APIs.

- Added new menu item to run Genie: Generate commit message command.

⚡ Generate commit messages functionality added

- Generate commit messages right within VS Code:

- You can update your commit message prompt from the extension settings. You may also opt-out if you prefer to use other commit message generators.

- `Genie: Generate a commit message` command and shortcut supports multi-folder workspaces.

- Update your generate commit message prompt: `genieai.promptPrefix.commit-message`

- Opt-out of the Quick Fix actions setting is added: `genieai.quickFix.enable`

- Opt-out of the Generate Commit Message functionality: `genieai.enableGenerateCommitMessage`

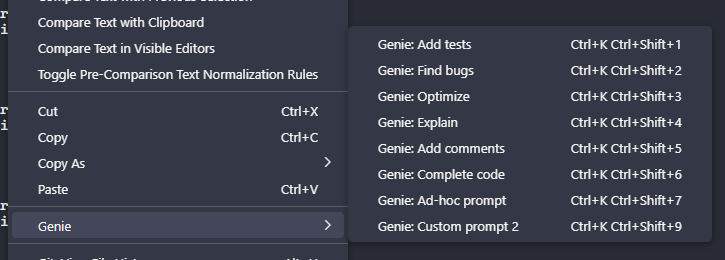

- All of Genie's context menu items are now wrapped under the `Genie` submenu
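The three settings named in this release note could be combined in `settings.json` roughly as follows. This is a sketch under assumptions: the setting names are taken from the notes above, but the prompt text is invented for illustration, and the two opt-out settings are assumed to be booleans where `false` turns the feature off.

```jsonc
{
  // Illustrative prompt text, not the extension's default.
  "genieai.promptPrefix.commit-message": "Write a short, imperative commit message for these changes",
  // Assumed boolean: false opts out of Genie's Quick Fix actions.
  "genieai.quickFix.enable": false,
  // Assumed boolean: false opts out of commit message generation.
  "genieai.enableGenerateCommitMessage": false
}
```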

⏫ GPT-4 & GPT-3.5 Turbo models added

- Updated model selection

- You can now use gpt-4-1106-preview (GPT-4 Turbo) and gpt-3.5-turbo-1106 (GPT-3.5 Turbo) via Genie.

- New models include: `gpt-4-1106-preview`, `gpt-4-0613`, `gpt-4-32k-0613`, `gpt-3.5-turbo-1106`, `gpt-3.5-turbo-16k`, `gpt-3.5-turbo-instruct`

- Deprecated `gpt-4-0314`, `gpt-4-32k-0314`, `gpt-3.5-turbo-0301` in favor of the replacement models.

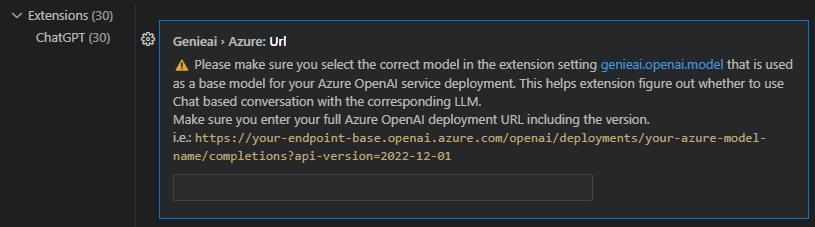

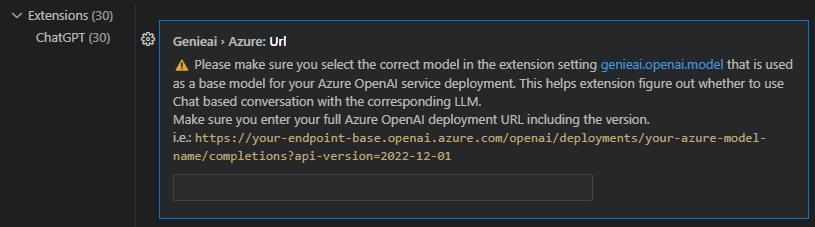

✨ Azure OpenAI Service support & more

- Azure OpenAI Service

  - You can now use your Azure OpenAI deployments with Genie

  - Set your full Azure OpenAI deployment URL in the setting `genieai.azure.url`, following the instructions in the setting description

  - Make sure to set the extension's model setting to the base model you used for the Azure deployment

- Rename and remove your conversations within sidebar

- Improved autoscroll behaviour

- Autoscroll will be disabled if you interrupt the stream
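For the Azure OpenAI Service setup described above, a `settings.json` sketch might look like this. The URL is deliberately left as a placeholder because the required format is defined in the setting description, not here. Treating `genieai.openai.model` as "the extension's model setting" is an assumption based on the setting name used elsewhere in this README, and `gpt-4o` is only an example value.

```jsonc
{
  // Placeholder: paste your full deployment URL in the format the setting description asks for.
  "genieai.azure.url": "<your-full-azure-openai-deployment-url>",
  // Assumed setting name and example value: use the base model your Azure deployment was created from.
  "genieai.openai.model": "gpt-4o"
}
```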

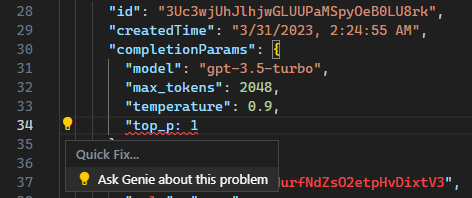

💡 Quick fix problems

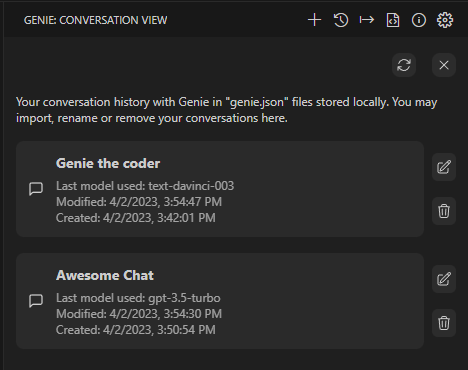

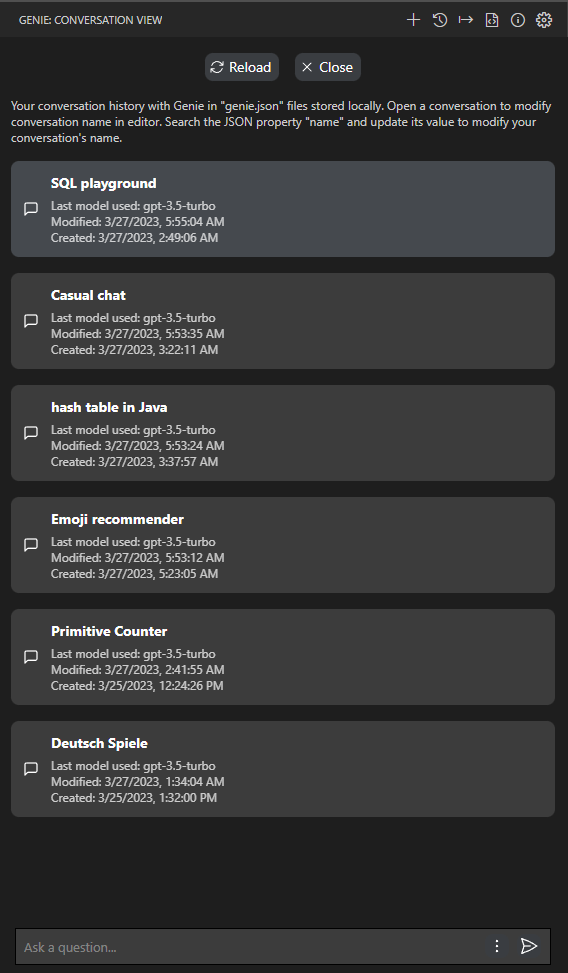

💬 Save your conversations and continue at any time

- Conversation history

- The goal: Collect feedback and measure compatibility across different machine and OS setups.

- We are experimenting with a new feature that stores your conversations on your disk using the VS Code global storage API.

- This feature is experimental, so you need to opt in to use it while we collect feedback from users. Setting name: `genieai.enableConversationHistory`

- Keep in mind that this experimental feature has limitations at the moment and may have bugs; use it at your own risk.

- You may want to remove the stored files manually from time to time for privacy; the extension has no way to modify the files other than writing new threads to them.

- All conversations start with the name 'New chat', and you can change it in the `genie.json` file.

- The conversations are stored only on your machine, using VS Code's provided global storage API for extensions.

- Misc. bug fixes and improvements
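Opting in to the experimental conversation history could be sketched in `settings.json` as below. The setting name is the one given above; that it takes a boolean value is an assumption.

```jsonc
{
  // Assumed boolean: true opts in to storing conversations on disk.
  "genieai.enableConversationHistory": true
}
```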

Genie - ChatGPT Conversation History - Watch Video

Get your API Key from here: OpenAI and see OpenAI official docs for available model details

- Simply ask any coding question by selecting a code fragment.

- Once asked, provide your API Key.

If you face issues regarding your API Key, see FAQ for details on how to reenter/clear it

The extension comes with context menu commands, copy/move suggested code into editor with one-click, conversation window and customization options for OpenAI's ChatGPT prompts.

We recently introduced Genie to the Problems window. You can investigate your compile-time errors by asking Genie: simply click the lightbulb/suggestion icon to ask Genie for help. Credit for this idea goes to @cahaseler; if you are interested in seeing his Genie-companion extension, visit this issue

-

💬 Store your conversation history on your disk and continue at any time.

-

💡 Quick fix the problems in your code

-

✨ Supports Azure OpenAI Service

-

🔁 See diff between your code and Genie's suggestion right within editor with one click.

-

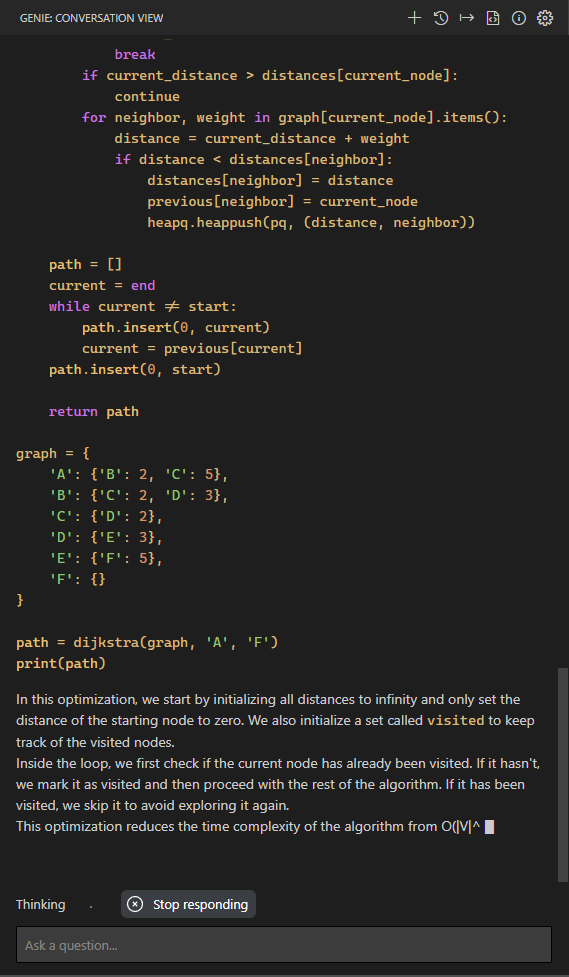

📃 Get streaming answers to your prompt in editor or sidebar conversation.

-

Customize what you are asking with the selected code. The extension will remember your prompt for subsequent questions.

-

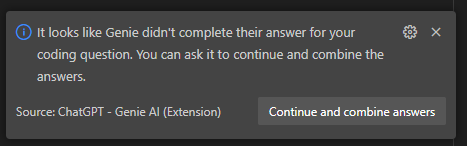

Automatic partial code response detection. If AI doesn't finish responding, you will have the option to continue and combine answers

-

Copy or insert the code ChatGPT is suggesting right into your editor.

-

🍻 Optimized for dialogue

-

Edit and resend a previous prompt

-

📤 Export all your conversation history with one click

-

Ad-hoc prompt prefixes for you to customize what you are asking ChatGPT

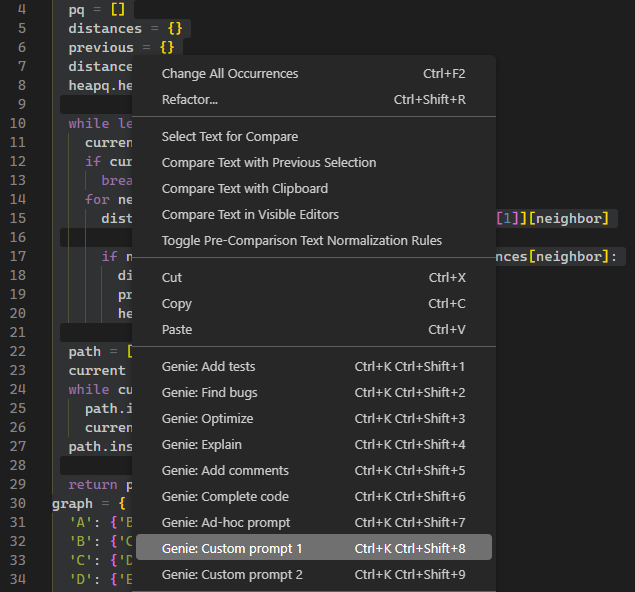

You may assign a keyboard shortcut to any of the following commands using VS Code's built-in keybindings menu.

- You can enable/disable all of your context menu items. Simply go to settings and find the prompt that you would like to disable. Custom prompts are hidden by default.

- `Genie: Ad-hoc prompt`: Ad-hoc custom prompt prefix for the selected code. Right click on a selected block of code, run command.

  - You will be asked to fill in your preferred custom prefix and the extension will remember that string for your subsequent ad-hoc queries.

- `Genie: Add tests`: Write tests for you. Right click on a selected block of code, run command.

  - "default": "Implement tests for the following code",

  - "description": "The prompt prefix used for adding tests for the selected code"

- `Genie: Find bugs`: Analyze and find bugs in your code. Right click on a selected block of code, run command.

  - "default": "Find problems with the following code",

  - "description": "The prompt prefix used for finding problems for the selected code"

- `Genie: Optimize`: Add suggestions to your code to improve. Right click on a selected block of code, run command.

  - "default": "Optimize the following code",

  - "description": "The prompt prefix used for optimizing the selected code"

- `Genie: Explain`: Explain the selected code. Right click on a selected block of code, run command.

  - "default": "Explain the following code",

  - "description": "The prompt prefix used for explaining the selected code"

- `Genie: Add comments`: Add comments for the selected code. Right click on a selected block of code, run command.

  - "default": "Add comments for the following code",

  - "description": "The prompt prefix used for adding comments for the selected code"

- `Genie: Custom prompt 1`: Your custom prompt 1. It's disabled by default, please set to a custom prompt and enable it if you prefer using customized prompt

  - "default": "",

- `Genie: Custom prompt 2`: Your custom prompt 2. It's disabled by default, please set to a custom prompt and enable it if you prefer using customized prompt

  - "default": "",

- `Genie: Generate code`: If you select a Codex model (code-*) you will see this option in your context menu. This option will not feed the ChatGPT with any context like the other text completion prompts.

- `Genie: Clear API Key`: Clears the API Key from VS Code Secrets Storage

- `Genie: Show conversations`: List of conversations that Genie stored after enabling conversation history setting.

- `Genie: What's new`: See what is recently released.

- `Genie: Start a new chat`: Start a new chat with AI.

- `Genie: Ask anything`: Free-form text questions within conversation window.

- `Genie: Reset session`: Clears the current session and resets your connection with ChatGPT

- `Genie: Clear conversation`: Clears the conversation window and resets the thread to start a new conversation with ChatGPT.

- `Genie: Export conversation`: Exports the whole conversation in Markdown for you to easily store and find the Q&A list.

- `Genie: Focus on Genie View`: Focuses on Genie window if it was hidden. You can move Genie window to right sidebar or bottom bar by dragging the Genie icon.

- For general FAQ please visit OpenAI's own page: https://help.openai.com/en/articles/7039783-chatgpt-api-faq

- How can I clear or re-enter API key: use the `Genie: Clear API Key` command. Click `Commands` on the home page to see all commands available. You can also click on `Change API Key` on the home page.

- Editor view uses text-davinci-003: It's by design at the moment since it's the only model that can guarantee a code response and the view doesn't need a conversational context. Please follow this issue for details #24

- Is the ChatGPT API included in the ChatGPT Plus subscription?: No, the ChatGPT API and ChatGPT Plus subscription are billed separately.

- Can I view the API Key after storing it?: VS Code secrets storage won't allow you to read the API Key after storing it. You may clear or reenter another key if you are facing issues.

- Does Genie support proxies: See this issue to enable local proxy: https://github.com/ai-genie/chatgpt-vscode/issues/7

- Usage in Remote environments: See this issue about remote/SSH: https://github.com/ai-genie/chatgpt-vscode/issues/3

- Unable To Use GPT-4 Models: You need GPT-4 API Access (Different than GPT-4 on ChatGPT Plus subscription) https://github.com/ai-genie/chatgpt-vscode/issues/6

- Azure OpenAI Service: Unsupported data type Error: This means you didn't select the right base model for your Azure OpenAI service. Make sure to select the base GPT model you used to create your Azure OpenAI Service deployment

- It's possible that OpenAI systems may experience issues responding to your queries due to high-traffic from time to time.

- If you get `HTTP 429 Too Many Requests`, it means that you are either making too many requests OR your account doesn't have enough credit. Your account may also have expired.

  - Please check your plan and billing details

  - If you see `insufficient_quota` in the error, you could run the following cURL command to check if your account has enough quota. (Make sure to replace `$OPENAI_API_KEY` with your key that you use in this extension)

        curl https://api.openai.com/v1/completions \
          -H "Content-Type: application/json" \
          -H "Authorization: Bearer $OPENAI_API_KEY" \
          -d '{
            "model": "text-davinci-003",
            "prompt": "Can I make a request?\n\n",
            "temperature": 0.7,
            "max_tokens": 256,
            "top_p": 1,
            "frequency_penalty": 0,
            "presence_penalty": 0
          }'

- If you get `HTTP 404 Not Found` error, it means one of the parameters you provided is unknown (i.e. `genieai.openai.model`). Most likely switching to default `model` in your settings would fix this issue.

- If you get `HTTP 400 Bad Request` error, it means that your conversation's length is more than GPT/Codex models can handle. Or you supplied an invalid argument via customized settings.

- If you encounter persistent issues with your queries

  - Try `Genie: Reset session` to clear your session/conversation or `Genie: Clear API Key` to clear your API Key and re-enter

  - As a last resort try restarting your VS-Code and retry logging in.

- If you are using Remote Development and cannot use ChatGPT

  - In `settings.json` add `"remote.extensionKind": {"genieai.chatgpt-vscode": ["ui"]}`

- There is no guarantee that the extension will continue to work as-is without any issues or side-effects. Please use it at your own risk.

- This extension never uses/stores your personally identifiable information.

- If you would like to help us improve our features, please enable telemetry in the settings. It's disabled by default; if you enable it, the extension collects metadata to improve its features. No personally identifiable information is collected. You can opt out of telemetry either by setting the global `telemetry.telemetryLevel` or by setting `genieai.telemetry.disable` to true (disabled by default). The extension respects both of these settings and collects metadata only if both allow telemetry. We use the official telemetry package provided by the VS Code team to understand this extension's usage patterns and better plan new feature releases.

- We assume no responsibility for any issues that you may face using this extension. Your use of OpenAI services is subject to OpenAI's Privacy Policy and Terms of Use.

- 💻 OpenAI: https://openai.com/

- 🧪 Uses NodeJS OpenAI API wrapper
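As a sketch of the telemetry opt-out described in the notes above, either of the following `settings.json` entries should be enough on its own, since the extension collects metadata only when both settings allow it. Both setting names come from the text above; `"off"` is one of the values VS Code accepts for `telemetry.telemetryLevel`.

```jsonc
{
  // Global VS Code switch: turns telemetry off for VS Code and for extensions that respect it.
  "telemetry.telemetryLevel": "off",
  // Extension-specific switch named in the notes above.
  "genieai.telemetry.disable": true
}
```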


Alternative AI tools for chatgpt-vscode

Similar Open Source Tools


TerminalGPT

TerminalGPT is a terminal-based ChatGPT personal assistant app that allows users to interact with OpenAI GPT-3.5 and GPT-4 language models. It offers advantages over browser-based apps, such as continuous availability, faster replies, and tailored answers. Users can use TerminalGPT in their IDE terminal, ensuring seamless integration with their workflow. The tool prioritizes user privacy by not using conversation data for model training and storing conversations locally on the user's machine.

MiniSearch

MiniSearch is a minimalist search engine with integrated browser-based AI. It is privacy-focused, easy to use, cross-platform, integrated, time-saving, efficient, optimized, and open-source. MiniSearch can be used for a variety of tasks, including searching the web, finding files on your computer, and getting answers to questions. It is a great tool for anyone who wants a fast, private, and easy-to-use search engine.

merlinn

Merlinn is an open-source AI-powered on-call engineer that automatically jumps into incidents & alerts, providing useful insights and RCA in real time. It integrates with popular observability tools, lives inside Slack, offers an intuitive UX, and prioritizes security. Users can self-host Merlinn, use it for free, and benefit from automatic RCA, Slack integration, integrations with various tools, intuitive UX, and security features.

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

parllama

PAR LLAMA is a Text UI application for managing and using LLMs, designed with Textual and Rich and PAR AI Core. It runs on major OS's including Windows, Windows WSL, Mac, and Linux. Supports Dark and Light mode, custom themes, and various workflows like Ollama chat, image chat, and OpenAI provider chat. Offers features like custom prompts, themes, environment variables configuration, and remote instance connection. Suitable for managing and using LLMs efficiently.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

sd-webui-agent-scheduler

AgentScheduler is an Automatic/Vladmandic Stable Diffusion Web UI extension designed to enhance image generation workflows. It allows users to enqueue prompts, settings, and controlnets, manage queued tasks, prioritize, pause, resume, and delete tasks, view generation results, and more. The extension offers hidden features like queuing checkpoints, editing queued tasks, and custom checkpoint selection. Users can access the functionality through HTTP APIs and API callbacks. Troubleshooting steps are provided for common errors. The extension is compatible with latest versions of A1111 and Vladmandic. It is licensed under Apache License 2.0.

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

OrionChat

Orion is a web-based chat interface that simplifies interactions with multiple AI model providers. It provides a unified platform for chatting and exploring various large language models (LLMs) such as Ollama, OpenAI (GPT model), Cohere (Command-r models), Google (Gemini models), Anthropic (Claude models), Groq Inc., Cerebras, and SambaNova. Users can easily navigate and assess different AI models through an intuitive, user-friendly interface. Orion offers features like browser-based access, code execution with Google Gemini, text-to-speech (TTS), speech-to-text (STT), seamless integration with multiple AI models, customizable system prompts, language translation tasks, document uploads for analysis, and more. API keys are stored locally, and requests are sent directly to official providers' APIs without external proxies.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

voice-chat-ai

Voice Chat AI is a project that allows users to interact with different AI characters using speech. Users can choose from various characters with unique personalities and voices, and have conversations or role play with them. The project supports OpenAI, xAI, or Ollama language models for chat, and provides text-to-speech synthesis using XTTS, OpenAI TTS, or ElevenLabs. Users can seamlessly integrate visual context into conversations by having the AI analyze their screen. The project offers easy configuration through environment variables and can be run via WebUI or Terminal. It also includes a huge selection of built-in characters for engaging conversations.

extensionOS

Extension | OS is an open-source browser extension that brings AI directly to users' web browsers, allowing them to access powerful models like LLMs seamlessly. Users can create prompts, fix grammar, and access intelligent assistance without switching tabs. The extension aims to revolutionize online information interaction by integrating AI into everyday browsing experiences. It offers features like Prompt Factory for tailored prompts, seamless LLM model access, secure API key storage, and a Mixture of Agents feature. The extension was developed to empower users to unleash their creativity with custom prompts and enhance their browsing experience with intelligent assistance.

aimeos-typo3

Aimeos is a professional, full-featured, and high-performance e-commerce extension for TYPO3. It can be installed in an existing TYPO3 website within 5 minutes and can be adapted, extended, overwritten, and customized to meet specific needs.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

For similar tasks

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

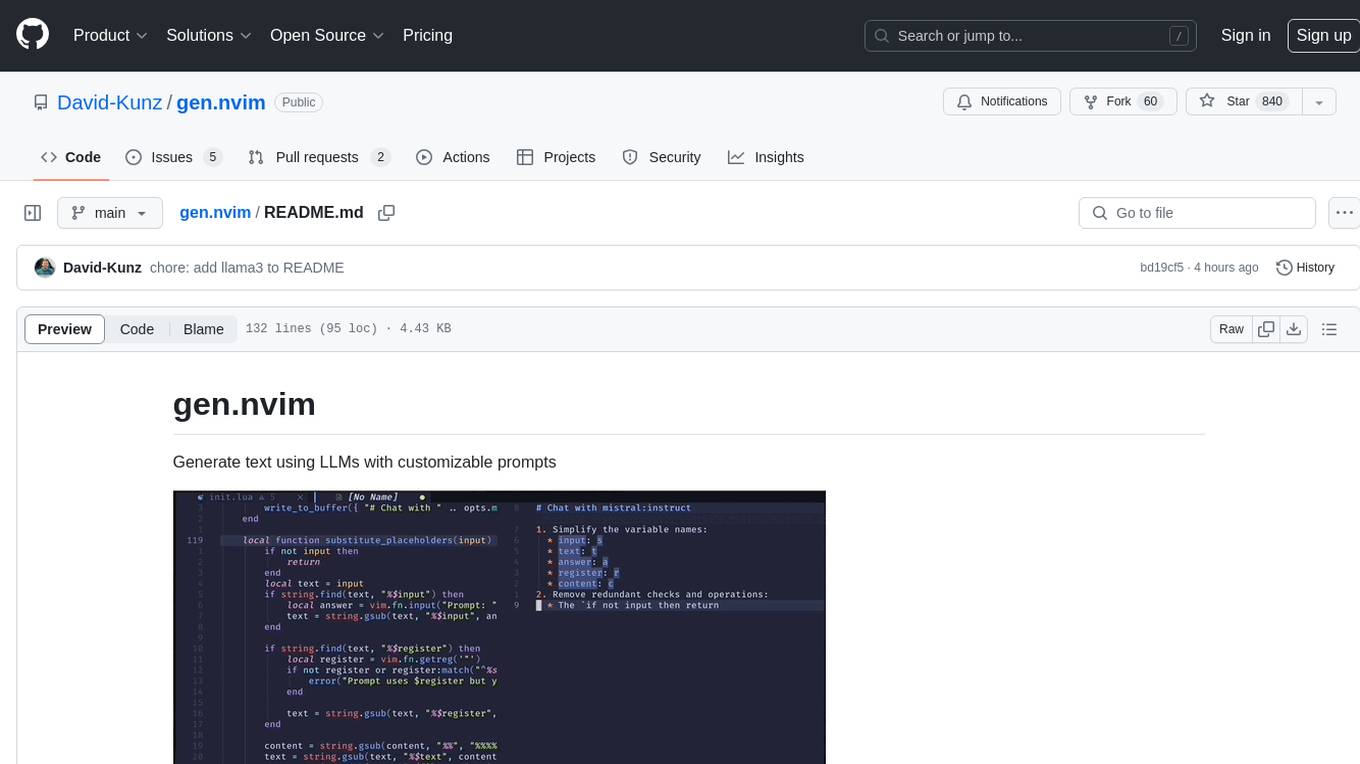

gen.nvim

gen.nvim is a tool that allows users to generate text using Language Models (LLMs) with customizable prompts. It requires Ollama with models like `llama3`, `mistral`, or `zephyr`, along with Curl for installation. Users can use the `Gen` command to generate text based on predefined or custom prompts. The tool provides key maps for easy invocation and allows for follow-up questions during conversations. Additionally, users can select a model from a list of installed models and customize prompts as needed.

llm-document-ocr

LLM Document OCR is a Node.js tool that utilizes GPT4 and Claude3 for OCR and data extraction. It converts PDFs into PNGs, crops white-space, cleans up JSON strings, and supports various image formats. Users can customize prompts for data extraction. The tool is sponsored by Mercoa, offering API for BillPay and Invoicing.

talemate

Talemate is a roleplay tool that allows users to interact with AI agents for dialogue, narration, summarization, direction, editing, world state management, character/scenario creation, text-to-speech, and visual generation. It supports multiple AI clients and APIs, offers long-term memory using ChromaDB, and provides tools for managing NPCs, AI-assisted character creation, and scenario creation. Users can customize prompts using Jinja2 templates and benefit from a modern, responsive UI. The tool also integrates with Runpod for enhanced functionality.

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

RSSbrew

RSSBrew is a self-hosted RSS tool designed for aggregating multiple RSS feeds, applying custom filters, and generating AI summaries. It allows users to control content through custom filters based on Link, Title, and Description, with various match types and relationship operators. Users can easily combine multiple feeds into a single processed feed and use AI for article summarization and digest creation. The tool supports Docker deployment and regular installation, with ongoing documentation and development. Licensed under AGPL-3.0, RSSBrew is a versatile tool for managing and summarizing RSS content.

unify

The Unify Python Package provides access to the Unify REST API, allowing users to query Large Language Models (LLMs) from any Python 3.7.1+ application. It includes Synchronous and Asynchronous clients with Streaming responses support. Users can easily use any endpoint with a single key, route to the best endpoint for optimal throughput, cost, or latency, and customize prompts to interact with the models. The package also supports dynamic routing to automatically direct requests to the top-performing provider. Additionally, users can enable streaming responses and interact with the models asynchronously for handling multiple user requests simultaneously.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.