llm-document-ocr

LLM Based OCR and Document Parsing for Node.js

Stars: 61

LLM Document OCR is a Node.js tool that utilizes GPT4 and Claude3 for OCR and data extraction. It converts PDFs into PNGs, crops white-space, cleans up JSON strings, and supports various image formats. Users can customize prompts for data extraction. The tool is sponsored by Mercoa, offering API for BillPay and Invoicing.

README:

Sponsored by Mercoa, the API for BillPay and Invoicing. Everything you need to launch accounts payable in your product with a single API!

LLM Based OCR and Document Parsing for Node.js. Uses GPT4 and Claude3 for OCR and data extraction.

- Converts PDFs (including multi page PDFs) into PNGs for use with GPT4

- Automatically crops white-space to create smaller inputs

- Cleans up JSON string returned by the LLM and converts it to an JSON object

- Custom prompt support for capturing any data you need

Supports:

- ✅ PNG

- ✅ WEBP

- ✅ JPEG / JPG

- ✅ GIF

- ✅ Multi-page PDF

- ❌ DOC

- ❌ DOCX

npm i --save llm-document-ocryarn add llm-document-ocrNote: If you are deploying via Docker, see the Dockerfile for an example Alpine base image.

import { DocumentOcr, prompts } from "llm-document-ocr";

const documentOcr = new DocumentOcr({

apiKey: 'YOUR-OpenAi/Anthropic-API-KEY' // required, defaults to process.env.OPENAI_API_KEY. OpenAI models need an OpenAI API key, Antrhopic models need an Anthropic API key.

model: "gpt-4-turbo", // optional, defaults to "gpt-4-turbo". Options are: "gpt-4-turbo", "gpt-4-vision-preview", "claude-3-opus-20240229", "claude-3-sonnet-20240229", "claude-3-haiku-20240307"

standardFontDataUrl: "https://unpkg.com/[email protected]/standard_fonts/", // optional, defaults to "https://unpkg.com/[email protected]/standard_fonts/". You can use the systems fonts or the fonts under ./node_modules/pdfjs-dist/standard_fonts/ as well.

});

const documentData = await documentOcr.process({

model: "gpt-4-turbo", // optional, defaults to "gpt-4-turbo". Options are "gpt-4-turbo" or "gpt-4-large"

document: 'JVBERi0xLjMNCiXi48/TDQoNCjEgMCBvYmoNCjw8DQ...', // Base64 String, Base64 URI, or Buffer

mimeType: 'application/pdf', // mime-type of the document or image

prompt: 'invoiceStartDate, invoiceEndDate, amount', // system prompt for data extraction. See examples below.

pageOptions: 'FIRST_AND_LAST' // optional, defaults to 'ALL'. Determines which page of the PDF will be processed. Available options are 'ALL', 'FIRST_AND_LAST', 'FIRST', 'LAST'.

})Prompts will be automatically prefixed to tell the LLM to return JSON. You will need to specify the data you wish to extract, and the LLM will return a JSON object with those keys.

For example, the prompt we use at Mercoa for invoice processing is the following:

`invoice number, invoice amount, currency (as ISO 4217 code), dueDate, invoiceDate, serviceStartDate, serviceEndDate,

vendor's [name, email with @, website],

line items [amnt, price, qty, des, name, cur (as ISO 4217 code)]`;And this returns a JSON object that looks like:

{

invoiceNumber?: string | number

invoiceAmount?: string | number

currency?: string

dueDate?: string

invoiceDate?: string

serviceStartDate?: string

serviceEndDate?: string

vendor: {

name?: string

email?: string

website?: string

}

lineItems: Array<{

des?: string

qty?: string | number

price?: string | number

amnt?: string | number

name?: string

cur?: string

}>

}If you encounter a bug or want to see something added/changed, please go ahead and open an issue

If you wish to contribute to the library, thanks! Please see the CONTRIBUTING guide for more details.

MIT © Mercoa, Inc

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm-document-ocr

Similar Open Source Tools

llm-document-ocr

LLM Document OCR is a Node.js tool that utilizes GPT4 and Claude3 for OCR and data extraction. It converts PDFs into PNGs, crops white-space, cleans up JSON strings, and supports various image formats. Users can customize prompts for data extraction. The tool is sponsored by Mercoa, offering API for BillPay and Invoicing.

json-repair

JSON Repair is a toolkit designed to address JSON anomalies that can arise from Large Language Models (LLMs). It offers a comprehensive solution for repairing JSON strings, ensuring accuracy and reliability in your data processing. With its user-friendly interface and extensive capabilities, JSON Repair empowers developers to seamlessly integrate JSON repair into their workflows.

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

receipt-ocr

An efficient OCR engine for receipt image processing, providing a comprehensive solution for Optical Character Recognition (OCR) on receipt images. The repository includes a dedicated Tesseract OCR module and a general receipt processing package using LLMs. Users can extract structured data from receipts, configure environment variables for multiple LLM providers, process receipts using CLI or programmatically in Python, and run the OCR engine as a Docker web service. The project also offers direct OCR capabilities using Tesseract and provides troubleshooting tips, contribution guidelines, and license information under the MIT license.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

llm-client

LLMClient is a JavaScript/TypeScript library that simplifies working with large language models (LLMs) by providing an easy-to-use interface for building and composing efficient prompts using prompt signatures. These signatures enable the automatic generation of typed prompts, allowing developers to leverage advanced capabilities like reasoning, function calling, RAG, ReAcT, and Chain of Thought. The library supports various LLMs and vector databases, making it a versatile tool for a wide range of applications.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

partialjson

PartialJson is a Python library that allows users to parse partial and incomplete JSON data with ease. With just 3 lines of Python code, users can parse JSON data that may be missing key elements or contain errors. The library provides a simple solution for handling JSON data that may not be well-formed or complete, making it a valuable tool for data processing and manipulation tasks.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

redis-vl-python

The Python Redis Vector Library (RedisVL) is a tailor-made client for AI applications leveraging Redis. It enhances applications with Redis' speed, flexibility, and reliability, incorporating capabilities like vector-based semantic search, full-text search, and geo-spatial search. The library bridges the gap between the emerging AI-native developer ecosystem and the capabilities of Redis by providing a lightweight, elegant, and intuitive interface. It abstracts the features of Redis into a grammar that is more aligned to the needs of today's AI/ML Engineers or Data Scientists.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

funcchain

Funcchain is a Python library that allows you to easily write cognitive systems by leveraging Pydantic models as output schemas and LangChain in the backend. It provides a seamless integration of LLMs into your apps, utilizing OpenAI Functions or LlamaCpp grammars (json-schema-mode) for efficient structured output. Funcchain compiles the Funcchain syntax into LangChain runnables, enabling you to invoke, stream, or batch process your pipelines effortlessly.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

CrackSQL

CrackSQL is a powerful SQL dialect translation tool that integrates rule-based strategies with large language models (LLMs) for high accuracy. It enables seamless conversion between dialects (e.g., PostgreSQL → MySQL) with flexible access through Python API, command line, and web interface. The tool supports extensive dialect compatibility, precision & advanced processing, and versatile access & integration. It offers three modes for dialect translation and demonstrates high translation accuracy over collected benchmarks. Users can deploy CrackSQL using PyPI package installation or source code installation methods. The tool can be extended to support additional syntax, new dialects, and improve translation efficiency. The project is actively maintained and welcomes contributions from the community.

For similar tasks

json-repair

JSON Repair is a toolkit designed to address JSON anomalies that can arise from Large Language Models (LLMs). It offers a comprehensive solution for repairing JSON strings, ensuring accuracy and reliability in your data processing. With its user-friendly interface and extensive capabilities, JSON Repair empowers developers to seamlessly integrate JSON repair into their workflows.

llm-document-ocr

LLM Document OCR is a Node.js tool that utilizes GPT4 and Claude3 for OCR and data extraction. It converts PDFs into PNGs, crops white-space, cleans up JSON strings, and supports various image formats. Users can customize prompts for data extraction. The tool is sponsored by Mercoa, offering API for BillPay and Invoicing.

markdrop

Markdrop is a Python package that facilitates the conversion of PDFs to markdown format while extracting images and tables. It also generates descriptive text descriptions for extracted tables and images using various LLM clients. The tool offers additional functionalities such as PDF URL support, AI-powered image and table descriptions, interactive HTML output with downloadable Excel tables, customizable image resolution and UI elements, and a comprehensive logging system. Markdrop aims to simplify the process of handling PDF documents and enhancing their content with AI-generated descriptions.

olmocr

olmOCR is a toolkit designed for training language models to work with PDF documents in real-world scenarios. It includes various components such as a prompting strategy for natural text parsing, an evaluation toolkit for comparing pipeline versions, filtering by language and SEO spam removal, finetuning code for specific models, processing PDFs through a finetuned model, and viewing documents created from PDFs. The toolkit requires a recent NVIDIA GPU with at least 20 GB of RAM and 30GB of free disk space. Users can install dependencies, set up a conda environment, and utilize olmOCR for tasks like converting single or multiple PDFs, viewing extracted text, and running batch inference pipelines.

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

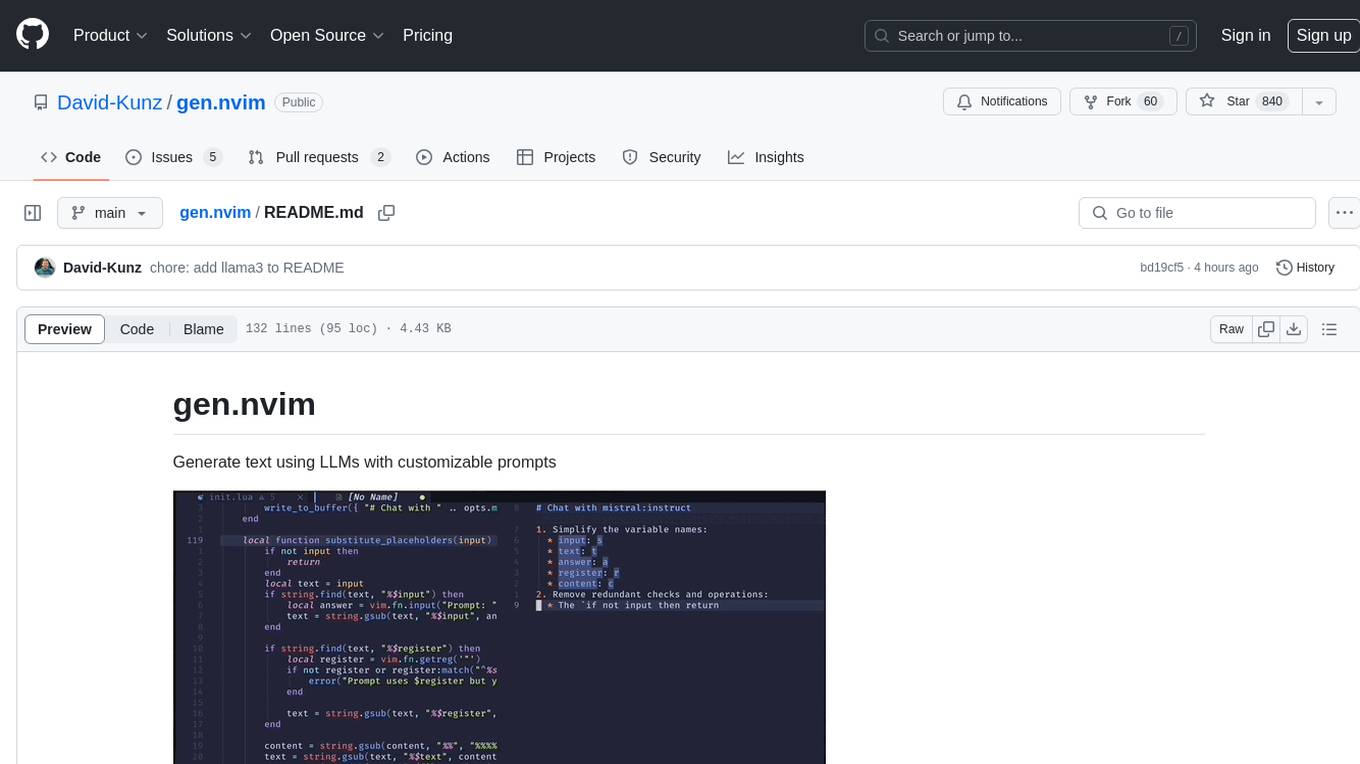

gen.nvim

gen.nvim is a tool that allows users to generate text using Language Models (LLMs) with customizable prompts. It requires Ollama with models like `llama3`, `mistral`, or `zephyr`, along with Curl for installation. Users can use the `Gen` command to generate text based on predefined or custom prompts. The tool provides key maps for easy invocation and allows for follow-up questions during conversations. Additionally, users can select a model from a list of installed models and customize prompts as needed.

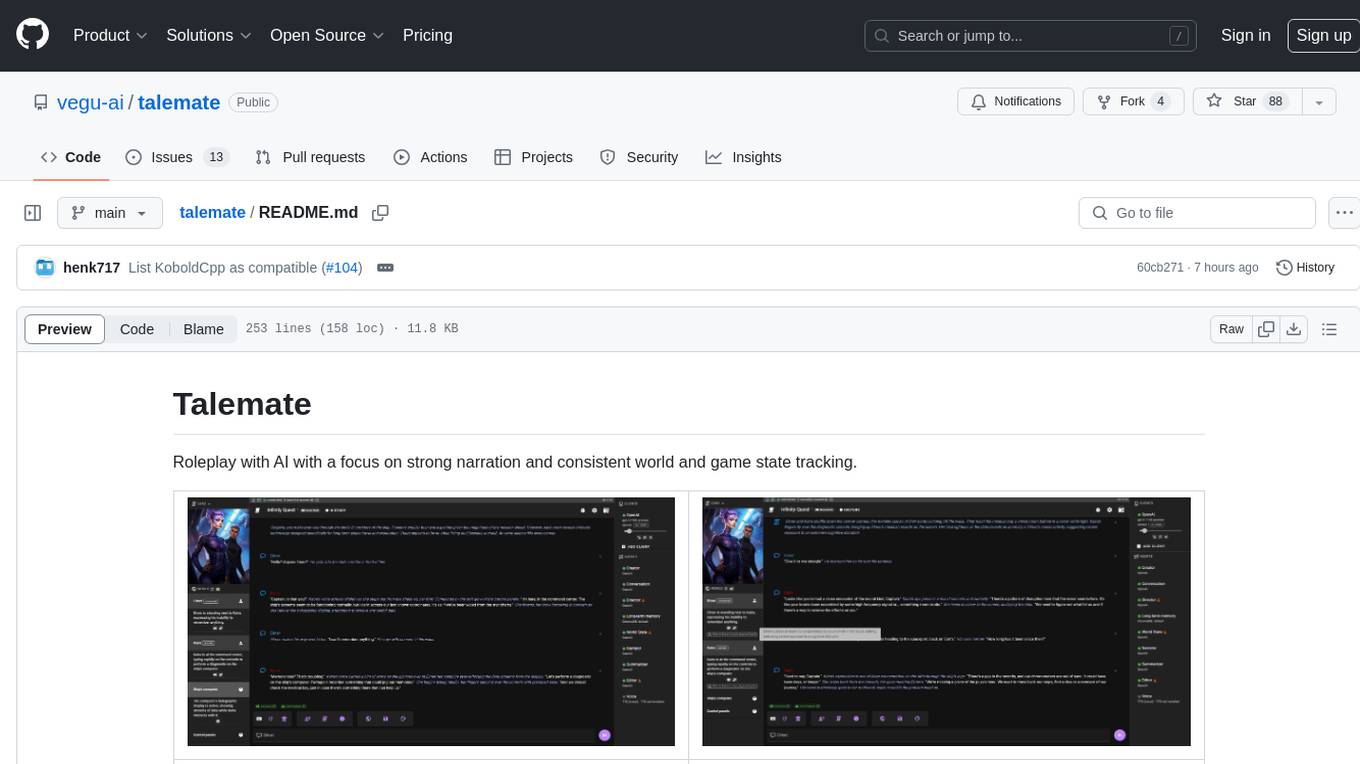

talemate

Talemate is a roleplay tool that allows users to interact with AI agents for dialogue, narration, summarization, direction, editing, world state management, character/scenario creation, text-to-speech, and visual generation. It supports multiple AI clients and APIs, offers long-term memory using ChromaDB, and provides tools for managing NPCs, AI-assisted character creation, and scenario creation. Users can customize prompts using Jinja2 templates and benefit from a modern, responsive UI. The tool also integrates with Runpod for enhanced functionality.

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.